Abstract

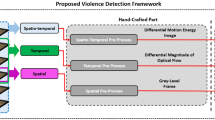

Human behavior detection is essential for public safety and monitoring. However, human-operated surveillance systems require continuous attention and observation from personnel, which is difficult to sustain. Detecting violent human behavior with autonomous surveillance systems is therefore of critical importance for uninterrupted video surveillance. In this paper, we propose a novel method to detect fights or violent actions by learning both spatial and temporal features from equally spaced sequential frames of a video. Multi-level features of two sequential frames, extracted from the top and bottom layers of a convolutional neural network, are combined using the proposed feature fusion method to take motion information into account. We also propose a Wide-Dense Residual Block to learn these combined spatial features from the two input frames. The learned features are then concatenated and fed to long short-term memory units to capture temporal dependencies. The feature fusion method and the additional wide-dense residual blocks enable the network to learn combined features from the input frames effectively and yield higher accuracy. Experimental results on four publicly available datasets (HockeyFight, Movies, ViolentFlow, and BEHAVE) show the superior performance of the proposed model compared with state-of-the-art methods.
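
The abstract states that the model learns from equally spaced sequential frames and processes two sequential frames at a time. This section does not say how many frames are sampled or how the pairs are formed, so the sketch below assumes a fixed number of evenly spaced indices spanning the whole clip and overlapping (t, t+1) pairs of consecutive samples. The function names and the 100-frame, 5-sample example are hypothetical; the code only computes indices, so any video decoder could consume its output.

```python
from typing import List, Tuple


def equally_spaced_indices(num_frames: int, num_samples: int) -> List[int]:
    """Pick num_samples frame indices spread evenly from the first to the last frame."""
    if num_frames < 1 or num_samples < 1:
        raise ValueError("num_frames and num_samples must be positive")
    if num_samples > num_frames:
        raise ValueError("cannot sample more frames than the video contains")
    if num_samples == 1:
        return [0]
    span = num_frames - 1
    # Integer arithmetic: index i maps to floor(i * span / (num_samples - 1)).
    return [i * span // (num_samples - 1) for i in range(num_samples)]


def consecutive_pairs(indices: List[int]) -> List[Tuple[int, int]]:
    """Group sampled indices into overlapping (t, t+1) pairs of sequential frames."""
    return list(zip(indices, indices[1:]))


if __name__ == "__main__":
    idx = equally_spaced_indices(num_frames=100, num_samples=5)
    print(idx)                     # [0, 24, 49, 74, 99]
    print(consecutive_pairs(idx))  # [(0, 24), (24, 49), (49, 74), (74, 99)]
```

Using integer division keeps the first and last sampled frames exactly at 0 and num_frames - 1, which a floating-point step can miss through rounding.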

Notes

The StratifiedShuffleSplit and StratifiedKFold classes from the scikit-learn library (https://scikit-learn.org) are used.
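
Stratified splitting keeps the proportion of fight and non-fight clips the same in every training and test partition, which helps when datasets are small. In practice the scikit-learn classes are called directly, for example `StratifiedKFold(n_splits=5, shuffle=True, random_state=0).split(X, y)`; the number of splits the authors used is not stated here, so `n_splits=5` is only an assumption. The stdlib-only sketch below re-implements the idea with a simple per-class round-robin scheme to show what stratification guarantees. It is not scikit-learn's algorithm, and the function names and toy labels are hypothetical.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def stratified_folds(labels: Sequence[int], n_splits: int, seed: int = 0) -> List[List[int]]:
    """Deal sample indices into n_splits folds class by class, so each fold keeps
    roughly the class ratio of the full dataset. Simplified illustration only."""
    if n_splits < 2:
        raise ValueError("n_splits must be at least 2")
    by_class: Dict[int, List[int]] = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    folds: List[List[int]] = [[] for _ in range(n_splits)]
    for label in sorted(by_class):
        members = by_class[label]
        rng.shuffle(members)  # randomize which samples of this class land in which fold
        for position, idx in enumerate(members):
            folds[position % n_splits].append(idx)  # round-robin within the class
    return [sorted(fold) for fold in folds]


def train_test_splits(folds: List[List[int]]) -> List[Tuple[List[int], List[int]]]:
    """For each fold k, use fold k as the test set and all other folds for training."""
    splits = []
    for k, test in enumerate(folds):
        train = sorted(i for j, fold in enumerate(folds) if j != k for i in fold)
        splits.append((train, test))
    return splits


if __name__ == "__main__":
    # Hypothetical toy labels: 1 = fight, 0 = non-fight, ten clips each.
    labels = [1] * 10 + [0] * 10
    for k, (train, test) in enumerate(train_test_splits(stratified_folds(labels, n_splits=5))):
        n_fight = sum(labels[i] for i in test)
        # Every fold prints 16 train, 4 test, 2 fight clips in test.
        print(f"fold {k}: {len(train)} train, {len(test)} test, {n_fight} fight clips in test")
```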

Acknowledgements

This research was partly supported by NSFC, China (Nos. 61876107 and U1803261) and the Committee of Science and Technology, Shanghai, China (No. 19510711200).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Asad, M., Yang, J., He, J. et al. Multi-frame feature-fusion-based model for violence detection. Vis Comput 37, 1415–1431 (2021). https://doi.org/10.1007/s00371-020-01878-6

DOI: https://doi.org/10.1007/s00371-020-01878-6