Abstract

Stochastic Galerkin methods for non-affine coefficient representations are known to cause major difficulties from theoretical and numerical points of view. In this work, an adaptive Galerkin FE method for linear parametric PDEs with lognormal coefficients discretized in Hermite chaos polynomials is derived. It employs problem-adapted function spaces to ensure solvability of the variational formulation. The inherently high computational complexity of the parametric operator is made tractable by using hierarchical tensor representations. For this, a new tensor train format of the lognormal coefficient is derived and verified numerically. The central novelty is the derivation of a reliable residual-based a posteriori error estimator. This can be regarded as a unique feature of stochastic Galerkin methods. It allows for an adaptive algorithm to steer the refinements of the physical mesh and the anisotropic Wiener chaos polynomial degrees. For the evaluation of the error estimator to become feasible, a numerically efficient tensor format discretization is developed. Benchmark examples with unbounded lognormal coefficient fields illustrate the performance of the proposed Galerkin discretization and the fully adaptive algorithm.

Similar content being viewed by others

1 Introduction

In the thriving field of uncertainty quantification (UQ), efficient numerical methods for the approximate solution of random PDEs have been a topic of vivid research. As common benchmark problem, one often considers the Darcy equation (as a model for flow through a porous medium) with different types of random coefficients in order to assess the efficiency of a numerical approach. Two important properties are the length of the expansion of random fields, which often directly translates to the number of independent random variables describing the variability in the model, and the type of dependence on these random variables. The affine case with uniform random variables has been studied extensively, since it represents a rather simple model which can easily be treated with standard methods. Opposite to that, the lognormal case with Gaussian random variables is quite challenging, from the analytical as well as numerical point of view. A theory for the solvability of linear elliptic PDEs with respective unbounded coefficients (and hence a lack of uniform ellipticity) in a variational formulation was only developed recently in [25, 41, 49]. Computationally, the problems quickly become difficult or even intractable with many stochastic dimensions, which might be required to accurately represent the stochasticity in the random field expansion. This paper is concerned with the development of an efficient numerical method for this type of problems.

While popular sample-based Monte Carlo methods obtain dimension-independent convergence rates, these are rather low despite often encountered higher regularity of the parameter dependence. Moreover, such methods can only be used to evaluate functionals of the solution (QoIs = quantities of interest) and an a posteriori error control usually is not feasible reliably. Some recent developments in this field can e.g. be found in [32, 33, 36, 38] for the model problem with a lognormal coefficient. Some ideas on a posteriori adaptivity for Monte Carlo methods can e.g. be found in [11, 20].

An alternative are functional (often called spectral) approximations, which for instance are obtained by Stochastic Collocation (SC) [2, 43, 44], the related Multilevel Quadraure (MLQ) [30, 31] and Stochastic Galerkin (SGFootnote 1.) methods. The latter in particular is popular in the engeneering sciences since it can be perceived as an extension of classical finite element methods (FEM). These approaches provide a complete parameter to solution map based on which e.g. statistical moments of the stochastic solution can be evaluated. Notably, the regularity of the solution can be exploited in order to obtain quasi-optimal convergence rates. However, the number of random variables and nonlinear parameter representations have a significant impact on the computational feasibility and techniques for a model order reduction are required. Collocation methods with pointwise evaluations in the parameter space are usually constructed either based on some a priori knowledge or by means of an iterative refinement algorithm which takes into account the hierarchical surplus on possible new discretization levels. While these approaches work reasonably well, methods for a reliable error control do not seem immediate since the approximation relies only on interpolation properties. Nevertheless, for the affine case and under certain assumptions, first ideas were recently presented in [28].

The computationally more elaborate Stochastic Galerkin methods carry out an orthogonal projection with respect to the energy norm onto a discrete space which is usually spanned by a tensor basis consisting of FE basis functions in physical space and polynomial chaos polynomials orthogonal with respect to the joint parameter measure in (stochastic) parameter space. The use of global polynomials is justified by the high (analytic) regularity of the solution map with respect to the parameters [8, 9, 34]. However, in particular the large computational cost of Galerkin methods make adaptivity and model reduction techniques a necessity.

In order to achieve this, different paths have been pursued successfully. As a first approach, sparse approximations as in [15, 16, 19] or [4, 5, 10] with either a residual based or a hierarchical a posteriori error estimators can be computed. Here, the aim is to restrict an exponentially large discrete basis to the most relevant functions explictly by iteratively constructing a quasi-optimal subset. In [16], convergence of the adaptive algorithm could be shown. Moreover, adjoint based error estimators are considered in [6, 48].

As a second approach, an adaptive discretization in hierarchical tensor representations can be derived as described in [21]. These modern compression formats have lately been investigated intensively in the numerical analysis community [3, 29, 45, 46]. It has been examined that with appropriate assumptions the curse of dimensionality can be alleviated, particularly so when employed with typical random PDE problems in UQ, see [12, 13] for examples with sample-based reconstruction strategies. Such representations can be understood as an implicit model order reduction technique, closely related to (but more general than e.g.) reduced basis methods.

In the mentioned adaptive approaches, the FE mesh for the physical space and the parametric polynomial chaos space are adapted automatically with respect to the considered problem. In the case of tensor approximations, also the ranks of the representation are adjusted.

However, in all adaptive SGFEM research, so far only the affine case with uniform elliptic coefficient has been considered. In this paper, we extend the ASGFEM approach developed in [21] to the significantly more involved case of lognormal (non-affine) coefficients. This poses several severe complications analytically and numerically. Analytical aspects have recently been tackled in [25, 26, 34, 41]. Numerically, in particular computationally efficient Galerkin methods are quite diffucult to construct for this case and have not been devised. Compression techniques and adaptivity most certainly are required in order to make these problems tractable with SGFEM, as described in this paper. Of particular interest is the construction of a computable a posteriori error estimator, which also greatly benefits from using tensor formats. In order to obtain a well-posed discretization, problem adapted spaces according to the presentation in [49] are used.

Main contributions of this work are a representation of the coefficient in the tensor train (TT) format, the operator discretization in tensor format and the derivation of an reliable residual based error estimatator. This then serves as the basis for an adaptive algorithm which steers all discretization parameters of the SGFEM. The performance of the proposed method is demonstrated with some benchmark problems. Here, the used field models are not artificially bounded or shifted away from zero.

We point out that, to our knowledge, an SGFEM for the lognormal case so far has only been practically computed in the weighted function space setting in [42] for a small 1d model as proof of concept. Moreover, there has not been any adaptive numerical method with reliable a posteriori error estimation as derived in this work. However, we note that our approach relies on the assumption that the coefficient is discretized sufficiently accurately and hence the related discretization error can be neglected. In practice, this can be ensured with high probability by sampling the error of the discrete coefficient. Additionally, since constants in the error bound can become quite large, we interpret the error estimate as a refinement indicator.

It should be noted that a functional adaptive evluation of the forward map allows for the derivation of an explicit adaptive Bayesian inversion with functional tensor representations as in [17]. The results of the present work lay the ground for a similar approach with a Gaussian prior assumption. This will be the topic of future research. Moreover, the described approach enables to construct SGFEM with arbitrary densities (approximated in hierarchical tensor formats). This generalization should also be examined in more detail in further research. Lastly, while sparse discretizations seem infeasible for the lognormal coefficient, a transformation [51] yields a convection-diffusion formulation of the problem with affine parameter dependence, which then again is amenable to an adaptive sparse SGFEM. This direction is examined in [14].

The structure of this paper is as follows: We first introduce the setting of parametric PDEs with our model problem in Sect. 2. The variational formulation in problem dependent weighted function spaces and the finite element (FE) setting are described in Sect. 3. The employed tensor formats and the tensor representations of the coefficient and the differential operator are examined in Sect. 4. As a central part, in Sect. 5 we derive the a posteriori error estimators and define a fully adaptive algorithm in Sect. 6, including efficient ways to compute error indicators for physical and stochastic refinement. Numerical examples in Sect. 7 illustrate the performance of the presented method and conclude the paper.

2 Setting and discretization

In the following, we introduce the considered model problem formally, present its weak formulation and describe the employed discretizations in finite dimensional function spaces. We closely follow the presentations in [26, 34, 49] regarding the lognormal problem in problem-dependent function spaces. In [21], a related formulation for the solution and evaluation of a posteriori error estimators for parametric PDEs with affine coefficient fields in hierarchical tensor formats is derived.

2.1 Model problem

We assume some bounded domain \(D \subset {\mathbb {R}}^d\), \(d=1,2,3\), with Lipschitz boundary \(\partial D\) and a probability space \((\varOmega , {\mathcal {F}}, {\mathbb {P}})\). For \({\mathbb {P}}\)-almost all \(\omega \in \varOmega \), we consider the random elliptic problem

The coefficient \(a:D\times \varOmega \mapsto {\mathbb {R}}\) denotes a lognormal, isotropic diffusion coefficient, i.e., \(\log (a)\) is an isotropic Gaussian random field.

Remark 2.1

The source term \(f\in L^2(D)\) is assumed deterministic. However, it would not introduce fundamental additional difficulties to also model f and the boundary conditions as stochastic fields not correlated to the coefficient \(a(x, \omega )\) as long as appropriate integrability of the data is given.

For the coefficient \(a(x,\omega )\) of (2.1), we assume a Karhunen-Loève type expansion of \(b:=\log (a)\) of the form

Here, the parameter vector \(Y = (Y_\ell )_{\ell \in {\mathbb {N}}}\) consists of independent standard normal Gaussian random variables in \({\mathbb {R}}\). Then, by passing to the image space \(({\mathbb {R}}^{\mathbb {N}},{{\mathcal {B}}}({\mathbb {R}}^{\mathbb {N}}),\gamma )\) with the Borel \(\sigma \)-algebra \({{\mathcal {B}}}({\mathbb {R}}^{\mathbb {N}})\) of all open sets of \({\mathbb {R}}^{\mathbb {N}}\) and the Gaussian product measure

we can consider the parameter vector \(y=(y_\ell )_{\ell \in {\mathbb {N}}}=(Y_\ell (\omega ))_{\ell \in {\mathbb {N}}}\), \(\omega \in \varOmega \).

For any sequence \({\varvec{\beta }}\in \ell ^1({\mathbb {N}})\) with

we define the set

The set \(\varGamma _{\varvec{\beta }}\) of admissible parameter vectors is \(\gamma \)-measurable and of full measure.

Lemma 2.2

([34, Lemma 2.1]) For any sequence \({\varvec{\beta }}\in \ell ^1({\mathbb {N}})\), there holds

For any \(y\in \varGamma _{\varvec{\beta }}\), we define the deterministic parametric coefficient

This series converges in \(L^\infty (D)\) for all \(y\in \varGamma _{\varvec{\beta }}\).

Lemma 2.3

([34, Lemma 2.2]) For all \(y\in \varGamma _{\varvec{\beta }}\), the diffusion coefficient (2.2) is well-defined and satisfies

with

Due to Lemmas 2.2 and 2.3 , we consider \(\varGamma =\varGamma _{\varvec{\beta }}\) as the parameter space instead of \({\mathbb {R}}^{\mathbb {N}}\). By Lemma 2.3, the stochastic coefficient a(x, y) is well defined, bounded from above and admits a positive lower bound for almost all \(y\in \varGamma \). Thus, the equations (2.1) and (2.2) have a unique solution \(u(y)\in {{\mathcal {X}}}\) for almost all \(y\in \varGamma \).

Let \({{\mathcal {X}}}:=H^1_0(D)\) denote the closed subspace of functions in the Sobolev space \(H^1(D)\) with vanishing boundary values in the sense of trace and define the norm

We denote by \(\langle \cdot ,\cdot \rangle =\langle \cdot ,\cdot \rangle _{{{\mathcal {X}}}^*,{{\mathcal {X}}}}\) the duality pairing of \({{\mathcal {X}}}^*\) and \({{\mathcal {X}}}\) and consider \(f\in L^2(D)\) as an element of the dual \({{\mathcal {X}}}^*= H^{-1}(D)\).

For any \(y\in \varGamma \), the variational formulation of (2.1) reads: find \(u(y)\in {{\mathcal {X}}}\) such that

where \(B:{{\mathcal {X}}}\times {{\mathcal {X}}}\times \varGamma \rightarrow {\mathbb {R}}\) is defined by

Hence, pathwise existence and uniqueness of the solution u(y) is obtained by the Lax-Milgram lemma due to uniform ellipticity for any fixed \(y\in \varGamma \). In particular, for all \(y\in \varGamma \), (2.3), it holds

with some \(0 < {\check{a}}(y)\le a(x,y)\) on D. The integration of (2.3) over \(\varGamma \) with respect to the standard normal Gaussian measure \(\gamma \) does not lead to a well-defined problem since the coefficient a(x, y) is not uniformly bounded in \(y\in \varGamma \) and not bounded away from zero. Hence, a more involved approach has to be pursued, which is elaborated in Sect. 3.2. Alternative results for this equation were presented in [25, 26, 49].

The formulation of (2.1) as a parametric deterministic elliptic problem with solution \(u(y)\in {{\mathcal {X}}}\) for each parameter \(y\in \varGamma \) reads

3 Variational formulation and discretization

This section is concerned with the introduction of appropriate function spaces required for the discretization of the model problem. In particular, a problem-adapted probability measure is introduced which allows for a well-defined formulation of the weak problem rescaled polynomial chaos basis.

3.1 Problem-adapted function spaces

Let \({{\mathcal {F}}}\) be the set of finitely supported multi-indices

A full tensor index set of order \(M\in {\mathbb {N}}\) is defined by

with complete index sets of size \(d_m\) given by

For any such subset \(\varLambda \subset {{\mathcal {F}}}\), we define \({{\,\mathrm{supp}\,}}\varLambda := \bigcup _{\mu \in \varLambda } {{\,\mathrm{supp}\,}}\mu \subset {\mathbb {N}}\).

We denote by \((H_n)_{n=0}^\infty \) the orthonormal basis of \(L^2({\mathbb {R}}, \gamma _m) = L^2({\mathbb {R}}, \gamma _1)\) with respect to the standard Gaussian measure consisting of Hermite polynomials \(H_n\) of degree \(n\in {\mathbb {N}}_0\) on \({\mathbb {R}}\). An orthogonal basis of \(L^2(\varGamma , \gamma )\) is obtained by tensorization of the univariate polynomials, see [49, 50]. To reduce notation, we drop the explicit dependency on the sigma-algebra which is always assumed to be rich enough. For any multi-index \(\mu \in {{\mathcal {F}}}\), the tensor product polynomial \(H_\mu :=\bigotimes _{m=1}^\infty H_{\mu _m}\) in \(y\in \varGamma \) is expressed as the finite product

For practical computations, an analytic expression for the triple product of Hermite polynomials can be used.

Lemma 3.1

([40, 50]) For \(\mu ,\nu ,\xi \in {{\mathcal {F}}}\), \(m\in {\mathbb {N}}\), it holds

where for \(\eta _m = \nu _m + \mu _m - 2\xi _m\)

Lemma 3.2

([22, 41]) Let \(Y\sim {{\mathcal {N}}}_1\), \(t\in {\mathbb {R}}\) and \(X=\exp (t Y) \in L^2({\mathbb {R}},\gamma )\). The expansion of \(X=\exp (t Y)\) in Hermite polynomials is given by

We recall some results from [49] required in our setting. Let \({\varvec{\sigma }}= (\sigma _m)_{m\in {\mathbb {N}}}\in \exp (\ell ^1({\mathbb {N}}))\) and define

Then, \(\,\mathrm {d}{{{\mathcal {N}}}_{\sigma _m^2}} = \zeta _{{\varvec{\sigma }},m}\,\mathrm {d}{{{\mathcal {N}}}_1}\) where

is the one-dimensional Radon–Nikodym derivative of \(\gamma _{\sigma _m}\) with respect to \(\gamma _m\), i.e., the respective probability density. We assume that the sequence \({\varvec{\sigma }}\) depends exponentially on \({\varvec{\beta }}= (\beta _m)_{m\in {\mathbb {N}}}\) and some \(\varrho \in {\mathbb {R}}\), namely

and define

By multiplication, this yields the multivariate identity

A basis of orthonormal polynomials with respect to the weighted measure \(\gamma _\varrho \) can be defined by the transformation

Then, for all \(v\in L^2(\varGamma ,\gamma )\),

We define the scaled Hermite polynomials \(H^{\tau _\varrho }_\mu := H_\mu \circ \tau _\varrho \).

Remark 3.3

Lemmas 3.1 and 3.2 are also valid with the transformed multivariate Hermite polynomials \(H^{\tau _\varrho }\). In particular, \(\kappa _{\xi _m, \nu _m, \mu _m}\) does not change under transformation and the expansion in Lemma 3.2 holds by substituting \(t\in {\mathbb {R}}\) with \(\sigma _m t\) in the corresponding dimension \(m\in {\mathbb {N}}\).

3.2 Weak formulation in problem-dependent spaces

In order to obtain a well-posed variational formulation of (2.1) on \(L^2(\varGamma ,\gamma ;{{\mathcal {X}}})\), we follow the approach in [49] and introduce a measure \(\gamma _\varrho \) which is stronger than \(\gamma \) and assume integrability of f with respect to this measure. For \(\varrho > 0\) and \(0\le \theta < 1\), let the bilinear form \(B_{\theta \varrho }:{{\mathcal {V}}}_{\theta \varrho }\times {{\mathcal {V}}}_{\theta \varrho }\rightarrow {\mathbb {R}}\) be given by

The solution space is then defined as the Hilbert space

endowed with the inner product \(B_{\theta \varrho }(\cdot ,\cdot )\), the induced energy norm \(\Vert v\Vert _{\theta \varrho }:=B_{\theta \varrho }(v,v)^{1/2}\) for \(v\in {{\mathcal {V}}}_{\theta \varrho }\) and the respective dual pairing \(\langle \cdot ,\cdot \rangle _{\theta \varrho }\) between \({{\mathcal {V}}}_{\theta \varrho }^*\) and \({{\mathcal {V}}}_{\theta \varrho }\). The different employed spaces are related as follows.

Lemma 3.4

([49, Proposition 2.43]) For \(0<\theta <1\),

are continuous embeddings.

It can be shown that the bilinear form \(B_{\theta \varrho }(\cdot ,\cdot )\) is \({{\mathcal {V}}}_{\theta \varrho }\)-elliptic in the sense of the following Lemma.

Lemma 3.5

([49, Lemma 2.41, 2.42]) For \(w,v\in L^2(\varGamma ,\gamma _\varrho ;{{\mathcal {X}}})\),

and for \(v \in L^2(\varGamma ,\gamma ;{{\mathcal {X}}})\),

Moreover, for \(f\in {\mathcal {X}}^*\) we define the continuous linear form

For \(F_{\theta \varrho }\in {{\mathcal {V}}}_{\theta \varrho }^*\), (3.2) and (3.3) in particular lead to the unique solvability of the variational problem in \({{\mathcal {V}}}_{\theta \varrho }\),

and \(u\in {{\mathcal {V}}}_{\theta \varrho }\) is the unique solution of (2.1).

3.3 Deterministic discretization

We discretise the deterministic space \({{\mathcal {X}}}\) by a conforming finite element space \({{\mathcal {X}}}_p({{\mathcal {T}}}) := \mathrm {span} \{\varphi _i\}_{i=1}^N \subset {{\mathcal {X}}}\) of degree p on some simplicial regular mesh \({{\mathcal {T}}}\) of domain D with the set of faces \({{\mathcal {S}}}\) (i.e., edges for \(d=2\)) and basis functions \(\varphi _i\).

In order to circumvent complications due to an inexact approximation of boundary values, we assume that D is a polygon. We denote by \(P_p({{\mathcal {T}}})\) the space of piecewise polynomials of degree p on the triangulation \({{\mathcal {T}}}\). The assumed FE discretization with Lagrange elements of order p then satisfies \({{\mathcal {X}}}_p({{\mathcal {T}}})\subset P_p({{\mathcal {T}}})\cap C(\overline{{{\mathcal {T}}}})\). For any element \(T\in {{\mathcal {T}}}\) and face \(F\in {{\mathcal {S}}}\), we set the entity sizes \(h_T := {{\,\mathrm{diam}\,}}T\) and \(h_F := {{\,\mathrm{diam}\,}}F\). Let \(n_F\) denote the exterior unit normal on any face F. The jump of some \(\chi \in H^1(D;{\mathbb {R}}^d)\) on \(F = \overline{T_1}\cap \overline{T_2}\) in normal direction  is then defined by

is then defined by

By \(\omega _T\) and \(\omega _F\) we denote the element and facet patches defined by the union of all elements which share at least a vertex with T or F, respectively. Consequently, the Clément interpolation operator \(I:{{\mathcal {X}}}\rightarrow {{\mathcal {X}}}_p({{\mathcal {T}}})\) satisfies

where the seminorms \(|\;\cdot \;|_{{{\mathcal {X}}},\omega _T}\) and \(|\;\cdot \;|_{{{\mathcal {X}}},\omega _F}\) are the restrictions of \(\Vert \;\cdot \;\Vert _{{{\mathcal {X}}}}\) to \(\omega _T\) and \(\omega _F\), respectively.

The fully discrete approximation space with \(|\varLambda |<\infty \) is given by

and it holds \({{\mathcal {V}}}_N(\varLambda ;{{\mathcal {T}}},p)\subset {{\mathcal {V}}}_{\theta \varrho }\). The Galerkin projection of u is the unique \(u_N\in {{\mathcal {V}}}_N(\varLambda ;{{\mathcal {T}}},p)\) which satisfies

We define a tensor product interpolation operator \({{\mathcal {I}}}:L^2(\varGamma ,\gamma ;{{\mathcal {X}}}) \rightarrow {{\mathcal {V}}}_N(\varLambda ;{{\mathcal {T}}},p)\) for \(v = \sum _{\mu \in {\mathcal {F}}} v_\mu H_\mu \in L^2(\varGamma ,\gamma ;{{\mathcal {X}}})\) by setting

For \(v \in {{\mathcal {V}}}_{\vartheta \varrho }(\varLambda ) {\; := \bigl \{v = \sum _{\mu \in \varLambda } v_\mu H_\mu \, ; \; v_\mu \in {{\mathcal {X}}}\bigr \}}\) and all \(T \in {{\mathcal {T}}}\), this yields the interpolation estimate

Likewise, on the edges \(F \in {{\mathcal {S}}}\) we derive

Here,

Theorem 3.6

([49, Theorem 2.45]). If \(f\in L^p(\varGamma ,\gamma _\varrho ;{{\mathcal {X}}}^*)\) for \(p>2\), the Galerkin projection \(u_N\in {{\mathcal {V}}}_N\) satisfies

Remark 3.7

It should be noted that the constant \(\frac{{\hat{c}}_{\vartheta \varrho }}{{\check{c}}_{\vartheta \varrho }}\) tends to \(\infty \) as \(\varrho \rightarrow \{0,\infty \}\) and for \(\theta \rightarrow \{0,1\}\), see Remark 2.46 in [49]. This is expected, as the problem is ill-posed in these limits. In order to obtain reasonable upper error bounds, the parameters have hence to be chosen judiciously. A more detailed investigation of an optimal parameter choice is postponed to future research.

4 Decomposition of the operator

In this section, we introduce the discretization of the operator in an appropriate tensor format. For this, an efficient representation of the non-linear coefficient is derived. We first introduce basic aspects of the employed Tensor Train (TT) format.

4.1 The tensor train format

We only provide a brief overview of the notation used regarding the tensor train representation. For further details, we refer the reader to [3, 21] and the references therein.

Any function \(w_N \in {\mathcal {V}}_N(\varLambda ;{\mathcal {T}},p)\) can be written as

Thus, the discretization space is isomorphic to the tensor space, namely

The tensor W grows exponentially with the order M, which constitutes the so called curse of dimensionality. We employ a low-rank decomposition of the tensor for a dimension reduction. In this paper, we adhere to the Tensor Train (TT) format for tensor decomposition [46]. This seems reasonable, as the components (of the operator and hence the solution) are of decreasing importance due to the decay of the coefficient functions \(b_m\) and therefore we can expect decreasing ranks in the tensor train format. Nevertheless, other tensor formats are also feasible in principle.

The TT representation of a tensor \(W \in {\mathbb {R}}^{N \times d_1 \times \cdots \times d_M}\) is given as

For simplicity of notation, we set \(r_0 = r_{M+1} = 1\). If all dimensions \(r_m\) are minimal, then this is called the TT decomposition of W and \(\mathrm {rank}_{\mathrm {TT}}(W) := r = (1,r_1,\ldots ,r_M,1)\) is called the TT rank of W. The TT decomposition always exists and it can be computed in polynomial time using the hierarchical SVD (HSVD) [35]. A truncated HSVD yields a quasi-optimal approximation in the Frobenius norm [27, 29, 39, 46]. Most algebraic operations can be performed efficiently in the TT format [3].

Once the function \(w_N\) has a low-rank TT decomposition, it is advisable to obtain a similar representation for the Galerkin operator on \({\mathcal {V}}_N(\varLambda ;{\mathcal {T}},p)\) in order to allow for efficient tensor solvers. For the lognormal coefficient a(x, y), this can only be done approximately.

Later, it will be useful to express the storage complexity of a tensor train. We distinguish the degrees of freedom given by the tensor train representation and the full (uncompressed) degrees of freedom. For a tensor \(U\in {\mathbb {R}}^{q_0\times \ldots \times q_L}\) of TT-rank \(r=(1, r_1,\ldots ,r_{L},1)\), the dimension of the low-rank tensor manifold is given by

while the dimension of the full tensor space and hence its representation is

One can conclude from (4.1) that the complexity of tensor trains depend only linearly on the dimension, i.e., we have to store

entries instead of \({\mathcal {O}}(\hat{q}^L)\), which is much smaller for moderate TT-ranks r.

4.2 TT representation of the non-linear coefficient

s

We approximate the coefficient

using the coefficient splitting algorithm described in [47]. This results in a discretized coefficient on a tensor set

with TT-rank \(s = (1, s_1,\ldots ,s_L, 1)\). Here, we exploit Lemma 3.2, i.e., the fact that every factor of (4.2) has a Hermite expansion of the form

with

The procedure is as follows: First, we fix an adequate quadrature rule for solving the involved integrals by choosing quadrature points \(\chi _q \in D\) and weights \(w_q \in {\mathbb {R}}\) for \(q = 1,\ldots ,P_{\mathrm {quad}}\). We begin the discretization at the right most side and define the correlation matrix

for \(\nu _L,\nu _L' = 0,\ldots ,q_L-1\). This means that we have truncated the expansion (4.3) according to the tensor set \(\varDelta \), which yields an approximation of the factors. This matrix is symmetric and positive semidefinite and it therefore admits an eigenvalue decomposition

This yields reduced basis functions

for \(k_L = 1,\ldots ,s_L\) that we can store explicitly at the quadrature points of the integral. If we choose \(s_L = q_L\) then this is just a transformation without any reduction.

We proceed successively for \(\ell = L-1,\dots ,1\) by defining correlation matrices

with eigenvalue decompositions

and the resulting reduced basis functions at the quadrature points

see Algorithm 1.

This results in a first component

for \(k_1 = 1,\ldots ,s_1\). Note that on the one hand, it is possible to evaluate this component at any point \(x \in D\) by converting the reduced basis functions back into their original form by means of the tensor components \(A_\ell \) and the coefficient functions \(c_{\nu _\ell }^{(\ell )}\). More specifically,

On the other hand, each original coefficient function is approximated by the reduced basis representation

This approximation is exact if the ranks \(s = (s_1,\ldots ,s_L)\) are full.

By the described procedure we obtain an approximate discretization \(a_{\varDelta ,s} \approx a\) in a TT-like format that is continuous in the first component and that has the decomposition

see Fig. 1.

On \({\mathcal {V}}_N(\varLambda ;{\mathcal {T}},p)\) the linear Galerkin operator \({\mathbf {A}}\) is in the TT matrix or matrix product operator (MPO) format:

where

and for all \(m = 1,\ldots ,M-1\)

and

Since the integral over the triple product \(\kappa _{\mu _m,\mu _m',\nu _m} = 0\) for all \(\nu _m > 2\max (\mu _m,\mu _m')\), it is sufficient to set \(q_\ell = 2d_\ell - 1\) for all \(\ell = 1,\ldots ,L\). If the rank s of the decomposition of the coefficient is full, then the discretised coefficient \(a_{\varDelta ,s}\) is exact on the discrete space\({\mathcal {V}}_N\) (up to quadrature errors).

However, this is generally infeasible as the rank would grow exponentially with M. Therefore, a truncation of the rank becomes necessary and the coefficient is only an approximation. We assume in the following that the error that is due to this approximation of the coefficient is small. A thorough estimation of this error is subject to future research.

Remark 4.1

A similar approach to decomposing the coefficient has been chosen in [23] where the knowledge of the eigenfunctions of the covariance operator was assumed a priori. This means that one has an orthogonal basis also for the deterministic part in x and all that remains to do is to decompose the coefficient tensor for this basis representation. This is also done using some quadrature and the \(L^2\)-error of this approximation can be estimated.

According to the discretization of the coefficient, we introduce the splitting of the operator,

with the active and inactive operators \({\mathcal {A}}_+ : {\mathcal {V}}_N(\varLambda ;{\mathcal {T}},p) \rightarrow {\mathcal {V}}_N(\varLambda ;{\mathcal {T}},p)^*\) and \({\mathcal {A}}_- : {\mathcal {V}}_N(\varLambda ;{\mathcal {T}},p) \rightarrow {\mathcal {V}}_N(\varLambda ;{\mathcal {T}},p)^*\) defined by

for \(v \in {\mathcal {V}}_N(\varLambda ;{\mathcal {T}},p)\).

The above considerations yield that the approximate operator \({\mathcal {A}}_+\) can be represented by the following tensor product structure

with the operator components

and for all \(\ell = 1,\ldots ,L\),

5 Error estimates

This section is concerned with the derivation of a reliable a posteriori error estimator based on the stochastic residual. In comparison to the derivation in [15, 16, 21], the lognormal coefficient requires a more involved approach directly related to the employed weighted function spaces introduced in Sect. 3. In theory, an additional error occurs because of the discretization of the coefficient which we assume to be negligible. The developed adaptive algorithm makes possible a computable a posteriori steering of the error components by a refinement of the FE mesh and the anisotropic Hermite polynomial chaos of the solution. The efficient implementation is due to the formulation in the TT format the ranks of which are also set adaptively.

The definition of the operators as in (4.6) leads to a decomposition of the residual

with

The discrete solution \(w_N \in {\mathcal {V}}_N\) reads

We assume that the operator is given in its approximate semi-discrete form \({\mathcal {A}}_+\) and aim to estimate the energy error

Remark 5.1

As stated before, we assume that the error that results from approximating the coefficient is small. Estimation of this error is subject to future research. Work in this direction has e.g. been carried out in [7, 23]. Additionally, we require that the bounds (3.2) and (3.3) still hold, possibly with different constants \({\hat{c}}_{\vartheta \varrho }^+\) and \({\check{c}}_{\vartheta \varrho }^+\). This is for example guaranteed if \(a_{\varDelta ,s}\) is positive, i.e., if

Then, since the approximated coefficient is polynomial in y, the arguments in Lemma 3.5 yield the same constants

We recall Theorem 5.1 from [15] and also provide the proof for the sake of a complete presentation. Note that the result allows for non-orthogonal approximations \(w_N\in {{\mathcal {V}}}_N\).

Theorem 5.2

Let \({{\mathcal {V}}}_N\subset {{\mathcal {V}}}_{\vartheta \varrho }\) a closed subspace and \(w_N\in {{\mathcal {V}}}_N\), and let \(u_N\) denote the \({{\mathcal {A}}}_+\) Galerkin projection of u onto \({{\mathcal {V}}}_N\). Then it holds

Here, \({\mathcal {I}}\) denotes the Clément interpolation operator in (3.4) and \(c_{{\mathcal {I}}}\) is the operator norm of \({{\,\mathrm{id}\,}}- {\mathcal {I}}\) with respect to the energy norm \(\Vert \cdot \Vert _{{{\mathcal {A}}}_+}\). The constant \({\check{c}}_{\theta \varrho }^+\) is derived from the assumed coercivity of the bilinear form induced by \({{\mathcal {A}}}_+\) similar to (3.2) and (3.3).

Proof

Due to Galerkin orthogonality of \(u_N\), it holds

By the Riesz representation theorem, the first part is

We now utilise the Galerkin orthogonality and introduce the bounded linear map \({{\mathcal {I}}}: {{\mathcal {V}}}_{\theta \varrho } \rightarrow {{\mathcal {V}}}_N\) to obtain

Since we do not have access to the Galerkin solution \(u_N\), we reintroduce \(w_N\)

We apply the coercivity of the operator \({{\mathcal {A}}}_+\) to the denominator, which yields the desired result. For the last inequality, we used the boundedness of \({\mathcal {I}}\) in the energy norm by defining the constant as the operator norm

\(\square \)

Since the product of the Hermite polynomials for each \(m = 1,\ldots ,M\) has degree at most \(q_m + d_m -2\), it is useful to define the index set

Then, the residual can be split into an active and an inactive part by using the tensor sets \(\varXi \) and \(\varLambda \),

with

where \({{\,\mathrm{div}\,}}{{\,\mathrm{res}\,}}(\cdot ,\eta ) \in {\mathcal {X}}^*\) for all \(\eta \in \varXi \).

For all \(\eta \in \varXi \), the function \({{\,\mathrm{res}\,}}\) is given as

with continuous first component

and stochastic components for \(m = 1,\ldots ,M\),

The function \({{\,\mathrm{res}\,}}\) is again a TT tensor with continuous first component with TT ranks \(r_ms_m\) for \(m=1,\ldots ,M\) and \(s_\ell \) for \(\ell = M+1,\ldots ,L\). The physical dimensions are \(d_m + q_m - 2\) for all \(m = 1,\ldots ,M\) and \(d_\ell -1\) for \(\ell = M+1,\ldots ,L\).

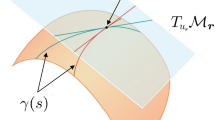

The above considerations suggest that the error can be decomposed into errors that derive from the respective approximations in the deterministic domain, the parametric domain and in the ranks. This is indeed the case, as we will see in the following. In a nutshell, if \(u_N\) is the Galerkin solution in \({{\mathcal {V}}}_N\) and \(u_\varLambda \) is the Galerkin solution in the semi-discrete space \({{\mathcal {V}}}(\varLambda )\), then the deterministic error\({{\,\mathrm{err}\,}}_{\mathrm {det}}= \Vert u_\varLambda - u_N\Vert _{{{\mathcal {A}}}_+}\) corresponds to the error of the active residual\({\mathcal {R}}_{+,\varLambda }\), the parametric error\({{\,\mathrm{err}\,}}_{\mathrm {param}}= \Vert u - u_\varLambda \Vert _{{{\mathcal {A}}}_+}\) corresponds to the inactive residual\({\mathcal {R}}_{+,\varXi \setminus \varLambda }\) and the error made by restricting the ranks is the error in the discrete space \({{\,\mathrm{err}\,}}_{\mathrm {disc}}(w_N) = \Vert u_N - w_N\Vert _{{{\mathcal {A}}}_+}^2\), see Fig. 2 for an illustration.

5.1 Deterministic error estimation

We define the deterministic error estimator

where the flow is given by the residual contributions

This estimates the active residual as follows.

Proposition 5.3

For any \(v \in {{\mathcal {V}}}_{\vartheta \varrho }\) and any \(w_N \in {{\mathcal {V}}}_N\), it holds

Proof

By localization to the elements of the triangulation \({{\mathcal {T}}}\) and integration by parts,

The Cauchy-Schwarz inequality yields

With the interpolation properties (3.4) we obtain

Since the overlaps of the patches \(\omega _T\) and \(\omega _F\) are bounded uniformly, a Cauchy-Schwarz estimate leads to

Here, the constant \(c_{\mathrm {det}}\) depends on the properties of the interpolation operator (3.4). \(\square \)

Remark 5.4

Note that an \(L^2\)-integration of the residual, which is an element of the dual space \({{\mathcal {V}}}_{\vartheta \varrho }^*\), is possible since the solution consists of finite element functions. These are piecewise polynomial and thus smooth on each element \(T \in {\mathcal {T}}\).

5.2 Tail error estimation

The parametric or tail estimator is given by

and bounds the parametric error as follows.

Proposition 5.5

For any \(v \in {{\mathcal {V}}}_{\vartheta \varrho }\) and any \(w_N \in {{\mathcal {V}}}_N\), it holds

Proof

Recall that \(\langle {\mathcal {R}}_{+,\varXi \setminus \varLambda }(w_N) , {\mathcal {I}} v \rangle _{\theta \varrho } = 0\) since \({\mathcal {I}} v \in {{\mathcal {V}}}_N\).

Instead of factorizing out the \(L^\infty \)-norm of the diffusion coefficient as in [15, 16, 21], we use the Cauchy-Schwarz inequality to obtain

\(\square \)

5.3 Algebraic error estimation

In order to define the algebraic error estimator, we need to state the linear basis change operator that translates integrals over two Hermite polynomials in the measure \(\gamma _{\vartheta \varrho }\) to the measure \(\gamma \):

This yields the estimator

Proposition 5.6

For any \(w_N \in {{\mathcal {V}}}_N\) and the Galerkin solution \(u_N \in {{\mathcal {V}}}_N\), it holds

Proof

For \(v_N = \sum _{i=0}^{N-1} \sum _{\mu \in \varLambda } V(i,\mu ) \varphi _i H^{\tau _{\theta \varrho }}_\mu \in {\mathcal {V}}_N\), it holds

With this and using the coercivity of \({{\mathcal {A}}}_+\), we can see that

and thus

\(\square \)

5.4 Overall error estimation

A combination of the above estimates yields an overall error estimator.

Corollary 5.7

For any \(w_N \in {{\mathcal {V}}}_N\), the energy error can be bounded by

with the error estimator given by

Remark 5.8

In order to get suitable measures for the estimators, the squared density \(\zeta _{\vartheta \varrho }^2\) appears, which upon scaling with

again is a Gaussian measure with standard deviation \(\sigma ' = (\sigma _\ell ')_{1\le \ell \le L}\) for

First, this adds a restriction on \(\vartheta \) such that the argument in the square root is positive. This is fulfilled if \(\exp (2 \vartheta \varrho \alpha _1) < 2\), since \((\alpha _m)_{1\le m \le M}\) is a decreasing series, which can be ensured for some \(\vartheta \) small enough. Second, it is important to check whether the new measure is weaker or stronger than \(\gamma _{\vartheta \varrho }\) [49], i.e., which space contains the other. Since

functions that are integrable with respect to the measure \(\gamma _{\vartheta \varrho }\) are not necessarily integrable with respect to the squared measure. However, since f is independent of the parameters and \({\mathcal {A}}_+(w_N) \in {\mathcal {X}}^* \otimes {\mathcal {Y}}(\varXi )\) has a polynomial chaos expansion of finite degree, the residual \({\mathcal {R}}_+(w_N)\) is integrable over the parameters for any Gaussian measure and therefore it is also integrable with respect to the squared measure.

5.5 Efficient computation of the different estimators

The error estimators can be calculated efficiently in the TT format. For each element \(T \in {\mathcal {T}}\) of the triangulation, the residual estimator is given by

A complication of the change of the measure to \(\gamma \) and the involved weight \(\zeta _{\vartheta \varrho }^2\) is the fact that the shifted Hermite polynomials \(H^{\tau _{\theta \varrho }}\) are not orthogonal with respect to this measure. However, this property can be restored easily by calculating the basis change integrals beforehand. This results in another tensor product operator that is defined element-wise for \(\eta ,\eta ' \in \varXi \) by

This operator encodes the basis change to the squared measure and can be inserted in order to calculate the scalar product. With this, the estimator takes the form

Since \(\tilde{{\mathbf {H}}}\) is a tensor product operator, this summation can be done component-wise, i.e., performing a matrix-vector multiplication of every component of the operator \(\tilde{{\mathbf {H}}}\) with the corresponding component of the tensor function r.

Similarly, for the jump over the edge F we obtain the estimator

Analogously to the affine case dealt with in [21], both of these estimators can then be computed efficiently in the TT format. The parametric error estimator \({{\,\mathrm{est}\,}}_{\mathrm {param}}(w_N)\) can be estimated in a similar way.

To gain additional information about the residual influence of certain stochastic dimensions, we sum over specific index sets. Let

where the strike through means that \(\ell \) takes all values but m. For every \(m=1,2,\ldots \), and \(w_N \in {\mathcal {V}}_N\) we define

Using the same arguments and notation as above, we can simplify

These operations, including the calculation of the discrete error estimator (5.1), can be executed efficiently in the TT format.

6 Fully adaptive algorithm

With the derived error estimators of the preceding sections, it is possible to refine all discretization parameters accordingly. As discussed before, the deterministic estimator assesses the error that arises from the finite element method. The discrete error estimator evaluates the error made by a low rank approximation. The rest of the error is estimated by the parametric error estimator.

The adaptive algorithm described in this section is similar to the algorithms presented in [15, 16, 21]. Given some mesh \({\mathcal {T}}\), a fixed polynomial degree p, a finite tensor set \(\varLambda \subset {\mathcal {F}}\), and a start tensor W with TT rank r, we assume that a numerical approximation \(w_N \in {\mathcal {V}}_N\) is obtained by a function

where the superscript \(+\) always denotes the updated object. In our implementation, we used the preconditioned ALS algorithm but other solution algorithms are feasible as well. The lognormal error indicators from Sect. 5 and thus the overall upper bound \({{\,\mathrm{est}\,}}_{\mathrm {all}}(w_N)\) in Corollary 5.7 are computed by the methods

Depending on which error is largest, either the mesh is refined, or the index set \(\varLambda \) is enlarged, or the rank r of the solution is increased. This is done as follows:

-

If the deterministic error \({{\,\mathrm{est}\,}}_{\mathrm {det}}(w_N)\) outweighs the others, we mark the elements \(T \in {\mathcal {T}}\) that have the largest error \({{\,\mathrm{est}\,}}_{\mathrm {det},T}(w_N)\) until the sum of errors on the elements in this marked subset \({\mathcal {T}}_{\mathrm {mark}} \subset {\mathcal {T}}\) exceeds a certain ratio \(0< \vartheta < 1\). This is called the Dörfler marking strategy

$$\begin{aligned} \sum _{T \in {\mathcal {T}}_{\mathrm {mark}}} {{\,\mathrm{est}\,}}_{\mathrm {det},T}(w_N) \ge \vartheta {{\,\mathrm{est}\,}}_{\mathrm {det}}(w_N). \end{aligned}$$We denote this procedure by

$$\begin{aligned} {\mathcal {T}}_{\mathrm {mark}} \leftarrow {{\,\mathrm{Mark}\,}}_x[\vartheta ,({{\,\mathrm{est}\,}}_{\mathrm {det},T}(w_N,\varLambda ))_{T\in {\mathcal {T}}}, {{\,\mathrm{est}\,}}_{\mathrm {det}}(w_N,\varLambda ,{\mathcal {T}})]. \end{aligned}$$The elements in this subset are subsequently refined by

$$\begin{aligned} {\mathcal {T}}^+ \leftarrow {{\,\mathrm{Refine}\,}}_x[{\mathcal {T}}, {\mathcal {T}}_{\mathrm {mark}}]. \end{aligned}$$ -

In case the parametric error \({{\,\mathrm{est}\,}}_{\mathrm {param}}(w_N)\) dominates, we use estimators \({{\,\mathrm{est}\,}}_{\mathrm {param},m}(w_N)\) in order to determine which components need to be refined. Here, we also mark until the Dörfler property is satisfied, that is, we obtain a subset \({\mathcal {I}}_{\mathrm {mark}} \subset {\mathbb {N}}\) such that

$$\begin{aligned} \sum _{m \in {\mathcal {I}}_{\mathrm {mark}}} {{\,\mathrm{est}\,}}_{\mathrm {param},m}(w_N) \ge \vartheta {{\,\mathrm{est}\,}}_{\mathrm {param}}(w_N). \end{aligned}$$This is the marking

$$\begin{aligned} {\mathcal {I}}_{\mathrm {mark}} \leftarrow {{\,\mathrm{Mark}\,}}_y[\vartheta ,({{\,\mathrm{est}\,}}_{\mathrm {param},m}(w_N))_{m\in {\mathbb {N}}}, {{\,\mathrm{est}\,}}_{\mathrm {param}}(w_N)], \end{aligned}$$and we refine by increasing \(d_m^+ \leftarrow d_m + 1\) for \(m \in {\mathcal {I}}_{\mathrm {mark}}\)

$$\begin{aligned} \varLambda ^+ \leftarrow {{\,\mathrm{Refine}\,}}_y[\varLambda ,{\mathcal {I}}_{\mathrm {mark}}]. \end{aligned}$$ -

Finally, if \({{\,\mathrm{est}\,}}_{\mathrm {disc}}(w_N)\) exceeds the other errors, we simply add a random tensor of rank 1 to the solution tensor W. This increases all TT ranks of W by 1 almost surely. It would also be possible to add an approximation of the discrete residual as this is also in TT format. However, since the ALS algorithm will be performed after the refinement, the advantage of this rather costly improvement has shown to be negligible [52]. Thus we get

$$\begin{aligned} W^+ \leftarrow {{\,\mathrm{Refine}\,}}_{\mathrm {TT}}[W]. \end{aligned}$$

A single iteration step of the adaptive algorithm returns either a refined \({\mathcal {T}}^+\) or \(\varLambda ^+\) or the tensor format solution with increased rank \(W^+\) and then solves the problem with these properties. This is done repeatedly until the overall error estimator \({{\,\mathrm{err}\,}}_{\mathrm {all}}(w_N)\) is sufficiently small, i.e., defined error bound \(\epsilon _{\mathrm {TTASGFEM}}\) or a maximum problem size is reached. This procedure is given by the function \(\mathrm {TTASGFEM}\), displayed in Algorithm 2. Note that we omit the dependence of \(w_N\) for the estimators in the algorithm. The upper error bounds directly follow from Corollary 5.7.

7 Numerical experiments

In this section we examine the performance of the proposed adaptive algorithm with some benchmark problems. The computations are carried out with the open source framework ALEA [18]. The FE discretization is based on the open source package FEniCS [24]. The shown experiments are similar to the ones of the predecessor paper [21] in Section 7. As before, the model equation (2.1) is computed for different lognormal coefficients on the square domain. The derived error estimator is used as a refinement indicator. Of particular interest is the observed convergence of the true (sampled) expected energy error and the behaviour of the error indicator. Moreover, we comment on the complexity of the coefficient discretization.

7.1 Evaluation of the error

The accuracy of the computed approximation is determined by a Monte Carlo estimation. For this, a set of \({N_{\mathrm {MC}}}\) independent samples \(\left( y^{(i)}\right) _{i=1}^{N_{\mathrm {MC}}}\) of the stochastic parameter vector is considered. By the tensor structure of the probability measure \(\gamma =\bigotimes _{m\in {\mathbb {N}}} \gamma _1\), each entry of the vector valued sample \(y^{(i)}\) is drawn with respect to \({\mathcal {N}}_1\). The parametric solution \(w_N\in {\mathcal {V}}_N(\varLambda ; {{\mathcal {T}}}, p)\) obtained by the adaptive algorithm at a sample \(y^{(i)}\), is compared to the corresponding (deterministic) sampled solution \(u(y^{(i)})\in {{\mathcal {X}}}_{p'}({{\widetilde{{{\mathcal {T}}}}}})\) on a much finer finite element space subject to a reference triangulation \({{\widetilde{{{\mathcal {T}}}}}}\), obtained from uniform refinements of the finest adaptively created mesh, up to the point where we obtain at least \(10^5\) degrees of freedom with Lagrange elements of order \(p'=3\). For computations, the truncation parameter applied to the infinite sum in (2.2), controlling the length of the affine field representation, is set to \(M=100\). The mean squared error is determined by a Monte Carlo quadrature,

Note that the sample reference quantity u(y) exhibits approximation solely by a finite element method and does not involve the computation of a unified reference solution. A choice of \({N_{\mathrm {MC}}}=250\) proved to be sufficient to obtain a reliable estimate in our experiments.

7.2 The stochastic model problem

In the numerical experiments, we consider the stationary diffusion problem (2.1) on the square domain \(D=(0,1)^2\). As in [15, 16, 19, 21], the expansion coefficients of the stochastic field (2.2) are given by

We chose the scaling \(\gamma =0.9\).

Moreover,

with \(k(m) = \lfloor -1/2 + \sqrt{1/4+2m} \rfloor \), i.e., the coefficient functions \(b_m\) enumerate all planar Fourier sine modes in increasing total order. To illustrate the influence which the stochastic coefficient plays in the adaptive algorithm, we examine the expansion with varying decay, setting \(\sigma \) in (7.1) to different values. The computations are carried out with conforming FE spaces of polynomial degree 1 and 3.

The fully discretized problem is solved in the TT format using the Alternating Least Squares (ALS) algorithm as introduced in [21]. Other algorithms like Riemannian optimization are also feasible [1, 37]. The ALS has a termination threshold of \(10^{-12}\) that needs to be reached in the Frobenius norm of the difference of two successive iteration results.

For our choice of the coefficient field, the introduced weights in (3.1) are set to \(\varrho =1\) and \(\theta =0.1\).

7.3 Tensor train representation of the coefficient

Since the tensor approximation of the coefficient is the starting point for the discretization of the operator, we begin with an examination of the rank dependence of the coefficient approximation scheme given in Algorithm 1. For this, we fix the multi-index set such that the incorporated Hermite polynomials are of degree 15 in each stochastic dimension, i.e.,

As a benchmark, we chose a slow decay with \(\sigma =2\) and set the quadrature ruleFootnote 2 to exactly integrate polynomials of degree 7 with a grid such that the number of quadrature nodes is at least \(P_{\mathrm {quad}} = 10^4\). In the following, the relative root mean squared (RRMS) error

is compared to the growth in degrees of freedom with respect to various tensor ranks. Numerically, this expression is computed by a Monte Carlo approximation as described in Sect. 7.1 with the reference mesh \(\widetilde{{\mathcal {T}}}\).

By denoting \(A=(A_0,A_1,\ldots , A_L)\) the component tensors of \(a_{\varDelta , s}\) in (4.4), where \(A_0[x_k, k_1] = a_0[k_1](x_k)\) corresponds to the evaluation of \(a_0[k_1]\) at every node \(x_k\) of \(\widetilde{{\mathcal {T}}}\), we can apply the notion of tt-dofs (4.1) to the discrete tensor train coefficient. To highlight the crucial choice of the rank parameter \(s=(1, s_1,\ldots , s_L, 1) \) in \(a_{\varDelta , s}\), we let the maximal attainable rank \(s_{\text {max}}\) vary.

It can be seen in Table 1 that a higher rank yields a more accurate approximation of the coefficient, as long as the stochastic expansion L is long enough. For small numbers of L, the RRMS does not decrease any further than \(6\times 10^{-4}\), even when increasing the maximal attainable rank. Otherwise, for up to \(L=100\) terms in the affine coefficient field parametrisation, a small rank parameter of \(s_{\mathrm {max}}=10\) gives a reasonable RRMS error of \(1\times 10^{-3}\) which can be improved by increasing \(s_{\mathrm {max}}\).

It should be pointed out that the used approximation only becomes feasible computationally by the employed tensor format since without low-rank representation the amount of degrees of freedom grows exponentially in der number of expansion dimensions. Furthermore, since the tensor complexity and arithmetic operations depend only linearly on the number of expansion dimensions, the computational time scales linearly as illustrated in the last column of Table 1 showing the average run time of 10 runs.Footnote 3

7.4 Adaptivity in physical space

As a first example for an adaptive discretization, we examine the automatic refinement of the physical FE space only. For this, the stochastic expansion of the coefficient is fixed to some small value \(L=5\) in (4.4), which also is assumed for the corresponding reference solution. The considered polynomial (Hermite chaos) degree of the approximation in each dimension is fixed to \(d_1 = \ldots = d_5 = 10\), which can be considered sufficiently large for the respective problem, given an algebraic decay rate of \(\sigma =2\) in the coefficient.

Although this experiment does not illustrate the performance of the complete algorithm, it nevertheless depicts the varying convergence rates for different polynomial orders in the FE approximation. The rank of the tensor train approximation is fixed to \(r_{\mathrm {max}}=10\), which is sufficient due to the low stochastic dimensions.

Relative sampled mean squared \(H^1(D)\) error for fixed stochastic dimension \(L=5\) and adaptive refinement of the physical mesh. Each setting is shown along its respective overall error estimator as defined in Corollary 5.7 and plotted against the total number of degrees of freedom in the TT representation. Considered are FE approximations of order \(p=1\) and \(p=3\)

It can be observed in Fig. 3 that the error estimator follows the rate of convergence of the sampled error. Moreover, the higher-order FE method exhibits a higher convergence rate as expected. This could also be observed in the (much simpler) affine case scrutinized in the preceding works [15, 16, 19, 21].

7.5 Fully adaptive algorithm

The fully adaptive algorithm described in Algorithm 2 is instantiated with a small initial tensor with full tensor rank \(r_1=2\) consisting of a single \(M=1\) stochastic component discretized with a linear polynomial \(d_1=2\) and a physical mesh with \(|{{\mathcal {T}}}|=32\) elements for linear ansatz functions \(p=1\) and \(|{{\mathcal {T}}}|=8\) for \(p=3\). The marking parameter is set to \(\vartheta =0.5\).

Relative, sampled, mean squared \(H^1(D)\) error for the fully adaptive refinement. Each setting is shown along its respective overall error estimator as defined in Corollary 5.7 and plotted against the total number of degrees of freedom in the representation. Considered are finite elements approximation of order \(p=1\) and \(p=3\) and slow decay (\(\sigma =2\), left) and fast decay (\(\sigma =4\), right)

Figure 4 depicts the real (sampled) mean squared energy error and the corresponding overall error estimator for FE discretizations of degrees \(p=1,3\). On the left-hand side of the figure, a lower decay rate (\(\sigma =2\)) in the coefficient representation is used, resulting in more stochastic modes to be relevant for an accurate approximation. The right-hand side shows results for a faster decay rate with \(\sigma =4\). As expected, the convergence rate for the \(p=3\) FE discretizations is faster than with \(p=1\) FE. Moreover, for the simpler problem with fast decay, we achieve a smaller error with a comparable number of degrees of freedom than in the harder slow decay setting.

Remark 7.1

Note that we neglect the (large) factor \({\check{c}}^+_{\theta \varrho }\) in Corollary 5.7, which depends on the choice of weights \(\theta \) and \(\varrho \) of the discrete space. A detailed analysis of how to optimally select these weights is outside the scope of this article.

We conclude the numerical observations with Table 2 and 3 to display the behaviour of the fully adaptive algorithm. To allow for more insights, we redefine the physical mesh resolution as m-dofs(\(W_N\)), which is the number of FE degrees of freedom for the respective parametric solution \(W_N\). Moreover, we define the number of degrees of freedom incorporated in the operator (4.5) generated to obtain the current solution. For a tensor train operator of dimension \({\mathbf {A}}\in {\mathbb {R}}^{(N\times N)\times (q_1 \times q_1) \times \ldots \times (q_L\times q_L)}\) and rank \(s=(s_1, \ldots , s_L)\), we define

Note that, using a sparse representation of the operator in the first (physical) component, it is possible to reduce number of op-dofs.

Tables 2 and 3 highlight some iteration steps and the employed stochastic expansion length M, the maximal polynomial degree \(d^{\text {max}}\) (which was usually naturally attained in the first stochastic component), the maximal tensor train rank \(r^{\text {max}}\) (again most of the time this rank is the separation rank of the physical space and the first stochastic dimension) and the corresponding degrees of freedom. Notably, for the fast coefficient decay \(\sigma =4\), the stochastic expansion is very short since most of the randomness is due to the first few stochastic parameters. It is reasonable that the respective parameter dimensions require higher polynomial degrees in the approximation. For the more involved slow decay \(\sigma =2\) setting we observe a larger stochastic expansion of the solution and larger tensor ranks.

Notes

We usually use SGFEM for Stochastic Galerkin FEM

The statements are with regard to the respective FE discretization in FEniCS.

Run on an 8GB Intel Core i7-6500 laptop.

References

Absil, P.-A., Mahony, R., Sepulchre, R.: Optimization Algorithms on Matrix Manifolds. Princeton University Press, Princeton (2008)

Babuška, I., Nobile, F., Tempone, R.: A stochastic collocation method for elliptic partial differential equations with random input data. SIAM J. Numer. Anal. 45(3), 1005–1034 (2007)

Bachmayr, M., Schneider, R., Uschmajew, A.: Tensor networks and hierarchical tensors for the solution of high-dimensional partial differential equations. Found. Comput. Math. 16(6), 1423–1472 (2016)

Bespalov, A., Powell, C.E., Silvester, D.: Energy norm a posteriori error estimation for parametric operator equations. SIAM J. Sci. Comput. 36(2), A339–A363 (2014)

Bespalov, A., Silvester, D.: Efficient adaptive stochastic Galerkin methods for parametric operator equations. SIAM J. Sci. Comput. 38(4), A2118–A2140 (2016)

Bryant, C.M., Prudhomme, S., Wildey, T.: Error decomposition and adaptivity for response surface approximations from pdes with parametric uncertainty. SIAM/ASA J. Uncertain. Quant. 3(1), 1020–1045 (2015)

Charrier, J.: Strong and weak error estimates for elliptic partial differential equations with random coefficients. SIAM J. Numer. Anal. 50(1), 216–246 (2012)

Cohen, A., DeVore, R., Schwab, C.: Convergence rates of best \(N\)-term Galerkin approximations for a class of elliptic sPDEs. Found. Comput. Math. 10(6), 615–646 (2010)

Cohen, A., Devore, R., Schwab, C.: Analytic regularity and polynomial approximation of parametric and stochastic elliptic PDE’s. Anal. Appl. (Singapore) 9(1), 11–47 (2011)

Crowder, A.J., Powell, C.E., Bespalov, A.: Efficient adaptive multilevel stochastic Galerkin approximation using implicit a posteriori error estimation. arXiv preprint arXiv:1806.05987 (2018)

Detommaso, G., Dodwell, T., Scheichl, R.: Continuous level Monte Carlo and sample-adaptive model hierarchies. arXiv preprint arXiv:1802.07539 (2018)

Dolgov, S., Khoromskij, B.N., Litvinenko, A., Matthies, H.G.: Polynomial chaos expansion of random coefficients and the solution of stochastic partial differential equations in the tensor train format. SIAM/ASA J. Uncertain. Quant. 3(1), 1109–1135 (2015)

Dolgov, S., Scheichl, R.: A hybrid alternating least squares–tt cross algorithm for parametric pdes. arXiv preprint arXiv:1707.04562 (2017)

Eigel, M., Martin, G., Claude, J.: Adaptive stochastic Galerkin FEM for a log-transformed PDE with non-affine unbounded random coefficients (2018). (in preparation)

Eigel, M., Gittelson, C.J., Schwab, C., Zander, E.: Adaptive stochastic Galerkin FEM. Comput. Methods Appl. Mech. Eng. 270, 247–269 (2014)

Eigel, M., Gittelson, C.J., Schwab, C., Zander, E.: A convergent adaptive stochastic Galerkin finite element method with quasi-optimal spatial meshes. ESAIM Math. Model. Numer. Anal. 49(5), 1367–1398 (2015)

Eigel, M., Marschall, M., Schneider, R.: Sampling-free bayesian inversion with adaptive hierarchical tensor representations. Inverse Prob. 34(3), 035010 (2018)

Eigel, M., Marschall, M., Zander, E.: alea—A python framework for spectral methods and low-rank approximations in uncertainty quantification. https://bitbucket.org/aleadev/alea

Eigel, M., Merdon, C.: Local equilibration error estimators for guaranteed error control in adaptive stochastic higher-order Galerkin finite element methods. SIAM/ASA J. Uncertain. Quant. 4(1), 1372–1397 (2016)

Eigel, M., Merdon, C., Neumann, J.: An adaptive multilevel monte carlo method with stochastic bounds for quantities of interest with uncertain data. SIAM/ASA J. Uncertain. Quant. 4(1), 1219–1245 (2016)

Eigel, M., Pfeffer, M., Schneider, R.: Adaptive stochastic Galerkin FEM with hierarchical tensor representations. Numerische Mathematik 136(3), 765–803 (2017)

Ernst, O.G., Mugler, A., Starkloff, H.-J., Ullmann, E.: On the convergence of generalized polynomial chaos expansions. ESAIM Math. Model. Numer. Anal. 46(2), 317–339 (2012)

Espig, M., Hackbusch, W., Litvinenko, A., Matthies, H.G., Wähnert, P.: Efficient low-rank approximation of the stochastic Galerkin matrix in tensor formats. Comput. Math. Appl. 67(4), 818–829 (2014)

FEniCS project—automated solution of differential equations by the finite element method. http://fenicsproject.org

Galvis, J., Sarkis, M.: Approximating infinity-dimensional stochastic Darcy’s equations without uniform ellipticity. SIAM J. Numer. Anal. 47(5), 3624–3651 (2009)

Gittelson, C.J.: Stochastic Galerkin discretization of the log-normal isotropic diffusion problem. Math. Models Methods Appl. Sci. 20(2), 237–263 (2010)

Grasedyck, L.: Hierarchical singular value decomposition of tensors. SIAM J. Matrix Anal. Appl. 31(4):2029–2054 (2009/10)

Guignard, D., Nobile, F.: A posteriori error estimation for the stochastic collocation finite element method. Technical report (2017)

Hackbusch, W., Schneider, R.: Tensor spaces and hierarchical tensor representations. In: Extraction of Quantifiable Information from Complex Systems, volume 102 of Lecture Notes in Computer Science Engineering, pp. 237–261. Springer, Cham (2014)

Harbrecht, H., Peters, M., Siebenmorgen, M.: Multilevel accelerated quadrature for pdes with log-normally distributed diffusion coefficient. SIAM/ASA J. Uncertain. Quant. 4(1), 520–551 (2016)

Harbrecht, H., Peters, M., Siebenmorgen, M.: On the quasi-monte carlo method with halton points for elliptic pdes with log-normal diffusion. Math. Comput. 86(304), 771–797 (2017)

Herrmann, L., Schwab, C.: QMC algorithms with product weights for lognormal-parametric, elliptic pdes (2017)

Herrmann, L., Schwab, C.: Multilevel quasi-monte carlo integration with product weights for elliptic pdes with lognormal coefficients. Techncial Report 2017–2019, Seminar for Applied Mathematics. ETH Zürich, Switzerland (2017)

Hoang, V.H., Schwab, C.: \(N\)-term Wiener chaos approximation rate for elliptic PDEs with lognormal Gaussian random inputs. Math. Models Methods Appl. Sci. 24(4), 797–826 (2014)

Holtz, S., Rohwedder, T., Schneider, R.: On manifolds of tensors of fixed TT-rank. Numer. Math. 120(4), 701–731 (2012)

Kazashi, Y.: Quasi-monte carlo integration with product weights for elliptic pdes with log-normal coefficients. arXiv preprint arXiv:1701.05974 (2017)

Kressner, D., Steinlechner, M., Vandereycken, B.: Low-rank tensor completion by Riemannian optimization. BIT 54(2), 447–468 (2014)

Kuo, F., Scheichl, R., Schwab, C., Sloan, I., Ullmann, E.: Multilevel quasi-monte carlo methods for lognormal diffusion problems. Math. Comput. 86(308), 2827–2860 (2017)

Loève, M.: Probability theory. II. Graduate Texts in Mathematics, vol. 46, 4th edn. Springer, New York (1978)

Malliavin, P.: Stochastic Analysis, vol. 313. Springer, Berlin (2015)

Mugler, A.: Verallgemeinertes polynomielles Chaos zur Lösung stationärer Diffusionsprobleme mit zufälligen Koeffizienten. PhD thesis, BTU Cottbus (2013)

Mugler, A., Starkloff, H.-J.: On the convergence of the stochastic Galerkin method for random elliptic partial differential equations. ESAIM Math. Model. Numer. Anal. 47(5), 1237–1263 (2013)

Nobile, F., Tempone, R., Webster, C.G.: An anisotropic sparse grid stochastic collocation method for partial differential equations with random input data. SIAM J. Numer. Anal. 46(5), 2411–2442 (2008)

Nobile, F., Tempone, R., Webster, C.G.: A sparse grid stochastic collocation method for partial differential equations with random input data. SIAM J. Numer. Anal. 46(5), 2309–2345 (2008)

Nouy, A.: Low-rank methods for high-dimensional approximation and model order reduction. In: Benner, P., Cohen, A., Ohlberger, M., Willcox, K. (eds.) Model reduction and approximation, pp. 171–226. SIAM, Philadelphia, PA (2017)

Oseledets, I.V., Tyrtyshnikov, E.E.: Breaking the curse of dimensionality, or how to use SVD in many dimensions. SIAM J. Sci. Comput. 31(5), 3744–3759 (2009)

Pfeffer, M.: Tensor methods for the numerical solution of high-dimensional parametric partial differential equations. PhD thesis, TU Berlin Dissertation No. 19533, Technische Universität Berlin (2018)

Prudhomme, S., Bryant, C.M.: Adaptive surrogate modeling for response surface approximations with application to bayesian inference. Adv. Model. Simul. Eng. Sci. 2(1), 22 (2015)

Schwab, C., Gittelson, C.J.: Sparse tensor discretizations of high-dimensional parametric and stochastic PDEs. Acta Numer. 20, 291–467 (2011)

Ullmann, E.: Solution strategies for stochastic finite element discretizations. PhD thesis, TU Bergakademie Freiberg (2008)

Ullmann, E., Elman, H.C., Ernst, O.G.: Efficient iterative solvers for stochastic Galerkin discretizations of log-transformed random diffusion problems. SIAM J. Sci. Comput. 34(2), A659–A682 (2012)

Uschmajew, A., Vandereycken, B.: Line-search methods and rank increase on low-rank matrix varieties. In: NOLTA2014, Luzern, Switzerland, September 14–18, 2014, pp. 52–55. IEICE, Tokyo (2014)

Acknowledgements

Open Access funding provided by Projekt DEAL. M.P. and R.S. were supported by the DFG project ERA Chemistry; additionally R.S. was supported through Matheon by the Einstein Foundation Berlin.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Eigel, M., Marschall, M., Pfeffer, M. et al. Adaptive stochastic Galerkin FEM for lognormal coefficients in hierarchical tensor representations. Numer. Math. 145, 655–692 (2020). https://doi.org/10.1007/s00211-020-01123-1

Received:

Revised:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00211-020-01123-1