Abstract

The Intergovernmental Panel on Climate Change (IPCC) currently communicates uncertainty using a lexicon that features four negative verbal probabilities to convey extremely low to medium probabilities (e.g. unlikely). We compare a positive probability lexicon with the IPCC lexicon in a series of psychology experiments. We find that although the positive and negative lexicons convey a similar level of probability, the positive lexicon directs more attention towards the outcome occurrence and encourages more cautious decisions: in our role-playing experiment, it reduced the number of type 2 errors, i.e. failures to make needed precautionary interventions. Whilst participants considered the negative lexicon more useful in making a decision, they trusted the positive lexicon more and blamed information providers less after making an incorrect decision. Our results suggest that the negative verbal framing of probabilities used by the IPCC is not neutral and has implications for how climate information is interpreted by decision-makers.


1 Introduction

Despite people now mostly believing that climate change is occurring (Juanchich and Sirota 2017), climate change is not a primary concern of policy-makers and citizens (Hornsey et al. 2016). People’s beliefs do not necessarily translate into support for climate change mitigation policies or changes in behaviour that would reduce CO2 emissions (Hornsey et al. 2016; Leiserowitz 2006; Maibach et al. 2015).

A recognised strategy for how to increase climate change engagement is to better inform policy makers and the public (van der Linden et al. 2015). Because climate change is inherently uncertain (Capstick and Pidgeon 2014), it is important to learn how to best communicate probabilities of climate change events (Budescu et al. 2012; Budescu et al. 2014; Patt and Dessai 2005; Smithson et al. 2012; Sterman 2011). The current IPCC uncertainty communication guidelines feature a list of seven verbal probabilities (left column of Table 1), which are used to quantify the likelihood of occurrence of climate change outcomes.

Past work has focused on the shortcomings of communicating technical probabilistic information through those verbal probabilities. First, the numerical meaning of verbal probabilities is vague. When people are asked what a verbal probability means on the 0–100% numerical probability axis, their answers vary widely. For example, some people believe that an “unlikely” event has a 5% chance of happening, whereas others believe that it has a 50% chance of occurring (Budescu and Wallsten 1995; Harris et al. 2013). This vagueness makes the numerical interpretation of verbal probabilities vulnerable to the influence of people’s attitudes (Budescu et al. 2012; Budescu et al. 2014) and to contextual changes (Harris and Corner 2011; Patt and Schrag 2003). The second shortcoming with verbal probabilities is that they often do not convey the probability intended by the communicator (e.g. “very unlikely” conveys on average a higher probability than the IPCC prescribed 10%) (Budescu et al. 2012; Budescu et al. 2014). The provision of numerical probability translation together with the verbal lexicon (e.g. “It is very unlikely” (0–10%)…) reduces vagueness and mitigates the lack of consistency with expected numerical meaning (Budescu et al. 2012; Budescu et al. 2014).

A remaining issue with the current lexicon, however, is that the verbal probabilities prescribed to communicate probabilities ranging from 0 to 66% feature negations. This would be inconsequential if negations did not have any psychological consequences. However, negative verbal probabilities direct attention towards the non-occurrence of the predicted outcome and hence elicit thoughts compatible with evidence against the outcome occurrence (Juanchich et al. 2010b; Teigen and Brun 1995; Teigen et al. 2013). Negative verbal probabilities also generate greater inter-individual variability in interpretation (Smithson et al. 2012), hint to the recipient not to take action (Hilton 2008; Teigen and Brun 1999) and reduce trust and increase blame (Teigen and Brun 2003). As with negations in general, negative verbal probabilities are not the “normal” way to communicate uncertainty, as they mark a departure from the normal course of events (Hasson and Glucksberg 2006; Horn 1989), e.g. a fact that is denied, a trend that goes downward and a disagreement (Juanchich et al. 2010a). In contrast, positive verbal probabilities are the norm and are more commonly used (Brun and Teigen 1988; Juanchich et al. 2010b). Building on past research, we argue that the use of negative verbal probabilities in the IPCC reports goes against the mission of the IPCC to provide an assessment that is free from bias and does not nudge people in particular directions.

The consequences of negations in verbal probabilities, however, depend on the outcome they qualify (e.g. climate change occurrence or non-occurrence), so their effect must be examined in relation to that outcome. Using negative verbal probabilities to describe climate change occurrence or consequences (e.g. sea level rise, temperature increase) will steer attention away from climate change occurrence and thus from mitigating actions. On the other hand, using negative verbal probabilities to describe the non-occurrence of climate change (e.g. sea level not rising, temperature being stable) will steer attention back towards climate change actually occurring. For example, in the statement “Warming is unlikely to exceed 4°C”, readers’ attention is directed to the fact that the temperature will not exceed 4°C, whereas the sentence “It is unlikely that the global mean sea level will stop rising” directs attention towards the sea level actually rising. Since the purpose of the IPCC is to report on climate change, we can assume that most uncertainty statements relate to climate change occurrence and hence that negative terms will steer attention away from it.

Currently, writers have only one way to avoid using negative verbal probabilities for probabilities below 66%: focusing on the probability of the alternative event instead. Although there is a growing demand for the IPCC to focus on low probability outcomes, especially high impact ones (Sutton 2019), writers could, for instance, reframe a low probability temperature increase as a high probability of the temperature not increasing to that extent. There are, however, a few issues with this option. First, it is not possible for one of the four categories that feature negative verbal probabilities: the 33 to 66% probability range, for which the recommended term is currently “as likely as not”, cannot be reframed, so reframing is only possible for the 0–33% probability range. Second, reframing the focal event may create some confusion when it entails the use of negations (e.g. “will not rise”), which are notoriously difficult to process (Horn 1989). Finally, and most importantly, recasting a low probability of a climate change event (e.g. an extreme temperature rise, an extreme increase in heavy precipitation) as a high probability of the opposite event would have psychological consequences akin to those of a negative verbal probability, as it would again direct attention to the outcome non-occurrence (Levin and Gaeth 1988; Smith and Jucker 2014). In this case, therefore, even positive verbal probabilities would still steer attention away from climate change occurrence and from mitigating actions.

Here we aim to show that the uncertainty language recommended by the IPCC to describe climate change is not neutral because negative verbal probabilities direct people’s attention away from the outcome occurrence and discourage precautionary decisions. In our studies, we assessed the effect of negative verbal probabilities on climate change perception by comparing them to positive verbal probabilities. To do so, we developed three positive alternative lexicons to the negative lexicon used by the IPCC (right columns of Table 1). We hypothesised that the positive lexicons would direct more attention to the possibility that the predicted outcome would occur, convey similar probabilities, and elicit more cautious decisions and more trust.

2 Experiments 1a and 1b: negative verbal probabilities direct attention away from the outcome occurrence

Verbal probabilities are believed to be directional in the sense that they direct the attention of recipients towards a specific outcome: positive verbal probabilities direct attention towards the focal outcome occurrence (the outcome described in the prediction), whereas negative verbal probabilities direct attention towards the outcome non-occurrence or an alternative outcome (Teigen 1988; Teigen and Brun 1995). This directionality is measured through appropriateness rating tasks in which participants evaluate how well a prediction fits with evidence in favour of, or against, the outcome occurrence. Typically, people feel that negative phrases are more appropriate (i.e. fit better) with evidence against the outcome occurrence than with evidence in favour of it and that positive phrases are more appropriate with favourable evidence than with evidence against (Teigen and Brun 1995). Therefore, we would expect that a prediction featuring the original negative lexicon would seem more appropriate with reasons against the occurrence of the focal outcome than with reasons in favour of it, whereas the alternative lexicon would be perceived as more appropriate with reasons in favour of it. We first compared the effect of the lexicons in a neutral context (i.e. whether someone will pass a school test) to avoid the interference of personal climate change belief, and we then replicated the study in a natural disaster context made more likely by climate change.

2.1 Method

Participants study 1a:

A sample of 100 Amazon Mechanical Turk workers took part in the online survey (55 males, age range 19–65, M = 33.72, SD = 10.30 years). Most of the participants were white/Caucasian (78%), the remainder were African-American (10%) and other ethnicity (12%). Most of them had at least a college degree (65%), whereas 34% had a high school education/General Educational Development (GED). Most of the participants were employed (67%), 15% unemployed, 1% retired and 17% others. Based on a sensitivity analysis for ANOVA with two groups of participants and five repeated measures, given α = 5% and 1 − β = 80% and assuming a correlation of .50 between the measures, this sample would allow us to detect a small-to-medium effect size (Cohen’s f = 0.16).

Participants study 1b:

A sample of 100 Amazon Mechanical Turk workers took part in the online survey (63% male, age range 19–70, M = 36.54, SD = 11.22 years). Most of the participants were white/Caucasian (77%), the remainder were African-American (12%) and other ethnicity (11%). Most of them had at least a college degree (72%); 28% had high school degrees or GED. Most of the participants were employed (78%), 10% unemployed, 2% retired and 10% other. Based on the same sensitivity analysis as above, this sample would allow us to detect a small-to-medium effect size (Cohen’s f = 0.16).

Materials and procedure

The methodology used to measure which outcome participants paid attention to was the same in the two studies, but the context was different (school test and landslide) and study 1b relies on a simpler design.

Materials and procedure: study 1a

The study focused on 16 target phrases and 3 filler phrases. The target phrases include the IPCC uncertainty lexicon for extremely low to medium probabilities and three positive alternative lexicons as shown in Table 1. Participants evaluated the appropriateness of each verbal probability prediction when it was associated with evidence in favour of or against the outcome it qualified (Juanchich et al. 2013; Teigen and Brun 1999) on a 5-point Likert scale ranging from 1: Not at all to 5: Completely. The appropriateness-rating task was repeated for the negative lexicon of the IPCC and the three positive alternatives shown in Table 1. The example below shows the task with the negative verbal probability “it is unlikely” and its positive counterpart “there is a small likelihood”.

- Appropriateness-rating task with negative lexicon:
  - Evidence in favour of the outcome occurrence: “It is unlikely Anna will pass her test because she spent a lot of time studying”.
  - Evidence against the outcome occurrence: “It is unlikely Anna will pass her test because she did not attend her classes”.
- Appropriateness-rating task with positive lexicon:
  - Evidence in favour of the outcome occurrence: “There is a small likelihood that Anna will pass her test because she spent a lot of time studying”.
  - Evidence against the outcome occurrence: “There is a small likelihood that Anna will pass her test because she did not attend her classes”.

Subsequently, participants answered a series of questions measuring their environmental values (Dunlap et al. 2000), belief in climate change (Heath and Gifford 2006) and scepticism about existing evidence of climate change (Capstick and Pidgeon 2014). The data from these questions are not reported in this manuscript. The materials and the data are available on the Open Science Framework (https://osf.io/5efxt/?view_only=91a1d43bce044ad4b221513cabe78451).

Materials and procedure: study 1b

We compared the effect of the lexicon in a 2 lexicon (IPCC original vs. alternative positive) × 4 probability range (extremely low, very low, low and medium) design. Participants rated the appropriateness of eight predictions, which were completed with evidence either in favour of or against the outcome occurrence (as shown below).

- Appropriateness-rating task with negative lexicon:
  - Evidence in favour of the outcome occurrence: “It is unlikely that there will be a landslide, because the slope is very steep”.
  - Evidence against the outcome occurrence: “It is unlikely that there will be a landslide, because the vegetation holds the ground”.
- Appropriateness-rating task with positive lexicon:
  - Evidence in favour of the outcome occurrence: “There is a small likelihood that there will be a landslide, because the slope is very steep”.
  - Evidence against the outcome occurrence: “There is a small likelihood that there will be a landslide, because the vegetation holds the ground”.

Participants then reported only their socio-demographic characteristics.

2.2 Results

As shown in Fig. 1, the IPCC negative lexicon was judged more appropriate than the positive lexicon when matched with evidence against the outcome occurrence and less appropriate when matched with evidence in favour of the outcome occurrence. This was the case whether the prediction focused on passing an exam or the occurrence of a landslide.

Fig. 1. Across probability ranges, the IPCC lexicon (red round markers) was perceived to be more appropriate than the alternative positive lexicons (blue triangle markers) when associated with evidence against the outcome occurrence (left panel) and less appropriate when associated with evidence in favour of the outcome occurrence (right panel). The error bars show the 95% CI (N = 100 in experiment 1a and N = 100 in experiment 1b). Note: Experiment 1a focused on three alternative positive lexicons (probability, chance or likelihood), whereas experiment 1b included only the probability terminology.

We evaluated whether the predictions directed attention more towards the outcome occurrence or the outcome non-occurrence by subtracting the appropriateness ratings of predictions associated with evidence against the outcome occurrence from the appropriateness ratings of the same predictions associated with evidence in favour (Honda and Yamagishi 2006). Negative scores indicate that attention focuses more on the outcome non-occurrence, with more negative scores indicating a stronger focus on that possibility; conversely, positive scores indicate that attention focuses more on the outcome occurrence, with higher scores indicating a stronger focus on that possibility.
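The outcome focus measure is a simple difference score. The minimal Python sketch below assumes ratings on the 1–5 appropriateness scale used in the task; the ratings are hypothetical illustrations, not data from the experiments, and the helper names are ours.

```python
from statistics import mean

def outcome_focus(rating_favour: int, rating_against: int) -> int:
    """Appropriateness with evidence in favour of the outcome minus
    appropriateness with evidence against it (both on a 1-5 scale).

    Negative scores: focus on outcome non-occurrence; positive scores:
    focus on occurrence. The possible range is -4 to +4.
    """
    for rating in (rating_favour, rating_against):
        if not 1 <= rating <= 5:
            raise ValueError("appropriateness ratings must lie between 1 and 5")
    return rating_favour - rating_against

def describe(score: float) -> str:
    """Translate an (average) outcome focus score into words."""
    if score < 0:
        return "focus on non-occurrence"
    if score > 0:
        return "focus on occurrence"
    return "neutral focus"

# Hypothetical (favour, against) ratings of an "It is unlikely..." prediction
# from three participants.
ratings = [(2, 4), (1, 5), (3, 3)]
scores = [outcome_focus(favour, against) for favour, against in ratings]
average = mean(scores)
print(scores, average, describe(average))
```

Averaging the per-participant scores, as here, is what allows the lexicons to be compared on a single outcome focus axis.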

As shown in Fig. 2, this outcome focus measure shows that the lexicons generated more focus on the non-occurrence of the target outcome for extremely low, very low and low probability predictions but that this focus was stronger for the negative lexicon than for the positive lexicons. For the medium probability predictions, the negative lexicon had a neutral focus whilst the positive alternatives focused attention towards the possibility that the outcome would occur.

A within-subject analysis of variance (with lexicon and probability as within-subject factors) confirmed these effects for both the exam and the landslide studies, F(3, 297) = 33.23, p < .001, ηp² = .25 and F(1, 99) = 21.34, p < .001, ηp² = .18, respectively. For the exam study, we compared the negative lexicon to the three positive alternative lexicons using pairwise comparisons with Bonferroni adjustment. The negative lexicon elicited a greater focus on the outcome non-occurrence than each of the positive lexicons used in the exam study (probability, chance and likelihood), M Diff = −0.92, 95% CI [−1.24, −0.60], p < .001; M Diff = −0.71, 95% CI [−1.02, −0.40], p < .001; and M Diff = −0.89, 95% CI [−1.20, −0.57], p < .001. The three positive lexicons elicited a similar pattern (as shown by the clusters of blue triangles in the left panel of Fig. 2): the specific wording of the positive lexicon (probability, chance or likelihood) was not instrumental in determining outcome focus, and the pairwise comparisons of the three positive lexicons showed no statistically significant differences (p > .05). There was also a clear dependence on the probability magnitude expressed, with higher probabilities creating more focus on the outcome occurrence (as would be expected), and an interaction between lexicon and probability, F(3, 297) = 157.91, p < .001, ηp² = .62 and F(9, 891) = 3.39, p < .001, ηp² = .03, respectively. We probed this interaction further by conducting separate analyses of variance for the four probability magnitudes and found that the lexicon had an effect for the very low, low and medium probabilities but not for the extremely low ones, F(3, 297) = 6.93, p < .001, ηp² = .07; F(3, 297) = 20.89, p < .001, ηp² = .17; F(3, 297) = 16.42, p < .001, ηp² = .14; and F(3, 297) = 2.11, p = .099, ηp² = .02, respectively.

Fig. 2. The IPCC lexicon (red round markers) focused participants’ attention towards the outcome non-occurrence more than the three positive alternative lexicons (blue triangle markers) for the extremely low, very low, low and medium probability ranges. Markers show the means and the error bars show the 95% CI (N = 100 in experiment 1a and N = 100 in experiment 1b).
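The Bonferroni adjustment used for these pairwise comparisons multiplies each p-value by the number of comparisons in the family and caps the result at 1, which is equivalent to testing each comparison at α divided by that number. The sketch below assumes, for illustration only, that the family comprises all six pairwise comparisons among the four lexicons of the exam study; the raw p-values are hypothetical, not values from the study.

```python
from itertools import combinations

LEXICONS = ["negative (IPCC)", "probability", "chance", "likelihood"]

def bonferroni(p_values):
    """Bonferroni-adjusted p-values: p * m, capped at 1, for m comparisons."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]

# All six unordered pairs: the first three compare the negative lexicon with
# each positive one, the last three compare the positive lexicons with each other.
pairs = list(combinations(LEXICONS, 2))

# Hypothetical unadjusted p-values, one per pair, in the same order as `pairs`.
raw_p = [0.0001, 0.0004, 0.0002, 0.20, 0.60, 0.45]
adjusted = bonferroni(raw_p)

for (first, second), p in zip(pairs, adjusted):
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"{first} vs {second}: adjusted p = {p:.4f} ({verdict})")
```

Capping at 1 keeps the adjusted values interpretable as probabilities; the decision against α = .05 is unchanged by the cap.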

Negative probability phrases elicited a stronger focus on the outcome non-occurrence compared to positive phrases, in the sense that they were judged more appropriate with reasons against the outcome occurrence than with reasons in favour of it. Besides the presence of the negation, this might be due to variations in the probabilistic meaning of the phrase. It is important to assess whether the effect of the lexicon on the outcome focus might be due to a difference in the probabilities conveyed, e.g. if negative statements are perceived as conveying a lower probability. This is addressed in the next section.

3 Experiment 2: Probability perception studies

We sought to determine whether the positive lexicon featuring the “probability” terminology (i) conveyed probabilities similar to those of the negative lexicon, (ii) matched the probability guidelines equally well and (iii) was not more sensitive to motivated reasoning (i.e. interpreting information based on our personal beliefs or attitudes). To assess whether people’s motivation to re-define the meaning of predictions is similar across lexicons, we evaluated the relationship between the probability interpretation of a range of climate change predictions and participants’ pro-ecological attitude (Dunlap et al. 2000), climate change belief (Heath and Gifford 2006) and epistemic scepticism (Capstick and Pidgeon 2014). Past work has shown that the interpretation of climate change predictions is sensitive to prior beliefs regarding climate change. For example, people with a stronger belief in climate change understand a prediction of climate change occurrence as having a higher probability than people who believe less in climate change (Budescu et al. 2012; Budescu et al. 2014). Interpreting predictions based on our own beliefs and experience is not in itself an issue and may even be desirable in some instances (e.g. taking an umbrella when rain is “unlikely” in London vs. in Madrid). However, motivated reasoning is an issue when it comes to the interpretation of evidence presented in the IPCC reports. The IPCC intends to convey a particular probability with each prediction and aims to inform every member of the public equally well. In this respect, the consistency of probability perceptions with the guidelines and the sensitivity of these interpretations to prior beliefs are two indicators of the quality of a lexicon. Prior research indicates that negative verbal probabilities may lead to more variability (Smithson et al. 2012), which may be caused by greater sensitivity to motivated reasoning and may translate into lower consistency with the recommended numerical meaning. In a pre-test, we did not find evidence that this was the case, so, in this study, we expected that the positive and negative lexicons would convey similar probability perceptions. The experiment was pre-registered (registration reference 1789, available on the Open Science Framework: https://osf.io/5efxt/?view_only=91a1d43bce044ad4b221513cabe78451) and was run on two samples: a sample of American workers sourced from Amazon Mechanical Turk (AMT) and a sample of first-year psychology students from a British university, on which we conducted the same analyses (shown in tables and figures as experiments 2a and 2b).

3.1 Method

3.1.1 Participants

We conducted the experiment in two samples. For each, our pre-registered target number was a minimum of 180 participants. The sample size was chosen to measure a small-to-medium effect size (Cohen’s f = 0.16) based on an a priori power analysis for ANOVA with two groups of participants and five repeated measures, given α = 5% and 1 − β = 80%, and assuming a correlation of .50 between the repeated measures.
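The a priori power analysis can be approximated from first principles. The sketch below assumes that it corresponds to G*Power's "repeated measures, between factors" procedure, whose noncentrality parameter is λ = f² · N · m / (1 + (m − 1)ρ) for m repeated measures correlated at ρ; this mapping is an assumption made for illustration, so the resulting N need not match the pre-registered target exactly. Only the Python standard library is used: the noncentral F distribution is evaluated as a Poisson-weighted mixture of regularized incomplete beta functions (computed with a continued fraction), and the critical F value is found by bisection. All function names are our own.

```python
import math

def _beta_cf(a, b, x, max_iter=300, eps=3e-14, tiny=1e-300):
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c, d = 1.0, 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even and odd coefficients of the m-th step.
        for aa in (m * (b - m) * x / ((qam + m2) * (a + m2)),
                   -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            delta = d * c
            h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h

def betainc(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log1p(-x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _beta_cf(a, b, x) / a
    return 1.0 - front * _beta_cf(b, a, 1.0 - x) / b

def ncf_cdf(x, dfn, dfd, nc=0.0, tol=1e-12, max_terms=10000):
    """CDF of the F distribution; nc > 0 gives the noncentral case (Poisson mixture)."""
    if x <= 0.0:
        return 0.0
    y = dfn * x / (dfn * x + dfd)
    if nc == 0.0:
        return betainc(dfn / 2.0, dfd / 2.0, y)
    half, total = nc / 2.0, 0.0
    for j in range(max_terms):
        weight = math.exp(-half + j * math.log(half) - math.lgamma(j + 1))
        total += weight * betainc(dfn / 2.0 + j, dfd / 2.0, y)
        if j > half and weight < tol:
            break
    return total

def f_critical(alpha, dfn, dfd):
    """Upper-alpha critical value of the central F distribution, by bisection."""
    lo, hi = 0.0, 1.0
    while ncf_cdf(hi, dfn, dfd) < 1.0 - alpha:
        hi *= 2.0
    for _ in range(60):
        mid = (lo + hi) / 2.0
        if ncf_cdf(mid, dfn, dfd) < 1.0 - alpha:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0

def power_between(f, n_total, n_groups, n_measures, rho, alpha=0.05):
    """Power of the between-groups test, using the assumed noncentrality above."""
    nc = f ** 2 * n_total * n_measures / (1.0 + (n_measures - 1) * rho)
    dfn, dfd = n_groups - 1, n_total - n_groups
    crit = f_critical(alpha, dfn, dfd)
    return 1.0 - ncf_cdf(crit, dfn, dfd, nc)

def required_n(f, n_groups, n_measures, rho, alpha=0.05, target=0.80):
    """Smallest total N reaching the target power (power rises with N)."""
    args = (n_groups, n_measures, rho, alpha)
    lo = hi = n_groups + 1
    while power_between(f, hi, *args) < target:
        hi *= 2
    while lo < hi:
        mid = (lo + hi) // 2
        if power_between(f, mid, *args) >= target:
            hi = mid
        else:
            lo = mid + 1
    return lo

n = required_n(f=0.16, n_groups=2, n_measures=5, rho=0.5)
print(n, round(power_between(0.16, n, 2, 5, 0.5), 3))
```

Searching over f at a fixed N, instead of over N at a fixed f, turns the same functions into a sensitivity analysis of the kind reported for experiments 1a and 1b.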

Experiment 2a, American sample

Overall, 196 American AMT workers took part (45% women, n = 89; age range 20–68 years, M = 35.19, SD = 10.04 years). Most of the participants were white/Caucasian (79%; 7% African-American, 6% Asian and 9% other) and most earned up to $50,000 (66%; 33% earned over $50,000 and 1% did not disclose their earnings). Lastly, 48% of participants leaned towards the Democratic Party, 23% supported the Republican Party and 28% were Independents. Participants had a pro-ecological attitude (M = 3.74, SD = 0.75) and believed in climate change (M = 3.93, SD = 1.05) but were slightly sceptical about climate change science (M = 2.43, SD = 0.98).

Experiment 2b, British student sample

A total of 198 first-year psychology students residing in the UK took part in the study (76% women, n = 151; age range 17–32 years, M = 18.97, SD = 1.89 years). Most of the participants were white/Caucasian (63%; 15% black, 13% Asian and 9% other) and most earned less than £10,000 (64%; 8% earned over £10,000 and 26% did not disclose their earnings). In terms of political leaning, 41% of participants leaned towards the Labour Party, 15% towards the Conservatives and 43% reported another leaning. Participants generally had a pro-ecological attitude (M = 3.77, SD = 0.45) and believed in climate change (M = 3.92, SD = 0.68), although they tended to be sceptical regarding the science supporting climate change (M = 2.71, SD = 0.61).

Design

Participants read eight phrases taken from the IPCC reports (two for each of four probability ranges, from an extremely low probability range to a medium one; Table 2). We also included two sentences conveying a high probability (“it is likely”) as filler items. The phrases featured either the negative IPCC lexicon or the alternative positive lexicon using the “probability” terminology. We chose this alternative because it has direct translations in most languages, whereas the lexicons using “likelihood” or “chance” do not (e.g. in French “likelihood” does not have a direct translation and “chance” would be translated as “luck”).

3.1.2 Materials and procedure

Participants performed two main tasks:

(a) Participants read the eight climate change predictions shown in Table 2, which featured either the negative lexicon or the positive alternative one. The task also included two filler predictions with the verbal probability “likely”. Each sentence was presented on a separate page, in randomised order, and participants assessed the probability it conveyed. The probability question was presented under the prediction and read: “Please, provide below your best understanding of the probability that the authors intended to communicate”. Each page featured an “IPCC guidelines button”, which participants could click to read the expected probabilistic meaning of the lexicon presented. This was included to mimic the IPCC reports, which always include a table outlining the probabilistic meaning of the uncertainty lexicon. Participants provided their judgement on a visual analogue scale ranging from 0% “Event is impossible” to 100% “Event is certain to happen” (in increments of 1). When participants moved the cursor, they could see the numerical value they had selected written on the side of the scale.

(b) Participants answered a series of questions measuring their environmental values, belief in climate change and scepticism about existing evidence of climate change. Pro-ecological attitude was assessed with the environmental values subscale of the New Environmental Paradigm Scale (Dunlap et al. 2000). Climate change belief was measured with four items taken from the Beliefs About Global Climate Change scale (Heath and Gifford 2006). Participants’ lack of trust in the science behind climate change reports was measured using the epistemic scepticism scale of Capstick and Pidgeon (2014). For all those scales, participants provided their judgements on a 5-point Likert scale ranging from 1: Strongly disagree to 5: Strongly agree, with the middle point labelled Neither agree nor disagree. The scales had satisfactory reliability (Cronbach’s alpha = .75, .73 and .81, respectively).
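Cronbach’s alpha, the reliability index reported above, compares the sum of the item variances with the variance of participants’ total scores: α = k/(k − 1) · (1 − Σ var(item) / var(total)) for k items. A minimal Python sketch, using hypothetical 5-point responses rather than the study data:

```python
from statistics import pvariance

def cronbach_alpha(responses):
    """Cronbach's alpha for a list of participants, each a list of k item scores.

    Population variances are used throughout; because alpha depends only on a
    ratio of variances, using sample variances consistently gives the same value.
    """
    k = len(responses[0])
    if k < 2 or any(len(row) != k for row in responses):
        raise ValueError("need at least two items and the same number per participant")
    item_variance_sum = sum(pvariance(item) for item in zip(*responses))
    total_variance = pvariance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)

# Hypothetical answers of five participants to a four-item, 5-point scale.
answers = [
    [4, 5, 4, 4],
    [3, 3, 4, 3],
    [5, 5, 5, 4],
    [2, 3, 2, 2],
    [4, 4, 3, 4],
]
print(round(cronbach_alpha(answers), 2))
```

Items that rise and fall together inflate the variance of the total relative to the summed item variances, which pushes alpha towards 1.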

The order of presentation of the probability perception block and the individual differences block was counterbalanced: participants responded either to the climate change attitude items first and then to the probability judgements, or vice versa. For each probability magnitude, we averaged the probability estimates for the two outcomes. Finally, we computed a consistency score for each average probability estimate, reflecting whether it fell within the expected guidelines (shown in the left column of Table 2).
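The averaging and consistency scoring can be sketched as follows. The guideline ranges below are the standard IPCC ranges for the four verbal probabilities in the negative lexicon (0–5%, 0–10%, 0–33% and 33–66%), assumed here to match the left column of Table 2; treating the bounds as inclusive and scoring each magnitude as 1 (within range) or 0 (outside) are further assumptions, and the estimates are hypothetical.

```python
# Assumed guideline ranges (in %) for each probability magnitude.
GUIDELINES = {
    "extremely low": (0, 5),
    "very low": (0, 10),
    "low": (0, 33),
    "medium": (33, 66),
}

def consistency_scores(estimates):
    """Map each magnitude to 1 if the mean of its two estimates is in range, else 0.

    `estimates` maps a magnitude to a participant's two probability estimates (%),
    one per climate change outcome at that magnitude.
    """
    scores = {}
    for magnitude, (first, second) in estimates.items():
        average = (first + second) / 2
        low, high = GUIDELINES[magnitude]
        scores[magnitude] = int(low <= average <= high)
    return scores

# Hypothetical estimates from one participant.
participant = {
    "extremely low": (3, 9),  # mean 6: above the 0-5% range
    "very low": (8, 10),      # mean 9: within 0-10%
    "low": (20, 30),          # mean 25: within 0-33%
    "medium": (60, 80),       # mean 70: above 33-66%
}
scores = consistency_scores(participant)
print(scores, sum(scores.values()))
```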

3.2 Results

Both lexicons led to a wide distribution of numerical interpretations, as shown in Fig. 3. The positive lexicon led to probability estimates similar to those of the negative lexicon. The overall effect of the lexicon on probability was not statistically significant in the American sample but was significant in the British sample, F(4, 191) = 1.29, p = .275, ηp² = .03 and F(5, 192) = 6.18, p < .001, ηp² = .11, respectively. Examination of the between-subject effect per probability magnitude in the British sample showed that the effect of the lexicon was driven only by the medium probability predictions, for which the positive lexicon led to a higher probability perception than the negative lexicon, F(1, 197) = 21.78, p < .001; there were no statistically significant differences for the extremely low, very low and low probabilities, F(1, 197) = 0.21, p = .885, F(1, 197) = 0.014, p = .906 and F(1, 197) = 1.06, p = .305.

Fig. 3. Probability perception of participants based on the negative IPCC lexicon and the positive alternative lexicon (experiment 2a, N = 196 and experiment 2b, N = 198). The coloured rectangles show the distribution of probability estimates (wider rectangles represent more frequent values), the grey line shows the median, the middle point shows the average and the error bars show the 95% confidence intervals.

Participants’ probability estimates were mostly within the IPCC guidelines, but more so for the American sample than for the British sample; the latter particularly tended to overestimate the extremely low and very low probabilities. The positive lexicon led to probability perceptions that were slightly more consistent with the IPCC guidelines (see Table 3), but not significantly so, F(4, 191) = 0.81, p = .521, ηp² = .02, and F(4, 193) = 1.63, p = .169, ηp² = .03.

Finally, to assess the impact of motivated reasoning on probability perception in the two samples, we conducted two Generalised Estimating Equation analyses with individual differences, lexicon and their interactions as predictors and probability perception as the outcome variable. Participants’ pro-ecological attitude, climate change belief and epistemic scepticism did not significantly predict the probability interpretations of the climate change predictions in either the American or the British sample: Wald χ²(1) = 0.86, p = .353; Wald χ²(1) = 1.25, p = .264; Wald χ²(1) = 0.27, p = .606; Wald χ²(1) = 0.40, p = .529; Wald χ²(1) = 0.06, p = .811; and Wald χ²(1) = 1.63, p = .201. Furthermore, in the American sample, both lexicons were equally insensitive to individual differences, as indicated by the non-significant interactions with pro-ecological attitude, climate change belief and epistemic scepticism, Wald χ²(1) = 1.58, p = .209; Wald χ²(1) = 0.81, p = .369; and Wald χ²(1) = 0.01, p = .934. In the British student sample, the results were the same for pro-ecological attitude and climate change belief, Wald χ²(1) = 0.90, p = .343 and Wald χ²(1) = 0.28, p = .597, but the lexicon interacted with epistemic scepticism to predict probability perception, Wald χ²(1) = 1.23, p = .040.

4 Decision-making study

We finally aimed to test the effect of the lexicon (positive vs. negative) on people’s decision-making and on their perception of the forecasts in terms of utility, trust and blame. We chose a context that was not too obviously connected with climate change to prevent personal beliefs from interfering with the task. However, landslides are natural disasters related to climate change, since their likelihood is increased by the increases in heavy precipitation expected from climate change (Crozier 2010). We expected that when the positive lexicon was used to describe the probability of a landslide, participants would be more cautious (i.e. would take preventive measures more often), would perceive the forecast as more useful and would be more likely to trust the forecaster after an incorrect decision.

4.1 Method

Participants

Following our pre-registered sample size, 55 participants from the university research participation pool took part in the study (26 males, age range 18–31 years, M = 21.32, SD = 2.73 years). In terms of ethnicity, the sample was diverse (47% white/Caucasian, 34% Asian British/other, 13% Black British/other and 6% other). Education ranged from secondary education (2%) to postgraduate (15%), with most participants having some university education (60%). For comparability with the previous experiments, the sample size was set a priori to detect a small effect of the lexicon on decisions (Cohen’s f = 0.13) in a 2 × 4 within-subject ANOVA, given α = 5% and 1 − β = 80%, and assuming a correlation of .50 between the repeated measures.

Design

We tested the effect of the lexicon in a within-subject design featuring two lexicons (negative vs. positive) × four probability magnitudes (extremely low, very low, low and medium), representing a total of eight landslide predictions that were each shown six times.
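The 2 × 4 design and its six repetitions can be sketched as a trial schedule. This is a minimal illustration, assuming the block structure described for the test phase (six blocks, each containing the eight predictions in a random order); the names and the seeded shuffle are hypothetical and do not describe the software used in the study.

```python
import itertools
import random

LEXICONS = ("negative", "positive")
MAGNITUDES = ("extremely low", "very low", "low", "medium")
N_BLOCKS = 6  # each prediction appears once per block, so six times in total


def build_schedule(seed=None):
    """Return (block, lexicon, magnitude) trials: N_BLOCKS blocks of the
    eight lexicon x magnitude predictions, shuffled independently per block."""
    rng = random.Random(seed)
    conditions = list(itertools.product(LEXICONS, MAGNITUDES))  # 2 x 4 = 8
    schedule = []
    for block in range(1, N_BLOCKS + 1):
        order = conditions[:]  # copy so every block starts from the full set
        rng.shuffle(order)
        schedule.extend((block, lexicon, magnitude) for lexicon, magnitude in order)
    return schedule


trials = build_schedule(seed=1)
print(len(trials))  # 48
```

Shuffling within blocks, rather than across all 48 trials, guarantees that every prediction has been seen the same number of times at the end of each block.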

Materials and procedure

Participants completed three tasks in the following order: (i) a short probability estimation task, (ii) the main decision-making task and (iii) the post-decision survey, which featured a utility, trust and blame assessment and a probability translation of the verbal probabilities. In the probability estimation task, participants estimated the probability conveyed by the eight landslide predictions.

In the decision-making task, participants played the role of a landslide expert deciding whether to evacuate people from at-risk areas based on the landslide forecasts provided. Participants read a landslide prediction and made a decision, after which they saw whether the landslide occurred and the consequence of their decision (e.g. There was a landslide! Well done for evacuating, everyone is safe. You earn 20 points). We designed the payoff structure to mimic the real-world consequences of landslide decision-making. Participants could earn points for correct decisions (+ 20 for a correct evacuation, 0 for a correct stay) and lose points for incorrect decisions (− 5 for an evacuation that was not followed by a landslide and − 50 for a non-evacuation followed by a landslide).
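The payoff structure amounts to a lookup from the four possible decision outcomes. A minimal sketch; the function and dictionary names are hypothetical, and only the point values come from the text.

```python
# Points per decision outcome, as described in the text.
PAYOFFS = {
    "correct evacuation": 20,      # evacuated and a landslide occurred
    "correct stay": 0,             # stayed and no landslide occurred
    "unnecessary evacuation": -5,  # evacuated but no landslide (false alarm)
    "failure to evacuate": -50,    # stayed and a landslide occurred (miss)
}


def classify(evacuate: bool, landslide: bool) -> str:
    """Map a decision and the realised event to one of the four outcomes."""
    if evacuate:
        return "correct evacuation" if landslide else "unnecessary evacuation"
    return "failure to evacuate" if landslide else "correct stay"


def points(evacuate: bool, landslide: bool) -> int:
    """Points earned (or lost) for a single decision."""
    return PAYOFFS[classify(evacuate, landslide)]


print(points(evacuate=False, landslide=True))  # -50
```

The asymmetry between the two errors (− 5 vs. − 50) is what makes evacuation the cautious, and usually the better, choice.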

Participants started with a training phase and then proceeded to a test phase. In the training phase, the predictions were either certain or conveyed a probability of about 50%, and the outcomes were fixed in advance rather than drawn probabilistically. The training phase consisted of four predictions: “There will be a landslide” (corresponding to a 100% probability of landslide), “There will be no landslide” (corresponding to a 0% probability of landslide) and “There may be a landslide” twice (corresponding to a 50% probability of landslide). The first prediction was followed by a landslide, the second was followed by no landslide and only one of the two “may be” predictions was followed by a landslide.

In the test phase, participants made six decisions for each of the eight predictions (i.e. 48 decisions in total), organised in six blocks of eight decisions in which the eight experimental predictions were presented in random order. After each prediction, the occurrence of a landslide was determined probabilistically, with probabilities matching the upper bound of each range proposed by the IPCC: 1%, 10%, 33% and 66%. No landslide occurred in the 1% condition, so this condition had no correct evacuations and no incorrect stays. Based on the probability of a landslide and the points attached to each decision outcome, we can compute the expected utility (EU) of each decision according to Expected Utility Theory (e.g. EU(evacuate) = P(landslide) × 20 + P(¬landslide) × (− 5), i.e. the points for a correct evacuation and for an unnecessary evacuation). These utilities show that, from a normative standpoint and considering only the points system, evacuating was better than not evacuating for very low to medium probabilities; not evacuating was better only for extremely low probabilities. The table of utilities is shown in the Appendix.
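The expected-utility comparison in the Appendix can be reproduced in a few lines. The sketch assumes P(¬landslide) = 1 − P(landslide) and uses only the points from the payoff structure; the names are hypothetical. It also shows the break-even probability: EU(evacuate) − EU(do not evacuate) = 20p − 5(1 − p) + 50p = 75p − 5, which is zero at p = 1/15 ≈ 6.7%. This lies between the extremely low (1%) and very low (10%) conditions, which is why evacuating was the normative choice in every condition except the first.

```python
# Landslide probabilities used in the test phase (upper bound of each IPCC range).
PROBABILITIES = {"extremely low": 0.01, "very low": 0.10, "low": 0.33, "medium": 0.66}

POINTS = {
    "correct evacuation": 20,
    "unnecessary evacuation": -5,
    "failure to evacuate": -50,
    "correct stay": 0,
}


def expected_utility(p: float, evacuate: bool) -> float:
    """Expected points of a decision when a landslide occurs with probability p."""
    if evacuate:
        return p * POINTS["correct evacuation"] + (1 - p) * POINTS["unnecessary evacuation"]
    return p * POINTS["failure to evacuate"] + (1 - p) * POINTS["correct stay"]


# EU(evacuate) - EU(stay) = 75p - 5 with these payoffs, so evacuating pays off above p = 1/15.
BREAK_EVEN = 5 / 75

for label, p in PROBABILITIES.items():
    eu_evacuate = expected_utility(p, True)
    eu_stay = expected_utility(p, False)
    best = "evacuate" if eu_evacuate > eu_stay else "do not evacuate"
    print(f"{label:>13} ({p:.0%}): EU(evacuate) = {eu_evacuate:6.2f}, "
          f"EU(do not evacuate) = {eu_stay:6.2f} -> {best}")
```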

In the post-decision survey, participants first assessed the utility of the predictions as they experienced them in the decision-making task. Participants judged how useful, informative and accurate each of the eight predictions was on a 5-point Likert scale ranging from 1: Not at all useful/informative/accurate to 5: Extremely useful/informative/accurate. The internal consistency of this scale was acceptable to good across the different predictions (Cronbach’s alphas ranged from .64 to .85). Then, for each prediction, participants imagined a situation in which a local community had decided not to evacuate an area based on a given landslide forecast, but a landslide had occurred, causing many casualties. Participants judged how much the forecaster was to blame for the wrong decision of the local community by rating their agreement with five statements describing how one could blame the landslide expert (e.g. “The landslide expert is responsible for the casualties”; 1: Strongly disagree, 5: Strongly agree). The internal consistency of the blame scale was good for all the predictions (Cronbach’s alphas ranged from .82 to .93). Afterwards, participants judged the extent to which they would trust the forecaster in the future on a 5-point scale ranging from 1: Not at all to 5: Very much (“To what extent would you trust this expert next time?”). Finally, participants provided their probability estimates for the landslide forecasts.
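The internal-consistency figures above are Cronbach’s alphas, α = k/(k − 1) × (1 − Σσ²ᵢ / σ²_total), where k is the number of items, σ²ᵢ the variance of item i and σ²_total the variance of participants’ summed scores. A minimal sketch, assuming a complete participants × items matrix with no missing responses; the function name and the toy data are hypothetical, and this is not the code used to compute the study’s alphas.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of rows, one row of k item scores per participant."""
    k = len(scores[0])
    if k < 2:
        raise ValueError("need at least two items")
    items = list(zip(*scores))  # transpose: one tuple of responses per item
    item_var_sum = sum(variance(item) for item in items)
    total_var = variance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical responses of four participants to five blame items (1-5 scale).
toy = [[4, 5, 4, 4, 5], [2, 2, 3, 2, 2], [3, 3, 3, 4, 3], [1, 2, 1, 1, 2]]
print(round(cronbach_alpha(toy), 2))
```

Sample variances are used throughout; because the same convention applies to items and totals, the choice does not change alpha.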

The data from this experiment are available on the Open Science Framework (https://osf.io/5efxt/?view_only=91a1d43bce044ad4b221513cabe78451).

4.2 Results

We first checked that the positive and negative phrases were perceived as conveying similar probabilities in the initial probability estimation task, which was the case, Wald χ2(3) = 2.57, p = .109. Then, using two Generalised Estimating Equation models, we tested the effect of the lexicon on the decisions taken (dichotomous dependent variable: evacuation or no evacuation) and on the decision outcome (multinomial dependent variable: correct evacuation, correct stay, unnecessary evacuation or failure to evacuate).

As expected, participants evacuated more often for higher probabilities of landslide, Wald χ2(1) = 138.35, p < .001. However, for each probability level, participants evacuated more often when the risk was predicted using the positive lexicon (Fig. 4, top panel), Wald χ2(1) = 87.00, p < .001. The number of evacuation decisions increased over time for both lexicons, except for the medium probability for which the number of evacuations increased with the negative lexicon but remained stable (presumably because of a ceiling effect) with the positive lexicon; this difference is supported by a significant lexicon × probability magnitude × block interaction, Wald χ2(13) = 35.22, p = .001.

Overall, participants made more incorrect decisions with the positive lexicon than with the negative lexicon (37% vs. 28%). However, the nature of the errors is critical for appraising how cautious participants were, as errors of omission (a landslide occurs following a decision not to evacuate) are generally more consequential than errors of commission (no landslide occurs after an evacuation decision). As shown in the middle row of Fig. 4, participants made roughly half as many errors of omission with the alternative (positive) lexicon as with the original (negative IPCC) lexicon (6% vs. 11%), but they also made more unnecessary evacuations (31% vs. 17%). Overall, the main effect of the lexicon on decision outcome was not significant, Wald χ2(1) < .01, p = 1.000, but the lexicon interacted with decision round and probability magnitude. The effect of the lexicon was smaller in the middle decision rounds than at the beginning and at the end, as indicated by a two-way interaction with decision round, Wald χ2(5) = 266.69, p < .001, and it was smaller for extremely low probabilities because no landslide occurred in that condition, as indicated by a three-way interaction with decision round and probability magnitude, Wald χ2(13) = 35.22, p = .001.
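The error decomposition is additive: the share of incorrect decisions equals the share of omission errors plus the share of commission errors (for the positive lexicon, 6% + 31% = 37%; for the negative lexicon, 11% + 17% = 28%). A minimal sketch of that tally, assuming one (evacuated, landslide) record per decision; the record format and function name are hypothetical.

```python
from collections import Counter


def error_rates(records):
    """Share of all decisions that were omission errors (stay + landslide),
    commission errors (evacuate + no landslide), and their total."""
    counts = Counter()
    n = 0
    for evacuated, landslide in records:
        n += 1
        if not evacuated and landslide:
            counts["omission"] += 1
        elif evacuated and not landslide:
            counts["commission"] += 1
    if n == 0:
        raise ValueError("no decisions to score")
    rates = {kind: counts[kind] / n for kind in ("omission", "commission")}
    rates["total"] = rates["omission"] + rates["commission"]
    return rates


# Hypothetical records for one participant and one lexicon.
print(error_rates([(False, True), (True, False), (True, True), (False, False)]))
```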

As shown in the lower row of Fig. 4, although participants made fewer correct decisions with the alternative lexicon than with the original IPCC lexicon, they performed better (i.e. earned more points), Wald χ2(1) = 17.73, p < .001. This was because of the heavy penalty for not evacuating when a landslide happened. Participants also earned more points for higher probability predictions, Wald χ2(1) = 300.76, p < .001. Specifically, participants earned a similar number of points with the two lexicons in the extremely low and very low probability conditions but earned more points with the positive lexicon in the low and medium probability conditions, in which landslides occurred more frequently. This effect is supported by a statistical interaction between the lexicon and probability range, Wald χ2(30) = 33.85, p < .001. For low and medium probability predictions, participants were more likely to make the cautious decision to evacuate with the positive lexicon, thereby avoiding the heavy penalty for a failure to evacuate followed by a landslide. Further, as shown in the rightmost panel of the lower row, performance with the two lexicons varied over rounds for the medium probability predictions: participants performed better over time with the original lexicon, whereas performance decreased slightly with the alternative lexicon. This trend mirrors the one observed for the number of evacuations (lexicon × probability range × block interaction, Wald χ2(30) = 72.47, p < .001).
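Why fewer correct decisions can still earn more points follows from the asymmetric payoffs. The sketch below compares two fixed policies (always evacuate vs. never evacuate) at the low and medium probabilities; it illustrates expected values under the task’s payoffs and is not a model of participants’ behaviour, and the function name is hypothetical. At p = 0.33, for example, always staying is correct 67% of the time but has an expected value of − 16.5 points per decision, whereas always evacuating is correct only 33% of the time but has an expected value of 3.25 points.

```python
def policy_summary(p: float, evacuate: bool):
    """Expected accuracy and expected points per decision for a fixed policy
    when a landslide occurs with probability p (payoffs: +20, 0, -5, -50)."""
    if evacuate:
        accuracy = p  # correct only when a landslide occurs
        expected_points = p * 20 + (1 - p) * -5
    else:
        accuracy = 1 - p  # correct only when no landslide occurs
        expected_points = p * -50  # a correct stay earns 0 points
    return accuracy, expected_points


for p in (0.33, 0.66):
    for evacuate in (True, False):
        acc, pts = policy_summary(p, evacuate)
        policy = "always evacuate" if evacuate else "never evacuate"
        print(f"p = {p:.2f}, {policy:>15}: accuracy = {acc:.2f}, points = {pts:6.2f}")
```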

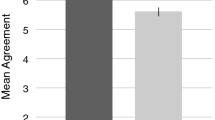

We also assessed how useful participants found the predictions in the landslide evacuation task. Participants judged the negative lexicon to be more useful for making their decisions (top panel of Fig. 5), Wald χ2(1) = 8.26, p = .004; this was the case for extremely low, very low and low probabilities, but the opposite held for medium probabilities, as shown by the lexicon × probability interaction, Wald χ2(3) = 25.97, p < .001. Also, participants generally judged the extremely low probability predictions to be more useful than the medium ones, Wald χ2(3) = 56.58, p < .001. We conducted an exploratory mediation analysis using the Mediation and Moderation for Repeated Measures (MEMORE) package (Montoya and Hayes 2017), with the number of correct decisions per lexicon as the mediator, the lexicon as the predictor and perceived utility as the outcome variable (see lower panel of Fig. 5). The results confirmed that the alternative lexicon led to fewer correct decisions and lower perceived utility than the original lexicon. The number of correct decisions did not significantly mediate this effect, although after controlling for it, the direct effect of the alternative lexicon on utility (c′ path) was no longer statistically significant.
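The logic of the within-participant mediation model can be illustrated with point estimates. A minimal sketch, assuming the two-condition path-analytic model of Montoya and Hayes (2017) as we understand it: a is the mean difference in the mediator (here, the number of correct decisions) between lexicons; b and the direct effect c′ come from regressing the outcome difference (perceived utility) on the mediator difference and the centred mediator mean; the indirect effect is a × b, and the total effect c equals c′ + a × b. This is not the MEMORE package: it omits inference (such as bootstrap confidence intervals for the indirect effect), and the function name and data layout (one value per participant per lexicon) are hypothetical.

```python
from statistics import fmean


def within_mediation(m1, m2, y1, y2):
    """Point estimates for a two-condition within-participant mediation model
    (assumed form, after Montoya and Hayes 2017):
        M2 - M1 = a + e
        Y2 - Y1 = c' + b * (M2 - M1) + d * (centred mean of M1 and M2) + e
    Lists hold one value per participant; condition 1 = original lexicon,
    condition 2 = alternative lexicon."""
    m_diff = [m2_i - m1_i for m1_i, m2_i in zip(m1, m2)]
    y_diff = [y2_i - y1_i for y1_i, y2_i in zip(y1, y2)]
    m_avg = [(m1_i + m2_i) / 2 for m1_i, m2_i in zip(m1, m2)]
    a = fmean(m_diff)  # effect of lexicon on the mediator
    c = fmean(y_diff)  # total effect of lexicon on the outcome
    # Centre both predictors so the two slopes follow from a 2 x 2 system.
    x1 = [v - a for v in m_diff]
    avg_mean = fmean(m_avg)
    x2 = [v - avg_mean for v in m_avg]
    yc = [v - c for v in y_diff]
    s11 = sum(v * v for v in x1)
    s22 = sum(v * v for v in x2)
    s12 = sum(u * v for u, v in zip(x1, x2))
    s1y = sum(u * v for u, v in zip(x1, yc))
    s2y = sum(u * v for u, v in zip(x2, yc))
    det = s11 * s22 - s12 ** 2
    if det == 0:
        raise ValueError("mediator difference and mediator mean are collinear")
    b = (s1y * s22 - s2y * s12) / det
    d = (s2y * s11 - s1y * s12) / det
    # The centred mediator mean has mean zero, so only b * a enters the intercept.
    c_prime = c - b * a
    return {"a": a, "b": b, "d": d, "c_prime": c_prime,
            "indirect": a * b, "total": c}
```

With real data, a significance decision on the indirect effect would need a resampling step on top of these point estimates.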

Top panel: Average utility judgements of the landslide predictions featuring either the negative IPCC lexicon or the alternative positive lexicon to convey landslide probabilities ranging from extremely low to medium. Error bars show 95% CI (N = 55). Lower panel: Mediation analysis assessing whether the effect of the lexicon on utility is mediated by the overall number of correct decisions. Note: Participants provided judgements in all eight experimental conditions (2 lexicons × 4 probability magnitudes); hence, overlapping error bars do not indicate a lack of statistical significance.

In the scenario in which a community wrongly decided not to evacuate, participants blamed the forecaster more and were less willing to trust subsequent predictions when the forecast used the negative lexicon rather than the positive lexicon (Fig. 6; blame: Wald χ2(1) = 13.41, p < .001; trust: Wald χ2(1) = 7.58, p = .006). In addition, for both lexicons, participants judged the forecasters to be less blameworthy and more trustworthy when they communicated higher probabilities of landslides, Wald χ2(3) = 10.69, p = .014, and Wald χ2(3) = 12.10, p = .007.

Average judgements of trust and blame in the case of an incorrect stay (no evacuation + landslide), based on extremely low to medium probability landslide predictions featuring either the negative IPCC lexicon or the alternative positive lexicon. Error bars show 95% CI (N = 55). Note: Participants provided judgements in all eight experimental conditions (2 lexicons × 4 probability magnitudes); hence, overlapping 95% confidence error bars do not indicate a lack of statistical significance.

5 Discussion

Currently, the IPCC guidelines use a negative lexicon to express extremely low to medium probabilities (0–66%). In this study, we developed an alternative positive lexicon and compared it with the negative IPCC lexicon to test whether the positive lexicon conveyed a similar probability yet drew more attention to the possibility that the outcome would occur, leading to more cautious decisions whilst maintaining trust over time. Our work complements past work that has focused on helping recipients to better interpret the numerical meaning of the IPCC uncertainty lexicon (Budescu et al. 2012; Budescu et al. 2014; Smithson et al. 2012) by investigating the psychological consequences of negations for numerical interpretation, focus of attention and decision-making. Our work builds on psychological research showing that negative verbal probabilities direct people’s attention away from the outcome occurrence (Teigen and Brun 1995) and influence decisions (Teigen and Brun 1999). We extend this line of work by replicating these findings with the IPCC lexicon and providing behavioural evidence of their importance.

Negations attract attention towards the outcome non-occurrence

Our results indicate that, for probabilities ranging from 0 to 66%, negations increase participants’ tendency to focus on the outcome non-occurrence: participants judged that the negative predictions fitted better with evidence against the outcome occurrence than with evidence in favour of it. One may think that it is logical to focus on the non-occurrence of low probability events, but even low probability events may occur and require attention; moreover, this focus reflects the perceived attitude of the speaker, i.e. that there is no need to take action on this issue (Hilton 2008; Teigen and Brun 1999). For medium probability events, this is especially problematic, as focusing on the possibility that the event will not occur is clearly counterproductive when its probability of occurring lies between 33 and 66%.

The alternative positive lexicon conveys a similar probabilistic meaning

We found that the difference in attention paid to the outcome non-occurrence between the negative and positive lexicons was not due to variation in the perceived probability that the outcome would happen. The positive lexicon conveyed, on average, the same probabilistic meaning as the original IPCC lexicon. It also performed similarly to the IPCC lexicon in conveying the probability ranges prescribed by the IPCC (see left column of Table 1). Overall, consistency with the probability guidelines was higher than in past studies, which found that only a minority of participants interpreted climate change predictions consistently with the guidelines, even when the guidelines were presented (Budescu et al. 2012; Budescu et al. 2014). Also, and encouragingly for communication, we did not find evidence that individuals’ attitudes towards the environment and beliefs about climate change influenced their interpretation of the climate change predictions, as had been shown in past research (Budescu et al. 2012; Budescu et al. 2014). This difference might partly be because that past work mostly focused on lexicons conveying higher probabilities (from low to high probabilities rather than, as here, from extremely low to medium) (Budescu et al. 2012).

Positive disaster forecasts lead to more cautious decisions

At the heart of our work was the examination of the effect of the use of a negative uncertainty lexicon on decision-making. In our role-playing experiment, we found that participants were more likely to decide to evacuate an area when they were warned using the positive landslide predictions. Our work extends past research showing an effect of the directionality of verbal probabilities (Honda and Yamagishi 2006; Teigen and Brun 1999), by applying it to a natural hazard context and focusing on a series of decisions in which participants had the opportunity to learn in a probabilistic dynamic environment (i.e. the landslide occurrence had a set probability of occurring). This set-up was particularly useful because it provided the opportunity to assess the number and type of errors made by participants.

Because participants given the negative lexicon were less likely to take action to mitigate the risk of landslide, this lexicon led to more false negative errors (deciding to stay followed by a landslide), whereas the positive lexicon led to more false alarm errors (deciding to evacuate when no landslide occurred). As the hazards in this experiment were, on average, only about 28% likely to occur (1%, 10%, 33% or 66% depending on the prediction), participants committed more false alarm errors, and more incorrect decisions overall, with the positive lexicon.

The negative lexicon was perceived as more useful for making a decision than the positive lexicon for the extremely low to low probability predictions, and this was not significantly mediated by the greater number of correct decisions. However, to be valuable, predictions must be trusted in the long term, even when they occasionally lead to incorrect decisions. We found that the positive lexicon triggered less blame and more trust in the prediction when a false negative error was committed (i.e. someone did not evacuate and a landslide occurred).

The nature of the uncertain event should always be taken into account when we reflect on the effect of the lexicon on outcome focus and decision-making. From a probabilistic point of view, the positive and negative lexicons are expected to convey a similar probability, whether they qualify positive or negative outcomes. However, the choice of lexicon would have opposite effects for opposite outcomes. Our results suggest that describing climate change occurrence with a negative uncertainty lexicon would lead to less cautious decisions. However, we can also draw on this evidence to predict that the same negative lexicon used to describe climate change non-occurrence (e.g. it is unlikely that the flood defence wall will hold) would actually encourage more cautious decisions than a positive lexicon (e.g. there is a chance that the flood defence wall will hold). Further studies should investigate the effect of uncertainty lexicons for events that are perceived to be positive as well as negative.

Another limitation of our work is that we did not systematically sample a range of different events from the IPCC reports—we mostly focused on the predicted consequences of climate change (IPCC 2013), whereas the IPCC reports also quantify uncertainty regarding climate change adaptation or mitigation, as well as the drivers of climate change. Further research should broaden the range of predictions under focus (as with the adaptation example in the previous paragraph) and evaluate the benefits of the positive lexicon for different outcomes.

5.1 Implications

Our results show that the lexicon of uncertainty is never completely neutral. Both the presence and the absence of a negation convey a particular message to recipients: “no need to take action” vs. “you should take action”. Hence, it is difficult to convey uncertainty in a neutral way, except possibly by providing both messages, which is cumbersome but worth considering (e.g. “it is unlikely but there is a small probability that the sea level will rise 2 metres”). We argue for a simpler method: people and organisations who believe that actions to mitigate climate change and its consequences are needed (as most governments now do; Hallegatte et al. 2016; United Nations 2015) should use a positive uncertainty lexicon to describe climate change occurrence. A positive uncertainty lexicon should also prove beneficial for discussing the impact of mitigation strategies and encouraging support for them (e.g. “there is a chance that it will be effective” rather than “it is unlikely that it will be effective”). In other contexts, in which caution would be counterproductive (i.e. when false positive errors are more costly than false negative errors, e.g. in the criminal justice system), it might be more beneficial to recommend a negative uncertainty lexicon. Verbal probabilities should be used as tools to minimise the costs of decision-making errors and to maximise trust. Our data suggest that, in a climate change context, a positive uncertainty lexicon should be the preferred choice.

Notes

We also tested the effect using a Generalised Estimating Equation for ordinal variables and found the same effects.

References

Brun W, Teigen KH (1988) Verbal probabilities: ambiguous, context dependent, or both? Organ Behav Hum Decis Process 41:390–404. https://doi.org/10.1016/0749-5978(88)90036-2

Budescu DV, Por H-H, Broomell SB (2012) Effective communication of uncertainty in the IPCC reports. Clim Chang 113:181–200. https://doi.org/10.1007/s10584-011-0330-3

Budescu DV, Por H-H, Broomell SB, Smithson M (2014) The interpretation of IPCC probabilistic statements around the world. Nat Clim Chang 4:508–512. https://doi.org/10.1038/nclimate2194

Budescu DV, Wallsten TS (1995) Processing linguistic probabilities: general principles and empirical evidence. In: Busemeyer JR, Hastie R, Medin DL (eds) Psychology of learning and motivation. Academic Press, Amsterdam, pp 275–318

Capstick SB, Pidgeon NF (2014) What is climate change scepticism? Examination of the concept using a mixed methods study of the UK public. Glob Environ Chang 24:389–401. https://doi.org/10.1016/j.gloenvcha.2013.08.012

Crozier MJ (2010) Deciphering the effect of climate change on landslide activity: a review. Geomorphology 124:260–267. https://doi.org/10.1016/j.geomorph.2010.04.009

Dunlap RE, Van Liere KD, Mertig AG, Jones RE (2000) Measuring endorsement of the new ecological paradigm: a revised NEP scale. J Soc Issues 56:425–442

Hallegatte S, Rogelj J, Allen M, Clarke L, Edenhofer O, Field CB et al (2016) Mapping the climate change challenge. Nat Clim Chang 6:663–668. https://doi.org/10.1038/nclimate3057

Harris AJL, Corner A (2011) Communicating environmental risks: clarifying the severity effect in interpretations of verbal probability expressions. J Exp Psychol Learn Mem Cogn 37:1571–1578. https://doi.org/10.1037/a0024195

Harris AJL, Corner A, Xu J, Du X (2013) Lost in translation? Interpretations of the probability phrases used by the intergovernmental panel on climate change in China and the UK. Clim Chang 121:415–425. https://doi.org/10.1007/s10584-013-0975-1

Hasson U, Glucksberg S (2006) Does understanding negation entail affirmation? J Pragmat 38:1015–1032. https://doi.org/10.1016/j.pragma.2005.12.005

Heath Y, Gifford R (2006) Free-market ideology and environmental degradation. Environ Behav 38:48–71. https://doi.org/10.1177/0013916505277998

Hilton DJ (2008) Emotional tone and argumentation in risk communication. Judgm Decis Mak 3:100–110

Honda H, Yamagishi K (2006) Directional verbal probabilities: inconsistencies between preferential judgments and numerical meanings. Exp Psychol 53:161–170. https://doi.org/10.1027/1618-3169.53.3.161

Horn L (1989) A natural history of negation. University of Chicago Press, Chicago

Hornsey MJ, Harris EA, Bain PG, Fielding KS (2016) Meta-analyses of the determinants and outcomes of belief in climate change. Nat Clim Chang 6:622–626. https://doi.org/10.1038/nclimate2943

Intergovernmental Panel on Climate Change (2013) Fifth Assessment Report Climate Change 2013: The Physical Science Basis. Summary for Policymakers

Juanchich M, Sirota M (2017) How much will the sea level rise? Outcome selection and subjective probability in climate change predictions. J Exp Psychol Appl 23:386–402. https://pubmed.ncbi.nlm.nih.gov/28816471/

Juanchich M, Sirota M, Karelitz T, Villejoubert G (2013) Can membership-functions capture the directionality of verbal probabilities? Think Reason 19:231–247. https://doi.org/10.1080/13546783.2013.772538

Juanchich M, Teigen KH, Villejoubert G (2010a) Is guilt ‘likely’ or ‘not certain’? Contrast with previous probabilities determines choice of verbal terms. Acta Psychol 135:267–277. https://doi.org/10.1016/j.actpsy.2010.04.016

Juanchich M, Teigen KH, Villejoubert G (2010b) Is guilt “likely” or “not certain”? Contrast with previous probabilities determines choice of verbal terms. Acta Psychol 135:267–277

Leiserowitz A (2006) Climate change risk perception and policy preferences: the role of affect, imagery, and values. Clim Chang 77:45–72

Levin IP, Gaeth GJ (1988) How consumers are affected by the framing of attribute information before and after consuming the product. J Consum Res 15:374–378

Maibach EW, Kreslake JM, Roser-Renouf C, Rosenthal S, Feinberg G, Leiserowitz AA (2015) Do Americans understand that global warming is harmful to human health? Evidence from a national survey. Ann Glob Health 81:396–409. https://doi.org/10.1016/j.aogh.2015.08.010

Mastrandrea MD, Mach KJ, Plattner G-K, Edenhofer O, Stocker TF, Field CB et al (2011) The IPCC AR5 guidance note on consistent treatment of uncertainties: a common approach across the working groups. Clim Chang 108:675. https://doi.org/10.1007/s10584-011-0178-6

Montoya AK, Hayes AF (2017) Two-condition within-participant statistical mediation analysis: a path-analytic framework. Psychol Methods 22:6–27. https://doi.org/10.1037/met0000086

Patt A, Dessai S (2005) Communicating uncertainty: lessons learned and suggestions for climate change assessment. Comptes Rendus Geoscience 337:425–441. https://doi.org/10.1016/j.crte.2004.10.004

Patt AG, Schrag DP (2003) Using specific language to describe risk and probability. Clim Chang 61:17–30. https://doi.org/10.1023/A:1026314523443

Smith SW, Jucker AH (2014) Maybe, but probably not: negotiating likelihood and perspective. Language and Dialogue 4:284–298

Smithson M, Budescu DV, Broomell SB, Por H-H (2012) Never say “not:” impact of negative wording in probability phrases on imprecise probability judgments. Int J Approx Reason 53:1262–1270. https://doi.org/10.1016/j.ijar.2012.06.019

Sterman JD (2011) Communicating climate change risks in a skeptical world. Clim Chang 108:811–826. https://doi.org/10.1007/s10584-011-0189-3

Sutton RT (2019) Climate science needs to take risk assessment much more seriously. Bull Amer Met Soc 100:1637–1642. https://doi.org/10.1175/BAMS-D-18-0280.1

Teigen KH (1988) The language of uncertainty. Acta Psychol 68:27–38. https://doi.org/10.1016/0001-6918(88)90043-1

Teigen KH, Brun W (1995) Yes, but it is uncertain - direction and communicative intention of verbal probabilistic terms. Acta Psychol 88:233–258. https://doi.org/10.1016/0001-6918(93)E0071-9

Teigen KH, Brun W (1999) The directionality of verbal probability expressions: effects on decisions, predictions, and probabilistic reasoning. Organ Behav Hum Decis Process 80:155–190. https://doi.org/10.1006/obhd.1999.2857

Teigen KH, Brun W (2003) Verbal probabilities: a question of frame? J Behav Decis Mak 16:53–72. https://doi.org/10.1002/bdm.432

Teigen KH, Juanchich M, Riege A (2013) Improbable outcomes: infrequent or extraordinary? Cognition 127:119–139. https://doi.org/10.1016/j.cognition.2012.12.005

United Nations (2015) The Paris agreement. Retrieved from https://unfccc.int/sites/default/files/english_paris_agreement.pdf

van der Linden S, Maibach E, Leiserowitz A (2015) Improving public engagement with climate change: five “best practice” insights from psychological science. Perspect Psychol Sci 10:758–763. https://doi.org/10.1177/1745691615598516


Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Open Science Statement: All the materials and data for all experiments and the pre-registration protocols for Exp. 2 and 3 are available at: https://osf.io/5efxt/?view_only=91a1d43bce044ad4b221513cabe78451.

Appendix

| P(landslide) | Expected utility: evacuate | Expected utility: do not evacuate |
|---|---|---|
| 1% | 0.01 × 20 + 0.99 × (− 5) = 0.2 − 4.95 = − 4.75 | 0.01 × (− 50) + 0.99 × 0 = − 0.5 |
| 10% | 0.10 × 20 + 0.90 × (− 5) = 2 − 4.5 = − 2.5 | 0.10 × (− 50) + 0.90 × 0 = − 5 |
| 33% | 0.33 × 20 + 0.67 × (− 5) = 6.6 − 3.35 = 3.25 | 0.33 × (− 50) + 0.67 × 0 = − 16.5 |
| 66% | 0.66 × 20 + 0.34 × (− 5) = 13.2 − 1.7 = 11.5 | 0.66 × (− 50) + 0.34 × 0 = − 33 |

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Juanchich, M., Shepherd, T.G. & Sirota, M. Negations in uncertainty lexicon affect attention, decision-making and trust. Climatic Change 162, 1677–1698 (2020). https://doi.org/10.1007/s10584-020-02737-y

DOI: https://doi.org/10.1007/s10584-020-02737-y