Abstract

This paper proposes a new and effective pseudo-spectral approximation for solving the Sylvester and Lyapunov matrix differential equations. The properties of the operational matrix of derivative for the Chebyshev basis are used to convert the main equation into coupled matrix equations. An iterative algorithm is then developed for solving the resulting equations. An error analysis of the proposed method is also presented, which reveals a spectral rate of convergence. Several numerical examples are given to illustrate the effectiveness of the proposed framework.

1 Introduction

In the study of dynamical systems, filtering, model reduction, image restoration, and related areas, many phenomena can be modeled efficiently by matrix differential equations [1–5]. We consider the following Sylvester matrix differential equation:

\[ P'(t)=A(t)P(t)+P(t)B(t)+Q(t),\quad t_{0}\leq t\leq t_{f},\qquad P(t_{0})=P_{0}, \tag{1} \]

where \(P\in\mathbb{R}^{p\times q}\) is an unknown matrix, the matrices \(P_{0}\in\mathbb{R}^{p\times q}\), \(A(t) :[t_{0}, t_{f}]\rightarrow\mathbb{R}^{p\times p}\), \(B(t) :[t_{0}, t_{f}]\rightarrow\mathbb{R}^{q\times q}\), and \(Q(t) :[t_{0}, t_{f}]\rightarrow\mathbb{R}^{p\times q}\) are given. We assume \(A(t),B(t),Q(t)\in C^{s}[t_{0}, t_{f}]\), \(s\geq1\). In the particular case, where \(B(t)\) is the transpose of \(A(t)\), system (1) is called the Lyapunov differential equation. Such equations occur frequently in various fields of science and are widely used in control problems and theory of stability in time varying systems [1, 4]. The analytical and numerical approaches have been studied by several authors to solve the Sylvester equations [6–17].

In recent years, spectral collocation methods have attracted the attention of many researchers [18–20]. Ease of implementation and an exponential rate of convergence are the two main advantages of these methods [21, 22]. The main contribution of the current paper is to apply the Chebyshev collocation method to solve (1). With the aid of collocation points, we obtain coupled linear matrix equations whose solution gives the unknown coefficients. Finding solutions to different kinds of linear and coupled matrix equations is a subject of interest that has been studied extensively in the literature. Several approaches have been established for solving such equations, for example, the conjugate gradient method, gradient-based iterative algorithms, Paige’s algorithm, and Krylov subspace methods; for more details, see [13, 23–27] and the references therein. We propose an iterative algorithm based on Paige’s algorithm [28] to solve the obtained coupled matrix equations.

In Sect. 2, we first review some definitions and notations. We then recall the properties of the Chebyshev polynomials needed for the subsequent developments. In Sect. 3, we employ the Chebyshev basis to reduce problem (1) to the solution of coupled matrix equations. Then, a new iterative algorithm is presented to solve the obtained coupled matrix equations. Moreover, we give an error estimation of the proposed method. Section 4 is dedicated to numerical simulations. Finally, a conclusion is provided.

2 Preliminaries

We review Paige’s algorithm and some basic definitions and properties of matrix algebra and the Chebyshev polynomials.

The main idea behind Paige’s algorithm [27–29] is to use a bidiagonalization algorithm as the basis for solving the linear system

\[ Ax=b. \]

The solution generated by Algorithm 1 is the minimum Euclidean norm solution. The computational importance of such algorithms lies in their application to very large problems with sparse matrices. In this case, the computation per step and the storage are about as small as could be hoped. Moreover, in theory the number of steps is no greater than the minimum dimension of A.

Definition 1

([30])

Consider two matrix groups, \(\mathcal{X}=(\mathcal{X}_{1},\mathcal{X}_{2},\ldots,\mathcal{X}_{k})\) and \(\widetilde{\mathcal{X}}=(\widetilde{\mathcal{X}}_{1},\widetilde {\mathcal{X}}_{2}, \ldots,\widetilde{\mathcal{X}}_{k})\), where \(\mathcal{X}_{j},\widetilde{\mathcal{X}}_{j} \in\mathbb{R}^{p \times q}\) for \(j=1,2,\ldots,k\). The inner product \(\langle\cdot,\cdot\rangle \) is defined as

\[ \langle\mathcal{X},\widetilde{\mathcal{X}}\rangle:=\sum_{j=1}^{k}\operatorname{tr}\bigl(\mathcal{X}_{j}^{T}\widetilde{\mathcal{X}}_{j}\bigr). \]

Remark 1

The norm of \(\mathcal{X}\) is \(\Vert \mathcal{X} \Vert ^{2} := \sum_{j=1}^{k} \operatorname{tr} ({\mathcal{X}}_{j}^{T} {\mathcal{X}_{j}})\).
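The following Python sketch illustrates this norm together with the associated inner product. It assumes the trace form \(\langle\mathcal{X},\widetilde{\mathcal{X}}\rangle=\sum_{j}\operatorname{tr}(\mathcal{X}_{j}^{T}\widetilde{\mathcal{X}}_{j})\), which is consistent with Remark 1; the function names and the sample matrices are illustrative only.

```python
def group_inner(X, Y):
    """<X, Y> = sum_j tr(X_j^T Y_j) for two lists of equally sized matrices."""
    total = 0.0
    for Xj, Yj in zip(X, Y):
        # tr(Xj^T Yj) equals the sum of the entrywise products of Xj and Yj
        for row_x, row_y in zip(Xj, Yj):
            total += sum(a * b for a, b in zip(row_x, row_y))
    return total


def group_norm(X):
    """Norm induced by group_inner, as in Remark 1."""
    return group_inner(X, X) ** 0.5


# Two hypothetical matrix groups with k = 2 and p = q = 2
X = [[[1, 2], [3, 4]], [[0, 1], [1, 0]]]
Y = [[[1, 0], [0, 1]], [[2, 2], [2, 2]]]
print(group_inner(X, Y), group_norm(X) ** 2)
```

Because the trace of \(\mathcal{X}_{j}^{T}\widetilde{\mathcal{X}}_{j}\) is an entrywise sum, no matrix product needs to be formed explicitly.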

Definition 2

([30])

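Let \(\omega(x)\) be a weight function on \([t_{0},t_{f}]\). The inner product and norm in \(L_{\omega}^{2}[t_{0},t_{f}]\) are defined as follows:

\[ \langle f,g\rangle_{L_{\omega}^{2}}=\int_{t_{0}}^{t_{f}}f(x)g(x)\omega(x)\,dx,\qquad \Vert f \Vert _{L_{\omega}^{2}}=\langle f,f\rangle_{L_{\omega}^{2}}^{1/2}. \]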

Definition 3

([30])

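A function \(f:[t_{0},t_{f}]\rightarrow\mathbb{R}\) belongs to the Sobolev space \(H_{\omega}^{k,2}\) if its jth weak derivative lies in \(L_{\omega}^{2}[t_{0},t_{f}]\) for \(0\leq j \leq k\), with the norm

\[ \Vert f \Vert _{H_{\omega}^{k,2}}=\Biggl(\sum_{j=0}^{k} \bigl\Vert f^{(j)} \bigr\Vert _{L_{\omega}^{2}}^{2}\Biggr)^{1/2}. \]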

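The Chebyshev polynomials are defined on the interval \([-1,1]\) and determined by the recurrence formulae

\[ T_{0}(t)=1,\qquad T_{1}(t)=t,\qquad T_{i+1}(t)=2tT_{i}(t)-T_{i-1}(t),\quad i=1,2,3,\dots \]

These polynomials are orthogonal with respect to the weight function \(\omega(t) = \frac{1}{{\sqrt{1 - {t^{2}}} }}\). With the change of variable

\[ t=\frac{2(x-t_{0})-h}{h}, \]

where \(h=t_{f}-t_{0}\), the shifted Chebyshev polynomials in x on the interval \([t_{0}, t_{f}]\) are obtained as follows:

\[ \phi_{0}(x)=1,\qquad \phi_{1}(x)=\frac{2(x-t_{0})-h}{h}, \]

and for \(i = 1,2,3,\dots\),

\[ \phi_{i+1}(x)=2\,\frac{2(x-t_{0})-h}{h}\,\phi_{i}(x)-\phi_{i-1}(x). \]

The orthogonality condition for the shifted Chebyshev basis functions is

\[ \int_{t_{0}}^{t_{f}}\phi_{i}(x)\phi_{j}(x)\omega(x)\,dx= \begin{cases} \frac{h\pi}{2}, & i=j=0,\\ \frac{h\pi}{4}, & i=j\neq0,\\ 0, & i\neq j, \end{cases} \]

where \(\omega(x) = \frac{1}{{\sqrt{1 - {{(\frac{{2(x-t_{0})-h}}{{h}})}^{2}}} }}\).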
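As a numerical illustration of the recurrence and the orthogonality relation, the following Python sketch evaluates the shifted polynomials and approximates the weighted inner products with a Gauss–Chebyshev quadrature rule. It assumes the affine map \(t=(2(x-t_{0})-h)/h\) implied by the weight function above. The function names, the quadrature order `n=64`, and the sample interval \([1,3]\) are illustrative assumptions and are not taken from the original method.

```python
import math


def shifted_chebyshev(m, x, t0, tf):
    """Return [phi_0(x), ..., phi_m(x)] on [t0, tf] via the three-term recurrence."""
    h = tf - t0
    t = (2.0 * (x - t0) - h) / h          # map [t0, tf] onto [-1, 1]
    phi = [1.0, t]
    for i in range(1, m):
        phi.append(2.0 * t * phi[i] - phi[i - 1])
    return phi[: m + 1]


def weighted_inner(i, j, t0, tf, n=64):
    """Approximate the weighted integral of phi_i * phi_j over [t0, tf].

    Uses the n-point Gauss-Chebyshev rule on [-1, 1], which is exact for
    polynomial integrands of degree at most 2n - 1, and dx = (h / 2) dt.
    """
    h = tf - t0
    total = 0.0
    for k in range(1, n + 1):
        t = math.cos((2 * k - 1) * math.pi / (2 * n))
        x = t0 + h * (t + 1.0) / 2.0
        phi = shifted_chebyshev(max(i, j), x, t0, tf)
        total += phi[i] * phi[j]
    return (h / 2.0) * (math.pi / n) * total


t0, tf = 1.0, 3.0                          # hypothetical interval, h = 2
print(weighted_inner(0, 0, t0, tf))        # should be close to h*pi/2
print(weighted_inner(3, 3, t0, tf))        # should be close to h*pi/4
print(weighted_inner(2, 5, t0, tf))        # should be close to 0
```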

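It is well known that \(Y=\operatorname{span}\{ {\phi_{0}},{\phi_{1}}, \ldots ,{\phi_{m}}\}\) is a complete subspace of \(H=L_{\omega}^{2}[t_{0},t_{f}]\). So, each \(f \in H\) has a unique best approximation \(y_{m}\in Y\), that is,

\[ \forall y\in Y:\quad \Vert f-y_{m} \Vert _{L_{\omega}^{2}}\leq \Vert f-y \Vert _{L_{\omega}^{2}},\qquad f(x)\simeq y_{m}(x)=\sum_{j=0}^{m}c_{j}\phi_{j}(x)=C^{T}\varPhi(x), \]

where \({C^{T}} = ({c_{0}},{c_{1}},\ldots,{c_{m}})\) such that the coefficients \(c_{j}\) are uniquely calculated by \({c_{j}} = \frac{{{{ \langle{f,{\phi_{j}}} \rangle }_{L_{\omega}^{2}}}}}{{ \Vert {{\phi_{j}}} \Vert _{L_{\omega}^{2}}^{2}}}\) and

\[ \varPhi(x)=\bigl[\phi_{0}(x),\phi_{1}(x),\ldots,\phi_{m}(x)\bigr]^{T}. \tag{3} \]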

For more details, see [31].
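The coefficient formula \(c_{j}=\langle f,\phi_{j}\rangle_{L_{\omega}^{2}}/\Vert\phi_{j}\Vert_{L_{\omega}^{2}}^{2}\) can be illustrated numerically. The Python sketch below approximates the inner products with a Gauss–Chebyshev rule and uses the standard values \(\Vert\phi_{0}\Vert^{2}=h\pi/2\) and \(\Vert\phi_{j}\Vert^{2}=h\pi/4\) for \(j\geq1\). The function names, the quadrature order, and the test function \(e^{x}\) on \([0,1]\) are illustrative assumptions.

```python
import math


def shifted_chebyshev(m, x, t0, tf):
    """Return [phi_0(x), ..., phi_m(x)] on [t0, tf] via the three-term recurrence."""
    h = tf - t0
    t = (2.0 * (x - t0) - h) / h
    phi = [1.0, t]
    for i in range(1, m):
        phi.append(2.0 * t * phi[i] - phi[i - 1])
    return phi[: m + 1]


def chebyshev_coefficients(f, m, t0, tf, n=64):
    """Approximate c_j = <f, phi_j> / ||phi_j||^2 for j = 0, ..., m."""
    h = tf - t0
    sums = [0.0] * (m + 1)
    for k in range(1, n + 1):
        t = math.cos((2 * k - 1) * math.pi / (2 * n))
        x = t0 + h * (t + 1.0) / 2.0
        fx = f(x)
        for j, p in enumerate(shifted_chebyshev(m, x, t0, tf)):
            sums[j] += fx * p
    # <f, phi_j> ~ (h/2)(pi/n) * sums[j]; dividing by h*pi/2 (j = 0) or
    # h*pi/4 (j >= 1) leaves sums[0]/n and 2*sums[j]/n respectively.
    return [sums[0] / n] + [2.0 * s / n for s in sums[1:]]


def evaluate(c, x, t0, tf):
    """Evaluate y_m(x) = sum_j c_j phi_j(x)."""
    phi = shifted_chebyshev(len(c) - 1, x, t0, tf)
    return sum(cj * pj for cj, pj in zip(c, phi))


c = chebyshev_coefficients(math.exp, 8, 0.0, 1.0)
print(abs(evaluate(c, 0.3, 0.0, 1.0) - math.exp(0.3)))  # truncation error at x = 0.3
```

For a smooth function such as \(e^{x}\), the coefficients decay rapidly, which is the behavior that the spectral estimates of the next proposition quantify.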

Proposition 1

([22])

Assume that \(f\in H_{\omega}^{k,2}(t_{0},t_{f})\) and \({{{\mathcal {P}}_{m}}f}=\sum_{i = 0}^{m} {{c_{i}}{\phi_{i}}}\) is the truncated orthogonal Chebyshev series of \(f\) and \({{{\mathcal {I}}_{m}}f}\) is the interpolation of \(f\) at the Chebyshev–Gauss points. Then

where

\(h=t_{f}-t_{0}\) and \(C\), \(\widetilde{C}\) are constants independent of \(m\) and \(f\).

The derivative of \(\varPhi(x)\), defined in (3), can be given by

\[ \varPhi'(x)=D\varPhi(x), \]

where the \((m+1)\times(m+1)\) matrix D is called the operational matrix of derivative for the Chebyshev polynomials in \([t_{0},t_{f}]\). Straightforward computations show that each entry of \(D=[d_{ij}]_{(m+1)\times(m+1)}\) for \(i,j=1,\ldots,m+1\) is defined as follows [32]:
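For illustration, one standard closed form of the derivative matrix follows from the classical identity \(T_{n}'(t)=2n\sum_{k<n,\ n-k\ \text{odd}}T_{k}(t)/c_{k}\) with \(c_{0}=2\) and \(c_{k}=1\) for \(k\geq1\), scaled by \(2/h\) for the shifted basis. The Python sketch below builds D with 0-based indices under this assumption; the indexing convention and the function name are illustrative and may differ from those of [32].

```python
def derivative_matrix(m, t0, tf):
    """Return the (m+1) x (m+1) matrix D with Phi'(x) = D Phi(x) (0-based indices)."""
    h = tf - t0
    D = [[0.0] * (m + 1) for _ in range(m + 1)]
    for n in range(1, m + 1):
        # Row n expands phi_n' in phi_k for k = n-1, n-3, ... (n - k odd)
        for k in range(n - 1, -1, -2):
            c_k = 2.0 if k == 0 else 1.0
            D[n][k] = (2.0 / h) * 2.0 * n / c_k
    return D


# On [-1, 1] the scaling factor 2/h equals 1, so the rows can be read directly:
# T_1' = T_0, T_2' = 4 T_1, T_3' = 3 T_0 + 6 T_2.
for row in derivative_matrix(3, -1.0, 1.0):
    print(row)
```

The matrix is strictly lower triangular, so differentiating a degree-m expansion never produces terms outside the span of \(\phi_{0},\ldots,\phi_{m}\).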

3 Main results

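Let us approximate each entry of \(P(t)=[p_{ij}(t)]_{p\times q}\) in (1) by the Chebyshev polynomials. Consequently, we have

\[ p_{ij}(t)\simeq{\mathcal{A}}_{ij}\varPhi(t),\quad i=1,\ldots,p,\ j=1,\ldots,q, \]

where \({\mathcal{A} }_{ij}\in\mathbb{R}^{1\times(m+1)}\) are the unknown row vectors and m is the degree of the Chebyshev approximation. We can write

\[ P(t)\simeq P_{m}(t)=\overline{\mathcal{A}}\bigl(I_{q}\otimes\varPhi(t)\bigr), \tag{7} \]

where ⊗ denotes the Kronecker product of two matrices, \(\overline{\mathcal {A}} \in\mathbb{R}^{p\times q(m+1)} \), and

\[ \overline{\mathcal{A}}= \begin{bmatrix} {\mathcal{A}}_{11} & \cdots & {\mathcal{A}}_{1q}\\ \vdots & \ddots & \vdots\\ {\mathcal{A}}_{p1} & \cdots & {\mathcal{A}}_{pq} \end{bmatrix}. \]

Thus

\[ P'(t)\simeq\overline{\mathcal{A}}\bigl(I_{q}\otimes D\varPhi(t)\bigr). \tag{8} \]

By substituting equations (7) and (8) in (1), we derive the residual

\[ R_{m}(t)=\overline{\mathcal{A}}\bigl(I_{q}\otimes D\varPhi(t)\bigr)-A(t)\overline{\mathcal{A}}\bigl(I_{q}\otimes\varPhi(t)\bigr)-\overline{\mathcal{A}}\bigl(I_{q}\otimes\varPhi(t)\bigr)B(t)-Q(t). \tag{9} \]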

We discretize the above equation at m points \(\xi_{i}\) (\(1\leq i\leq m\)) such that \(R_{m}(\xi_{i})=0_{p\times q}\). The selected collocation points are the roots of \(\phi_{m}(t)\) (the Chebyshev–Gauss nodes in \([t_{0},t_{f}]\)). These m roots that we use as the collocation nodes are defined by

\[ \xi_{i}=t_{0}+\frac{h}{2}\biggl(\cos\frac{(2i-1)\pi}{2m}+1\biggr),\quad i=1,\ldots,m, \]

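which are all in \([t_{0},t_{f}]\). Substituting the nodes \(\xi_{i }\) into (9), we obtain the coupled equations

\[ \overline{\mathcal{A}}\mathcal{C}_{i}-\mathcal{D}_{i}\overline{\mathcal{A}}\mathcal{E}_{i}-\overline{\mathcal{A}}\mathcal{F}_{i}=\mathcal{G}_{i},\quad i=1,\ldots,m, \]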

where \(\mathcal{C}_{i}=I_{q} \otimes D \varPhi(\xi_{i})\), \(\mathcal{D}_{i}=A(\xi_{i})\), \(\mathcal{E}_{i}=I_{q} \otimes\varPhi (\xi_{i})\), \(\mathcal{F}_{i}=(I_{q} \otimes\varPhi(\xi_{i}))B(\xi_{i})\), and \(\mathcal{G}_{i}=Q(\xi_{i})\). Moreover, from the initial condition we set \(\overline{ \mathcal{A} } (I_{q}\otimes\varPhi(t_{0}))=P(t_{0}) \) and define \(\xi_{0}=t_{0}\), \(\mathcal{C}_{0}=0_{q(m+1)\times q}\), \(\mathcal{D}_{0}=I_{p}\), \(\mathcal{E}_{0}=I_{q} \otimes\varPhi(t_{0})\), \(\mathcal{F}_{0}=0_{q(m+1)\times q}\), and \(\mathcal{G}_{0}=-P(t_{0})\). Therefore, we may solve the coupled equations

\[ X\mathcal{H}_{i}-\mathcal{D}_{i}X\mathcal{E}_{i}=\mathcal{G}_{i},\quad i=0,1,\ldots,m, \tag{10} \]

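where \(\mathcal{H}_{i}=\mathcal{C}_{i}-\mathcal{F}_{i}\) and \(X:=\overline{\mathcal{A} }\). Using the following relation [2]

\[ \operatorname{vec}(AXB)=\bigl(B^{T}\otimes A\bigr)\operatorname{vec}(X), \]

and the operator “vec”, which transforms a matrix A of size \({m\times s}\) into a vector \(a=\operatorname{vec}(A)\) of size \(ms \times 1\) by stacking the columns of A, equations (10) are equivalent to the linear system \(Ax=b\) with the following parameters:

\[ A_{i}=\mathcal{H}_{i}^{T}\otimes I_{p}-\mathcal{E}_{i}^{T}\otimes\mathcal{D}_{i},\qquad x=\operatorname{vec}(X),\qquad b_{i}=\operatorname{vec}(\mathcal{G}_{i}),\quad i=0,1,\ldots,m, \]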

where \(A_{i}\) and \(b_{i}\) denote the rows of A and b, respectively, and \(I_{p}\) is the identity matrix of order p. We can solve the above linear system by classical schemes such as Krylov subspace methods, but the size of the coefficient matrix may be too large. So, it is more advisable to apply an iterative scheme to solve the coupled linear matrix equations rather than the linear system.
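The vectorization step can be checked on a small example. The Python sketch below verifies that \(\operatorname{vec}(X\mathcal{H}-\mathcal{D}X\mathcal{E})=(\mathcal{H}^{T}\otimes I_{p}-\mathcal{E}^{T}\otimes\mathcal{D})\operatorname{vec}(X)\), which follows from the relation \(\operatorname{vec}(AXB)=(B^{T}\otimes A)\operatorname{vec}(X)\). The helper names and the integer test matrices (with \(p=2\), \(q=1\), \(m=2\)) are hypothetical and chosen only for illustration.

```python
def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def sub(A, B):
    return [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def kron(A, B):
    """Kronecker product of A and B as a list of rows."""
    return [[a * b for a in row_a for b in row_b] for row_a in A for row_b in B]


def vec(A):
    """Stack the columns of A into one flat list."""
    return [A[i][j] for j in range(len(A[0])) for i in range(len(A))]


def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]


# Hypothetical data: X is p x q(m+1) = 2 x 3, H and E are 3 x 1, Dm is 2 x 2
X = [[1, 2, 3], [4, 5, 6]]
H = [[1], [0], [2]]
E = [[1], [1], [1]]
Dm = [[0, 1], [1, 0]]
I2 = [[1, 0], [0, 1]]

lhs = vec(sub(matmul(X, H), matmul(matmul(Dm, X), E)))       # vec(X H - D X E)
A_block = sub(kron(transpose(H), I2), kron(transpose(E), Dm))  # H^T (x) I_p - E^T (x) D
rhs = matvec(A_block, vec(X))
print(lhs, rhs)
```

The block `A_block` has \(pq\) rows and \(pq(m+1)\) columns, so stacking the \(m+1\) blocks gives a square system of order \(pq(m+1)\); this growth is the reason given above for preferring a matrix-level iteration.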

3.1 Solving the coupled matrix equations

We propose a new iterative algorithm to solve (10), which is essentially based on Paige’s algorithm. Recently, Paige’s algorithm has been extended to find the bisymmetric minimum norm solution of the coupled linear matrix equations [27]

\[ A_{1}XB_{1}=C_{1},\qquad A_{2}XB_{2}=C_{2}. \]

Using the “\(\operatorname{vec}(\cdot)\)” operator, the authors elaborated on how Paige’s algorithm can be derived. The reported results reveal the superior convergence properties of their algorithm in comparison to the algorithms derived via the extension of the conjugate gradient method [29], which was presented in the literature for solving different types of coupled linear matrix equations. This motivates us to generalize Paige’s algorithm to resolve (10).

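For simplicity, let us consider the linear operator \(\mathcal{M}\) defined as

\[ \mathcal{M}:\mathbb{R}^{p\times q(m+1)}\rightarrow\underbrace{\mathbb{R}^{p\times q}\times\cdots\times\mathbb{R}^{p\times q}}_{m+1},\qquad \mathcal{M}(X)=\bigl(M_{0}(X),M_{1}(X),\ldots,M_{m}(X)\bigr), \]

where \(M_{i} (X) =X \mathcal{H}_{i}-\mathcal{D}_{i} X \mathcal{E}_{i}\), \(i=0,1,\ldots,m\). Using the above operator for equation (10), we have

\[ \mathcal{M}(X)=\mathcal{G}, \]

where \(\mathcal{G}=(\mathcal{G}_{0},\mathcal{G}_{1},\ldots,\mathcal{G}_{m})\) and \(\mathcal{G}_{i}\in\mathbb{R}^{p\times q}\), \(i=0,1,\ldots,m\). Suppose that the linear operator \(\mathcal{D}\) is defined as

\[ \mathcal{D}:\underbrace{\mathbb{R}^{p\times q}\times\cdots\times\mathbb{R}^{p\times q}}_{m+1}\rightarrow\mathbb{R}^{p\times q(m+1)},\qquad Y=(Y_{0},Y_{1},\ldots,Y_{m})\mapsto\mathcal{D}(Y), \]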

where \(\mathcal{D}(Y)= \sum_{i=0}^{m} (Y_{i}\mathcal{ H}_{i}^{T} - \mathcal{D}_{i}^{T} Y_{i} \mathcal{E}_{i}^{T} )\).
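The operator \(\mathcal{D}\) appears to play the role that the transpose plays in a bidiagonalization-type iteration, that is, the adjoint of \(\mathcal{M}\) with respect to the trace inner product. The Python sketch below checks the identity \(\langle\mathcal{M}(X),Y\rangle=\langle X,\mathcal{D}(Y)\rangle\) on a small example, assuming \(M_{i}(X)=X\mathcal{H}_{i}-\mathcal{D}_{i}X\mathcal{E}_{i}\) as above. The helper names and the integer test data are hypothetical.

```python
def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def add(A, B):
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def sub(A, B):
    return [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def inner(A, B):
    """Trace inner product tr(A^T B) of two equally sized matrices."""
    return sum(a * b for ra, rb in zip(A, B) for a, b in zip(ra, rb))


def apply_M(X, Hs, Ds, Es):
    """M(X) = (X H_i - D_i X E_i) for i = 0, ..., m."""
    return [sub(matmul(X, H), matmul(matmul(D, X), E)) for H, D, E in zip(Hs, Ds, Es)]


def apply_adjoint(Y, Hs, Ds, Es):
    """D(Y) = sum_i (Y_i H_i^T - D_i^T Y_i E_i^T)."""
    total = None
    for Yi, H, D, E in zip(Y, Hs, Ds, Es):
        term = sub(matmul(Yi, transpose(H)),
                   matmul(matmul(transpose(D), Yi), transpose(E)))
        total = term if total is None else add(total, term)
    return total


# Hypothetical data with two coupled equations (i = 0, 1)
X = [[1, 2], [3, 4]]
Hs = [[[1], [2]], [[0], [1]]]
Ds = [[[1, 0], [0, 1]], [[2, 1], [0, 1]]]
Es = [[[1], [1]], [[1], [0]]]
Y = [[[1], [-1]], [[2], [3]]]

lhs = sum(inner(Mi, Yi) for Mi, Yi in zip(apply_M(X, Hs, Ds, Es), Y))
rhs = inner(X, apply_adjoint(Y, Hs, Ds, Es))
print(lhs, rhs)
```

Working with `apply_M` and `apply_adjoint` directly avoids forming the Kronecker-structured coefficient matrix of the equivalent linear system.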

In Algorithm 2, ϵ is a given small tolerance used in computing the unique minimum Frobenius norm solution; for computational purposes, we choose \(\epsilon=10^{-14}\).

The process of solving matrix equations (10)

3.2 Implementing the method

We employ the step-by-step method for solving (1). To do so, we choose a step size \({s}\neq0\), start with \(x_{0}:=t_{0}\), \(Z_{0}:=P(x_{0})\), and consider the points \(x_{i}=x_{0}+i{s} \), \(i=1,2,3,\dots\). On each subinterval \([x_{i} ,x_{i+1})\) for \(i=0,1,\ldots,[\frac{t_{f}-t_{0}}{s}]-1\), by solving the following equations

\[ Z'(t)=A(t)Z(t)+Z(t)B(t)+Q(t),\quad x_{i}\leq t< x_{i+1},\qquad Z(x_{i})=Z_{i}, \]

with the framework described in Sect. 3.1, we consecutively approximate \(P(t)\) by \(Z(t)\). Then, to compute the approximate solution \(Z(t)\) of \(P(t)\) on the next subinterval, we set \(Z_{i+1}=Z(x_{i+1})\).
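The step-by-step procedure can be sketched for the scalar case \(p=q=1\), assuming the form \(P'(t)=a(t)P(t)+P(t)b(t)+Q(t)\) of (1). The Python sketch below collocates at the Chebyshev–Gauss nodes of each subinterval and passes the end value on as the next initial value. Note that it replaces the iterative Algorithm 2 with plain Gaussian elimination, purely to keep the example short; all function names and the test problem are illustrative assumptions.

```python
import math


def cheb(m, x, x0, x1):
    """[phi_0(x), ..., phi_m(x)] for the shifted Chebyshev basis on [x0, x1]."""
    h = x1 - x0
    t = (2.0 * (x - x0) - h) / h
    phi = [1.0, t]
    for i in range(1, m):
        phi.append(2.0 * t * phi[i] - phi[i - 1])
    return phi[: m + 1]


def cheb_deriv(m, x, x0, x1):
    """[phi_0'(x), ..., phi_m'(x)] from T_n' = 2n * sum T_k / c_k over odd n - k."""
    phi, scale, out = cheb(m, x, x0, x1), 2.0 / (x1 - x0), []
    for n in range(m + 1):
        s = sum(2.0 * n * phi[k] / (2.0 if k == 0 else 1.0)
                for k in range(n - 1, -1, -2))
        out.append(scale * s)
    return out


def solve(A, b):
    """Gaussian elimination with partial pivoting (a stand-in for Algorithm 2)."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[piv] = M[piv], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for j in range(c, n + 1):
                M[r][j] -= f * M[c][j]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][j] * x[j] for j in range(r + 1, n))) / M[r][r]
    return x


def collocate(a, b, Q, x0, x1, Z0, m):
    """Coefficients of the degree-m approximation on [x0, x1] with Z(x0) = Z0."""
    h = x1 - x0
    rows, rhs = [cheb(m, x0, x0, x1)], [Z0]          # initial condition row
    for i in range(1, m + 1):
        xi = x0 + h * (math.cos((2 * i - 1) * math.pi / (2 * m)) + 1.0) / 2.0
        phi, dphi = cheb(m, xi, x0, x1), cheb_deriv(m, xi, x0, x1)
        rows.append([d - (a(xi) + b(xi)) * p for p, d in zip(phi, dphi)])
        rhs.append(Q(xi))
    return solve(rows, rhs)


def step_by_step(a, b, Q, t0, tf, P0, m, s):
    """March over subintervals of length s and return the approximation at tf."""
    steps = round((tf - t0) / s)   # rounding avoids floating-point floor issues
    x, Z = t0, P0
    for _ in range(steps):
        c = collocate(a, b, Q, x, x + s, Z, m)
        Z = sum(ck * pk for ck, pk in zip(c, cheb(m, x + s, x, x + s)))
        x += s
    return Z


# Hypothetical test problem: P' = -P, P(0) = 1, exact solution exp(-t)
z = step_by_step(lambda t: -0.5, lambda t: -0.5, lambda t: 0.0, 0.0, 1.0, 1.0, 5, 0.1)
print(abs(z - math.exp(-1.0)))
```

In the matrix case, the rows assembled in `collocate` correspond to the coupled equations (10), and the direct solve would be replaced by the iterative scheme of Sect. 3.1.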

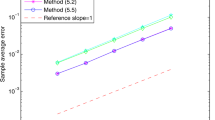

3.3 Error estimation

We present an error analysis based on the approach used for Volterra-type integral equations [33, 34].

Definition 4

Let \(F(x)=[f_{ij}(x)]\) be a matrix of order \(p\times q\) defined on \([t_{0},t_{f}]\) such that \(f_{ij}(x)\in L_{\omega}^{2}[t_{0},t_{f}]\). Then we define

Theorem 1

Consider the Sylvester matrix differential problem (1), where \(p_{ij} \in H_{\omega}^{k}(x_{l},x_{l+1})\), \(h_{l}=x_{l+1}-{x_{l}}\), \(A(t)=[a_{ij}(t)]_{p\times p}\), \(B(t)=[b_{ij}(t)]_{q\times q}\), and \(Q(t)=[q_{ij}(t)]_{p\times q}\) are given so that \(a_{ij}(t)\), \(b_{ij}(t)\), and \(q_{ij}(t)\) are sufficiently smooth. Also, assume that \(P_{m}=\overline{\mathcal {A}} ({I_{q}} \otimes\varPhi)\) denotes the approximation of \(P\). Furthermore, suppose that \({M_{1}} = \max_{i,j} \max_{{x_{l}} \le t \le{x_{l + 1}}} \vert {{a_{ij}}(t)} \vert \) and \({M_{2}} = \max_{i,j} \max_{{x_{l}} \le t \le{x_{l + 1}}} \vert {{b_{ij}}(t)} \vert \). Then the following statement holds:

where \(\widetilde{C}\) is a finite constant.

Proof

Integrating (1) in \([x_{l},x]\) results in

For \(\xi_{0}=x_{l}\) and the Chebyshev–Gauss nodes \(\xi_{n}\), \(1\leq n\leq m\), on the interval \([x_{l},x_{l+1}]\), we have

From (12), we obtain

where \(E=[e_{ij}]_{p\times q}=P_{m}-P\). For \(L_{n}\) as the Lagrange interpolating polynomial, we have

Subtracting this equation from (11) yields

where

and

Since \(P_{m}\) is a polynomial of order m, we may rewrite (14) in the form

By applying the Gronwall inequality to (15), we have

Since \(A(t)\), \(B(t)\), and \(Q(t)\) are sufficiently smooth, for \(R_{1}(x)\) and \(R_{2}(x)\) we obtain the following results. From Definition 4,

in which \(f(x) = \int_{{x_{l}}}^{x} {(\sum_{\upsilon = 1}^{p} {{a_{i\upsilon}}(t){e_{\upsilon j}}(t) + {\sum_{\upsilon = 1}^{q} {{e_{i\upsilon}}(t){b_{\upsilon j}}(t)} } } } )\,dt\). Using (5) for \(k=1\) and (4), it can be deduced that

Also, for \(R_{2}(x)\), we derive that

Now the assertion can be concluded from (16)–(18) immediately. □

4 Numerical simulations

In this section, we show the application of the Chebyshev collocation method to solve (1). We would like to point out that at each subinterval \([x_{i} , x_{i+1}]\), \(i=0,1,\dots\), Algorithm 2 is applied. We use the notations

where \(Z(t)=[Z_{ij}(t)]_{p\times q}\) and \(P(t)=[P_{ij}(t)]_{p\times q}\) denote the approximate and exact solutions, respectively.

Example 1

Consider the Sylvester equation [8]

where

with the exact solution

The results obtained by the spline method [8] and by our method with \(m=5\), \(h=0.1\) are given in Table 1. The results show that our method yields smaller errors than the method of [8].

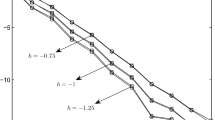

Example 2

Consider the periodic Lyapunov equation

where

The period of the problem is 2π. The exact solution of this Lyapunov differential equation is

The corresponding numerical results of Example 2 with \(h=1\) and \(h=0.1\) are reported in Tables 2 and 3, respectively.

The CPU runtimes of Examples 1 and 2 are reported in Table 4. For these computations, we used the AbsoluteTiming[⋅] function in Wolfram Mathematica 12 on a system with a Pentium Dual Core T4500 2.3 GHz CPU and 4 GB of RAM.

5 Conclusions

The Sylvester and Lyapunov equations have numerous notable applications in the analysis and design of control systems; for instance, the Sylvester differential equations are useful in solving periodic eigenvalue assignment problems. In this paper, the properties of the Chebyshev basis have been employed to solve the Sylvester equations within a new framework. Our approach converts the main problem into coupled linear matrix equations, and an iterative algorithm was proposed for solving them. An error estimation of the method was also obtained. Numerical experiments have been presented to show the applicability of our scheme.

References

Barnett, S.: Matrices in Control Theory with Applications to Linear Programming. Van Nostrand-Reinhold, New York (1971)

Bernstein, D.S.: Matrix Mathematics: Theory, Facts, and Formulas. Princeton University Press, Princeton (2009)

Doha, E., Bhrawy, A., Saker, M.: On the derivatives of Bernstein polynomials: an application for the solution of high even-order differential equations. Bound. Value Probl. 2011, Article ID 829543 (2011)

Gajic, Z., Qureshi, M.T.J.: Lyapunov Matrix Equation in System Stability and Control. Academic Press, New York (1995)

Yang, X., Li, X.: Finite-time stability of linear non-autonomous systems with time-varying delays. Adv. Differ. Equ. 2018, Article ID 101 (2018)

Chen, L., Ma, C.: Developing CRS iterative methods for periodic Sylvester matrix equation. Adv. Differ. Equ. 2019, Article ID 87 (2019)

Defez, E., Hervás, A., Soler, L., Tung, M.M.: Numerical solutions of matrix differential models cubic spline II. Math. Comput. Model. 46, 657–669 (2007)

Defez, E., Tung, M.M., Ibáñez, J.J., Sastre, J.: Approximating and computing nonlinear matrix differential models. Math. Comput. Model. 55, 2012–2022 (2012)

Dehghan, M., Hajarian, M.: An iterative algorithm for the reflexive solutions of the generalized coupled Sylvester matrix equations and its optimal approximation. Appl. Math. Comput. 202, 571–588 (2008)

Dehghan, M., Hajarian, M.: An iterative method for solving the generalized coupled Sylvester matrix equations over generalized bisymmetric matrices. Appl. Math. Model. 34, 639–654 (2010)

Dehghan, M., Shirilord, A.: A generalized modified Hermitian and skew-Hermitian splitting (GMHSS) method for solving complex Sylvester matrix equation. Appl. Math. Comput. 348, 632–651 (2019)

Dehghan, M., Shirilord, A.: The double-step scale splitting method for solving complex Sylvester matrix equation. Comput. Appl. Math. 38, Article ID 146 (2019)

Huang, N., Ma, C.F.: Modified conjugate gradient method for obtaining the minimum-norm solution of the generalized coupled Sylvester-conjugate matrix equations. Appl. Math. Model. 40, 1260–1275 (2016)

Jarlebring, E., Mele, G., Palitta, D., Ringh, E.: Krylov methods for low-rank commuting generalized Sylvester equations. Numer. Linear Algebra Appl. 25, Article ID e2176 (2018)

Hached, M., Jbilou, K.: Computational Krylov-based methods for large-scale differential Sylvester matrix problems. Numer. Linear Algebra Appl. 25, Article ID e2187 (2018)

Varga, A.: On solving periodic differential matrix equations with applications to periodic system norms computation. In: Proceedings of the 44th IEEE Conference on Decision and Control, and the European Control Conference (2005)

Wimmer, H.K.: Contour integral solutions of Sylvester-type matrix equations. Linear Algebra Appl. 493, 537–543 (2016)

Bhrawy, A.H., Assas, L.M., Alghamdi, M.A.: An efficient spectral collocation algorithm for nonlinear Phi-four equations. Bound. Value Probl. 2013, Article ID 87 (2013)

Doha, E.H., Bhrawy, A.H., Ezz-Eldien, S.S.: Efficient Chebyshev spectral methods for solving multi-term fractional orders differential equations. Appl. Math. Model. 35, 5662–5672 (2011)

Gheorghiu, C.I.: Pseudospectral solutions to some singular nonlinear BVPs. Numer. Algorithms 68, 1–14 (2015)

Boyd, J.P.: Chebyshev and Fourier Spectral Methods. Dover, New York (2000)

Canuto, C., Hussaini, M.Y., Quarteroni, A., Zang, T.A.: Spectral Methods in Fluid Dynamics. Springer, New York (1988)

Ding, F., Chen, T.: Gradient based iterative algorithms for solving a class of matrix equations. IEEE Trans. Autom. Control 50, 1216–1221 (2005)

Ding, F., Chen, T.: Iterative least squares solutions of coupled Sylvester matrix equations. Syst. Control Lett. 54, 95–107 (2005)

Ding, F., Chen, T.: On iterative solutions of general coupled matrix equations. SIAM J. Control Optim. 44, 2269–2284 (2006)

Ding, J., Liu, Y.J., Ding, F.: Iterative solutions to matrix equations of the form \(A_{i}XB_{i} =F_{i}\). Comput. Math. Appl. 59, 3500–3507 (2010)

Liu, A., Chen, G., Zhang, X.: A new method for the bisymmetric minimum norm solution of the consistent matrix equations \(A_{1}XB_{1}=C_{1}\), \(A_{2}XB_{2}=C_{2}\). J. Appl. Math. 2013, Article ID 125687 (2013)

Paige, C.C.: Bidiagonalization of matrices and solution of linear equations. SIAM J. Numer. Anal. 11, 197–209 (1974)

Saad, Y.: Iterative Methods for Sparse Linear Systems. PWS Press, New York (1995)

Atkinson, K., Han, W.: Theoretical Numerical Analysis: A Functional Analysis Framework. Springer, New York (2009)

Kreyszig, E.: Introductory Functional Analysis with Applications. Wiley, New York (1978)

Doha, E.H., Bhrawy, A.H., Ezz-Eldien, S.S.: A Chebyshev spectral method based on operational matrix for initial and boundary value problems of fractional order. Comput. Math. Appl. 62, 2364–2373 (2011)

Pishbin, S., Ghoreishi, F., Hadizadeh, M.: A posteriori error estimation for the Legendre collocation method applied to integral-algebraic Volterra equations. Electron. Trans. Numer. Anal. 38, 327–346 (2011)

Tang, T., Xu, X., Cheng, J.: On spectral methods for Volterra type integral equations and the convergence analysis. Electron. Trans. Numer. Anal. 26, 825–837 (2008)

Acknowledgements

Not applicable.

Availability of data and materials

Not applicable.

Funding

Not applicable.

Contributions

All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Nouri, K., Beik, S.P.A., Torkzadeh, L. et al. An iterative algorithm for robust simulation of the Sylvester matrix differential equations. Adv Differ Equ 2020, 287 (2020). https://doi.org/10.1186/s13662-020-02757-z
