Abstract

We investigate the asymptotic properties of the trajectories generated by a second-order dynamical system with Hessian driven damping and a Tikhonov regularization term in connection with the minimization of a smooth convex function in Hilbert spaces. We obtain fast convergence results for the function values along the trajectories. The Tikhonov regularization term enables the derivation of strong convergence results of the trajectory to the minimizer of the objective function of minimum norm.

Similar content being viewed by others

1 Introduction

The paper of Su et al. [20] was the starting point of intensive research of second order dynamical systems with an asymptotically vanishing damping term of the form

where \(g:\mathcal {H}\longrightarrow \mathbb {R}\) is a convex and continuously Fréchet differentiable function defined on a real Hilbert space \(\mathcal {H}\) fulfilling \(\hbox {argmin}g \ne \emptyset \). The aim is to approach by the trajectories generated by this system the solution set of the optimization problem

The convergence rate of the objective function along the trajectory is in case \(\alpha >3\) of

while in case \(\alpha =3\) it is of

where \(\min g \in \mathbb {R}\) denotes the minimal value of g. Also in view of this fact, system (1) is seen as a continuous version of the celebrated Nesterov accelerated gradient scheme (see [16]). In what concerns the asymptotic properties of the generated trajectories, weak convergence to a minimizer of g as the time goes to infinity has been proved by Attouch et al. [7] (see also [6]) for \(\alpha > 3\). Without any further geometrical assumption on g, the convergence of the trajectories in the case \(\alpha \le 3\) is still an open problem.

Second order dynamical systems with a geometrical Hessian driven damping term have aroused the interest of the researchers, due to both their applications in optimization and mechanics and their natural relations to Newton and Levenberg-Marquardt iterative methods (see [2]). Furthermore, it has been observed for some classes of optimization problems that a geometrical damping term governed by the Hessian can induce a stabilization of the trajectories. In [11] the dynamical system with Hessian driven damping term

where \(\alpha \ge 3\) and \(\beta >0\), has been investigated in relation with the optimization problem (2). Fast convergence rates for the values and the gradient of the objective function along the trajectories are obtained and the weak convergence of the trajectories to a minimizer of g is shown. We would also like to mention that iterative schemes which result via (symplectic) discretizations of dynamical systems with Hessian driven damping terms have been recently formulated and investigated from the point of view of their convergence properties in [5, 18, 19].

Another development having as a starting point (1) is the investigation of dynamical systems involving a Tikhonov regularization term. Attouch, Chbani and Riahi investigated in this context in [8] the system

where \(\alpha \ge 3\) and \(\epsilon :[t_0,+\infty )\longrightarrow [0,+\infty )\). One of the main benefits of considering such a regularized dynamical system is that it generates trajectories which converge strongly to the minimum norm solution of (2). Besides that, in [8] it was proved that the fast convergence rate of the objective function values along the trajectories remains unaltered. For more insights into the role played by the Tikhonov regularization for optimization problems and, more general, for monotone inclusion problems, we refer the reader to [3, 4, 9, 15].

This being said, it is natural to investigate a second order dynamical system which combines a Hessian driven damping and a Tikhonov regularization term and to examine if it inherits the properties of the dynamical systems (3) and (4). This is the aim of the manuscript, namely the analysis in the framework of the general assumption stated below of the dynamical system

where \(\alpha \ge 3\) and \(\beta \ge 0\), and \(u_0, v_0 \in \mathcal{H}\).

General assumption:

-

\(g:\mathcal {H}\longrightarrow \mathbb {R}\) is a convex and twice Fréchet differentiable function with Lipschitz continuous gradient on bounded sets and \(\hbox {argmin}g \ne \emptyset \);

-

\(\epsilon :[t_0,+\infty )\longrightarrow [0,+\infty )\) is a nonincreasing function of class \(C^1\) fulfilling \(\lim _{t\longrightarrow +\infty }\epsilon (t)=0\).

The fact that the starting time \(t_0\) is taken as strictly greater than zero comes from the singularity of the damping coefficient \(\frac{\alpha }{t}\). This is not a limitation of the generality of the proposed approach, since we will focus on the asymptotic behaviour of the generated trajectories. Notice that if \(\mathcal {H}\) is finite-dimensional, then the Lipschitz continuity of \(\nabla g\) on bounded sets follows from the continuity of \(\nabla ^2 g\).

To which extent the Tikhonov regularization does influence the convergence behaviour of the trajectories generated by (5) can be seen even when minimizing a one dimensional function. Consider the convex and twice continuously differentiable function

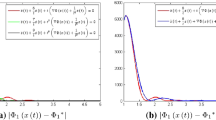

First column: the trajectories of the dynamical system with Tikhonov regularization \(\epsilon (t)=t^{-\gamma }\) are approaching the minimum norm solution \(x^*=0\). Second column: the trajectories of the dynamical system without Tikhonov regularization the trajectory are approaching the optimal solution 1

It holds that \(\hbox {argmin}g=[-1,1]\) and \(x^*=0\) is its minimum norm solution. In the second column of Fig. 1 we can see the behaviour of the trajectories generated by the dynamical system without Tikhonov regularization (which corresponds to the case when \(\epsilon \) is identically 0) for \(\beta =1\) and \(\alpha =3\) and, respectively, \(\alpha =4\). In both cases the trajectories are approaching the optimal solution 1, which is a minimizer of g, however, not the minimum norm solution.

In the first column of Fig. 1 we can see the behaviour of the trajectories generated by the dynamical system with Tikhonov parametrizations of the form \(t \mapsto \epsilon (t)=t^{-\gamma }\), for different values of \(\gamma \in (1,2)\), which is in accordance to the conditions in Theorem 4.4, \(\beta =1\) and \(\alpha =3\) and, respectively, \(\alpha =4\). The trajectories are approaching the minimum norm solution \(x^*=0\).

The organization of the paper is as follows. We start the analysis of the dynamical system (5) by proving the existence and uniqueness of a global \(C^2\)-solution. In the third section we provide two different settings for the Tikhonov parametrization \(t \mapsto \epsilon (t)\) in both of which g(x(t)) converges to \(\min g\), the minimal value of g, with a convergence rate of \(O\left( \frac{1}{t^2}\right) \) for \(\alpha =3\) and of \(o\left( \frac{1}{t^2}\right) \) for \(\alpha >3\). The proof relies on Lyapunov theory; the choice of the right energy functional plays a decisive role in this context. Weak convergence of the trajectory is also derived for \(\alpha >3\). In the last section we focus on the proof of strong convergence to a minimum norm solution: firstly, in a general setting, for the ergodic trajectory, and, secondly, in a slightly restrictive setting, for the trajectory x(t) itself.

2 Existence and uniqueness

In this section we will prove the existence and uniqueness of a global \(C^2\)-solution of the dynamical system (5). The proof of the existence and uniqueness theorem is based on the idea to reformulate (5) as a particular first order dynamical system in a suitably chosen product space (see also [11]).

Theorem 2.1

For every initial value \((u_0, v_0) \in \mathcal {H}\times \mathcal {H}\), there exists a unique global \(C^2\)-solution \(x:[t_0, + \infty ) \rightarrow \mathcal {H}\) to (5).

Proof

Let \((u_0, v_0) \in \mathcal {H}\times \mathcal {H}\). First we assume that \(\beta =0\), which gives the dynamical system (4) investigated in [8]. The statement follows from [14, Proposition 2.2(b)] (see also the discussion in [8, Section 2]).

Assume now that \(\beta >0\). We notice that \(x:[t_0,+\infty )\longrightarrow \mathcal {H}\) is a solution of the dynamical system (5), that is

if and only if \((x,y) :[t_0,+\infty )\longrightarrow \mathcal {H} \times \mathcal {H}\) is a solution of the dynamical system

which is further equivalent to

We define \(F:[t_0,+\infty ) \times \mathcal {H} \times \mathcal {H} \rightarrow \mathcal {H} \times \mathcal {H}\) by

and write (7) as

Since \({\nabla }g\) is Lipschitz continuous on bounded sets and continuously differentiable, the local existence and uniqueness theorem (see [17, Theorems 46.2 and 46.3]) guarantees the existence of a unique solution (x, y) of (8) defined on a maximum intervall \([t_0,T_{\max })\), where \(t_0 < T_{\max }\le +\infty .\) Furthermore, either \(T_{\max } = +\infty \) or \(\lim _{t \rightarrow T_{\max }} \Vert x(t)\Vert + \Vert y(t)\Vert = +\infty \). We will prove that \(T_{\max }=+\infty \), which will imply that x is the unique global \(C^2\)-solution of (5).

Consider the energy functional (see [10])

By using (5) we get

and, since \(\epsilon \) is nonincreasing and \({\nabla }^2 g(x(t))\) is positive semidefinite, we obtain that

Consequently, \(\mathcal{E}\) is nonincreasing, hence

From the fact that g is bounded from below we obtain that \(\dot{x}\) is bounded on \([t_0, T_{\max })\). Let \(\Vert \dot{x}\Vert _\infty :=\sup _{t\in [t_0, T_{\max })}\Vert \dot{x}(t)\Vert < +\infty .\)

Since \(\Vert x(t)-x(t')\Vert \le \Vert \dot{x}\Vert _\infty |t-t'|\) for all \(t,t' \in [t_0, T_{\max })\), there exists \(\lim _{t\longrightarrow T_{\max }}x(t)\), which shows that x is bounded on \([t_0, T_{\max })\). Since \(\dot{x}(t)+\beta {\nabla }g(x(t))=y(t)\) for all \(t \in [t_0, T_{\max })\) and \(\nabla g\) is Lipschitz continuous on bounded sets, it yields that y is also bounded on \([t_0,T_{\max })\). Hence \(\lim _{t \rightarrow T_{\max }} \Vert x(t)\Vert + \Vert y(t)\Vert \) cannot be \(+\infty \), thus \(T_{\max }=+\infty ,\) which completes the proof. \(\square \)

3 Asymptotic analysis

In this section we will show to which extent different assumptions we impose to the Tikhonov parametrization \(t \mapsto \epsilon (t)\) influence the asymptotic behaviour of the trajectory x generated by the dynamical system (5). In particular, we are looking at the convergence of the function g along the trajectory and the weak convergence of the trajectory.

We recall that the asymptotic analysis of the system (5) is carried out in the framework of the general assumptions stated in the introduction.

We start with a result which provides a setting that guarantees the convergence of g(x(t)) to \(\min g\) as \(t \rightarrow +\infty \).

Theorem 3.1

Let x be the unique global \(C^2\)-solution of (5). Assume that one of the following conditions is fulfilled:

-

(a)

\(\int _{t_0}^{+\infty }\frac{\epsilon (t)}{t}dt<+\infty \) and there exist \(a > 1\) and \(t_1\ge t_0\) such that

$$\begin{aligned}\dot{\epsilon }(t)\le -\frac{a\beta }{2}\epsilon ^2(t) \text{ for } \text{ every } t\ge t_1;\end{aligned}$$ -

(b)

there exists \(a >0\) and \(t_1\ge t_0\) such that

$$\begin{aligned}\epsilon (t)\le \frac{a}{t} \text{ for } \text{ every } t\ge t_1. \end{aligned}$$

If \(\alpha \ge 3\), then

Proof

Let be \(x^* \in \hbox {argmin}g\) and \(2\le b\le \alpha -1\) be fixed. We introduce the following energy functional \(\mathcal {E}_{b} : [t_0, +\infty ) \rightarrow \mathbb {R}\),

For every \(t \ge t_0\) it holds

Now, by using (5), we get for every \(t \ge t_0\)

Let be \(t_0':=\max (\beta ,t_0)\). For all \(t \ge t_0'\) the function \(g_t : \mathcal {H} \rightarrow \mathbb {R}, g_t(x)= \left( 1-\frac{\beta }{t}\right) g(x)+\frac{\epsilon (t)}{2}\Vert x\Vert ^2,\) is strongly convex, thus, one has

By taking \(x:=x(t)\) and \(y:=x^*\) we get for every \(t\ge t_0'\)

From (10), (11) and (12) it follows that for every \(t\ge t_0'\) it holds

At this point we treat the situations \(\alpha >3\) and \(\alpha =3\) separately.

The case \(\alpha > 3\) and \(2<b< \alpha -1\). We will carry out the analysis by addressing the settings provided by the conditions (a) and (b) separately.

Condition (a) holds: Assuming that condition (a) holds, there exist \(a >1\) and \(t_1\ge t_0'\) such that

Using that

(13) leads to the following estimate

which holds for every \(t\ge t_1.\)

Since \(a> 1\) and \(b > 2\), we notice that for every \(t\ge t_1\) it holds

On the other hand, we have that

and

We define \(t_2:=\max \left( t_1,\frac{2a\beta }{a-1},\frac{\beta (\alpha -2)}{b-2}\right) \). According to (15), it holds for every \(t\ge t_2\)

Condition (b) holds: Assuming now that condition (b) holds, there exist \(a >0\) and \(t_1\ge t_0'\) such that

Further, the monotonicity of \({\nabla }g\) and the fact that \({\nabla }g(x^*)=0\) implies that

Using that

(13) leads to the following estimate

for every \(t\ge t_1\).

Since \(b > 2\), we have that for every \(t\ge t_1\) it holds

On the other hand, since

holds for every \(t\ge t_1\), it follows that

We recall that

We define \(t_2:=\max \left( t_1,4\beta ,\frac{\beta (\alpha -2)}{b-2}\right) .\) According to (18), it holds for every \(t \ge t_2\)

From now on we will treat the two cases together. According to (16), in case (a), and to (20), in case (b), we obtain

for every \(t\ge t_2\), where \(l:=b \text{ and } t_2=\max \left( t_1,\frac{2a\beta }{a-1},\frac{\beta (\alpha -2)}{b-2}\right) \), in case (a), and \(l:=b+a\beta \text{ and } t_2=\max \left( t_1,4\beta ,\frac{\beta (\alpha -2)}{b-2}\right) \) in case (b).

By integrating the latter inequality on the interval \([t_2,T]\), where \(T \ge t_2\) is arbitrarily chosen, we obtain

On the other hand,

hence, for every \(T\ge \max ( \beta (b+2-\alpha ),t_3)\) we get

Obviously,

Further, Lemma A.1 applied to the functions \(\varphi (t)=t^2\) and \(f(t)=\frac{\epsilon (t)}{t}\) provides

hence,

and, consequently,

The case \(\alpha =3\) and \(b=2\). In this case the energy functional reads

for every \( t \ge t_0\). We will address again the settings provided by the conditions (a) and (b) separately.

Condition (a) holds: Relation (15) becomes

for every \(t \ge t_1\). Consequently, for \(t_3:= \max \left( t_1,\frac{\beta a}{a-1}\right) \), we have

for every \(t \ge t_3\). After multiplication with \((t-\beta )\), it yields

for every \(t\ge t_3\). Dividing by \((t-\beta )^2\) we obtain

or, equivalently,

Condition (b) holds: We define \(t_3:=\max \left( t_1,4\beta \right) \). Relation (18) becomes

for every \(t\ge t_3\). Repeating the above steps for the inequality (23) we obtain

From now on we will treat the two cases together. According to (22), in case (a), and to (24), in case (b), we obtain

for every \(t\ge t_3\), where \(l:=1 \text{ and } t_3=\max \left( t_1,\frac{\beta (\alpha -1)}{b-2}\right) \), in case (a), and \(l:=\frac{2+a\beta }{2} \text{ and } t_3=\max (t_1,4\beta )\) in case (b).

By integrating the latter inequality on an interval \([t_3,T]\), where \(T \ge t_3\) is arbitrarily chosen, we obtain

On the other hand,

for every \(t \ge t_0\), hence, for every \(T \ge \max ( \beta ,t_3)=t_3\) we get

Obviously,

Lemma A.1, applied this time to the functions \(\varphi (t)=\frac{t^3}{t-\beta }\) and \(f(t)=\frac{\epsilon (t)}{t}\), yields

Consequently,

hence

\(\square \)

Remark 3.2

One can easily notice that, in case \(\beta >0\), the fact that there exist \(a >1\) and \(t_1 \ge t_0\) such that \(\dot{\epsilon }(t)\le -\frac{a\beta }{2}\epsilon ^2(t)\) for every \(t\ge t_1\) implies that \(\int _{t_0}^{+\infty }\frac{\epsilon (t)}{t}dt<+\infty \).

The next theorem shows that, by strengthening the integrability condition \(\int _{t_0}^{+\infty }\frac{\epsilon (t)}{t}dt<+\infty \) (which is actually required in both settings (a) and (b) of Theorem 3.1), a rate of \(\mathcal {O}(1/t^2)\) ca be guaranteed for the convergence of g(x(t)) to \(\min g\).

Theorem 3.3

Let x be the unique global \(C^2\)-solution of (5). Assume that

and that one of the following conditions is fulfilled:

-

(a)

there exist \(a>1\) and \(t_1\ge t_0\) such that

$$\begin{aligned}\dot{\epsilon }(t)\le -\frac{a\beta }{2}\epsilon ^2(t) \text{ for } \text{ every } t\ge t_1;\end{aligned}$$ -

(b)

there exist \(a>0\) and \(t_1\ge t_0\) such that

$$\begin{aligned}\epsilon (t)\le \frac{a}{t} \text{ for } \text{ every } t\ge t_1.\end{aligned}$$

If \(\alpha \ge 3\), then

In addition, if \(\alpha >3\), then the trajectory x is bounded and

for every arbitrary \(x^* \in \hbox {argmin}g\).

Proof

Let be \(x^* \hbox {argmin}g\) and \(2 \le b \le \alpha -1\) fixed. We will use the energy functional introduced in the proof of the previous theorem and some of the estimate we derived for it. We will treat again the situations \(\alpha >3\) and \(\alpha =3\) separately.

The case \(\alpha > 3\) and \(2<b< \alpha -1\). As we already noticed in the proof of Theorem 3.1, according to (16), in case (a), and to (20), in case (b), we have

where \(l:=b \text{ and } t_2=\max \left( t_1,\frac{2a\beta }{a-1},\frac{\beta (\alpha -2)}{b-2}\right) \), in case (a), and \(l:=b+a\beta \text{ and } t_2=\max \left( t_1,4\beta ,\frac{\beta (\alpha -2)}{b-2}\right) \) in case (b).

Using that \(t\epsilon (t)\in L^1([t_0,+\infty ),\mathbb {R})\) and that \(t \mapsto \mathcal {E}_{b}(t)\) is bounded from below, from Lemma A.2 it follows that the limit \(\lim _{t\longrightarrow +\infty }\mathcal {E}_{b}(t)\) exists. Consequently, \(t \mapsto \mathcal {E}_{b}(t)\) is bounded, which implies that there exist \(K>0\) and \(t'\ge t_0\) such that

In addition, the function \(t \mapsto \Vert x(t)-x^*\Vert ^2\) is bounded, hence the trajectory x is bounded. Since \(t \mapsto \Vert b(x(t)-x^*)+t(\dot{x}(t)+\beta {\nabla }g(x(t)))\Vert ^2\) is also bounded, the inequality

which is true for every \(t \ge t_0\), leads to

By integrating relation (16), in case (a), and relation (20), in case (b), on an interval \([t_2, s]\), where \(s \ge t_3\) is arbitrarily chosen, and by letting afterwards s converge to \(+\infty \), we obtain

The boundedness of the trajectory and the condition on the Tikhonov parametrization guarantee that

The case \(\alpha = 3\) and \(b=2\). As we already noticed in the proof of Theorem 3.1, according to (22), in case (a), and to (24), in case (b), we obtain

where \(l=1 \text{ and } t_3=\max \left( t_1,\frac{\beta (\alpha -1)}{b-2}\right) \), in case (a), and \(l=\frac{2+a\beta }{2} \text{ and } t_3=\max (t_1,4\beta )\) in case (b).

Since \(t\epsilon (t)\in L^1([t_0,+\infty ),\mathbb {R})\) and \(\epsilon (t)\) is nonnegative, obviously \(\frac{t^2}{t-\beta }\epsilon (t)\Vert x^*\Vert ^2\in L^1([t_2,+\infty ),\mathbb {R})\). Using that \(t \mapsto \frac{t}{t-\beta }\mathcal {E}_{2}(t)\) is bounded from below, from Lemma A.2 it follows that the limit \(\lim _{t\longrightarrow +\infty }\frac{t}{t-\beta }\mathcal {E}_{2}(t)\) exists. Consequently, the limit \(\lim _{t\longrightarrow +\infty }\mathcal {E}_{2}(t)\) also exists and \(t \mapsto \mathcal {E}_{2}(t)\) is bounded. This implies that there exist \(K>0\) and \(t'\ge t_0\) such that

\(\square \)

The next result shows that the statements of Theorem 3.3 can be strengthened in case \(\alpha >3\).

Theorem 3.4

Let x be the unique global \(C^2\)-solution of (5). Assume that

and that one of the following conditions is fulfilled:

-

(a)

there exist \(a>1\) and \(t_1\ge t_0\) such that

$$\begin{aligned}\dot{\epsilon }(t)\le -\frac{a\beta }{2}\epsilon ^2(t) \text{ for } \text{ every } t\ge t_1;\end{aligned}$$ -

(b)

there exist \(a>0\) and \(t_1\ge t_0\) such that

$$\begin{aligned}\epsilon (t)\le \frac{a}{t} \text{ for } \text{ every } t\ge t_1.\end{aligned}$$

Let be an arbitrary \(x^* \in \hbox {argmin}g\). If \(\alpha > 3\), then

and the limits

exist. In addition,

Proof

Since \(\alpha >3\) we can choose \(2<b< \alpha -1\). From (10) and (11) we have that

We will address the settings provided by the conditions (a) and (b) separately.

Condition (a) holds: In this case we estimate \(-\beta \epsilon (t)t^2\langle {\nabla }g(x(t)),x(t)\rangle \) just as in (14) and from (25) we obtain

We define \(t_2:=\max \left( \beta ,t_1,\frac{\beta a}{a-1}\right) .\) By using condition (a), neglecting the nonpositive terms and afterwards integrating on the interval \([t_2,t]\), with arbitrary \(t \ge t_2\), we obtain

For every \(s\ge t_2\), by the monotonicity of \({\nabla }g\), we have \(\left\langle {\nabla }g(x(s)), x(s)-x^*\right\rangle \ge 0\). Further, it holds

By letting in (27) s converge to \(+\infty \) and by taking into account that, according to Theorem 3.3,

Condition (b) holds: In this case we estimate \(-\beta \epsilon (t)t^2\langle {\nabla }g(x(t)),x(t)\rangle \) just as in (17) and from (25) we obtain

We define \(t_2:=\max \left( 4\beta ,t_1\right) .\) According to (19) we have that \(\beta ^2 t-\beta t^2+\frac{\beta \epsilon (t)t^3}{2a_1}\le 0\) for every \(t\ge t_2\). By using condition (b), neglecting the nonpositive terms and afterwards integrating on the interval \([t_2,t]\), with arbitrary \(t \ge t_2\), we obtain

From here, by using the similar arguments as for the case (a), we obtain (28).

Consider now, \(b_1,b_2\in (2, \alpha -1),\,b_1\ne b_2.\) Then for every \(t \ge t_0\) we have

According to Theorem 3.3, the limits

exist, consequently, the limit

also exists. For every \(t \ge t_0\) we define

and

Then

From (28) and the fact that k(t) has a limit whenever \(t\longrightarrow +\infty \), we obtain that \((\alpha -1)q(t)+t\dot{q}(t)\) has a limit when \(t\longrightarrow +\infty \). According to Lemma 4.6, q(t) has a limit when \(t\longrightarrow +\infty .\) By using (28) again we obtain that the limit

exists and, consequently, the limit

also exists. On the other hand, we notice that for every \(t \ge t_0\) the energy functional can be written as

Since the limits

exist, it follows that the limit

exists, too.

We define

and notice that for sufficiently large t it holds

According to Theorem 3.3 the right hand side of the above inequality is of class \(L^1([t_0,+\infty ),\mathbb {R}).\)

Hence,

Since \(\frac{1}{t}\not \in L^1([t_0,+\infty ),\mathbb {R})\) and the limit \(\lim _{t \longrightarrow +\infty } \varphi (t) \in \mathbb {R}\) exists, it must hold that \(\lim _{t\longrightarrow +\infty }\varphi (t)=0.\) Consequently,

and the proof is complete. \(\square \)

Working in the hypotheses of Theorem 3.4 we can prove also the weak convergence of the trajectories generated by (5) to a minimizer of the objective function g.

Theorem 3.5

Let x be the unique global \(C^2\)-solution of (5). Assume that

and that one of the following conditions is fulfilled:

-

(a)

there exist \(a>1\) and \(t_1\ge t_0\) such that

$$\begin{aligned}\dot{\epsilon }(t)\le -\frac{a\beta }{2}\epsilon ^2(t) \text{ for } \text{ every } t\ge t_1;\end{aligned}$$ -

(b)

there exist \(a>0\) and \(t_1\ge t_0\) such that

$$\begin{aligned}\epsilon (t)\le \frac{a}{t} \text{ for } \text{ every } t\ge t_1.\end{aligned}$$

If \(\alpha > 3\), then x(t) converges weakly to an element in \(\hbox {argmin}g\) as \(t \longrightarrow +\infty \).

Proof

We will to apply the continuous version of the Opial Lemma (Lemma A.3) for \(S=\hbox {argmin}g.\) According to Theorem 3.4, the limit

exists for every \(x^*\in \hbox {argmin}g\).

Further, let \(\overline{x}\in \mathcal {H}\) be a weak sequential limit point of x(t). This means that there exists a sequence \((t_n)_{n \in \mathbb {N}} \subseteq [t_0, +\infty )\) such that \(\lim _{n \longrightarrow \infty } t_n = +\infty \) and \(x(t_n)\) converges weakly to \(\overline{x}\) as \(n \longrightarrow \infty \). Since g is weakly lower semicontinuous, we have that

On the other hand, according to Theorem 3.3,

consequently one has \(g(\overline{x})\le \min g\), which shows that \(\overline{x}\in \hbox {argmin}g.\)

The convergence of the trajectory is a consequence of Lemma A.3. \(\square \)

Remark 3.6

We proved in this section that the convergence rate of \(o\left( \frac{1}{t^2}\right) \) for g(x(t)), the converge rate of \(o\left( \frac{1}{t}\right) \) for \(\Vert \dot{x}(t)+\beta {\nabla }g(x(t))\Vert \) and the weak convergence of the trajectory to a minimizer of g that have been obtained in [11] for the dynamical system with Hessian driven damping (3) are preserved when this system is enhanced with a Tikhonov regularization term. In addition, in the case when the Hessian driven damping term is removed, which is the case when \(\beta =0\), we recover the results provided in [8] for the dynamical system (4) with Tikhonov regularization term. In this setting, we have to assume in Theorem 3.1 just that \(\int _{t_0}^{+\infty }\frac{\epsilon (t)}{t}dt<+\infty \), and in the theorems 3.3 - 3.5 just that \(\int _{t_0}^{+\infty }t\epsilon (t)dt<+\infty \), since condition (a) is automatically fulfilled.

4 Strong convergence to the minimum norm solution

In this section we will continue the investigations we did at the end of Section 3, by working in the same setting, on the behaviour of the trajectory of the dynamical system (5) by concentrating on strong convergence. In particular, we will provide conditions on the Tikhonov parametrization \(t \mapsto \epsilon (t)\) which will guarantee that the trajectory converges to a minimum norm solution of g, which is the element of minimum norm of the nonempty convex closed set \(\hbox {argmin}g\). We start with the following result.

Lemma 4.1

Let x be the unique global \(C^2\)-solution of (5). For \(x^*\in \hbox {argmin}g\) we introduce the function

If \(\alpha > 0\) and \(\beta \ge 0\), then

In addition,

Proof

We consider the following energy functional

By using (5) we have for every \(t \ge t_0\)

From here, invoking the convexity of g, it follows

for every \(t \ge t_0\). Since \(\epsilon \) is nonincreasing this leads further to

therefore the energy W is nonincreasing. Since W is bounded from bellow, there exists \(\lim _{t\longrightarrow +\infty }W(t)\in \mathbb {R}.\) Consequently, \(t \mapsto W(t)\) is bounded on \([t_0,+\infty )\) from which, since g is bounded from bellow, we obtain that

By integrating (34) on an interval \([t_0,t]\) for arbitrary \(t > t_0\) it yields

which, by letting \(t\longrightarrow +\infty \), leads to

Further, for every \(t \ge t_0\) we have that

and

hence,

\(\square \)

For each \(\epsilon > 0,\) we denote by \(x_{\epsilon }\) the unique solution of the strongly convex minimization problem

In virtue of the Fermat rule, this is equivalent to

It is well known that the Tikhonov approximation curve \(\epsilon \longrightarrow x_\epsilon \) satisfies \(\lim _{\epsilon \longrightarrow 0}x_\epsilon =x^*\), where \(x^*= \hbox {argmin}\{\Vert x\Vert : x \in \hbox {argmin}g\}\) is the element of minimum norm of the nonempty convex closed set \(\hbox {argmin}g\). Since \({\nabla }g\) is monotone, for every \(\epsilon >0\) it holds \(\langle {\nabla }g(x_\epsilon )-{\nabla }g(x^*), x_\epsilon -x^*\rangle \ge 0\), that is \(\langle -\epsilon x_\epsilon ,x_\epsilon -x^*\rangle \ge 0\). Hence,\(-\Vert x_\epsilon \Vert ^2+\langle x_\epsilon ,x^*\rangle \ge 0\), which, by using the Cauchy-Schwarz inequality, implies

4.1 Strong ergodic convergence

We will start by proving a strong ergodic convergence result for the trajectory of (5).

Theorem 4.2

Let x be the unique global \(C^2\)-solution of (5). Assume that

Let \(x^*= \hbox {argmin}\{\Vert x\Vert : x \in \hbox {argmin}g\}\) be the element of minimum norm of the nonempty convex closed set \(\hbox {argmin}g\). If \(\alpha > 0\), then

Proof

We introduce the function

For every \(t\ge t_0\) we have

Further, for every \(t\ge t_0\), the function \(g_t:\mathcal {H}\longrightarrow \mathbb {R},\, g_t(x)=g(x)+\frac{\epsilon (t)}{2}\Vert x\Vert ^2,\) is strongly convex, with modulus \(\epsilon (t)\), hence

But \({\nabla }g_t(x(t))={\nabla }g(x(t))+\epsilon (t) x(t)\) and by using (5) we get

Consequently, (36) becomes

By using (35), the latter relation leads to

for every \(t \ge t_0\).

For every \(t \ge t_0\), let \(x_{\epsilon (t)}\) the unique solution of the strongly convex minimization problem

Then

for every \(t \ge t_0\) and taking into account (38) we get

for every \(t \ge t_0\). We have

and

hence (39) is equivalent to

for every \(t \ge t_0\).

After integrating (40) on \([t_0,t]\), for arbitrary \(t > t_0\), it yields

We show that the right-hand side of the above inequality is bounded from above. Indeed, according to Lemma 4.1, one has

hence there exists \(C_1\ge 0\) such that \(\int _{t_0}^t \frac{1}{s}\Vert \dot{x}(s)\Vert ^2\le C_1\) for every \(t\ge t_0\). Further, for every \(t \ge t_0\),

where \(C_2=\frac{\dot{h}_{x^*}(t_0)}{t_0}+(\alpha +1)\frac{{h}_{x^*}(t_0)}{t_0^2}.\) Consequently,

for every \(t \ge t_0\). According to Lemma 4.1, there exists \(C_3\) such that \(\frac{1}{t}|\dot{h}_{x^*}(t)|\le C_3 \text{ for } \text{ all } t\ge t_0\), which combined with (42) guarantees the existence of \(C_4\ge 0\) such that

for every \(t\ge t_0\).

On the other hand, for every \(t \ge t_0\),

From the gradient inequality of the convex function g we have

hence

for all \(t \ge t_0\). Obviously the right-hand side of (44) is bounded from above, hence there exists \(C_5>0\) such that

Combining (43) and (45) we obtain that there exists \(C>0\) such that

Since \(\lim _{t\longrightarrow +\infty }\epsilon (t)=0\) we have \(\lim _{t\longrightarrow +\infty }x_{\epsilon (t)}=x^*\), hence \(\lim _{t\longrightarrow +\infty }(\Vert x^*\Vert ^2-\Vert x_{\epsilon (t)}\Vert ^2)=0.\) Consequently, by using the l’Hospital rule and the fact that \(\int _{t_0}^{+\infty } \frac{\epsilon (t)}{t}dt=+\infty \), we get

Dividing (46) by \(\int _{t_0}^t \frac{\epsilon (s)}{s}ds\) and taking into account that \(\int _{t_0}^{+\infty } \frac{\epsilon (t)}{t}dt=+\infty \), we obtain that

The last equality immediately implies that

\(\square \)

Remark 4.3

The strong ergodic convergence obtained in [8] for the dynamical system (4) is extended to the dynamical system with Hessian driven damping and Tikhonov regularization term (5) under the same hypotheses concerning the Tikhonov parametrization \(t \mapsto \epsilon (t)\).

4.2 Strong convergence

In order to prove strong convergence for the trajectory generated by the dynamical system (5) to an element of minimum norm of \(\hbox {argmin}g\) we have to strengthen the conditions on the Tikhonov parametrization. This is done in the following result.

Theorem 4.4

Let be \(\alpha \ge 3\) and x the unique global \(C^2\)-solution of (5). Assume that

and that there exist \(a>1\) and \(t_1\ge t_0\) such that

In addition, assume that

-

in case \(\alpha =3\): \(\lim _{t\longrightarrow +\infty }t^2\epsilon (t) =+\infty \);

-

in case \(\alpha >3\): there exists \(c>0\) such that \(t^2\epsilon (t)\ge \frac{2}{3} \alpha \left( \frac{1}{3}\alpha -1+\beta c^2\right) \) for t large enough.

If \(x^*= \hbox {argmin}\{\Vert x\Vert : x \in \hbox {argmin}g\}\) is the element of minimum norm of the nonempty convex closed set \(\hbox {argmin}g\), then

In addition,

if there exists \(T\ge t_0\) such that the trajectory \(\{x(t) :t \ge T\}\) stays either in the ball \(B(0, \Vert x^*\Vert )\), or in its complement.

Proof

Case I Assume that there exists \(T \ge t_0\) such that the trajectory \(\{x(t) :t \ge T\}\) stays in the complement of the ball \(B(0, \Vert x^*\Vert )\).

In other words, \(\Vert x(t)\Vert \ge \Vert x^*\Vert \) for every \(t \ge T\). For \(p\ge 0\), we consider the energy functional

We define \(t_2:=\max \left( t_1, 2(\beta +\beta p+b+1-\alpha )\right) \). We have that

For every \(t\ge t_0\) consider the strongly convex function

and denote

Since \(x^*\) is the element of minimum norm in \(\hbox {argmin}\frac{1}{2} g = \hbox {argmin}g\), it holds\(\Vert x_{\epsilon (t)}\Vert \le \Vert x^*\Vert \). Using the gradient inequality we have

On the other hand,

By adding the last two inequalities we obtain

From (48) and (49) we have that for every \(t\ge t_2\) it holds

The next step is to obtain an upper bound for \(t \mapsto E_b^p(t)\), and to this end we will evaluate its time derivative. For every \(t\ge t_0\) we have

By using (5) we have

hence

for every \(t \ge t_0\). Further, for every \(t \ge t_0\),

which means that (51) becomes

The gradient inequality for the strongly convex function \(x\rightarrow g(x)+\frac{\epsilon (t)}{2}\Vert x\Vert ^2\) gives

hence

for every \(t \ge t_0\). Plugging this inequality into (54) gives

for every \(t \ge t_0\). Further we have for every \(t \ge t_0\)

and

where \(a>1\) and \(c>0\) are the constants which are assumed to exist in the hypotheses of the theorem, whereby in case \(\alpha =3\) we will take \(c=1\).

Combining (55), (56) and (57) and neglecting the nonpositive terms we derive

for every \(t \ge t_0\).

For the remaining of the proof we choose the parameters appearing in the definition of the energy functional as

Since \(\alpha \ge 3\), we have

Notice that, if \(\alpha =3\), then \((p+2-b)t+(p+1)(\alpha -\beta -\beta p-b-1)=-\beta \le 0\) and, if \(\alpha >3\), then \(p+2-b<0\). This means that there exists \(t_3\ge t_2\) such that \((p+2-b)t+(p+1)(\alpha -\beta -\beta p-b-1)<0\) for every \(t\ge t_3.\) This implies that the term

in (58) is nonpositive for every \(t\ge t_2\) and therefore we will omit it. Further, using that \(\lim _{t\longrightarrow +\infty }t^2\epsilon (t) =+\infty \), if \(\alpha =3\), and that \(t^2\epsilon (t)\ge \frac{2}{3} \alpha (\frac{1}{3}\alpha -1+\beta c^2)\) for t large enough, if \(\alpha >3,\) we immediately see that there exists \(t_4\ge t_3\) such that

Finally, since \(a>1\), it is obvious that there exists \(t_5\ge t_4\) such that

Thus, (58) yields

for every \(t\ge t_5\). By the hypotheses, we have that

for every \(t\ge t_5\) and, taking into account the setting considered in this first case, it follows there exists \(t_6\ge t_5\) such that

for every \(t\ge t_6\). Hence, (59) leads to

By integrating (60) on the interval \([t_6, t]\), for arbitrary \(t \ge t_6\), we get

Recall that from (50) we have

which, combined with (61), gives for every \(t\ge t_6\) that

Using that \(\lim _{t\longrightarrow +\infty }\epsilon (t)t^{\frac{1}{3}\alpha +1}=+\infty \), \(\lim _{t\longrightarrow +\infty }x_{\epsilon (t)}=x^*\) and taking into account the hypotheses of the theorem, we get that the right-hand side of (62) converges to 0 as \(t \longrightarrow +\infty \). This yields

Case II Assume that there exists \(T \ge t_0\) such that the trajectory \(\{x(t) :t \ge T\}\) stays in the ball \(B(0, \Vert x^*\Vert )\).

In other words, \(\Vert x(t)\Vert < \Vert x^*\Vert \) for every \(t \ge T\). Since

according to Theorem 3.1, we have

Consider \(\overline{x} \in \mathcal {H}\) a weak sequential cluster point of the trajectory x, which exists since the trajectory is bounded. This means that there exists a sequence \((t_n)_{n\in \mathbb {N}} \subseteq [T,+\infty )\) such that \(t_n\longrightarrow +\infty \) and \(x(t_n)\) converges weakly to \(\overline{x}\) as \(n \longrightarrow +\infty \).

Since g is weakly lower semicontinuous, it holds

Since the norm is weakly lower semicontinuous, it holds

which, by taking into account that \(x^*\) is the unique element of minimum norm in \(\hbox {argmin}g\), implies \(\overline{x}=x^*\). This shows that the whole trajectory x converges weakly to \(x^*\).

Thus,

But by taking into account that \(x(t)\rightharpoonup x^*\) as \(t\longrightarrow +\infty \), we obtain that the convergence is strong, that is

Case III Assume that for every \(T \ge t_0\) there exists \(t\ge T\) such that \(\Vert x^*\Vert > \Vert x(t)\Vert \) and there exists \(s\ge T\) such that \(\Vert x^*\Vert \le \Vert x(s)\Vert \).

By the continuity of x it follows that there exists a sequence \((t_n)_{n\in \mathbb {N}} \subseteq [t_0,+\infty )\) such that \(t_n\longrightarrow +\infty \) as \(n \longrightarrow +\infty \) and

We will show that \(x(t_n) \longrightarrow x^*\) as \(n\longrightarrow +\infty .\) To this end we consider \(\overline{x} \in \mathcal {H}\) a weak sequential cluster point of the sequence \((x(t_n))_{n\in \mathbb {N}}.\) By repeating the arguments used in the previous case (notice that the sequence is bounded) it follows that \((x(t_n))_{n\in \mathbb {N}}\) converges weakly to \(x^*\) as \(n \longrightarrow +\infty \). Since \(\Vert x(t_n)\Vert \longrightarrow \Vert x^*\Vert \) as \(n\longrightarrow +\infty \), it yields \(\Vert x(t_n)- x^*\Vert \longrightarrow 0\) as \(n\longrightarrow +\infty \). This shows that

\(\square \)

Remark 4.5

Theorem 4.4 can be seen as an extension of a result given in [8] for the dynamical system (4) to the dynamical system with Hessian driven damping and Tikhonov regularization term (5). One can notice that for the choice \(\beta =0\), which means that the Hessian driven damping is removed, the lower bound we impose for \(t \mapsto t^2\epsilon (t)\) in case \(\alpha >3\) is less tight than the one considered in [8, Theorem 4.1] for the system (4). As we will see later, this lower bound influences the asymptotic behaviour of the trajectory.

In case \(\beta >0\), in order to guarantee that

one just have to additionally assume that

and that the function

This follows from Lemma A.1, by also taking into account that \(\lim _{t\longrightarrow +\infty }\epsilon (t)t^{\frac{\alpha }{3}+1}=+\infty \).

Combining the main results in the last two sections, one can see that if

the function

there exist \(a>1\) and \(t_1\ge t_0\) such that

and

-

in case \(\alpha =3\): \(\lim _{t\longrightarrow +\infty }t^2\epsilon (t) =+\infty \);

-

in case \(\alpha >3\): there exists \(c>0\) such that \(t^2\epsilon (t)\ge \frac{2}{3} \alpha \left( \frac{1}{3}\alpha -1+\beta c^2\right) \) for t large enough,

then one obtains both fast convergence of the function values and strong convergence of the trajectory to the minimal norm solution. This is for instance the case when \(\epsilon (t) = t^{-\gamma }\) for all \(\gamma \in (1,2)\).

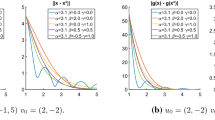

In the following, we would like to comment on the role on the condition in Theorem 4.4 which asks, in case \(\alpha >3\), for the existence of a positive constant c such that \(t^2\epsilon (t)\ge \frac{2}{3} \alpha (\frac{1}{3}\alpha -1+\beta c^2)\) for t large enough. To this end it is very helpful to visualize the trajectories generated by the dynamical system (5) in relation with the minimization of the function given in (6) for a fixed large value of \(\alpha \) and Tikhonov parametrizations of the form \(t \mapsto \epsilon (t)=t^{-\gamma }\), for different values of \(\gamma \in (1,2)\). The trajectories in the plot in Fig. 2 have been generated for \(\alpha =200\) and \(\beta =1\) and are all approaching the minimum norm solution \(x^*=0\). The norm of the difference between the trajectory and the minimum norm solution is guaranteed to be bounded from above by a function which converges to zero, after the time point t is reached at which the inequality \(t^2\epsilon (t)\ge \frac{2}{3} \alpha (\frac{1}{3}\alpha -1+\beta c^2)\) “starts” being fulfilled. For large \(\alpha \) and the Tikhonov parametrizations considered in our experiment, the closer \(\gamma \) is to 1 is, the faster is this inequality fulfilled. This is reflected by the behaviour of the trajectories plotted in Fig. 2.

Finally, we would like to formulate some possible questions of future research related to the dynamical sytem (5):

-

In [7, Theorem 3.4] it has been proved for the dynamical system (1) that, when g is strongly convex, the rates of convergence of the function values and the tracjectory are both of \(O(t^{-\frac{2}{3}\alpha })\), thus they can be made arbitrarily fast by taking \(\alpha \) large. It is natural to ask if similar rates of convergence can be obtained in a similar setting for the dynamical system (5) (see, also, [8, Section 5.4]).

-

In the literature, in the context of dynamical systems, regularization terms have been considered not only in open-loop, but also in closed-loop form (see, for instance, [12]). It is an interesting question if one can obtain for the dynamical system (5) similar results if the Tikhonov regularization term is taken in closed-loop form.

-

A natural question is to formulate proper numerical algorithms via time discretization of (5), to investigate their theoretical convergence properties, and to validate them with numerical experiments.

References

Abbas, B., Attouch, H., Svaiter, B.F.: Newton-like dynamics and forward-backward methods for structured monotone inclusions in Hilbert spaces. J. Optim. Theory Appl. 161(2), 331–360 (2014)

Alvarez, F., Attouch, H., Bolte, J., Redont, P.: A second-order gradient-like dissipative dynamical system with Hessian-driven damping. Application to optimization and mechanics. Journal de Mathématiques Pures et Appliquées 81(8), 747–779 (2002)

Alvarez, F., Cabot, A.: Asymptotic selection of viscosity equilibria of semilinear evolution equations by the introduction of a slowly vanishing term. Discrete Contin. Dyn. Syst. 15, 921–938 (2006)

Attouch, H.: Viscosity solutions of minimization problems. SIAM J. Optim. 6(3), 769–806 (1996)

Attouch, H., Chbani, Z., Fadili, J., Riahi, H.: First-order optimization algorithms via inertial systems with Hessian driven damping (2019). arXiv:1907.10536v1

Attouch, H., Chbani, Z., Riahi, H.: Rate of convergence of the Nesterov accelerated gradient method in the subcritical case \(\alpha \le 3\). ESAIM: Control Optim. Calc. Var. 25(2) (2019)

Attouch, H., Chbani, Z., Peypouquet, J., Redont, P.: Fast convergence of inertial dynamics and algorithms with asymptotic vanishing viscosity. Math. Program. Ser. B 168(1–2), 123–175 (2018)

Attouch, H., Chbani, Z., Riahi, H.: Combining fast inertial dynamics for convex optimization with Tikhonov regularization. J. Math. Anal. Appl. 457(2), 1065–1094 (2018)

Attouch, H., Cominetti, R.: A dynamical approach to convex minimization coupling approximation with the steepest descent method. J. Differ. Equ. 128(2), 519–540 (1996)

Attouch, H., Czarnecki, M.-O.: Asymptotic control and stabilization of nonlinear oscillators with non-isolated equilibria. J. Differ. Equ. 197, 278–310 (2002)

Attouch, H., Peypouquet, J., Redont, P.: Fast convex optimization via inertial dynamics with Hessian driven damping. J. Differ. Equ. 261(10), 5734–5783 (2016)

Attouch, H., Redont, P., Svaiter, B.F.: Global convergence of a closed-loop regularized Newton method for solving monotone inclusions in Hilbert spaces. J. Optim. Theory Appl. 157, 624–650 (2013)

Attouch, H., Svaiter, B.F.: A continuous dynamical Newton-like approach to solving monotone inclusions. SIAM J. Control Optim. 49(2), 574–598 (2011)

Cabot, A., Engler, H., Gadat, S.: On the long time behavior of second order differential equations with asymptotically small dissipation. Trans. Am. Math. Soc. 361, 5983–6017 (2009)

Cominetti, R., Peypouquet, J., Sorin, S.: Strong asymptotic convergence of evolution equations governed by maximal monotone operators with Tikhonov regularization. J. Differ. Equ. 245, 3753–3763 (2008)

Nesterov, Y.: A method of solving a convex programming problem with convergence rate \(O(1 / k^{2})\). Soviet Math. Doklady 27, 372–376 (1983)

Sell, G.R., You, Y.: Dynamics of Evolutionary Equations. Springer, New York (2002)

Shi, B., Du, S.S., Jordan, M.I., Su, W.J.: Understanding the acceleration phenomenon via high-resolution differential equations (2018). arXiv:1810.08907v3

Shi, B., Du, S.S., Su, W.J., Jordan, M.I.: Acceleration via symplectic discretization of high-resolution differential equations. Adv. Neural Inf. Process. Syst. 32(NIPS 2019), 5745–5753 (2019)

Su, W., Boyd, S., Candès, E.J.: A differential equation for modeling Nesterov’s accelerated gradient method: theory and insights. J. Mach. Learn. Res. 17(153), 1–43 (2016)

Acknowledgements

Open access funding provided by Austrian Science Fund (FWF). The authors are thankful to an anonymous reviewer for comments and remarks which improved the quality of the paper.

Author information

Authors and Affiliations

Corresponding author

Additional information

The paper is dedicated to Prof. Marco A. López on the occasion of his 70th birthday.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Radu Ioan Boţ: Research partially supported by FWF (Austrian Science Fund), Project I 2419-N32. Ernö Robert Csetnek: Research supported by FWF (Austrian Science Fund), Project P 29809-N32. Szilárd Csaba László: This work was supported by a grant of Ministry of Research and Innovation, CNCS—UEFISCDI, Project Number PN-III-P1-1.1-TE-2016-0266 and by a Grant of Ministry of Research and Innovation, CNCS—UEFISCDI, Project Number PN-III-P4-ID-PCE-2016-0190, within PNCDI III.

Appendix

Appendix

In this appendix, we collect some lemmas and technical results which we will use in the analysis of the dynamical system (5). The following lemma was stated for instance in [8, Lemma A.3] and is used to prove the convergence of the objective function along the trajectory to its minimal value.

Lemma A.1

Let \(\delta >0\) and \(f\in L^1((\delta ,+\infty ),\mathbb {R})\) be a nonnegative and continuous function. Let \(\varphi :[\delta ,+\infty )\longrightarrow [0,+\infty )\) be a nondecreasing function such that \(\lim _{t\longrightarrow +\infty }\varphi (t)=+\infty .\) Then it holds

The following statement is the continuous counterpart of a convergence result of quasi-Fejér monotone sequences. For its proofs we refer to [1, Lemma 5.1].

Lemma A.2

Suppose that \(F:[t_0,+\infty )\rightarrow \mathbb {R}\) is locally absolutely continuous and bounded from below and that there exists \(G\in L^1([t_0,+\infty ), \mathbb {R})\) such that

for almost every \(t \in [t_0,+\infty )\). Then there exists \(\lim _{t\longrightarrow +\infty } F(t)\in \mathbb {R}\).

The following technical result is [11, Lemma 2].

Lemma 4.6

Let \(u : [t_0,+\infty )\longrightarrow \mathcal {H}\) be a continuously differentiable function satisfying \(u(t) +\frac{t}{\alpha }\dot{u}(t)\longrightarrow u \in \mathcal {H}\) as \(t\longrightarrow +\infty ,\) where \(\alpha >0.\) Then \(u(t)\longrightarrow u\) as \(t \longrightarrow +\infty .\)

The continuous version of the Opial Lemma (see [7]) is the main tool for proving weak convergence for the generated trajectory.

Lemma A.3

Let \(S \subseteq \mathcal {H}\) be a nonempty set and \(x : [t_0, +\infty ) \rightarrow H\) a given map such that:

Then the trajectory x(t) converges weakly to an element in S as \(t \rightarrow + \infty \).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Boţ, R.I., Csetnek, E.R. & László, S.C. Tikhonov regularization of a second order dynamical system with Hessian driven damping. Math. Program. 189, 151–186 (2021). https://doi.org/10.1007/s10107-020-01528-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10107-020-01528-8

Keywords

- Second order dynamical system

- Convex optimization

- Tikhonov regularization

- Fast convergence methods

- Hessian-driven damping