- Research article

- Open access

Towards Understanding Online Question & Answer Interactions and their effects on student performance in large-scale STEM classes

International Journal of Educational Technology in Higher Education volume 17, Article number: 20 (2020)

Abstract

Online question & answer (Q & A) is a distinctive type of online interaction that affects student learning. Prior studies on online interaction in large-scale classes focused mainly on online discussion and were conducted mainly in non-STEM fields. This research aims to quantify the effects of online Q & A interactions on student performance in the context of STEM education. A total of 218 computer science students from a large university in the southeastern United States participated in this research. Data on four online Q & A activities were mined from the online Q & A forum for the course, including three student activities (asking questions, answering questions and viewing questions/answers) and one instructor activity (answering questions/providing clarifications). These activities were found to have different effects on student performance. Viewing questions/answers was found to have the greatest effect, while interaction with instructors showed minimal effects. This research addresses the lack of research on online Q & A, and its results can inform the effective usage of online Q & A in large-scale STEM courses.

Introduction

Higher education instructors, regardless of teaching modality and fields, have looked to online communication tools as a means of interacting with and assisting their students. These online communication tools support the primary form of student engagement in online classes, and typically fulfill a secondary or supportive role in classes with face-to-face meetings. These tools may be used to support teacher-directed activities (e.g., required topical discussion of course content), or student-initiated ones. In particular, students may find online communication tools useful when they are confused or need support to complete their course assignments. These student-driven help and information-seeking activities, in which questions and answers are exchanged between human partners, may rely on peer or instructor responses to student queries (Puustinen & Rouet, 2009). Although students might also seek help and ask questions during a face-to-face class session or instructor office hours, or alternatively by email, asynchronous online communication tools offer some distinct advantages in this scenario (Hwang, Singh, & Argote, 2015; Ryan, Pintrich, & Midgley, 2001). They can increase student access to help in situations where class sizes are large and opportunities for face-to-face interaction are limited. They allow students to ask questions when questions arise and receive crowdsourced answers from their peers and instructors (Reznitskaya & Wilkinson, 2017; Xia, Wang, & Hu, 2009). The generated questions and responses on asynchronous online communication tools can be archived, indexed and viewed as often as is needed, and all students in the class can benefit from the answers to a question asked by a single student.

Questions can be asked and answers can be provided in any online discussion forums, but special question and answer (Q & A) platforms have been developed to facilitate this specific form of interaction. These Q & A forums are designed to focus on help-seeking interactions. Rather than writing more general posts, as is done on discussion forums, users on Q & A forums are prompted directly to ask or answer questions. This approach is more effective than a general discussion forum for Q & A interactions (Hao, Barnes, Branch, & Wright, 2017a). Each question begins its own thread, and the appropriate form of response is an answer. Q & A forums vary in their sophistication; for instance, some platforms allow users to upvote popular responses, allow users to prompt question-askers to rephrase asked questions, or associate user reputational information with questions and answers. On the Internet at large, social question and answer (SQA) platforms like Stack Exchange (www.stackexchange.com), Yahoo! Answers (answers.yahoo.com) and Quora (www.quora.com) allow individuals to seek answers to their questions from the general population. SQA platforms crowdsource answers, in contrast to Virtual Reference (VR) services, where experts are contacted to provide answers (Shah & Kitzie, 2012). In a class setting, peer responses are most similar to crowdsourced responses on SQA platforms, varying in depth and accuracy. In contrast, instructor responses might be best equaled by VR services that value quality, and are more likely than SQA to provide a thorough answer including an explanation of how the answer was found or derived.

The value of online Q & A with regard to learning outcomes remains uncertain. Although the relationship between more general online discussion and student performance has been heavily studied (e.g., Beaudoin, 2002; Davies & Graff, 2005; Palmer, Holt, & Bray, 2008; Russo & Koesten, 2005), Q & A forums have been studied far less, and been typically researched in SQA settings rather than class contexts (e.g., Ji & Cui, 2016; Jin, Li, Zhong, & Zhai, 2015; Shah & Kitzie, 2012; Zhang, Liu, Chen, & Gong, 2017). Class-based online Q & A activities differ from SQA activities in some meaningful ways, such as user motivation and interactions (Zhang et al., 2017). For instance, users in an SQA community must form and maintain their own social networks in order to sustain the online Q & A community (Jin et al., 2015; Nasehi, Sillito, Maurer, & Burns, 2012). In a class context, students, as primary contributors to and consumers of the Q & A, have instructor-defined reasons to engage in asking, answering, and viewing questions. Rather than being rewarded solely through attention and community reputation, students might be rewarded by engaging in activities and gaining knowledge that ideally will help support their academic learning and performance (Deng, Liu, & Qi, 2011).

The aforementioned general class discussion studies consistently show both that student behavior patterns vary along with student performance in class, and that online discussion is beneficial to student learning (Beaudoin, 2002; Davies & Graff, 2005; Palmer et al., 2008; Russo & Koesten, 2005). This study expands these prior findings by examining different student behaviors and their relationships to student performance within a class-based online Q & A context. The study is situated in a large-scale introductory programming course. Online Q & A may be particularly useful in this and other STEM disciplines where the class size tends to be large and students have substantial needs for Q & A interaction (Henderson & Dancy, 2011).

Related studies

Online Question & Answer, help seeking, information seeking, and online discussion

Longstanding definitional problems have muddied the understanding of several important concepts when it comes to online interaction among students, such as help-seeking, information seeking, and online discussion. Help-seeking and information seeking have been studied extensively over the last fifty years mainly in face-to-face contexts, and their boundaries become blurry in online contexts (Puustinen & Rouet, 2009). Help-seeking refers to seeking social assistance from other people, while information seeking refers to seeking information typically from books or machines (Verhoeven, Heerwegh, & De Wit, 2010). The literature on both education and computer-human interaction in the last two decades has suggested the convergence of seeking social assistance and information (Hao, Barnes, Branch, & Wright, 2017a; Puustinen & Rouet, 2009; Stahl & Bromme, 2009). More help-seeking involves the support from machines, such as search engines or tutor systems, and more information-seeking serves the same purpose as help-seeking (Deng et al., 2011). It is worth noting that both help-seeking and information-seeking emphasize the person who seeks help or information, but not the interactions among people or machines. Online discussion, in educational contexts, refers to course-related conversations among students on discussion boards or forums (Hammond, 2005). In contrast to help-seeking and information seeking, online discussion emphasizes interactions among students (An, Shin, & Lim, 2009). Online discussion has found extensive applications in social or liberal education over the last two decades.

Online Q & A, in educational contexts, refers to interactions involving asking and answering learning questions among students and instructors (Yoo & Kim, 2014). Online Q & A shares some similarities with help-seeking, information seeking, and online discussion, but also bears some notable differences. Online Q & A approaches are similar to online discussion because both emphasize interaction among people (Hao, Barnes, Wright, & Branch, 2017b; Jain, Chen, & Parkes, 2009). However, online Q & A is comprised of three distinct interactive behaviors: asking learning questions, answering questions from others, and viewing questions and answers from others. Online Q & A interactions tend to be situated among problem-solving learning activities. For this reason, they are particularly useful in courses where students work through problem sets or are engaged in other solution-oriented work, such as in programming courses (Hao, Wright, Barnes, & Branch, 2016; Jain et al., 2009; Parnell et al., 2011).

Online Question & Answer Interaction and academic success

Many studies have explored the relationship between online discussion and student performance in the last two decades. Such studies contributed to the understanding of the effective usage of online discussions in educational settings. Beaudoin (2002) found that education majors who were active in online discussion tended to have slightly better performance, although their counterparts who were inactive in online discussion still spent time on other course tasks. Beaudoin’s findings were confirmed by Davies and Graff (2005), who also noted a significant difference between students who were active and those who were inactive in online discussion. Using social network analysis in communication education, Russo and Koesten (2005) noted that students who led the discussion and those who only followed up tended to exhibit different learning styles, motivation and performance. The findings on the relationship between online discussion and student performance are largely consistent. However, it is worth noting that the majority of these studies were conducted in the context of liberal and social education.

Online discussion has not been as widely adopted in STEM fields as it is in the humanities and social sciences, where discursive interactions and the exploration of multiple perspectives are more highly valued. When online discussion is employed in STEM classes, participation has been found to be flat and focused on minimal, deadline-oriented participation (Palmer et al., 2008). The value of this type of participation to learning is questionable (de Jong, Sotiriou, & Gillet, 2014; Yu, Liu, & Chan, 2005). In contrast, Q & A forums have bigger application potential in education in STEM fields, given the typical large class sizes and the needs for solution-oriented communication.

Despite the potential of Q & A forums in STEM fields, little research has quantified the effects of online Q & A interactions on student performance. Nurminen, Heino, and Ihantola (2017) surveyed computer science students and found that they were most likely to seek help online from previous social connections and more experienced peers. In this sense, students are likely to mine their personal networks for formal learning purposes in much the same way as other people do for more general Q & A needs (Jin et al., 2015). Consistent with such findings, Hao et al. (2016) found that computer science students tended to ask questions online to their peers more frequently than to instructors, a trend that became clearer with experienced students. Mazzolini and Maddison (2003) investigated the roles of instructors in online Q & A interactions among astronomy students, and found that active instructors inadvertently discouraged students from interacting with peers for Q & A online, even though students welcomed their involvement. Such studies confirmed that students in STEM fields did interact with each other online for Q & A purposes, but did not measure the effects of the interactions on student performance. To the best of our knowledge, Yoo and Kim’s (2014) study is the only one that focuses on the relationship between Q & A interaction and student performance. Yoo and Kim (2014) studied a computer science course and found that both the frequency of student answers to questions and how early students communicated their problems correlated positively with their project performance. Essentially, students who posted more in the Q & A forum also performed better on the course project. However, the extent to which this finding can be generalized remains uncertain, and a causal relationship should not be assumed from the correlation. Additional studies are needed to help confirm these findings and explore how specific types of Q & A activities relate to student performance.

Social network analysis in Online Question & Answer Interactions

Social network analysis (SNA) refers to the process of investigating social structures through the use of networks and graph theory (Borgatti, Mehra, Brass, & Labianca, 2009). SNA is a good fit for analyzing student online Q & A interactions. SNA can capture the dynamics of online interactions, and provide a more granular investigation than many conventional metrics, such as posting and viewing frequency (Hernández-García, González-González, Jiménez-Zarco, & Chaparro-Peláez, 2015).

Despite the lack of application of SNA in online Q & A interactions, SNA has been applied to understand the online discussion of students. Such research may contribute to understanding online Q & A interactions from the perspective of SNA. For instance, many of these studies demonstrated that a strong sense of community can lead to a strong social network, which has a higher rate of information flow and interpersonal support (De Laat, 2002; Shen, Nuankhieo, Huang, Amelung, & Laffey, 2008). A strong sense of community can be evidenced by a set of factors such as student-instructor ratio, instructor immediateness, and student performance.

For instance, instructor immediateness contributes to a stable social network of online discussion. Instructor immediateness is a social network factor, and it refers to the extent to which instructors can provide facilitation to online interaction. As the student-instructor ratio increases, instructors’ ability to facilitate interactions among students becomes more constrained. However, if an instructor becomes dominant in the social network, student-student interactions might be discouraged (Rovai, 2007). As another example, student performance can be an important factor in determining the constructs of an online discussion network, such as network density and stability (Dawson, 2010). The higher students’ academic performance is, the more likely their network is to have higher density and centrality scores. Ghadirian, Salehi, and Ayub (2018) corroborated the findings of Dawson and suggested that low-performing students are less willing to engage in a discussion given their lack of knowledge and, as a result, of confidence. This condition is only worsened by their lack of interactions with high-performing and well-connected members of the network who may aid them in expanding their knowledge base.

Research design

Research design and questions

This study used a quasi-experimental research design to investigate the relationship among the activity level of students and instructors using an online Q & A forum, and student performance on course assessments. Specifically, the research questions addressed by this study are:

RQ1: How do students interact with their peers and instructors in online Q & A forums?

RQ2: To what extent do online student Q & A interactions predict their academic performance?

RQ3: To what extent does the instructor facilitation in online Q & A forums predict student academic performance?

Participants and contexts

This study was conducted in a face-to-face introductory programming course offered at a large research university in the southeastern United States. To strengthen the stability and generalizability of our findings, we collected data from the same course in both the summer and fall semesters. A total of 218 students participated in this study: 54 in the summer semester and 164 in the fall semester. During both semesters the course had the same instructor, syllabus, curriculum, exams, and grading rubrics. However, in the fall semester the course duration was 50% longer than in summer, and the course material was covered in more depth.

In both semesters, an online Q & A forum, Piazza (piazza.com), was set up for students to ask learning questions to their peers, TAs and the instructor. As a Q & A forum, Piazza has been adopted widely in large-scale computer science and engineering courses (Hao, Galyardt, Barnes, Branch, & Wright, 2018). In our study, students were encouraged but not required to ask questions to their peers and instructor using Piazza. It is worth noting that student online Q & A interactions on Piazza were periodically pushed to each individual student of the same course through automated emails.

Data collection and analysis

To answer the three proposed research questions, we needed to collect student online Q & A interaction data. To achieve this, we wrote a script that mines Q & A data from Piazza, and used it to mine the Q & A data of the two course offerings studied in this research. From the mined data, we were able to extract the following online Q & A interaction behaviors and frequency data:

Who asked which questions

Who answered which questions

Frequency of asking questions per person

Frequency of answering questions per person

Frequency of viewing questions and answers from other people per person

Frequency of posting notes and clarifications per instructor/TA

The frequency of posting notes and clarifications only applies to the instructor and teaching assistants (TAs). The other frequency data applies to the instructor, TAs, and students.
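As a rough illustration of how such frequency and who-answered-whom data could be derived from mined posts, the sketch below uses only Python's standard library. The record layout `(post_type, author, question_id)`, the user names, and the choice to point edges from answerer to asker are assumptions made for this example; the article does not describe the actual format of the mined Piazza data.

```python
from collections import Counter

# Hypothetical mined records: (post_type, author, question_id).
# This layout is an assumption, not the actual Piazza export format.
posts = [
    ("question", "s1", "q1"),
    ("answer", "s2", "q1"),
    ("answer", "ta1", "q1"),
    ("question", "s2", "q2"),
    ("answer", "s1", "q2"),
    ("question", "s1", "q3"),
]

# Frequency of asking and answering questions per person.
asked = Counter(author for kind, author, _ in posts if kind == "question")
answered = Counter(author for kind, author, _ in posts if kind == "answer")

# Who asked which question, used to link each answer back to its asker.
asker_of = {qid: author for kind, author, qid in posts if kind == "question"}

# Directed edges (answerer -> asker); the direction is an assumed convention.
edges = [
    (author, asker_of[qid])
    for kind, author, qid in posts
    if kind == "answer"
]

print(asked)     # per-person question counts
print(answered)  # per-person answer counts
print(edges)     # input for a directed network graph
```

Edge lists of this shape are one possible input for the directed network graphs described next.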

To answer RQ1, we applied SNA to the mined online Q & A interactions. Specifically, directed network graphs were constructed per semester. The following network factors were considered:

Density: The ratio between all connections in a network and the number of possible connections.

Transitivity: The extent to which interconnected transitive triads exist in a graph.

Closeness Centrality: Indicates how close a node is to the rest of the network, based on the average shortest-path distance from that node to the other nodes.

Betweenness Centrality: Indicates the influence of a node in a network by the number of shortest paths between other pairs of vertices that pass through it.

Eigenvector Centrality: Defines the importance of a vertex by the degree to which it is connected with other well-connected vertices.
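To make two of these definitions concrete, the following minimal sketch computes density and closeness centrality for a tiny directed graph in plain Python. The edge list, the node names, and the use of outgoing shortest paths for closeness are assumptions chosen for illustration; the article does not state which software or normalization was used for its analysis.

```python
from collections import deque

# Hypothetical directed edges (answerer -> asker); not data from the study.
edges = [("s1", "s2"), ("s2", "s3"), ("s3", "s1"), ("ta", "s1"), ("ta", "s2")]


def density(edges):
    """Directed density: distinct edges divided by n * (n - 1) possible edges."""
    nodes = {node for edge in edges for node in edge}
    n = len(nodes)
    distinct = {(u, v) for u, v in edges if u != v}
    return len(distinct) / (n * (n - 1)) if n > 1 else 0.0


def shortest_distances(edges, source):
    """Breadth-first search over outgoing edges; returns hop counts from source."""
    adjacency = {}
    for u, v in edges:
        adjacency.setdefault(u, set()).add(v)
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in adjacency.get(u, ()):
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def closeness(edges, node):
    """Reachable node count divided by the sum of shortest-path distances."""
    dist = shortest_distances(edges, node)
    total = sum(dist.values())
    return (len(dist) - 1) / total if total > 0 else 0.0


print(density(edges))
print(closeness(edges, "ta"))
print(closeness(edges, "s1"))
```

In this toy graph, the node that answers several peers reaches the others in fewer hops and therefore receives a higher closeness score than a node that answers only one peer.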

To answer RQ2 and RQ3, we collected student performance data on both programming assignments and paper-based exams. The reason for using two different measurements is the dual focuses of programming courses, including student programming capability and conceptual knowledge on programming languages and problem-solving. Programming assignments focus on measuring student programming capability, while paper-based exams focus on measuring student conceptual knowledge. Both programming assignments and exams were graded by the course instructor with the help of two teaching assistants. Once data matching between online Q & A data and performance data occurred, all individual identifiers were cleaned from the dataset. Based on the collected data, we used blockwise regression to explore the extent to which student, instructor, and TAs’ online Q & A interactions can predict student course performance.

Results

How do students interact with their peers and instructors in online Q & A forums (RQ1)?

To answer RQ1, we used descriptive analysis and SNA to investigate how students interact with their peers, TAs, and instructors in terms of asking and answering questions. The behavior “viewing questions” was excluded from SNA because it was not known who viewed which questions or answers. The descriptive analysis results are presented in Table 1. As the number of students increased, students tended to ask and answer more questions on average. As the numbers of questions and answers went up, the average number of views also increased substantially. Overall, the frequency data showed that students successfully formed online Q & A communities regardless of the number of students in class.

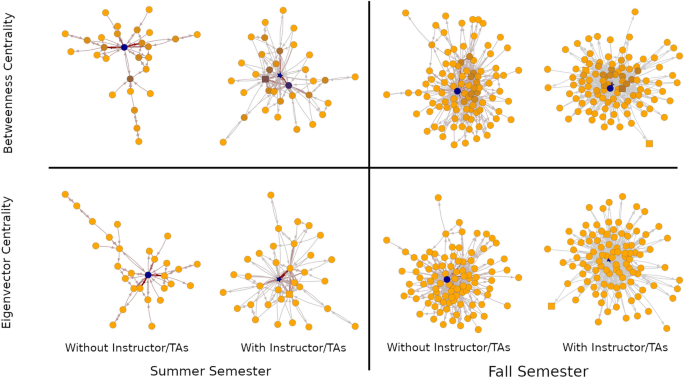

SNA uncovered a consistent theme across the two semesters: online Q & A interactions are student-driven. The evidence for this theme is that when instructors and TAs were removed from the social networks, the networks still showed strong centralization (see Fig. 1). Although instructors and teaching assistants were the most central nodes in these networks (by several metrics), their removal did not substantially alter the local statistics for nodes or the overall network statistics (e.g., density and transitivity). To quantify our observation from the visualization, we calculated all network factors and compared the networks with and without instructors/TAs. The results are presented in Table 2. The quantitative comparisons confirmed our observation from the visualization, and yielded consistent findings across the two semesters. This finding also indicated that the instructors and TAs were not attempting to take dominant roles in the online Q & A interactions.
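The with/without comparison can be sketched as follows: compute a network statistic on the full graph, drop every edge that touches an instructor or TA node, and compute it again. The example uses transitivity on the undirected projection of a small hypothetical graph; the edge list, the `staff` set, and the undirected projection are assumptions for illustration, not the procedure reported in the article.

```python
from itertools import combinations

# Hypothetical Q & A edges; "ta" stands in for an instructor/TA node.
edges = [("s1", "s2"), ("s2", "s3"), ("s3", "s1"), ("ta", "s1"), ("ta", "s2")]
staff = {"ta"}


def transitivity(edges):
    """Share of connected triples that are closed, on the undirected projection."""
    neighbors = {}
    for u, v in edges:
        if u != v:
            neighbors.setdefault(u, set()).add(v)
            neighbors.setdefault(v, set()).add(u)
    closed = 0
    triples = 0
    for adjacent in neighbors.values():
        # Each pair of neighbors forms a triple centered on the current node.
        for a, b in combinations(sorted(adjacent), 2):
            triples += 1
            if b in neighbors[a]:
                closed += 1
    return closed / triples if triples else 0.0


def without_nodes(edges, removed):
    """Keep only the edges whose endpoints are both outside the removed set."""
    return [(u, v) for u, v in edges if u not in removed and v not in removed]


student_only = without_nodes(edges, staff)
print(transitivity(edges))
print(transitivity(student_only))
```

If the statistic changes little after removal, the structure it measures is carried mainly by student-to-student ties, which is the kind of evidence the comparison above relies on.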

To further understand the characteristics of students who contributed more to asking and answering questions, we separated students of the same class into three groups by their overall academic performance (total of both programming assignments and exams): high, medium and low performing students. The summary of their online interaction frequencies is presented in Table 3. The only consistent finding across the two semesters is that high-performing students tended to view more questions and answers than low-performing students [summer: t = 3.30, p < 0.025; fall: t = 2.87, p < 0.005].

To what extent do student activity and instructor facilitation in online Q & A forums predict student performance (RQ2 and RQ3)?

To answer RQ2 and RQ3, we applied blockwise regressions to understand the extent to which online Q & A interaction activities of students, TAs, and instructors predict student performance. Given the nature of the programming courses, there were two metrics of student performance: programming assignments and paper exams. To avoid inflating Type I error, we applied the Bonferroni correction to the significance levels. The adjusted significance levels were:

* p < 0.025; **p < 0.005; ***p < 0.0005.
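The adjusted thresholds are consistent with dividing the conventional levels (0.05, 0.01, 0.001) by two, presumably one test per performance metric. The short sketch below reproduces that arithmetic; the function name and the assumption of exactly two tests are ours, not stated in the article.

```python
def bonferroni_levels(alphas, n_tests):
    """Divide each nominal significance level by the number of tests."""
    if n_tests < 1:
        raise ValueError("n_tests must be at least 1")
    return [alpha / n_tests for alpha in alphas]


# Assumed: two tests, one per performance metric (assignments and exams).
nominal = [0.05, 0.01, 0.001]
adjusted = bonferroni_levels(nominal, n_tests=2)

for alpha, level in zip(nominal, adjusted):
    print(f"nominal {alpha} -> adjusted {level}")
```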

We separated all predictors of online Q & A interactions into two blocks:

Student online Q & A behaviors:

○ Ask: ask questions to peers, TAs, and instructors online

○ Answer: answer questions asked by peers online

○ View: view questions, answers, notes, or announcements online

Instructor/TA online Q & A behaviors:

○ Facilitate: Answer questions, post announcements, or post notes

When only the block of student online Q & A behaviors was used as predictors, we found “view” to be a significant predictor of student performance in programming assignments. This finding was consistent across the two semesters. The results are presented in Table 4. Overall, asking, answering, and viewing questions/answers explained 16.2% of the variance in programming assignment performance in the summer semester, and 11.6% of the variance in the fall semester.

In contrast, asking, answering, and viewing questions/answers explained 10.0% of the variance in exam performance in the summer semester, and 3.6% of the variance in the fall semester. However, neither the overall models nor any single factor was found to be significant in predicting student exam performance.

When the block of instructor/TA online Q & A behaviors was added to the regression models predicting student programming assignment performance, no significant improvements were found for the summer semester [F(1, 17) = 0.148, p > 0.025] or the fall semester [F(1, 58) = 0.674, p > 0.025]. However, it is worth noting that when the instructor/TA behaviors were controlled for, the student behavior “view” was still a significant predictor of student programming assignment performance for the summer semester. A similar effect was not found for the fall semester. The results are presented in Table 5.

When the block of instructor/TA online Q & A behaviors was added to the regression models predicting student exam performance, no significant improvements were found for the summer semester [F(1, 17) = 0.455, p > 0.025] or the fall semester [F(1, 58) = 0.003, p > 0.025]. Unlike the models predicting student programming assignment performance, no single factor was found to be consistently significant in predicting student exam performance. When the instructor/TA behaviors were controlled for, the student behavior “view” was found to be a significant predictor of student exam performance in the summer semester. However, this finding was not replicated in the fall semester.
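In blockwise (hierarchical) regression, the improvement from adding a block is commonly assessed with an F-test on the change in R², which has the number of added predictors and the residual degrees of freedom of the larger model as its two degrees of freedom. The sketch below shows that standard formula. The article does not spell out its computation, so this is an assumed reconstruction, and the R² values in the example are hypothetical rather than taken from the tables.

```python
def f_change(r2_reduced, r2_full, n_added, df_residual):
    """F statistic for the R-squared gain when a block of predictors is added.

    r2_reduced:  R-squared of the model without the added block
    r2_full:     R-squared of the model with the added block
    n_added:     number of predictors in the added block (numerator df)
    df_residual: residual degrees of freedom of the full model (denominator df)
    """
    if not 0.0 <= r2_reduced <= r2_full < 1.0:
        raise ValueError("expected 0 <= r2_reduced <= r2_full < 1")
    if n_added < 1 or df_residual < 1:
        raise ValueError("degrees of freedom must be positive")
    gain_per_predictor = (r2_full - r2_reduced) / n_added
    unexplained_per_df = (1.0 - r2_full) / df_residual
    return gain_per_predictor / unexplained_per_df


# Hypothetical values: one added predictor ("Facilitate"), 17 residual df.
print(f_change(r2_reduced=0.10, r2_full=0.15, n_added=1, df_residual=17))
```

A small F value, as in the results above, means the added instructor/TA block explains little variance beyond what the student behaviors already explain.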

Discussion

We systematically investigated online student interactions in large-scale face-to-face programming courses over two semesters in this study. Of all the findings in this study, we would like to highlight and discuss three findings that were consistent across the two experiments of this study.

First, low-performing students should be identified from early assessments and given more guidance/facilitation on effective usage of online Q & A. Although we investigated the predictive power of online Q & A interaction on student performance, it is important to note that our results do not imply causality between online Q & A and academic achievement. Although causality cannot be established, we found that high-performing students tended to use online Q & A forums more actively than their counterparts. This finding shares similarities with the prior studies on both online Q & A and online discussion. Hao, Barnes, Wright, and Branch (2017b) reached similar findings through a survey study on online Q & A in the context of computing education. Palmer et al. (2008), as well as Davies and Graff (2005), found that students who were active in online course discussions tended to outperform their counterparts in terms of the overall course grade. Compared with prior studies, this study took a further step by examining fine-grained differences among three types of student online interactions. It is worth noting that a significant difference was only found in viewing questions/answers among students with different academic performance. In other words, high-performing students did not necessarily ask or answer more questions, but they viewed significantly more questions and answers from both peers and instructors for learning purposes. In large-scale classes, low-performing students can be identified from an early assessment. If these students are given guidance on using online Q & A (e.g., reading questions and answers from peers), they may gradually learn how to utilize online Q & A effectively for learning purposes, which may lead to overall student performance improvement. To understand why students at different performance levels behave this way, future studies may consider an exploratory qualitative study on student usage of Q & A in STEM classes.

Second, effective online Q & A may not require instructors to actively answer student questions. This finding was indicated by multiple pieces of evidence accumulated when answering the three proposed research questions. The facilitation of the instructor was not found to be a significant predictor of student performance. More importantly, when we analyzed the social networks formed by online Q & A interactions, we noticed that, by all network metrics, the social networks remained robust and healthy when the instructor and TAs were removed. This finding is consistent with prior studies on online discussion. For instance, high levels of instructor intervention in online discussion have been found to stifle peer interaction (Dennen, 2005) and shorten discussion or stop further conversation (Knutas, Ikonen, & Porras, 2013; Mazzolini & Maddison, 2003). In the context of online Q & A situated in large-scale face-to-face classes, the instructors’ early intervention might remove the barrier to problem-solving immediately, but may also eliminate potential cooperative problem solving and knowledge construction among students (Hao et al., 2016; Mazzolini & Maddison, 2003). Given the heavy teaching loads of large-scale classes, it might be more beneficial for instructors to intervene less in online Q & A among student peers unless a question stays unanswered for some time. However, it is worth noting that the generalizability of this finding may have limits. This study is situated in a large-scale face-to-face programming course. Introductory programming courses are typically characterized by a large number of students and intensive hands-on problem-solving tasks. Although online Q & A plays a significant role for students to ask questions and have their questions answered, it is by no means the only approach. Students can meet the instructors and TAs in person to ask questions. In a purely online or blended learning environment, the role of instructors in online Q & A interactions may change significantly (Bliuc, Ellis, Goodyear, & Piggott, 2011; Khine & Lourdusamy, 2003). For instance, instructor facilitation of online Q & A interactions may become more important in a purely online learning environment than in face-to-face classes (Lynch, 2010).

Third, effective usage and engagement in online Q & A are not necessarily observable. Viewing is not deemed an active participation behavior in online discussion (Davies & Graff, 2005; Hao et al., 2016). Typically, students who only engage in viewing discussion content are described as “lurkers” (Rovai, 2007). Lurkers have been found to have negative impacts on the development of community networks in online discussion forums (Nonnecke & Preece, 2000; Rovai, 2007). When there is a higher percentage of lurkers, the online community may risk collapsing or never forming successfully. However, we found students’ viewing of questions/answers to be a significant positive predictor of their programming assignment performance. This finding was consistent across the two semesters and with or without controlling for the facilitation from instructors/TAs. On the one hand, it is surprising that a seemingly passive behavior could have any significant impact on student performance. On the other hand, given that the focus of the course is problem-solving, it is understandable that active discussion is not necessary as long as a learning question is clearly answered. When a learning question is clearly answered, all students can benefit from reading it online. This is different from courses in social studies or liberal studies, where the intensity of online discussion and debates is positively correlated with student performance (e.g., Hara, Bonk, & Angeli, 2000; Knowlton, 2002). Viewing questions was found to have limited predictive power on student exam performance, but students also asked significantly fewer questions about the exams than about programming assignments. Given the nature of paper-based closed-book exams, it is less likely that students would rely heavily on online Q & A for their studying and preparation. In contrast, when students encounter difficulties or challenges while working on their programming assignments, online Q & A can promote just-in-time learning. Given the learning benefits of viewing questions/answers, instructors may consider different information delivery approaches that best expose such information to students, such as periodically pushing information to students through emails (Warren & Meads, 2014).

Limitations

There are three major limitations of this study. First, this study was conducted in a single context, computing education. The extent to which our findings can be generalized to other fields, especially non-STEM fields, needs further investigation. Second, this study was conducted at only one higher education institution, which may limit the generalizability of the findings. To mitigate this limitation, a replication study was designed and conducted during the study period, and the findings were consistent across the two studies. To further verify the generalizability of our findings, future studies may consider replicating our study across multiple higher education institutions. Third, the demographic information of the participants was not collected in this study. Although demographic factors, such as race or gender, were found to be less important than student performance in explaining how students behave in online discussion, the lack of such data prevents further investigation into how students of different gender, race, or age interact with each other in online Q & A. Future studies may consider collecting such data through surveys and providing a more fine-grained analysis of the relationship between student demographics and online Q & A interaction.

Conclusion

Online Q & A forums have found wide application in large-scale STEM courses during the last few years, but few studies have investigated the effects of online Q & A interactions on student learning. This study sought to fill this gap by quantifying the effect of fine-grained online Q & A interaction behaviors on student academic performance. The findings of this study provide evidence that online Q & A interactions share similarities with online discussion, but also differ from it significantly. Viewing questions/answers is typically deemed a passive behavior that does not indicate active participation in online discussion. However, this study demonstrated that, in Q & A interactions, such passive behavior contributed to stronger student academic performance. Given the significance of viewing questions/answers in predicting student assignment performance, instructors are encouraged to put such information directly in front of students through push functions, which may further amplify its positive effects.

Availability of data and materials

The anonymized datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Notes

1, 2. Corrected significance level; see page 10.

References

An, H., Shin, S., & Lim, K. (2009). The effects of different instructor facilitation approaches on students’ interactions during asynchronous online discussions. Computers & Education, 53(3), 749–760.

Beaudoin, M. F. (2002). Learning or lurking? Tracking the “invisible” online student. The Internet and Higher Education, 5(2), 147–155.

Beaudoin, B. B. (2012). Creating Community: From Individual Reflection to SoTL Transformation. International Journal for the Scholarship of Teaching & Learning, 6(1).

Bliuc, A. M., Ellis, R. A., Goodyear, P., & Piggott, L. (2011). A blended learning approach to teaching foreign policy: Student experiences of learning through face-to-face and online discussion and their relationship to academic performance. Computers & Education, 56(3), 856–864.

Borgatti, S. P., Mehra, A., Brass, D. J., & Labianca, G. (2009). Network analysis in the social sciences. Science, 323(5916), 892–895.

Davies, J., & Graff, M. (2005). Performance in e-learning: Online participation and student grades. British Journal of Educational Technology, 36(4), 657–663.

Dawson, S. (2010). ‘Seeing’ the learning community: An exploration of the development of a resource for monitoring online student networking. British Journal of Educational Technology, 41(5), 736–752.

de Jong, T., Sotiriou, S., & Gillet, D. (2014). Innovations in STEM education: The go-lab federation of online labs. Smart Learning Environments, 1(1), 3.

De Laat, M. (2002, January). Network and content analysis in an online community discourse. In Proceedings of the conference on computer support for collaborative learning: Foundations for a CSCL community (pp. 625-626). International Society of the Learning Sciences.

Deng, S., Liu, Y., & Qi, Y. (2011). An empirical study on determinants of web-based question-answer services adoption. Online Information Review, 35(5), 789–798.

Dennen, V. P. (2005). From message posting to learning dialogues: Factors affecting learner participation in online discussion. Distance Education, 26(1), 125–146.

Ghadirian, H., Salehi, K., & Ayub, A. F. M. (2018). Analyzing the social networks of high-and low-performing students in online discussion forums. American Journal of Distance Education, 32(1), 27–42.

Hammond, M. (2005). A review of recent papers on online discussion in teaching and learning in higher education. Journal of Asynchronous Learning Networks, 9(3), 9–23.

Hao, Q., Barnes, B., Branch, R. M., & Wright, E. (2017). Predicting computer science students’ online help-seeking tendencies. Knowledge Management & E-Learning: An International Journal, 9(1), 19–32.

Hao, Q., Barnes, B., Wright, E., & Branch, R. M. (2017). The influence of achievement goals on online help seeking of computer science students. British Journal of Educational Technology, 48(6), 1273–1283.

Hao, Q., Galyardt, A., Barnes, B., Branch, R. M., & Wright, E. (2018, October). Automatic identification of ineffective online student questions in computing education. In Proceedings of the 2018 IEEE Frontiers in Education Conference (FIE) (pp. 1-5). IEEE.

Hao, Q., Wright, E., Barnes, B., & Branch, R. M. (2016). What are the most important predictors of computer science students' online help-seeking behaviors? Computers in Human Behavior, 62, 467–474.

Hara, N., Bonk, C. J., & Angeli, C. (2000). Content analysis of online discussion in an applied educational psychology course. Instructional Science, 28(2), 115–152.

Henderson, C., & Dancy, M. H. (2011). Increasing the impact and diffusion of STEM education innovations. In Invited paper for the National Academy of Engineering, Center for the Advancement of Engineering Education Forum, Impact and Diffusion of Transformative Engineering Education Innovations, available at: http://www.Nae.Edu/file.Aspx.

Hernández-García, Á., González-González, I., Jiménez-Zarco, A. I., & Chaparro-Peláez, J. (2015). Applying social learning analytics to message boards in online distance learning: A case study. Computers in Human Behavior, 47, 68–80.

Hwang, E. H., Singh, P. V., & Argote, L. (2015). Knowledge sharing in online communities: Learning to cross geographic and hierarchical boundaries. Organization Science, 26(6), 1593–1611.

Jain, S., Chen, Y., & Parkes, D. C. (2009). Designing incentives for online question and answer forums. In Proceedings of the 10th ACM conference on Electronic Commerce (pp. 129-138). ACM.

Ji, Q., & Cui, D. (2016). The enjoyment of social Q & A websites usage: A multiple mediators model. Bulletin of Science, Technology & Society, 36(2), 98–106.

Jin, J., Li, Y., Zhong, X., & Zhai, L. (2015). Why users contribute knowledge to online communities: An empirical study of an online social Q & A community. Information & Management, 52(7), 840–849.

Khine, M. S., & Lourdusamy, A. (2003). Blended learning approach in teacher education: Combining face-to-face instruction, multimedia viewing and online discussion. British Journal of Educational Technology, 34(5), 671–675.

Knowlton, D. S. (2002). Promoting liberal arts thinking through online discussion: A practical application and its theoretical basis. Educational Technology & Society, 5(3), 189–194.

Knutas, A., Ikonen, J., & Porras, J. (2013). Communication patterns in collaborative software engineering courses: A case for computer-supported collaboration. In Proceedings of the 13th Koli Calling International Conference on Computing Education Research (pp. 169-177). ACM.

Lynch, D. J. (2010). Application of online discussion and cooperative learning strategies to online and blended college courses. College Student Journal, 44(3).

Mazzolini, M., & Maddison, S. (2003). Sage, guide or ghost? The effect of instructor intervention on student participation in online discussion forums. Computers & Education, 40(3), 237–253.

Nasehi, S. M., Sillito, J., Maurer, F., & Burns, C. (2012, September). What makes a good code example?: A study of programming Q & A in StackOverflow. In Software Maintenance (ICSM), 2012 28th IEEE International Conference on (pp. 25-34). IEEE.

Nonnecke, B., & Preece, J. (2000, April). Lurker demographics: Counting the silent. In Proceedings of the SIGCHI conference on Human Factors in Computing Systems (pp. 73-80). ACM.

Nurminen, M., Heino, P., & Ihantola, P. (2017). Friends and gurus: Do students ask for help from those they know or those who would know. In Proceedings of the 17th Koli Calling Conference on Computing Education Research (pp. 80-87). ACM.

Palmer, S., Holt, D., & Bray, S. (2008). Does the discussion help? The impact of a formally assessed online discussion on final student results. British Journal of Educational Technology, 39(5), 847–858.

Parnell, L. D., Lindenbaum, P., Shameer, K., Dall'Olio, G. M., Swan, D. C., Jensen, L. J., … Albert, I. (2011). BioStar: An online question & answer resource for the bioinformatics community. PLoS Computational Biology, 7(10), e1002216.

Puustinen, M., & Rouet, J. F. (2009). Learning with new technologies: Help seeking and information searching revisited. Computers & Education, 53(4), 1014–1019.

Reznitskaya, A., & Wilkinson, I. A. (2017). Truth matters: Teaching young students to search for the most reasonable answer. Phi Delta Kappan, 99(4), 33–38.

Rovai, A. P. (2007). Facilitating online discussions effectively. The Internet and Higher Education, 10(1), 77–88.

Russo, T. C., & Koesten, J. (2005). Prestige, centrality, and learning: A social network analysis of an online class. Communication Education, 54(3), 254–261.

Ryan, A. M., Pintrich, P. R., & Midgley, C. (2001). Avoiding seeking help in the classroom: Who and why? Educational Psychology Review, 13(2), 93–114.

Shah, C., & Kitzie, V. (2012). Social Q & A and virtual reference-comparing apples and oranges with the help of experts and users. Journal of the American Society for Information Science and Technology, 63(10), 2020–2036.

Shen, D., Nuankhieo, P., Huang, X., Amelung, C., & Laffey, J. (2008). Using social network analysis to understand sense of community in an online learning environment. Journal of Educational Computing Research, 39(1), 17–36.

Stahl, E., & Bromme, R. (2009). Not everybody needs help to seek help. Surprising effects of metacognitive instructions to foster help-seeking in an online-learning environment. Computers & Education, 53, 1020–1028.

Verhoeven, J. C., Heerwegh, D., & De Wit, K. (2010). Information and communication technologies in the life of university freshmen: An analysis of change. Computers & Education, 55(1), 53–66.

Warren, I., & Meads, A. (2014). Push notification mechanisms for pervasive smartphone applications. IEEE Pervasive Computing, 13(2), 61–71.

Xia, H., Wang, R., & Hu, S. (2009). Social networks analysis of the knowledge diffusion among university students. In Knowledge Acquisition and Modeling, 2009. KAM'09. Second International Symposium on (Vol. 2, pp. 343-346). IEEE.

Yoo, J., & Kim, J. (2014). Can online discussion participation predict group project performance? Investigating the roles of linguistic features and participation patterns. International Journal of Artificial Intelligence in Education, 24(1), 8–32.

Yu, F. Y., Liu, Y. H., & Chan, T. W. (2005). A web-based learning system for question-posing and peer assessment. Innovations in Education and Teaching International, 42(4), 337–348.

Zhang, X., Liu, S., Chen, X., & Gong, Y. (2017). Social capital, motivations, and knowledge sharing intention in health Q & A communities. Management Decision, 55(7), 1536–1557.

Acknowledgements

Not applicable.

Funding

Not applicable.

Author information

Contributions

QH led the project. DS analyzed data, interpreted the results, and drafted the first version of the paper. VD contributed to the literature review, MT contributed to the social network analysis, and BB contributed to the data collection. LM and NT contributed to the revision of the paper. The author(s) read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Smith IV, D., Hao, Q., Dennen, V. et al. Towards Understanding Online Question & Answer Interactions and their effects on student performance in large-scale STEM classes. Int J Educ Technol High Educ 17, 20 (2020). https://doi.org/10.1186/s41239-020-00200-7

DOI: https://doi.org/10.1186/s41239-020-00200-7