Abstract

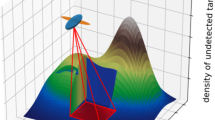

Decentralized policies for information gathering are required when multiple autonomous agents are deployed to collect data about a phenomenon of interest when constant communication cannot be assumed. This is common in tasks involving information gathering with multiple independently operating sensor devices that may operate over large physical distances, such as unmanned aerial vehicles, or in communication limited environments such as in the case of autonomous underwater vehicles. In this paper, we frame the information gathering task as a general decentralized partially observable Markov decision process (Dec-POMDP). The Dec-POMDP is a principled model for co-operative decentralized multi-agent decision-making. An optimal solution of a Dec-POMDP is a set of local policies, one for each agent, which maximizes the expected sum of rewards over time. In contrast to most prior work on Dec-POMDPs, we set the reward as a non-linear function of the agents’ state information, for example the negative Shannon entropy. We argue that such reward functions are well-suited for decentralized information gathering problems. We prove that if the reward function is convex, then the finite-horizon value function of the Dec-POMDP is also convex. We propose the first heuristic anytime algorithm for information gathering Dec-POMDPs, and empirically prove its effectiveness by solving discrete problems an order of magnitude larger than previous state-of-the-art. We also propose an extension to continuous-state problems with finite action and observation spaces by employing particle filtering. The effectiveness of the proposed algorithms is verified in domains such as decentralized target tracking, scientific survey planning, and signal source localization.

Similar content being viewed by others

1 Introduction

Autonomous agents and robots can be deployed in information gathering tasks in environments where human presence is either undesirable or infeasible. Examples include monitoring of deep ocean conditions, or space exploration. It may be desirable to deploy a team of agents due to the large scope of the task at hand, resulting in a multi-agent active information gathering task. Such a task typically has a definite duration, after which the agents, the data collected by the agents, or both, are recovered. For example, underwater survey vehicles may be recovered by a surface vessel after completion of a survey mission, or the agents may periodically communicate their data back to a base station.

The overall task can be viewed as sequential decision-making. Each agent takes an action and then perceives an observation. The action taken is determined based on the history of the agents’ past actions and observations. Action selection is repeated after perceiving each observation until the task ends. To maximize effectiveness of the team and the informativeness of the data collected, planning is required. Planning produces a policy that prescribes how each team member should act to maximize a shared utility. Utility in information gathering tasks is measured by information-theoretic quantities, such as negative entropy or mutual information. Planning should also take into account the possible non-determinism of action effects and observation noise. During the task, the maximum range of communication between agents and the amount of data that can be transmitted can vary, or there may be delays in communication. At one extreme, communication is entirely prevented during task execution.

We approach cooperative information gathering as a decentralized partially observable Markov decision process, or Dec-POMDP [7, 37]. The Dec-POMDP is a general model for sequential co-operative decision-making under uncertainty. It models uncertainty in the current state of the system, the effects of actions, and observation noise. Types of inter-agent communication, such as data-rate limited, delayed, or non-existent communication, can be explicitly modelled in the Dec-POMDP framework. This generality makes the Dec-POMDP an ideal choice for formalizing multi-agent information gathering problems.

Specifically, a Dec-POMDP models a sequential multi-agent decision making task. There is an underlying hidden system state, and a set of agents. Each agent has its own set of local actions it can execute, and a set of local observations it may perceive. Markovian state transition and observation processes conditioned on the agents’ actions and the state determine the relative likelihoods of subsequent states and observations. A reward function determines the utility of executing any action in any state. In a Dec-POMDP, no implicit communication or information sharing between the agents during task execution is assumed. Communication may be explicitly modelled via the actions and observations. Each agent acts independently, without necessarily knowing what the other agents have perceived or how they have acted. The objective in a Dec-POMDP is to plan optimal local policies for each agent that maximize the expected sum of rewards over a finite horizon of time.

A decentralized information gathering task differs from other multi-agent control tasks by the lack of a goal state. It is not the purpose of the agents to execute actions that reach a particular state, but rather to observe the environment in a manner that provides the greatest amount of information while satisfying operational constraints. As the objective is information acquisition, the reward function depends on the joint belief of the agents.

In contrast to most prior work on Dec-POMDPs, in this paper we consider reward functions that are non-linear functions of the joint belief state. Convex functions of a probability mass function naturally model certainty [13], and have been proposed before in the context of single-agent POMDPs [3] and Dec-POMDPs [27]. However, to the best of our knowledge no heuristic or approximate algorithms for convex reward Dec-POMDPs have been proposed, and no theoretical results on the properties of such Dec-POMDPs exist in the literature.

1.1 Contributions

Information gathering in decentralized POMDPs (Dec-POMDPs) remains very much an unexplored topic in multi-agent research. According to our knowledge, the only paper prior to ours on information gathering Dec-POMDPs is [27] (same first author as in this paper). Linear reward Dec-POMDPs are solved in several works [7, 14, 19, 29, 33, 35, 38, 40, 42, 47] and Spaan et. al. gather information in fully centralized multi-agent POMDPs [50]. Our work in contrast focuses on Dec-POMDPs with a non-linear reward function. Information gathering for single-agent POMDPs is formalized by Araya-López et. al. [3] using a convex reward function. In this paper, we prove that the value function of information gathering Dec-POMDPs is convex. Lauri et. al. [27] apply the ideas presented in [3] to the Dec-POMDP setting by modifying an existing search tree based Dec-POMDP algorithm. We instead utilize our new proof of value function convexity by combining the idea of iterative improvement of a fixed-size policy represented as a graph [42], and reasoning about reachability of the graph nodes in a manner methodologically similar to the plan-time sufficient statistics of [35]. In experiments, our algorithm solves problems an order of magnitude larger than prior state-of-the-art. The Dec-POMDP generalizes other decision-making formalisms such as multi-agent POMDPs and Dec-MDPs [7]. Thus, our results also apply to these special cases, as illustrated in Fig. 1.

Specifically, the contributions of our article are:

-

We prove that in Dec-POMDPs where the reward is a convex function of the joint belief, the value function of any finite horizon policy is convex in the joint belief.

-

We propose the first heuristic anytime algorithm for Dec-POMDPs with a reward that is a function of the agents’ joint state information. The algorithm is based on iterative improvement of the value of fixed-size policy graphs. We derive a lower bound that may be improved instead of the exact value, leading to computational speed-ups. We also propose an extension of the algorithm for problems with a continuous state space and finite action and observation spaces.

-

We experimentally verify the feasibility and usefulness of our algorithm in Dec-POMDPs with non-linear rewards, in domains such as decentralized target tracking, scientific survey planning, and signal source localization.

This article is an extended version of our earlier conference paper [28] published in the Proceedings of the International Conference on Autonomous Agents and Multiagent Systems (AAMAS) 2019. The extensions compared to the conference paper are the following:

-

The conference paper is restricted to discrete small state spaces. To improve the applicability of the proposed approach, we extend the algorithm to large discrete and continuous state spaces by utilizing particle filtering.

-

We extend the experimental evaluation with a new benchmark task motivated by signal source localization and mobile robotics. In the benchmark task, the agents can move from a graph node to another and receive observations of a signal source depending on the distance to the source. For the discrete domains, we provide new data for the anytime performance of our proposed algorithm.

-

We extend the related work section, and describe the algorithm in more detail. In particular, we clarify the backward pass. We also provide a complexity analysis of the proposed algorithm.

1.2 Outline

The article is organized as follows. We review related work in Sect. 2. In Sect. 3, we define the Dec-POMDP problem we consider and introduce notation and definitions. Section 4 derives the value of a policy graph node. In Sect. 5, we prove convexity of the value in a Dec-POMDP where the reward is a convex function of the state information. Section 6 introduces our policy improvement algorithm, while in Sect. 7 we present its extension to continuous-state problems with finite action and observation spaces. Experimental results are presented in Sect. 8, and concluding remarks are provided in Sect. 9.

2 Related work

In a wide range of applications, autonomous systems have controllable sensors or the ability to otherwise control data acquisition. This allows active information gathering and planned allocation of sensing resources. Active information gathering is known by several synonyms or near-synonyms depending on the context and subfield, such as sensor management [20] or active perception [6]. In the following, we first review approaches based on other models than Dec-POMDPs for multi-agent active information gathering. Secondly, we review the state-of-the-art in Dec-POMDPs and highlight the differences to the present paper.

2.1 Multi-agent active information gathering

In the following paragraphs, we review related work in multi-agent active information gathering in three major directions: distributed constraint optimization problems, application of sequential greedy maximization of submodular functions, and adaptation of single-agent POMDP methods to the multi-agent setting. Finally, we briefly review other related techniques such as applying open loop planning, gradient ascent based control, stochastic control under simplifying assumptions, and active distributed hypothesis testing.

A detailed review of the solution techniques for each of these related approaches is beyond the scope of the present paper. We refer the reader to relevant literature on the respective topics, and instead focus on describing applications and modelling assumptions and contrasting them to the Dec-POMDP approach to multi-agent active information gathering. Each of the alternative approaches we review makes some simplifying assumptions about the active information gathering problem in contrast to Dec-POMDP based planning. For instance, action effects may be assumed to have known outcomes. The system state may be assumed to be static, or perfectly known to the agents, or it may be assumed to evolve deterministically. Finally, implicit local communication is often assumed to be possible, whereas in the Dec-POMDP communication is allowed only if explicitly modelled.

Distributed constraint optimization problems Information gathering can be framed as a distributed constraint optimization problem (DCOP). Instead of trying to find a policy for each agent that maximizes total expected information gain in a stochastic dynamic system over time, the standard DCOP finds a variable assignment for each agent such that, for example, sensors cover a large area. Compared to a full Dec-POMDP solution a DCOP approach is computationally less intensive but usually requires stronger assumptions or approximations to the underlying problem. There are also extensions to basic DCOP; we discuss DCOPs and these extensions in the context of information gathering next.

Distributed constraint optimization problems (DCOPs) are described by a set of agents and a set of variables along with a set of cost functions. Each variable is assigned to one of the agents. Each of the cost functions is defined on a subset of the variables it is affected by. The cost functions are real-valued, although an additional special value can indicate a violated constraint. A solution of a DCOP is an assignment of all variables that does not violate any of the constraints, with each agent only choosing the assignment of variables assigned to it. The objective is to find a solution that minimizes the sum of the individual cost functions such that no constraints are violated. With regards to communication, a typical setting in DCOPs is that each agent can communicate locally with its neighbouring agents to coordinate actions. The most relevant variants of DCOPs for active information gathering are dynamic DCOPs and probabilistic DCOPs [54], which we discuss next. For a detailed introduction to DCOPs and their solution algorithms, we refer the reader to the recent survey [16].

A dynamic DCOP (D-DCOP) extends the variables, assignments, and cost functions to depend on a discrete time step. A D-DCOP may be viewed as a sequence of DCOP problems, one for each time step. The DCOP at the current time step is assumed to be known by the agents, however the agents are unaware how the DCOP will evolve over future time steps. Zivan et. al. [57] present a D-DCOP variant for controlling a mobile sensor team tracking multiple targets. Each sensor is an agent that has a perfectly observable variable describing its location which may evolve locally over time. Each target is represented by a cost function whose value depends on how well the sensors cover the target. The coverage of a target is measured by a monotonic function of the number of agents within sensing range of the target, and no explicit probabilistic modelling of the sensing process is undertaken.

Markovian D-DCOPs [34] augment D-DCOPs by introducing an underlying state with Markovian dynamics depending on the variable assignments. The cost functions are dependent on the state that is fully observable. Unlike in the D-DCOP, changes in the Markovian D-DCOP are restricted to changes in the underlying state. The Markovian D-DCOP is demonstrated in a multi-sensor tracking environment, where the target state is described using the Markovian dynamics and the variable assignments correspond to assignments of sensors to targets.

Probabilistic DCOPs (P-DCOP) contain two types of variables: decision variables and random variables. Decision variables are analogous to variables in the DCOP, with assignments selected by the agents. Random variables model events beyond the agents’ control and assume values according to a specified probability distribution. In the P-DCOP actions have known outcomes. Uncertainty in environment dynamics is modelled by defining stochastic cost functions. The cost functions may depend on decision variables and random variables. The objective is to find an assignment of all decision variables such that, e.g., the expected utility of the assignments under the probability distribution of the random variables is maximized. P-DCOPs with partial agent knowledge [22, 53] are a variant especially relevant for active information gathering. They introduce a finite time horizon to the P-DCOP, and a solution is an assignment of decision variables for each time step. The objective is to maximize the cumulative utility over the time horizon. At each time step the agents acquire information about the cost functions and the available utility through exploration. P-DCOPs have been applied to a mobile wireless network problem, where the objective is to choose agent locations to maximize the signal strength of an ad-hoc communication relay network formed between the agents [22, 53].

Sequential greedy maximization of submodular functions Submodularity is a property of a set function often used for proving a bound on the suboptimality of greedy algorithms in information gathering. The tasks that we target in this paper are in general not approximately solvable by a greedy algorithm. Below we discuss how information gathering problems are formulated to allow use of greedy submodular maximization with suboptimality bounds. This will help the reader build a deeper understanding of the challenges this paper addresses.

The assignment or selection of sensors can be formulated as a selection of a subset among a larger set of possible choices. The selection corresponds to a choice of which sensors to apply, where to deploy sensors, or how to operate multiple sensors. The utility of an assignment is then measured by a set function that maps the selected subset to a real-valued utility. Several set functions relevant for active information gathering such as mutual information and entropy are submodular. Informally, the submodularity property of a set function encodes the intuition that the marginal benefit of deploying a new sensor is reduced as we deploy more and more sensors. A sequential greedy algorithm is often applied to approximately maximize submodular functions. Such an algorithm sequentially finds the item with the greatest marginal utility, and inserts it into the current subset of selected items. The selection is repeated until a subset with the required number of items has been obtained. Maximization of a submodular set function by this greedy approach results in an approximation within at most a factor of \((1-1/e)\) from an optimal solution. Consequently, this selection method has been applied to optimize sensor placements under a Gaussian process model of spatial phenomena [24]. In this approach, mutual information between the selected sensing locations and the rest of the space is maximized.

In multi-agent active information gathering, submodular function maximization under a matroid constraint is especially relevant. A matroid is a mathematical structure that generalizes the notion of linear independence from vector spaces to sets. In multi-agent active information gathering, the independent sets in a matroid correspond to possible joint actions or sensing strategies of each of the agents in a team. Approximation guarantees for the sequential greedy algorithm outlined above can also be proven for submodular maximization under a matroid constraint [10]. A distributed algorithm based on greedy maximization for multi-robot exploration is proposed in [12]. As the proposed algorithm runs in parallel on all of the agents, the required time of planning is reduced compared to sequential planning for each agent in turn, e.g., as proposed in [4]. To simplify the problem, Corah and Michael [12] plan over sequences of actions rather than over closed-loop policies. Contrary to a Dec-POMDP model, a fully connected communication network and a shared belief state among all agents are assumed.

In distributed submodular optimization under a matroid constraint, the information an agent has about the actions of other agents affects the approximation quality obtained with the greedy algorithm. Gharesifard and Smith [17] assume each agent knows the strategies of its neighbouring agents, as defined by a communication graph. A local greedy algorithm is found to approximate an optimal solution within a factor inversely proportional to the clique number of the communication graph: the larger the maximum clique in the graph, the better the approximation factor.

Adapting single-agent POMDPs Single-agent POMDPs feature imperfect knowledge of the underlying true state in a system, uncertain action effects and noisy observations. If instantaneous communication and centralized control are assumed, multi-agent active perception may be treated as a POMDP under the control of a central controller that determines the actions of all individual agents [45, 50].

When strong centralization assumptions are infeasible, POMDP techniques may be adapted to multi-agent active information gathering by adding additional coordination mechanisms. A notable example is [11], where a decentralization scheme based on an auction mechanism is proposed. Each agent is assumed to play one of a finite set of roles or behaviours. Each role corresponds to a specific local reward function that depends on the local state and action of the agent playing the role. The complete reward function is defined as the sum of local reward functions and a joint reward function modelling agent cooperation. At run-time, each individual agent first solves a centralized multi-agent POMDP planning problem to compute a policy and its value for each of the potential roles. An auction algorithm is applied for task allocation where the cost of assigning a policy to an agent is equal to the negative value of the policy, resulting in high-value policies likely being assigned to each agent. The resulting approach requires some communication between the agents to facilitate the bidding, but can avoid the computational complexity of planning in a Dec-POMDP. The approach is demonstrated in two scenarios, environmental monitoring and cooperative tracking.

Other related work Multi-agent information gathering may be formulated as an open-loop planning problem, where the solution is a sequence of actions or a path to be traversed for each agent. These approaches ignore the adaptivity of Dec-POMDP solutions that allow agents to modify their behaviour conditional on the observations received at run-time. Instead, if communication is possible at some time after starting execution of the paths, replanning may be applied to update the solution based on the information acquired up to that time. An example of decentralized open-loop planning with periodic communication is the decentralized Monte Carlo tree search (Dec-MCTS) algorithm [9]. The agents are first provided with initial information of each others’ plans. Each agent in a team runs an instance of MCTS to optimize its local actions, assuming the other agents will act according to their last known plan. The updated plans found via local MCTS instances are periodically communicated between the agents, and planning is restarted. Hollinger and Singh [21] formalize multi-agent exploration as path planning in a time-expanded graph. Instead of assuming communication links exist between agents, feasible paths are constrained in a way that agents will periodically be within communication range. While in communication range, the agents share information and may replan to update the subsequent paths.

Several works propose to derive a control policy for each individual agent that follows the gradient of mutual information [5, 23]. Joint belief states are updated via agent-to-agent communication. Communication links are described by a graph, and convergence of the state estimate to the true value is shown in the case of a connected graph.

The problem of planning policies for multi-agent active information gathering can be simplified if assumptions are made regarding the dynamics of the state transitions and the observation processes of the agents. Schlotfeldt et. al. [46] investigate a problem with a deterministic state transition model with a linear-Gaussian model of the observation process. The joint belief state in this case is Gaussian and may be tracked by a Kalman filter (see, for example, [44, Sect. 4.3]). In particular, the covariance matrix of the Gaussian is independent of the actual measurement values recorded. The covariance matrix only depends on the deterministic state trajectory of the agent. This allows planning a policy for active information gathering by solving an open loop control problem, analogous to similar approaches in classical control theory where a measurement subsystem can be controlled independently of the overall plant control task [30]. Schlotfeldt et. al. [46] search policies for each agent sequentially, assuming the other agents’ plans are fixed. A distributed estimation scheme is proposed to enable decentralization, requiring a connected communication graph to guarantee state estimate convergence.

Distributed hypothesis testing [26] targets a scenario where a stationary hidden state (hypothesis) should be inferred from noisy observations recorded by a team of agents. The agents perform local Bayesian belief updates, and communicate the updates to neighbouring agents. A consensus algorithm is applied to fuse the updates from neighbours into an updated local belief with convergence guarantees. Active distributed hypothesis testing where each agent can choose among a finite set of sensing actions is considered by Lalitha and Javidi [25]. They characterize randomized action strategies that ensure that the maximum likelihood estimate of the hypothesis converges to the true hypothesis.

2.2 Decentralized POMDPs

In this subsection, we first provide a brief summary of the state-of-the-art in Dec-POMDPs with linear reward functions. The expected value of a reward function that depends on the hidden state and action is a linear function of the joint belief. These types of rewards are standard in Dec-POMDPs. Then, we describe related work in active information gathering in single-agent POMDPs and Dec-POMDPs that are especially relevant for the work presented in this paper. These works target problems with a reward function that is convex in the joint belief, allowing convenient modelling of information gathering tasks.

Dec-POMDPs with linear reward functions The computational complexity of finding an optimal decentralized policy for a finite-horizon Dec-POMDP is NEXP-complete [7], that is, double-exponential w.r.t. the planning horizon. It is also NEXP-complete to compute solutions with an absolutely bounded error [43].

Exact algorithms for Dec-POMDPs may apply either backwards in time dynamic programming [19], or forwards in time heuristic search [38, 52]. It can also be shown that a Dec-POMDP is equivalent to a special case of a POMDP that is completely unobservable [14, 29, 36]. The state space of this POMDP is the set of possible plan-time sufficient statistics [35], which are joint distributions over the hidden state and the histories of the agents’ actions and observations given the past policies executed by the agents. The actions correspond to selecting the next decision rules for the agents. An optimal policy for such a non-observable POMDP is an optimal sequence of local decision rules for each agent, which corresponds to an optimal solution of the equivalent Dec-POMDP. This insight has allowed adaptation of POMDP algorithms for solving Dec-POMDPs [14].

Approximate and heuristic methods for solving Dec-POMDPs have been proposed, e.g., based on finding locally optimal “best response” policies for each agent [33], memory-bounded dynamic programming [47], cross-entropy optimization over the space of policies [40, 41], or monotone iterative improvement of fixed-size policies [42]. Algorithms for special cases such as goal-achievement Dec-POMDPs [2] and factored Dec-POMDPs [39] have also been proposed.

Structural properties, such as transition, observation, and reward independence between the agents, can also be leveraged and may even result in a problem with a lesser computational complexity [1]. Some Dec-POMDP algorithms [38] take advantage of plan-time sufficient statistics. The sufficient statistics provide a means to reason about possible distributions over the hidden state, also called joint beliefs, reached under a given policy.

No implicit communication between the agents is assumed in the Dec-POMDP framework. However, communication may be explicitly included into the Dec-POMDP model if desired, leading to the so-called Dec-POMDP-Com model [18]. Spaan et. al. [49] also include communication into their Dec-POMDP model but in addition split state features into local and shared global ones making belief tracking, as in POMDP models, possible. In another line of work, Wu et. al. [56] investigate online decision making in Dec-POMDPs. Their goal is to reduce the amount of communication needed in online Dec-POMDP planning: agents re-synchronize with other agents whenever the behavior of other agents changes sufficiently from the expected behavior.

Active Information Gathering in POMDPs and Dec-POMDPs In the context of single-agent POMDPs, Araya-López et al. [3] argue that information gathering tasks are naturally formulated using a reward function that is a convex function of the state information and introduce the \(\rho \hbox {POMDP}\) model with such a reward. This enables application of, for example, the negative Shannon entropy of the state information as a component of the reward function. Under a locally Lipschitz continuous reward function, an optimal value function of a \(\rho \hbox {POMDP}\) is Lipschitz-continuous [15] which may be exploited in a solution algorithm.

Spaan et. al. [50] introduce an alternative formulation for information gathering in single agent POMDPs that assumes problem specific state features and for each state feature a special action for committing to a specific value of the state feature. This allows for rewarding information gathering without changing the POMDP optimization process at the cost of growing the action space and the need for selecting features. Subsequently, it was proven that a \(\rho \hbox {POMDP}\) with a piecewise linear and convex reward function can be transformed into a standard POMDP with a linear reward function [45]. The key to making the transformation is to augment the action space of the POMDP by adding a prediction action for each of the linear components of the \(\rho \hbox {POMDP}\) reward function. The reward function for the new prediction actions is set to return the reward of the corresponding component of the \(\rho \hbox {POMDP}\) reward function. The conversion also allows an optimal solution for either problem to be transformed into an optimal solution of the other problem in polynomial time.

In this article, we present the first heuristic algorithm for Dec-POMDPs with rewards that depend non-linearly on the joint belief. Our algorithm is based on the combination of the idea of using a fixed-size policy represented as a graph [42] with plan-time sufficient statistics [35] to determine joint beliefs at the policy graph nodes. The local policy at each policy graph node is then iteratively improved, monotonically improving the value of the node. We show that if the reward function is convex in the joint belief, then the value function of any finite-horizon Dec-POMDP policy is convex as well. This is a generalization of a similar result known for single-agent POMDPs [3]. Lauri et. al. [27] directly apply the ideas presented in [3] to the Dec-POMDP setting by modifying an existing search tree based Dec-POMDP algorithm that can solve small problems. However, our new approach allows us to derive a lower bound for the value of a policy. We empirically show that an algorithm maximizing this lower bound finds high quality solutions. Thus, compared to prior state-of-the-art in Dec-POMDPs with convex rewards [27], our algorithm is capable of handling problems an order of magnitude larger.

We do not require any communication between agents during task execution, and do not make any distributional assumptions about the state transition or observation models.

3 Decentralized POMDPs

We next formally define the Dec-POMDP problem we consider. Contrary to most earlier works, we define the reward as a function of state information and action. This allows us to model information acquisition problems. We choose the finite-horizon formulation to reflect the fact that a decentralized information gathering task should have a clearly defined end after which the collected information is pooled and subsequent inference or decisions are made.

A finite-horizon Dec-POMDP is a tuple (T, I, S, \(\{A_i\}\), \(\{Z_i\}\), \(P^s\), \(P^z\), \(b^0\),\(\{\rho _t\})\), where

-

\(T \in {\mathbb {N}}\) is the problem time horizon. In other words, time steps \(t=0,1, \ldots , T\) are considered in the problem.

-

\(I=\{1, 2, \ldots , n\}\) is a set of agents.

-

S is a finite set of hidden states. We write \(s^t\) for a state at time t.

-

\(\{A_i\}\) and \(\{Z_i\}\) are the collections of finite local action and local observation sets of each agent \(i \in I\), respectively. The local action and observation of agent i at time t are written as \(a_i^t \in A_i\) and \(z_i^t \in Z_i\), respectively. We write as A and Z the Cartesian products of all \(A_i\) or \(Z_i\), respectively. Furthermore, the joint action and observation at time t are written as \(a^t = (a_1^t, \ldots , a_n^t) \in A\) and \(z^{t+1}=(z_1^{t+1}, \ldots , z_n^{t+1})\in Z\), respectively.

-

\(P^s\) is the state transition probability that gives the conditional probability \(P^s(s^{t+1}\mid s^t, a^t)\) of the new state \(s^{t+1}\) given the current state \(s^t\) and joint action \(a^t\).

-

\(P^z\) is the observation probability that gives the conditional probability \(P^z(z^{t+1}\mid s^{t+1}, a^t)\) of the joint observation \(z^{t+1}\) given the state \(s^{t+1}\) and previous joint action \(a^t\).

-

\(b^0 \in \varDelta (S)\) is the initial state distribution,Footnote 1 also called the joint belief, at time \(t=0\).

-

\(\rho _t: \varDelta (S)\times A \rightarrow {\mathbb {R}}\) are the reward functions at times \(t=0, \ldots , T-1\), while \(\rho _T:\varDelta (S) \rightarrow {\mathbb {R}}\) determines a final reward obtained at the end of the problem horizon.

Most works in Dec-POMDPs up to date define state-dependent reward functions of the form \(R_t: S\times A \rightarrow {\mathbb {R}}\) and \(R_T: S \rightarrow {\mathbb {R}}\) for time steps \(t=0,1,\ldots , T-1\) and \(t=T\), respectively. The Dec-\(\rho \hbox {POMDP}\) with belief-dependent reward functions as defined above generalizes a Dec-POMDP with state-dependent rewards. The standard Dec-POMDP with a state-dependent reward function is recovered by choosing the belief-dependent reward to equal the expected state-dependent reward, that is, \(\rho _t(b,a) := {\mathbb {E}}_{s\sim b}[R_t(s,a)]\). We choose the symbol \(\rho _t\) for belief-dependent rewards to distinguish from state-dependent reward functions and to be consistent with [3] that in part inspired this work.

The Dec-POMDP starts from some state \(s^0 \sim b^0\). Each agent \(i\in I\) then selects a local action \(a_i^0\), and the joint action \(a^0=(a_1^0, \ldots , a_n^0)\) is executed. The time step is incremented, and the state transitions according to \(P^s\), and each agent perceives a local observation \(z_i^1\), where the likelihood of the joint observation \(z^1=(z_1^1, \ldots , z_n^1)\) is determined according to \(P^z\). The action selection and observation process repeats similarly until \(t=T\) when the task ends.

Optimally solving a Dec-POMDP means to design a policy for each agent that encodes which action the agent should execute conditional on its past observations and actions; in a manner such that the expected sum of rewards collected is maximized. In the following, we make the notion of a policy exact, and determine the expected sum of rewards collected when executing a policy.

3.1 Histories and policies

At any time step while the agents are executing actions in a Dec-POMDP, each agent only has knowledge of its own past local actions and observations and the initial state distribution \(b^0\). Each agent may decide on its next local action based on this knowledge. We formalize the information available to agent i at time t as a local history. At the starting time \(t=0\) the information available to any agent i is completely described by a local history \(h_i^0 = b^0\). On subsequent time steps \(t\ge 1\), the local history \(h_i^t\) of any agent i belongs to the local history set

Analogously, we define the joint history set \(H^t = \{(b^0, a^0, z^1, \ldots , a^{t-1}, z^{t}) \mid \forall k: a^k \in A, z^k \in Z\}\) for joint actions and observations. Given a joint history \(h^t\), we may view its composite local histories as the tuple \((h_1^t, \ldots , h_n^t)\), or vice versa. Both the local and joint histories satisfy the recursion of the form \(h^t = (h^{t-1}, a^{t-1}, z^t)\).

In general, a local policy for agent i is a set of mappings \(H_i^t \mapsto A_i\), one for every \(t=0,1,\ldots , T-1\). Such a local policy determines the next action the agent should take conditional on any possible local history up to the current time. The fact that local policies only depend on an agent’s local actions and observations is a key feature of Dec-POMDPs which ensures that an agent can execute its local policy in a decentralized manner, without knowledge about the other agents’ actions and observations. A solution of a Dec-POMDP is in turn a joint policy, which is the collection of all agents’ local policies. An optimal solution is a joint policy that maximizes the expected sum of rewards obtained when each agents acts according to its local policy contained in the joint policy.

We define a local policy as a particular kind of finite state controller (FSC) following [42]. This choice allows a compact representation of policies by viewing them as FSCs with few nodes. Furthermore, as we will argue later, increasing the number of nodes allows retaining the generality of the definition given in the paragraph above.

Definition 1

(Local policy) For agent i, a local policy is \(\pi _i=(Q_i, q_{i,0}, \gamma _i, \lambda _i)\), where \(Q_i\) is a finite set of nodes, \(q_{i,0} \in Q_i\) is a starting node, \(\gamma _i: Q_i\rightarrow A_i\) is an output function that determines which action to take, and \(\lambda _i: Q_i\times Z_i \rightarrow Q_i\) is a node transition function that determines the next node conditional on the last observation.

This definition coincides with that of a Moore machine [31]. Another useful way to view a local policy is as a directed acyclic graph, where policy execution is equivalent to graph traversal starting from the specified starting node. Figure 2 shows an example of such a local policy graph, along with a description of how the local policy is executed. The depicted local policy is suitable for a problem with a horizon \(T=3\).

A local policy \(\pi _i=(Q_i, q_{i,0}, \gamma _i, \lambda _i)\) for agent i with local action space \(A_i=\{a_{i,0}, a_{i,1}, a_{i,2}\}\) and local observation space \(Z_i=\{z_{i,0}, z_{i,1}\}\) represented as a directed acyclic graph. The shaded circles show the set of nodes \(Q_i\), the starting node is \(q_{i,0}\). The dashed boxes indicate the subsets \(Q_i^t \subset Q_i\) of nodes reachable at time t. The table on the right shows the output function \(\gamma _i\) that determines the action to take in any node. The node transition function \(\lambda _i\) is indicated by the out-edges from each node: for example, \(\lambda _i(q_{i,0},z_{i,0}) = q_{i,1}\). Execution of the policy is equivalent to traversal of the graph starting from the initial node, taking actions indicated by \(\gamma _i\), and transitioning to the next node conditional on the next observation according to \(\lambda _i\)

An arbitrary node may be reached by zero (in case the node has no in-edges), one, or more than one local histories. This will be important in our subsequent analysis of the problem and the proposed solution algorithm. For example, in the case of Fig. 2, node \(q_{i,3}\) is reachable by three local histories, namely \((b^0, a_{i,0}, z_{i,0}, a_{i,1}, z_{i,0})\), \((b^0, a_{i,0}, z_{i,0}, a_{i,1}, z_{i,1})\), and \((b^0, a_{i,0}, z_{i,1}, a_{i,2}, z_{i,1})\). The first two of these local histories traverse through the nodes \(q_{i,0}\rightarrow q_{i,1} \rightarrow q_{i,3}\), while the last local history traverses through nodes \(q_{i,0}\rightarrow q_{i,2} \rightarrow q_{i,3}\). Although the local histories are different, the action to be taken next after experiencing them is the same, \(\gamma _i(q_{i,3})=a_{i,1}\).

Next, we formally define the concept of a joint policy that is a collection of each agent’s local policies.

Definition 2

(Joint policy) Given local policies \(\pi _i=(Q_i\), \(q_{i,0}\), \(\gamma _i\), \(\lambda _i)\) for all agents \(i \in I\), the corresponding joint policy is the tuple \(\pi =(Q, q_0, \gamma , \lambda )\), where Q is the Cartesian product of all \(Q_i\), \(q_0 = (q_{1,0}, \ldots , q_{n,0}) \in Q\) is the initial node, and for an arbitrary joint policy node \(q=(q_1, \ldots , q_n)\in Q\) and joint observation \(z=(z_1,\ldots , z_n)\in Z\), the output function \(\gamma :Q\rightarrow A\) determines the joint action to take in a node according to \(\gamma (q) = (\gamma _1(q_1), \ldots , \gamma _n(q_n))\), and the node transition function \(\lambda :Q\times Z \rightarrow Q\) is defined such that \(\lambda (q,z) = (\lambda _1(q_1,z_1), \ldots , \lambda _n(q_n,z_n))\).

Figure 3 shows an example of a joint policy constructed from two local policies using the definition above. The nodes \(q\in Q\) in the joint policy graph are tuples of nodes in the local policy graphs: \(Q=Q_1 \times Q_2\). For example, the node \(q=(q_{1,3}, q_{2,3})\) indicates that both agents i are currently executing their respective local policies \(\pi _i\) at node \(q_{i,3}\). The dashed boxes indicate the subsets \(Q^t \subset Q\) of nodes reachable at time t. The node output function \(\gamma\) and node transition function \(\lambda\) of the joint policy are constructed from the corresponding function \(\gamma _i\) and \(\lambda _i\) of the local policies, respectively, as described in Definition 2. From each node \(q\in Q\), there is now an out edge for each joint observation \(z\in Z\). As is the case for local policies, also in a joint policy a given node may be reached by zero, one, or more than one joint history. Since a joint policy is constructed from local policies that are decentrally executable, the joint policy is also decentrally executable.

The generality of the policy representation is maintained, as any finite horizon local policy can be represented by a local policy graph with sufficiently many nodes. If the size of the local policy graph is grown sufficiently a graph where each node is reachable by a single unique local history can be created. Such a policy graph is in fact a tree and can represent any possible mapping from local histories to local actions and thus any possible local policy. A joint policy composed of such trees is a so-called pure deterministic policy for a Dec-POMDP in the sense that an agent’s local observation history is sufficient to determine the agent’s next action. It is known that there exists an optimal pure policy in every Dec-POMDP [40]. Since a tree can represent an optimal policy, the policy graph representation we choose can also represent an optimal policy given enough nodes. Consequently, our main theoretical results hold in the general case, and are not limited to our particular policy representation. Our emphasis in this paper is on compact local policies that are not trees and are not guaranteed to be able to represent an optimal policy. We refer the reader to [40] for further discussion of possible types of policies for Dec-POMDPs.

A joint policy \(\pi =(Q,q_0, \gamma , \lambda )\) composed of two local policies \(\pi _1\) and \(\pi _2\) that are identical to the one shown in Fig. 2. For clarity, we denote existence of parallel edges by single edges with labels such as \((\cdot , z_{2,1})\), which indicates there are parallel edges for joint observations where the local observation of agent 1 can assume any value, and the local observation of agent 2 is \(z_{2,1}\)

To close this subsection, we give a technical condition for policy graphs that constrains the structure of local policies. The condition, called temporal consistency, ensures that each node can be identified with a unique time step.

Definition 3

(Temporal consistency) A local policy \(\pi _i=(Q_i\), \(q_{i,0}\), \(\gamma _i\), \(\lambda _i)\) is temporally consistent if there exists a partition \(Q_i = \bigcup _{t=0}^{T-1} Q_i^t\) where \(Q_i^t\) are pairwise disjoint and non-empty, \(Q_i^0 = \{q_{i,0}\}\), and for any \(t=0, \ldots , T-2\), for \(q_i^t \in Q_i^t\), for all \(z_i\in Z_i\), \(\lambda _i(q_i^t, z_i) \in Q_i^{t+1}\).

In a temporally consistent policy, at a node in \(Q_i^t\) the agent has \((T-t)\) decisions left until the end of the problem horizon. Temporal consistency guarantees that exactly one node in each set \(Q_i^t\) can be visited, and that after visiting a node in \(Q_i^t\), the next node will belong to \(Q_i^{t+1}\). Temporal consistency naturally extends to joint policies, such that there exists a partition of Q by pairwise disjoint sets \(Q^t\). In Figs. 2 and 3, the sets \(Q_i^t\) and \(Q^t\) are indicated by the dashed boxes. Throughout the rest of the article, we assume temporal consistency holds for all policies. The assumption of temporal consistency does not restrict our proposed method, it merely allows us to refer to a subset of nodes reachable at a particular time step.

3.2 Bayes filter

While planning policies for information gathering, it is useful to reason about the joint belief of the agents given some joint history. This can be done via recursive Bayesian filtering, see [44] for a general overview of the topic. Recursive Bayesian filtering is a process by which the probability mass function over the hidden state of the system is estimated given a joint history and the state transition and observation probability models of the Dec-POMDP. Recall that during policy execution agents only perceive their own local actions and observations. Thus, Bayesian filtering typically cannot be achieved online by an individual agent as it lacks the necessary information. However, if the local policies of each agent are planned centrally as is the usual case in Dec-POMDPs, Bayesian filtering may be applied during planning to reason about the possible joint beliefs the system may reach.

We now describe the discrete Bayesian filter we use to track the joint belief. The initial joint belief \(b^0\) is a function of the state at time \(t=0\), and for any state \(s^0 \in S\), \(b^0(s^0)\) is equal to the probability \(P(s^0 \mid h^0)\). When a joint action \(a^0\) is executed and a joint observation \(z^{1}\) is perceived, we find the posterior belief \(P(s^1 \mid h^1)\) where \(h^{1}=(h^0, a^0, z^1)\) applying a Bayes filter. For notational convenience, we drop the explicit dependence of \(b^t\) on the joint history in the following. In general, given any current joint belief \(b^t\) corresponding to some joint history \(h^t\), and a joint action \(a^t\) and joint observation \(z^{t+1}\), the posterior joint belief is calculated by

where

is the normalization factor equal to the prior probability of observing \(z^{t+1}\). Given \(b^0\) and any joint history \(h^t=(b^0\),\(a^0\),\(z^1\), \(\ldots\), \(a^{t-1}\), \(z^t)\), repeatedly applying Eq. (1) yields a sequence \(b^0, b^1, \ldots , b^t\) of joint beliefs. We shall denote the application of the Bayes filter (Eq. (1)) by the shorthand notation

Furthermore, we shall denote the filter that recovers \(b^t\) given \(h^t\) by repeated application of \(\zeta\) by a function \(\tau : H^t \rightarrow \varDelta (S)\), that is,

The innermost application of \(\zeta\) recovers \(b^1\) given \(b^0\), \(a^0\), and \(z^1\). The output is the input to the next application of \(\zeta\) together with \(a^1\) and \(z^2\). This process is repeated until the \(t\hbox {th}\) application of \(\zeta\) which outputs \(b^t\).

3.3 Value of a policy

The value of a joint policy \(\pi =(Q, q_0, \gamma , \lambda )\) is equal to the expected sum of rewards collected when acting according to the policy, starting from the initial joint belief. To characterize the value of a policy, we define value functions \(V_t^\pi :\varDelta (S) \times Q^{t} \rightarrow {\mathbb {R}}\) for \(t=T, T-1, \ldots , 0\) using a backwards in time dynamic programming principle. Each \(V_t^\pi (b, q)\) gives the expected sum of rewards when following policy \(\pi\) until the end of the horizon when t decisions have been taken so far, for any joint belief \(b\in \varDelta (S)\) and any policy node \(q\in Q^{t}\). Then, \(V_0^\pi (b^0, q_0)\) is the value of the policy.

We start with time step \(t=T\) which is a special case when all actions have already been taken, and the value function only depends on the joint belief and is equal to the final reward: \(V_T(b) = \rho _T(b)\).

For \(t=T-1\), one decision remains, and the remaining expected sum of rewards of executing policy \(\pi\) is equal to

that is, the sum of the immediate reward and the expected final reward at time T. From above, we define \(V_t^\pi\) iterating backwards in time for \(t=T-2, \ldots , 0\) as

The objective is to find an optimal policy \(\pi ^* \in {\mathop {{\mathrm{argmax}}}\limits _{\pi }} V_0^\pi (b^0, q_0)\) whose value is greater than or equal to the value of any other policy.

4 Value of a policy node

Executing a policy corresponds to a stochastic traversal of the policy graphs (Fig. 2) conditional on the observations perceived. In this section, we first answer two questions related to this traversal process. First, given a history, when is it consistent with a policy, and which nodes in the policy graph will be traversed (Sect. 4.1)? Second, given an initial state distribution, what is the probability of reaching a given policy graph node, and what are the relative likelihoods of histories if we assume a given node is reached (Sect. 4.2)? With the above questions answered, we define the value of a policy graph node both in a joint and in a local policy (Sect. 4.3). These values will be useful in designing a policy improvement algorithm for Dec-POMDPs. Probabilities of joint histories conditioned on the local information of one agent have been derived earlier for reasoning what one agent can know about the experiences of other agents [33]. Oliehoek [35] introduces plan-time sufficient statistics describing the joint distribution over hidden states and joint observation histories. Our derivation here is methodologically similar, but we consider explicitly the setting where a policy is represented as a graph and reason about reachability probability of a policy graph node that summarizes multiple joint histories, rather than the reachability probability of a particular joint history.

4.1 History consistency

A history is consistent with a policy when executing the policy could have resulted in the given history. In the context of policy graphs defined in Sect. 3.1, consistency means that the actions in the history are equal to those that would be taken by the policy conditional on the observations in the history.

Definition 4

(History consistency) We are given for all \(i\in I\) the local policy \(\pi _i=(Q_i\),\(q_{i,0}\),\(\gamma _i\),\(\lambda _i)\), and the corresponding joint policy \(\pi =(Q\),\(q_0\),\(\gamma\),\(\lambda )\).

-

1.

A local history \(h_i^t=(b_0, a_i^0, z_i^1, \ldots , a_i^{t-1},z_i^{t})\) is consistent with \(\pi\) if the sequence of nodes \((q_i^0, q_i^1, \ldots , q_i^t)\) where \(q_i^0 = q_{i,0}\) is the initial node and \(q_i^k = \lambda _i(q_i^{k-1},z_i^k)\) for \(k=1, \ldots , t\) satisfies: \(a_i^k = \gamma _i(q_i^k)\) for every k. We say \(h_i^t\) ends at \(q_i^t \in Q_i^t\) under \(\pi\).

-

2.

A joint history \(h^t = (h_1^t, \ldots , h_n^t)\) is consistent with \(\pi\) if for all \(i\in I\), \(h_i^t\) is consistent with \(\pi\) and ends at \(q_i^{t}\). We say \(h^t\) ends at \(q^t=(q_1^t, \ldots , q_n^t) \in Q^t\) under \(\pi\).

Due to temporal consistency, any \(h_i^{t}\in H_i^{t}\) consistent with a policy will end at some \(q_i^t \in Q_i^{t}\). Similarly, any \(h^t\in H^t\) ends at some \(q^t \in Q^{t}\).

4.2 Node reachability probabilities

Above, we have defined when a history ends at a particular node. Using this definition, we now derive the joint probability mass function (pmf) \(P(q^t, h^t \mid \pi )\) of policy nodes and joint histories given that a particular policy \(\pi\) is executed.

We note that \(P(q^t, h^t \mid \pi ) = P(q^t\mid h^t, \pi )P(h^t\mid \pi )\) and first consider \(P(h^t \mid \pi )\). The unconditional a priori probability of experiencing the joint history \(h^0=(b^0)\) is \(P(h^0)=1\). For \(t\ge 1\), the unconditional probability of experiencing \(h^t\) is obtained recursively by \(P(h^t) = \eta (z^t \mid \tau (h^{t-1}), a^{t-1})P(h^{t-1})\). Conditioning \(P(h^t)\) on a policy yields \(P(h^t\mid \pi ) = P(h^t)\) if \(h^t\) is consistent with \(\pi\) and 0 otherwise. Next, we have \(P(q^t \mid h^t, \pi ) = \prod _{i\in I} P(q_i^t \mid h_i^t, \pi )\), with \(P(q_i^t \mid h_i^t, \pi ) = 1\) if \(h_i^t\) ends at \(q_i^t\) under \(\pi\) and 0 otherwise.

Combining the above, the joint pmf is defined as

Marginalizing over \(h^t\), the probability of ending at node \(q^t\) under \(\pi\) is

and by definition of conditional probability,

We now find the probability of ending at \(q_i^t\) under \(\pi\). Let \(Q_{-i}^t\) denote the Cartesian product of all \(Q_j^t\) except \(Q_i^t\), that is,

Then \(q_{-i}^t \in Q_{-i}^t\) denotes the nodes for all agents except i. We have \((q_{-i}^t, q_i^t) \in Q^t\). The probability of ending at \(q_i^t\) under \(\pi\) is

where the sum terms are determined by Eq. (7). Again, by definition of conditional probability,

where the term in the numerator is obtained from Eq. (7).

4.3 Value of policy nodes

We define the values of a node in a joint policy and an individual policy.

Definition 5

(Value of a joint policy node) Given a joint policy \(\pi =(Q,q_0,\gamma ,\lambda )\), the value of a node \(q^t \in Q^{t}\) is defined as

where \(P(h^t\mid q^t, \pi )\) is defined in Eq. (8) and \(\tau (h^t)\) is the joint belief corresponding to history \(h^t\) as given in Eq. (4).

To give intuition about the definition above, consider the joint policy \(\pi\) in Fig. 3, and suppose \(q^t=(q_{1,3},q_{2,4})\). This node can be reached by three joint histories that correspond to the following three sequences of joint observations, listed as tuples of local observations: \(\left( (z_{1,0},z_{2,1}), (z_{1,0},z_{2,0}) \right)\), \(\left( (z_{1,0},z_{2,1}), (z_{1,1},z_{2,0}) \right)\), and \(\left( (z_{1,1},z_{2,1}), (z_{1,1},z_{2,0}) \right)\). Note that the out-edge of \(q^t\) labeled with \((\cdot , z_{2,0})\) corresponds to two joint observations as described in the figure caption. The probabilities of joint histories are quantified by \(P(h^t \mid q^t, \pi )\), which can be computed as outlined in Sect. 4.2. This probability can be non-zero only for the three joint histories that end in \(q^t\). The value of the node, \(V_t^{\pi }(q^t)\) is obtained by taking the expectation of \(V_t^\pi (\tau (h^t), q^t)\) under the pmf of histories.

Another example for an arbitrary node \(q^t\) of a policy \(\pi\) is shown in Fig. 4. Here, the horizontal axis indicates the joint belief state in a Dec-POMDP with two underlying hidden states. The joint belief in this case is represented by a single real number denoting the probability of the first of these two states. The red curve depicts the value function \(V_t^\pi (b^t, q^t)\) of the policy. The blue circle markers corresponding to the blue right-hand vertical axis depict the pmf \(P(h^t\mid q^t, \pi )\) of histories at the node. The value \(V_t^\pi (q^t)\) above is defined as the expectation of the value function (red curve) under the pmf of histories (blue circle markers).

An example of the value function of a node and its lower bound. The horizontal axis denotes the joint belief in a two-state Dec-POMDP as a real number indicating the probability of the first state. On the red left-hand vertical axis the value function \(V_t^\pi (b^t, q^t)\) of the policy is drawn as a convex function of the joint belief. The blue right-hand vertical axis denotes the probability of joint histories, and the three lines with blue circle markers denote an example of a distribution \(P(h^t\mid q^t,\pi )\) of joint belief states at a joint policy graph node. The exact value \(V_t^\pi (q^t)\) of the node is calculated as the expectation of the value function \(V_t^\pi (b^t, q^t)\) under \(P(h^t\mid q^t,\pi )\). A lower bound for the value of the node is found by instead taking the value function at the expected joint belief state as indicated by the dashed lines

Definition 6

(Value of a local policy node) For \(i\in I\), let \(\pi _i=(Q_i,q_{i,0},\gamma _i,\lambda _i)\) be the local policy and let \(\pi =(Q,q_0,\gamma ,\lambda )\) be the corresponding joint policy. For any \(i \in I\), the value of a local node \(q_i^t \in Q_i^{t}\) is

where \(P(q_{-i}^t \mid q_i^t, \pi )\) is defined in Eq. (10).

The value of a local node \(q_i^t\) is equal to the expected value of the joint node \((q_{-i}^t, q_i^t)\) under \(q_{-i}^t \sim P(q_{-i}^t \mid q_i^t, \pi )\). Suppose we wish to quantify the value of a local node \(q_i^t\) of agent i. From the perspective of agent i, it is not aware of which local policy nodes the other agents in their respective local policies are located at. However, since agent i is aware of the local policies of all agents, it can deduce which local policy nodes are possible for the other agents. These are the local policy nodes of agents other than i at the same time step as \(q_i^t\), that is, the nodes in \(Q_{-i}^t\). As an example, consider the case depicted in Fig. 3 and suppose we wish to compute the value of \(q_1^t = q_{1,3}\) for agent 1. The second agent’s node \(q_{2}^t\) belongs to \(Q_2^t = \{q_{2,3}, q_{2,4}\}\). The probability of the second agent being in either of these two nodes is obtained from \(P(q_{2}^t \mid q_1^t, \pi )\) as outlined in Sect. 4.2. According to the definition above, the value of \(q_{1,3}\) is then computed as \(P(q_{2,3}\mid q_{1,3}, \pi )V_t^\pi ((q_{1,3},q_{2,3})) + P(q_{2,4}\mid q_{1,3}, \pi )V_t^\pi ((q_{1,3},q_{2,4}))\).

5 Convex-reward Dec-POMDPs

In this section, we prove several results for the value function of a Dec-POMDP whose reward function is convex in \(\varDelta (S)\). Convex rewards are of special interest in information gathering. This is because of their connection to so-called uncertainty functions [13], which are non-negative functions concave in \(\varDelta (S)\). Informally, an uncertainty function assigns large values to uncertain beliefs, and smaller values to less uncertain beliefs. Negative uncertainty functions are convex and assign high values to less uncertain beliefs, and are suitable as reward functions for information gathering. Examples of uncertainty functions include Shannon entropy, generalizations such as Rényi entropy, and types of value of information, for example, the probability of error in hypothesis testing.

The following theorem shows that if the immediate reward functions are convex in the joint belief, then the finite horizon value function of any policy is convex in the joint belief.

Theorem 1

If the reward functions \(\rho _T:\varDelta (S)\rightarrow {\mathbb {R}}\) and \(\rho _t:\varDelta (S)\times A \rightarrow {\mathbb {R}}\) are convex in \(\varDelta (S)\), then for any policy \(\pi\), \(V_T:\varDelta (S)\rightarrow {\mathbb {R}}\) is convex and \(V_t^\pi : \varDelta (S) \times Q^t \rightarrow {\mathbb {R}}\) is convex in \(\varDelta (S)\) for any t.

Proof

Let \(\pi =(Q,q_0,\gamma ,\lambda )\), and \(b\in \varDelta (S)\). We proceed by induction (\(V_T(b) = \rho _T(b)\) is trivial). For \(t=T-1\), let \(q^{T-1} \in Q^{T-1}\), and denote by \(a := \gamma (q^{T-1})\) the joint action taken according to \(\pi\) at this node. From Eq. (5), \(V_{T-1}^\pi (b,q^{T-1}) = \rho _{T-1}(b,a) + \sum \limits _{z\in Z}\eta \left( z\mid b, a\right) V_T\left( \zeta \left( b,a, z\right) \right)\). We recall from above that \(V_T\) is convex, and by Eq. (1), the Bayes filter \(\zeta \left( b,a, z\right)\) is a linear function of b. The composition of a linear and convex function is convex, so \(V_T\left( \zeta \left( b,a, z\right) \right)\) is a convex function of b. The non-negative weighted sum of convex functions is also convex, and by assumption \(\rho _{T-1}\) is convex in \(\varDelta (S)\), from which it follows that \(V_{T-1}^\pi\) is convex in \(\varDelta (S)\).

Now assume \(V_{t+1}^\pi\) is convex in \(\varDelta (S)\) for some \(0 \le t \le T-1\). By the definition in Eq. (6) and the same argumentation as above, it follows that \(V_t^\pi\) is convex in \(\varDelta (S)\).\(\square\)

Since a sufficiently large policy graph can represent any policy, we conclude that the value function of an optimal policy is convex in a Dec-POMDP with a reward function convex in the joint belief.

The following corollary gives a lower bound for the value of a policy graph node.

Corollary 1

Let \(g^t: H^t \rightarrow [0, 1]\) be a probability mass function over the joint histories at time t. If the reward functions \(\rho _T:\varDelta (S)\rightarrow {\mathbb {R}}\) and \(\rho _t:\varDelta (S)\times A \rightarrow {\mathbb {R}}\) are convex in \(\varDelta (S)\), then for any time step t, and for any policy \(\pi\) and \(q^t\in Q^t\),

Proof

By Theorem 1, \(V_t^\pi : \varDelta (S) \times Q^t \rightarrow {\mathbb {R}}\) is convex in \(\varDelta (S)\). The claim immediately follows applying Jensen’s inequality.\(\square\)

Applied to Definition 5, the corollary says the value of a joint policy node \(q^t\) is lower bounded by the value of the expected joint belief at \(q^t\). An illustration of this lower bound is shown by the dashed lines in Fig. 4. Applied to Definition 6, we obtain a lower bound for the value of a local policy node \(q_i^t\) as

where inside the inner expectation we write \((q_{-i}^t,q_i^t)=q^t\). A lower bound for the value of any local node \(q_i^t \in Q_i^t\) is found by finding the values \(V_t^\pi (q^t)\) of all joint nodes \(q^t \in Q^t\) and then taking the expectation of \(V_t^\pi (q^t)\) where \(q^t=(q_{-i}^t,q_i^t)\) under \(P(q_{-i}^t \mid q_i^t, \pi )\).

As Corollary 1 holds for any pmf over joint histories, it could be applied also with pmfs other than \(P(h^t \mid q^t, \pi )\). For example, if it is expensive to enumerate the possible histories and beliefs at a node, one could approximate the lower bound through importance sampling [32, Ch. 23.4].

Since a linear function is both convex and concave, rewards that are state-dependent and rewards that are convex in the joint belief can be combined on different time steps in one Dec-POMDP and the lower bound still holds.

In standard Dec-POMDPs, the expected reward is a linear function of the joint belief. Then, Corollary 1 holds with equality, as shown by the following.

Corollary 2

Consider a Dec-POMDP where the reward functions are defined as \(\rho _T(b) = \sum \limits _{s\in S}b(s)R_T(s)\) where \(R_T:S\rightarrow {\mathbb {R}}\) is a state-dependent final reward function, and for \(t=0, 1, \ldots , T-1\), \(\rho _t(b,a) = \sum \limits _{s\in S} b(s)R_t(s,a)\), where \(R_t:S\times A \rightarrow {\mathbb {R}}\) are the state-dependent reward functions. Then, the conclusion of Corollary 1 holds with equality.

Proof

Let \(\pi =(Q,q_0,\gamma ,\lambda )\) and \(b\in \varDelta (S)\). First note that \(V_T(b) = \rho _T(b) = \sum \limits _{s\in S}b(s)R_T(s)\). Consider then \(t=T-1\), and let \(q^{T-1}\in Q^{T-1}\), and write \(a:=\gamma (q^{T-1})\). Then from the definition of \(V_{T-1}^\pi\) in Eq. (5), consider first the latter sum term which equals

which follows by replacing \(\zeta (b,a,z)\) by Eq. (1), canceling out \(\eta (z\mid b, a)\), and rearranging the sums. The above is clearly a linear function of b, and by definition, so is \(\rho _t\), the first part of \(V_{T-1}^\pi\). Thus, \(V_{T-1}^\pi :\varDelta (S) \times Q^{T-1} \rightarrow {\mathbb {R}}\) is linear in \(\varDelta (S)\). By an induction argument, it is now straightforward to show that \(V_t^\pi\) is linear in \(\varDelta (S)\) for \(t=0, 1, \ldots , T-1\). Finally,

for any pmf g over joint histories by linearity of expectation. \(\square\)

Corollary 1 indicates that the value of a node is lower bounded by the value of the expected joint belief in the node. This result has applications in policy improvement algorithms that iteratively improve the value of a policy by modifying the output and node transition functions at each local policy node. Instead of directly optimizing the value of a node, the lower bound can be optimized. We present one such algorithm in the next section. As shown by Corollary 2, such an algorithm will also work for Dec-POMDPs with a state-dependent reward function.

6 The nonlinear policy graph improvement algorithm

The Policy Graph Improvement (PGI) algorithm [42] was originally introduced for Dec-POMDPs with a standard state-dependent reward function that is linear in the joint belief. PGI monotonically improves policies by locally modifying the output and node transition functions of the individual agents’ policies. The policy size is fixed, such that the worst case computation time for an improvement iteration is known in advance. Moreover, due to the limited size of the policies the method produces compact, understandable policies.

We extend PGI to the non-linear reward case, and call the method non-linear PGI (NPGI). Contrary to tree based Dec-POMDP approaches the policy does not grow double-exponentially with the planning horizon as we use a fixed size policy. NPGI may improve the lower bound of the values of nodes (Corollary 1). The lower bound is tight when each policy graph node corresponds to only one history suggesting we can improve the quality of the lower bound by increasing policy graph size.

NPGI is shown in Algorithm 1. At each improvement step, NPGI repeats two steps: the forward pass and the backward pass. In the forward pass, the current best joint policy is applied to find the set B of expected joint beliefs at every policy graph node. In the backward pass, we iterate over all local policy graph nodes optimizing the policy parameters for each of them, that is, the output function and the node transition function. As output from the backward pass, we obtain an updated policy \(\pi ^+\) using the improved output and node transition functions \(\gamma ^+\) and \(\lambda ^+\), respectively. As NPGI optimizes a lower bound of the node values, we finally check if the expected sum of rewards for the improved policy, \(V_0^{\pi ^+}(b^0,q_0)\), is greater than the value of the current best policy, and update the best policy if necessary. Similarly as PGI, NPGI is an anytime algorithm. It may be terminated at any point in time, and the best joint policy recovered so far may be returned.

In the following, we first give details of the forward pass. Then, we discuss in detail the most important part of the algorithm, the so-called backward pass. Finally, we close the section with a discussion of some implementation details.

6.1 The forward pass

Given a joint policy \(\pi =(Q, q_{0}, \gamma , \lambda )\) and an initial joint belief \(b^0\), the forward pass calculates the set \(B = \{b_q \mid q \in Q\}\) of expected beliefs \(b_q\) for each of the joint policy graph nodes q. We implement the forward pass in two stages, first enumerating the local and joint histories, and then applying the Bayes filter to find the corresponding belief states along with their relative likelihoods.

From the local policy graphs \(\pi _i=(Q_i, q_{i,0}, \gamma _i, \lambda _i)\) of each agent i (see Fig. 2), we first enumerate the sets of local histories ending at each of the nodes \(q_i \in Q_i\). This corresponds to enumeration of all paths in the graph \(\pi _i\). Next, for a given joint policy graph node \(q = (q_1, \ldots , q_n) \in Q\), we look up the set of local histories for each \(q_i\). The set of joint histories at q is then obtained by enumerating all combinations of local histories. That is, if there are \(m_i\) local histories that end at \(q_i\), there are \(\prod \limits _{i=1}^n m_i\) unique combinations of local histories, each of which corresponds to one joint history that ends at q.

Now we have enumerated the set of joint histories that ends at q. Recursively using the Bayes filter, Eq. (4), we obtain for each joint history h a corresponding belief state \(\tau (h)\). As described in Sect. 4.2, we also obtain the relative likelihoods \(P(h \mid q, \pi )\) of the joint histories. The expected belief state at q is then obtained by \(b_q = \sum \limits _{h} P(h\mid q, \pi )\tau (h)\).

6.2 The backward pass

We denote the improved joint policy as \(\pi ^+ = (Q, q_0, \gamma ^+, \lambda ^+)\). The parameters \(\gamma ^+\) and \(\lambda ^+\) of this policy will be incrementally updated throughout the backward pass. This is done by iterating over all nodes in the local policy graphs, and solving optimization problems to maximize the node values. The maximization is done over possible values of the output function and node transition function at the node. At time step t for agent i, for each node \(q_i^t \in Q_i^t\), we maximize either the value \(V_t^{\pi {^+}}(q_i^t)\) or its lower bound with respect to the local policy parameters. In the following, we present the details for maximizing the lower bound. The algorithm for the exact value can be derived analogously, then we store all belief states possible at a node \(q\in Q\) instead of the expected belief in the forward pass.

The backward pass of NPGI is shown in Algorithm 2. We first consider time step \(t=T-1\). We loop over each agent \(i \in I\), and over each local policy graph node \(q_i^{T-1}\). Since after this step no subsequent actions will be taken, we find an optimal local action and assign it to \(\gamma _i^+(q_i^{T-1})\). An optimal local action is such that it maximizes the sum of the expected immediate and final reward. For clarity, in the following we drop explicit notations of the time step from the notation of beliefs, policy graph nodes, actions, and observations. Recall that \(P(q_{-i} \mid q_i, \pi )\) gives the pmf over the local policy graph nodes of agents other than i. To simplify notation, we use the shorthand \(b_{-i}\) for the expected joint belief at a joint policy graph node \(q = \left( q_i, q_{-i}\right)\). Finally, we write \(a=(\gamma _1^+(q_1)\), \(\ldots\), \(a_i\), \(\ldots\), \(\gamma _n^+(q_n))\) as the joint action where local actions of all other agents except i are fixed to those specified by the current output function \(\gamma ^+\). We solve

and assign \(\gamma _i^+(q_i)\) equal to the local action that maximizes Eq. (11).

Next, consider time step \(t\le T-1\). There are now actions remaining after the current one, so we consider both the current local action and which node to traverse to next via the node transition function. We find an optimal local action and assign it to \(\gamma _i^+(q_i^{t})\), and find an optimal configuration for out edges of \(q_i^{t}\) and assign values of \(\lambda _i(q_i^t, z_i)\) accordingly for each \(z_i \in Z_i\). As earlier, we shall drop all explicit notations of the time step for clarity. We use the same notation for \(b_{-i}\) and a as above. Additionally, for any joint observation \(z=(z_1, \ldots , z_n) \in Z\), define

as the next joint policy node to transition to when the transitions of all other agents except i are fixed to those specified by \(\lambda ^+\), and agent i transitions to \(q_i^{z_i}\). We solve

and assign \(\gamma _i^+(q_i)\) and \(\lambda _i^+(q_i, \cdot )\) to their respective maximizing values.

Line 13 of Algorithm 2 checks if there exists a node \(w_i^t\) that we have already optimized that has the same local policy as the current node \(q_i^t\). If such a node exists, we redirect all of the in-edges of \(q_i^t\) to \(w_i^t\) to avoid having nodes with identical local policies. The redirection may change the expected beliefs at the nodes at time steps \(t, t+1, \ldots , T-1\). To ensure that the expected joint beliefs and node reachability probabilities remain valid, we recompute the forward pass (Line 16).

If we redirect the in-edges of \(q_i^t\) to \(w_i^t\), on Line 15 we randomize the local policy of the now useless node \(q_i^t\) that has no in-edges, in the hopes that it may be improved on subsequent backward passes. To randomize the local policy of a node \(q_i^t\in Q_i^t\), we sample new local policies until we find one that is not identical to the local policy of any other node in \(Q_i^t\). Likewise, when randomly initializing a new policy in our experiments we avoid including in any \(Q_i^t\) nodes with identical local policies.

In Algorithm 2 the loop at Line 7 may sometimes encounter a node \(q_i^t\) that is unreachable. That is, there are no in-edges to \(q_i^t\), or the probability of every joint history ending at \(q_i^t\) is equal to zero. In such cases, we randomize the local policy at \(q_i^t\) by calling the subroutine \(\textsc {Randomize}\) on it.

6.3 Computational complexity

We next derive the computational complexity of one improvement iteration for NPGI (Algorithm 1) when using the lower bound. We first consider the forward pass. The forward pass requires computation of all joint belief states reachable over the horizon of T decisions under the current joint policy \(\pi\). For any history of past joint actions and observations, the joint policy \(\pi\) always specifies a single joint action to take. Therefore, the number of possible joint belief states at each time step increases by a factor of |Z|. The number of joint belief states evaluated in the forward pass is \(O(|Z|^T)\).

The backward pass (Algorithm 2) iterates over all T time steps, and all of the n agents. For each agent i, all its controller nodes \(Q_i\) are iterated over. There are a total of \(m = \sum \limits _{i=1}^n |Q_i|\) controller nodes. There are Tnm iterations, and in the worst case at each iteration the optimization problem from Eq. (12) is solved.

To determine the complexity of solving Eq. (12), we determine the size of the feasible set of the optimization problem. At any node \(q_i\), we may set the output function \(\lambda _i(q_i)\) to equal any of the \(|A_i|\) local actions. Likewise, the node transition function \(\gamma _i(q_i,\cdot )\) for any of the \(|Z_i|\) possible local observations may be set to equal any of the \(O(|Q_i|)\) possible successor nodes. To determine the worst case complexity, let \(|A_*| = \max \limits _{i\in I} |A_i|\), \(|Z_*| = \max \limits _{i\in I} |Z_i|\), and \(|Q_*| = \max \limits _{i\in I} |Q_i|\). There are \(O(|A_*||Z_*|^{|Q_*|})\) elements in the feasible set of Eq. (12).

To evaluate each feasible solution, a sum over the possible joint policy graph nodes \(q_{-i}\) of agents other than i is computed. Each of the other agents is in one of at most \(|Q_*|\) local policy graph nodes. Thus, there are \(O(|Q_*|^{n-1})\) possible node configurations for the other agents. Each sum term corresponds to a joint policy graph node \((q_i, q_{-i})\) and is equal to the expected value of the node starting at its expected joint belief, weighted by the reachability probability of the node. The policy is executed until the end of the problem horizon. By a similar argument as made above for the forward pass, evaluating this value requires computation of at most \(O(|Z|^T)\) joint belief states and the corresponding rewards. The reachability probabilities \(P(q_{-i}\mid q_i, \pi )\) are computed and cached already during the forward pass.

In summary, the computational complexity of one iteration in the while loop of Algorithm 1 is

The time complexity of an improvement step in NPGI is exponential in the number of agents n, the number of nodes \(|Q_*|\) in the largest local policy graph. The complexity is exponential in the planning horizon T, unlike tree based Dec-POMDP approaches with doubly-exponential complexity. The complexity is linear in the number of actions.

6.4 Implementation details

We discuss how to initialize policies, and mention some techniques we use to escape local maxima. The source code of our implementation of NPGI is available online at https://github.com/laurimi/npgi.

Policy initialization. We initialize a random policy for each agent \(i\in I\) with a given policy graph width \(\left| Q_i^t\right|\) for each t as follows. For example, for a problem with \(T=3\) and \(\left| Q_i^t\right| =2\), we create a policy similar to Fig. 2 for each agent, where there is one initial node \(q_{i,0}\), and 2 nodes at each time step \(t\ge 1\). The action determined by the output function \(\gamma _i(q_i)\) is sampled uniformly at random from \(A_i\). For each node \(q_i^t \in Q_i^t\) for \(0 \le t \le T-1\), we sample a next node from \(Q_i^{t+1}\) uniformly at random for each observation \(z_i \in Z_i\) and assign the node transition function \(\lambda _i(q_i,z_i)\) accordingly. At the last time step, it is only meaningful to have \(\left| Q_i^T\right| \le \left| A_i\right|\). In our experiments if \(\left| Q_i^T\right| >\left| A_i\right|\), we instead set \(\left| Q_i^T\right| =\left| A_i\right|\).