Abstract

In this paper we deal with a general second order continuous dynamical system associated with a convex minimization problem with a Fréchet differentiable objective function. We show that inertial algorithms, such as Nesterov’s algorithm, can be obtained via the natural explicit discretization of our dynamical system. Our dynamical system can be viewed as a perturbed version of the heavy ball method with vanishing damping; however, the perturbation is made in the argument of the gradient of the objective function. As some numerical experiments show, this perturbation seems to have a smoothing effect on the energy error and eliminates the oscillations observed for this error in the case of the heavy ball method with vanishing damping. We prove that the value of the objective function along a generated trajectory converges at the rate \(\mathcal {O}(1/t^2)\) to the global minimum of the objective function. Moreover, we show that a trajectory generated by the dynamical system converges to a minimum point of the objective function.

Acknowledgements

The authors are grateful to two anonymous referees for their valuable remarks and suggestions, which improved the quality of the paper.

Additional information

This work was supported by a grant of the Ministry of Research and Innovation, CNCS-UEFISCDI, project number PN-III-P1-1.1-TE-2016-0266.

Appendices

A. Complements to the Section Existence and uniqueness

In what follows we give a detailed proof of Theorem 9.

Proof (of Theorem 9).

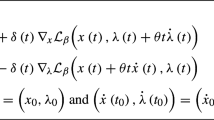

By making use of the notation \(X(t)=(x(t),\dot{x}(t))\), the system (6) can be rewritten as the first order dynamical system

\[\begin{cases} \dot{X}(t)=F(t,X(t)),\\ X(t_0)=(u_0,v_0), \end{cases}\tag{37}\]

where \(F:[t_0,+\infty )\times \mathbb {R}^m\times \mathbb {R}^m\longrightarrow \mathbb {R}^m\times \mathbb {R}^m,\, F(t,u,v)=\left( v, -\frac{\alpha }{t}v-\nabla g\left( u+\left( \gamma +\frac{\beta }{t}\right) v\right) \right) .\)
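To make the first-order reformulation concrete, here is a minimal Python sketch (NumPy only) that implements \(F\) and integrates \(\dot X=F(t,X)\) with the classical fourth-order Runge–Kutta method. The objective \(g(x)=\frac12 x^\top Ax\) with \(A=\mathrm{diag}(1,10)\), the parameter values \(\alpha=3\), \(\beta=\gamma=0.5\), the initial data and the step size are illustrative assumptions, not choices made in the paper; the run only lets one observe \(g(x(t))-g^*\) decreasing along the computed trajectory.

```python
import numpy as np

# Illustrative assumptions (not from the paper): g(x) = 0.5 * x^T A x with
# A = diag(1, 10), so grad g(x) = A x is Lipschitz with L_g = 10, g* = 0, x* = 0.
A = np.diag([1.0, 10.0])
ALPHA, BETA, GAMMA = 3.0, 0.5, 0.5  # hypothetical parameter values


def g(x):
    return 0.5 * x @ A @ x


def grad_g(x):
    return A @ x


def F(t, u, v):
    """F(t, u, v) = (v, -(alpha/t) v - grad g(u + (gamma + beta/t) v))."""
    return v, -(ALPHA / t) * v - grad_g(u + (GAMMA + BETA / t) * v)


def rk4(t0, T, u0, v0, h=1e-2):
    """Integrate X' = F(t, X), X(t0) = (u0, v0), with classical RK4 steps of size h."""
    u, v = np.asarray(u0, dtype=float), np.asarray(v0, dtype=float)
    n = int(round((T - t0) / h))
    ts, xs = [t0], [u]
    for k in range(n):
        t = t0 + k * h
        k1u, k1v = F(t, u, v)
        k2u, k2v = F(t + h / 2, u + h / 2 * k1u, v + h / 2 * k1v)
        k3u, k3v = F(t + h / 2, u + h / 2 * k2u, v + h / 2 * k2v)
        k4u, k4v = F(t + h, u + h * k3u, v + h * k3v)
        u = u + h / 6 * (k1u + 2 * k2u + 2 * k3u + k4u)
        v = v + h / 6 * (k1v + 2 * k2v + 2 * k3v + k4v)
        ts.append(t0 + (k + 1) * h)
        xs.append(u)
    return np.array(ts), np.array(xs)


ts, xs = rk4(1.0, 21.0, u0=[1.0, 1.0], v0=[0.0, 0.0])
gaps = np.array([g(x) for x in xs])  # g(x(t)) - g*, since g* = 0 here
print(f"gap at t={ts[0]:.0f}: {gaps[0]:.3e}, at t={ts[-1]:.0f}: {gaps[-1]:.3e}")
```

RK4 is used only because it is a simple, accurate integrator for this smooth test problem; it is not the discretization studied in the paper.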

The existence and uniqueness of a strong global solution of (6) follow from the Cauchy–Lipschitz–Picard theorem applied to the first order dynamical system (37). In order to establish the existence and uniqueness of the trajectories generated by (37), we show the following:

(I) For every \(t\in [t_0,+\infty )\) the mapping \(F(t, \cdot , \cdot )\) is \(L(t)\)-Lipschitz continuous and \(L(\cdot )\in L^1_{loc}([t_0,+\infty ))\).

(II) For all \(u,v\in {\mathbb {R}^m}\) one has \(F(\cdot ,u,v)\in L^1_{loc}([t_0,+\infty ),{\mathbb {R}^m}\times {\mathbb {R}^m})\).

Let us prove (I). Let \(t \in [t_0, +\infty )\) be fixed and consider the pairs \((u, v)\) and \((\bar{u}, \bar{v})\) in \(\mathbb {R}^m \times \mathbb {R}^m\). Using the Lipschitz continuity of \({\nabla }g\) and the elementary inequality \(\Vert A+B\Vert ^2\le 2\Vert A\Vert ^2+2\Vert B\Vert ^2\) for all \(A,B\in \mathbb {R}^m\), we obtain the following estimates:

By employing the notation \(L(t)= \sqrt{1+4L_g^2+2\left( \frac{\alpha }{t}\right) ^2+4L_g^2\left( \gamma +\frac{\beta }{t}\right) ^2}\), we have that

Obviously, the function \(t \longrightarrow L(t)\) is continuous on \([t_0, +\infty )\); hence \(L(\cdot )\) is integrable on \([t_0,T]\) for every \(T\) with \(t_0<T<+\infty \).
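For the reader's convenience, the following chain is one way to arrive at exactly this constant \(L(t)\). It is a sketch that uses only the Lipschitz continuity of \(\nabla g\), the inequality \(\Vert A+B\Vert ^2\le 2\Vert A\Vert ^2+2\Vert B\Vert ^2\), and the Euclidean norm on \(\mathbb {R}^m\times \mathbb {R}^m\) (the choice of norm is an assumption here), rather than a verbatim copy of the estimates in the original proof.

```latex
\begin{aligned}
\|F(t,u,v)-F(t,\bar u,\bar v)\|^2
&= \|v-\bar v\|^2 + \Big\|\tfrac{\alpha}{t}(v-\bar v)
   + \nabla g\big(u+(\gamma+\tfrac{\beta}{t})v\big)
   - \nabla g\big(\bar u+(\gamma+\tfrac{\beta}{t})\bar v\big)\Big\|^2\\
&\le \Big(1+2\big(\tfrac{\alpha}{t}\big)^2\Big)\|v-\bar v\|^2
   + 2L_g^2\Big\|(u-\bar u)+\big(\gamma+\tfrac{\beta}{t}\big)(v-\bar v)\Big\|^2\\
&\le 4L_g^2\|u-\bar u\|^2
   + \Big(1+2\big(\tfrac{\alpha}{t}\big)^2+4L_g^2\big(\gamma+\tfrac{\beta}{t}\big)^2\Big)\|v-\bar v\|^2\\
&\le L(t)^2\,\|(u,v)-(\bar u,\bar v)\|^2 .
\end{aligned}
```

The last step holds because \(L(t)^2=1+4L_g^2+2(\alpha/t)^2+4L_g^2(\gamma+\beta/t)^2\) dominates both coefficients \(4L_g^2\) and \(1+2(\alpha/t)^2+4L_g^2(\gamma+\beta/t)^2\).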

For proving (II), consider a fixed pair \((u,v) \in \mathbb {R}^m \times \mathbb {R}^m\) and let \(T > t_0\). We have the following estimates:

and the conclusion follows by the continuity of the function \(t \longrightarrow \sqrt{5+2\left( \frac{\alpha }{t}\right) ^2+4L_g^2\left( \gamma +\frac{\beta }{t}\right) ^2}\) on \([t_0,T]\).

The Cauchy–Lipschitz–Picard theorem guarantees existence and uniqueness of the trajectory of the first order dynamical system (37) and thus of the second order dynamical system (6). \(\square \)
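As a numerical sanity check of (I), the following Python sketch samples random pairs and compares \(\Vert F(t,u,v)-F(t,\bar u,\bar v)\Vert \) with \(L(t)\Vert (u,v)-(\bar u,\bar v)\Vert \) for a convex quadratic \(g\). The quadratic, the dimension \(m=4\), the values \(\alpha=3\), \(\beta=-0.5\), \(\gamma=1\) and \(t_0=1\) are hypothetical choices made only for this illustration, and the Euclidean norm is used on \(\mathbb {R}^m\times \mathbb {R}^m\).

```python
import numpy as np

# Hypothetical test problem: g(x) = 0.5 * x^T A x with A symmetric positive
# semidefinite, so grad g(x) = A x is L_g-Lipschitz with L_g = ||A||_2.
rng = np.random.default_rng(0)
m = 4
M = rng.standard_normal((m, m))
A = M.T @ M
L_g = np.linalg.norm(A, 2)           # largest singular value of A
alpha, beta, gamma = 3.0, -0.5, 1.0  # illustrative values only
t0 = 1.0                             # assumed initial time


def F(t, u, v):
    """F(t, u, v) from the first-order reformulation, stacked into one vector."""
    w = u + (gamma + beta / t) * v
    return np.concatenate([v, -(alpha / t) * v - A @ w])


def L(t):
    """The constant L(t) introduced in the proof of (I)."""
    return np.sqrt(1 + 4 * L_g**2 + 2 * (alpha / t) ** 2
                   + 4 * L_g**2 * (gamma + beta / t) ** 2)


worst_ratio = 0.0
for _ in range(10_000):
    t = rng.uniform(t0, 50.0)
    u, v, u_bar, v_bar = rng.standard_normal((4, m))
    lhs = np.linalg.norm(F(t, u, v) - F(t, u_bar, v_bar))
    rhs = L(t) * np.linalg.norm(np.concatenate([u - u_bar, v - v_bar]))
    worst_ratio = max(worst_ratio, lhs / rhs)

print(f"largest observed ||F(X)-F(Y)|| / (L(t) ||X-Y||): {worst_ratio:.3f}")
```

By the estimate in the proof, the printed ratio should never exceed 1; random sampling of course only illustrates the bound and does not replace the proof.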

Remark 26

Note that the convexity assumption imposed on g was not used in the proof of Theorem 9. However, we emphasize that, in the proof of Theorem 9, the Lipschitz continuity of \(\nabla g\) is essential in order to obtain the existence and uniqueness of the trajectories generated by the dynamical system (6).

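Next we present the complete proof of Proposition 10. For simplicity, in what follows we employ the 1-norm on \(\mathbb {R}^m \times \mathbb {R}^m\), defined as \(\Vert (x_1, x_2) \Vert _{1} = \Vert x_1 \Vert + \Vert x_2 \Vert \) for all \(x_1,x_2 \in \mathbb {R}^m\). Obviously one has \(\Vert (x_1,x_2)\Vert \le \Vert (x_1,x_2)\Vert _1\le \sqrt{2}\,\Vert (x_1,x_2)\Vert \) for all \(x_1,x_2\in \mathbb {R}^m\), where \(\Vert \cdot \Vert \) denotes the Euclidean norm on \(\mathbb {R}^m\times \mathbb {R}^m\).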

Proof (of Proposition 10).

We show that \(\dot{X}(t) = (\dot{x}(t), \ddot{x}(t))\) is locally absolutely continuous, hence \(\ddot{x}\) is also locally absolutely continuous. This implies by Remark 8 that \(x^{(3)}\) exists almost everywhere on \([t_0, + \infty )\).

Let \(T > t_0\) and \(s,t \in [t_0, T]\). We have the following chain of inequalities:

and by using the \(L_g\)-Lipschitz continuity of \({\nabla }g\) we obtain

Further, let us introduce the following additional notations:

Then, one has

Since x is the strong global solution of the dynamical system (6), x and \(\dot{x}\) are absolutely continuous on the interval \([t_0,T]\). Moreover, the function \(t \longrightarrow \dfrac{1}{t}\) belongs to \(C^1([t_0, T], \mathbb {R})\), hence it is also absolutely continuous on \([t_0,T]\). Let \(\varepsilon > 0\). Then there exists \(\eta > 0\) such that for every finite family of intervals \(I_k = (a_k,b_k) \subseteq [t_0,T]\) satisfying \(I_k \cap I_j = \emptyset \) for \(k\ne j\) and \(\sum \limits _{k} |b_k-a_k| < \eta \), we have that

Summing all up, we obtain

Consequently, \(\dot{X}\) is absolutely continuous on \([t_0,T]\), and the conclusion follows. \(\square \)

Concerning an upper bound for the third order derivative \(x^{(3)}\), the following result holds.

Lemma 27

For the initial values \((u_0,v_0) \in \mathbb {R}^m \times \mathbb {R}^m\), let x be the unique strong global solution of the second order dynamical system (6). Then there exists \(K> 0\) such that for almost every \(t \in [t_0, +\infty )\) we have:

Proof

For \(h > 0\) we have the following inequalities:

Now, dividing by \(h > 0\) and taking the limit \({h \longrightarrow 0}\), it follows that

Consequently,

Finally,

where \(K=\max \left\{ \max _{t\ge t_0}\left( L_g + \dfrac{\alpha +L_g|\beta |}{t^2}\right) ,\max _{t\ge t_0}\left( \frac{\alpha }{t} + L_g \left| \gamma + \dfrac{\beta }{t} \right| \right) \right\} \). \(\square \)
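The constant \(K\) can be motivated by a formal computation, sketched below under the additional (hypothetical) assumption that \(\nabla g\) is differentiable along the trajectory, so that the chain rule applies and \(\Vert \nabla ^2 g\Vert \le L_g\); the proof above avoids this assumption by working with difference quotients. Differentiating (6), written in the form encoded by \(F\), yields a bound of the form \(\Vert x^{(3)}(t)\Vert \le K(\Vert \dot{x}(t)\Vert +\Vert \ddot{x}(t)\Vert )\), which is consistent with the two terms in the definition of \(K\):

```latex
\begin{aligned}
\ddot x(t) &= -\tfrac{\alpha}{t}\dot x(t)
  - \nabla g\Big(x(t)+\big(\gamma+\tfrac{\beta}{t}\big)\dot x(t)\Big),\\
\big\|x^{(3)}(t)\big\| &\le \tfrac{\alpha}{t^2}\|\dot x(t)\| + \tfrac{\alpha}{t}\|\ddot x(t)\|
  + L_g\Big\|\big(1-\tfrac{\beta}{t^2}\big)\dot x(t)+\big(\gamma+\tfrac{\beta}{t}\big)\ddot x(t)\Big\|\\
&\le \Big(L_g+\tfrac{\alpha+L_g|\beta|}{t^2}\Big)\|\dot x(t)\|
  + \Big(\tfrac{\alpha}{t}+L_g\big|\gamma+\tfrac{\beta}{t}\big|\Big)\|\ddot x(t)\|\\
&\le K\big(\|\dot x(t)\|+\|\ddot x(t)\|\big).
\end{aligned}
```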

B. On the Derivative of the Energy Functional (13)

In what follows we derive the conditions which must be imposed on the positive functions a(t), b(t), c(t), d(t) in order to obtain \(\dot{\mathcal {E}}(t)\le 0\) for every \(t\ge t_1.\) We have,

Now, from (6) we get \(\ddot{x}(t)=-\alpha (t)\dot{x}(t)-{\nabla }g(x(t)+\beta (t)\dot{x}(t)),\) hence

Consequently,

But

and by the convexity of g we have \(\langle {\nabla }g(x(t)+\beta (t)\dot{x}(t)),x(t)+\beta (t)\dot{x}(t)-x^*\rangle \ge g(x(t)+\beta (t)\dot{x}(t))-g^*,\) hence

Therefore, (39) becomes

From here one can easily observe that conditions (15)–(20) ensure that

Remark 28

Note that we did not use the specific form of the functions \(\alpha (t)\) and \(\beta (t)\) in the above computations. Consequently, the energy functional (13) may also be suitable for the following general system:

\[\begin{cases} \ddot{x}(t)+\alpha (t)\dot{x}(t)+{\nabla }g\left( x(t)+\beta (t)\dot{x}(t)\right) =0\\ x(t_0)=u_0,\quad \dot{x}(t_0)=v_0, \end{cases}\]

where \(u_0,v_0\in \mathbb {R}^m\) and \(\alpha ,\beta :[t_0,+\infty )\longrightarrow (0,+\infty ),\, t_0\ge 0\), are continuously differentiable functions.
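The general system differs from (6) only in that the coefficients \(\alpha/t\) and \(\gamma+\beta/t\) appearing in \(F\) are replaced by arbitrary functions \(\alpha(t)\) and \(\beta(t)\). A minimal Python sketch of its first-order right-hand side, built by the hypothetical helper `make_rhs`, shows that the choice \(\alpha(t)=\alpha/t\), \(\beta(t)=\gamma+\beta/t\) reproduces the map \(F\) from the proof of Theorem 9; the matrix `A`, the parameter values and the test points are illustrative assumptions.

```python
from typing import Callable, Tuple

import numpy as np

Vec = np.ndarray


def make_rhs(alpha_fn: Callable[[float], float],
             beta_fn: Callable[[float], float],
             grad_g: Callable[[Vec], Vec]) -> Callable[[float, Vec, Vec], Tuple[Vec, Vec]]:
    """First-order form (u, v) -> (v, -alpha(t) v - grad g(u + beta(t) v))
    of x'' + alpha(t) x' + grad g(x + beta(t) x') = 0 (hypothetical helper)."""
    def rhs(t: float, u: Vec, v: Vec) -> Tuple[Vec, Vec]:
        return v, -alpha_fn(t) * v - grad_g(u + beta_fn(t) * v)
    return rhs


# Illustrative data (assumptions, not taken from the paper).
A = np.array([[2.0, 0.5], [0.5, 1.0]])
alpha, beta, gamma = 3.0, 0.5, 1.0


def grad_g(x: Vec) -> Vec:
    return A @ x


# System (6) corresponds to alpha(t) = alpha / t and beta(t) = gamma + beta / t.
rhs_general = make_rhs(lambda t: alpha / t, lambda t: gamma + beta / t, grad_g)


def rhs_system6(t: float, u: Vec, v: Vec) -> Tuple[Vec, Vec]:
    """F(t, u, v) from the proof of Theorem 9."""
    return v, -(alpha / t) * v - grad_g(u + (gamma + beta / t) * v)


u, v = np.array([1.0, -2.0]), np.array([0.3, 0.7])
for t in (1.0, 2.5, 10.0):
    for a, b in zip(rhs_general(t, u, v), rhs_system6(t, u, v)):
        assert np.allclose(a, b)
```

Passing the coefficients as callables keeps the right-hand side independent of their particular form, which mirrors the observation that the computations above did not use the form of \(\alpha(t)\) and \(\beta(t)\).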

About this article

Cite this article

Alecsa, C.D., László, S.C. & Pinţa, T. An Extension of the Second Order Dynamical System that Models Nesterov’s Convex Gradient Method. Appl Math Optim 84, 1687–1716 (2021). https://doi.org/10.1007/s00245-020-09692-1

Keywords

- Convex optimization

- Heavy ball method

- Continuous second order dynamical system

- Convergence rate

- Inertial algorithm