Examples of Gibsonian Affordances in Legged Robotics Research Using an Empirical, Generative Framework

- ¹Department of Electrical and Systems Engineering, University of Pennsylvania, Philadelphia, PA, United States

- ²Department of Philosophy, University of Pennsylvania, Philadelphia, PA, United States

Evidence from empirical literature suggests that explainable complex behaviors can be built from structured compositions of explainable component behaviors with known properties. Such component behaviors can be built to directly perceive and exploit affordances. Using six examples of recent research in legged robot locomotion, we suggest that robots can be programmed to effectively exploit affordances without developing explicit internal models of them. We use a generative framework to discuss the examples because it helps us to separate the description of affordance exploitation from the description of the internal representations used by the robot in that exploitation, and thus to clarify the relationship between the two. Under this framework, details of the architecture and environment are related to the emergent behavior of the system via a generative explanation. For example, the specific method of information processing a robot uses might be related to the affordance the robot is designed to exploit via a formal analysis of its control policy. By considering the mutuality of the agent-environment system during robot behavior design, roboticists can thus develop robust architectures which implicitly exploit affordances. The manner of this exploitation is made explicit by a well-constructed generative explanation.

1. Introduction

Gibson (1979) describes an affordance as a perceptually reliable feature of the environment that presents an agent with an opportunity for purposeful action. Autonomous robotics research can then be conceived as the process of designing a robot to systematically exploit the available opportunities for action in order to accomplish a specified overall task or tasks. In a complex, uncertain, and changing world, it is not obvious what design strategies will be most effective. It is therefore of general interest to ask how the explicit study of affordances might facilitate robotics research, and what the empirical study of affordances involves.

Ecological psychologists and philosophers have in general argued that perception and exploitation of affordances need not require the construction and manipulation of complex internal representations. Here, a “representation” refers to a model used in the control policy. For example, while a reactive controller could be argued to in some way represent the problem it is designed to solve, the system itself only senses and represents the variables used for feedback control. Beginning with the observation that agents have bodies (Chiel and Beer, 1997; Shapiro, 2011) and environments (Clark and Chalmers, 1998; Wilson and Golonka, 2013), and considering affordances to be properties of the embodied agent-environment system (Stoffregen, 2003; Chemero and Turvey, 2007), the argument is that the agent already has all of the action-relevant properties of the world available to it. It thus does not need complex internal representations, either of the world or of the affordances it exploits.

Others, even those sympathetic to the cause of embodied cognition (Clark, 1997, 1998), have argued that complex representations are necessary for basic cognitive skills. It is elementary that some representation of internal state is necessary to achieve feedback stabilization of many control systems, and more comprehensive analyses suggest that robustness to complex perturbations in a complex world requires an agent to have complex internal representations of the environment and the perturbations (Conant and Ross Ashby, 1970; Francis and Wonham, 1976; Wonham, 1976). The role of internal models for limbs and their environmental loading in animal motor activity is accepted by many neuroscientists (Kawato, 1999). Deep learning (LeCun et al., 2015; Goodfellow et al., 2016) offers a modern version of this argument: The complexity of the problem a neural net can solve is in many ways limited by the size of the net and the amount of data, and thus by the complexity of the model the net can build.

However, taking an ecological view, it may instead be the case that the agent needs only representations of “essential variables” which describe the relevant features of the activity the agent is involved in (Fultot et al., 2019). Representations using only these essential variables may be of much lower dimensionality than representations of the full system. Seemingly complex behaviors can then be built from structured compositions of such simpler controllers operating on the variables essential to their component behavior. The behavior resulting from such compositions of simpler controllers has the added benefit of increased explainability (Cohen, 1995; Samek et al., 2017; Gunning and Aha, 2019) since the expected behavior of the component parts is known and, when properly conceived, their composition follows formal mathematical properties (Burridge et al., 1999; De and Koditschek, 2015).

Legged locomotion is one example of such a behavior. Robots are able to locomote using strongly feedforward control methods, such as central pattern generators (Saranli et al., 2001; Ijspeert et al., 2007; Ijspeert, 2008), as well as more distributed control (Cruse et al., 1998; Owaki et al., 2013; Owaki and Ishiguro, 2017; De and Koditschek, 2018), and many roboticists employ combinations thereof (Espenschied et al., 1996; Merel et al., 2019). Legged animals appear to pragmatically combine feedforward (Grillner, 1985; Whelan, 1996; Golubitsky et al., 1999; Minassian et al., 2017) and feedback (Pearson, 1995, 2004; Steuer and Guertin, 2019) controllers, implemented physically in both the mechanics of the body and in the nervous system (Cruse et al., 1995, 2006; Jindrich and Full, 2002; Sponberg and Full, 2008).

Research on insect navigation provides some support for the potential robustness and flexibility of coordinated systems of simple controllers, even for behaviors that had been previously believed to require cognitive maps (Tolman, 1948). Despite compelling evidence of complex cognition in invertebrate species (Jacobs and Menzel, 2014), recent studies of insect navigation (review: Wystrach and Graham, 2012) suggest that insects integrate information from multiple simple navigation systems (Hoinville and Wehner, 2018). These could include a path integration system (Wehner and Srinivasan, 2003); visual cues, with motivation controlling the switch between relevant cues (Cruse and Wehner, 2011); reactive collision avoidance (Bertrand et al., 2015); and a highly conserved systemic search mechanism when other mechanisms fail (Cheng et al., 2014). Each of these simple mechanisms for navigation operates in a relatively decentralized manner and requires little internal representation. Properly coordinated, together they are sufficient to produce robust navigation.

The literature on vertebrate navigation suggests that even animals which do seem to build complex spatial maps of their environments for navigation using special neural structures (O'Keefe and Burgess, 1996; Hafting et al., 2005; Savelli and Knierim, 2019) still rely on the coordination of multiple navigation systems (Moser et al., 2008)—perhaps one solving the problem of local navigation with landmarks, and the other providing directional bearing (Jacobs and Menzel, 2014). It has even been suggested that primates navigate available affordances and choose between them in virtue of neurally implemented competing feedback controllers (Cisek, 2007; Pezzulo and Cisek, 2016).

However, the robotics research often considered to exemplify the application of ecological concepts like affordances to robotics is generally either oriented toward building representations of affordances (Şahin et al., 2007; Zech et al., 2017; Andries et al., 2018; Hassanin et al., 2018) or toward biomimetic implementations of specific animal behaviors or capabilities (Beer, 1997; Cruse et al., 1998; Webb and Consilvio, 2001; Ijspeert, 2014) which then act as models for testing biological hypotheses (Webb, 2001, 2006). When non-biomimetic robotics research oriented toward coordinating simple controllers is considered (e.g., Braitenberg, 1986; Brooks, 1986; Raibert, 1986; Arkin, 1998), it is inevitably decades old. This motivates a reconsideration of how affordances are—and can be—studied in robotics, one that examines how simpler component processes and behaviors might be coordinated to explainably produce more complex and robust affordance exploitation.

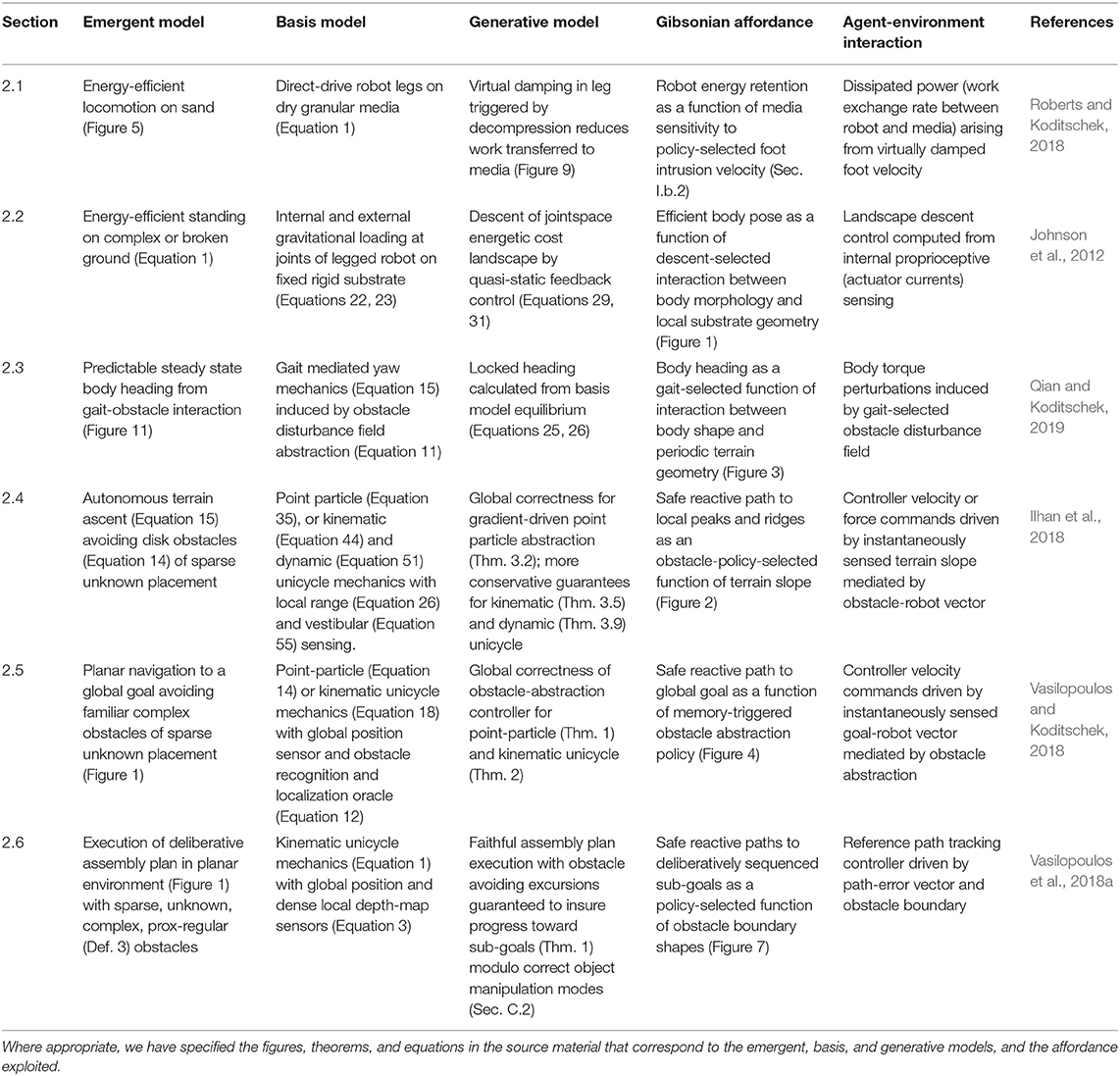

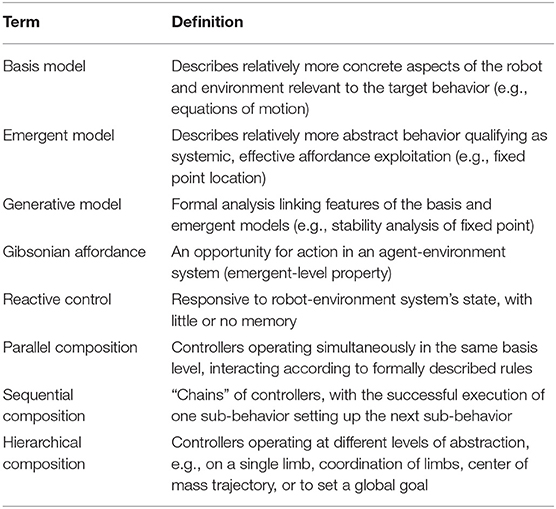

We find Miracchi's (2017, 2019) generative framework useful for this discussion. The generative framework is designed to separate descriptions of a target behavior (in our case affordance exploitation) from commitments about the details of robot morphology, programming, or environment necessary to accomplish the task. The framework consists of three parts. The basis model describes aspects of the robot, including its methods of information processing, and relevant properties of the environment. The emergent model specifies the target behaviors in the relevant contexts. These behaviors operate at a larger spatiotemporal scale than those of the basis model, characterizing more global patterns and abstracting away from the details of implementation. Emergent behaviors are thus likely not to be obvious consequences of the basis model. The generative model specifies how features of the basis model determine (“generate”) features of the emergent model, thus explaining the emergent behavior in terms of the implementation details at the basis level (see Table 1 and footnote 1).

Table 1. Definitions of terms used to describe the case studies under the generative framework. An example application to a simple reactive controller represented with a dynamical system is provided in parentheses.

We can demonstrate this separation of the basis and emergent models with an example application to two types of feedback controller. Say we have a proportional-derivative (PD) controller on the speed of a steam engine that operates by powering a motor to open or close a valve based on a reading given by an electronic velocity sensor such as an inertial measurement unit (IMU). The emergent model would then be the stable operation of the engine at the set point speed. The basis model would then include the engine dynamics, the way it is influenced by powering the valve, and the properties of the velocity sensor. Formal analysis on the stability of the PD controller would constitute the generative model. With properly set gains and an assumption that the sensor is within a certain percentage error, the controller would be guaranteed to bring the engine to the set speed. Now say the speed controller used a Watt governor instead (Van Gelder, 1995), which raises and lowers the arms controlling the amount of steam allowed through the valve using centrifugal forces determined by the speed of the prime mover. The emergent model would be the same, but the basis model would substitute the governor's mechanical equations of motion for the electronic signal processing model of the IMU. Similarly, we can consider what representations are sufficient to effectively exploit the target affordance specified by an emergent model.
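The electronic version of this example can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a model taken from the cited work: the first-order engine dynamics, the parameter names `drag` and `valve_gain`, the gains `kp` and `kd`, and the choice to let the PD output set the rate at which the motor moves the valve are all hypothetical. Sensor error is ignored.

```python
def simulate_pd_speed_control(set_speed, kp=4.0, kd=1.0, drag=1.0,
                              valve_gain=2.0, dt=0.001, duration=20.0):
    """Toy basis model: engine speed regulated by a PD-driven valve motor.

    Assumed dynamics (hypothetical, for illustration only):
        d(speed)/dt = -drag * speed + valve_gain * valve
        d(valve)/dt = kp * error + kd * d(error)/dt
    where error = set_speed - speed.
    """
    speed, valve = 0.0, 0.0
    history = []
    for _ in range(round(duration / dt)):
        speed_rate = -drag * speed + valve_gain * valve
        error = set_speed - speed
        error_rate = -speed_rate  # the set point is constant
        valve_rate = kp * error + kd * error_rate
        # Forward-Euler integration of the coupled engine-valve system.
        speed += dt * speed_rate
        valve += dt * valve_rate
        history.append(speed)
    return history


speeds = simulate_pd_speed_control(set_speed=10.0)
print(f"final speed: {speeds[-1]:.3f}")
```

In this sketch the simulated dynamics play the role of the basis model, and convergence of `speeds` to `set_speed` is the emergent model. The generative model would be the stability argument for the assumed linear system, which here holds for any positive gains. A Watt governor would replace the `error` and `valve_rate` lines with mechanical equations of motion while leaving the target behavior unchanged.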

We apply this framework to six case studies of legged locomotion involving reactive controllers in order to demonstrate how these projects can be usefully conceptualized as designing robots to exploit affordances without complex internal representations. We choose work that uses reactive controllers to generate affordance exploitation, not only because they are often referenced in research on affordances in animals (see above), but also because robotics research has long demonstrated their utility. Reactive controllers respond to the state of the robot-environment system with little or no memory; are robust; typically require at most modest explicit internal world models; can often be formally analyzed with tools from dynamical systems theory; and, when correctly designed and implemented, must indefatigably steer the coupled robot-environment system toward an appropriate goal. Such controllers can also be composed into more complex systems (Brooks, 1986; Arkin, 1998), though it is vital to the explainability of the emergent behavior that these compositions follow formal mathematical rules (e.g., Burridge et al., 1999). Compositions can be made in parallel (Raibert, 1986; De and Koditschek, 2015, 2018), in sequence (Lozano-Perez et al., 1984; Burridge et al., 1999; Burke et al., 2019a,b), and hierarchically (Full and Koditschek, 1999), which requires proper coordination of more primitive subcomponents whose isolated behavior and interactions are both mathematically understood. These interactions generate the required emergent behavior (Vasilopoulos et al., 2018a,b) (see Table 1).

The case studies are drawn from one research group that typically provides formal generative explanations, easing the application of the generative framework. Focusing on one group also enabled us to quickly and deeply examine the ways in which systems can be designed to exploit affordances at multiple levels of abstraction from implementation details without introducing many different kinds of research problems, and to discuss each project with the primary and senior authors to ensure that the researchers agreed with this characterization of their work. We anticipate that similar analysis would be interesting to apply to a variety of other robotics research programs (e.g., Hatton and Choset, 2010; Hogan and Sternad, 2013; Majumdar and Tedrake, 2017; Burke et al., 2019a,b).

2. Case Studies

The six examples in this section are arranged in order of abstraction from the physics governing the robot's limb-ground interactions. We will mention the roles of parallel, sequential, and hierarchical compositions of controllers where appropriate. A concise, technical summary is provided in Table 2. Readers interested in the more detailed analysis leading to the summarized formal conclusions can refer to the specific equations, theorems, and figures in the original research papers which are noted in the table. The emergent and basis models (EM, BM) refer to the target behavior and implementation details of the paper cited in the last column. The generative model (GM) describes the formal analytical link, a mathematical explanation of how the target behavior emerges from the basis model (Table 1). The Gibsonian affordance (GA) describes in functional terms the designers' selected opportunity for action provided by the interaction of the robot's physical implementation with its environment, and the agent-environment interaction (AEI) specifies the perceptually reliable information or energetic exchange between the robot and the environment that makes this affordance possible to exploit.

2.1. Energetic Cost of Running on Sand

Roberts and Koditschek (2018, 2019) develop a reactive controller to reduce the cost of transport (EM) for a direct-drive robot with programmable compliance in its legs (Kenneally et al., 2016) locomoting on granular media (BM) using a combination of simulation and physical emulation experiments. Robust locomotion can be accomplished by a composition of decoupled controllers which individually affect vertical motion and the robot's directional velocity (Raibert, 1986).

When a robot pushes off of a compliant substrate like sand (Aguilar and Goldman, 2016), its foot, which has a smaller mass than the body, can quickly penetrate deep into the sand before the body begins to move up. Because the dissipation function of the sand is quadratic in velocity, the robot can transfer, and thus lose, a large amount of energy from its motors through its leg and foot to the ground while pushing off (GA). The energy wasted in transfer to the ground is significantly reduced by adding damping to the robot's leg “spring” in proportion to the vertical foot intrusion velocity (GM).
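One possible reading of this damping rule is a virtual leg spring whose commanded force is reduced by a term proportional to how fast the foot is sinking. The sketch below is only that reading, not the controller of Roberts and Koditschek. The function name, the sign convention (downward intrusion velocity is positive), and the values of `stiffness` and `intrusion_damping` are assumptions.

```python
def leg_force(leg_compression, foot_intrusion_velocity,
              stiffness=2000.0, intrusion_damping=50.0):
    """Commanded leg force: virtual spring minus intrusion-velocity damping.

    leg_compression: virtual spring compression in meters (assumed input).
    foot_intrusion_velocity: downward foot speed into the substrate in m/s,
        positive while the foot is sinking (assumed sign convention).
    """
    spring_force = stiffness * leg_compression
    # Damp only while the foot is actually penetrating the ground.
    damping_force = intrusion_damping * max(foot_intrusion_velocity, 0.0)
    return spring_force - damping_force


print(leg_force(0.05, 0.0))  # foot not sinking: spring force only
print(leg_force(0.05, 0.5))  # foot sinking: push-off force is reduced
```

The only measured quantity the rule consumes is the foot intrusion velocity, which is why no model of the substrate appears in the code.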

To implement Roberts and Koditschek's controller, the robot needs only the high-bandwidth information about its foot intrusion velocity provided by the direct-drive architecture and a measurement of the distance to the ground (AEI). It does not require an explicit internal representation of the ground (Hubicki et al., 2016).

2.2. Manipulating a Robot's Body Pose Using Its Limbs

Johnson et al. (2012) develop a controller that distributes effort between limbs of a six-legged robot standing on rigid, uneven terrain. Statically stable poses require the projected center of mass to lie within a polygon defined by the toes, which typically requires much more torque from some motors than others on uneven surfaces. Distributing effort between legs reduces the maximum torque requirement, lowering the overall energetic cost of standing and avoiding damage to the motors from overheating (EM).

To develop the controller, the authors build a “landscape” describing the energetic cost to stand as a function of body pose for a robot with a given legged morphology and a given toe placement (GA). They show formally that an effective descent direction toward a local minimum can be determined at every location on the landscape (GM) by the current draw from the motors, a direct measurement requiring no additional modeling (AEI).

Since the landscape can be expressed as the sum of costs due to the legs fighting each other in stance (internal forces) and the effort of the limbs to support the body mass (external forces), these two systems can be decoupled. The authors exploit this decomposition to implement the behavior on a physical six-legged robot as a parallel composition of two controllers: One to relax the internal forces by driving down the torque of legs operating in opposition to each other, and one to center the body mass over the toe polygon by driving down the body-averaged torques in parallel (BM).

2.3. Characterizing Interactions With Obstacles

Obstacles in a robot's environment could be used as opportunities for the robot to perturb its trajectory toward a desired direction. Qian and Koditschek (2019) walk a small robot with four legs and a fast-manufacturable body through a periodic obstacle field consisting of evenly spaced half-cylinders. By systematic experimentation, they observe the emergence of a yaw angle that locks the robot's steady-state trajectory over the obstacles in a manner relatively invariant to the robot's initial conditions upon entering the field (EM). This locked angle is an empirically stable function of body aspect ratio, the spacing between the obstacles, and the robot's gait (GA).

The authors develop an abstract representation of the effective yawing disturbance field resulting from the interaction between the robot's body aspect ratio and the spacing between the obstacles, which is a selectable consequence of gait (BM). The result is a dynamical model whose equilibrium states predict the resulting steady-state body yaw angle of the robot—and thus its steady-state locked heading (GM). The only feedback signals used by the robot are position and velocity measurements on the rotation of the legs, which are used for feedback control on the clock-driven position and velocity commands sent to the legs. These are sufficient to recruit the desired interaction between the body morphology and the environment structure, and the robot's heading stabilizes in absence of any body-level sensing (AEI).

2.4. Reactive Control on a Global Scale

Ilhan et al. (2018) develop a controller that drives a robot toward the locally most elevated position from any start location in a gentle hillslope environment punctured by tree-like, disk-shaped obstacles (EM). They test their controller on a physical six-legged robot walking on unstructured, forested hillslopes, using the top of the hillslope as the goal location. The robot uses an inertial measurement unit to acquire the local gradient, and a laser range finder to detect obstacles which are likely to be insurmountable (AEI).

A reactive “navigation”-level controller takes information about the local gradient and the presence of local obstacles, and produces a summed vector indicating the direction that increases the robot's elevation while avoiding a collision (GA). The coordination of the limbs to execute these commands is handled by a lower-level controller in a hierarchical composition.
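The idea of a summed direction vector can be illustrated with a generic potential-field-style rule. This is a hedged sketch rather than the control law of Ilhan et al. (2018), and it carries none of their formal guarantees. The function name, the repulsion weighting, and the parameters `sensing_range` and `repulsion_gain` are assumptions.

```python
import math


def climb_direction(gradient, obstacle_offsets,
                    sensing_range=2.0, repulsion_gain=1.0):
    """Unit heading that sums an uphill term and obstacle-repulsion terms.

    gradient: (gx, gy) local slope estimate, e.g., derived from an IMU.
    obstacle_offsets: (dx, dy) vectors from the robot to detected obstacles,
        e.g., derived from a laser range finder.
    """
    gx, gy = gradient
    norm = math.hypot(gx, gy)
    vx, vy = (gx / norm, gy / norm) if norm > 0 else (0.0, 0.0)
    for dx, dy in obstacle_offsets:
        dist = math.hypot(dx, dy)
        if 0.0 < dist < sensing_range:
            # Push away from the obstacle, more strongly when it is close;
            # the weight falls to zero at the edge of the sensing range.
            weight = repulsion_gain * (1.0 / dist - 1.0 / sensing_range)
            vx -= weight * dx / dist
            vy -= weight * dy / dist
    total = math.hypot(vx, vy)
    return (vx / total, vy / total) if total > 0 else (0.0, 0.0)


# Uphill is +y; one obstacle sits ahead and slightly to the right.
heading = climb_direction((0.0, 3.0), [(0.5, 1.0)])
print(heading)
```

The rule uses only the current gradient and the currently sensed obstacles, with no map and no memory, which is what makes it reactive in the sense used here.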

The authors present multiple options of such compositions that assume different degrees of actuation authority for the lower-level controller. The strongest conclusions from formal analysis (global stability; GM-1) come from assuming the robot can be treated as a fully velocity-controlled two degree-of-freedom point particle (BM-1). More realistic models of outdoor mobility, which have more conservative guarantees (GM-2), assume that the robot can be treated as a non-holonomically constrained, velocity-controlled unicycle, or—when running at speed—a force-controlled unicycle.
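The difference between the first two actuation assumptions can be made concrete with a short sketch. The point particle can move along any commanded direction immediately, whereas the kinematic unicycle can only drive along its current heading and must turn to follow the command. The steering rule in `unicycle_step` (turn rate proportional to heading error, forward speed scaled by alignment) and the parameter `turn_gain` are illustrative assumptions, not the controllers analyzed in the cited paper.

```python
import math


def point_particle_step(position, direction, speed, dt):
    """Fully velocity-controlled point: moves directly along `direction`."""
    x, y = position
    return (x + dt * speed * direction[0], y + dt * speed * direction[1])


def unicycle_step(pose, direction, speed, turn_gain, dt):
    """Kinematic unicycle: drives along its heading and turns toward `direction`."""
    x, y, theta = pose
    desired = math.atan2(direction[1], direction[0])
    # Wrap the heading error into (-pi, pi].
    err = math.atan2(math.sin(desired - theta), math.cos(desired - theta))
    v = speed * max(math.cos(err), 0.0)  # slow down when badly misaligned
    omega = turn_gain * err
    return (x + dt * v * math.cos(theta),
            y + dt * v * math.sin(theta),
            theta + dt * omega)


# The unicycle starts facing +x and is commanded to head in +y.
pose = (0.0, 0.0, 0.0)
for _ in range(1000):
    pose = unicycle_step(pose, (0.0, 1.0), speed=1.0, turn_gain=2.0, dt=0.01)
print(pose)
```

The extra turning transient in the unicycle case is one intuition for why the guarantees available under the more realistic models are more conservative.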

2.5. Using Recognition of Complex Obstacles to Create Abstract Spaces Conditioned for Reactive Control Schemes

In contrast to the previous case study, which had a perceptually detected environmental feature as the goal location and a purely reactive control scheme, Vasilopoulos and Koditschek (2018) develop a controller that governs navigation in an environment with perceptually intricate obstacles toward an arbitrary, user-selected goal (EM). Obstacles may be highly complex, but if non-convex or densely packed then they are expected to be “familiar.” The robot is assumed to have access to its global position and to an oracle with a catalog of non-convex obstacles on which it was previously trained (Pavlakos et al., 2017) (BM). When an obstacle enters the robot's sensory footprint at execution time, the obstacle can thus be instantaneously recognized and localized. Unrecognized obstacles are presumed to be convex and suitably sparse.

Once recognized, non-convex obstacles are abstracted to a generic round shape using a smooth change of coordinates, and if densely packed, may be conglomerated into one large obstacle. The result is a geometrically simple, abstracted space. A purely reactive navigation controller (Arslan and Koditschek, 2019) closes the loop to guarantee obstacle-free convergence to the goal location within the geometrically simplified but topologically equivalent environment (GA). Actuation commands for the geometrically detailed physical environment with non-convex obstacles are obtained by pushing forward the abstracted navigation commands through this change of coordinates (AEI). The authors perform a formal analysis of the overall dynamical system describing the closed-loop controller navigating reactively through the abstracted space as it is updated by the robot's perception of new obstacles. Proofs of correctness (GM-1, GM-2) are provided for two actuation schemes: A fully actuated point particle robot (BM-1), and a kinematic unicycle (BM-2). In a recent extension (Vasilopoulos et al., under review), the composed controller is implemented on a physical legged robot, with the unicycle commands interpreted by a lower-level controller for the robot's gait in a hierarchical composition.
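The step of mapping a command computed in the abstracted space back to the physical space can be sketched for a fully actuated point robot. If h maps physical coordinates to abstracted coordinates, a velocity u chosen in the abstracted space corresponds to the physical velocity Dh(x)⁻¹u, where Dh is the Jacobian of h. The map `to_model_space` below is a deliberately simple, hypothetical stand-in (a scaling that turns an ellipse into a unit disk), not the change of coordinates constructed by the authors, and the finite-difference Jacobian is an illustrative shortcut.

```python
def to_model_space(point, semi_axes=(3.0, 1.0)):
    """Hypothetical change of coordinates h: an origin-centered ellipse
    with the given semi-axes is mapped to the unit disk."""
    a, b = semi_axes
    x, y = point
    return (x / a, y / b)


def jacobian(h, point, eps=1e-6):
    """2x2 Jacobian of h at `point` by central finite differences."""
    x, y = point
    hx_plus, hx_minus = h((x + eps, y)), h((x - eps, y))
    hy_plus, hy_minus = h((x, y + eps)), h((x, y - eps))
    return (
        ((hx_plus[0] - hx_minus[0]) / (2 * eps),
         (hy_plus[0] - hy_minus[0]) / (2 * eps)),
        ((hx_plus[1] - hx_minus[1]) / (2 * eps),
         (hy_plus[1] - hy_minus[1]) / (2 * eps)),
    )


def physical_command(h, point, model_command):
    """Solve Dh(point) * u_physical = u_model for the physical velocity."""
    (j11, j12), (j21, j22) = jacobian(h, point)
    det = j11 * j22 - j12 * j21
    if abs(det) < 1e-12:
        raise ValueError("change of coordinates is singular at this point")
    ux, uy = model_command
    return ((j22 * ux - j12 * uy) / det, (-j21 * ux + j11 * uy) / det)


# A velocity chosen by a reactive controller in the abstracted space
# is converted into a velocity for the physical robot.
print(physical_command(to_model_space, (4.0, 0.5), (1.0, 1.0)))
```

Because the reactive controller only ever sees the abstracted space, it can stay simple while the geometric detail of the obstacles is carried entirely by the change of coordinates.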

The contribution of this project may at first seem to be at odds with our interpretation of affordances, but we suggest that this project demonstrates how two very different methods can usefully complement one another. The problems of obstacle perception and navigation can be separated by the judicious use of a previously learned library of objects. This separation reduces the navigation problem to one which can be solved with reactive navigation control, about which formal guarantees can be provided. Methods like deep learning can then be used to produce the necessary library of objects. A careful composition of the navigation capability provided by the reactive controller and object recognition capabilities provided by the learning methods then produces the emergent behavior described in this project.

2.6. Layering Deliberative and Reactive Controllers

Vasilopoulos et al. (2018b) build on the previously described navigation system to develop a deliberative (offline) planning and reactive (online) control architecture which enables a robot to rearrange multiple objects in its imperfectly known environment. During execution of each deliberatively sequenced sub-goal, the combined controller produces reactive commands (GA) as a function of the recognized obstacle's boundary shape. Theoretical work (Vasilopoulos et al., 2018b) assumes a robot constrained to move as a kinematic unicycle, with a globally known position, and an omnidirectional LIDAR producing a dense local depth map (BM). Physical experiments (Vasilopoulos et al., 2018a) are performed with a four-legged direct-drive robot, with a hierarchically arranged composition of controllers coordinating the robot's legs to produce the kinematic unicycle-like behavior. The emergent model of the velocity-controlled unicycle is generated by a subcomponent basis model consisting of properly coordinated parallel and sequential compositions of hybrid Lagrangian stance dynamics (Topping et al., 2019). The velocity-controlled unicycle then becomes the basis model of the reactive controller in this project, producing the whole-robot behavior.

Formal generative analysis (GM) in these papers addresses only the interaction between the deliberative planner and the reactive controller. The former breaks down the task of moving multiple objects to multiple goals into an ordered set of subtasks assigned to the latter. The reactive controller, which is endowed with the same oracle as the previous case study, is able to drive the robot around unanticipated obstacles (AEI) as needed in order to execute subtasks as they are assigned by the deliberative symbolic controller. The robot is then able to grab and move each object toward its planned subgoal location (EM).

The use of a reactive layer to handle obstacle interactions significantly simplifies the control problem, and allows the authors to provide formal guarantees about the conditions under which this combined controller should be expected to succeed. The offline, symbolic deliberative layer effectively solves the abstracted task planning problem. High-level commands from this layer drive the reactive manipulation and navigation layer, which can use realtime signal processing and control to readily handle unexpected geometric and topological complexities which would seriously challenge a symbolic planner.

3. Discussion

A major contribution of engineering to the understanding of affordances more generally lies in the formal methods used to describe the generative relationships between the implementation details and the desired behavior. We hope to encourage cross-disciplinary interest in projects using these methods, and we find that the clear separation of the specified target behavior from the implementation details provided by a generative framework is helpful for discussion. We suggest that the use of affordances in robotics research need not include the development of computational models of those affordances for robots to identify and thus exploit. Instead, consideration of the mutuality of the agent-environment system during robot behavior design can be used to develop robust and explainable architectures which implicitly exploit affordances. Roboticists can and do use systematic, empirical practice to apply Gibson's philosophy of affordances, just without naming them.

Considerations of engineering design and the practicability of abstraction from the environment at different levels of planning and control can determine the mix of endowed prior knowledge, representation building, and sensory dependence. For example, with the last case study, we suggest that methodological commitment to use only reactive controllers (as by, e.g., Brooks, 1986, 1991) distracts from the potential benefits of combining a focus on affordances during robot behavior design with a cautionary approach to internal representations (see footnote 2). If effort need not be spent to create representations that are useful for the robot to perform its basic behaviors like locomotion and navigation, then the effort can be spent to create useful representations for tasks which do require them, such as to enable better communication between collaborating robots and humans. For example, a team of robots tasked with helping geomorphologists study erosion in the desert might build a map of the ground stiffness in different locations (Qian et al., 2017). Such a map would be useful even if the robots are able to navigate and locomote completely with reactive control, which allows each robot to continue functioning normally even when it loses signal connection to team members, damages an end effector, or experiences a sensor glitch. Why not reserve the difficult task of building good representations for behaviors that require them, and use affordance-based reactive control for behaviors that don't?

Author Contributions

SR and LM linked the generative framework developed by LM to the literature on embodied cognition and artificial intelligence. SR and DK linked the embodied cognition and control theory literatures. SR, LM, and DK applied the framework to the case studies. SR worked with primary authors to ensure that their approaches and results were appropriately characterized.

Funding

This work was supported in part by NSF NRI-2.0 grant 1734355 and in part by ONR grant N00014-16-1-2817, a Vannevar Bush Fellowship held by DK, sponsored by the Basic Research Office of the Assistant Secretary of Defense for Research and Engineering.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We are grateful to the primary authors of the reviewed projects: Aaron Johnson, Feifei Qian, Deniz Ilhan, and Vasileios Vasilopoulos. We also thank Diedra Krieger for administrative support.

Footnotes

1. ^Miracchi (2019) uses the term “agent” model because of her focus on agency and intentionality. Here we use the term “emergent” both for increased generality and because we do not wish to take a stand on whether the target behaviors are agential in any important sense.

2. ^As formalized by Şahin et al. (2007), this is similar to taking the “observer” perspective rather than the “agent” perspective.

References

Aguilar, J., and Goldman, D. I. (2016). Robophysical study of jumping dynamics on granular media. Nat. Phys. 12, 278–283. doi: 10.1038/nphys3568

Andries, M., Chavez-Garcia, R. O., Chatila, R., Giusti, A., and Gambardella, L. M. (2018). Affordance equivalences in robotics: a formalism. Front. Neurorobot. 12:26. doi: 10.3389/fnbot.2018.00026

Arslan, O., and Koditschek, D. E. (2019). Sensor-based reactive navigation in unknown convex sphere worlds. Int. J. Robot. Res. 38, 196–223. doi: 10.1177/0278364918796267

Beer, R. D. (1997). The dynamics of adaptive behavior: a research program. Robot. Auton. Syst. 20, 257–289. doi: 10.1016/S0921-8890(96)00063-2

Bertrand, O. J., Lindemann, J. P., and Egelhaaf, M. (2015). A bio-inspired collision avoidance model based on spatial information derived from motion detectors leads to common routes. PLoS Comput. Biol. 11:e1004339. doi: 10.1371/journal.pcbi.1004339

Brooks, R. (1986). A robust layered control system for a mobile robot. IEEE J. Robot. Auto. 2, 14–23.

Brooks, R. A. (1991). Intelligence without representation. Art. Intell. 47, 139–159. doi: 10.1016/0004-3702(91)90053-M

Burke, M., Hristov, Y., and Ramamoorthy, S. (2019a). Hybrid system identification using switching density networks. arXiv [preprint]. arXiv:1907.04360.

Burke, M., Penkov, S., and Ramamoorthy, S. (2019b). From explanation to synthesis: Compositional program induction for learning from demonstration. arXiv [preprint]. arXiv:1902.10657. doi: 10.15607/RSS.2019.XV.015

Burridge, R. R., Rizzi, A. A., and Koditschek, D. E. (1999). Sequential composition of dynamically dexterous robot behaviors. Int. J. Robot. Res. 18, 534–555. doi: 10.1177/02783649922066385

Chemero, A., and Turvey, M. T. (2007). Gibsonian affordances for roboticists. Adapt. Behav. 15, 473–480. doi: 10.1177/1059712307085098

Cheng, K., Schultheiss, P., Schwarz, S., Wystrach, A., and Wehner, R. (2014). Beginnings of a synthetic approach to desert ant navigation. Behav. Process. 102, 51–61. doi: 10.1016/j.beproc.2013.10.001

Chiel, H. J., and Beer, R. D. (1997). The brain has a body: adaptive behavior emerges from interactions of nervous system, body and environment. Trends Neurosci. 20, 553–557.

Cisek, P. (2007). Cortical mechanisms of action selection: the affordance competition hypothesis. Philos. Trans. R. Soc. Lond. B Biol. Sci. 362, 1585–1599. doi: 10.1098/rstb.2007.2054

Clark, A. (1998). Being There: Putting Brain, Body, and World Together Again. Cambridge, MA: MIT Press.

Cohen, P. R. (1995). Empirical Methods for Artificial Intelligence, Vol. 139. Cambridge, MA: MIT Press.

Conant, R. C., and Ross Ashby, W. (1970). Every good regulator of a system must be a model of that system. Int. J. Syst. Sci. 1, 89–97.

Cruse, H., Bartling, C., Dreifert, M., Schmitz, J., Brunn, D., Dean, J., et al. (1995). Walking: a complex behavior controlled by simple networks. Adapt. Behav. 3, 385–418.

Cruse, H., Dürr, V., and Schmitz, J. (2006). Insect walking is based on a decentralized architecture revealing a simple and robust controller. Philos. Trans. A Math. Phys. Eng. Sci. 365, 221–250. doi: 10.1098/rsta.2006.1913

Cruse, H., Kindermann, T., Schumm, M., Dean, J., and Schmitz, J. (1998). Walknet–a biologically inspired network to control six-legged walking. Neural Netw. 11, 1435–1447.

Cruse, H., and Wehner, R. (2011). No need for a cognitive map: decentralized memory for insect navigation. PLoS Comput. Biol. 7:e1002009. doi: 10.1371/journal.pcbi.1002009

De, A., and Koditschek, D. (2015). “Parallel composition of templates for tail-energized planar hopping,” in 2015 IEEE International Conference on Robotics and Automation (ICRA) (Seattle, WA), 4562–4569.

De, A., and Koditschek, D. E. (2018). Vertical hopper compositions for preflexive and feedback-stabilized quadrupedal bounding, pacing, pronking, and trotting. Int. J. Robot. Res. 37, 743–778. doi: 10.1177/0278364918779874

Espenschied, K. S., Quinn, R. D., Beer, R. D., and Chiel, H. J. (1996). Biologically based distributed control and local reflexes improve rough terrain locomotion in a hexapod robot. Robot. Auton. Syst. 18, 59–64.

Francis, B. A., and Wonham, W. M. (1976). The internal model principle of control theory. Automatica 12, 457–465.

Full, R., and Koditschek, D. (1999). Templates and anchors: Neuromechanical hypotheses of legged locomotion on land. J. Exp. Biol. 202, 3325–3332.

Fultot, M., Frazier, P. A., Turvey, M., and Carello, C. (2019). What are nervous systems for? Ecol. Psychol. 31, 218–234. doi: 10.1080/10407413.2019.1615205

Golubitsky, M., Stewart, I., Buono, P.-L., and Collins, J. (1999). Symmetry in locomotor central pattern generators and animal gaits. Nature 401, 693–695.

Grillner, S. (1985). Neurobiological bases of rhythmic motor acts in vertebrates. Science 228, 143–149.

Gunning, D., and Aha, D. W. (2019). DARPA's explainable artificial intelligence program. AI Magaz. 40, 44–58.

Hafting, T., Fyhn, M., Molden, S., Moser, M.-B., and Moser, E. I. (2005). Microstructure of a spatial map in the entorhinal cortex. Nature 436, 801–806. doi: 10.1038/nature03721

Hassanin, M., Khan, S., and Tahtali, M. (2018). Visual affordance and function understanding: a survey. arXiv [preprint]. arXiv:1807.06775.

Hatton, R. L., and Choset, H. (2010). “Sidewinding on slopes,” in 2010 IEEE International Conference on Robotics and Automation (Anchorage, AK: IEEE), 691–696.

Hogan, N., and Sternad, D. (2013). Dynamic primitives in the control of locomotion. Front. Comput. Neurosci. 7:71. doi: 10.3389/fncom.2013.00071

Hoinville, T., and Wehner, R. (2018). Optimal multiguidance integration in insect navigation. Proc. Natl. Acad. Sci. U.S.A. 115, 2824–2829. doi: 10.1073/pnas.1721668115

Hubicki, C. M., Aguilar, J. J., Goldman, D. I., and Ames, A. D. (2016). “Tractable terrain-aware motion planning on granular media: an impulsive jumping study,” in 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (Stockholm: IEEE), 3887–3892.

Ijspeert, A. J. (2008). Central pattern generators for locomotion control in animals and robots: a review. Neural Netw. 21, 642–653. doi: 10.1016/j.neunet.2008.03.014

Ijspeert, A. J. (2014). Biorobotics: using robots to emulate and investigate agile locomotion. Science 346, 196–203. doi: 10.1126/science.1254486

Ijspeert, A. J., Crespi, A., Ryczko, D., and Cabelguen, J.-M. (2007). From swimming to walking with a salamander robot driven by a spinal cord model. Science 315, 1416–1420. doi: 10.1126/science.1138353

Ilhan, B. D., Johnson, A. M., and Koditschek, D. E. (2018). Autonomous legged hill ascent. J. Field Robot. 35, 802–832. doi: 10.1002/rob.21779

Jacobs, L. F., and Menzel, R. (2014). Navigation outside of the box: what the lab can learn from the field and what the field can learn from the lab. Move. Ecol. 2:3. doi: 10.1186/2051-3933-2-3

Jindrich, D. L., and Full, R. J. (2002). Dynamic stabilization of rapid hexapedal locomotion. J. Exp. Biol. 205, 2803–2823. Available online at: https://jeb.biologists.org/content/205/18/2803

Johnson, A. M., Haynes, G. C., and Koditschek, D. E. (2012). “Standing self-manipulation for a legged robot,” in 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems (Vilamoura: IEEE), 272–279.

Kawato, M. (1999). Internal models for motor control and trajectory planning. Curr. Opin. Neurobiol. 9, 718–727.

Kenneally, G., De, A., and Koditschek, D. E. (2016). Design principles for a family of direct-drive legged robots. IEEE Robot. Auto. Lett. 1, 900–907. doi: 10.1109/LRA.2016.2528294

LeCun, Y., Bengio, Y., and Hinton, G. (2015). Deep learning. Nature 521, 436–444. doi: 10.1038/nature14539

Lozano-Perez, T., Mason, M. T., and Taylor, R. H. (1984). Automatic synthesis of fine-motion strategies for robots. Int. J. Robot. Res. 3, 3–24.

Majumdar, A., and Tedrake, R. (2017). Funnel libraries for real-time robust feedback motion planning. Int. J. Robot. Res. 36, 947–982. doi: 10.1177/0278364917712421

Merel, J., Botvinick, M., and Wayne, G. (2019). Hierarchical motor control in mammals and machines. Nat. Commun. 10:5489. doi: 10.1038/s41467-019-13239-6

Minassian, K., Hofstoetter, U. S., Dzeladini, F., Guertin, P. A., and Ijspeert, A. (2017). The human central pattern generator for locomotion: Does it exist and contribute to walking? Neuroscientist 23, 649–663. doi: 10.1177/1073858417699790

Miracchi, L. (2017). Generative explanation in cognitive science and the hard problem of consciousness. Philos. Perspect. 31, 267–291. doi: 10.1111/phpe.12095

Miracchi, L. (2019). A competence framework for artificial intelligence research. Philos. Psychol. 32, 589–634. doi: 10.1080/09515089.2019.1607692

Moser, E. I., Kropff, E., and Moser, M.-B. (2008). Place cells, grid cells, and the brain's spatial representation system. Annu. Rev. Neurosci. 31, 69–89. doi: 10.1146/annurev.neuro.31.061307.090723

O'Keefe, J., and Burgess, N. (1996). Geometric determinants of the place fields of hippocampal neurons. Nature 381, 425–428.

Owaki, D., and Ishiguro, A. (2017). A quadruped robot exhibiting spontaneous gait transitions from walking to trotting to galloping. Sci. Rep. 7:277.

Owaki, D., Kano, T., Nagasawa, K., Tero, A., and Ishiguro, A. (2013). Simple robot suggests physical interlimb communication is essential for quadruped walking. J. R. Soc. Interface 10:20120669.

Pavlakos, G., Zhou, X., Chan, A., Derpanis, K. G., and Daniilidis, K. (2017). “6-DoF object pose from semantic keypoints,” in 2017 IEEE International Conference on Robotics and Automation (ICRA) (IEEE), 2011–2018.

Pearson, K. G. (2004). Generating the walking gait: role of sensory feedback. Prog. Brain Res. 143, 123–129. doi: 10.1016/S0079-6123(03)43012-4

Pezzulo, G., and Cisek, P. (2016). Navigating the affordance landscape: feedback control as a process model of behavior and cognition. Trends Cogn. Sci. 20, 414–424. doi: 10.1016/j.tics.2016.03.013

Qian, F., Jerolmack, D., Lancaster, N., Nikolich, G., Reverdy, P., Roberts, S. F., et al. (2017). Ground robotic measurement of aeolian processes. Aeolian Res. 27, 1–11. doi: 10.1016/j.aeolia.2017.04.004

Qian, F., and Koditschek, D. E. (2019). An obstacle disturbance selection framework: emergent robot steady states under repeated collisions. Int. J. Robot. Res.

Roberts, S. F., and Koditschek, D. E. (2018). “Reactive velocity control reduces energetic cost of jumping with a virtual leg spring on simulated granular media,” in Proceedings of the 2018 IEEE International Conference on Robotics and Biomimetics (ROBIO) (Kuala Lumpur).

Roberts, S. F., and Koditschek, D. E. (2019). “Mitigating energy loss in a robot hopping on a physically emulated dissipative substrate,” in Proceedings of the 2019 IEEE International Conference on Robotics and Automation (ICRA) (Montreal, QC).

Şahin, E., Çakmak, M., Doğar, M. R., Uğur, E., and Üçoluk, G. (2007). To afford or not to afford: a new formalization of affordances toward affordance-based robot control. Adapt. Behav. 15, 447–472. doi: 10.1177/1059712307084689

Samek, W., Wiegand, T., and Müller, K.-R. (2017). Explainable artificial intelligence: Understanding, visualizing and interpreting deep learning models. arXiv [preprint]. arXiv:1708.08296.

Saranli, U., Buehler, M., and Koditschek, D. E. (2001). RHex: a simple and highly mobile hexapod robot. Int. J. Robot. Res. 20, 616–631. doi: 10.1177/02783640122067570

Savelli, F., and Knierim, J. J. (2019). Origin and role of path integration in the cognitive representations of the hippocampus: computational insights into open questions. J. Exp. Biol. 222(Suppl. 1):jeb188912. doi: 10.1242/jeb.188912

Sponberg, S., and Full, R. (2008). Neuromechanical response of musculo-skeletal structures in cockroaches during rapid running on rough terrain. J. Exp. Biol. 211, 433–446. doi: 10.1242/jeb.012385

Steuer, I., and Guertin, P. A. (2019). Central pattern generators in the brainstem and spinal cord: an overview of basic principles, similarities and differences. Rev. Neurosci. 30, 107–164. doi: 10.1515/revneuro-2017-0102

Stoffregen, T. A. (2003). Affordances as properties of the animal-environment system. Ecol. Psychol. 15, 115–134. doi: 10.1207/S15326969ECO1502_2

Topping, T. T., Vasilopoulos, V., De, A., and Koditschek, D. E. (2019). “Composition of templates for transitional pedipulation behaviors,” in International Symposium on Robotics Research (ISRR), an IEEE conference (Hanoi).

Vasilopoulos, V., and Koditschek, D. E. (2018). “Reactive navigation in partially known non-convex environments,” in 13th International Workshop on the Algorithmic Foundations of Robotics (WAFR) (Merida).

Vasilopoulos, V., Topping, T. T., Vega-Brown, W., Roy, N., and Koditschek, D. E. (2018a). “Sensor-based reactive execution of symbolic rearrangement plans by a legged mobile manipulator,” in 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (Madrid: IEEE), 3298–3305.

Vasilopoulos, V., Vega-Brown, W., Arslan, O., Roy, N., and Koditschek, D. E. (2018b). “Sensor-based reactive symbolic planning in partially known environments,” in 2018 IEEE International Conference on Robotics and Automation (ICRA) (Brisbane, QLD: IEEE), 1–5.

Webb, B. (2001). Can robots make good models of biological behaviour? Behav. Brain Sci. 24, 1033–1050. doi: 10.1017/S0140525X01000127

Wehner, R., and Srinivasan, M. V. (2003). “Path integration in insects,” in The Neurobiology of Spatial Behaviour, ed Jeffery, K. J., (Oxford: Oxford University Press), 9–30.

Wilson, A. D., and Golonka, S. (2013). Embodied cognition is not what you think it is. Front. Psychol. 4:58. doi: 10.3389/fpsyg.2013.00058

Wonham, W. M. (1976). Towards an abstract internal model principle. IEEE Trans. Syst. Man Cybern. 6, 735–740.

Wystrach, A., and Graham, P. (2012). What can we learn from studies of insect navigation? Anim. Behav. 84, 13–20. doi: 10.1016/j.anbehav.2012.04.017

Keywords: robot, affordance, legged, generative, reactive

Citation: Roberts SF, Koditschek DE and Miracchi LJ (2020) Examples of Gibsonian Affordances in Legged Robotics Research Using an Empirical, Generative Framework. Front. Neurorobot. 14:12. doi: 10.3389/fnbot.2020.00012

Received: 13 October 2019; Accepted: 31 January 2020; Published: 20 February 2020.

Edited by:

Erwan Renaudo, University of Innsbruck, Austria

Reviewed by:

Subramanian Ramamoorthy, University of Edinburgh, United Kingdom

Volker Dürr, Bielefeld University, Germany

R. Omar Chavez-Garcia, Dalle Molle Institute for Artificial Intelligence Research, Switzerland

Copyright © 2020 Roberts, Koditschek and Miracchi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sonia F. Roberts, soro@seas.upenn.edu

Authors: Sonia F. Roberts, Daniel E. Koditschek, and Lisa J. Miracchi