Abstract

Diffusive losses of nitrogen and phosphorus from agricultural areas have detrimental effects on freshwater and marine ecosystems. Mitigation measures treating drainage water before it enters streams hold a high potential for reducing nitrogen and phosphorus losses from agricultural areas. To achieve a better understanding of the opportunities and challenges characterising current and new drainage mitigation measures in oceanic and continental climates, we reviewed the nitrate and total phosphorus removal efficiency of: (i) free water surface constructed wetlands, (ii) denitrifying bioreactors, (iii) controlled drainage, (iv) saturated buffer zones and (v) integrated buffer zones. Our data analysis showed that the load of nitrate was substantially reduced by all five drainage mitigation measures. The measures mainly acted as sinks of total phosphorus but occasionally also as sources. The factors influencing performance, such as design, runoff characteristics and hydrology, differed between the studies, resulting in large variation in the reported removal efficiencies.

Introduction

The highly intensive agricultural production dominating parts of the world, such as Western Europe and North America, is one of the main causes of eutrophication, resulting in water quality problems and ecosystem degradation worldwide (Kronvang et al. 2005; Diaz and Rosenberg 2008; Steffen et al. 2015). The intensification and expansion of agriculture during the past decades have led to a drastic increase in nutrient loss from agricultural areas, as well as changes in land use. Wet landscapes have been systematically drained to enable anthropogenic activities such as food production (Skaggs and van Schilfgaarde 1999). However, in addition to water, drainage systems also transport nutrients rapidly to surface waters, thereby lowering the natural retention capacity of catchments. Thus, engineered ecotechnologies designed to intercept and reduce nitrogen (N) and phosphorus (P) losses from agricultural drainage systems have emerged over the last decades with the aim of improving water quality (Mitsch and Jørgensen 1989). Substantial changes in land use can also be expected in the future when addressing energy and food security, for example through the transformation of society to a bio-economy (Marttila et al. 2020; Rakovic et al. 2020). Water quality and quantity are key elements in such a transformation; thus, the development and implementation of drainage mitigation provide valuable opportunities for innovation in future bio-economies. Besides reducing nutrient losses to surface water, these measures can be designed to provide multiple ecosystem services, such as water storage and biomass production, as well as recycling of nutrients.

Drainage mitigation measures reduce the transport of N from drainage systems primarily by enhancing denitrification (O’Geen et al. 2010), i.e. the process by which nitrate dissolved in water is converted to atmospheric nitrogen (Knowles 1982). Denitrification requires anoxic conditions, electron donors and availability of organic carbon. If these requirements are met, the rate of denitrification is mainly controlled by temperature and the hydraulic retention time (HRT), which is inversely proportional to the water flow rate (Kadlec and Knight 1996; Hoffmann et al. 2019). The water flow from subsurface drainage systems is driven by precipitation and snowmelt and, thus, varies greatly on a temporal as well as a spatial scale (Skaggs and van Schilfgaarde 1999). This challenges the performance of drainage mitigation measures in some parts of the world, for instance the Nordic countries, where high loading rates of nitrate often occur during autumn to early spring when the water temperature and denitrification rates are low. Therefore, we were particularly interested in investigating the nitrate removal efficiency of drainage mitigation measures treating drainage water in climate zones where high loading rates of nitrate often occur when conditions for denitrification are suboptimal. In addition to nitrate removal, drainage mitigation measures have shown potential for retention of P, as increased HRT allows settling of suspended material such as sediment and particulate P (PP). Yet, the anoxic conditions established by these mitigation measures might lead to net P release, depending on local hydrological and geochemical conditions (O’Geen et al. 2010).

In this review, we focused on five types of mitigation measures treating drainage water before it enters streams. These were the commonly applied free water surface flow constructed wetlands (FWS), denitrifying bioreactors (DBR) and controlled drainage (CD) and the two emergent technologies saturated buffer zones (SBZ) and integrated buffer zones (IBZ) (Fig. 1). To obtain a better understanding of the opportunities and challenges of current and new drainage mitigation measures targeting the transport of nutrients from agricultural areas in oceanic and continental climates, we examined nitrate and total P (TP) removal efficiencies at 82 drainage sites established between 1991 and 2018 in eleven countries. Thus, this review compiles the available evidence on nitrate and TP removal efficiencies from both pilot and full-scale field studies on drainage mitigation measures to provide a synthesis of the existing body of peer-reviewed literature.

Materials and methods

Overview of the included types of drainage mitigation measures

Free water surface constructed wetlands (FWS)

In FWS, drainage water typically passes one or more deep basins or channels and shallow vegetated zones (berms) before reaching the outlet and eventually the stream (Kovacic et al. 2000) (Fig. 1). The deep zones reduce the water flow and thus increase HRT and promote denitrification and sedimentation, while the shallow vegetated berms supply organic carbon. Furthermore, FWS can capture surface runoff if located downhill. Free water surface constructed wetlands are mostly established in areas with low-permeability soils, and if not, they are often sealed with non-permeable layers such as clay membranes to prevent seepage to the groundwater. Construction of wetlands for diffusive pollution control began in the late 1980s with the aim of creating simple systems mimicking the processes occurring in natural wetlands (Mitsch and Jørgensen 1989; Fleischer et al. 1994). Multiple types of FWS exist (Mitsch et al. 2001), although in this review, we focused only on the subset of FWS designed to treat drainage water before it reaches streams.

Denitrifying bioreactors (DBR)

In DBR, the drainage water is routed horizontally or vertically through a basin filled with carbon-rich filter substrate (e.g. different types of wood chips mixed with gravel, soil or other materials) before it reaches the outlet (Blowes et al. 1994) (Fig. 1). The substrate of the DBR can either be in direct contact with air (David et al. 2016; Carstensen et al. 2019b) or sealed off by a layer of soil on top of the reactor (de Haan et al. 2010). Similar to FWS, the base of the DBR is sealed with non-permeable membranes to avoid seepage if established on water-permeable soils. Denitrifying bioreactors are also known as subsurface flow constructed wetlands, denitrifying beds or bio-filters. The first pilot study with DBR, established in Canada in 1994, was inspired by wastewater treatment plants (Blowes et al. 1994). However, in contrast to wastewater treatment plants, DBR was solely designed to promote anoxic conditions, and carbon-rich filter material was added to fuel denitrification.

Controlled drainage (CD)

Controlled drainage is a groundwater management technique in which the in-field groundwater level is elevated using a water control structure to restrict the water flow from the drain outlet (Gilliam et al. 1979) (Fig. 1). Thus, CD alters the hydrological cycle of the field, which, depending on location and season, increases some or all of the following flow components: root zone water storage, seepage (shallow, deep), surface runoff, plant uptake and evaporation (Skaggs et al. 2012). Experiments with CD were initiated in the late 1970s in the USA to investigate the potential for enhancing in-field denitrification (Willardson et al. 1970). CD was also practised in the former German Democratic Republic to cope with summer droughts, though the technique disappeared with the fall of the Berlin Wall (Heinrich 2012).

Saturated buffer zones (SBZ)

In an SBZ, drainage water and riparian soil are reconnected by a buried, lateral perforated distribution pipe running parallel to the stream, which redirects the drainage water into the riparian zone (Jaynes and Isenhart 2019) (Fig. 1). The infiltrating water saturates the riparian soil and creates anoxic conditions, though for denitrification to occur, the soil carbon content must be sufficient. This novel technique was recently developed and tested in the USA (Jaynes and Isenhart 2014).

Integrated buffer zones (IBZ)

In IBZ, the drainage water is first retained in a pond designed to capture particles and increase the HRT and to buffer surface runoff (Zak et al. 2018) (Fig. 1). After the pond, the water infiltrates a vegetated shallow zone where the top soil has been removed. In this infiltration zone, anoxic conditions develop and carbon is added from the vegetation via root exudates or leached plant litter. Integrated buffer zones were recently developed and tested in Northwestern Europe with the aim to improve the nutrient reduction capacity of traditional riparian buffer zones bypassed by drainage pipes, while promoting multi-functionality, such as biodiversity and biomass production (Zak et al. 2019).

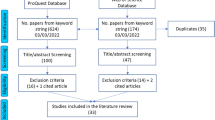

Literature search and inclusion criteria

To find relevant studies for our review, a search of published studies was conducted via ISI Web of Science for 1900–2019 employing four different search strings, which are described in the Supplementary Material (Table S1). The relevant studies were selected considering the following criteria:

- The inlet water had to originate from drainage systems transporting water from agricultural fields, and must not be mixed with water from other sources such as streams.
- Based on the Köppen-Geiger climate classification system, the sites had to be located in oceanic (Cfb, Cfc) or continental (Dfa, Dfb, Dfc, Dfd, Dsc) climates (Fig. 2), where the conditions for denitrification are often suboptimal. Thus, climate zones with dry winters (letter w) were excluded.
- The study had to be a field study with sites exposed to ambient temperature and with a surface area larger than 10 m2.
- The study had to include a mass balance for either nitrate-N, total phosphorus (TP) or total suspended solids (TSS) for at least one drainage season, whose length depended on the climate region.

If two studies were conducted at the same study site within overlapping monitoring periods, the study with the longest time series was selected. Not all extracted data could be separated into years or seasons, implying that standard deviation (σ) for nitrate removal was not available for nine sites and for TP removal for one site; still, these sites were included in the calculation of the arithmetic mean (Table S2). Absolute removal was reported in various units (e.g. g m−2, kg ha−1, g m−3), and we therefore identified and used the most commonly reported unit, which meant that recalculation of removal efficiencies was necessary in some studies.
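Harmonising the reported units is simple arithmetic. The sketch below illustrates conversions between the units listed above. The function names are illustrative, and the conversion from a volume-based to an area-based value assumes that a mean depth of the measure is known, which is an assumption and not something every study reports.

```python
def g_per_m2_to_kg_per_ha(value_g_m2: float) -> float:
    """Convert g m-2 to kg ha-1 (1 ha = 10,000 m2, 1 kg = 1,000 g)."""
    return value_g_m2 * 10_000 / 1_000


def kg_per_ha_to_g_per_m2(value_kg_ha: float) -> float:
    """Convert kg ha-1 to g m-2 (inverse of the function above)."""
    return value_kg_ha * 1_000 / 10_000


def g_per_m3_to_g_per_m2(value_g_m3: float, mean_depth_m: float) -> float:
    """Convert a volume-based value to an area-based one.

    Assumes a uniform mean depth of the measure (hypothetical input).
    """
    if mean_depth_m <= 0:
        raise ValueError("mean_depth_m must be positive")
    return value_g_m3 * mean_depth_m
```

For instance, an absolute removal of 1.20 g N m−2 year−1 corresponds to 12 kg N ha−1 year−1.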

Meta-analysis

The average nitrate and TP removal efficiencies of mitigation measures treating agricultural drainage water were quantified using meta-analysis. Prior to the analysis, the assumption of normality was tested visually (Q–Q plot, histogram) and by the Shapiro–Wilk test, and where the assumptions were not fulfilled this is mentioned in the result section. Meta-analysis was only conducted for a mitigation measure if sufficient data were available, i.e. data from more than two sites originating from different studies. The meta-analysis was performed in R software 3.6.1 (R Core Team 2019) using the R package ‘meta’ (Schwarzer 2019). The effect size of each study was expressed as the raw removal efficiency and was calculated as follows:

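$$\text{Removal efficiency (\%)} = \frac{\text{Load}_{\text{in}} - \text{Load}_{\text{out}}}{\text{Load}_{\text{in}}} \times 100$$

where $\text{Load}_{\text{in}}$ is the loading to the system in kg year−1 and $\text{Load}_{\text{out}}$ the loss from the system in kg year−1; for CD sites the unit is kg ha−1 year−1.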
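As a minimal sketch of this effect size, the relative removal efficiency can be computed as the share of the incoming load that does not leave the system, assuming the conventional input-output definition. The function names are illustrative, and the helper for the mean and standard error across years is one plausible way to summarise a site, not necessarily the exact procedure used in the review.

```python
import math
import statistics


def removal_efficiency(load_in: float, load_out: float) -> float:
    """Relative removal in percent: (load_in - load_out) / load_in * 100.

    Negative values indicate a net release (the system acts as a source).
    """
    if load_in <= 0:
        raise ValueError("load_in must be positive")
    return (load_in - load_out) / load_in * 100.0


def mean_and_se(yearly_efficiencies: list[float]) -> tuple[float, float]:
    """Mean and standard error of yearly (or seasonal) efficiencies."""
    n = len(yearly_efficiencies)
    if n < 2:
        raise ValueError("at least two years are needed for a standard error")
    mean = statistics.mean(yearly_efficiencies)
    se = statistics.stdev(yearly_efficiencies) / math.sqrt(n)
    return mean, se
```

A site that receives 100 kg year−1 and loses 59 kg year−1 would thus have a removal efficiency of about 41%, whereas a site losing more than it receives yields a negative value.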

Each effect size was weighted, and a higher weight was given to studies with small standard error (SE) and large sample size, as these were regarded as more precise. The summary effect was calculated based on the effect sizes and their weights, using a random-effects model, which allows the true mean to vary between studies, as the selected studies differed in design, materials and methods. To account for this variability, the weighting factor assigned to each effect size incorporated both the within-study variance (σ2) and the between-study variance (T2). The DerSimonian and Laird (DL) method was applied to estimate T2, and the Hartung-Knapp method was used to adjust the confidence intervals (CI), producing more conservative results, as recommended by Borenstein (2009) when dealing with a low number of studies (K < 20). To evaluate whether the use of the overall summary effect was appropriate, the degree of consistency of the effect sizes was assessed using forest plots, funnel plots and multiple statistical measures. The observed variation (Q) was tested to investigate whether the true effect varied between studies and whether application of the random-effects model was appropriate (Borenstein 2009). The excess variation over the observed variation (I2) indicated what proportion of the variation was real and reflected the extent of overlapping CIs. However, I2 must be interpreted with care: a value close to zero can mean that all variance is due to sampling error within the studies, but it can also be caused by very imprecise studies with substantial differences between effect sizes. Likewise, a large I2 can indicate either the possible existence of different subgroups or that the analysis contains highly precise studies with very small differences between the effect sizes. The estimate of the absolute variance, T2, was used as an indication of dispersion, and it was compared with σ2. The standard deviation of the effect size (T) was also reported. In the funnel plot, the removal efficiencies were plotted against the SE; asymmetry or other shapes in the funnel plot might thus indicate publication bias, heterogeneity or sampling error. Funnel plots were only inspected if the analysis contained more than ten studies, as recommended by Borenstein (2009). For each effect size and summary effect, a 95% CI was reported. Additionally, a 95% prediction interval (PI) was calculated for each summary effect, giving the interval within which the effect sizes of 95% of future studies are expected to fall (Borenstein 2009). To further explore heterogeneity and the robustness of the summary effect, a meta-analysis was performed on two subsets of data for each drainage mitigation measure if data sufficed. The first subset contained only sites from the low risk of bias category (see “Risk of bias assessment”), while the other contained only sites where the within-study sample size (N) was larger than two.
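To make the weighting scheme concrete, the sketch below re-implements the textbook DerSimonian-Laird estimator and the Hartung-Knapp standard error in plain Python. It is not the R ‘meta’ code used for the review; the function names and the returned dictionary keys are illustrative assumptions. Because the Python standard library has no Student t quantile function, the critical t value (with K − 1 degrees of freedom) is passed in by the caller.

```python
import math


def random_effects_dl(effects, variances):
    """Pool effect sizes with a DerSimonian-Laird random-effects model."""
    k = len(effects)
    if k < 2 or k != len(variances):
        raise ValueError("need at least two studies with matching variances")
    if any(v <= 0 for v in variances):
        raise ValueError("within-study variances must be positive")

    # Fixed-effect (inverse-variance) weights and Cochran's Q.
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed_mean = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    q = sum(wi * (yi - fixed_mean) ** 2 for wi, yi in zip(w, effects))
    df = k - 1

    # DerSimonian-Laird estimate of the between-study variance (T2).
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)

    # I2: share of the observed variation not explained by sampling error.
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0

    # Random-effects weights combine within- and between-study variance.
    w_re = [1.0 / (v + tau2) for v in variances]
    sum_w_re = sum(w_re)
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum_w_re

    # Hartung-Knapp standard error of the pooled effect.
    hk_var = sum(
        wi * (yi - pooled) ** 2 for wi, yi in zip(w_re, effects)
    ) / (df * sum_w_re)

    return {"pooled": pooled, "tau2": tau2, "i2": i2, "q": q,
            "hk_se": math.sqrt(hk_var), "df": df}


def hartung_knapp_ci(result, t_crit):
    """Confidence interval: pooled effect +/- t_crit * Hartung-Knapp SE."""
    half_width = t_crit * result["hk_se"]
    return result["pooled"] - half_width, result["pooled"] + half_width


# Hypothetical removal efficiencies (%) and within-study variances.
result = random_effects_dl([40.0, 50.0, 60.0], [25.0, 25.0, 25.0])
ci_low, ci_high = hartung_knapp_ci(result, t_crit=4.303)  # t(0.975, df = 2)
```

With equal within-study variances, as in this hypothetical example, all studies receive the same weight and the pooled effect equals the arithmetic mean; the weights only diverge when precision differs between studies.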

Risk of bias assessment

It is important to consider the extent of systematic errors resulting from different factors such as a poor study design or issues related to the collection, analysis and reporting of data when conducting a review. In this study, the risk of bias tool developed by Higgins et al. (2011) was used as a guideline, although it was originally developed based on evidence from randomised trials within the field of meta-epidemiology. However, it has earlier been modified and used for environmental studies (Bilotta et al. 2014), such as wetlands (Land et al. 2016). In our study, the risk of bias assessment included two steps (Fig. 3), where the first step was an evaluation of the water balance monitoring strategy (1.A in Fig. 3). The water balance is especially important when quantifying the removal efficiency, as any errors here will propagate into the nutrient balance. To assess the monitoring strategy of the water balance, the most important flow paths were each given a percentage, and these were aggregated into an overall score. Thus, monitoring of inflow and outflow accounted for 30% each, groundwater for 20%, surface runoff for 10% and precipitation and evaporation for 5% each. A percentage of 100% implied that all important flow paths were monitored or otherwise accounted for. In the second step, the monitoring frequency of flow (2.B in Fig. 3) and the spatial and temporal frequency of nutrient sampling (2.C, 2.D) were assessed. Finally, the selection of control and impact sites was evaluated (2.E in Fig. 3); however, this was only relevant for studies on CD, as these were the only studies with true spatial replication. In the remaining studies, the inlet served as control and the outlet as impact. If all five attributes were fulfilled, the site was considered as having low risk of bias; otherwise, it was considered as having moderate to high risk of bias.
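The scoring of the water balance can be sketched as a weighted checklist. The example below assumes that inflow and outflow each carry 30%, so that the weights sum to 100%. The threshold at which attribute 1.A counts as fulfilled is not stated in the text, so the classification function simply takes the five attributes as already-assessed booleans. All names are illustrative.

```python
# Assumed weights (percent); inflow and outflow are taken as 30% each.
WATER_BALANCE_WEIGHTS = {
    "inflow": 30,
    "outflow": 30,
    "groundwater": 20,
    "surface_runoff": 10,
    "precipitation": 5,
    "evaporation": 5,
}


def water_balance_score(monitored_paths):
    """Sum the weights of the flow paths that were monitored or accounted for."""
    paths = set(monitored_paths)
    unknown = paths - set(WATER_BALANCE_WEIGHTS)
    if unknown:
        raise ValueError(f"unknown flow paths: {sorted(unknown)}")
    return sum(WATER_BALANCE_WEIGHTS[p] for p in paths)


def risk_of_bias(attributes_fulfilled):
    """Classify a site from its five assessed attributes (1.A and 2.B to 2.E)."""
    expected = {"1.A", "2.B", "2.C", "2.D", "2.E"}
    if set(attributes_fulfilled) != expected:
        raise ValueError("exactly the attributes 1.A, 2.B, 2.C, 2.D, 2.E are required")
    return "low" if all(attributes_fulfilled.values()) else "moderate to high"
```

A site where only inflow and outflow were measured would score 60% under these assumed weights, while a site with a single unfulfilled attribute falls into the moderate to high category.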

Results

Descriptive characteristics

The initial search yielded 8126 studies in total, and after evaluating the inclusion criteria, we had a master bibliography of 42 articles containing 84 sites distributed across eleven countries (Table 1 and Table S2). According to our risk of bias assessment, the risk of bias was low in 35% of the studies. Insufficient monitoring of the water balance was the main reason that many studies were categorised as having ‘moderate to high’ risk of bias (Table 1). The ratio of the drainage mitigation measure surface area to the contributing catchment area (DMMCAR) was largest for SBZ (7%) and FWS (2%), while DBR (0.1%) and IBZ (0.2%) had the lowest ratios (Table 2). For CD, the DMMCAR was technically 100% if assuming that the groundwater level was elevated within the entire contributing catchment area. However, this can be a misleading term, as the control system only occupied very little of the field (approximately 1 m2 per regulation well). The hydraulic loading rate (HLR) to the systems differed substantially, as expected, being highest for DBR and lowest for CD due to the difference in size. Treatment of drainage water is a relatively new concept, as illustrated by the fact that the oldest facilities were two 20-year-old FWS and the second oldest a 10-year-old DBR. The youngest and least studied measure was IBZ.

Free water surface constructed wetlands (FWS)

The weighted average obtained by meta-analysis showed that FWS significantly reduced nitrate loading by 41% within a range from − 8 to 63% (Fig. 4). The CI varied from 29 to 51%, while the PI was rather broad, varying from 5 to 76%. The funnel plot did not indicate major biases, as the studies were more or less evenly scattered (Fig. 5). However, the heterogeneity of the selected sites was rather high (I2 = 96%), and T2 (260%) was higher than σ2 (70%). The subset analysis of data with either low risk of bias or sampling periods longer than two years/drainage seasons showed that the average removal ranged between 40 and 44%, and CI and PI were slightly narrower than for the full dataset (Table 4). Studies with N > 2 had lower T2, whereas σ2 was slightly higher, which lowered the heterogeneity. According to the arithmetic mean, the removal efficiency was 41% (CI: 29 to 51%) (Table 3). The absolute nitrate removal per FWS area amounted to 60 g N m−2 year−1 (CI: 29 to 91 g N m−2 year−1).

Fig. 4. Forest plots showing effect sizes (RRE) and 95% confidence intervals (CI) of relative nitrate–N removal and summary effect with CI and prediction interval and heterogeneity analysis for free water surface constructed wetlands (FWS), denitrifying bioreactors (DBR) and controlled drainage (CD). N: within-study sample size. ID represents FWS and DBR study sites; for CD the letter is unique for the research facilities.

According to the meta-analysis the average TP removal efficiency of FWS was 33%, ranging from − 103 to 68% (CI: 19 to 47%, PI: − 2 to 69%) (Fig. 6). The removal efficiencies did not follow a normal distribution; the data were skewed to the left due to net release of TP from multiple sites. The funnel plot showed an asymmetrical scatter of sites, as sites with TP release had much higher SE (Fig. 5). As expected, the heterogeneity was rather high, and T2 (226%) was much lower than σ2 (838%). The subset data analysis for TP removal reported a slightly higher removal (35%) than the initial data; however, σ2 was still very high as the included studies reported both removal and release of TP (Table 4). The data were further investigated by separating sinks and sources, showing that four sites exhibited a net release of TP (− 49%, CI: − 18 to − 83%) and eleven sites acted as sinks (38%, CI: 27 to 49%). The arithmetic mean TP removal efficiency was 18% (CI: − 4 to 46%) with an average absolute removal of 0.68 g P m−2 year−1 (− 1.16 to 2.52 g P m−2 year−1) (Table 3). The removal efficiency of TSS was 41% (CI: 28 to 54%) when calculated as the arithmetic mean.

Fig. 6. Forest plots showing effect sizes (RRE) and 95% confidence intervals (CI) of relative total phosphorus (TP) removal and summary effect with CI and prediction interval and heterogeneity analysis for free water surface constructed wetlands (FWS) and controlled drainage (CD). N: within-study sample size. ID represents unique sites for FWS and DBR; for CD the letter is unique for the research facilities.

Denitrifying bioreactors (DBR)

The weighted average calculated by meta-analysis showed a significant reduction of the annual nitrate loading by DBR of 40% within a range from 6 to 79% (CI: 24 to 55%, PI: − 9 to 89%) (Fig. 4). The funnel plot revealed asymmetry of data, where studies with low efficiency tended to have lower SE (Fig. 5). The heterogeneity analysis showed that the I2 was high (99%), as some of the studies were very precise, but showed different removal efficiency. Average T2 (436%) was much higher than σ2 (169%). The subset analysis of data with either low risk of bias or sampling periods longer than two years/drainage seasons reported lower removal efficiency (35%), and CI and PI were slightly narrower for studies with N > 2 (Table 4). Similar to FWS, studies with N > 2 had lower T2 and higher σ2. The arithmetic mean efficiency was 44% (CI: 35 to 53%), while the absolute nitrate removal per DBR volume amounted, on average, to 715 g N m−3 year−1 (CI: 292 to 760 g N m−3 year−1), ranging from 66 to 2033 g N m−3 year−1 (Table 4). This corresponded to an area-based nitrate reduction of 594 g N m−2 year−1 (CI: 333 to 855 g N m−2 year−1).

Only two studies included TP balances for the full drainage season, preventing meta-analysis. These two studies were somewhat contradictory in that one found release of TP (− 208% or − 30 g P m−2 year−1) and the other net removal (28% or 6 g P m−2 year−1) (Table 4).

Controlled drainage (CD)

The meta-analysis showed that CD significantly reduced the annual nitrate loading by, on average, 50% within a range from 19 to 82% (CI: 41 to 59%, PI: 19 to 81%) (Fig. 4). However, both CI and PI should be interpreted with care as the effect sizes did not follow a normal distribution. The funnel plot displayed a more or less even scatter of sites (Fig. 5). Heterogeneity was high (I2 = 79%), yet T2 was only slightly higher than σ2. The removal efficiency of studies including sampling periods longer than two years/drainage seasons was more or less similar to the result of the full data analysis (Table 4). However, the subset analysis pointed to the possible occurrence of two groups: one with a removal efficiency < 44% and one with a removal efficiency > 61%. The mean (arithmetic) nitrate removal efficiency was 48% (CI: 40 to 56%). The absolute nitrate removal amounted to 1.20 g N m−2 year−1 (1.16 to 1.24 g N m−2 year−1), corresponding to 12 kg N ha−1 year−1 (CI: 8 to 16 kg N ha−1 year−1). The relative nitrate reduction correlated well with the relative reduction of drainage flow (R = 0.80 (Pearson), p < 0.0001, K = 19), and exclusion of studies with sub-irrigation, a practice implying an additional water supply, improved this correlation (R = 0.88, p < 0.0001, K = 10) (Fig. 7).

The average loss of TP via drainage water was reduced by 34% (CI: 10 to 58%, PI: − 23 to 92%) according to the meta-analysis (Fig. 6). The removal efficiencies did not follow a normal distribution as the data were slightly skewed towards the right. According to the statistical analysis, heterogeneity was moderate (I2 = 65%), while T2 (406%) and σ2 (403%) were more or less identical, suggesting that the studies were similar enough to justify combination. The arithmetic mean was 29% (CI 10 to 48%) (Table 4). The average absolute TP retention amounted to 0.03 g P m−2 year−1 (0.01 to 0.05 g P m−2 year−1) or 0.30 kg P ha−1 year−1 (0.10 to 0.49 kg P ha−1 year−1) (Table 4). The relative reduction of TP loading correlated well with the reduction of drainage flow (R = 0.87 (Pearson), p < 0.01, K = 6) (Fig. 7).
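The Pearson coefficient reported for the relation between flow reduction and nutrient reduction can be sketched as follows. The data in the example are hypothetical and serve only to show the calculation; they are not taken from the reviewed studies.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sums of cross-products and squared deviations from the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant input")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical site data: relative reduction (%) of drain flow and nitrate load.
flow_reduction = [20.0, 35.0, 50.0, 65.0, 80.0]
nitrate_reduction = [25.0, 30.0, 55.0, 60.0, 85.0]
r = pearson_r(flow_reduction, nitrate_reduction)
```

A coefficient close to 1 would indicate that sites with a larger reduction in drain flow also show a larger reduction in nutrient load, which is the pattern described for CD.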

Saturated and integrated buffer zones (SBZ and IBZ)

Removal efficiencies could not be aggregated using meta-analysis for the emergent technologies, SBZ and IBZ, as, until now, only one study containing multiple sites has been published for each practice (Table 4). The annual arithmetic mean removal efficiency was 75% (CI: 35 to 53%) of the nitrate loaded into the SBZ. However, between 6 and 77% of the water bypassed the SBZ; thus, taking all nitrate leaving the field into account, the average nitrate removal efficiency was 37% (CI: 17 to 57%) and varied from 8 to 84%. The absolute nitrate removal per SBZ area was 23 g N m−2 year−1 (CI: 9 to 37 g N m−2 year−1). There were no available data on TP balances for SBZ in the articles selected for this review. For IBZ, the annual nitrate removal efficiency, calculated as the arithmetic mean, was 26% (CI: 20 to 32%) (Table 4). The absolute nitrate removal per IBZ area was 140 g N m−2 year−1 (71 to 209 g N m−2 year−1). The removal efficiency of TP was 48% (CI: 40 to 56%), while the absolute TP removal per IBZ area was 2.4 g P m−2 year−1 (CI: 1.4 to 3.5 g P m−2 year−1).
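The difference between the removal within the SBZ and the removal at field scale follows from the bypass flow. A minimal sketch is given below, assuming that bypassing water is untreated and that a known fraction of the nitrate load leaving the field is diverted into the measure; both the function name and this load-based simplification are assumptions made for illustration.

```python
def field_scale_efficiency(within_measure_efficiency_pct: float,
                           bypass_load_fraction: float) -> float:
    """Removal relative to all nitrate leaving the field, in percent.

    within_measure_efficiency_pct: removal of the load entering the measure.
    bypass_load_fraction: share (0 to 1) of the field load that bypasses the
    measure and is assumed to receive no treatment.
    """
    if not 0.0 <= bypass_load_fraction <= 1.0:
        raise ValueError("bypass_load_fraction must be between 0 and 1")
    treated_fraction = 1.0 - bypass_load_fraction
    return within_measure_efficiency_pct * treated_fraction
```

Under these assumptions, a measure that removes 75% of the nitrate it receives but is bypassed by half of the load removes 37.5% of the nitrate leaving the field.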

Discussion

Removal efficiency and uncertainty of drainage mitigation measures

Removal efficiency was quantified in both absolute and relative values in our review. However, care should be taken when comparing values from different sites, as the absolute removal efficiency depended heavily on the nutrient loading to the system (Fig. S1). The loading rate of nutrients is highly site specific, as it is determined by the concentration of nutrients in the water and by HLR, which is highly variable from site to site. For example, for DBR, the specific loading rate of nitrate per DBR area differed substantially between sites (221 to 11,533 g N m−2 DBR year−1). Furthermore, the HLR varies from year to year, although this variation can be accounted for to some extent by monitoring over multiple years. In this review, this was demonstrated by the fact that study sites monitored for multiple years (N > 2) had higher σ2 and thus incorporated more variation. Absolute removal was reported relative to the surface area of the mitigation measure in our review. Another possibility would be to report absolute removal per catchment area; however, estimates of catchment areas are often very uncertain, adding more uncertainty to the removal estimate. The HLR also influences relative removal, as the removal efficiency tends to increase with decreasing HLR (Vymazal 2017; Hoffmann et al. 2019) (Fig. S2), though temperature is at least as important. The design of mitigation measures is commonly guided by DMMCAR, as a rough estimate of HLR and temperature; for instance, in New Zealand a guideline predicts that a DMMCAR of 5% will yield an approximate nitrate reduction of 50 ± 15% (Tanner et al. 2010), while in Denmark a ratio around 1–1.5% is recommended for FWS to ensure an HRT of at least 24 h during winter (Landbrugsstyrelsen 2019). However, the optimal DMMCAR is site-specific and depends on hydrological and geochemical conditions, e.g. sites with similar DMMCARs can have very different temperatures and HLRs (Fig. S3).
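The design quantities discussed here are related by simple ratios, sketched below using the standard definitions (DMMCAR as an area ratio, HLR as flow per unit surface area and HRT as water volume divided by flow). The function names, the optional porosity argument for substrate-filled systems and the example dimensions are illustrative assumptions and are not taken from the reviewed studies.

```python
def dmmcar_percent(measure_area_m2: float, catchment_area_m2: float) -> float:
    """Ratio of measure surface area to contributing catchment area, in percent."""
    if catchment_area_m2 <= 0:
        raise ValueError("catchment_area_m2 must be positive")
    return measure_area_m2 / catchment_area_m2 * 100.0


def hydraulic_loading_rate(flow_m3_per_day: float, measure_area_m2: float) -> float:
    """HLR in m per day: inflow divided by the surface area of the measure."""
    if measure_area_m2 <= 0:
        raise ValueError("measure_area_m2 must be positive")
    return flow_m3_per_day / measure_area_m2


def hydraulic_retention_time_h(volume_m3: float, flow_m3_per_day: float,
                               porosity: float = 1.0) -> float:
    """HRT in hours: water-filled volume divided by flow.

    porosity = 1.0 represents open water; a lower value (hypothetical input)
    approximates the pore space of a substrate-filled system such as a DBR.
    """
    if flow_m3_per_day <= 0:
        raise ValueError("flow_m3_per_day must be positive")
    return volume_m3 * porosity / flow_m3_per_day * 24.0


# Hypothetical wetland: 1 ha surface, 0.5 m mean depth, 100 ha catchment.
ratio = dmmcar_percent(10_000.0, 1_000_000.0)          # DMMCAR in percent
hlr = hydraulic_loading_rate(2_500.0, 10_000.0)        # m per day
hrt = hydraulic_retention_time_h(5_000.0, 2_500.0)     # hours
```

Because HRT is inversely proportional to flow, doubling the inflow to this hypothetical wetland would halve its retention time, which illustrates why a fixed DMMCAR cannot guarantee a given HRT across sites with different drainage flows.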

The quantification of nutrient loading and removal is somewhat uncertain as it relies on a black-box approach (i.e. input–output). This implies that the estimates depend especially on the frequency of nutrient sampling and the water flow monitoring strategy. Estimates of TP retention might be more uncertain than those of nitrate as TP concentrations in tile drainage water tend to change quickly over time, especially at high flow, which can be difficult to capture (Johannesson et al. 2017), whereas nitrate concentrations tend to change more gradually. Johannesson et al. (2017) tested the importance of flow monitoring strategy and found that TP retention was underestimated when based solely on outlet flow measurements rather than on both inlet and outlet flow measurements.

Free water surface flow constructed wetlands (FWS)

The results showed that FWS significantly reduced the nitrate loss from drainage systems. However, as expected, the efficiency varied considerably since the included studies differed in design (e.g. HLR, aspect ratio, size, carbon availability), age, monitoring schemes and runoff characteristics, factors that all affected the removal efficiency. At one site, nitrate release was reported, which was most likely due to the lack of monitoring of one of the inlets (Koskiaho et al. 2003), which emphasises the importance of the monitoring scheme. The removal efficiency found in this review was slightly higher than that of an earlier review, which reported a removal of 37% (CI: 29 to 44%) (Land et al. 2016). The average absolute removal was much lower in our review than in Land et al. (2016) (181 ± 251 g N m−2 year−1), which was not surprising, as their review included a broad range of created and restored wetlands treating agricultural runoff, riverine water, secondary and tertiary domestic wastewater and urban stormwater. Our review of FWS showed that they did not always remove TP, as four out of 15 FWS sites acted as a source of P. This net release of P might be due to mobilisation of dissolved reactive P (DRP) from the sediment or the size of the FWS being too small to adequately decelerate the flow (Kovacic et al. 2000; Tanner and Sukias 2011). The studies reporting a net release of TP had a very high within-study variance and were therefore given less weight in the meta-analysis, with the consequence that the weighted removal efficiency was higher than the arithmetic mean. Both the relative and the absolute removal efficiency were lower than those reported by Land et al. (2016), probably because the average TP loading was much higher in the studies included in their review, where FWS established in streams were also represented. In our review, most studies on FWS had low risk of bias, although often only the inlet or the outlet was monitored, which was compensated for in the studies by adjusting the unmonitored flow component with precipitation, evaporation or groundwater (if not lined with a non-permeable membrane).

Denitrifying bioreactors (DBR)

Our meta-analysis showed that DBR significantly reduced the nitrate loss from drainage systems to surface water. The removal efficiencies generally displayed high variations, which reflected the differences (e.g. design, age) between the studied sites, not least regarding nitrate loading rates. Many of the study sites were experimental facilities or pilot studies, implying that they were established to investigate and identify factors influencing performance. For example, among the studies included in our review, the low removal efficiency could be ascribed to short-circuiting within the system (Christianson et al. 2012a), inadequate sizing, i.e. too short HRT (David et al. 2016), and scarce monitoring (Søvik and Mørkved 2008). Accordingly, the average removal efficiency derived from the meta-analysis was most likely a conservative estimate since many of the sites with suboptimal design were given a relatively high weight due to low SE. Many of the DBR sites were assessed to have a moderate to high risk of bias, as flow was often only measured at either the inlet or the outlet. However, due to their small size, the uncertainty caused by this might be lower for DBR than for e.g. FWS.

Controlled drainage (CD)

According to our results, CD significantly reduced the loading of nitrate at the drain outlet. However, heterogeneity was relatively high and the efficiencies displayed high dispersion around the mean. This was expected, though, as the efficiency of CD is especially influenced by drain spacing and management, which differed between sites (Ross et al. 2016). For example, the target elevation of the water table differed considerably between sites, from 15 to 76 cm below the soil surface. The removal efficiency found in our review aligned very well with that from an earlier review of 48 ± 12% by Ross et al. (2016). The nitrate reduction was mainly regulated by the reduction of the flow at the drain outlet, which has also been stressed in earlier studies (Skaggs et al. 2012; Ross et al. 2016). Although many studies stated that CD was implemented to increase denitrification, higher denitrification rates or lower nitrate concentrations in drain water were seldom reported despite denitrification measurements (Woli et al. 2010; Carstensen et al. 2019a). This lack of denitrification was probably due to insufficient amounts of soil organic carbon, temperature limitation or absence of anoxic zones in the soil. Higher efficiencies could potentially be obtained if the water level was elevated even closer to the surface where the organic C content is higher, but this could increase the surface runoff (Rozemeijer et al. 2015) and/or harm the crop yield. The redirected water is either stored in the root zone or directed to alternative flow paths. If the excess water moves towards the stream without passing conditions suitable for denitrification, there will be no removal of nitrate and thus no effect of CD. In contrast, if the water passes deeper zones with reduced conditions or conditions favourable for denitrification, the nitrate will most likely be removed. 
Higher removal efficiency of CD could be gained if CD was combined with, for example, DBR, treating the part of the water still leaving via the drainage system (Woli et al. 2010). A concern regarding the implementation of CD has been that the saturation of the root zone might cause desorption of redox-sensitive P, but none of the studies on CD reported TP or DRP release. However, in three studies the CI crossed the zero line, indicating that TP removal was not significant, which was supported by the PI. The retention efficiency determined in our study was considerably lower than that of Ross et al. (2016), who reported a TP retention of 55 ± 15%. Almost all sites with CD were categorised as having moderate to high risk of bias, as the majority of the studies only quantified the reduction in flow and nutrients at the drainage outlet. Only a few attempted to quantify nitrate or P budgets for all flow paths leading nutrients to the surface water (Sunohara et al. 2014).

Saturated and integrated buffer zones (SBZ and IBZ)

Two novel technologies, SBZ and IBZ, were included in our review to demonstrate the recent development in this research area. Until now, SBZ have mainly been investigated in the USA and with variable results (Jaynes and Isenhart 2019). Low performance of SBZ has been linked to selection of non-ideal sites containing permeable soil layers or sites where a low fraction of water was diverted to the SBZ, which is controlled by the length of the distribution pipe. Vegetation has also been argued to influence the efficiency of SBZ (Jaynes and Isenhart 2019) as higher removal efficiency has been found at sites with established perennial vegetation. This might be due to addition of more labile carbon to the soil to support denitrification or to enhanced immobilisation of microbial N by the more developed rhizospheres (Jaynes and Isenhart 2019). The removal efficiency of SBZ is difficult to quantify as the outlet of the SBZ is the riparian soil where N and P concentrations can only be measured with piezometers, and dilution by groundwater throughflow can occur. Another concern is whether or not the piezometer measurements can be considered representative for the whole area. In our review, IBZ had the lowest average removal efficiency of the mitigation measures, which can probably be ascribed to the fact that the two IBZs were experimental test facilities with too low DMMCAR and vegetation that was not fully developed (Zak et al. 2018). A recent technical report on IBZ showed that the removal efficiency of two full-scale facilities established in Denmark was 53–55%, which was even a conservative estimate (van’t Veen et al. 2019). The overall reduction of nitrate to the receiving water might be even higher than reported, as after passing the IBZ, the water infiltrates the riparian zone between the IBZ and the stream where nitrate can be further removed by denitrification or vegetation.
Thus, more studies on SBZ and IBZ are needed to critically assess their nutrient removal efficiency and the uncertainty related to the monitoring of the outlet.

Applicability in the farmed landscape

The five drainage mitigation measures can seamlessly be integrated into landscapes with existing drainage systems, but to optimise performance and cost efficiency their individual applicability to the landscape must be evaluated carefully. Each measure varies in size and capacity to intercept water, where the size relative to the catchment area decreases in the order of FWS > SBZ > IBZ > DBR. In particular, the size of the contributing catchment, slope and soil type determine how and where the measures can be implemented (Fig. 8). Flat landscapes (slope < 1%) are suitable for implementation of CD as a single control structure will affect a large area; however, as the technology advances it might soon be possible also to implement CD in sloping landscapes. In gently sloping terrains, FWS, DBR and SBZ fit as a hydraulic gradient is needed to move the water through the systems. The hydraulic gradient should preferably be at least 2–3% for FWS and DBR, while for SBZ the slope of the landscape should be around 2–8% (Tomer et al. 2017). In addition, in sloping landscapes, IBZ are suitable as a hydraulic gradient of at least 4% is required to move water through the pond and the infiltration zone (Fig. 8). In sloping areas, surface runoff is also more likely to occur, which can be intercepted by the IBZ (Zak et al. 2019).
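The slope guidance above can be expressed as a simple first-pass screening rule. The sketch below is an illustration only and rests on several assumptions that are not stated in the review: the lower bound of the 2–3% range is used as the threshold for FWS and DBR, the terrain slope is treated as a proxy for the available hydraulic gradient, and no upper slope limit is applied to FWS, DBR or IBZ. The function name `candidate_measures` is hypothetical, and catchment size and soil type, which also determine applicability, are not considered.

```python
def candidate_measures(slope_percent):
    """Return the measures whose slope guidance is met (screening only).

    Thresholds follow the text; how they are combined is an assumption.
    """
    candidates = []
    if slope_percent < 1.0:
        # Flat landscapes: a single control structure affects a large area.
        candidates.append("CD")
    if slope_percent >= 2.0:
        # Assumption: lower bound of the 2-3% guidance is used.
        candidates.extend(["FWS", "DBR"])
    if 2.0 <= slope_percent <= 8.0:
        candidates.append("SBZ")
    if slope_percent >= 4.0:
        candidates.append("IBZ")
    return candidates


for slope in (0.5, 1.5, 3.0, 5.0, 10.0):
    print(f"slope {slope}%: {candidate_measures(slope)}")
```

A screening of this kind could only narrow the options; the final choice would still depend on the size of the contributing catchment, soil type and the local drainage layout.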

Besides suitability to the landscape, implementation strategies are often guided by cost efficiency. Cost efficiency, including capital and operational cost of the drainage systems, has been calculated earlier (Christianson et al. 2012b; Jaynes and Isenhart 2019). However, the cost of preliminary examinations such as geological and soil investigations has often not been included even though it can constitute a substantial part of the budget, and it is therefore important to consider when selecting a mitigation measure. Cost efficiency is inherently country specific since, besides local costs such as land acquisition, it depends on national regulation and implementation strategies. For example, in Denmark, FWS can only be implemented at a certain location if the catchment area is larger than 20 ha and if it removes more than 300 kg N ha wetland−1 year−1, and other requirements, such as a soil clay content above 12%, also apply.
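As an illustration of how such national requirements could be screened, the sketch below encodes only the three Danish thresholds named above. It is not a representation of the full regulation, which contains further requirements; the class `FwsSite`, its field names and the function `failed_criteria` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FwsSite:
    """Hypothetical description of a candidate FWS location."""
    catchment_area_ha: float
    n_removal_kg_per_ha_wetland_yr: float
    soil_clay_percent: float


def failed_criteria(site):
    """List which of the three thresholds from the text are not met."""
    failures = []
    if not site.catchment_area_ha > 20.0:
        failures.append("catchment area must exceed 20 ha")
    if not site.n_removal_kg_per_ha_wetland_yr > 300.0:
        failures.append("N removal must exceed 300 kg N per ha wetland per year")
    if not site.soil_clay_percent > 12.0:
        failures.append("soil clay content must exceed 12%")
    return failures


# Hypothetical candidate site; the values are illustrative, not measured.
site = FwsSite(catchment_area_ha=35.0,
               n_removal_kg_per_ha_wetland_yr=250.0,
               soil_clay_percent=15.0)
print(failed_criteria(site))
```

Returning the list of unmet thresholds, rather than a single yes or no, makes it easier to see which requirement excludes a location.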

Current advances in ecosystem service provisioning

The selection, implementation and design of drainage mitigation measures should ideally maximise the supply of ecosystem services and minimise undesirable by-products. Thus, the management and design of mitigation measures should not solely focus on nutrient reduction, but also take into consideration potential negative by-products, as some of these can be minimised by location or design (Carstensen et al. 2019b). For instance, DRP release and methane emission have been reported from facilities experiencing nitrate limitation (Robertson and Merkley 2009; Shih et al. 2011), while other processes need further investigation (e.g. nitrous oxide emission, loss of dissolved organic carbon). Permanent removal and recycling of P require plant harvesting or sediment removal; another course of action may be to combine mitigation measures with a P filter (Canga et al. 2016; Christianson et al. 2017).

The possibilities of optimising ecosystem services and synergies with the surrounding landscapes where drainage mitigation measures are applied are manifold (Goeller et al. 2016), e.g. biodiversity, water storage, phytoremediation (Williams 2002) or provision of biomass (Zak et al. 2019). A current example of multiple ecosystem service provisioning is the combination of CD, sub-irrigation and reservoirs, which, according to Satchithanantham et al. (2014), can reduce the peak flow in spring and delay short-term water-related stress on crops in periods with less precipitation. In addition, sub-irrigation can increase crop yields (Wesström and Messing 2007; Jaynes 2012). According to our review, CD was combined with sub-irrigation at 14 out of 25 sites, while FWS were combined with a sedimentation pond at 6 of the 33 sites. A sedimentation pond is a simple supplement, which can increase the water storage capacity and give access to irrigation water and nutrients for recycling. Yet, the potential of mitigation measures for increasing the climate resilience of agricultural areas by retaining and storing more water in the landscape, thereby buffering hydrological peak events, needs to be investigated at catchment scale. Due to the potential for adaptation and synergies with the surrounding landscape, these systems are innovative opportunities in future bio-economies, as the measures can reduce nutrient losses while providing multiple ecosystem services, e.g. nutrient reuse, biomass production and biodiversity, if designed accordingly.

Perspective: Opportunities and challenges for implementation of mitigation measures at catchment scale

Effective implementation of drainage mitigation measures requires a holistic approach encompassing both ecosystem services and potential negative by-products, while simultaneously maintaining a catchment scale perspective (Hewett et al. 2020). This requires a catchment scale understanding of flow paths, taking into consideration all important transport paths influencing the quality of ground- and surface water (Goeller et al. 2016). Consequently, detailed information on local nutrient flow pathways and drainage systems is highly needed. It should also be emphasised that the mitigation measures discussed in this review only target drainage water, while other mitigation measures target the water before it leaves the root zone, such as cover crops (Beckwith et al. 1998), or further downstream, such as restored wetlands (Audet et al. 2014). Consequently, it is essential that the drainage mitigation measures complement, and do not compensate for, farm management practices producing high pesticide, N or P leaching that influences other flow paths such as groundwater or surface runoff. Choosing the most appropriate and avoiding incompatible mitigation measures requires collaboration between the different actors in the catchment to align the interests of all stakeholders (Hashemi and Kronvang 2020). To guide this decision process, we propose a further development of the sustainability index developed by Fenton et al. (2014), where weighting factors are assigned to relevant parameters. This index, serving as a tool for stakeholder involvement, could be expanded with more ecosystem services and cost effectiveness adapted to local conditions. Furthermore, application of a combination of mitigation measures may be more cost efficient than introducing only one option.
In correspondence with this, a study by Hashemi and Kronvang (2020) found that it may be more cost effective to use a combination of targeted mitigation measures rather than a single option for reduction of the nitrate loading to aquatic ecosystems.

In addition to considering the local geographical and climatic conditions for selection and application of drainage mitigation measures, integration with future changes in climate and land use must be considered. Climate change is predicted to cause more intense and frequent precipitation events and prolonged summer droughts in the investigated climate regions (Christensen et al. 2013). The envisaged increase in temperature might improve the performance of the drainage mitigation measures, even though the intense precipitation events will challenge their hydraulic capacities and, thereby, their performance, potentially changing the need for mitigation measures at catchment scale. Human modifications of land use, land and water management induced by, for instance, a green shift to a new bio-economy (Marttila et al. 2020) might entail further expansion and intensification of land uses such as agriculture and forestry, which will increase the demand for drainage and thereby the need for implementation of drainage mitigation measures to reduce the nutrient losses.

References

Audet, J., L. Martinsen, B. Hasler, H. De Jonge, E. Karydi, N.B. Ovesen, and B. Kronvang. 2014. Comparison of sampling methodologies for nutrient monitoring in streams: Uncertainties, costs and implications for mitigation. Hydrology and Earth System Sciences 18: 4721–4731.

Beckwith, C.P., J. Cooper, K.A. Smith, and M.A. Shepherd. 1998. Nitrate leaching loss following application of organic manures to sandy soils in arable cropping. Soil Use and Management 14: 123–130.

Bilotta, G.S., A.M. Milner, and I.L. Boyd. 2014. Quality assessment tools for evidence from environmental science. Environmental Evidence 3: 14.

Blowes, D.W., W.D. Robertson, C.J. Ptacek, and C. Merkley. 1994. Removal of agricultural nitrate from tile-drainage effluent water using in-line bioreactors. Journal of Contaminant Hydrology 15: 207–221.

Borenstein, M. 2009. Introduction to meta-analysis. Oxford: Wiley-Blackwell.

Canga, E., G.J. Heckrath, and C. Kjaergaard. 2016. Agricultural drainage filters. II. Phosphorus retention and release at different flow rates. Water, Air, & Soil Pollution 227: 276.

Carstensen, M.V., C.D. Børgesen, N.B. Ovesen, J.R. Poulsen, S.K. Hvid, and B. Kronvang. 2019a. Controlled drainage as a targeted mitigation measure for nitrogen and phosphorus. Journal of Environmental Quality 48: 677–685.

Carstensen, M.V., S.E. Larsen, C. Kjærgaard, and C.C. Hoffmann. 2019b. Reducing adverse side effects by seasonally lowering nitrate removal in subsurface flow constructed wetlands. Journal of Environmental Management 240: 190–197.

Christensen, J.H., K. Krishna Kumar, S.-I.A.E. Aldrian, M.D.C. Cavalcanti, W. Dong, P. Goswami, J.K.K. Hall, A. Kitoh, et al. 2013. Climate phenomena and their relevance for future regional climate change. Cambridge, New York: Cambridge University Press.

Christianson, L., A. Bhandari, M. Helmers, K. Kult, T. Sutphin, and R. Wolf. 2012a. Performance evaluation of four field-scale agricultural drainage denitrification bioreactors in Iowa. Transactions of the Asabe 55: 2163–2174.

Christianson, L.E., A. Bhandari, and M.J. Helmers. 2012b. A practice-oriented review of woodchip bioreactors for subsurface agricultural drainage. Applied Engineering in Agriculture 28: 861–874.

Christianson, L.E., C. Lepine, P.L. Sibrell, C. Penn, and S.T. Summerfelt. 2017. Denitrifying woodchip bioreactor and phosphorus filter pairing to minimize pollution swapping. Water Research 121: 129–139.

David, M.B., L.E. Gentry, R.A. Cooke, and S.M. Herbstritt. 2016. Temperature and substrate control woodchip bioreactor performance in reducing tile nitrate loads in East-Central Illinois. Journal of Environmental Quality 45: 822–829.

De Haan, J., J.R. Van Der Schoot, H. Verstegen, and O. Clevering. 2010. Removal of nitrogen leaching from vegetable crops in constructed wetlands. International Society for Horticultural Science (ISHS), Leuven, pp. 139–144.

Diaz, R.J., and R. Rosenberg. 2008. Spreading dead zones and consequences for marine ecosystems. Science 321: 926–929.

Drury, C.F., C.S. Tan, O. Gaynor, and T.O. Welacky. 1996. Influence of controlled drainage-subirrigation on surface and tile drainage nitrate loss. Journal of Environmental Quality 25: 317.

Drury, C.F., C.S. Tan, W.D. Reynolds, T.W. Welacky, T.O. Oloya, and J.D. Gaynor. 2009. Managing tile drainage, subirrigation, and nitrogen fertilization to enhance crop yields and reduce nitrate loss. Journal of Environmental Quality 38: 1193.

Drury, C.F., C.S. Tan, T.W. Welacky, W.D. Reynolds, T.Q. Zhang, T.O. Oloya, N.B. Mclaughlin, and J.D. Gaynor. 2014. Reducing nitrate loss in tile drainage water with cover crops and water-table management systems. Journal of Environmental Quality 43: 587.

Fenton, O., M.G. Healy, F. Brennan, M.M.R. Jahangir, G.J. Lanigan, K.G. Richards, S.F. Thornton, and T.G. Ibrahim. 2014. Permeable reactive interceptors: blocking diffuse nutrient and greenhouse gases losses in key areas of the farming landscape. The Journal of Agricultural Science 152: 71–81.

Fink, D.F., and W.J. Mitsch. 2004. Seasonal and storm event nutrient removal by a created wetland in an agricultural watershed. Ecological Engineering 23: 313–325.

Fleischer, S., A. Gustafson, A. Joelsson, J. Pansar, and L. Stibe. 1994. Nitrogen removal in created ponds. Ambio 23: 349–357.

Gilliam, J.W., R.W. Skaggs, and S.B. Weed. 1979. Drainage control to diminish nitrate loss from agricultural fields. Journal of Environmental Quality.

Goeller, B., C. Febria, J. Harding, and M. Ar. 2016. Thinking beyond the bioreactor box: Incorporating stream ecology into edge-of-field nitrate management. Journal of Environmental Quality 45: 151.

Groh, T.A., L.E. Gentry, and M.B. David. 2015. Nitrogen removal and greenhouse gas emissions from constructed wetlands receiving tile drainage water. Journal of Environmental Quality 44: 1001–1010.

Hashemi, F., and B. Kronvang. 2020. Multi-functional benefits from targeted set-aside land in a Danish catchment. Ambio. (This issue). https://doi.org/10.1007/s13280-020-01375-z.

Hassanpour, B., S. Giri, W.T. Pluer, T.S. Steenhuis, and L.D. Geohring. 2017. Seasonal performance of denitrifying bioreactors in the Northeastern United States: Field trials. Journal of Environmental Management 202: 242–253.

Heinrich, C. 2012. BalticCOMPASS. BalticCOMPASS Work Package 4 coordination meeting, 8–9 March.

Hewett, C.J.M., M.E. Wilkinson, J. Jonczyk, and P.F. Quinn. 2020. Catchment systems engineering: An holistic approach to catchment management. WIREs Water 7: e1417.

Higgins, J.P.T., D.G. Altman, P.C. Gøtzsche, P. Jüni, D. Moher, A.D. Oxman, J. Savović, K.F. Schulz, et al. 2011. The Cochrane Collaboration’s tool for assessing risk of bias in randomised trials. British Medical Journal.

Hoagland, C.R., L.E. Gentry, M.B. David, and D.A. Kovacic. 2001. Plant nutrient uptake and biomass accumulation in a constructed wetland. Journal of Freshwater Ecology 16: 527–540.

Hoffmann, C.C., S.E. Larsen, and C. Kjaergaard. 2019. Nitrogen removal in woodchip-based biofilters of variable designs treating agricultural drainage discharges. Journal of Environmental Quality 48: 1881–1889.

Jaynes, D.B. 2012. Changes in yield and nitrate losses from using water management in central Iowa, United States. Journal of Soil and Water Conservation 67: 485–494.

Jaynes, D.B., and T.M. Isenhart. 2014. Reconnecting tile drainage to riparian buffer hydrology for enhanced nitrate removal. Journal of Environmental Quality 43: 631–638.

Jaynes, D.B., and T.M. Isenhart. 2019. Performance of saturated riparian buffers in Iowa, USA. Journal of Environmental Quality 48: 289–296.

Johannesson, K.M., K.S. Tonderski, P.M. Ehde, and S.E.B. Weisner. 2017. Temporal phosphorus dynamics affecting retention estimates in agricultural constructed wetlands. Ecological Engineering 103: 436–445.

Kadlec, R.H., and R.L. Knight. 1996. Treatment wetlands. London: Taylor & Francis Group.

Knowles, R. 1982. Denitrification. Microbiological Reviews 46: 43–70.

Koskiaho, J., P. Ekholm, M. Raty, J. Riihimaki, and M. Puustinen. 2003. Retaining agricultural nutrients in constructed wetlands: experiences under boreal conditions. Ecological Engineering 20: 89–103.

Kovacic, D.A., M.B. David, L.E. Gentry, K.M. Starks, and R.A. Cooke. 2000. Effectiveness of constructed wetlands in reducing nitrogen and phosphorus export from agricultural tile drainage. Journal of Environmental Quality 29: 1262–1274.

Kronvang, B., E. Jeppesen, D.J. Conley, M. Søndergaard, S.E. Larsen, N.B. Ovesen, and J. Carstensen. 2005. Nutrient pressures and ecological responses to nutrient loading reductions in Danish streams, lakes and coastal waters. Journal of Hydrology 304: 274–288.

Kynkaanniemi, P., B. Ulen, G. Torstensson, and K.S. Tonderski. 2013. Phosphorus retention in a newly constructed wetland receiving agricultural tile drainage water. Journal of Environmental Quality 42: 596–605.

Lalonde, V., C.A. Madramootoo, L. Trenholm, and R.S. Broughton. 1996. Effects of controlled drainage on nitrate concentrations in subsurface drain discharge. Agricultural Water Management 29: 187–199.

Land, M., J.T.A. Verhoeven, W.J. Mitsch, W. Grannli, A. Grimvall, C.C. Hoffmann, and K.S. Tonderski. 2016. How effective are created or restored freshwater wetlands for nitrogen and phosphorus removal? A systematic review. Environmental Evidence.

Landbrugsstyrelsen. 2019. Minivådområde-ordningen 2019. Etablering af åbne minivådområder og minivådområder med filter matrice.

Marttila, H., A. Lepistö, A. Tolvanen, M. Bechmann, K. Kyllmar, A. Juutinen, H. Wenng, E. Skarbøvik, et al. 2020. Potential impacts of a future Nordic bioeconomy on surface water quality. Ambio. (This issue). https://doi.org/10.1007/s13280-020-01355-3.

Mendes, L.R.D., K. Tonderski, B.V. Iversen, and C. Kjaergaard. 2018. Phosphorus retention in surface-flow constructed wetlands targeting agricultural drainage water. Ecological Engineering 120: 94–103.

Miller, P.S., J.K. Mitchell, R.A. Cooke, and B.A. Engel. 2002. A wetland to improve agricultural subsurface drainage water quality. Transactions of the Asae 45: 1305–1317.

Mitsch, W., and S.E. Jørgensen. 1989. Ecological engineering: An introduction to ecotechnology. New York: Springer.

Mitsch, W.J., J.W. Day, J.W. Gilliam, P.M. Groffman, D.L. Hey, G.W. Randall, and N. Wang. 2001. Reducing nitrogen loading to the Gulf of Mexico from the Mississippi River Basin: Strategies to counter a persistent ecological problem: Ecotechnology—the use of natural ecosystems to solve environmental problems—should be a part of efforts to shrink the zone of hypoxia in the Gulf of Mexico. BioScience 51: 373–388.

Ng, H.Y.F., C.S. Tan, C.F. Drury, and J.D. Gaynor. 2002. Controlled drainage and subirrigation influences tile nitrate loss and corn yields in a sandy loam soil in Southwestern Ontario. Agriculture, Ecosystems & Environment 90: 81–88.

O’Geen, A.T., R. Budd, J. Gan, J.J. Maynard, S.J. Parikh, and R.A. Dahlgren. 2010. Mitigating nonpoint source pollution in agriculture with constructed and restored wetlands. Advances in Agronomy 108: 1.

Ockenden, M.C., C. Deasy, J.N. Quinton, A.P. Bailey, B. Surridge, and C. Stoate. 2012. Evaluation of field wetlands for mitigation of diffuse pollution from agriculture: Sediment retention, cost and effectiveness. Environmental Science & Policy 24: 110–119.

Poe, A.C., M.F. Piehler, S.P. Thompson, and H.W. Paerl. 2003. Denitrification in a constructed wetland receiving agricultural runoff. Wetlands 23: 817–826.

R Core Team. 2019. R: A language and environment for statistical computing. Vienna: R Core Team.

Rakovic, J., M.N. Futter, K. Kyllmar, K. Rankinen, M.I. Stutter, J. Vermaat, and D. Collentine. 2020. Nordic Bioeconomy Pathways: Future narratives for assessment of water-related ecosystem services in agricultural and forest management. Ambio. (This issue). https://doi.org/10.1007/s13280-020-01389-7.

Reinhardt, M., R. Gachter, B. Wehrli, and B. Muller. 2005. Phosphorus retention in small constructed wetlands treating agricultural drainage water. Journal of Environmental Quality 34: 1251–1259.

Reinhardt, M., B. Muller, R. Gachter, and B. Wehrli. 2006. Nitrogen removal in a small constructed wetland: An isotope mass balance approach. Environmental Science and Technology 40: 3313–3319.

Robertson, W.D., and L.C. Merkley. 2009. In-stream bioreactor for agricultural nitrate treatment. Journal of Environmental Quality 38: 230–237.

Ross, J.A., M.E. Herbert, S.P. Sowa, J.R. Frankenberger, K.W. King, S.F. Christopher, J.L. Tank, J.G. Arnold, et al. 2016. A synthesis and comparative evaluation of factors influencing the effectiveness of drainage water management. Agricultural Water Management 178: 366–376.

Rozemeijer, J.C., A. Visser, W. Borren, M. Winegram, Y. Van Der Velde, J. Klein, and H.P. Broers. 2015. High frequency monitoring of water fluxes and nutrient loads to assess the effects of controlled drainage on water storage and nutrient transport. Hydrology and Earth System Sciences 20: 347–358.

Satchithanantham, S., R.S. Ranjan, and P. Bullock. 2014. Protecting water quality using controlled drainage as an agricultural BMP for potato production. Transactions of the Asabe 57: 815–826.

Schott, L., A. Lagzdins, A. Daigh, K. Craft, C. Pederson, G. Brenneman, and M. Helmers. 2017. Drainage water management effects over five years on water tables, drainage, and yields in southeast Iowa. Journal of Soil and Water Conservation 72: 251.

Schwarzer, G. 2019. General package for meta-analysis.

Shih, R., W.D. Robertson, S.L. Schiff, and D.L. Rudolph. 2011. Nitrate controls methyl mercury production in a streambed bioreactor. Journal of Environmental Quality 40: 1586.

Skaggs, R.W., N.R. Fausey, and R.O. Evans. 2012. Drainage water management. Journal of Soil and Water Conservation 67.

Skaggs, R.W., and J. Van Schilfgaarde. 1999. Agricultural drainage. Madison, WI: American Society of Agronomy, Crop Science Society of America, Soil Science Society of America.

Søvik, A.K., and P.T. Mørkved. 2008. Use of stable nitrogen isotope fractionation to estimate denitrification in small constructed wetlands treating agricultural runoff. Science of the Total Environment 392: 157–165.

Steffen, W., K. Richardson, J. Rockström, S.E. Cornell, I. Fetzer, E.M. Bennett, R. Biggs, S.R. Carpenter, et al. 2015. Planetary boundaries: guiding human development on a changing planet. Science 347: 1259855.

Sunohara, M.D., E. Craiovan, E. Topp, N. Gottschall, C.F. Drury, and D.R. Lapen. 2014. Comprehensive nitrogen budgets for controlled tile drainage fields in Eastern Ontario, Canada. Journal of Environmental Quality 43: 617.

Tan, C.S., and T.Q. Zhang. 2011. Surface runoff and sub-surface drainage phosphorus losses under regular free drainage and controlled drainage with sub-irrigation systems in southern Ontario. Canadian Journal of Soil Science 91: 349–359.

Tanner, C.C., and J.P.S. Sukias. 2011. Multiyear nutrient removal performance of three constructed wetlands intercepting tile drain flows from grazed pastures. Journal of Environmental Quality 40: 620–633.

Tanner, C.C., J.P.S. Sukias, and C.R. Yates. 2010. New Zealand guidelines for constructed wetland treatment of tile drainage.

Tomer, M.D., D.B. Jaynes, S.A. Porter, D.E. James, and T.M. Isenhart. 2017. Identifying riparian zones best suited to installation of saturated buffers: A preliminary multi-watershed assessment. In Precision conservation: geospatial techniques for agricultural and natural resources conservation, ed. J. Delgado, G. Sassenrath, and T. Mueller, 83–93. Madison, WI: American Society of Agronomy and Crop Science Society of America Inc.

Tonderski, K.S., B. Arheimer, and C.B. Pers. 2005. Modeling the impact of potential wetlands on phosphorus retention in a Swedish catchment. Ambio 34: 544–551.

Tournebize, J., C. Chaumont, C. Fesneau, A. Guenne, B. Vincent, J. Garnier, and U. Mander. 2015. Long-term nitrate removal in a buffering pond-reservoir system receiving water from an agricultural drained catchment. Ecological Engineering 80: 32–45.

Van’t Veen, S.G.W., B. Kronvang, D. Zak, N. Ovesen, and H. Jensen. 2019. Intelligente bufferzoner - Notat fra DCE. Nationalt Center for Miljø og Energi.

Vymazal, J. 2017. The use of constructed wetlands for nitrogen removal from agricultural drainage: A review. Scientia Agriculturae Bohemica 48: 82–91.

Wesström, I., A. Joel, and I. Messing. 2014. Controlled drainage and subirrigation: A water management option to reduce non-point source pollution from agricultural land. Agriculture, Ecosystems & Environment 198: 74–82.

Wesström, I., and I. Messing. 2007. Effects of controlled drainage on N and P losses and N dynamics in a loamy sand with spring crops. Agricultural Water Management 87: 229–240.

Willardson, L.S., B. Grass, G.L. Dickey, and J.W. Bailey. 1970. Drain installation for nitrate reduction. Environment Science Technology 31: 2229–2236.

Williams, J.B. 2002. Phytoremediation in wetland ecosystems: progress, problems, and potential. Critical Reviews in Plant Sciences 21: 607–635.

Williams, M.R., K.W. King, and N.R. Fausey. 2015. Drainage water management effects on tile discharge and water quality. Agricultural Water Management 148: 43–51.

Woli, K.P., M.B. David, R.A. Cooke, G.F. Mcisaac, and C.A. Mitchell. 2010. Nitrogen balance in and export from agricultural fields associated with controlled drainage systems and denitrifying bioreactors. Ecological Engineering 36: 1558–1566.

Zak, D., B. Kronvang, M.V. Carstensen, C.C. Hoffmann, A. Kjeldgaard, S.E. Larsen, J. Audet, S. Egemose, et al. 2018. Nitrogen and phosphorus removal from agricultural runoff in integrated buffer zones. Environmental Science and Technology 52: 6508–6517.

Zak, D., M. Stutter, H.S. Jensen, S. Egemose, M.V. Carstensen, J. Audet, J.A. Strand, P. Feuerbach, C.C. Hoffmann, et al. 2019. An assessment of the multifunctionality of integrated buffer zones in Northwestern Europe. Journal of Environmental Quality 48: 362–375.

Zhang, T.Q., C.S. Tan, Z.M. Zheng, T.W. Welacky, and W.D. Reynolds. 2015. Impacts of soil conditioners and water table management on phosphorus loss in tile drainage from a clay loam soil. Journal of Environmental Quality 44: 572–584.

Acknowledgement

This paper is a contribution from the Nordic Centre of Excellence BIOWATER, which is funded by Nordforsk under Project Number 82263. We thank Tinna Christensen for graphical design and Anne Mette Poulsen for language assistance.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Carstensen, M.V., Hashemi, F., Hoffmann, C.C. et al. Efficiency of mitigation measures targeting nutrient losses from agricultural drainage systems: A review. Ambio 49, 1820–1837 (2020). https://doi.org/10.1007/s13280-020-01345-5

DOI: https://doi.org/10.1007/s13280-020-01345-5