Abstract

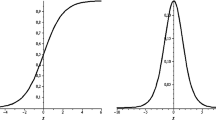

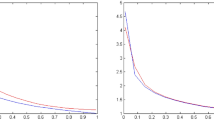

We study the approximation properties of Cardaliaguet-Euvrard type neural network operators. We first modify the operators to obtain uniform convergence. We then use regular summability matrix methods in the approximation by means of these operators, obtaining more general results than the classical ones. We also present some examples and graphical illustrations that support our approximation results by neural network operators. At the end of the paper, we extend the theory to the multivariate case.
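As an illustration only, the Python sketch below evaluates the classical (unmodified) Cardaliaguet-Euvrard operator in the form used by Anastassiou (1997), \(F_n(f)(x)=\sum_{k=-n^2}^{n^2} \frac{f(k/n)}{I\,n^{\alpha}}\, b\big(n^{1-\alpha}(x-k/n)\big)\) with \(0<\alpha<1\) and \(I=\int b\). It then applies a Cesàro \((C,1)\) mean to the sequence \((F_n f)\), which is one standard example of a regular summability matrix. The sketch does not reproduce the paper's modified operators or the particular matrices it studies. The following are all assumptions made for the example: the hat function \(b(t)=\max(0,1-\lvert t\rvert)\), the choice \(\alpha=1/2\), and the helper names `hat`, `F` and `cesaro_F`.

```python
import math


def hat(t):
    # Assumed bell-shaped function: b(t) = max(0, 1 - |t|),
    # supported on [-1, 1] with integral I = 1.
    return max(0.0, 1.0 - abs(t))


def F(f, x, n, alpha=0.5, b=hat, I=1.0, T=1.0):
    """Classical operator F_n(f)(x) = sum_{k=-n^2}^{n^2} f(k/n) b(n^(1-alpha)(x - k/n)) / (I n^alpha).

    b is assumed to vanish outside [-T, T], and 0 < alpha < 1.
    """
    scale = n ** (1.0 - alpha)
    # Only nodes k/n with |x - k/n| <= T / scale can give a nonzero term,
    # so the sum over -n^2..n^2 is restricted to that window.
    k_lo = max(-n * n, math.ceil(n * (x - T / scale)))
    k_hi = min(n * n, math.floor(n * (x + T / scale)))
    total = sum(f(k / n) * b(scale * (x - k / n)) for k in range(k_lo, k_hi + 1))
    return total / (I * n ** alpha)


def cesaro_F(f, x, m, alpha=0.5):
    """Cesàro (C,1) summability process: (1/m) * sum_{n=1}^{m} F_n(f)(x).

    The matrix a_{mn} = 1/m for 1 <= n <= m (0 otherwise) is regular;
    it is an assumed example, not necessarily a matrix used in the paper.
    """
    return sum(F(f, x, n, alpha) for n in range(1, m + 1)) / m


if __name__ == "__main__":
    x = 0.3
    for n in (10, 100, 1000):
        print(n, abs(F(math.cos, x, n) - math.cos(x)))
    print("Cesaro mean, m = 200:", abs(cesaro_F(math.cos, x, 200) - math.cos(x)))
```

Running the script prints the pointwise errors for a few values of \(n\) and for the Cesàro mean. Because \(b\) vanishes outside its support, each evaluation touches only about \(2n^{\alpha}\) nodes rather than all \(2n^2+1\).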

References

Anastassiou, G.A.: Rate of convergence of some neural network operators to the unit-univariate case. J. Math. Anal. Appl. 212, 237–262 (1997)

Anastassiou, G.A.: Rate of convergence of some multivariate neural network operators to the unit. Comput. Math. Appl. 40(1), 1–19 (2000)

Anastassiou, G.A.: Multivariate sigmoidal neural network approximation. Neural Netw. 24(4), 378–386 (2011)

Anastassiou, G.A.: Multivariate hyperbolic tangent neural network approximation. Comput. Math. Appl. 61(4), 809–821 (2011)

Anastassiou, G.A.: Rate of convergence of some neural network operators to the unit-univariate case, revisited. Mat. Vesnik 65(4), 511–518 (2013)

Anastassiou, G.A.: Rate of convergence of some multivariate neural network operators to the unit, revisited. J. Comput. Anal. Appl. 15(7), 1300–1309 (2013)

Anastassiou, G.A.: Approximation by interpolating neural network operators. Neural Parallel Sci. Comput. 23(1), 1–62 (2015)

Aslan, I., Duman, O.: A summability process on Baskakov-type approximation. Period. Math. Hungar. 72(2), 186–199 (2016)

Aslan, I., Duman, O.: Summability on Mellin-type nonlinear integral operators. Integral Transforms Spec. Funct. 30(6), 492–511 (2019)

Aslan, I., Duman, O.: Approximation by nonlinear integral operators via summability process. Math. Nachr. 293(3), 430–448 (2020)

Atlihan, O.G., Orhan, C.: Summation process of positive linear operators. Comput. Math. Appl. 56(5), 1188–1195 (2008)

Boos, J.: Classical and Modern Methods in Summability. Oxford University Press, Oxford (2000)

Cao, F., Chen, Z.: Scattered data approximation by neural networks operators. Neurocomputing 190, 237–242 (2016)

Cao, F., Xie, T., Xu, Z.: The estimate for approximation error of neural networks: a constructive approach. Neurocomputing 71(4), 626–630 (2008)

Cao, F., Zhang, Y., He, Z.-R.: Interpolation and rates of convergence for a class of neural networks. Appl. Math. Model. 33(3), 1441–1456 (2009)

Cardaliaguet, P., Euvrard, G.: Approximation of a function and its derivative with a neural network. Neural Netw. 5(2), 207–220 (1992)

Cheang, G.H.L.: Approximation with neural networks activated by ramp sigmoids. J. Approx. Theory 162, 1450–1465 (2010)

Chen, Z., Cao, F.: The approximation operators with sigmoidal functions. Comput. Math. Appl. 58(4), 758–765 (2009)

Chen, Z., Cao, F.: The construction and approximation of neural networks operators with Gaussian activation function. Math. Commun. 18(1), 185–207 (2013)

Costarelli, D., Spigler, R.: Multivariate neural network operators with sigmoidal activation functions. Neural Netw. 48, 72–77 (2013)

Costarelli, D., Spigler, R.: Approximation results for neural network operators activated by sigmoidal functions. Neural Netw. 44, 101–106 (2013)

Costarelli, D., Spigler, R.: Convergence of a family of neural network operators of the Kantorovich type. J. Approx. Theory 185, 80–90 (2014)

Costarelli, D., Vinti, G.: Max-product neural network and quasi-interpolation operators activated by sigmoidal functions. J. Approx. Theory 209, 1–22 (2016)

Costarelli, D., Vinti, G.: Convergence for a family of neural network operators in Orlicz spaces. Math. Nachr. 290(2–3), 226–235 (2017)

Costarelli, D., Vinti, G.: Estimates for the neural network operators of the max-product type with continuous and \(p\)-integrable functions. Results Math. 73(1), 10 (2018)

Costarelli, D., Vinti, G.: Saturation classes for max-product neural network operators activated by sigmoidal functions. Results Math. 72(3), 1555–1569 (2017)

Costarelli, D., Vinti, G.: Convergence results for a family of Kantorovich max-product neural network operators in a multivariate setting. Math. Slovaca 67(6), 1469–1480 (2017)

Cybenko, G.: Approximation by superpositions of a sigmoidal function. Math. Control Signals Syst. 2(4), 303–314 (1989)

Duman, O.: Summability process by Mastroianni operators and their generalizations. Mediterr. J. Math. 12(1), 21–35 (2015)

Gal, S.G.: Approximation by max-product type nonlinear operators. Stud. Univ. Babeş-Bolyai Math. 56(2), 341–352 (2011)

Gokcer, T.Y., Duman, O.: Approximation by max-min operators: a general theory and its applications. Fuzzy Sets Syst. (2019). https://doi.org/10.1016/j.fss.2019.11.007

Gripenberg, G.: Approximation by neural networks with a bounded number of nodes at each level. J. Approx. Theory 122(2), 260–266 (2003)

Hardy, G.H.: Divergent Series. Clarendon Press, Oxford (1949)

Keagy, T.A., Ford, W.F.: Acceleration by subsequence transformations. Pacific J. Math. 132(2), 357–362 (1988)

Lorentz, G.G., Zeller, K.: Strong and ordinary summability. Tohoku Math. J. Second Ser. 15(4), 315–321 (1963)

Mhaskar, H.N.: Approximation theory and neural networks. In: Wavelets and Allied Topics, pp. 247–289. Narosa, New Delhi (2001)

Mhaskar, H.N., Micchelli, C.A.: Degree of approximation by neural and translation networks with a single hidden layer. Adv. Appl. Math. 16, 151–183 (1995)

Mohapatra, R.N.: Quantitative results on almost convergence of a sequence of positive linear operators. J. Approx. Theory 20, 239–250 (1977)

Makovoz, Y.: Uniform approximation by neural networks. J. Approx. Theory 95(2), 21–228 (1998)

Sakaoğlu, I., Orhan, C.: Strong summation process in \(L_{p}\)-spaces. Nonlinear Anal. 86, 89–94 (2013)

Silverman, L.L.: On the definition of the sum of a divergent series. University of Missouri Studies, Math. Ser. I, 1–96 (1913)

Smith, D.A., Ford, W.F.: Acceleration of linear and logarithmic convergence. SIAM J. Numer. Anal. 16(2), 223–240 (1979)

Swetits, J.J.: On summability and positive linear operators. J. Approx. Theory 25, 186–188 (1979)

Toeplitz, O.: Über allgemeine lineare Mittelbildungen. Prace Mat.-Fiz. 22, 113–118 (1911)

Vanderbei, R.J.: Uniform Continuity is Almost Lipschitz Continuity, Technical Report SOR-91-11. Statistics and Operations Research Series. Princeton University, Princeton (1991)

Wimp, J.: Sequence Transformations and Their Applications. Academic Press, New York (1981)

Yu, D.S.: Approximation by neural networks with sigmoidal functions. Acta Math. Sin. (Engl. Ser.) 29(10), 2013–2026 (2013)

About this article

Cite this article

Turkun, C., Duman, O. Modified neural network operators and their convergence properties with summability methods. RACSAM 114, 132 (2020). https://doi.org/10.1007/s13398-020-00860-0

DOI: https://doi.org/10.1007/s13398-020-00860-0

Keywords

- Neural network operators

- Uniform approximation

- Bell-shaped function

- Summability methods

- Strong summability