Abstract

Unexpected risk events in drinking water systems, such as heavy rain or manure spill accidents, can cause waterborne outbreaks of gastrointestinal disease. Using a scenario-based approach, these unexpected risk events were included in a risk-based decision model aimed at evaluating risk reduction alternatives. The decision model combined quantitative microbial risk assessment and cost–benefit analysis and investigated four risk reduction alternatives. Two drinking water systems were compared using the same set of risk reduction alternatives to illustrate the effect of unexpected risk events. The first drinking water system had a high pathogen base load and a high pathogen log10 reduction in the treatment plant, whereas the second drinking water system had a low pathogen base load and a low pathogen log10 reduction in the treatment plant. Four risk reduction alternatives were evaluated on their social profitability: (A1) installation of pumps and back-up power supply, to remove combined sewer overflows; (A2) installation of UV treatment in the drinking water treatment plant; (A3) connection of 25% of the on-site wastewater treatment systems (OWTSs) in the catchment area to the wastewater treatment plant (WWTP); and (A4) a combination of A1–A3. Including the unexpected risk events changed both the probability of a positive net present value for the analysed alternatives and which alternative is likely to have the highest net present value. The magnitude of the effect of unexpected risk events is dependent on the local preconditions in the drinking water system. For the first drinking water system, the unexpected risk events increase risk to a lesser extent compared to the second drinking water system. The main conclusion was that it is important to include unexpected risk events in decision models for evaluating microbial risk reduction, especially in a drinking water system with a low base load and a low pathogen log10 reduction in the drinking water treatment plant.

Introduction

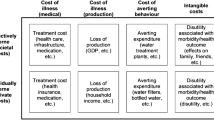

Contaminated drinking water can cause waterborne infections (Guzman-Herrador et al. 2015) and result in a considerable cost to society due to health effects and related monetary effects (Lindberg et al. 2011). Microbial risks in drinking water systems are mainly related to gastrointestinal disease resulting from infection by various pathogens (e.g. Campylobacter, Salmonella, EHEC, norovirus, rotavirus, Cryptosporidium and Giardia) (WHO 2016). Microbial risks in drinking water systems can be divided into two main components (Assmuth et al. 2016). Firstly, a base microbial risk level caused by pathogen sources with a predictable contribution and a continuous or reoccurring temporal distribution (wastewater discharge, annual manure spreading, etc.). Secondly, microbial risks related to unexpected risk events occurring with an uneven, less predictable temporal distribution and with typically short and varying durations (spill accidents with faecal matter containing pathogens, extreme weather events, combined sewer overflows, technical system failures, etc.).

To describe the total risk, a set of events must be identified to describe possible scenarios that may occur and cause problems. In Fig. 1, the base load (UR0) represents the base risk level that occurs on an annual basis. UR1, UR2…URn represent unexpected risk events occurring irregularly and occasionally. These unexpected risk events are part of the total risk in a drinking water system. In drinking water distribution systems, unexpected risk events have been investigated to a certain extent (e.g. Viñas et al. 2018), but there has been less focus on the drinking water catchments and the adoption of a holistic approach, which would include all parts of a drinking water system. The use of a risk graph to illustrate the total risk has been described, including examples such as nuclear power and dam failures (Ale et al. 2015), but not in this type of drinking water setting. In the risk graph in Fig. 1, the area under the curve illustrates the total risk. Risk reduction alternatives aim to reduce the total risk, i.e. the graph area, either by reducing the base load, reducing the unexpected risk events, or reducing both. The graph shape varies and is dependent on the types of unexpected risks in the system.

The total microbial risk level in drinking water systems is thus affected by unexpected risk events. Despite this, drinking water producers in Sweden commonly perform risk analysis based solely on the average base load (UR0) or adopt a worst-case scenario with the highest risk (URn) to serve as a dimensioning event. In the first case, the risk level may be underestimated, while in the second case the risk level may be overestimated. To supply safe water to drinking water consumers, microbial risks in drinking water systems need to be managed and, depending on the system, risk reduction measures may need to be implemented. However, implementing these measures is costly and available resources must be used efficiently. It may thus be of great importance to consider unexpected risk events to provide holistic decision support.

Earlier studies have set up models for evaluating the microbial risks in drinking water systems (e.g. Bartram et al. 2009; Bergion et al. 2017; Schijven et al. 2011, 2013, 2015) and related economic effects (e.g. Baffoe-Bonnie et al. 2008; Bergion et al. 2018b; Juntunen et al. 2017). In general, it can be concluded that unexpected risk events have a short duration, hence the importance of analysing the risk using an appropriate time resolution. In addition, unexpected risk events related to climate change are likely to increase in the future, highlighting the importance of accounting for future changes in risk and decision models (Schijven et al. 2013). Accounting for both the base risk and unexpected risk events has been identified as important for integrated water management on a qualitative level (Assmuth et al. 2016), although to our knowledge there is no detailed quantitative description available of how to perform this. On the other hand, looking only at the risk assessment and omitting any decision analysis, Westrell et al. (2003) reported that the microbial base risk caused the majority of annual infections. Risk management will be dependent on thorough identification of relevant events to be included. Furthermore, this inclusion of unexpected risk events facilitates analyses aimed at investigating whether dimensioning risks are part of the base load or part of the unexpected risk events.

The main contribution and novelty of this study was to enable inclusion of unexpected risk events in the decision analysis for prioritising microbial risk reduction in drinking water systems using a scenario-based approach. An existing risk-based decision model was used as a starting point for this work (Bergion et al. 2018b). To achieve the aim, further features were added to the decision model:

- The risk was calculated on a daily basis, to take account of variation in the daily risk level and the occurrence of unexpected risk events.
- Hydrological modelling was added to the already applied hydrodynamic model to simulate the fate and transport of pathogens in the catchment.
- Unexpected risk events were included using a scenario-based approach.

To illustrate the expanded decision model, it was applied to two drinking water systems with distinctly different characteristics. The comparison facilitated the investigation of unexpected risk events and their impact on drinking water systems with different preconditions.

Methods

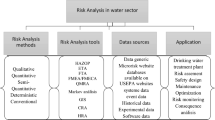

The risk-based decision model (Bergion et al. 2018b) is based on quantitative microbial risk assessment (QMRA), including source characterisation, hydrological modelling, hydrodynamic modelling, groundwater modelling and dose–response models. Hydrological modelling describes the processes within the catchment and contribution from land sources to the lake, hydrodynamic modelling estimates the pathogen fate and transport within the lake, and groundwater modelling estimates the pathogen removal in the artificial groundwater recharge. The hydrodynamic modelling and the groundwater modelling were used to estimate the log10 pathogen removal in the lake and in the artificial groundwater recharge, respectively. The decision model is also based on cost–benefit analysis (CBA) to enable evaluation of risk reduction alternatives in order to determine the alternative that is most profitable for society. In this study, risks were calculated using an updated version of the decision model (Bergion et al. 2018b) and the risk was expressed in terms of probability of infection, lost quality-adjusted life years (QALYs) and the number of infections. The risk cost was calculated using a unit value per lost QALY and a unit value per infection. Details on the decision model and related assumptions can be found in (Bergion et al. 2018b) and in the supplementary material, Table S1. A schematic illustration of the decision model is presented in Fig. 2.

Two drinking water systems were investigated. For the first system, settings for the Vomb drinking water system (Vomb DWS) were used, as described in Bergion et al. (2018b). The second system (Alt. DWS) was based on the Vomb DWS, although the artificial groundwater recharge was replaced by a treatment step resulting in much lower pathogen removal. Additionally, the Alternative Lake Vomb was assumed to provide less pathogen removal compared to Lake Vomb. It was also assumed that the base load for the alternative system was 1% of the base load of the Vomb DWS. The Alt. DWS was set up to be representative for many drinking water systems in Sweden in terms of the treatment steps and the resulting log10 removal of pathogens.

Microbial risks included in the base load (UR0) were wastewater discharges and combined sewer overflows (CSOs) from on-site wastewater treatment systems (OWTSs) and wastewater treatment plants (WWTPs), manure application on farmland and grazing farm animals. The OWTSs and WWTPs were added as point sources to the tributary discharges into the lake. The manure application and grazing farm animals were added using the SWAT (Soil and Water Assessment Tool) hydrological model set up for the catchment. Note that wild animals were not accounted for in the model. Also included were unexpected risks from a precipitation event with a 10-year return period (UR1), emergency CSOs (ECSO) (UR2) and an accident resulting in a large manure discharge directly into the lake (UR3).

The decision model was illustrated by investigating four risk reduction alternatives: (A1) installation of pumps and a back-up power supply to remove CSOs and ECSOs; (A2) installation of UV treatment in the drinking water treatment plant (DWTP); (A3) connection of 25% of the OWTSs in the catchment to the WWTP; and (A4) a combination of A1–A3. The risk reduction alternatives were compared to a reference alternative (A0), where no risk reduction measures were implemented.

By defining uncertainty distributions for the input data, Monte Carlo simulations (@RISK version 7.6.0) could be used to present the uncertainties in the results as well. The risk model, including the added model features, is presented below.

Quantitative Microbial Risk Assessment

Source Characterisation

The daily average pathogen (p) concentration in OWTSs (cOWTS,i,p) (#/L) contributing to the pathogen count in tributary i was calculated as in Bergion et al. (2018b) using epidemiological statistics. Details can be found in the supplementary material.

The contribution of WWTP discharge (cWWTP,i,p) (#/L) to the pathogen concentration in tributary i was calculated as:

where cww,p (#/L) was the literature value of pathogen (p) concentration in wastewater, RWWTP,p (log10 removal) was the pathogen removal in the WWTP reported in the literature (Supplementary Table S1), Qi was the average daily flow in tributary i, and QWWTP (L/Day) was the average daily wastewater production. The cww,p was approximated to a log normal distribution with µ/σ LN(17200/13200) for Campylobacter (Stampi et al. 1993) and LN(3947233/190.7) for norovirus, respectively. For Cryptosporidium, the cww,p was set at a point value of 103.4, estimated by the authors based on observed oocyst concentrations during the Östersund waterborne outbreak in 2010 (PHAS 2011).

Based on Ottoson et al. (2006), the pathogen removal in the WWTP was included using a uniform distribution with min/max of 2/3, 0.5/1.5 and 0.5/2.5 for Campylobacter, norovirus and Cryptosporidium, respectively.

The contribution by CSOs (cCSO,i,p) (#/L) to the tributary pathogen concentration was estimated using the same literature values as for WWTP discharge, though it was assumed the CSOs would not undergo any treatment:

where QCSO (L/Day) was the volume of wastewater discharged from the CSO. Based on CSO frequencies and volumes for the last five years, it was assumed that one CSO with a volume of 139,500 L occurred each year. The timing of the CSO was assumed to be during the summer months, coinciding with the grazing period but not with manure application. This was assumed since standard practice is to avoid manure application in temporal proximity to heavy rain due to the loss of nutrients and the fact that the ground cannot cope with heavy vehicles.
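As a minimal sketch of how these point-source contributions could be computed, the snippet below assumes simple dilution of the discharged pathogen load in the daily tributary flow, with a log10 removal of zero for an untreated CSO. The dilution form, the function name and the example flows are assumptions made for illustration; only the wastewater concentration of 17,200 #/L and the CSO volume of 139,500 L are taken from the text.

```python
def point_source_concentration(c_ww, q_source, q_tributary, log10_removal=0.0):
    """Pathogen concentration (#/L) added to a tributary by a wastewater source.

    Assumes simple dilution: the discharged load (c_ww * q_source) is reduced
    by the log10 removal and mixed into the tributary's daily flow.
    """
    if q_tributary <= 0:
        raise ValueError("tributary flow must be positive")
    return c_ww * 10.0 ** (-log10_removal) * q_source / q_tributary


# Hypothetical flows: 1e6 L/day of wastewater, 1e8 L/day in the tributary.
# WWTP discharge with an assumed 2 log10 removal.
c_wwtp = point_source_concentration(17200, 1e6, 1e8, log10_removal=2.0)

# Untreated CSO of 139,500 L discharged on one day into the same tributary.
c_cso = point_source_concentration(17200, 139_500, 1e8)
```

In a Monte Carlo setting, `c_ww` and `log10_removal` would be drawn from their uncertainty distributions on each iteration instead of being fixed.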

Hydrological Modelling

The contribution of grazing activities and manure application to the pathogen concentrations in the tributaries was estimated using the Soil and Water Assessment Tool (SWAT) (Nietsch et al. 2011), which was set up for the Vomb catchment. Only Cryptosporidium was considered for these two pathogen sources. The model included transport from land sources to water and die-off processes during land transport and in the watercourse. The number of farm animals, comprising dairy cows (5279), calves (6967), heifers and steers 1–2 years (7416) and heifers > 2 years (3257), was based on reported statistics for the municipality of Sjöbo for 2016 (Swedish Board of Agriculture 2019). The amount of manure produced during indoor periods, and later applied to farmland during manure application, was calculated in the manner presented in Bergion et al. (2017). Manure was applied to fields at 1591, 308, 411 and 411 kg per hectare on the 15th of April, June, August and October each year, respectively, with proportions based on Statistics Sweden (2012). It was assumed that the grazing animals (excluding calves) grazed for 153 days, starting on 1 May, and that the daily contribution from grazing was 88 kg per hectare. Based on literature values for herd prevalence and excretion, the number of Cryptosporidium oocysts in manure was estimated at 4400 oocysts/g (Sokolova et al. 2018, Table S2).

The SWAT model was calibrated and validated based on the observed monthly average flow for the Eggelstad gauging station (SMHI 2018). Calibration was performed for 2009–2013, and validation for 2014–2017. The Nash–Sutcliffe Efficiency (NSE) index was used to determine the fit between simulated and observed water flow. The SWAT-CUP software was used, applying the Generalized Likelihood Uncertainty Estimation (GLUE) approach, simulating 1000 iterations (Abbaspour 2013). Calibration and validation of the water flow resulted in an NSE index of 0.75 and 0.78, respectively. These are considered to be very good results, since the NSE index can range from −∞ to +1, where a value above 0.5 is considered good, and a value above 0.75 is considered very good (Moriasi et al. 2007). Final parameter values are presented in supplementary material, Table S3. Calibration and validation of the pathogen concentrations were not possible as no observations were available.
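For readers unfamiliar with the index, the NSE compares the squared simulation error with the variance of the observations. The following is a small illustrative implementation of the standard definition; the function name and the flow values are hypothetical and unrelated to the Eggelstad data.

```python
def nash_sutcliffe_efficiency(observed, simulated):
    """NSE = 1 - sum((obs - sim)^2) / sum((obs - mean(obs))^2)."""
    if len(observed) != len(simulated) or not observed:
        raise ValueError("series must be non-empty and of equal length")
    mean_obs = sum(observed) / len(observed)
    squared_error = sum((o - s) ** 2 for o, s in zip(observed, simulated))
    variance = sum((o - mean_obs) ** 2 for o in observed)
    if variance == 0:
        raise ValueError("observations must not be constant")
    return 1.0 - squared_error / variance


# Hypothetical monthly flows (m3/s).
obs = [1.0, 2.0, 3.0, 4.0]
nse_perfect = nash_sutcliffe_efficiency(obs, obs)           # perfect fit
nse_mean_only = nash_sutcliffe_efficiency(obs, [2.5] * 4)   # no better than the mean
```

A value of 1 indicates a perfect match, while 0 means the simulation performs no better than using the observed mean as the prediction.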

SWAT model results for the grazing months May–September during the simulation period 2009–2017 were used as input for the risk-based decision model. Daily Cryptosporidium oocyst concentrations were randomly resampled from the SWAT model output to represent the variations in Cryptosporidium oocyst concentrations due to the variations in hydrometeorological conditions during this period.
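The resampling step can be sketched as below. Drawing with replacement and the function name are assumptions, since the text only states that the daily values were randomly resampled; the concentration values are hypothetical.

```python
import random


def resample_daily_concentrations(simulated, n_days, seed=None):
    """Draw n_days values, with replacement, from simulated daily concentrations."""
    if not simulated:
        raise ValueError("need at least one simulated value")
    rng = random.Random(seed)
    return rng.choices(simulated, k=n_days)


# Hypothetical SWAT output for a handful of grazing days (oocysts/L).
swat_output = [0.0, 0.02, 0.15, 0.4, 1.3]

# One Monte Carlo realisation of the 153-day grazing period.
sample = resample_daily_concentrations(swat_output, n_days=153, seed=7)
```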

The daily maximum Cryptosporidium oocyst concentrations in the tributaries (cManu,i,p) (#/L) from April to October simulated in the SWAT model were used as input for manure application. These maximum concentrations were assumed to last for one week after the manure application day. No stochastic input for manure application was used in the Monte Carlo simulations.

The total pathogen (p) concentrations (cTOT,i,p,S) in the tributaries (i) for each scenario (S) were calculated as:

where S corresponded to the different scenarios, i.e. possible combinations of considered events (presented further in Fig. 3 below), and cUR was the contribution from unexpected risk events as explained in the section Scenario-based approach for including unexpected risk events below.
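Read from the source terms defined in the preceding sections, one form consistent with these definitions is a plain sum of the contributions that are active in scenario S. This is a sketch under that assumption: the symbol c_Graz for the grazing contribution is introduced here for illustration, and terms not active in a given scenario are taken as zero.

$$
c_{TOT,i,p,S} = c_{OWTS,i,p} + c_{WWTP,i,p} + c_{CSO,i,p} + c_{Graz,i,p} + c_{Manu,i,p} + c_{UR,i,p}
$$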

Fig. 3 Schematic illustration of the variation in base pathogen load (solid areas) and the possible positions of unexpected risk events (striped areas). Note that the height of the bars is not to scale. O + W (OWTS and WWTP, included in all scenarios), M (manure), G (grazing), G + M (grazing and manure), and G + CSO (grazing and CSO) represent the five different scenarios of when unexpected risk events could occur in relation to the five different base load levels. Note that the possible positions O + W, M, G, G + M and G + CSO are only examples, and that the actual event can take place on any day when the same base load conditions for each scenario exist.

Pathogen Removal Based on Hydrodynamic and Groundwater Modelling

The daily drinking water pathogen concentration (cDW,p,S) was calculated as:

where RLAKE was the log10 removal during transport in Lake Vomb from the tributary mouth to the raw water intake for Borstbäcken (Bo), Torpsbäcken (To) and Björkaån (Bj), respectively, and RDWTP was the log10 removal in the DWTP. The RLAKE was based on Bergion et al. (2018b). The removal in the DWTP was calculated as:

where RStepI, RStepII and RStepIII were the combinations of treatment steps in the specific risk reduction alternative. The log10 removal in the treatment steps was estimated using values reported in the literature (see Table 1). For norovirus, the log10 removal in the artificial groundwater recharge (AGR) was estimated using a groundwater virus transport model (including retention time, attachment of pathogens to soil and pathogen die-off) (Åström et al. 2016). The possible combinations of treatment steps and their removal are reported in Table 1.
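The way log10 removals act on a concentration can be illustrated with a short sketch. It assumes that the removals of the treatment steps add up to RDWTP and that lake and treatment removals are applied in series; it handles a single tributary only, because how the three tributaries are combined at the raw water intake is not specified in this section. The function name and all numbers are hypothetical.

```python
def drinking_water_concentration(c_tributary, r_lake, r_steps):
    """Apply lake and treatment log10 removals in series to one tributary.

    r_steps holds the log10 removal of each treatment step; their sum is
    taken as the total removal in the treatment plant (an assumption).
    """
    r_dwtp = sum(r_steps)
    return c_tributary * 10.0 ** (-(r_lake + r_dwtp))


# Hypothetical values: 1000 #/L in the tributary, 1 log10 removal in the lake,
# and treatment steps removing 2 and 1 log10, respectively.
c_dw = drinking_water_concentration(1000.0, r_lake=1.0, r_steps=[2.0, 1.0])
```

Because removals are expressed on a log10 scale, each additional unit of removal lowers the concentration by a factor of ten.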

Dose–Response Model

The dose–response models used were the same as in Bergion et al. (2018b). The daily probability of infection per person (Pinf,p,S) (no unit) was calculated using a dose–response model, adapting the Exact Beta Poisson distribution, represented using an exponential function with a beta distribution in the exponent:

where S was the scenario for that specific day (referring to the different scenarios in Fig. 3 below), hp (no unit) was the infectivity (represented using a beta distribution with α and β for each pathogen), and Dp was the pathogen dose for the scenario (S). Values for α/β were 0.024/0.011 (Teunis et al. 2005), 0.04/0.055 (Teunis et al. 2008) and 0.115/0.176 (Teunis et al. 2002) for Campylobacter, norovirus and Cryptosporidium, respectively.
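A minimal Monte Carlo sketch of such a dose–response calculation is shown below. It assumes the form Pinf = 1 − exp(−hp · Dp) with hp drawn from Beta(α, β), which is how the description above is read here; the function names, the dose and the number of draws are illustrative assumptions.

```python
import math
import random


def daily_infection_probability(dose, alpha, beta, rng):
    """One realisation of 1 - exp(-h * dose) with h ~ Beta(alpha, beta)."""
    infectivity = rng.betavariate(alpha, beta)
    return 1.0 - math.exp(-infectivity * dose)


def mean_daily_infection_probability(dose, alpha, beta, n_draws=10_000, seed=1):
    """Average the daily infection probability over n_draws infectivity samples."""
    rng = random.Random(seed)
    total = sum(daily_infection_probability(dose, alpha, beta, rng)
                for _ in range(n_draws))
    return total / n_draws


# Cryptosporidium parameters from the text, with a hypothetical daily dose
# of 0.001 oocysts per person.
p_crypto = mean_daily_infection_probability(1e-3, alpha=0.115, beta=0.176)
```

Since the infectivity lies between 0 and 1, the resulting probability can never exceed 1 − exp(−dose).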

The annual probability of infection (Pinf,ann,p,g) (no unit) for each pathogen (p) was calculated for each risk reduction alternative (g = A0,…A4) as (WHO 2016):

where si (days) represents the duration of the scenario (Si) with the specific daily probability of infection (Pinf,p,g,Si). Note that the durations (s1 + s2 + … + si) should total 365 days. The annual probability of infection was thus calculated using a separate Pinf value for each day of the year.
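The aggregation from daily to annual risk described above can be sketched as follows, assuming the standard form in which the annual probability is one minus the probability of escaping infection on every day of the year. The function name and the example probabilities are hypothetical.

```python
def annual_infection_probability(daily_probabilities, durations):
    """1 - prod((1 - P_i) ** s_i) over scenarios with durations s_i (days)."""
    if len(daily_probabilities) != len(durations):
        raise ValueError("one duration is needed per daily probability")
    if sum(durations) != 365:
        raise ValueError("scenario durations must total 365 days")
    p_no_infection = 1.0
    for p, s in zip(daily_probabilities, durations):
        p_no_infection *= (1.0 - p) ** s
    return 1.0 - p_no_infection


# Hypothetical year: 364 days at a low daily risk and one day at a higher risk.
p_annual = annual_infection_probability([1e-6, 1e-4], [364, 1])
```

For small daily probabilities the result is close to the sum of the daily values, which is why a single high-risk day can contribute a noticeable share of the annual risk.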

Scenario-Based Approach for Including Unexpected Risk Events

The base load risk level (UR0) included pathogen load from OWTSs, WWTPs, one annual CSO, grazing animals and manure application. UR1, UR2…URn represented unexpected risk events (here n = 3). The unexpected risk events are part of the total risk in a drinking water system, as illustrated in the risk graph in Fig. 1.

UR1 included UR0 loads and added a rainfall event with a 10-year return period, resulting in additional CSO load and additional load from grazing and manure application. For UR1, the magnitude of a 10-year rainfall event during a 24 h timeframe (47.3 mm) was calculated using precipitation data for the period 2007–2018 based on the Log-Pearson III distribution (Ojha et al. 2008). The precipitation gauge available in the area has only been in operation since 2007. Proximity to the catchment was considered more important than the length of the record, hence the relatively short data series used to estimate the 10-year rainfall event. In the SWAT model, a fictive precipitation data series was created in which a 10-year rainfall event occurred on the 15th of each month. The maximum concentration in the modelling results for the 15th of all months with grazing was then used to represent the pathogen concentrations in the tributaries during this unexpected risk event.

UR2 included UR0 loads and an emergency CSO (ECSO) event, resulting in a high temporary load into the lake. For UR2, the volume (2,370,000 L) of wastewater discharged by the ECSO was based on an actual event in the catchment, when a technical failure in the sewer network caused untreated wastewater to be discharged. In this simulation the total wastewater volume was assumed to be discharged on one single day.

UR3 included UR0 loads and added pathogen load from a manure transport accident. For UR3, the manure transport was assumed to occur in close proximity to one of the tributaries, facilitating rapid transport of the manure to Lake Vomb. It was assumed that 25,000 L of manure leaked into the mouth of one of the tributaries. It was assumed that the leak could just as well have occurred in any of the tributaries. Only Cryptosporidium was assumed to be present in the manure. The Cryptosporidium concentration in manure was estimated to be the same as for the hydrological modelling, 4400 oocysts/g.

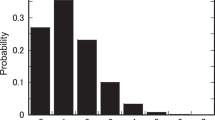

UR1, UR2 and UR3 were assumed to have a duration of one day and the probability of occurrence (Pocc,URi) was 0.1, 0.05 and 0.01 for UR1, UR2 and UR3, respectively. The probabilities were based on return period (UR1), expert judgement (UR2) and road accident statistics, in combination with expert judgement by the authors (UR3).

Five different types of base load levels were identified, representing preconditions where unexpected risk events could occur. Examples of these scenarios are schematically illustrated in Fig. 3. The solid areas represent the base load of activities occurring on a regular basis, and the striped areas represent possible positions of the unexpected risk events (UR1–UR3) during the different scenarios.

Given that an unexpected risk event occurred, the probability of it occurring during each scenario was calculated by dividing the number of possible days of that scenario each year by the number of days per year (Table 2).

The probability of occurrence was equal for all days (365 days) for UR2 and UR3, but UR1 could only occur during the grazing period (152 possible days). It was assumed that each unexpected risk event could occur only once each year, and that different unexpected risk events did not occur simultaneously. UR1 was assumed to occur during the grazing period, either in combination with manure application or not (Scenarios G or G + M in Fig. 3). It was assumed that the additional CSO load from a 10-year rainfall event was added as an event separate from the annual CSO event, and the possibility of a simultaneous occurrence of an annual CSO and a 10-year rainfall event was thus excluded. Due to practices that avoid manure application in conjunction with heavy rain, this simultaneous occurrence was also excluded. It was assumed that UR2 and UR3 could occur during any type of scenario (Fig. 3).

To determine the position of the unexpected risk event, a discrete distribution with the probability weights reported in Table 2 was used in the Monte Carlo simulations. When the position was determined, the additional pathogen concentration was added to the tributary concentrations (Eq. 3) for the duration (one day) of the unexpected risk event.
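The positioning step can be illustrated with a small sampling sketch. The day counts per scenario used below are assumptions derived from the dates given in the text (153 grazing days, one CSO day during grazing, and one week following each manure application on the 15th of April, June, August and October); they are not the Table 2 values, and the function names are hypothetical.

```python
import random

# Illustrative day counts per base-load scenario (assumed, not from Table 2).
SCENARIO_DAYS = {"O+W": 198, "M": 14, "G": 138, "G+M": 14, "G+CSO": 1}


def position_weights(allowed_scenarios):
    """Probability weight of each allowed scenario: its days over all allowed days."""
    days = {s: SCENARIO_DAYS[s] for s in allowed_scenarios}
    total = sum(days.values())
    return {s: d / total for s, d in days.items()}


def sample_position(allowed_scenarios, rng):
    """Draw the scenario in which an unexpected risk event takes place."""
    weights = position_weights(allowed_scenarios)
    return rng.choices(list(weights), weights=list(weights.values()), k=1)[0]


rng = random.Random(42)
ur1_position = sample_position(["G", "G+M"], rng)       # UR1: grazing period only
ur2_position = sample_position(list(SCENARIO_DAYS), rng)  # UR2: any day of the year
```

With these assumed counts, the scenarios open to UR1 cover 138 + 14 = 152 days, matching the number of possible days stated for UR1.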

To calculate the increased annual risk (ΔPInf,ann,p,g,URi), the addition to the total risk level due to the unexpected risk event (i) was calculated as:

where PInf,ann,p,g,URi was the annual probability of infection in the case of a risk event URi and PInf,ann,p,g,UR0 was the probability of infection without any unexpected risk event (UR0).

The total annual risk (PInf,ann,p,g,Tot) comprised the base load (UR0) and the unexpected risk events (UR1–UR3) in combination with their respective probability of occurrence, and was calculated as:

where Pocc,URi was the annual probability of occurrence of unexpected risk events (0.1, 0.05 and 0.01 for UR1, UR2 and UR3, respectively).
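One way to read the two definitions above is that each unexpected risk event adds its risk increment, weighted by its annual probability of occurrence, to the base risk. The sketch below implements that reading as an assumption; the function name and the annual probabilities of infection are hypothetical, while the occurrence probabilities are those stated in the text.

```python
def total_annual_risk(p_base, p_with_event, p_occurrence):
    """Base risk plus the occurrence-weighted increments of unexpected risk events.

    p_base:       annual probability of infection without unexpected events (UR0)
    p_with_event: annual probability of infection given each event URi occurs
    p_occurrence: annual probability of occurrence of each event URi
    """
    total = p_base
    for p_event, p_occ in zip(p_with_event, p_occurrence):
        total += p_occ * (p_event - p_base)  # increment due to URi
    return total


# Hypothetical annual infection probabilities for UR0 and UR1-UR3.
p_total = total_annual_risk(
    p_base=1e-4,
    p_with_event=[5e-4, 2e-3, 1e-2],
    p_occurrence=[0.1, 0.05, 0.01],
)
```

Setting all occurrence probabilities to zero returns the base risk, which corresponds to a model without unexpected risk events.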

The change in annual probability of infection for each risk reduction alternative compared to the reference alternative and the resulting change in QALYs were calculated conservatively, assuming that each infection resulted in illness and using a unit value for the number of QALYs that corresponded to one infection for each pathogen type, as performed in Bergion et al. (2018b). A Campylobacter, norovirus and Cryptosporidium infection corresponds to 0.0163, 0.0009 and 0.0035 QALYs, respectively (Batz et al. 2014).

Cost–Benefit Analysis

The total annual (ann) benefits (BT) from each risk reduction alternative (g) were calculated as:

where BH was the health benefits, BE was the environmental benefits, and BO was other additional benefits. The other benefits were not included as a monetised benefit, but they were identified qualitatively.

The monetisation of the health (H) benefits was estimated using a unit value per gained QALY in each risk reduction alternative (g) each year (ann), calculated as:

where BH,ann,g was the annual monetised health benefits (SEK), ΔQALYg was the annual change in QALYs, the DWPann (persons) was the drinking water consumer population during that year, and QALYV (SEK/QALY) was the unit value of one gained QALY. There were 400,000 drinking water consumers in both drinking water systems. Based on a national prognosis of a 30% population increase from 2017 to 2060 (Statistics Sweden 2017), an annual population increase was included in the model. QALYV was set at SEK 1,220,000 (Svensson et al. 2015).
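From the definitions in this section, the total benefits and the monetised health benefits can be written in the following form. This is a reading of the definitions rather than the authors' exact notation, and it assumes that ΔQALYg is expressed per person so that multiplying by the consumer population gives the total QALYs gained.

$$
B_{T,ann,g} = B_{H,ann,g} + B_{E,ann,g} + B_{O,ann,g}
$$

$$
B_{H,ann,g} = \Delta QALY_{g} \times DWP_{ann} \times QALY_{V}
$$

As a worked example with a hypothetical gain of 10^-6 QALYs per person and year: 10^-6 × 400,000 persons × SEK 1,220,000 per QALY = SEK 488,000 per year.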

The annual environmental benefits (BE) for reducing the nutrient load from wastewater were calculated using a unit value per reduced kg of SEK 53 and SEK 25 for phosphorus and nitrogen, respectively (SEPA 2008), as performed in Bergion et al. (2018b). For g = A1, the annual removal of phosphorus (0.9 kg) and nitrogen (5.3 kg) was calculated as:

where NuR,ann,g was the annual nutrient (nitrogen or phosphorus) removal, CNu,WW was the maximum measured nutrient concentration in CSO events during the period 2013–2017, CSOann,A1 was the volume of untreated wastewater discharged during a CSO, and ECSOann,A1 was the volume of untreated wastewater discharged during an ECSO. For g = A3, the reduction in the phosphorus (651 kg) and nitrogen (4004 kg) load into the lake was calculated based on values from Bergion et al. (2018b).

Investment costs were assumed to arise in the first year of the time horizon, and operation and maintenance costs were set as an annual cost for the entire time horizon. Costs for A1 were based on reported costs from the municipality of Sjöbo (personal communication), and the costs for A2 and A3 were based on Bergion et al. (2018b). In the Vomb DWS, investment costs were estimated at approximately SEK 1.3 million, SEK 55.5 million, and SEK 36.2 million for A1, A2 and A3, respectively. The investment costs for A4 were the sum of A1, A2 and A3. The annual operation and maintenance costs in the Vomb DWS were assumed to be approximately SEK 0, SEK 0.43 million and SEK 0.2 million for A1, A2 and A3, respectively. The annual operation and maintenance costs for A4 were the sum of A1, A2 and A3. In the Alt. DWS, the investment costs and operation and maintenance costs were the same as for the Vomb DWS with the exception of A3. For A3 for the Alt. DWS, the investment costs were set at SEK 0.36 million, and the annual operation and maintenance costs were set at SEK 2,000. This was 1% of the costs for A3 in the Vomb DWS, since the number of OWTS in the base load was reduced by 99%.

The net present value (NPV) was calculated as:

where B was the benefits for each year; C was the costs for each year; r was the discount rate (3.5%); and t was each specific year during the time horizon T of 50 years.
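A short sketch of a discounted net present value calculation over the 50-year horizon is given below. It assumes the common form in which the net benefit of year t is divided by (1 + r)^t with t running from 1 to T; the exact index convention of the model is not stated in this section. The annual benefit is hypothetical, while the investment cost of SEK 1.3 million with no operation and maintenance cost corresponds to the figures given for A1.

```python
def net_present_value(benefits, costs, rate):
    """Sum of discounted net benefits; the first list entry is year t = 1."""
    if len(benefits) != len(costs):
        raise ValueError("benefits and costs must cover the same years")
    return sum((b - c) / (1.0 + rate) ** t
               for t, (b, c) in enumerate(zip(benefits, costs), start=1))


T = 50
benefits = [100_000.0] * T               # hypothetical annual benefit (SEK)
costs = [1_300_000.0] + [0.0] * (T - 1)  # investment in the first year only

npv_base = net_present_value(benefits, costs, rate=0.035)
npv_low = net_present_value(benefits, costs, rate=0.01)
```

Because most benefits arise later than the investment, a lower discount rate gives future benefits more weight and raises the NPV in this example.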

The health risk reduction potential was also investigated. The maximum health benefits would be achieved if all health risks were reduced to zero. The health benefit potential (Bpot.) of each risk reduction alternative was thus calculated as:

where BH,Max,g was the monetary health benefits if all microbial risks were removed.

To account for increased risk in the future, a risk increase factor was used for future risk levels. A 25% increase compared to 2017 was assumed for 2100. This was equivalent to an annual increase of 0.296%. The factor was based on the Swedish Meteorological and Hydrological Institute prognosis of increased future precipitation events (SMHI 2015).

To investigate the impact of including unexpected risk events, the probability of occurrence was set at 0 for UR1, UR2 and UR3 and the effect on the NPV was examined. This corresponded to a decision model that did not include unexpected risk events.

Sensitivity Analysis

The impact of parameters on the pathogen dose for the five scenarios (O + W, G + CSO, M, G and G + M) was investigated using Spearman rank correlation coefficients. The impact of the dose–response model was studied using scatter plots between the two inputs—pathogen dose and infectivity versus the probability of infection. Variation in the NPV was reported using the coefficient of variation.

The impact of the discount rate and the choice of health valuation method on the outcome of the risk-based decision model was studied using a manual approach. Two discount rates were tested: 3.5% and 1%. The discount rate of 3.5% was based on the discount rate used by the road administration authorities in Sweden when evaluating large infrastructure projects. The discount rate of 1% was chosen, since the long-term investments that are evaluated in the case study spanned several future generations and this may justify a lower discount rate.

To investigate the sensitivity of the NPV to the health valuation method, the cost of illness with added cost of disutility, COI(+), was used as a health valuation method in addition to the value of a QALY (Bergion et al. 2018a). The annual health benefits (BH,ann,g) (Eq. 10) were then calculated as:

where ΔPinf,ann,p,g was the change in annual probability of infection per person resulting from each risk reduction alternative A1–A4 (g), DWPann (persons) was the drinking water consumer population during year y, and COI(+)V,p (SEK/infection) was the unit value of one avoided infection for each respective pathogen. COI(+)V,p was set at SEK 30,537, SEK 6064 and SEK 26,273 for Campylobacter, norovirus and Cryptosporidium, respectively (Bergion et al. 2018a).
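Based on these definitions, the COI(+) valuation can be sketched as a sum over the three pathogens. This is a reconstruction from the stated terms and the notation is an assumption:

$$
B_{H,ann,g} = \sum_{p} \Delta P_{inf,ann,p,g} \times DWP_{ann} \times COI(+)_{V,p}
$$

For example, a hypothetical reduction of 10^-4 in the annual probability of Campylobacter infection among 400,000 consumers would correspond to 40 avoided infections, valued at 40 × SEK 30,537 = SEK 1,221,480 per year.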

Results

The results of the QMRA and the CBA are presented below. The manual sensitivity analysis of the impact of the discount rate and the health valuation method is included as part of the results below. The sensitivity analysis using Spearman rank correlation coefficients, scatter plots and coefficient of variation is reported in Supplementary Material (Tables S4, S5, S7 and Fig. S1).

Quantitative Microbial Risk Assessment

The simulated base load pathogen concentrations in the raw water intake and in drinking water for Campylobacter, norovirus and Cryptosporidium are presented in Fig. 4.

Fig. 4 The simulated 50th percentile (median) of the pathogen concentrations (Campylobacter, norovirus and Cryptosporidium) in the raw water intake and in the drinking water caused by the base load for the Vomb drinking water system (Vomb DWS) and for the Alternative drinking water system (Alt. DWS). O + W, G + CSO, M, G and G + M represent the five scenarios as shown in Fig. 3. The risk reduction alternatives were as follows: (A0) the reference alternative where no risk reduction measures were implemented; (A1) installation of pumps and a back-up power supply to remove CSOs and ECSOs; (A2) installation of UV treatment in the drinking water treatment plant (DWTP); (A3) connection of 25% of the OWTSs in the catchment to the WWTP; and (A4) a combination of A1–A3. Whiskers represent the 95th percentiles. Note that the risk contribution from unexpected risk events is excluded from the results in this figure.

The annual probability of infection and annual number of infections, using the scenario-based approach for including unexpected risk events, are presented in Fig. 5, as are the reduction in the annual probability of infection, the reduction in the number of infections and the reduction in QALYs in comparison to the reference alternative (A0).

Fig. 5 Risk level in terms of annual probability of infection (Annual Pinf) and number of annual infections (Annual infections) for drinking water consumers for the risk reduction alternatives: (A0) reference alternative where no risk reduction measures were implemented; (A1) installation of pumps and a back-up power supply to remove CSOs and ECSOs; (A2) installation of UV treatment in the drinking water treatment plant (DWTP); (A3) connection of 25% of the OWTSs in the catchment to the WWTP; and (A4) a combination of A1–A3. Reduction in the annual probability of infection (ΔAnnual Pinf), reduction in the number of infections (ΔAnnual Infections), and reduction in QALYs (ΔAnnual QALYs) for the risk reduction alternatives A1–A4 are presented in comparison to A0. Bars represent the 50th percentile (median) and whiskers represent the 95th percentiles. Note that unexpected risk events are included in the results reported in this figure.

In both the US and the Netherlands, an annual probability of infection of 10⁻⁴ has been used as a guideline for an acceptable risk of exposure to pathogens via drinking water (Signor and Ashbolt 2009). In Sweden, there is no specified acceptable risk level. Consequently, 10⁻⁴ is used in this study for comparison purposes. Looking at the 50th and 95th percentiles, the Vomb DWS had a microbial risk level below 10⁻⁴ for all pathogens for all the alternatives, i.e. A0–A4. For the Alt. DWS, looking at the same percentiles, the microbial risk level in A0 was above 10⁻⁴ for norovirus, but below 10⁻⁴ for Campylobacter and Cryptosporidium. For the Alt. DWS, risk reduction alternatives A1 and A3 reduced the norovirus risk level, but not below the acceptable risk level, while A2 and A4 reduced the norovirus risk to below 10⁻⁴.

For the Vomb DWS, the unexpected risk events (UR1–UR3) contributed 1.5%, < 0.1%, 1.5%, 1.6% and < 0.1% of the total risk for A0, A1, A2, A3 and A4, respectively (looking at the 50th percentile). For the Alt. DWS, the unexpected risk events contributed 60.1%, < 0.1%, 60.2%, 62.0% and 0.4% of the total risk for A0, A1, A2, A3 and A4, respectively.

For the Vomb DWS, looking at the 50th percentile, the annual number of infections was < 1 for all pathogens and all risk reduction alternatives (A0–A4). For the Alt. DWS, looking at the 50th percentile, the annual number of infections (Campylobacter/norovirus/Cryptosporidium) was (7.4E0/3.0E2/1.7E−2), (7.4E0/2.1E2/1.7E−2), (3.7E−5/1.7E−2/1.7E−5), (5.5E0/2.6E2/1.6E−2) and (2.8E−5/1.0E−2/1.6E−5) for A0, A1, A2, A3 and A4, respectively.

For the Vomb DWS, the contribution of different pathogens, Campylobacter/norovirus/Cryptosporidium, to the total annual number of infections (mean) was < 0.1% / > 99.9% / < 0.1% for all the alternatives (A0–A4). For the Alt. DWS, the contribution of different pathogens Campylobacter/norovirus/Cryptosporidium to the total annual infections was 0.1%/99.9%/< 0.1% for A0 and A3, 0.2%/99.8%/< 0.1% for A1, < 0.1%/99.7%/0.3% for A2, and < 0.1%/99.3%/0.7% for A4.

The annual lost QALYs for each risk reduction alternative and the reduction in annual lost QALYs are presented in Table 3. The reduction in annual lost QALYs in comparison to the reference alternative (A0) represents the avoided QALYs and thus the health risk reduction.

Cost–Benefit Analysis

Net Present Value

The NPVs for A1, A2, A3 and A4 are presented in Fig. 6. The exact figures for the NPV percentiles are reported in the Supplementary Material, Table S6.

Fig. 6 Net present values for the risk reduction alternatives: (A1) installation of pumps and a back-up power supply to remove CSOs and ECSOs; (A2) installation of UV treatment in the drinking water treatment plant (DWTP); (A3) connection of 25% of the OWTSs in the catchment to the WWTP; and (A4) a combination of A1–A3. Sensitivity analyses of the discount rate (DR) and the choice of health valuation method, i.e. the societal value of a QALY (SVoQ) or the cost of illness with the added cost of disutility (COI+), are presented. P05, P50 and P95 are the 5th, 50th and 95th percentiles, respectively.

The NPVs for the Vomb DWS were negative for all risk reduction alternatives (< 0.001 probability of a positive NPV). This was consistent when the discount rate was altered and when the health valuation method was altered. Ranking the 50th percentile of the NPVs for the different risk reduction alternatives resulted in the rank order A1 > A3 > A2 > A4. This rank order did not change when using the lower discount rate (1%) or when using the health valuation method COI+.

For the Alt. DWS, the rank order of the risk reduction alternatives based on the NPVs is presented in Table 4 for the 5th, 50th and 95th percentiles.

Probability of Having the Highest Net Present Value and Probability of a Positive Net Present Value

For the Vomb DWS, the probability of having the highest NPV was > 0.999 for A1 and < 0.001 for A2–A4. For the Alt. DWS, the probability of having the highest NPV and the probability of a positive NPV are presented in Table 5.
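The two decision metrics reported here can be estimated directly from Monte Carlo output. The following minimal sketch assumes that the NPV samples of the alternatives are paired, i.e. index i in every list comes from the same simulation iteration. The function names and the normal distributions used to generate example samples are hypothetical and do not reproduce the study's results.

```python
import random


def probability_positive(samples):
    """Share of simulated NPVs that are strictly greater than zero."""
    return sum(1 for value in samples if value > 0) / len(samples)


def probability_highest(npv_by_alternative):
    """Share of iterations in which each alternative has the highest NPV.

    Assumes all sample lists have the same length and are paired by iteration.
    """
    names = list(npv_by_alternative)
    n = len(npv_by_alternative[names[0]])
    wins = dict.fromkeys(names, 0)
    for i in range(n):
        best = max(names, key=lambda name: npv_by_alternative[name][i])
        wins[best] += 1
    return {name: wins[name] / n for name in names}


# Hypothetical NPV samples (million SEK), for illustration only.
rng = random.Random(7)
simulated = {
    "A1": [rng.gauss(-5.0, 10.0) for _ in range(10_000)],
    "A2": [rng.gauss(20.0, 30.0) for _ in range(10_000)],
    "A3": [rng.gauss(-40.0, 15.0) for _ in range(10_000)],
}
p_positive = {name: probability_positive(s) for name, s in simulated.items()}
p_highest = probability_highest(simulated)
```

An alternative can have a high probability of the highest NPV while still having a low probability of a positive NPV, which is why the two metrics are reported separately.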

For the Alt. DWS using the health valuation method SVoQ, A2 and A4 achieved > 99.9% of the maximum possible health benefits (100% would correspond to all risks being reduced to 0), while A1 and A3 achieved < 0.1–37% and 16–17% of the maximum possible health benefits, respectively (ranges from the 5th to the 95th percentiles). Looking at the health valuation method COI+, A2 and A4 still achieved > 99.9% of the maximum possible health benefits, while A1 and A3 achieved < 0.1–49% and 13–15% of the maximum possible health benefits, respectively (ranges from the 5th to the 95th percentiles).

Benefits not included in the NPV were identified and are reported in Table 6. The list does not claim to be complete, although it does include important non-monetised benefits.

Discussion

Previous economic evaluations of microbial risk have focused mainly on the effects (Assmuth et al. 2016; Juntunen et al. 2017) and have not compared different risk reduction alternatives. The enhanced risk-based decision model presented here included a detailed analysis of microbial risk reduction alternatives and used CBA for economic evaluation, making it possible to evaluate and compare the alternatives using their societal profitability. The presented decision model enables an evaluation to be made using an economic perspective, as outlined by the World Health Organization (2001).

Although a strict CBA approach considers only the NPVs, there may be other criteria (legislation, pursuing an opportunity, etc.) that need to be taken into account when determining whether the risk needs to be reduced (WHO 2017). It may be the case that an alternative that reduces the risk to an acceptable risk level needs to be implemented, even though this alternative results in a negative NPV. Given that each risk reduction alternative fulfils the non-monetary criteria, the alternative with the highest NPV rank order should be implemented. This approach combines the two decision criteria of maximising societal benefits and fulfilling legal requirements.

For the Vomb DWS, the probability of a positive NPV was very low for all alternatives. This is mainly because of the high log10 removal in the DWTP and the low probability of infection for the reference alternative. Hence, the risk reduction alternatives could not substantially reduce the risk any further. For the Alt. DWS, log10 removal in the DWTP was lower, and a temporary increase in pathogen load in the raw water thus had a greater effect on the dose to which the drinking water consumers were exposed. This, and the fact that the Alt. DWS had a higher risk than the Vomb DWS, resulted in a greater health risk reduction due to the risk reduction alternatives and thus also a higher probability of a positive NPV. However, this large health risk reduction also affects the uncertainties in the NPV. The high log10 removal in the Vomb DWS reduced the dose to a low and similar level in most cases, so that, based on the dose–response relationship, the effect of additional risk reduction alternatives was almost negligible. For the Alt. DWS, the lower log10 removal resulted in a greater effect from risk reduction alternatives in terms of more avoided infections and thus a greater variation. This suppressing effect of the high log10 removal, in this case the artificial groundwater recharge, can be seen in the uncertainty in the NPV values when comparing the two DWSs, resulting in a higher coefficient of variation in the NPV for the Alt. DWS compared to the Vomb DWS (Supplementary Material, Table S7). However, a high degree of uncertainty in the NPV does not automatically mean there is high uncertainty regarding which risk reduction alternative is the most profitable for society.
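The suppressing effect of a high log10 removal can be illustrated with a minimal sketch. It assumes a simple exponential dose–response model, P = 1 − exp(−r · dose), which is a stand-in chosen for brevity and not the dose–response models used in the study. The raw water concentration, the event factor, the consumed volume and the parameter r are all hypothetical, and the same raw water concentration is used for both cases to isolate the effect of the log10 removal.

```python
import math


def probability_of_infection(raw_conc, log_removal, volume_l, r):
    """Exponential dose-response (assumed): P = 1 - exp(-r * dose)."""
    dose = raw_conc * 10.0 ** (-log_removal) * volume_l
    return -math.expm1(-r * dose)  # numerically stable form of 1 - exp(-x)


def event_risk_increase(raw_conc, event_factor, log_removal, volume_l=1.0, r=0.1):
    """Absolute increase in P_inf when the raw water load rises by event_factor."""
    base = probability_of_infection(raw_conc, log_removal, volume_l, r)
    event = probability_of_infection(raw_conc * event_factor, log_removal, volume_l, r)
    return event - base


# Hypothetical comparison: the same 100-fold temporary increase in raw water load.
increase_high_removal = event_risk_increase(1.0, 100.0, log_removal=6.0)
increase_low_removal = event_risk_increase(1.0, 100.0, log_removal=2.0)
```

Under these assumptions the same temporary increase in load raises the probability of infection far more when the log10 removal is low, which is the mechanism described in the paragraph above.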

The results for the Vomb DWS show that the unexpected risk events did not constitute a high proportion of the total risk. However, for the Alt. DWS, the unexpected risk events were the main contributor to the total risk for both norovirus and Cryptosporidium, but not for Campylobacter. The importance of unexpected risk events for the outcome of risk reduction measures is dependent on the conditions in the drinking water system. It is important to acknowledge that sub-optimal treatment in the DWTP, especially in combination with other unexpected risk events, may result in high microbial risks to the drinking water consumers (Taghipour et al. 2019). It is thus important to also identify and include unexpected events within the drinking water treatment plant. Where there is a low pathogen base load, as is the case for A3 in the Alt. DWS, the contribution of unexpected risk events to the total risk is greater than in situations where the base load is higher. In the Alt. DWS, where there is a lower base load and lower pathogen removal in the DWTP, the unexpected risk events have a greater impact than in the Vomb DWS. When the unexpected risks were excluded, the order of probability of the risk reduction alternatives having the highest NPV changed, and the probability of a positive NPV also changed. In Westrell et al. (2003), where a DWTP with high log10 removal was evaluated, the base load was reported as being the cause of the majority of waterborne illnesses. In this study we confirm these results and add to the discussion that the importance of unexpected risk events is higher in a DWS where log10 removal in the DWTP is lower.
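The dependence of the unexpected-event share on the base load can be made concrete with a minimal sketch. It assumes that annual risks are small enough to be added (a rare-event approximation) and that each unexpected event contributes its annual frequency times the risk given that the event occurs. The function name and all numbers are hypothetical.

```python
def unexpected_share(base_risk, events):
    """Share of total annual risk attributable to unexpected events.

    events: list of (annual_frequency, risk_given_event) pairs.
    Risks are summed, which is an approximation valid for small probabilities.
    """
    event_risk = sum(frequency * risk for frequency, risk in events)
    total = base_risk + event_risk
    return event_risk / total if total > 0 else 0.0


# Hypothetical event: once per ten years, annual-equivalent risk of 1e-4 when it occurs.
events = [(0.1, 1e-4)]
share_high_base = unexpected_share(9e-5, events)  # base load dominates
share_low_base = unexpected_share(1e-5, events)   # events weigh more heavily
```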

The stakeholder distribution of costs and benefits for the risk reduction alternatives has not been explored in detail in this study. It has been shown earlier (Bergion et al. 2018b) that including a UV treatment step (A2) distributed all the benefits and costs to the drinking water consumers (assuming the UV treatment is paid for using the drinking water charge). As regards connection of the OWTSs (A3), a discrepancy between the persons bearing the costs and the persons receiving the benefits was identified (Bergion et al. 2018b): most of the costs were borne by the private owners of the OWTSs, while most of the health benefits were attributed to drinking water consumers supplied with drinking water from Lake Vomb. Distribution of costs is generally not fixed and is arranged according to the settings for the drinking water system, the risk reduction alternative and the specific stakeholders in question.

Even though the presented decision model enables the important inclusion of additional events and conditions typically not considered, further development is possible. For example, the exclusion of the possibility of a 10-year rainfall occurring outside the grazing period could result in an underestimation of the risk. This exclusion was based on the fact that most (75%) heavy precipitation events (2 h intensity) occur in June, July and August (Hernebring 2006). It is also possible that the risk is underestimated, since we assumed that unexpected risk events do not occur simultaneously.

A more accurate description of the interannual fluctuations of pathogen concentrations in wastewater treatment plant effluent (Westrell et al. 2006) could be a way of achieving a more comprehensive microbial risk assessment.

The 25% risk increase through to 2100 was based on predictions from SMHI for future risk events connected to precipitation. For the purposes of this study, it was assumed that this increase was applicable to all types of risks. However, future work should include additional investigations into how to analyse future changes in risk levels for non-climate-related risk events. Furthermore, ways in which existing models (e.g. Schijven et al. 2013) describing microbial risks in relation to climate change can be included in the decision model should also be investigated.

Hydrological modelling was included to better describe pathogen contribution from grazing animals and the application of manure. In this study, the conversion from deterministic to stochastic results was done using a resampling function. This enables results from all the years in the simulated period to be used as input for the decision model. Future improvements could include investigating other methods for incorporating stochastic hydrological modelling results. The hydrological model was only calibrated and validated for the water flow. For future applications, the water quality component of the model should also be calibrated and validated. For this study, lack of observed pathogen data was the reason for not pursuing these measures. However, during the initial setup of the model (Bergion et al. 2018b) it was confirmed that the norovirus infections spread through drinking water only accounted for a small part of the reported infections in the population. This is to be expected and thus provides an initial validation of the model. The groundwater modelling was based on a simplified approach using stochastic simulation of an analytical transport and fate model. For future applications, the use of more sophisticated stochastic numerical models should be investigated to take into account aquifer heterogeneities.
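The text does not specify how the resampling function works. One possible reading, sketched here under that assumption, is to draw with replacement from the deterministic per-year model outputs so that every simulated year can appear in the Monte Carlo input. The function name and the yearly values are hypothetical.

```python
import random


def resample(yearly_values, n_draws, rng):
    """Draw n_draws values with replacement from deterministic yearly outputs."""
    return [rng.choice(yearly_values) for _ in range(n_draws)]


# Hypothetical simulated pathogen loads, one value per simulated year.
yearly_load = [1.2e3, 4.5e3, 8.0e2, 2.3e4, 3.1e3]
rng = random.Random(42)
stochastic_input = resample(yearly_load, n_draws=10_000, rng=rng)
```

Each draw is equally likely to come from any simulated year, so rare high-load years remain represented in proportion to how often they occurred in the simulated period.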

Conclusion

Based on the results from the risk-based decision model, it can be concluded that the effect and importance of considering unexpected events is determined by the local preconditions. In drinking water systems with a low pathogen base load and low pathogen removal potential in the drinking water treatment plant, the unexpected risk events have a greater impact compared to drinking water systems with a high pathogen base load and high pathogen removal potential in the drinking water treatment plant. Hence, the risk reduction alternatives aimed at reducing the unexpected risk events will have a greater effect in the former system than in the latter.

The results show that unexpected risk events can affect the results of a decision analysis and change the alternative that is most profitable. In the Alt. DWS, excluding the unexpected risks from the decision model resulted in changes in the probability of having the highest net present value and changes in the probability of a positive net present value of the risk reduction alternatives. In addition, the rank order of the NPV of the risk reduction alternatives changed, resulting in a different prioritisation.

In summary, this work has resulted in a comprehensive decision support model, capable of including contributions from both base load and unexpected risk events to the total microbial health risk in drinking water systems. The model can be used in real world systems, and the applications in this study show that a comprehensive model, which includes both base load and unexpected risk events, is needed to provide proper decision support with regard to microbial safety measures.

References

Abbaspour K (2013) SWAT-CUP 2012: SWAT calibration and uncertainty programs-A user manual. Eawag Zurich, Dübendorf

Abrahamsson JL, Ansker J, Heinicke G (2009) MRA - A model for Swedish water works. Association SWaW, Stockholm, In Swedish: MRA - Ett modellverktyg för svenska vattenverk. https://vav.griffel.net/filer/2009-05_hogupplost.pdf

Ale B, Burnap P, Slater D (2015) On the origin of PCDS – (Probability consequence diagrams). Saf Sci 72:229–239. https://doi.org/10.1016/j.ssci.2014.09.003

Assmuth T, Simola A, Pitkänen T, Lyytimäki J, Huttula T (2016) Integrated frameworks for assessing and managing health risks in the context of managed aquifer recharge with river water. Integr Environ Assess Manag 12:160–173

Åström J, Lindhe A, Bergvall M, Rosén L, Lång L-O (2016) Microbial risk assessment of groundwater sources – development and implementation of a QMRA tool. Swedish Water and Wastewater Association, Stockholm, (In Swedish: Mikrobiologisk riskbedömning av grundvattentäkter – utveckling och tillämpning av ett QMRA-verktyg). https://vav.griffel.net/filer/SVU-rapport_2016-19.pdf

Baffoe-Bonnie B, Harle T, Glennie E, Dillon G, Sjøvold F (2008) Framework for operational cost benefit analysis in water supply. TECHNEAU. https://www.techneau.org/fileadmin/files/Publications/Publications/Deliverables/D5.1.2.frame.pdf

Bartram J, Corrales L, Davison A, Deere D, Drury D, Gordon B, Howard G, Rinehold A, Stevens M (2009) Water safety plan manual: step-by-step risk management for drinking-water suppliers. World Health Organization, Geneva. https://apps.who.int/iris/bitstream/10665/75141/1/9789241562638_eng.pdf

Batz M, Hoffmann S, Morris JG Jr (2014) Disease-outcome trees, EQ-5D scores, and estimated annual losses of quality-adjusted life years (QALYs) for 14 foodborne pathogens in the United States. Foodborne Pathog Dis 11:395–402

Bergion V, Sokolova E, Åström J, Lindhe A, Sörén K, Rosén L (2017) Hydrological modelling in a drinking water catchment area as a means of evaluating pathogen risk reduction. J Hydrol 544:74–85. https://doi.org/10.1016/j.jhydrol.2016.11.011

Bergion V, Lindhe A, Sokolova E, Rosén L (2018a) Economic valuation for cost-benefit analysis of health risk reduction in drinking water systems. Expo Health. https://doi.org/10.1007/s12403-018-00291-8

Bergion V, Lindhe A, Sokolova E, Rosén L (2018b) Risk-based cost-benefit analysis for evaluating microbial risk mitigation in a drinking water system. Water Res 132:111–123. https://doi.org/10.1016/j.watres.2017.12.054

Guzman-Herrador B, Carlander A, Ethelberg S, de Blasio BF, Kuusi M, Lund V, Löfdahl M, MacDonald E, Nichols G, Schönning C (2015) Waterborne outbreaks in the Nordic countries, 1998 to 2012. Euro Surveill 20:1–10

Hernebring C (2006) Design storms in Sweden, then and now – Rain data for design and control of urban drainage systems. Swedish Water and Wastewater Association, Stockholm, (In Swedish: 10års-regnets återkomst, förr och nu – Regndata för dimensionering/kontroll-beräkning av VA-system i tätorter). https://vav.griffel.net/filer/VA-Forsk_2006-04.pdf

Juntunen J, Meriläinen P, Simola A (2017) Public health and economic risk assessment of waterborne contaminants and pathogens in Finland. Sci Total Environ 599–600:873–882. https://doi.org/10.1016/j.scitotenv.2017.05.007

Lindberg A, Lusua J, Nevhage B (2011) Cryptosporidium in Östersund during the Winter 2010/2011: Consequences and Costs from an Outbreak of a Waterborne Disease. Agency SDR, Stockholm, In Swedish: Cryptosporidium i Östersund Vintern 2010/2011: Konsekvenser och kostnader av ett stort vattenburet sjukdomsutbrott.

Moriasi D, Arnold J, Van Liew M, Bingner R, Harmel R, Veith T (2007) Model evaluation guidelines for systematic quantification of accuracy in watershed simulations. Trans ASABE 50:885–900

Neitsch SL, Arnold JG, Kiniry JR, Williams JR (2011) Soil and Water Assessment Tool - Theoretical documentation - Version 2009. Texas. https://swat.tamu.edu/media/99192/swat2009-theory.pdf

Norwegian Water BA (2014) Guideline in analysis of microbial barriers (MBA). Norwegian Water BA, Hamar, (In Norwegian: Veiledning i mikrobiell barriere analyse (MBA)). https://www.svensktvatten.se/globalassets/dricksvatten/riskanalys-och-provtagning/norsk-vann_rapport-209.pdf

Ojha CSP, Berndtsson R, Bhunya P (2008) Engineering hydrology. Oxford University Press, Oxford

Ottoson J, Hansen A, Westrell T, Johansen K, Norder H, Stenstrom T (2006) Removal of noro-and enteroviruses, Giardia cysts, Cryptosporidium oocysts, and fecal indicators at four secondary wastewater treatment plants in Sweden. Water Environ Res 78:828–834

PHAS (2011) Cryptosporidium in Östersund. Solna, In Swedish: Cryptosporidium i Östersund. https://www.folkhalsomyndigheten.se/publicerat-material/publikationsarkiv/c/cryptosporidium-i-ostersund/

Schijven JF, Teunis PF, Rutjes SA, Bouwknegt M, de Roda Husman AM (2011) QMRAspot: a tool for Quantitative Microbial Risk Assessment from surface water to potable water. Water Res 45:5564–5576. https://doi.org/10.1016/j.watres.2011.08.024

Schijven J, Bouwknegt M, de Roda Husman AM, Rutjes S, Sudre B, Suk JE, Semenza JC (2013) A decision support tool to compare waterborne and foodborne infection and/or illness risks associated with climate change. Risk Anal 33:2154–2167. https://doi.org/10.1111/risa.12077

Schijven J, Derx J, de Roda Husman AM, Blaschke AP, Farnleitner AH (2015) QMRAcatch: microbial quality simulation of water resources including infection risk assessment. J Environ Qual 44:1491–1502. https://doi.org/10.2134/jeq2015.01.0048

SEPA (2008) Cross-section charge system proposal for nitrogen and phosphorous. Swedish Environmental Protection Agency, Stockholm, (In Swedish: Förslag till avgiftssystem för kväve och fosfor). https://www.naturvardsverket.se/upload/stod-i-miljoarbetet/vagledning/avlopp/faktablad-8147-enskilt-avlopp/faktablad-8147-sma-avloppsanlaggningar.pdf

Signor RS, Ashbolt NJ (2009) Comparing probabilistic microbial risk assessments for drinking water against daily rather than annualised infection probability targets. J Water Health 7:535–543. https://doi.org/10.2166/wh.2009.101

Smeets P, Rietveld L, Hijnen W, Medema G, Stenström T (2006) Efficacy of water treatment processes. Microrisk report

SMHI (2015) Cloudburst mission - a mission for SMHI assigned by the Government. In Swedish: Skyfallsuppdraget - ett regeringsuppdrag till SMHI. https://smhi.diva-portal.org/smash/record.jsf?pid=diva2%3A948110&dswid=-7974

SMHI (2018) Vattenwebb, Eggelstad Gauge Station 2125. Swedish Meteorological and Hydrological Institute. https://vattenwebb.smhi.se/station/. Accessed 20 Nov 2018

Sokolova E, Lindström G, Pers C, Strömqvist J, Lewerin SS, Wahlström H, Sörén K (2018) Water quality modelling: microbial risks associated with manure on pasture and arable land. J Water Health 16:549–561. https://doi.org/10.2166/wh.2018.278

Stampi S, Varoli O, Zanetti F, De Luca G (1993) Arcobacter cryaerophilus and thermophilic campylobacters in a sewage treatment plant in Italy: two secondary treatments compared. Epidemiol Infect 110:633–639

Statistics Sweden (2012) Fertilisers in the agriculture sector 2010/11 (Gödselmedel i jordbruket 2010/11). Stockholm. https://www.scb.se/sv_/Hitta-statistik/Publiceringskalender/Visa-detaljerad-information/?publobjid=17265+

Statistics Sweden (2017) The future population of Sweden 2017–2060. (In Swedish: Sveriges framtida befolkning 2017–2060)

Svensson M, Nilsson FOL, Arnberg K (2015) Reimbursement decisions for pharmaceuticals in Sweden: the impact of disease severity and cost effectiveness. PharmacoEconomics 33:1229–1236. https://doi.org/10.1007/s40273-015-0307-6

Swedish Board of Agriculture (2019) Statistics from the Swedish Board of Agriculture. https://statistik.sjv.se/PXWeb/pxweb/sv/Jordbruksverkets%20statistikdatabas/?rxid=5adf4929-f548-4f27-9bc9-78e127837625. Accessed 15 Feb 2019

Taghipour M, Shakibaeinia A, Sylvestre É, Tolouei S, Dorner S (2019) Microbial risk associated with CSOs upstream of drinking water sources in a transboundary river using hydrodynamic and water quality modeling. Sci Total Environ 683:547–558. https://doi.org/10.1016/j.scitotenv.2019.05.130

Teunis PFM, Chappell CL, Okhuysen PC (2002) Cryptosporidium dose response studies: variation between isolates. Risk Anal 22:175–185. https://doi.org/10.1111/0272-4332.00014

Teunis P, Van den Brandhof W, Nauta M, Wagenaar J, Van den Kerkhof H, Van Pelt W (2005) A reconsideration of the Campylobacter dose–response relation. Epidemiol Infect 133:583–592

Teunis PFM, Moe CL, Liu P, Miller S, Lindesmith L, Baric RS, Le Pendu J, Calderon RL (2008) Norwalk virus: how infectious is it? J Med Virol 80:1468–1476. https://doi.org/10.1002/jmv.21237

Viñas V, Malm A, Pettersson TJR (2018) Overview of microbial risks in water distribution networks and their health consequences: quantification, modelling, trends, and future implications. Can J Civil Eng 46:149–159. https://doi.org/10.1139/cjce-2018-0216

Westrell T, Bergstedt O, Stenström T, Ashbolt N (2003) A theoretical approach to assess microbial risks due to failures in drinking water systems. Int J Environ Health Res 13:181–197. https://doi.org/10.1080/0960312031000098080

Westrell T, Teunis P, van den Berg H, Lodder W, Ketelaars H, Stenström TA, de Roda Husman AM (2006) Short- and long-term variations of norovirus concentrations in the Meuse river during a 2-year study period. Water Res 40:2613–2620. https://doi.org/10.1016/j.watres.2006.05.019

WHO (2001) Water quality - guidelines, standards & health: assessment of risk and risk management for water-related infectious disease. IWA Publishing, World Health Organization, Geneva

WHO (2016) Quantitative microbial risk assessment: Application for water safety management, 2016th edn. World Health Organization, Geneva

WHO (2017) Guidelines for drinking-water quality, 4th edition, incorporating the 1st addendum, 2017th edn. World Health Organization, Geneva

Acknowledgements

Open access funding provided by Chalmers University of Technology. Funding has been provided by the Swedish Water and Wastewater Association through the project Risk-Based Decision Support for Safe Drinking Water (Project 13-102). The research is part of DRICKS, a framework programme for drinking water research coordinated by Chalmers University of Technology.

Ethics declarations

Conflict of interest

No potential conflicts of interest have been identified.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bergion, V., Lindhe, A., Sokolova, E. et al. Accounting for Unexpected Risk Events in Drinking Water Systems. Expo Health 13, 15–31 (2021). https://doi.org/10.1007/s12403-020-00359-4
