Abstract

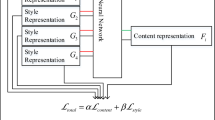

This paper presents an automatic image synthesis method that transfers the style of an example image to a content image. With standard neural style transfer approaches, the textures and colours of different semantic regions of the style image are often applied to inappropriate regions of the content image. This ignores the semantic layout of the content image and ruins the transfer result. To reduce or avoid such effects, we propose a novel method that automatically segments the objects in the style and content images and extracts their soft semantic masks, so that the structure of the content image is preserved while the style is transferred. Each soft mask of the style image represents a specific part of that image and corresponds to the soft mask of the content image with the same semantics. The soft masks and the source images are provided together as multichannel input to an augmented deep CNN framework for style transfer that incorporates a generative Markov random field (MRF) model. Results on various images show that our method outperforms recent state-of-the-art techniques.
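The idea of feeding soft masks alongside image features into an MRF-based patch matcher can be illustrated with a small pure-Python sketch, written in the spirit of Li and Wand's CNN+MRF formulation. It is not the authors' implementation. Soft-mask channels, scaled by a hypothetical weight `gamma`, are appended to every feature vector. Each content patch is then matched to the style patch with the highest normalized cross-correlation, and the squared error to that match is summed as an MRF-style loss. The tiny hand-made "feature maps" stand in for CNN activations, and the names `augment_with_masks`, `extract_patches`, `ncc`, `mrf_match_and_loss` and `gamma` are illustrative assumptions.

```python
import math
from typing import List, Sequence, Tuple

# Assumed layout (illustrative only): a feature map is indexed as fmap[row][col][channel].
FeatureMap = List[List[List[float]]]


def augment_with_masks(features: FeatureMap, masks: FeatureMap, gamma: float) -> FeatureMap:
    """Append gamma-weighted soft-mask channels to every feature vector."""
    return [
        [feat + [gamma * m for m in mask] for feat, mask in zip(frow, mrow)]
        for frow, mrow in zip(features, masks)
    ]


def extract_patches(fmap: FeatureMap, k: int) -> List[List[float]]:
    """Flatten every k x k patch (stride 1) into one vector."""
    h, w = len(fmap), len(fmap[0])
    patches = []
    for i in range(h - k + 1):
        for j in range(w - k + 1):
            patch: List[float] = []
            for di in range(k):
                for dj in range(k):
                    patch.extend(fmap[i + di][j + dj])
            patches.append(patch)
    return patches


def ncc(a: Sequence[float], b: Sequence[float]) -> float:
    """Normalized cross-correlation (cosine similarity) of two patch vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def mrf_match_and_loss(content: FeatureMap, style: FeatureMap, k: int) -> Tuple[List[int], float]:
    """Match each content patch to its best style patch and sum the squared errors."""
    c_patches = extract_patches(content, k)
    s_patches = extract_patches(style, k)
    matches: List[int] = []
    loss = 0.0
    for p in c_patches:
        # Best match = style patch with the highest NCC (first one wins on ties).
        best = max(range(len(s_patches)), key=lambda idx: ncc(p, s_patches[idx]))
        matches.append(best)
        loss += sum((x - y) ** 2 for x, y in zip(p, s_patches[best]))
    return matches, loss


# Toy example: one content pixel labelled "sky" (mask [sky, ground] = [1, 0]).
# Style pixel 0 is "ground" but has similar raw features; style pixel 1 is "sky".
content_feat, content_mask = [[[1.0, 0.0]]], [[[1.0, 0.0]]]
style_feat = [[[1.0, 0.1], [0.6, 0.8]]]
style_mask = [[[0.0, 1.0], [1.0, 0.0]]]

for gamma in (0.0, 10.0):
    c = augment_with_masks(content_feat, content_mask, gamma)
    s = augment_with_masks(style_feat, style_mask, gamma)
    matches, loss = mrf_match_and_loss(c, s, k=1)
    print(f"gamma={gamma}: matched style patch {matches}, loss={loss:.3f}")
```

With `gamma=0.0` the content pixel matches style patch 0 (the "ground" region), because only the raw features are compared. With `gamma=10.0` the mask channels dominate the correlation and the pixel matches style patch 1 (the "sky" region) instead. In this sketch `gamma` trades semantic fidelity against raw feature similarity. For simplicity the loss is taken over the full augmented patch. A real implementation might exclude the fixed mask channels from the loss.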



Acknowledgements

This work was supported by the National Natural Science Foundation of China (61503128), the Science and Technology Plan Project of Hunan Province (2016TP1020), the Scientific Research Fund of Hunan Provincial Education Department (16C0226, 18A333), the Hengyang Guided Science and Technology Projects and Application-oriented Special Disciplines (Hengkefa [2018]60-31), the Double First-Class University Project of Hunan Province (Xiangjiaotong [2018]469), the Hunan Province Special Funds of Central Government for Guiding Local Science and Technology Development (2018CT5001), the Subject Group Construction Project of Hengyang Normal University (18XKQ02) and the Key Programme (61733004). We would like to thank NVIDIA for the GPU donation.


About this article

Cite this article

Zhao, HH., Rosin, P.L., Lai, YK. et al. Automatic semantic style transfer using deep convolutional neural networks and soft masks. Vis Comput 36, 1307–1324 (2020). https://doi.org/10.1007/s00371-019-01726-2


DOI: https://doi.org/10.1007/s00371-019-01726-2