Abstract

In process algebras, a congruence is an equivalence that remains valid when any subsystem is replaced by an equivalent one. Whether or not an equivalence is a congruence depends on the set of operators used in building systems from subsystems. Numerous congruences have been found, differing from each other in fine details, major ideas, or both, and none of them is good for all situations. The world of congruences thus seems chaotic, which is unpleasant, because the notion of congruence is at the heart of process algebras. This study continues attempts to clarify the big picture by proving that in certain sub-areas, there are no other congruences than those that are already known or found in the study. First, the region below stability-preserving fair testing equivalence is surveyed using an exceptionally small set of operators. The region contains few congruences, which is in sharp contrast with an earlier result on the region below Chaos-Free Failures Divergences (CFFD) equivalence, which contains 40 congruences, some well known and some not. Second, steps are taken towards a general theory of dealing with initial stability, which is a small but popular detail. This theory is applied to the region below CFFD.

1 Introduction

This study is motivated by a striking difference: in the case of sequential computation, the notion of the result of a computation at the highest level of abstraction is simple, clear and widely agreed upon, whereas in the case of concurrent computation, many alternatives are widely used and numerous more alternatives are known to exist. Let us discuss this a bit.

It is universally agreed that a deterministic sequential program computes a partial function. The function is partial, because with some inputs the program may fail to terminate. This nice picture is slightly complicated by the fact that sequential programs may contain intentional nondeterminism, such as in the Miller–Rabin probabilistic primality test [1, 13]; or unwanted nondeterminism, such as in i=i++ + 1; (a Wikipedia example of undefined behavior).\(^{1}\) This issue could be taken into account by declaring that a sequential program computes a relation from the set of inputs to the set of outputs union \(\{\perp \}\): (i, o) is in the relation if and only if, for the input i, o is a possible output or \(o = \perp \) denoting failure to terminate. These abstract views of sequential programs are simple, natural, and widely accepted. At their level of abstraction, they have no rivals.

The situation is entirely different with concurrent programs. A concurrent program computes a behaviour. Behaviours may be—and have been—compared with branching bisimilarity [8], weak bisimilarity [10], CSP failures divergences equivalence [15], Chaos-Free Failures Divergences (CFFD) equivalence [20], and numerous other equivalences. None of them is widely considered as the “most natural” or “right” notion of “similar behaviour”. If there is any agreement, it is that the choice of the most appropriate equivalence depends on the situation. Even the same users keep on switching between different equivalences depending on the task at hand, such as in [15], where, for instance, stable failures equivalence is used when the so-called catastrophic divergence phenomenon prevents the use of failures divergences equivalence.

The famous survey [5], among others, has improved our understanding a lot by presenting many equivalences in a systematic framework. However, such surveys do not provide full information, because they only discuss known equivalences. They leave it open whether there could be unknown useful equivalences with interesting properties.

In many situations the equivalence must be a congruence with respect to the operators that are available for building systems from subsystems. This requirement is so strong that it makes it possible to survey certain regions of equivalences, list all congruences in them, and prove that they contain no other congruences. Chapters 11 and 12 of [15] survey two regions and prove that there are three congruences in each. In [17], all congruences that are implied by the CFFD equivalence were found. This fairly large region contains 40 congruences, including stable failures equivalence, CSP failures divergences equivalence, and trace equivalence. Five kinds of failures, four kinds of infinite traces, two kinds of divergence traces, and two kinds of traces were needed, some of them new. Perhaps none of the previously unknown congruences among the 40 is interesting, but if so, then we know that no interesting congruences are lurking in that region.

A task that is somewhat similar in spirit to fully surveying a region is to choose a property such as deadlock-freedom and find the weakest congruence that preserves the property. Such results have been published in, e.g., [2, 4, 6, 7, 9, 11, 12, 14, 16]. As explained in [17], knowing the weakest congruence helps in designing compositional verification algorithms for the property.

The congruence property depends on the set of operators for building systems. Perhaps the most well known example of this deals with the common choice operator “\(+\)”. Branching bisimilarity, weak bisimilarity, CSP failures divergences equivalence, CFFD equivalence, and many other equivalences are not congruences with respect to it. In CSP, the congruence property was obtained by rejecting the common choice operator and introducing two other choice operators instead. In most other theories, the common choice operator was kept and the equivalence was refined so that it became a congruence.

At this point it is worth mentioning that if we are only interested in so-called safety properties of systems (that is, whatever the system does must be acceptable), then there is a single very widely agreed “right” congruence: trace equivalence. Furthermore, it was proven in [18] that any operator that satisfies a rather natural weak assumption can be constructed from parallel composition and hiding modulo trace equivalence, implying that trace equivalence is a congruence with respect to every “reasonable” operator. This situation is comparable to sequential programs in simplicity and clarity.

Things become truly problematic when so-called liveness properties are also of interest (the system must eventually do something useful, or at least not lose the ability to nondeterministically choose to eventually do something useful). The problems are so severe that they have led to wide adoption of an equivalence that does not imply trace equivalence, that is, CSP failures divergences equivalence.

The above-mentioned results in [15] use a fairly large set of operators. In particular, they use a “throw” operator that rules out many equivalences that would otherwise be congruences. The results in [17] only use parallel composition, hiding, relational renaming, and action prefix. Therefore, where the regions considered by [15] and [17] overlap, [17] gives additional congruences.

In [19], of which the present study is an extension, all congruences were found that are implied by the stability-preserving fair testing equivalence of [14]. This equivalence is a congruence. It is interesting for many reasons. It is the weakest congruence that preserves the property AGEFa, that is, “in all futures always, there is a future where eventually a occurs”. It offers an alternative approach to the verification of liveness properties. With the traditional approach, it is often necessary to explicitly state so-called fairness assumptions, which may be a burden. With fair testing equivalence this is unnecessary, because, so to speak, it has a built-in fairness assumption that is acceptable in many cases. Unlike other congruences for a significant subset of liveness properties, it has a very well-working partial order reduction method [21]. On the theoretical side, its definition is an interesting exception, because it seems somewhat ad-hoc instead of following a familiar pattern.

An important feature of [19] is that only parallel composition, hiding, and functional renaming were used for proving the absence of more congruences. This is a strictly smaller set of operators than in [15] and [17]. In [17] it was proven that if a congruence is implied by strong bisimilarity (this is a very weak assumption) and preserves anything, then it preserves at least the alphabet. It was shown with two counter-examples that the result depends on the availability of the action prefix and relational renaming operators. In [19], one of the counter-examples was encountered again, and six new (albeit uninteresting) congruences were found that do not preserve the alphabet.

The most important finding of [19] was that there is only one congruence between the non-stability-preserving fair testing equivalence and the congruence that only preserves the alphabet: trace equivalence. If one wants to have something like fair testing, then one must go all the way to fair testing. There are no intermediate stops. This is in sharp contrast to [17]. It is also somewhat surprising, because the definition of fair testing seems quite ad-hoc, and because fair testing preserves AGEFa, which is a well-known example of a property that is not linear-time (e.g., [3, p. 32]). The importance of this result is strengthened by the fact that it was obtained in the presence of only parallel composition, hiding, and functional renaming. This, too, differs from [17].

A widely used way to make an equivalence a congruence with respect to the common choice operator is to add information on initial stability: systems that can initially execute an invisible action are deemed inequivalent to systems that cannot. The study [19] was the first one that fully covers a region induced by a stability-preserving congruence. Also the weakest stability-preserving congruence was found. Some unexpected or at least unconventional congruences were found, but they may be considered uninteresting, because they rely on the absence of the action prefix operator.

The present study makes two contributions. First, the conference paper [19] had a strict page limit, leading to dense proofs that are hard to read. The present study attempts to make the results in [19] more readable. Second, it develops a theory that greatly simplifies the treatment of initial stability when proving the absence of unknown congruences, at the cost of assuming the congruence property with respect to more operators than [19]. Therefore, it gives less general results on fair testing equivalence than [19]. On the other hand, it applies to CFFD equivalence.

Section 2 presents the necessary background concepts. The congruences that are implied by stability-preserving fair testing equivalence are introduced in Sect. 3. In Sect. 4, the weakest stability-preserving congruence is found. That stability-preserving fair testing equivalence does not imply more congruences is proven in Sect. 5. The new theory on adding initial stability checking is presented in Sect. 6 and applied to CFFD equivalence in Sect. 7, resulting in 79 congruences. This study is concluded by a discussion section.

2 LTSs and their operators

In this section we list many widely known concepts needed in this study, pointing out little facts that are useful to remember when reading our proofs. We also pay attention to details that vary in the literature, discussing the motivation of our choice.

The empty string is denoted with \(\varepsilon \). The set of strings on A is denoted with \(A^*\), and \(A^+ = A^* {\setminus } \{\varepsilon \}\). If \(\pi \) and \(\sigma \) are strings, then \(\pi \sqsubseteq \sigma \) denotes that \(\pi \) is a prefix of \(\sigma \), that is, there is a string \(\rho \) such that \(\sigma = \pi \rho \). If \(\pi \) is a string and K is a set of strings, then \(\pi \sqsubseteq K\) denotes that there is \(\sigma \in K\) such that \(\pi \sqsubseteq \sigma \). We have \(\varepsilon \sqsubseteq K\) if and only if \(K \ne \emptyset \). We define \(\pi ^{-1}K = \{ \rho \mid \pi \rho \in K \}\). It is nonempty if and only if \(\pi \sqsubseteq K\). Trivially \(\varepsilon ^{-1}K = K\).
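The string notation above can be tried out on small finite sets. The following is a minimal sketch, assuming that strings over A are modelled as Python `str` values (one character per action) and that K is a finite Python set; the function names are hypothetical and not part of the original text.

```python
def is_prefix(pi: str, sigma: str) -> bool:
    """pi is a prefix of sigma: there is rho with sigma == pi + rho."""
    return sigma.startswith(pi)


def is_prefix_of_set(pi: str, K: set) -> bool:
    """pi is a prefix of at least one element of K."""
    return any(is_prefix(pi, sigma) for sigma in K)


def left_quotient(pi: str, K: set) -> set:
    """The set { rho | pi + rho is in K }, written pi^{-1}K in the text."""
    return {sigma[len(pi):] for sigma in K if is_prefix(pi, sigma)}
```

For instance, `left_quotient("a", {"ab", "abc", "b"})` keeps only the continuations of the strings that start with `a`, and the quotient is nonempty exactly when `is_prefix_of_set` holds.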

The invisible action is denoted with \(\tau \). It denotes the occurrence of something that the outside world does not see. This is different from the occurrence of nothing, thus \(\tau \ne \varepsilon \). An alphabet is any set \(\varSigma \) such that \(\varepsilon \notin \varSigma \) and \(\tau \notin \varSigma \). Its elements are called visible actions.

A labelled transition system or LTS is a tuple \((S, \varSigma , \varDelta , {\hat{s}})\) such that \(\varSigma \) is an alphabet, \(\varDelta \subseteq S \times (\varSigma \cup \{\tau \}) \times S\), and \({\hat{s}} \in S\). Elements of S and \(\varDelta \) are called states and transitions, respectively, and \({\hat{s}}\) is the initial state. The transition \((s,a,s')\) may also be denoted with \(s \mathrel {{-}a{\rightarrow }} s'\). By \(s \mathrel {{-}a{\rightarrow }}\) we mean that there is \(s'\) such that \(s \mathrel {{-}a{\rightarrow }} s'\).

If an LTS is shown as a drawing, then, unless otherwise stated, its alphabet is the set of the visible actions along the transitions in the drawing. The alphabet may be specified explicitly in the text or near the bottom right corner of the drawing. For instance, the alphabet of [inline figure] is \(\{a\}\) and the alphabet of [inline figure] is \({\{a,b\}}\). In particular, we will frequently use [inline figure] and [inline figure], their alphabets being \(\emptyset \).

In the constructions of this study, we will often need elements that are not in a given alphabet or in a given set of states. Such entities exist because, by the axiom of foundation in set theory, if X is a set, then X, \(\{X\}\), \(\{\{X\}\}\), and so on are not elements of X. Sometimes in the literature, instead of each LTS having an alphabet of its own, there is a single global alphabet. That convention would make things difficult in the present study, because elements that are not in the alphabet would not be available. We will return to this issue in Sect. 8.

We use L, M, \(L'\), \(M'\), \(L_1\), \(M_1\), and so on to denote LTSs. Unless otherwise stated, \(L = (S, \varSigma , \varDelta , {\hat{s}})\), \(L' = (S', \varSigma ', \varDelta ', {\hat{s}}')\), \(L_1 = (S_1, \varSigma _1, \varDelta _1, {\hat{s}}_1)\), and so on. Because this convention is sometimes unclear, we also use \(\varSigma (L)\) to denote the alphabet of L. By \(s \mathrel {{-}a{\rightarrow }}_i s'\) we mean that \((s,a,s') \in \varDelta _i\).

Researchers widely agree that at the detailed level, it is appropriate to compare behaviours using the following notion. Two LTSs \(L_1\) and \(L_2\) are bisimilar, denoted with \(L_1 \equiv L_2\), if and only if \(\varSigma _1 = \varSigma _2\) and there is a relation\(^{2}\) “\(\sim \)” \(\subseteq S_1 \times S_2\) with the following properties:

1. \({\hat{s}}_1 \sim {\hat{s}}_2\).

2. If \(s_1 \mathrel {{-}a{\rightarrow }}_1 s'_1\) and \(s_1 \sim s_2\), then there is \(s'_2\) such that \(s_2 \mathrel {{-}a{\rightarrow }}_2 s'_2\) and \(s'_1 \sim s'_2\).

3. If \(s_2 \mathrel {{-}a{\rightarrow }}_2 s'_2\) and \(s_1 \sim s_2\), then there is \(s'_1\) such that \(s_1 \mathrel {{-}a{\rightarrow }}_1 s'_1\) and \(s'_1 \sim s'_2\).

It is easy to check that if \(L_1\) and \(L_2\) are isomorphic, then they are bisimilar.
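For finite LTSs, the existence of a relation with the three properties above can be checked mechanically. The following is a minimal sketch, assuming finite state sets and a hypothetical `LTS` tuple type with the string `"tau"` standing for \(\tau \); it starts from the full relation \(S_1 \times S_2\) and removes pairs that violate property 2 or 3 until nothing changes. It is a naive illustration, not an efficient algorithm.

```python
from typing import NamedTuple

TAU = "tau"  # assumed encoding of the invisible action; never in an alphabet


class LTS(NamedTuple):
    states: set
    alphabet: set
    transitions: set  # triples (s, a, s2) with a in alphabet or a == TAU
    initial: object


def successors(l: LTS, s, a) -> set:
    """States reachable from s by one a-labelled transition."""
    return {t for (p, b, t) in l.transitions if p == s and b == a}


def bisimilar(l1: LTS, l2: LTS) -> bool:
    if l1.alphabet != l2.alphabet:
        return False
    labels = l1.alphabet | {TAU}
    rel = {(s1, s2) for s1 in l1.states for s2 in l2.states}
    changed = True
    while changed:
        changed = False
        for (s1, s2) in list(rel):
            ok = True
            for a in labels:
                n1, n2 = successors(l1, s1, a), successors(l2, s2, a)
                # property 2: every move of s1 is matched by s2
                forth = all(any((t1, t2) in rel for t2 in n2) for t1 in n1)
                # property 3: every move of s2 is matched by s1
                back = all(any((t1, t2) in rel for t1 in n1) for t2 in n2)
                if not (forth and back):
                    ok = False
                    break
            if not ok:
                rel.discard((s1, s2))
                changed = True
    # property 1: the initial states must be related
    return (l1.initial, l2.initial) in rel
```

Note that \(\tau \) is treated like any other label here, in line with the definition above.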

The reachable part of an LTS \((S, \varSigma , \varDelta , {\hat{s}})\) is \((S', \varSigma , \varDelta ', {\hat{s}})\), where \(S'\) and \(\varDelta '\) consist of those states and transitions to which there is a path from \({\hat{s}}\). Any LTS is bisimilar with its reachable part.
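As an illustration of the reachable part, the following minimal sketch assumes finite LTSs represented by a hypothetical `LTS` tuple type, with `"tau"` encoding \(\tau \). It collects the states reachable from the initial state and keeps exactly the transitions that start in such a state.

```python
from typing import NamedTuple

TAU = "tau"  # assumed encoding of the invisible action


class LTS(NamedTuple):
    states: set
    alphabet: set
    transitions: set  # triples (s, a, s2)
    initial: object


def reachable_part(l: LTS) -> LTS:
    seen = {l.initial}
    stack = [l.initial]
    while stack:
        s = stack.pop()
        for (p, a, t) in l.transitions:
            if p == s and t not in seen:
                seen.add(t)
                stack.append(t)
    # a transition is reachable exactly when its start state is reachable
    trans = {(p, a, t) for (p, a, t) in l.transitions if p in seen}
    # the alphabet is kept as it is, as in the definition
    return LTS(seen, l.alphabet, trans, l.initial)
```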

Next we define the six operators that this study will focus on.

Parallel composition \(L_1 {\Vert }L_2\). It is the reachable part of \((S, \varSigma , \varDelta , {\hat{s}})\), where \(S = S_1 \times S_2\), \(\varSigma = \varSigma _1 \cup \varSigma _2\), \({\hat{s}} = ({\hat{s}}_1, {\hat{s}}_2)\), and \((s_1,s_2) \mathrel {{-}a{\rightarrow }} (s'_1,s'_2)\) if and only if

- \(a \notin \varSigma _2\), \(s_1 \mathrel {{-}a{\rightarrow }}_1 s'_1\), and \(s'_2 = s_2 \in S_2\),

- \(a \notin \varSigma _1\), \(s_2 \mathrel {{-}a{\rightarrow }}_2 s'_2\), and \(s'_1 = s_1 \in S_1\), or

- \(a \in \varSigma _1 \cap \varSigma _2\), \(s_1 \mathrel {{-}a{\rightarrow }}_1 s'_1\), and \(s_2 \mathrel {{-}a{\rightarrow }}_2 s'_2\).

That is, if a belongs to the alphabets of both components, then an a-transition of the parallel composition consists of simultaneous a-transitions of both components. If a belongs to the alphabet of one but not the other component, then that component may make an a-transition while the other component stays in its current state. Also each \(\tau \)-transition of the parallel composition consists of one component making a \(\tau \)-transition without the other participating. The result of the parallel composition is pruned by only taking the reachable part.
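The three cases of the definition can be sketched in code as follows. This is a minimal illustration, assuming finite LTSs in a hypothetical `LTS` tuple type with `"tau"` encoding \(\tau \); it builds only the reachable part by exploring from the initial pair.

```python
from typing import NamedTuple

TAU = "tau"  # assumed encoding of the invisible action; never in an alphabet


class LTS(NamedTuple):
    states: set
    alphabet: set
    transitions: set  # triples (s, a, s2)
    initial: object


def parallel(l1: LTS, l2: LTS) -> LTS:
    init = (l1.initial, l2.initial)
    states, trans, stack = {init}, set(), [init]
    while stack:
        s1, s2 = stack.pop()
        moves = []
        for (p, a, t) in l1.transitions:
            if p != s1:
                continue
            if a in l2.alphabet:
                # shared visible action: both components move together
                moves += [(a, (t, u)) for (q, b, u) in l2.transitions
                          if q == s2 and b == a]
            else:
                # tau, or a visible action that is not in the alphabet of l2
                moves.append((a, (t, s2)))
        for (q, b, u) in l2.transitions:
            if q == s2 and b not in l1.alphabet:
                # tau, or a visible action that is not in the alphabet of l1
                moves.append((b, (s1, u)))
        for a, target in moves:
            trans.add(((s1, s2), a, target))
            if target not in states:
                states.add(target)
                stack.append(target)
    return LTS(states, l1.alphabet | l2.alphabet, trans, init)
```

In the test example below, `b` is in both alphabets, so it can occur only after both components have reached a state where they offer it.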

It is easy to check that \(L_1 {\Vert }L_2\) is isomorphic to (and thus bisimilar with) \(L_2 {\Vert }L_1\), and \((L_1 {\Vert }L_2) {\Vert }L_3\) is isomorphic to \(L_1 {\Vert }(L_2 {\Vert }L_3)\). This means that “\({\Vert }\)” can be considered commutative and associative.

Hiding \(L {\setminus } A\). Let A be a set. The hiding of A in L is \((S, \varSigma ', \varDelta ', {\hat{s}})\), where \(\varSigma ' = \varSigma {\setminus } A\) and \(\varDelta ' = \{ (s,a,s') \in \varDelta \mid a \notin A \} \cup \{ (s,\tau ,s') \mid \exists a \in A: (s,a,s') \in \varDelta \}\). That is, labels of transitions that are in A are replaced by \(\tau \) and removed from the alphabet. Other labels of transitions are not affected.

Relational renaming \(L\varPhi \). Let \(\varPhi \) be a set of pairs such that for every \((a,b) \in \varPhi \) we have \(\tau \ne a \ne \varepsilon \) and \(\tau \ne b \ne \varepsilon \). The domain of \(\varPhi \) is \(\mathcal {D}(\varPhi ) = \{ a \mid \exists b: (a,b) \in \varPhi \}\). Let the predicate \(\varPhi (a,b)\) hold if and only if either \((a,b) \in \varPhi \) or \(b = a \notin \mathcal {D}(\varPhi )\). The relational renaming of L with \(\varPhi \) is \((S, \varSigma ', \varDelta ', {\hat{s}})\), where \(\varSigma ' = \{ b \mid \exists a \in \varSigma : \varPhi (a,b) \}\) and \(\varDelta ' = \{(s,b,s') \mid \exists a: (s,a,s') \in \varDelta \wedge \varPhi (a,b) \}\).

That is, \(\varPhi \) renames visible actions to visible actions. A visible action may be renamed to more than one visible action. In that case, the transitions labelled by that action are duplicated as needed. If \(\varPhi \) specifies no new names for an action, the transitions labelled by it remain unchanged. In particular, \(\tau \)-transitions remain unchanged. The alphabet of the result consists of the new names of the original visible actions where such have been defined, and of the remaining original visible actions as such. Pairs in \(\varPhi \) whose first component is not in \(\varSigma \) have no effect. This design makes it simple to specify the intended changes without causing accidental removal of the transitions that are not intended to change.
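Hiding and relational renaming, as defined above, only relabel transitions and adjust the alphabet. The following minimal sketch assumes finite LTSs in a hypothetical `LTS` tuple type, with `"tau"` encoding \(\tau \) and \(\varPhi \) given as a finite set of pairs that never mention `"tau"`.

```python
from typing import NamedTuple

TAU = "tau"  # assumed encoding of the invisible action


class LTS(NamedTuple):
    states: set
    alphabet: set
    transitions: set  # triples (s, a, s2)
    initial: object


def hide(l: LTS, A: set) -> LTS:
    """Labels in A become TAU and leave the alphabet; the rest is unchanged."""
    trans = {(s, TAU if a in A else a, t) for (s, a, t) in l.transitions}
    return LTS(l.states, l.alphabet - set(A), trans, l.initial)


def rename(l: LTS, phi: set) -> LTS:
    """Relational renaming: phi is a set of pairs (old name, new name)."""
    domain = {a for (a, b) in phi}

    def images(a):
        # the set { b | Phi(a, b) }; actions outside the domain keep their name
        if a in domain:
            return {b for (x, b) in phi if x == a}
        return {a}

    alphabet = {b for a in l.alphabet for b in images(a)}
    trans = {(s, b, t) for (s, a, t) in l.transitions for b in images(a)}
    return LTS(l.states, alphabet, trans, l.initial)
```

Functional renaming is the special case where `phi` contains at most one pair for each first component.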

Functional renaming \(\phi (L)\). Functional renaming is the subcase of relational renaming where \(\varPhi \) specifies at most one new action name for each action. It is denoted with \(\phi (L)\), where \(\phi (a) = b\) if \((a,b) \in \varPhi \), and \(\phi (a) = a\), otherwise. It is included in our list of six operators, because we will encounter some equivalences that are congruences with respect to it but not with respect to relational renaming.

We will frequently use the following two special cases of functional renaming as helpful notation in proofs. They attach an integer i to visible actions and remove it again. They make it easy to ensure that in a parallel composition, precisely those actions synchronize that we want to synchronize. In the notation, A is an alphabet, \(\varepsilon \ne a \ne \tau \), and \(\varepsilon \ne a_j \ne \tau \) for \(1 \le j \le n\). Without loss of generality we assume that always \(\varepsilon \ne a^{[i]} \ne \tau \).

Action prefix \(a.L\). Let \(a \ne \varepsilon \). Let \(\varSigma ' = \varSigma \cup \{a\}\) if \(a \ne \tau \), and \(\varSigma ' = \varSigma \) otherwise. The operator a.L yields \((S', \varSigma ', \varDelta ', {\hat{s}}')\), where \({\hat{s}}'\) is a new state (that is, \({\hat{s}}' \notin S\)), \(S' = S \cup \{{\hat{s}}'\}\), and \(\varDelta ' = \varDelta \cup \{({\hat{s}}', a, {\hat{s}})\}\). That is, a.L starts by executing a, after which it is in the initial state of L.

Choice \(L_1 + L_2\). Roughly speaking, the choice between \(L_1\) and \(L_2\) starts by executing an initial transition of \(L_1\) or an initial transition of \(L_2\). This transition represents a choice between \(L_1\) and \(L_2\). Then \(L_1 + L_2\) continues like the chosen LTS continues after the corresponding transition.

This may be formalized by taking a disjoint union of \(L_1\) and \(L_2\), and adding a new state that acts as the initial state of the result. For each initial transition of \(L_1\) and of \(L_2\), a copy is made that starts at the new state. Indexing of state names is used to ensure that the union is disjoint. That is, \(L_1 + L_2 = (S', \varSigma ', \varDelta ', {\hat{s}}')\), where \(S' = S_1^{[1]} \cup S_2^{[2]} \cup \{{\hat{s}}'\}\), \(\varSigma ' = \varSigma _1 \cup \varSigma _2\), \(\varDelta ' = \varDelta '_1 \cup \varDelta ''_1 \cup \varDelta '_2 \cup \varDelta ''_2\), and \({\hat{s}}' \notin S_1^{[1]} \cup S_2^{[2]}\), where \(\varDelta '_i = \{ (s^{[i]}, a, s'^{[i]}) \mid (s,a,s') \in \varDelta _i \}\) and \(\varDelta ''_i = \{ ({\hat{s}}', a, s'^{[i]}) \mid ({\hat{s}}_i,a,s') \in \varDelta _i \}\) for \(i \in \{1,2\}\).
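Action prefix and choice both need a state that is guaranteed to be new. The following minimal sketch assumes finite LTSs in a hypothetical `LTS` tuple type with `"tau"` encoding \(\tau \). For the prefix it uses the state set itself (as a `frozenset`) as the new state, echoing the remark that a set is not an element of itself; for the choice it indexes the states with 1 and 2 and uses the pair `(None, 0)` as the new initial state. Both encodings are illustrative assumptions.

```python
from typing import NamedTuple

TAU = "tau"  # assumed encoding of the invisible action


class LTS(NamedTuple):
    states: set
    alphabet: set
    transitions: set  # triples (s, a, s2)
    initial: object


def prefix(a, l: LTS) -> LTS:
    fresh = frozenset(l.states)  # a set is never an element of itself
    alphabet = l.alphabet | ({a} if a != TAU else set())
    trans = l.transitions | {(fresh, a, l.initial)}
    return LTS(l.states | {fresh}, alphabet, trans, fresh)


def choice(l1: LTS, l2: LTS) -> LTS:
    init = (None, 0)  # differs from every indexed state (s, 1) and (s, 2)
    states, trans = {init}, set()
    for i, l in ((1, l1), (2, l2)):
        # indexed copy of the component, keeping the union disjoint
        states |= {(s, i) for s in l.states}
        trans |= {((s, i), a, (t, i)) for (s, a, t) in l.transitions}
        # copies of the initial transitions, starting at the new state
        trans |= {(init, a, (t, i)) for (s, a, t) in l.transitions
                  if s == l.initial}
    return LTS(states, l1.alphabet | l2.alphabet, trans, init)
```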

Also “\(+\)” can be considered commutative and associative (up to bisimilarity).

Let “\(\cong \)” and “\(\cong '\)” be equivalences on LTSs. We say that “\(\cong \)” implies “\(\cong '\)” or “\(\cong '\)” is at least as weak as “\(\cong \)” if and only if “\(\cong \)” \(\subseteq \) “\(\cong '\)”. This is equivalent to the following: for any LTSs \(L_1\) and \(L_2\) we have \(L_1 \cong L_2 \Rightarrow L_1 \cong ' L_2\).

Let “\(\cong \)” be an equivalence on LTSs and \(\mathsf {op}\) be a unary operator on LTSs. We say that “\(\cong \)” is a congruence with respect to \(\mathsf {op}\) if and only if for every L and \(L'\), \(L \cong L'\) implies \(\mathsf {op}(L) \cong \mathsf {op}(L')\). When we say that an equivalence is a congruence with respect to parallel composition, we mean that it is a congruence with respect to the two unary operators \(\mathsf {op}_1(L) := L_1 {\Vert }L\) and \(\mathsf {op}_2(L) := L {\Vert }L_2\). Because “\({\Vert }\)” is commutative, this is equivalent to saying that the equivalence is a congruence with respect to \(\mathsf {op}_1(L)\). The similar convention and remark apply to “\(+\)”.

It is easy to show with induction that if \(f(L_1, \ldots , L_n)\) is an expression, \(L_i \cong L'_i\) for \(1 \le i \le n\), and “\(\cong \)” is a congruence with respect to all operators used in f, then \(f(L_1, \ldots , L_n) \cong f(L'_1, \ldots , L'_n)\).

3 Stability-preserving fair testing and the region below it

In this section we define 4 times 5 equivalences in a two-dimensional fashion. Stability-preserving fair testing equivalence is the strongest equivalence among them. We prove that 17 of these equivalences are congruences with respect to parallel composition, hiding, and functional renaming. We investigate the congruence properties of these 17 also with respect to relational renaming, action prefix, and choice. We will see that the remaining three equivalences are not congruences with respect to parallel composition.

An LTS L is unstable if and only if \({\hat{s}} \mathrel {{-}\tau {\rightarrow }}\), and stable otherwise. If L is stable we define \(\mathsf {en}(L) := \{ a \in \varSigma \mid {\hat{s}} \mathrel {{-}a{\rightarrow }} \}\), that is, the set of visible actions that L can execute in its initial state. If L is unstable, then the value of \(\mathsf {en}(L)\) is not important. By defining it as \(\mathsf {en}(L) := \{\tau \}\) we get the handy property that if L is stable and \(L'\) is unstable, then certainly \(\mathsf {en}(L) \ne \mathsf {en}(L')\). The following lemma tells how stability and \(\mathsf {en}\) behave in LTS expressions.

Lemma 1

- \(L_1 {\Vert }L_2\) is stable if and only if both \(L_1\) and \(L_2\) are stable. Then \(\mathsf {en}(L_1 {\Vert }L_2) = (\mathsf {en}(L_1) {\setminus } \varSigma _2) \cup (\mathsf {en}(L_2) {\setminus } \varSigma _1) \cup (\mathsf {en}(L_1) \cap \mathsf {en}(L_2))\).

- \(L {\setminus } A\) is stable if and only if L is stable and \(\mathsf {en}(L) \cap A = \emptyset \). Then \(\mathsf {en}(L {\setminus } A) = \mathsf {en}(L)\).

- \(L\varPhi \) is stable if and only if L is stable. Then \(\mathsf {en}(L\varPhi ) = \{ b \mid \exists a \in \mathsf {en}(L): \varPhi (a,b) \}\).

- \(\phi (L)\) is stable if and only if L is stable. Then \(\mathsf {en}(\phi (L)) = \{ \phi (a) \mid a \in \mathsf {en}(L) \}\).

- a.L is stable if and only if \(a \ne \tau \). Then \(\mathsf {en}(a.L) = \{a\}\).

- \(L_1 + L_2\) is stable if and only if both \(L_1\) and \(L_2\) are stable. Then \(\mathsf {en}(L_1 + L_2) = \mathsf {en}(L_1) \cup \mathsf {en}(L_2)\).
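Stability and \(\mathsf {en}\) are easy to compute for a finite LTS, which makes it possible to spot-check individual claims of the lemma on examples. The following minimal sketch assumes a hypothetical `LTS` tuple type with `"tau"` encoding \(\tau \), and includes a small `hide` helper only so that the hiding claim can be tried out; it illustrates the claim and does not prove it.

```python
from typing import NamedTuple

TAU = "tau"  # assumed encoding of the invisible action


class LTS(NamedTuple):
    states: set
    alphabet: set
    transitions: set  # triples (s, a, s2)
    initial: object


def is_stable(l: LTS) -> bool:
    """L is stable when its initial state has no outgoing tau-transition."""
    return not any(s == l.initial and a == TAU for (s, a, t) in l.transitions)


def en(l: LTS) -> set:
    """Initially enabled visible actions, or {TAU} when L is unstable."""
    if not is_stable(l):
        return {TAU}
    return {a for (s, a, t) in l.transitions if s == l.initial}


def hide(l: LTS, A: set) -> LTS:
    """Hiding as defined in Sect. 2, repeated here to keep the sketch self-contained."""
    trans = {(s, TAU if a in A else a, t) for (s, a, t) in l.transitions}
    return LTS(l.states, l.alphabet - set(A), trans, l.initial)
```

Hiding an action that is initially enabled makes the result unstable, whereas hiding any other action leaves both stability and \(\mathsf {en}\) unchanged.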

If \(s \in S\), \(s' \in S\), and \(\sigma \in \varSigma ^*\), then \(s \mathrel {{=}\sigma {\Rightarrow }} s'\) denotes that L contains a path from s to \(s'\) such that the sequence of visible actions along it is \(\sigma \). In particular, \(s \mathrel {{=}\varepsilon {\Rightarrow }} s\) holds for every \(s \in S\). The notation \(s \mathrel {{=}\sigma {\Rightarrow }}\) means that there is \(s'\) such that \(s \mathrel {{=}\sigma {\Rightarrow }} s'\). The set of traces of L is \(\mathsf {Tr}(L) := \{ \sigma \mid {\hat{s}} \mathrel {{=}\sigma {\Rightarrow }} \}\). If L is stable, then \(\mathsf {en}(L) = \mathsf {Tr}(L) \cap \varSigma \).

A state s of L refuses the string \(\rho \) if and only if \(s \mathrel {{=}\rho {\Rightarrow }}\) does not hold. That is, refusing a string means inability to execute it to completion. Refusing a set means refusing its every element. A tree failure of L is a pair \((\sigma , K)\) where \(\sigma \in \varSigma ^*\) and \(K \subseteq \varSigma ^+\) such that there is s such that \({\hat{s}} \mathrel {{=}\sigma {\Rightarrow }} s\) and s refuses K [14]. The empty string \(\varepsilon \) is ruled out from K because \(s \mathrel {{=}\varepsilon {\Rightarrow }}\) holds for every state s. In the failures of CSP [15] or CFFD [17], K is a set of visible actions, while now it is a set of strings of visible actions.
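For a finite LTS and a finite K, membership of \((\sigma , K)\) in the set of tree failures can be decided directly from the definitions. The following minimal sketch assumes a hypothetical `LTS` tuple type with `"tau"` encoding \(\tau \), single-character visible actions, and strings modelled as Python `str` values. The general definition also allows infinite K, which this sketch does not cover.

```python
from typing import NamedTuple

TAU = "tau"  # assumed encoding of the invisible action


class LTS(NamedTuple):
    states: set
    alphabet: set
    transitions: set  # triples (s, a, s2)
    initial: object


def tau_closure(l: LTS, states: set) -> set:
    """All states reachable from the given ones via tau-transitions only."""
    seen, stack = set(states), list(states)
    while stack:
        s = stack.pop()
        for (p, a, t) in l.transitions:
            if p == s and a == TAU and t not in seen:
                seen.add(t)
                stack.append(t)
    return seen


def after(l: LTS, start, sigma: str) -> set:
    """States s2 such that start =sigma=> s2."""
    current = tau_closure(l, {start})
    for a in sigma:
        step = {t for (p, b, t) in l.transitions if p in current and b == a}
        current = tau_closure(l, step)
    return current


def refuses(l: LTS, s, rho: str) -> bool:
    """s refuses rho when s =rho=> does not hold."""
    return not after(l, s, rho)


def is_tree_failure(l: LTS, sigma: str, K: set) -> bool:
    """Some state reached via sigma refuses every string in K."""
    return any(all(refuses(l, s, rho) for rho in K)
               for s in after(l, l.initial, sigma))
```

Because no state refuses the empty string, a set K that contains it never yields a tree failure, which matches the requirement \(K \subseteq \varSigma ^+\).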

The set of the tree failures of L is denoted with \(\mathsf {Tf}(L)\). The following lemmas express simple properties of tree failures that will be used in the sequel.

Lemma 2

1. If \(\varSigma = \emptyset \), then \(\mathsf {Tr}(L) = \{\varepsilon \}\) and \(\mathsf {Tf}(L) = \{(\varepsilon , \emptyset )\}\).

2. If \(\sigma \in \mathsf {Tr}(L)\), then \((\sigma , \emptyset ) \in \mathsf {Tf}(L)\).

3. If \(\sigma \notin \mathsf {Tr}(L)\), then, for every \(\pi \) and K, \((\sigma \pi , K) \notin \mathsf {Tf}(L)\).

Proof

The first two claims are immediate from the definitions. The third claim follows from the fact that if \(\sigma \notin \mathsf {Tr}(L)\), then \(\sigma \pi \notin \mathsf {Tr}(L)\). \(\square \)

Lemma 3

Assume that \({\hat{s}} \mathrel {{=}\sigma {\Rightarrow }} s\) and, for every \(a \in \varSigma \), \(\lnot (s \mathrel {{=}a{\Rightarrow }})\). Then \((\sigma , K) \in \mathsf {Tf}(L)\) if and only if \(K \subseteq \varSigma ^+\).

Proof

It is immediate from the definition that if \((\sigma , K) \in \mathsf {Tf}(L)\), then \(K \subseteq \varSigma ^+\). If \(K \subseteq \varSigma ^+\), the state s guarantees that \((\sigma , K) \in \mathsf {Tf}(L)\) by blocking the first action of every element in K. \(\square \)

In particular, [inline figure], implying [inline figure]. This is a major difference between tree failures and the failures in CSP or CFFD theories. In CSP failures divergences equivalence, divergence is catastrophic [15], meaning, among other things, that for every L and \(L'\) with \(\varSigma = \varSigma '\), we have [inline figure] and [inline figure]. Also CFFD equivalence is sensitive to divergence, but in a much less dramatic fashion [17]. We mention already now that fair testing equivalence is insensitive to divergence.

Lemma 4

Assume that L is stable and \(K \subseteq \varSigma ^+\). We have \((\varepsilon , K) \in \mathsf {Tf}(L)\) if and only if \(K \cap \mathsf {Tr}(L) = \emptyset \).

Proof

Because L is stable, \({\hat{s}} \mathrel {{=}\varepsilon {\Rightarrow }} s\) implies \(s = {\hat{s}}\). Therefore, \((\varepsilon , K) \in \mathsf {Tf}(L)\) if and only if \({\hat{s}}\) refuses K. Furthermore, \({\hat{s}}\) refuses \(\rho \) if and only if \(\rho \notin \mathsf {Tr}(L)\). \(\square \)

The notation \(L_1 \preceq L_2\) denotes that for every \((\sigma , K) \in \mathsf {Tf}(L_1)\), either \((\sigma , K) \in \mathsf {Tf}(L_2)\) or there is \(\pi \) such that \(\pi \sqsubseteq K\) and \((\sigma \pi , \pi ^{-1}K) \in \mathsf {Tf}(L_2)\). The latter condition is motivated by the following example. If [inline figure], then \((\varepsilon , \{aa\}) \notin \mathsf {Tf}(L)\). Even so, [inline figure] may fail to execute b. Here \((\sigma \pi , \pi ^{-1}K) \in \mathsf {Tf}(L)\), where \(\sigma = \varepsilon \), \(\pi = a\), and \(\pi ^{-1}K = \{a\}\). For a more detailed discussion, please see [14].

The condition \((\sigma , K) \in \mathsf {Tf}(L_2)\) is only needed to deal with the case \(K = \emptyset \), because when \(K \ne \emptyset \) it is obtained from the latter condition by choosing \(\pi = \varepsilon \). The LTSs \(L_1\) and \(L_2\) are fair testing equivalent, if and only if \(\varSigma _1 = \varSigma _2\), \(L_1 \preceq L_2\), and \(L_2 \preceq L_1\) [14].
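The condition that \(L_1 \preceq L_2\) imposes on a single pair \((\sigma , K)\) can be checked mechanically when the LTS and K are finite. The following minimal sketch assumes a hypothetical `LTS` tuple type with `"tau"` encoding \(\tau \), single-character visible actions, and strings as Python `str` values; the function `covered` is a hypothetical name for "either \((\sigma , K) \in \mathsf {Tf}(L_2)\) or some prefix \(\pi \) of K works". It checks one pair only and is not a decision procedure for fair testing equivalence, which quantifies over all tree failures.

```python
from typing import NamedTuple

TAU = "tau"  # assumed encoding of the invisible action


class LTS(NamedTuple):
    states: set
    alphabet: set
    transitions: set  # triples (s, a, s2)
    initial: object


def after(l: LTS, start, sigma: str) -> set:
    """States s2 such that start =sigma=> s2."""
    def close(states):
        seen, stack = set(states), list(states)
        while stack:
            s = stack.pop()
            for (p, a, t) in l.transitions:
                if p == s and a == TAU and t not in seen:
                    seen.add(t)
                    stack.append(t)
        return seen

    current = close({start})
    for a in sigma:
        current = close({t for (p, b, t) in l.transitions
                         if p in current and b == a})
    return current


def is_tree_failure(l: LTS, sigma: str, K: set) -> bool:
    """Some state reached via sigma refuses every string in K."""
    return any(all(not after(l, s, rho) for rho in K)
               for s in after(l, l.initial, sigma))


def covered(l2: LTS, sigma: str, K: set) -> bool:
    """The condition of the preorder for one pair (sigma, K), finite K."""
    if is_tree_failure(l2, sigma, K):
        return True
    prefixes = {rho[:i] for rho in K for i in range(len(rho) + 1)}
    for pi in prefixes:
        quotient = {rho[len(pi):] for rho in K if rho.startswith(pi)}
        if is_tree_failure(l2, sigma + pi, quotient):
            return True
    return False
```

In the test example, the LTS `L3` can execute `aa` from its initial state, so \((\varepsilon , \{aa\})\) is not a tree failure of it, but after one `a` it may be in a state that refuses `a`; this is the situation handled by the second alternative with \(\pi = a\).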

If A and B are sets, let \(A \mathrel {\#}B := (A {\setminus } B) \cup (B {\setminus } A)\).

Lemma 5

The following relation is an equivalence on sets: \(A \approx B\) if and only if \(A \mathrel {\#}B\) is finite.

Proof

Because \(A \mathrel {\#}A = \emptyset \), “\(\approx \)” is reflexive. Because \(A \mathrel {\#}B = B \mathrel {\#}A\), “\(\approx \)” is symmetric. To prove transitivity, assume that \(A \approx B\) and \(B \approx C\). That is, \(A \mathrel {\#}B\) and \(B \mathrel {\#}C\) are finite. If \(a \in A {\setminus } C\), then \(a \in A {\setminus } B\) or \(a \in B {\setminus } C\). So \(A {\setminus } C \subseteq (A {\setminus } B) \cup (B {\setminus } C)\). A symmetric claim holds if \(a \in C {\setminus } A\). Thus \(A \mathrel {\#}C \subseteq (A \mathrel {\#}B) \cup (B \mathrel {\#}C)\). Therefore, also \(A \mathrel {\#}C\) is finite, that is, \(A \approx C\). \(\square \)

Lemma 6

Let \(f_1(L), \ldots , f_n(L)\) be functions from LTSs to some sets \(D_1, \ldots , D_n\), and let “\(\approx _i\)” be equivalences on \(D_i\) for \(1 \le i \le n\). Assume that “\(\cong \)” has been defined via \(L \cong L'\) if and only if for \(1 \le i \le n\), \(f_i(L) \approx _i f_i(L')\). Then “\(\cong \)” is an equivalence.

Proof

For any L and for \(1 \le i \le n\), \(f_i(L) \approx _i f_i(L)\), because “\(\approx _i\)” is reflexive. Therefore, \(L \cong L\), that is, “\(\cong \)” is reflexive. If \(L_1 \cong L_2\), then, for \(1 \le i \le n\), \(f_i(L_1) \approx _i f_i(L_2)\). The symmetry of “\(\approx _i\)” yields \(f_i(L_2) \approx _i f_i(L_1)\). So \(L_2 \cong L_1\) and “\(\cong \)” is symmetric. If \(L_1 \cong L_2\) and \(L_2 \cong L_3\), then, for \(1 \le i \le n\), \(f_i(L_1) \approx _i f_i(L_2) \approx _i f_i(L_3)\), yielding \(f_i(L_1) \approx _i f_i(L_3)\) by the transitivity of “\(\approx _i\)”. This means \(L_1 \cong L_3\). Therefore, “\(\cong \)” is transitive. \(\square \)

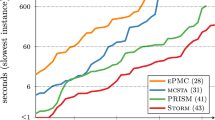

We now define a number of equivalences, of which twenty will be discussed in detail. The twenty will be shown in Fig. 1. Five of them do not preserve initial stability. The remaining 15 are defined by using one of the five to compare unstable LTSs, one of three equivalences to compare stable LTSs, and declaring that a stable and an unstable LTS are never equivalent. Eight of these 20 equivalences do not preserve the alphabet. If they were not congruences, they would be uninteresting indeed. However, they are congruences, and thus serve as examples of oddities that may be found when studying all congruences. The reader can skip them by skipping everything that contains \(\#\) or \(\perp \).

Definition 7

Let \(L_1\) and \(L_2\) be LTSs, and let \(\{x,y\} \subseteq \{{\perp }, {\#}, {\mathsf {\varSigma }}, \mathsf {en}, \mathsf {tr}, \mathsf {ft}\}\). We define

\(L_1 \cong _{\perp }L_2\) holds for every \(L_1\) and \(L_2\),

\(L_1 \cong ^{\textsf {}}_{\textsf {\#}} L_2\) if and only if \(\varSigma _1 \mathrel {\#}\varSigma _2\) is finite,

\(L_1 \cong _{\mathsf {\varSigma }}L_2\) if and only if \(\varSigma _1 = \varSigma _2\),

\(L_1 \cong ^{\textsf {}}_{\textsf {en}} L_2\) if and only if \(\varSigma _1 = \varSigma _2\) and \(\mathsf {en}(L_1) = \mathsf {en}(L_2)\) (this one will not be used on unstable LTSs),

\(L_1 \cong _\mathsf {tr}L_2\) if and only if \(\varSigma _1 = \varSigma _2\) and \(\mathsf {Tr}(L_1) = \mathsf {Tr}(L_2)\) (trace equivalence),

\(L_1 \cong _\mathsf {ft}L_2\) if and only if \(\varSigma _1 = \varSigma _2\), \(L_1 \preceq L_2\), and \(L_2 \preceq L_1\) (fair testing equivalence), and

\(L_1 \cong ^x_y L_2\) if and only if

\(L_1 \cong _x L_2\) and \(L_1\) and \(L_2\) are both stable, or

\(L_1 \cong _y L_2\) and \(L_1\) and \(L_2\) are both unstable (stability-preserving equivalences).

For instance, “\(\cong ^{\textsf {ft}}_{\textsf {tr}}\)” compares stable LTSs with fair testing equivalence and unstable LTSs with trace equivalence. It also preserves initial stability, that is, \(L_1 \cong ^{\textsf {ft}}_{\textsf {tr}} L_2\) implies \(L_1\cong ^{\perp }_{\perp }L_2\). The relation “\(\cong ^{\mathsf {\varSigma }}_{\mathsf {\varSigma }}\)” equates two LTSs if and only if they have the same alphabet and either both or neither of them is stable.
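The construction of “\(\cong ^x_y\)” from “\(\cong _x\)” and “\(\cong _y\)” can be sketched as a higher-order function. The Python code below is a minimal illustration under stated assumptions: an LTS is stable if and only if no \(\tau \)-transition leaves its initial state, and \(\mathsf {en}(L)\) is the set of visible actions that label transitions leaving the initial state. Both readings are assumptions here, because the definitions are given in Sect. 2. The class `LTS` and all function names are hypothetical.

```python
from dataclasses import dataclass

TAU = "tau"  # assumed name for the invisible action

@dataclass(frozen=True)
class LTS:
    alphabet: frozenset     # the visible actions (Sigma)
    transitions: frozenset  # triples (state, action, state)
    initial: str

def is_stable(l):
    """Assumed reading: no tau-transition leaves the initial state."""
    return not any(s == l.initial and a == TAU for (s, a, _) in l.transitions)

def enabled(l):
    """Assumed reading of en(L): visible actions leaving the initial state."""
    return frozenset(a for (s, a, _) in l.transitions
                     if s == l.initial and a != TAU)

def eq_bottom(l1, l2):
    return True  # holds for every pair of LTSs

def eq_sigma(l1, l2):
    return l1.alphabet == l2.alphabet

def eq_en(l1, l2):
    return eq_sigma(l1, l2) and enabled(l1) == enabled(l2)

def stability_preserving(eq_x, eq_y):
    """Combine eq_x (for stable LTSs) and eq_y (for unstable LTSs)."""
    def eq(l1, l2):
        s1, s2 = is_stable(l1), is_stable(l2)
        if s1 and s2:
            return eq_x(l1, l2)
        if not s1 and not s2:
            return eq_y(l1, l2)
        return False  # a stable and an unstable LTS are never equated
    return eq

eq_en_bottom = stability_preserving(eq_en, eq_bottom)

sigma = frozenset({"a", "b"})
stable_a = LTS(sigma, frozenset({("s0", "a", "s1")}), "s0")
stable_a_long = LTS(sigma, frozenset({("s0", "a", "s1"), ("s1", "b", "s2")}), "s0")
unstable = LTS(sigma, frozenset({("s0", TAU, "s1")}), "s0")

print(eq_en_bottom(stable_a, stable_a_long))  # both stable, same alphabet and en
print(eq_en_bottom(stable_a, unstable))       # one stable, one unstable
```

The first comparison succeeds although the two LTSs have different traces, which illustrates how little “\(\cong ^{\mathsf {en}}_{\perp }\)” observes. The second fails solely because of initial stability.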

Lemma 8

If “\(\cong _x\)” is an equivalence on stable LTSs and “\(\cong _y\)” is an equivalence on unstable LTSs, then “\(\cong ^x_y\)” is an equivalence on all LTSs.

Proof

If L is stable then \(L \cong _x L\) holds and yields \(L \cong ^x_y L\). Otherwise \(L \cong _y L\) holds and yields \(L \cong ^x_y L\).

Assume \(L_1 \cong ^x_y L_2\). If \(L_1\) is stable, then \(L_2\) is as well and \(L_1 \cong _x L_2\). It implies \(L_2 \cong _x L_1\) and \(L_2 \cong ^x_y L_1\). If \(L_1\) is unstable, similar reasoning applies with “\(\cong _y\)”.

To prove that “\(\cong ^x_y\)” is transitive, let \(L_1 \cong ^x_y L_2\) and \(L_2 \cong ^x_y L_3\). If \(L_2\) is stable, then \(L_1\) is as well by \(L_1 \cong ^x_y L_2\), and \(L_3\) by \(L_2 \cong ^x_y L_3\). So \(L_1 \cong _x L_2 \cong _x L_3\), yielding \(L_1 \cong _x L_3\) and \(L_1 \cong ^x_y L_3\). Similar reasoning applies if \(L_2\) is unstable. \(\square \)

Lemma 9

The relations in Definition 7 are equivalences.

Proof

The claim is trivial for “\(\cong _{\perp }\)”. It follows for “\(\cong _{\mathsf {\varSigma }}\)”, “\(\cong ^{\textsf {}}_{\textsf {en}}\)”, and “\(\cong _\mathsf {tr}\)” from Lemma 6. The claim for “\(\cong _\mathsf {ft}\)” has been proven in [14]. The claim for “\(\cong ^{\textsf {}}_{\textsf {\#}}\)” follows from Lemma 5 and Lemma 6, and the remaining claim from Lemma 8. \(\square \)

Fig. 1 The congruences (black solid) in Theorem 12 and three related non-congruences (grey)

The 17 equivalences that will be proven congruences are shown in Fig. 1, together with three equivalences that arise from Definition 7 but are not congruences. There is a path downwards from an equivalence to an equivalence in the figure if and only if the former implies the latter. This holds because of the following. In [14] it was shown that “\(\cong _\mathsf {ft}\)” implies “\(\cong _\mathsf {tr}\)”. The same follows easily from Lemma 2(2) and (3). Clearly \(L_1 \cong _\mathsf {tr}L_2 \Rightarrow L_1 \cong _{\mathsf {\varSigma }}L_2 \Rightarrow L_1 \cong ^{\textsf {}}_{\textsf {\#}} L_2 \Rightarrow L_1 \cong _{\perp }L_2\) and \(L_1 \cong ^{\textsf {}}_{\textsf {en}} L_2 \Rightarrow L_1 \cong _{\mathsf {\varSigma }}L_2\). Furthermore, if \(L_1\) and \(L_2\) are stable, then \(L_1 \cong _\mathsf {tr}L_2 \Rightarrow L_1 \cong ^{\textsf {}}_{\textsf {en}} L_2\), because then \(\mathsf {en}(L) = \mathsf {Tr}(L) \cap \varSigma \). Clearly “\(\cong ^x_x\)” implies “\(\cong _x\)”. If “\(\cong _y\)” implies “\(\cong _z\)”, then “\(\cong ^x_y\)” implies “\(\cong ^x_z\)” and “\(\cong ^y_x\)” implies “\(\cong ^z_x\)”.
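The role of stability in the step from “\(\cong _\mathsf {tr}\)” to “\(\cong ^{\textsf {}}_{\textsf {en}}\)” can be illustrated with a small Python sketch. It assumes that \(\mathsf {en}(L)\) consists of the visible actions that label transitions leaving the initial state, and that \(\mathsf {Tr}(L) \cap \varSigma \) consists of the visible actions that can be executed after zero or more invisible moves. Both readings, and the names `enabled` and `one_step_traces`, are assumptions of the sketch.

```python
TAU = "tau"  # assumed name for the invisible action

def enabled(transitions, initial):
    """Assumed en(L): visible actions leaving the initial state directly."""
    return {a for (s, a, _) in transitions if s == initial and a != TAU}

def one_step_traces(transitions, initial):
    """Assumed Tr(L) & Sigma: visible actions executable after tau-moves."""
    reach, frontier = {initial}, [initial]
    while frontier:  # collect the states reachable via tau-transitions only
        s = frontier.pop()
        for (p, a, q) in transitions:
            if p == s and a == TAU and q not in reach:
                reach.add(q)
                frontier.append(q)
    return {a for (s, a, _) in transitions if s in reach and a != TAU}

stable = {("s0", "a", "s1"), ("s1", TAU, "s2"), ("s2", "b", "s3")}
unstable = {("u0", TAU, "u1"), ("u1", "a", "u2")}

print(enabled(stable, "s0") == one_step_traces(stable, "s0"))
print(enabled(unstable, "u0") == one_step_traces(unstable, "u0"))
```

In the stable example both sets are {a}. In the unstable example the action a is reachable only via an invisible move, so the two sets differ, which is why the implication is claimed only for stable LTSs.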

Let  and  . We have \(L_1 \cong ^{\textsf {en}}_{\textsf {ft}} L_2\) and \(L_1 \cong ^{\textsf {en}}_{\textsf {tr}} L_2\) but  and  . If  , then \(L_2 \cong ^{\mathsf {tr}}_{\mathsf {ft}} L_3\) but  . That is, the grey equivalences in Fig. 1 are not congruences with respect to “\({\Vert }\)”. It is also easy (but lengthy) to show with examples that all equivalences in the figure are different.

We now investigate the congruence properties of the black equivalences in Fig. 1 with respect to the six operators defined in Sect. 2. The next lemma formulates a principle that is very useful in proving that certain equivalences are congruences.

Lemma 10

Let \(f_1(L), \ldots , f_n(L)\) be functions from LTSs to some sets \(D_1, \ldots , D_n\). Assume that “\(\cong \)” has been defined via \(L \cong L'\) if and only if for \(1 \le i \le n\), \(f_i(L) = f_i(L')\). Let \(\mathsf {op}\) be a unary LTS operator. If, for \(1 \le i \le n\), there are functions \(g_i: D_1 \times \cdots \times D_n \rightarrow D_i\) such that \(f_i(\mathsf {op}(L)) = g_i(f_1(L), \ldots , f_n(L))\), then “\(\cong \)” is a congruence with respect to \(\mathsf {op}\).

Proof

By Lemma 6, “\(\cong \)” is an equivalence. Let \(L \cong L'\). For \(1 \le i \le n\) we have \(f_i(\mathsf {op}(L)) = g_i(f_1(L), \ldots , f_n(L)) = g_i(f_1(L'), \ldots , f_n(L')) = f_i(\mathsf {op}(L'))\), because \(f_j(L) = f_j(L')\) for \(1 \le j \le n\). As a consequence, \(\mathsf {op}(L) \cong \mathsf {op}(L')\). \(\square \)

For instance, the alphabets of the results of the six operators defined in Sect. 2 were defined via functions on only the alphabets of the argument LTSs. Therefore, “\(\cong _{\mathsf {\varSigma }}\)” is a congruence by Lemma 10.

Lemma 11

Let \(\mathsf {op}\) be any unary LTS operator such that if L is unstable, then also \(\mathsf {op}(L)\) is unstable. Assume that “\(\cong ^x_y\)” and “\(\cong _z\)” are congruences with respect to \(\mathsf {op}\), and that “\(\cong _y\)” implies “\(\cong _z\)”. Then “\(\cong ^x_z\)” is a congruence with respect to \(\mathsf {op}\).

Proof

By Lemma 9, “\(\cong ^x_z\)” is an equivalence. To show that “\(\cong ^x_z\)” is a congruence with respect to \(\mathsf {op}\), assume that \(L_1 \cong ^x_z L_2\).

If \(L_1\) and \(L_2\) are both unstable, then by definition \(L_1 \cong _z L_2\). This implies \(\mathsf {op}(L_1) \cong _z \mathsf {op}(L_2)\). Because \(L_1\) and \(L_2\) are unstable, also \(\mathsf {op}(L_1)\) and \(\mathsf {op}(L_2)\) are unstable. These yield \(\mathsf {op}(L_1) \cong ^x_z \mathsf {op}(L_2)\).

Otherwise \(L_1\) and \(L_2\) are both stable. We have \(L_1 \cong _x L_2\), \(L_1 \cong ^x_y L_2\), and \(\mathsf {op}(L_1) \cong ^x_y \mathsf {op}(L_2)\). If one of \(\mathsf {op}(L_1)\) and \(\mathsf {op}(L_2)\) is stable, then also the other one is stable and \(\mathsf {op}(L_1) \cong _x \mathsf {op}(L_2)\). These yield \(\mathsf {op}(L_1) \cong ^x_z \mathsf {op}(L_2)\). Otherwise \(\mathsf {op}(L_1)\) and \(\mathsf {op}(L_2)\) are unstable and \(\mathsf {op}(L_1) \cong _y \mathsf {op}(L_2)\). These yield \(\mathsf {op}(L_1) \cong _z \mathsf {op}(L_2)\) and \(\mathsf {op}(L_1) \cong ^x_z \mathsf {op}(L_2)\). \(\square \)

Theorem 12

An equivalence labelling a row in Table 1 is a congruence with respect to the operator labelling a column in the table if and only if the intersection of the row and column contains \(\surd \).

Proof

The claim is trivial for “\(\cong _{\perp }\)”, and for “\(\cong _{\mathsf {\varSigma }}\)” it was shown above using Lemma 10. For “\(\cong _\mathsf {tr}\)”, a proof using Lemma 10 is common knowledge. For “\(\cong _\mathsf {ft}\)” with “\({\Vert }\)”, “\({\setminus }\)”, and “.” (and “\(\phi \)”), the claim has been shown in [14], and with “\(\varPhi \)” in [18]. The classic counter-example  versus  works for “\(\cong _\mathsf {ft}\)” with “\(+\)”.

Next we deal with “\(\cong ^{\textsf {}}_{\textsf {\#}}\)”. The essence of the proof is that “\(\varPhi \)” can make the difference between alphabets grow from finite to infinite, while the other five operators cannot (they cannot make the difference grow at all).

Let \(\varPhi := \{ (0,i) \mid i \in {\mathbb {N}} \}\). We have  but  . Because  is unstable, this counter-example also works for “\(\cong ^{\textsf {ft}}_{\textsf {\#}}\)”, “\(\cong ^{\textsf {tr}}_{\textsf {\#}}\)”, and “\(\cong ^{\textsf {en}}_{\textsf {\#}}\)”.

For each of the remaining operators, we provide an injection from \(\varSigma (\mathsf {op}(L_1)) {\setminus } \varSigma (\mathsf {op}(L_2))\) to \(\varSigma _1 {\setminus } \varSigma _2\). This shows that if \(\varSigma _1 {\setminus } \varSigma _2\) is finite, then \(\varSigma (\mathsf {op}(L_1)) {\setminus } \varSigma (\mathsf {op}(L_2))\) is finite as well. The case of \(\varSigma (\mathsf {op}(L_2)) {\setminus } \varSigma (\mathsf {op}(L_1))\) is similar. Because the union of two sets is finite if and only if both sets are finite, the result generalizes to \(\varSigma (\mathsf {op}(L_1)) \mathrel {\#}\varSigma (\mathsf {op}(L_2))\).

If \(a \in \varSigma (L_1 {\Vert }L) {\setminus } \varSigma (L_2 {\Vert }L)\), then \(a \in \varSigma _1 {\setminus } \varSigma _2\) (and \(a \notin \varSigma \)).

If \(a \in \varSigma (L_1 {\setminus } A) \setminus \varSigma (L_2 {\setminus } A)\), then \(a \in \varSigma _1 {\setminus } \varSigma _2\) (and \(a \notin A\)).

If \(a \in \varSigma (\phi (L_1)) {\setminus } \varSigma (\phi (L_2))\), then there is \(b \in \varSigma _1 {\setminus } \varSigma _2\) such that \(a = \phi (b)\). Furthermore, each such a has its own b, because \(\phi (b_1) \ne \phi (b_2)\) implies \(b_1 \ne b_2\).

If \(a \in \varSigma (L_1 + L) {\setminus } \varSigma (L_2 + L)\), then \(a \in \varSigma _1 {\setminus } \varSigma _2\) (and \(a \notin \varSigma \)).

If \(a \in \varSigma (b.L_1) {\setminus } \varSigma (b.L_2)\), then \(a \in \varSigma _1 {\setminus } \varSigma _2\) (and \(a \ne b\)).
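The five cases above, and the contrast with “\(\varPhi \)”, can be illustrated on finite sample alphabets. The Python sketch below assumes the usual alphabet rules for the operators (union for “\({\Vert }\)” and “\(+\)”, set difference for hiding, the image for renaming, and adding b for “b.”), and it assumes that the alphabet of \(L\varPhi \) consists of the actions related by \(\varPhi \) to some action of L. These rules are assumptions of the sketch, since the authoritative definitions are in Sect. 2. A finite truncation \(\{(0,i) \mid i < n\}\) of the relation \(\varPhi \) of the counter-example is used, because an infinite relation cannot be enumerated.

```python
def sym_diff(a, b):
    """A # B: the elements that are in exactly one of the two sets."""
    return (a - b) | (b - a)

# Assumed alphabet rules; see Sect. 2 for the actual definitions.
def par(s1, s2):
    return s1 | s2                      # alphabet of L1 || L2

def hide(s, hidden):
    return s - hidden                   # alphabet of L \ A

def rename(s, phi):
    return {phi(a) for a in s}          # alphabet of phi(L)

def choice(s1, s2):
    return s1 | s2                      # alphabet of L1 + L2

def prefix(b, s):
    return s | {b}                      # alphabet of b.L

def rel_rename(s, rel):
    return {b for (a, b) in rel if a in s}  # assumed alphabet of L Phi

s1, s2, ctx = {0, 1, 2}, {1, 2}, {2, 3}
base = len(sym_diff(s1, s2))            # the alphabets differ in one action

def parity(a):
    return a % 2

sizes = {
    "par": len(sym_diff(par(s1, ctx), par(s2, ctx))),
    "hide": len(sym_diff(hide(s1, {2}), hide(s2, {2}))),
    "rename": len(sym_diff(rename(s1, parity), rename(s2, parity))),
    "choice": len(sym_diff(choice(s1, ctx), choice(s2, ctx))),
    "prefix": len(sym_diff(prefix(9, s1), prefix(9, s2))),
}

n = 5
phi_n = {(0, i) for i in range(n)}      # finite truncation of Phi
sizes["rel_rename"] = len(sym_diff(rel_rename(s1, phi_n), rel_rename(s2, phi_n)))

print(base, sizes)
```

With these samples the difference never exceeds its original size under the first five operators, whereas the truncated relation makes it grow from one action to n actions. Letting n tend to infinity corresponds to the counter-example with \(\varPhi \).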

If \(L_1 \cong ^\mathsf {ft}_\mathsf {ft}L_2\), then \(L_1 \cong _\mathsf {ft}L_2\) and \(L_1 \cong ^{\perp }_{\perp } L_2\). If \(L_1\) and \(L_2\) are both stable, then also \(L_1\varPhi \) and \(L_2\varPhi \) are both stable. If \(L_1\) and \(L_2\) are both unstable, then also \(L_1\varPhi \) and \(L_2\varPhi \) are both unstable. By the congruence properties of “\(\cong _\mathsf {ft}\)”, we have \(L_1\varPhi \cong _\mathsf {ft}L_2\varPhi \). Therefore, \(L_1\varPhi \cong ^\mathsf {ft}_\mathsf {ft}L_2\varPhi \). The remaining claims for “\(\cong ^\mathsf {ft}_\mathsf {ft}\)” can be taken from [14] or proven similarly using Lemma 1. The claims for “\(\cong ^{\textsf {tr}}_{\textsf {tr}}\)” can be proven similarly and are widely known.

The claims for “\(\cong ^{\mathsf {en}}_{\mathsf {\varSigma }}\)” follow from Lemmas 1, 10, and the fact that “\(\cong ^{\textsf {}}_{\textsf {en}}\)” implies “\(\cong _{\mathsf {\varSigma }}\)”, which is a congruence.

The remaining claims follow by Lemma 11, except for “.”. If  and  , then \(L_1 \cong ^{\textsf {ft}}_{\textsf {tr}} L_2\) but \(a.L_1 \ncong ^{\mathsf {ft}}_{\mathsf {tr}} a.L_2\). If  , then  and  , but  and  . Furthermore,  when \(x \in \{\mathsf {ft}, \mathsf {tr}, \mathsf {en}\}\) and \(y \in \{{\#}, {\perp }\}\), but  when \(b \notin \{a, \tau , \varepsilon \}\), so  . \(\square \)

4 The weakest stability-preserving congruence

In this section we find the weakest stability-preserving congruence both in the presence and absence of the action prefix operator. This result is central in the study of stability-preserving congruences. It does not assume the congruence property with respect to renaming, so it makes weaker assumptions than the rest of this study.

Theorem 13

The weakest congruence with respect to parallel composition and hiding that never equates a stable and an unstable LTS is “\(\cong ^{\mathsf {en}}_{\perp }\)”. The weakest congruence with respect to parallel composition, hiding, and action prefix that never equates a stable and an unstable LTS is “\(\cong ^{\mathsf {en}}_{\mathsf {\varSigma }}\)”. Both are also congruences with respect to relational renaming and choice.

Proof

It is immediate from the definition that “\(\cong ^{\mathsf {en}}_{\perp }\)” and “\(\cong ^{\mathsf {en}}_{\mathsf {\varSigma }}\)” never equate a stable and an unstable LTS. Theorem 12 says that they indeed are congruences as promised.

It remains to be proven that they are the weakest possible. That is, if a congruence does not imply “\(\cong ^{\mathsf {en}}_{\perp }\)”, then it equates a stable and an unstable LTS, and similarly with “\(\cong ^{\mathsf {en}}_{\mathsf {\varSigma }}\)”. So, for \(x \in \{{\perp }, {\mathsf {\varSigma }}\}\), we assume that \(L_1 \cong L_2\) and \(L_1 \ncong ^\mathsf {en}_x L_2\), and prove the existence of \(L'_1\) and \(L'_2\) such that one of them is stable, the other is unstable, and \(L'_1 \cong L'_2\).

There are three ways in which \(L_1 \ncong ^\mathsf {en}_x L_2\) may occur.

First, one of \(L_1\) and \(L_2\) is stable while the other is unstable. Then they can be used as \(L'_1\) and \(L'_2\).

Second, \(L_1\) and \(L_2\) are stable and \(L_1 \ncong ^{\mathsf {}}_{\mathsf {en}} L_2\). The latter means that there is an action a such that \(a \in \mathsf {en}(L_1) {\setminus } \mathsf {en}(L_2)\) or \(a \in \varSigma _1 {\setminus } \varSigma _2\) (or the same with the roles of \(L_1\) and \(L_2\) swapped).

If \(a \in \mathsf {en}(L_1) {\setminus } \mathsf {en}(L_2)\), then \(L_2 {\setminus } \{a\}\) is stable and \(L_1 {\setminus } \{a\}\) is unstable, so they qualify as \(L'_1\) and \(L'_2\). The same argument applies when \(a \in \varSigma _1 {\setminus } \varSigma _2\) and \(a \in \mathsf {en}(L_1)\), because \(a \notin \varSigma _2\) implies \(a \notin \mathsf {en}(L_2)\). The case remains where \(a \in \varSigma _1 {\setminus } \varSigma _2\) and \(a \notin \mathsf {en}(L_1)\). Then  is stable and  is unstable, so they qualify as \(L'_1\) and \(L'_2\).
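The first sub-case, where \(a \in \mathsf {en}(L_1) {\setminus } \mathsf {en}(L_2)\), can be made concrete with a small Python sketch. It assumes the usual definition of hiding, where every transition labelled by a hidden action becomes a \(\tau \)-transition and the hidden actions are removed from the alphabet, and it assumes that an LTS is stable if and only if no \(\tau \)-transition leaves its initial state. The tuple representation and the names `hide` and `is_stable` are assumptions of the sketch.

```python
TAU = "tau"  # assumed name for the invisible action

def hide(lts, hidden):
    # Assumed definition of hiding: hidden labels become tau and
    # are removed from the alphabet.
    alphabet, transitions, initial = lts
    new_transitions = {(s, TAU if a in hidden else a, t)
                       for (s, a, t) in transitions}
    return (alphabet - hidden, new_transitions, initial)

def is_stable(lts):
    # Assumed definition: no tau-transition leaves the initial state.
    _, transitions, initial = lts
    return not any(s == initial and a == TAU for (s, a, _) in transitions)

sigma = {"a", "b"}
l1 = (sigma, {("p0", "a", "p1"), ("p0", "b", "p2")}, "p0")  # a is enabled initially
l2 = (sigma, {("q0", "b", "q1"), ("q1", "a", "q2")}, "q0")  # a is not enabled initially

print(is_stable(l1), is_stable(l2))
print(is_stable(hide(l1, {"a"})), is_stable(hide(l2, {"a"})))
```

Both example LTSs are stable and have the same alphabet, but after hiding a only the second one remains stable. A congruence with respect to hiding that equates the two therefore also equates a stable and an unstable LTS.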

Third, \(L_1\) and \(L_2\) are unstable and \(L_1 \ncong _x L_2\). If \(x = {\perp }\), this is impossible by the definition of “\(\cong _{\perp }\)”. So let \(x = {\mathsf {\varSigma }}\). There is an action a such that \(a \in \varSigma _1\) and \(a \notin \varSigma _2\) (or the same with the roles of \(L_1\) and \(L_2\) swapped). Let \(b \notin \{a, \tau , \varepsilon \}\). Then \(b.L_1\) and \(b.L_2\) are stable and \(a \in \varSigma (b.L_1) {\setminus } \varSigma (b.L_2)\). So the case has been reduced to an earlier case. \(\square \)

5 Proof that Fig. 1 contains all congruences in the region

In this section we assume that the alphabets of LTSs are finite or countably infinite, and prove that Fig. 1 contains all equivalences that are implied by “\(\cong ^\mathsf {ft}_\mathsf {ft}\)” and are congruences with respect to parallel composition, hiding, and functional renaming. The assumption of countability is only needed for equivalences that do not imply “\(\cong ^{\textsf {}}_{\textsf {\#}}\)” (and thus not “\(\cong _{\mathsf {\varSigma }}\)”). The author believes that similarly to “\(\cong ^{\textsf {ft}}_{\textsf {\#}}\)”, “\(\cong ^{\textsf {tr}}_{\textsf {\#}}\)”, “\(\cong ^{\textsf {en}}_{\textsf {\#}}\)”, and “\(\cong ^{\textsf {}}_{\textsf {\#}}\)”, there are four congruences for each infinite cardinal number in place of “\(\#\)”, and, accepting the axiom of choice, that is all. However, the author felt that studying them would have meant going too far from concurrency theory.

For each equivalence “\(\approx \)” in Fig. 1, we will prove that any congruence that implies “\(\approx \)” but implies neither the nearest equivalence above nor the nearest equivalence above left “\(\approx \)” in the figure, is “\(\approx \)”. To be able to do so, we first develop ten lemmas. Figure 2 shows five LTSs that are referred to in them. Many of the lemmas use the following assumption:

Assumption A. “\(\cong ^\mathsf {ft}_\mathsf {ft}\)” implies “\(\cong \)” and “\(\cong \)” is a congruence with respect to parallel composition, hiding, and functional renaming.

We first prove a lemma that starts with an arbitrary difference between the sets of traces of two equivalent LTSs that have the same alphabet, and, so to speak, amplifies it to the maximal such difference. This result will later be used to prove that if the congruence does not preserve full information on traces, then, both in the case where stability does not matter and in the case where it matters and the LTSs are unstable, it does not preserve any information on traces at all. When stability matters and the LTSs are stable, a similar claim does not hold, because “ ” is a congruence. For that case, the lemma presents another result that can be used to show that information on traces beyond the first visible action does not matter.

Fig. 3 The two versions of the LTS \(L^\sigma _A\) in the proof of Lemma 14

Lemma 14

Assume A. If there are \(L_1\), \(L_2\), and \(\sigma \) such that \(L_1 \cong L_2\), \(\varSigma _1 = \varSigma _2\), \(\sigma \in \mathsf {Tr}(L_1)\), and \(\sigma \notin \mathsf {Tr}(L_2)\), then for every alphabet A we have  . If \(L_1\) and \(L_2\) are stable, then \(M_3^A \cong M_4^A\).

Proof

Let \(\varSigma := \varSigma _1 = \varSigma _2\), and let \(L^\sigma _A\) be the following LTS (shown in Fig. 3 left):

Let

We have  \(= A\). Furthermore, all these four LTSs are unstable.

Because \(\sigma \in \mathsf {Tr}(L_1)\), \(f(L_1)\) can reach a state of the form \((s, s_\sigma )\). This happens without executing visible actions, because \(\varSigma ^{[2]}\) is hidden in \(f(L_i)\). Then \(f(L_1)\) can execute any member of A, getting back to \((s, s_\sigma )\). As a consequence, \(\mathsf {Tr}(f(L_1)) = A^*\). Because \((s_\sigma , \tau , s_\tau ) \in \varDelta ^\sigma _A\), \(f(L_1)\) can continue to \((s, s_\tau )\). Because \(s_\tau \) has no outgoing transitions, \(\mathsf {Tf}(f(L_1)) = A^* \times 2^{A^+}\) by Lemma 3. Also \(\mathsf {Tf}(M_1^A) = A^* \times 2^{A^+}\) by Lemma 3. So \(\mathsf {Tf}(f(L_1)) = \mathsf {Tf}(M_1^A)\) and \(f(L_1) \cong ^\mathsf {ft}_\mathsf {ft}M_1^A\).

Because \(\sigma \notin \mathsf {Tr}(L_2)\), \(f(L_2)\) cannot reach any state of the form \((s, s_\sigma )\), and thus cannot ever execute any member of A. We have \(\mathsf {Tr}(f(L_2)) = \{\varepsilon \}\) and  . So  .

By the congruence property, \(f(L_1) \cong f(L_2)\). We have proven  . It implies  , because by Assumption A, “\(\cong ^\mathsf {ft}_\mathsf {ft}\)” implies “\(\cong \)” and “\(\cong \)” is an equivalence.

From now on assume that \(L_1\) and \(L_2\) are stable. Let g be defined similarly to f, except that the transition \({\hat{s}}^\sigma _A \mathrel {{-}\tau {\rightarrow }} s_\varepsilon \) is replaced by \({\hat{s}}^\sigma _A \mathrel {{-}a^{[1]}{\rightarrow }} s_\varepsilon \) for every \(a \in A\) in \(L^\sigma _A\), resulting in the version shown in Fig. 3 right. We have \(g(L_1) \cong g(L_2)\) and \(\varSigma (g(L_1)) = \varSigma (g(L_2)) = \varSigma (M_3^A) = \varSigma (M_4^A) = A\). Furthermore, all these four LTSs are stable.

For any stable L, g(L) starts by executing an arbitrary member of A and then continues like f(L). As a consequence, \(g(L_1) \cong ^\mathsf {ft}_\mathsf {ft}M_3^A\) and \(g(L_2) \cong ^\mathsf {ft}_\mathsf {ft}M_4^A\), yielding \(M_3^A \cong M_4^A\). \(\square \)

The next lemma is similar in spirit to the previous one, but this time the starting point is an arbitrary violation of fair testing equivalence that is not alphabet-related, and the results concern information on the K parts of tree failures.

Fig. 4 The LTS \(L^{(\sigma ,K)}_A\) in the proof of Lemma 15

Lemma 15

Assume A. If there are \(L_1\), \(L_2\), \(\sigma \), and K such that \(L_1 \cong L_2\), \(\varSigma _1 = \varSigma _2\), \((\sigma , K) \in \mathsf {Tf}(L_1)\), \((\sigma , K) \notin \mathsf {Tf}(L_2)\), and \((\sigma \pi , \pi ^{-1}K) \notin \mathsf {Tf}(L_2)\) for every \(\pi \sqsubseteq K\), then for every alphabet A we have \(M_1^A \cong M_2^A\). If \(L_1\) and \(L_2\) are stable, then \(M_3^A \cong M_5^A\).

Proof

Let \(\varSigma := \varSigma _1 = \varSigma _2\), and let \(L^{(\sigma , K)}_A\) be the following LTS (shown in Fig. 4):

Similarly to the previous proof,

We have \(\varSigma (f(L_1)) = \varSigma (f(L_2)) = \varSigma (M_1^A) = \varSigma (M_2^A) = A\). Furthermore, all these four LTSs are unstable.

Let \(i \in \{1,2\}\). Trivially \(\mathsf {Tr}(f(L_i)) \subseteq A^*\). Without \(L_i\) moving, \(f(L_i)\) can move invisibly from its initial state \(({\hat{s}}_i, {\hat{s}}^{(\sigma , K)}_A)\) to \(({\hat{s}}_i, s_\varepsilon ^\sigma )\). Then it can execute any member of \(A^*\), getting back to \(({\hat{s}}_i, s_\varepsilon ^\sigma )\) after each transition. Therefore, \(\mathsf {Tr}(f(L_1)) = \mathsf {Tr}(f(L_2)) = A^*\).

Because \((\sigma , K) \in \mathsf {Tf}(L_1)\), \(L_1\) can execute \(\sigma \) and then be in a state \(s'\) where it cannot execute any element of K. So \(f(L_1)\) can continue invisibly from \(({\hat{s}}_1, s_\varepsilon ^\sigma )\) to the state \((s', s_\varepsilon ^K)\), but cannot continue from there to any state of the form \((s, s_\pi ^K)\), where \(\pi \in K\). That is, \(f(L_1)\) can execute any element of \(A^*\) and then invisibly move to a state from which it cannot continue to a state where it can execute an element of A. As a consequence, \(\mathsf {Tf}(f(L_1)) = A^* \times 2^{A^+} = \mathsf {Tf}(M_1^A)\). So \(f(L_1) \cong ^\mathsf {ft}_\mathsf {ft}M_1^A\).

If \(f(L_2)\) is in a state of the form \((s, s_\pi ^\sigma )\), then it can execute any member of A immediately. If \(f(L_2)\) is in a state of the form \((s, {\hat{s}}^{(\sigma , K)}_A)\), then it can execute \(\tau \) and enter a state of the previous form. If \(f(L_2)\) is in a state of the form \((s, s_\pi ^K)\) where \(\varepsilon \ne \pi \sqsubseteq K\) or \(\varepsilon = \pi \sqsubseteq K\), then by \((\sigma \pi , \pi ^{-1}K) \notin \mathsf {Tf}(L_2)\) it can execute invisibly at least one member of \(\pi ^{-1}K\). That takes it to a state of the form \((s', s_{\kappa }^K)\) where \(\kappa \in K\). There it can execute any member of A. The case remains where \(f(L_2)\) is in a state of the form \((s, s_\varepsilon ^K)\), where \(\varepsilon \not \sqsubseteq K\). Then \(L_2\) has executed \(\sigma \), implying \((\sigma , \emptyset ) \in \mathsf {Tf}(L_2)\). On the other hand, \(K = \emptyset \) because \(\varepsilon \not \sqsubseteq K\). This contradicts \((\sigma , K) \notin \mathsf {Tf}(L_2)\), showing that this case is impossible.

Therefore, \(f(L_2)\) cannot reach a state from which it cannot continue to a state where it can execute any member of A. We have \(\mathsf {Tf}(f(L_2)) = A^* \times \{\emptyset \}\) and \(f(L_2) \cong ^\mathsf {ft}_\mathsf {ft}M_2^A\).

By the congruence property, \(f(L_1) \cong f(L_2)\). We have proven \(M_1^A \cong ^\mathsf {ft}_\mathsf {ft}f(L_1) \cong f(L_2) \cong ^\mathsf {ft}_\mathsf {ft}M_2^A\). It implies \(M_1^A \cong M_2^A\), because “\(\cong ^\mathsf {ft}_\mathsf {ft}\)” implies “\(\cong \)”.

From now on assume that \(L_1\) and \(L_2\) are stable. Let g be defined similarly to f, except that the transition \({\hat{s}}^{(\sigma , K)}_A \mathrel {{-}\tau {\rightarrow }} s_\varepsilon ^\sigma \) is replaced by \({\hat{s}}^{(\sigma , K)}_A \mathrel {{-}a^{[1]}{\rightarrow }} s_\varepsilon ^\sigma \) for every \(a \in A\). We have \(g(L_1) \cong g(L_2)\) and \(\varSigma (g(L_1)) = \varSigma (g(L_2)) = \varSigma (M_3^A) = \varSigma (M_5^A) = A\). Furthermore, all these four LTSs are stable.

For any stable L, g(L) starts by executing an arbitrary member of A and then continues like f(L). As a consequence, \(g(L_1) \cong ^\mathsf {ft}_\mathsf {ft}M_3^A\) and \(g(L_2) \cong ^\mathsf {ft}_\mathsf {ft}M_5^A\), yielding \(M_3^A \cong M_5^A\). \(\square \)

In the congruences of the form “\(\cong ^x_y\)” in Fig. 1, x can only be \(\mathsf {ft}\), \(\mathsf {tr}\), or \(\mathsf {en}\). When proving that they suffice, the next two lemmas and Theorem 13 will be used.

Lemma 16

Assume A. If there are stable \(L'_1\) and \(L'_2\) such that \(L'_1 \cong L'_2\), \(\varSigma '_1 = \varSigma '_2\), and \(L'_1 \ncong _\mathsf {ft}L'_2\), then for any stable \(L_1\) and \(L_2\) such that \(L_1 \cong _\mathsf {tr}L_2\) we have \(L_1 \cong L_2\).

Proof

For any stable L, let \(f(L) := L {\Vert }M_3^{\varSigma }\). Clearly \(L \equiv L {\Vert }M_5^{\varSigma }\). By Lemma 15 and the congruence property, \(L {\Vert }M_5^{\varSigma } \cong L {\Vert }M_3^{\varSigma }\). So \(L \cong f(L)\). Clearly f(L) is stable, \(\varSigma (f(L)) = \varSigma \), and \(\mathsf {Tr}(f(L)) = \mathsf {Tr}(L)\).

By Lemma 4, \((\varepsilon , K) \in \mathsf {Tf}(f(L))\) if and only if \(K \cap \mathsf {Tr}(f(L)) = \emptyset \). The LTS \(M_3^{\varSigma }\) may deadlock after any nonempty trace. Therefore, by Lemma 3, if \(\sigma \ne \varepsilon \), then \((\sigma , K) \in \mathsf {Tf}(f(L))\) if and only if \(\sigma \in \mathsf {Tr}(f(L))\) and \(K \subseteq \varSigma (f(L))^+\). As a consequence, \(\mathsf {Tf}(f(L))\) is determined by \(\varSigma (f(L))\) and \(\mathsf {Tr}(f(L))\), that is, \(\varSigma \) and \(\mathsf {Tr}(L)\).

Let \(L_1\) and \(L_2\) be stable and \(L_1 \cong _\mathsf {tr}L_2\). We have \(\varSigma _1 = \varSigma _2\) and \(\mathsf {Tr}(L_1) = \mathsf {Tr}(L_2)\). These imply \(\mathsf {Tf}(f(L_1)) = \mathsf {Tf}(f(L_2))\). Furthermore, \(f(L_1)\) and \(f(L_2)\) are stable. As a consequence, \(f(L_1) \cong ^\mathsf {ft}_\mathsf {ft}f(L_2)\).

Hence \(L_1 \cong f(L_1) \cong ^\mathsf {ft}_\mathsf {ft}f(L_2) \cong L_2\), implying \(L_1 \cong L_2\). \(\square \)

Lemma 17

Assume A. If there are stable \(L'_1\) and \(L'_2\) such that \(L'_1 \cong L'_2\), \(\varSigma '_1 = \varSigma '_2\), and \(L'_1 \ncong _\mathsf {tr}L'_2\), then for any stable \(L_1\) and \(L_2\) such that \(L_1 \cong ^{\textsf {}}_{\textsf {en}} L_2\) we have \(L_1 \cong L_2\).

Proof

For any stable L, let \(f(L) := L {\Vert }M_4^{\varSigma }\). Because “\(\cong _\mathsf {ft}\)” implies “\(\cong _\mathsf {tr}\)”, the assumptions of Lemma 15 hold. By Lemmas 14 and 15, \(L \equiv L {\Vert }M_5^{\varSigma } \cong L {\Vert }M_3^{\varSigma } \cong L {\Vert }M_4^{\varSigma }\). So \(L \cong f(L)\). Clearly f(L) is stable and \(\varSigma (f(L)) = \varSigma \). Because \(\mathsf {Tr}(M_4^{\varSigma }) = \varSigma \cup \{\varepsilon \}\), we have \(\mathsf {Tr}(f(L)) = \mathsf {en}(L) \cup \{\varepsilon \}\). It implies \(\mathsf {en}(f(L)) = \mathsf {en}(L)\).

By Lemma 4, \((\varepsilon , K) \in \mathsf {Tf}(f(L))\) if and only if \(K \cap \mathsf {Tr}(f(L)) = \emptyset \). By Lemma 3, if \(\sigma \ne \varepsilon \), then \((\sigma , K) \in \mathsf {Tf}(f(L))\) if and only if \(\sigma \in \mathsf {Tr}(f(L))\) and \(K \subseteq \varSigma (f(L))^+\). As a consequence, \(\mathsf {Tf}(f(L))\) is determined by \(\varSigma (f(L))\) and \(\mathsf {en}(f(L))\), that is, \(\varSigma \) and \(\mathsf {en}(L)\).

Let \(L_1\) and \(L_2\) be stable and \(L_1 \cong ^{\textsf {}}_{\textsf {en}} L_2\). We have \(\varSigma _1 = \varSigma _2\) and \(\mathsf {en}(L_1) = \mathsf {en}(L_2)\). These imply \(\mathsf {Tf}(f(L_1)) = \mathsf {Tf}(f(L_2))\). Furthermore, \(f(L_1)\) and \(f(L_2)\) are stable. As a consequence, \(f(L_1) \cong ^\mathsf {ft}_\mathsf {ft}f(L_2)\).

Hence \(L_1 \cong f(L_1) \cong ^\mathsf {ft}_\mathsf {ft}f(L_2) \cong L_2\), implying \(L_1 \cong L_2\). \(\square \)

In the sequel, we will have to deal with cases where stability does not matter, and with cases where it matters and the LTSs in question are unstable. To exploit results on the latter when dealing with the former, we define a simple operator that, given an LTS, yields an unstable “\(\cong _\mathsf {ft}\)”-equivalent LTS. We let

The following lemma states some properties of \(\mathsf {us}(L)\).

Lemma 18

Assume A. For every L we have the following.

1. \(\mathsf {us}(L)\) is unstable.
2. \(\mathsf {us}(L) \cong _\mathsf {ft}L\).
3. If L is unstable, then \(\mathsf {us}(L) \cong ^\mathsf {ft}_\mathsf {ft}L\) and \(\mathsf {us}(L) \cong L\).
4. If there are \(L_1\) and \(L_2\) such that \(L_1 \cong L_2\), \(\varSigma _1 = \varSigma _2\), and \(L_1 \ncong _\mathsf {ft}L_2\), then \(\mathsf {us}(L) \cong L {\Vert }M_1^{\varSigma }\).
5. If there are \(L_1\) and \(L_2\) such that \(L_1 \cong L_2\), \(\varSigma _1 = \varSigma _2\), and \(L_1 \ncong _\mathsf {tr}L_2\), then  .

Proof

The first three claims are obvious.

For any L, \(\mathsf {us}(L) \cong ^\mathsf {ft}_\mathsf {ft}L {\Vert }M_2^{\varSigma }\), because they both have \(\varSigma \) as the alphabet, they are both unstable, and \(M_2^{\varSigma }\) never blocks actions of L. With the assumptions of the fourth claim, Lemma 15 and the congruence property yield \(L {\Vert }M_2^{\varSigma } \cong L {\Vert }M_1^{\varSigma }\). As a consequence, \(\mathsf {us}(L) \cong L {\Vert }M_1^{\varSigma }\).

With the assumptions of the last claim, for any L, Lemma 14 yields […]. Clearly […], because […] blocks all visible actions of L. Because “\(\cong _\mathsf {ft}\)” implies “\(\cong _\mathsf {tr}\)”, claim 4 yields \(\mathsf {us}(L) \cong L {\Vert }M_1^{\varSigma }\). So […]. \(\square \)

The next lemma tells that if the congruence equates a stable and an unstable LTS, then stability does not matter at all.

Lemma 19

Assume that “\(\cong ^\mathsf {ft}_\mathsf {ft}\)” implies “\(\cong \)” and “\(\cong \)” is a congruence with respect to parallel composition and hiding. If there are a stable LTS \(L_\mathsf {s}\) and an unstable LTS \(L_\mathsf {u}\) such that \(L_\mathsf {s} \cong L_\mathsf {u}\), then

1. […], and

2. for any L, \(L \cong \mathsf {us}(L)\).

Proof

Let \(\varSigma := \varSigma (L_\mathsf {s}) \cup \varSigma (L_\mathsf {u})\) and […]. Clearly […]. The alphabet of \(f(L_\mathsf {u})\) is […], and […] by Lemma 2(1). Furthermore, \(f(L_\mathsf {u})\) is obviously unstable. So […]. These yield […]. Therefore, […].

Let L be any LTS. Clearly […], so \(L \cong \mathsf {us}(L)\). \(\square \)

The next lemma says that if the congruence does not preserve the alphabet, then, in the case of unstable LTSs, it throws away all information on traces and tree failures.

Lemma 20

Assume A. If “\(\cong \)” does not imply “\(\cong _{\mathsf {\varSigma }}\)”, then, for any L, […].

Proof

Because “\(\cong \)” does not imply “\(\cong _{\mathsf {\varSigma }}\)”, there are \(L_1\), \(L_2\), and a such that \(L_1 \cong L_2\), \(a \in \varSigma _1\), and \(a \notin \varSigma _2\). Let \(\varSigma := (\varSigma _1 \cup \varSigma _2) {\setminus } \{a\}\).

If \(L_1 \mathrel {{=}a{\Rightarrow }}\), then choose any \(b \notin \{a, \tau , \varepsilon \}\) and let […], where \(\phi (b) := a\) and \(\phi (x) := x\) if \(x \ne b\). We have \(f(L_1) \mathrel {{=}a{\Rightarrow }}\) but \(\lnot (f(L_2) \mathrel {{=}a{\Rightarrow }})\). Although \(a \notin \varSigma _2\), we have \(\varSigma (f(L_2)) = \{a\}\) thanks to […] and \(\phi \).

If \(\lnot (L_1 \mathrel {{=}a{\Rightarrow }})\), then let […]. We have \(\lnot (f(L_1) \mathrel {{=}a{\Rightarrow }})\) but \(f(L_2) \mathrel {{=}a{\Rightarrow }}\).

In both cases, \(f(L_1) \cong f(L_2)\), \(\varSigma (f(L_1)) = \varSigma (f(L_2)) = \{a\}\), and \(\mathsf {Tr}(f(L_1)) \ne \mathsf {Tr}(f(L_2))\). By Lemma 18(5), for any L, […]. \(\square \)

If \(L_1 \cong L_2\) where “\(\cong \)” is a congruence with respect to parallel composition, then […], yielding \(\mathsf {us}(L_1) \cong \mathsf {us}(L_2)\). Next we prove \(\mathsf {us}(L_1) \cong \mathsf {us}(L_2)\) under five different assumptions, without assuming \(L_1 \cong L_2\).

Lemma 21

Assume A. In each of the following situations we have \(\mathsf {us}(L_1) \cong \mathsf {us}(L_2)\).

1. If \(L_1 \cong _\mathsf {ft}L_2\).

2. If \(L_1 \cong _\mathsf {tr}L_2\), and “\(\cong \)” implies “\(\cong _{\mathsf {\varSigma }}\)” but not “\(\cong _\mathsf {ft}\)”.

3. If \(L_1 \cong _{\mathsf {\varSigma }}L_2\), and “\(\cong \)” implies “\(\cong _{\mathsf {\varSigma }}\)” but not “\(\cong _\mathsf {tr}\)”.

4. If \(L_1 \cong ^{\textsf {}}_{\textsf {\#}} L_2\), and “\(\cong \)” does not imply “\(\cong _{\mathsf {\varSigma }}\)”.

5. If the alphabets of \(L_1\) and \(L_2\) are countable, and “\(\cong \)” does not imply “\(\cong ^{\textsf {}}_{\textsf {\#}}\)”.

Proof

1. By Lemma 18(2), \(\mathsf {us}(L_1) \cong _\mathsf {ft}L_1 \cong _\mathsf {ft}L_2 \cong _\mathsf {ft}\mathsf {us}(L_2)\). So \(\mathsf {us}(L_1) \cong _\mathsf {ft}\mathsf {us}(L_2)\). This implies \(\mathsf {us}(L_1) \cong ^\mathsf {ft}_\mathsf {ft}\mathsf {us}(L_2)\), because \(\mathsf {us}(L_1)\) and \(\mathsf {us}(L_2)\) are unstable by Lemma 18(1). By assumption A, this implies \(\mathsf {us}(L_1) \cong \mathsf {us}(L_2)\).

2. Let \(L \in \{L_1, L_2\}\) and \(f(L) := L {\Vert }M_1^{\varSigma }\). It is unstable because of \(M_1^{\varSigma }\), so \(f(L_1) \cong ^{\perp }_{\perp } f(L_2)\). We have \(\varSigma (f(L)) = \varSigma \). Because \(M_1^{\varSigma }\) may deadlock after any trace, Lemma 3 yields \(\mathsf {Tf}(f(L)) = \{ (\sigma , K) \mid \sigma \in \mathsf {Tr}(L) \wedge K \subseteq \varSigma ^+ \}\). Because \(L_1 \cong _\mathsf {tr}L_2\), we have \(\varSigma _1 = \varSigma _2\) and \(\mathsf {Tr}(L_1) = \mathsf {Tr}(L_2)\). These yield \(f(L_1) \cong ^\mathsf {ft}_\mathsf {ft}f(L_2)\), implying \(f(L_1) \cong f(L_2)\). The part of the condition after “and” justifies the use of Lemma 18(4), implying \(\mathsf {us}(L_1) \cong f(L_1) \cong f(L_2) \cong \mathsf {us}(L_2)\).

3. The condition \(L_1 \cong _{\mathsf {\varSigma }}L_2\) means that \(\varSigma _1 = \varSigma _2\). By Lemma 18(5), […].

4. The condition \(L_1 \cong ^{\textsf {}}_{\textsf {\#}} L_2\) means that \(\varSigma _1 \mathrel {\#}\varSigma _2\) is finite. Because “\(\cong \)” does not imply “\(\cong _{\mathsf {\varSigma }}\)”, there are \(L'_1\), \(L'_2\), and a such that \(L'_1 \cong L'_2\), \(a \in \varSigma '_1\), and \(a \notin \varSigma '_2\). Let \(\varSigma := (\varSigma '_1 \cup \varSigma '_2) {\setminus } \{a\}\). By Lemma 20, […], where \(\mathsf {us}(L'_2 {\setminus } \varSigma ) \cong \mathsf {us}(L'_1 {\setminus } \varSigma )\) follows from \(L'_1 \cong L'_2\). Therefore, […].

Choose any b such that \(\tau \ne b \ne \varepsilon \). Let \(\phi (a) := b\) and \(\phi (x) := x\) when \(x \ne a\). We have […]. So […]. Let \(A = \{a_1, \ldots , a_n\}\) be any finite alphabet. For \(0 \le i < n\), we have […]. By induction, […].

If \(\varSigma _1 \mathrel {\#}\varSigma _2\) is finite, then also \(\varSigma _1 {\setminus } \varSigma _2\) and \(\varSigma _2 {\setminus } \varSigma _1\) are finite. By Lemma 20, […].

5. By the assumption, there are \(L'_1\) and \(L'_2\) such that \(L'_1 \cong L'_2\) and \(\varSigma '_1 \setminus \varSigma '_2\) is infinite. By Lemma 20, […].

Let A be any countable alphabet. If \(A = \emptyset \), then […].

Otherwise there is \(a \in A\). Because every infinite set contains a countably infinite subset, there is a bijection f from A to a subset of \(\varSigma '_1 {\setminus } \varSigma '_2\). A surjection \(\phi \) from \(\varSigma '_1 {\setminus } \varSigma '_2\) to A is obtained by letting \(\phi (x) := b\) if \(x = f(b)\) and \(\phi (x) := a\) if there is no b such that \(x = f(b)\). We have […].

So both \(A = \emptyset \) and \(A \ne \emptyset \) yield […]. We conclude […]. \(\square \)
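The construction of the surjection \(\phi \) in part 5 of the proof can be illustrated on finite sets. The Python sketch below is a finite stand-in, not the proof's setting (where \(\varSigma '_1 {\setminus } \varSigma '_2\) is infinite and A is countable); the function name `surjection_from_injection` and the dictionary encoding of f are assumptions made for illustration only.

```python
def surjection_from_injection(A, S, f, default):
    """Build phi: S -> A with phi(f(b)) = b and phi(x) = default otherwise.

    A       -- nonempty target set (plays the role of the alphabet A)
    S       -- source set (plays the role of the set difference of alphabets)
    f       -- dict encoding an injection from A into S
    default -- the chosen element a of A used where f has no preimage
    """
    if default not in A:
        raise ValueError("default must be an element of A")
    inverse = {}
    for b in A:
        x = f[b]
        if x not in S or x in inverse:
            raise ValueError("f must be an injection from A into S")
        inverse[x] = b
    # Elements hit by f are sent back to their preimage; all others go to default.
    return {x: inverse.get(x, default) for x in S}
```

For example, with `A = {"p", "q"}`, `S = {1, 2, 3, 4}`, `f = {"p": 1, "q": 3}` and `default = "p"`, the resulting map sends 1, 2 and 4 to `"p"` and 3 to `"q"`, so every element of A is hit.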

We now have sufficient machinery to prove the main result. We deal first with the case where stability matters.

Lemma 22

Let x be any of \(\mathsf {ft}\), \(\mathsf {tr}\), and \(\mathsf {en}\), and let \(\mathsf {prev}(x)\) be the previous one (if \(x \ne \mathsf {ft}\)). Let y be any of \(\mathsf {ft}\), \(\mathsf {tr}\), \(\mathsf {\varSigma }\), \({\#}\), and \(\perp \), and let \(\mathsf {prev}(y)\) be the previous one (if \(y \ne \mathsf {ft}\)). Assume A and that “\(\cong \)” implies “\(\cong ^x_y\)”. If \(x \ne \mathsf {ft}\), assume also that “\(\cong \)” does not imply “\(\cong ^{\mathsf {prev}(x)}_y\)”. If \(y \ne \mathsf {ft}\), assume also that “\(\cong \)” does not imply “\(\cong ^x_{\mathsf {prev}(y)}\)”. If \(y = {\perp }\), assume also that the alphabets of the LTSs are countable. Then “\(\cong \)” is “\(\cong ^x_y\)”.

Proof

That “\(\cong \)” is “\(\cong ^x_y\)” means that “\(\cong \)” implies “\(\cong ^x_y\)” and “\(\cong ^x_y\)” implies “\(\cong \)”. The former was given in the assumption part of the lemma. Our task is to prove the latter for each x and y. So we assume that \(L_1\) and \(L_2\) are arbitrary LTSs such that \(L_1 \cong ^x_y L_2\), and we have to prove that \(L_1 \cong L_2\).

The definition of “\(\cong ^x_y\)” implies that \(L_1\) and \(L_2\) are both stable or both unstable.

If \(L_1\) and \(L_2\) are stable, then \(L_1 \cong _x L_2\). There are three cases.

If \(x = \mathsf {ft}\), then \(L_1\) and \(L_2\) are stable and \(L_1 \cong _\mathsf {ft}L_2\). By definition, \(L_1 \cong ^\mathsf {ft}_\mathsf {ft}L_2\). It implies \(L_1 \cong L_2\) by assumption A.

If \(x = \mathsf {tr}\), then \(L_1 \cong _\mathsf {tr}L_2\) and there are \(L'_1\) and \(L'_2\) such that \(L'_1 \cong L'_2\) but \(L'_1 \ncong ^\mathsf {ft}_y L'_2\). Because \(L'_1 \cong L'_2\) implies \(L'_1 \cong ^\mathsf {tr}_y L'_2\), this means that \(L'_1\) and \(L'_2\) are both stable, \(L'_1 \ncong _\mathsf {ft}L'_2\), and \(\varSigma '_1 = \varSigma '_2\). Lemma 16 yields \(L_1 \cong L_2\).

If \(x = \mathsf {en}\), then \(L_1 \cong ^{\textsf {}}_{\textsf {en}} L_2\) and there are \(L'_1\) and \(L'_2\) such that \(L'_1 \cong L'_2\) but \(L'_1 \ncong ^\mathsf {tr}_y L'_2\). Because \(L'_1 \cong L'_2\) implies \(L'_1 \cong ^\mathsf {en}_y L'_2\), this means that \(L'_1\) and \(L'_2\) are both stable, \(L'_1 \ncong _\mathsf {tr}L'_2\), and \(\varSigma '_1 = \varSigma '_2\). Lemma 17 yields \(L_1 \cong L_2\).

If \(L_1\) and \(L_2\) are unstable, then Lemma 18(3) yields \(L_1 \cong \mathsf {us}(L_1)\) and \(\mathsf {us}(L_2) \cong L_2\). We will soon show that the assumptions of Lemma 21 hold. By it, \(\mathsf {us}(L_1) \cong \mathsf {us}(L_2)\), yielding \(L_1 \cong L_2\).

Because \(L_1\) and \(L_2\) are unstable, \(L_1 \cong ^x_y L_2\) implies \(L_1 \cong _y L_2\). This gives the first condition of Lemma 21(1) to (4). The first condition of (5) is in the assumptions of the current lemma. When \(y = \mathsf {tr}\) or \(y = \mathsf {\varSigma }\), then “\(\cong \)” implies “\(\cong ^x_y\)” implies “\(\cong _{\mathsf {\varSigma }}\)”, because both “\(\cong _x\)” and “\(\cong _y\)” imply “\(\cong _{\mathsf {\varSigma }}\)”. This is needed by (2) and (3). When \(y \ne \mathsf {ft}\), then there are \(L'_1\) and \(L'_2\) such that \(L'_1 \cong L'_2\) yielding \(L'_1 \cong ^x_y L'_2\), but \(L'_1 \ncong ^x_{\mathsf {prev}(y)} L'_2\). They are unstable and satisfy \(L'_1 \ncong _{\mathsf {prev}(y)} L'_2\). So “\(\cong \)” does not imply “\(\cong _{\mathsf {prev}(y)}\)”. This gives the last condition of (2) to (5) and completes the checking of the assumptions of Lemma 21. \(\square \)

Before continuing, it is worth discussing the fact that Lemma 22 refers to three equivalences that are grey in Fig. 1. First, in some cases “\(\cong ^x_{\mathsf {prev}(y)}\)” or “\(\cong ^{\mathsf {prev}(x)}_y\)” is grey. This is not a problem, because the lemma does not assume that it is a congruence. It only assumes that there are \(L'_1\) and \(L'_2\) such that \(L'_1 \cong L'_2\) but \(L'_1 \ncong ^x_{\mathsf {prev}(y)} L'_2\) and \(L'_1 \ncong ^{\mathsf {prev}(x)}_y L'_2\).

Second, the lemma may claim that “\(\cong \)” is “\(\cong ^x_y\)” also when “\(\cong ^x_y\)” is grey. This is not a problem, because the lemma does not promise but assumes that “\(\cong \)” is a congruence. The lemma says that if there is a congruence with the assumed properties, then it is “\(\cong ^x_y\)”. If “\(\cong ^x_y\)” is not a congruence, then, with the chosen x and y, no congruences satisfy the assumptions of the lemma.
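The case structure of Lemma 22 can be enumerated mechanically. The following Python sketch is only a bookkeeping aid under stated assumptions: the string labels, the names `X_CHAIN`, `Y_CHAIN`, `prev` and `lemma22_cases` are hypothetical, and the third value of y is taken to be \(\mathsf {\varSigma }\), which is how the surrounding text reads. For each pair (x, y) it lists the equivalences that “\(\cong \)” is assumed not to imply and whether countable alphabets are required.

```python
from itertools import product

# Orderings as read from the statement of Lemma 22 (labels are illustrative).
X_CHAIN = ["ft", "tr", "en"]
Y_CHAIN = ["ft", "tr", "Sigma", "#", "bot"]


def prev(chain, z):
    """Return the element preceding z in the chain, or None for the first one."""
    i = chain.index(z)
    return chain[i - 1] if i > 0 else None


def lemma22_cases():
    """List, for every (x, y), the side assumptions of Lemma 22."""
    cases = []
    for x, y in product(X_CHAIN, Y_CHAIN):
        not_implied = []
        px, py = prev(X_CHAIN, x), prev(Y_CHAIN, y)
        if px is not None:
            not_implied.append((px, y))   # the equivalence with prev(x) on top, y below
        if py is not None:
            not_implied.append((x, py))   # the equivalence with x on top, prev(y) below
        cases.append({
            "x": x,
            "y": y,
            "not_implied": not_implied,
            "countable_alphabets": y == "bot",
        })
    return cases
```

The enumeration yields 3 × 5 = 15 pairs; only the pair (ft, ft) comes with no non-implication assumption.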