Abstract

A big challenge in the knowledge discovery process is to perform data pre-processing, specifically feature selection, on large amounts of data with high-dimensional attribute sets. A variety of techniques have been proposed in the literature to deal with this challenge, with different degrees of success, as most of these techniques need further information about the given input data for thresholding, need noise levels to be specified, or rely on feature ranking procedures. To overcome these limitations, rough set theory (RST) can be used to discover the dependency within the data and reduce the number of attributes enclosed in an input data set while using the data alone and requiring no supplementary information. However, when it comes to massive data sets, RST reaches its limits as it is highly computationally expensive. In this paper, we propose a scalable and effective rough set theory-based approach for large-scale data pre-processing, specifically for feature selection, under the Spark framework. In our detailed experiments, data sets with up to 10,000 attributes have been considered, revealing that our proposed solution achieves a good speedup and performs its feature selection task well without sacrificing performance, thus making it relevant to big data.

1 Introduction

In a broad variety of domains, data are being gathered and stored at an intense pace due to the Internet and the widespread use of databases [7]. This ongoing rapid growth of data that led to a new terminology ‘big data’ has created an immense need for a novel generation of computational techniques, theories and approaches to extract useful information, i.e., knowledge, from these voluminous gathered data. These theories and approaches are the key elements of the emerging domain of knowledge discovery in databases (KDD) [21]. More precisely, big data arise with many challenges such as in clustering [35], in classification [34], in mining [38] but mainly in dimensionality reduction and more precisely in feature selection as this is usually a source of potential data loss [13]. This motivated researchers to build an efficient and an automated knowledge discovery process with a special focus on its third step, namely data reduction.

Data reduction is an important point of interest as many real-world applications may have a very large number of features (attributes) [41]. For instance, among the most practically relevant and high-impact applications are biochemistry, genetics and molecular biology. In these biological sciences, the collected data, e.g., gene expression data, may easily have more than 10,000 attributes [1]. However, not all of these attributes are crucial, since many of them can be redundant in the context of other features or even completely irrelevant to the task being handled. Therefore, several important issues arise when learning in such a situation. Among these are the problem of over-fitting to insignificant aspects of the given data and the computational burden of processing many similar attributes that provide redundant information [17]. These problems may decrease the performance of any learning technique, e.g., a classification algorithm. Hence, an important research direction is to automatically select a small subset of relevant attributes from the initial large set of attributes; that is, to perform feature selection. In fact, by removing the irrelevant and redundant attributes, feature selection is capable of reducing the dimensionality of the input data while speeding up the learning process, simplifying the learned model and increasing the performance [9, 17].

At an abstract level, to reduce the high dimensionality of data sets, suitable techniques can be applied with respect to the requirements of the future KDD process. The taxonomy of these techniques falls into two main groups namely feature selection techniques and feature extraction techniques [17]. The main difference between the two approaches is that techniques for feature selection select a subset from the initial features while techniques for feature extraction create new attributes from the initial feature set. More precisely, feature extraction techniques transform the underlying semantic (meaning) of the attributes while feature selection techniques preserve the data set semantics in the process of reduction. In knowledge discovery, feature selection techniques are notably desirable as these ease the interpretability of the output knowledge. In this paper, we mainly focus on the feature selection category for big data pre-processing.

Technically, feature selection is a challenging process due to the very large search space that reflects the combinatorially large number of all possible feature combinations to select from. This task is becoming more difficult as the total number of attributes is increasing in many big data application domains combined with the increased complexity of those problems. Therefore, to cope with the vast amount of the given data, most of the state-of-the-art techniques employ some degree of reduction, and thus, an effective feature reduction technique is needed.

As a data analysis technique, rough set theory (RST) [27] has been successfully and widely applied in data mining and knowledge discovery [16], and particularly for feature selection [31]. Nonetheless, although rough set-based feature selection techniques are powerful, most of the classical algorithms are sequential, computationally expensive and can only handle non-large data sets. Both the computational cost of the RST-based algorithms and their incapacity to handle high dimensional data are explained by the need to first generate all the possible combinations of attributes at once, then process these in turn to finally select the most pertinent and relevant set of attributes.

Nevertheless, as previously mentioned, since the number of attributes is becoming very large this task becomes more critical and challenging, and at this point the RST-based approaches reach their limits. More precisely, it is unfeasible to generate all the possible attribute combinations at once because of both hardware and memory constraints.

This motivates us to broaden the application of the theory of rough sets in the domain of data mining and knowledge discovery for big data. This paper proposes a scalable and effective algorithm based on rough sets for large-scale data pre-processing, and specifically for big data feature selection. Based on a distributed implementation design using both Scala and the Apache Spark framework [36], our proposed distributed algorithm copes with the RST computational inefficiencies and its restriction to non-large data sets. To analyze the proposed distributed approach in depth, experiments on big data sets with up to 10,000 features are carried out for feature selection and classification. Results demonstrate that our proposed solution achieves a good speedup and performs its feature selection task well without sacrificing performance, making it relevant to big data.

The rest of this paper is structured as follows. Section 2 presents preliminary information and related work. Section 3 reviews the fundamentals of rough set theory for feature selection. Section 4 formalizes the motivation of this work and introduces our novel distributed algorithm based on rough sets for large-scale data pre-processing. The experimental setup is introduced in Sect. 5. The results of the performance analysis are given in Sect. 6, and the conclusion is given in Sect. 7.

2 Literature review

Feature selection is defined as the process that selects a subset of the most relevant and pertinent attributes from a large input set of original attributes. For example, feature selection is the task of finding key genes (i.e., biomarkers) from the very huge number of candidate genes in biological and biomedical problems [3]. It is also the task of discovering core indicators (i.e., attributes) to describe the dynamic business environment [25], or to select key terms (e.g., words or phrases) in text mining [2] or to construct essential visual contents (e.g., pixel, color, texture, or shape) in image analysis [15].

In many data mining and machine learning real-world problems, feature selection became a crucial and highly important data pre-processing step due to the abundance of noisy, irrelevant and/or misleading features that are in big data. To cope with this, the usefulness of a feature can be measured by its relevancy as well as its redundancy. In fact, a feature is considered to be relevant if it can predict the decision feature(s); otherwise, it is said to be irrelevant as it provides no useful information with reference to any context. On the other hand, a feature is considered to be redundant if it provides the same piece of information for the currently selected features; this means that it is highly correlated with them. Hence, feature selection must provide beneficial results from big data as it should detect those attributes that present a high correlation with the decision feature(s), but at the same time are uncorrelated with each other.

In the literature, feature selection techniques can be broadly grouped into two main approaches which are filter approaches and wrapper approaches [9, 17]. The key difference between the two approaches is that wrapper approaches involve a specific learning algorithm, e.g., classification algorithm, when it comes to evaluating the attribute subset. The applied learning algorithm is mainly used as a black box by the wrapper approach to evaluate the quality (i.e., the classification performance) of the selected attribute set. Technically, when an algorithm performs feature selection in an independent way of any learning algorithm, the approach is defined as a filter where the set of the irrelevant features are filtered out before the induction process. Filter approaches tend to be applicable to most real-world domains since they are independent from any specific induction algorithm. On the other side, if the evaluation task is linked or dependent to the task of the learning algorithm then the feature selection approach is a wrapper technique. This approach searches through the attribute subsets space using the training (or validation) accuracy value of a specific induction algorithm as the measure of utility for a candidate subset. Therefore, these approaches may generate subsets that are overly explicit and specific to the used learning algorithm, and hence, any modification in the learning model might render the attribute set suboptimal.

Each of these two feature selection categories has its advantages and shortcomings where the main distinguishing aspects are the computational speed and the possibility of over-fitting. Overall, in terms of speed of computation, filter algorithms are usually computationally less expensive and more general than the wrapper techniques. Wrappers are computationally expensive and can easily break down when dealing with a very large number of attributes. This is due to the adoption of a learning algorithm in the evaluation process of subsets [24, 26]. In terms of over-fitting, the wrapper techniques have a higher learning capability so are more likely to overfit than filter techniques. It is important to mention that in the literature, some researchers classified feature selection techniques into three separate categories, namely the wrapper techniques, the embedded techniques and the filter techniques [24]. The embedded approaches tend to fuse feature selection and the learning approach into a single process. For large-scaled data sets having large number of features, the filter methods are usually a good option. Focusing on this category is the main scope of this paper.

Meanwhile, in the context of big data, it is worth mentioning that a detailed study was conducted in [6] where authors performed a deep analysis of the scalability of the state-of-the-art feature selection techniques that belong to the filter, the embedded and the wrapper techniques. In [6], it was demonstrated that the state-of-the-art feature selection techniques will obviously have scalability issues when dealing with big data. Authors have proved that the existent techniques will be inadequate for handling a high number of attributes in terms of training time and/or effectiveness in selecting the relevant set of features. Thus, the adaptation of feature selection techniques for big data problems seems essential and it may require the redesign of these algorithms and their incorporation in parallel and distributed environments/frameworks. Among the possible alternatives is the MapReduce paradigm [10] which was introduced by Google and which offers a robust and efficient framework to deal with big data analysis. Several recent works have been concentrated on parallelizing and distributing machine learning techniques using the MapReduce paradigm [40, 43, 44]. Recently, a set of new and more flexible paradigms have been proposed aiming at extending the standard MapReduce approach, mainly Apache Spark [36] which has been applied with success over a number of data mining and machine learning real-world problems [36]. Further details and descriptions of such distributed processing frameworks will be given in Sect. 4.1.

With the aim of choosing the most relevant and pertinent subset of features, a variety of feature reduction techniques were proposed within the Apache Spark framework to deal with big data in a distributed way. Among these are several feature extraction methods such as n-gram, principal component analysis, discrete cosine transform, tokenizer, PolynomialExpansion, ElementwiseProduct, etc., and very few feature selection techniques which are the VectorSlicer, the RFormula and the ChiSqSelector. To further expand this restricted research, i.e., the development of parallel feature selection methods, lately, some other feature selection techniques were proposed in the literature which are based on evolutionary algorithms [30]. Specifically, the evolutionary algorithms were implemented based on the MapReduce paradigm to obtain subsets of features from big data sets. These include a generic implementation of greedy information theoretic feature selection methods which are based on the common theoretic framework presented in [29], and an improved implementation of the classical minimum Redundancy and Maximum Relevance feature selection method [29]. This implementation includes several optimizations such as caching of marginal probabilities, accumulation of redundancy (greedy approach) and data access by columns. Nevertheless, most of these techniques suffer from some shortcomings. For instance, they usually require the user or expert to deal with the algorithms’ parameterisation or noise level specification, whereas some other techniques simply order the attribute set and let the user choose his/her own subset. There are some other feature selection techniques that require the user to indicate how many attributes should be selected, or to give a threshold that determines when the algorithm should end, which are all counted as significant drawbacks. All of these require users to make a decision based on their own (possibly subjective) perception. To overcome the shortcomings of the state-of-the-art techniques, it seemed to be crucial to look for a filter approach that does not require any external or supplementary information to function properly. Rough set theory (RST) can be used as such a technique [39].

The use of rough set theory in data mining and knowledge discovery, specifically for feature selection, has proved to be very successful in many application domains such as in classification [22], clustering [23] and in supply chain [5]. This success is explained by the several aspects of the theory in dealing with data. For example, the theory is able to analyze the facts hidden in data, does not need any supplementary information about the given data such as thresholds or expert knowledge on a particular domain and is also capable to find a minimal knowledge representation [11]. This is achieved by making use of the granularity structure of the provided data only.

Although algorithms based on rough sets have been widely used as efficient filter feature selectors, most of the classical rough set algorithms are sequential ones, computationally expensive and can only deal with non-large data sets. The prohibitive complexity of these algorithms comes from the search for an optimal attribute subset through the computation of an exponential number of candidate subsets. Although it is an exhaustive method, this is quite impractical for most data sets specifically for big data as it becomes clearly unmanageable to build the set of all possible combinations of features.
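To give a feel for the exponential growth mentioned above, the following minimal Python sketch counts the non-empty attribute subsets of a set of n attributes, i.e., \(2^n - 1\). The function name `count_candidate_subsets` is only illustrative and does not come from the paper.

```python
def count_candidate_subsets(n):
    """Number of non-empty subsets of a set of n attributes: 2^n - 1."""
    return 2 ** n - 1

# With only 20 attributes there are already more than a million candidates.
small_case = count_candidate_subsets(20)

# With 10,000 attributes the count is too large to print usefully,
# so we only look at how many decimal digits it has.
digits_for_10000 = len(str(count_candidate_subsets(10_000)))

print(small_case)        # 1048575
print(digits_for_10000)  # 3011
```

An exhaustive search over a number of candidates with thousands of decimal digits is clearly out of reach, whatever the hardware.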

In order to overcome these weaknesses, a set of parallel and distributed rough set methods has been proposed in the literature to ensure feature selection but in different contexts. For example, some of these distributed methods adopt some evolutionary algorithms, such as the work proposed in [12], where authors defined a hierarchical MapReduce implementation of a parallel genetic algorithm for determining the minimum rough set reduct, i.e., the set of the selected features. Within another context, the context of limited labeled big data, in [32], authors introduced a theoretic framework called local rough set and developed a series of corresponding concept approximation and attribute reduction algorithms with linear time complexity, which can efficiently and effectively work in limited labeled big data. In the context of distributed decision information systems, i.e., several separate data sets dealing with different contents/topics but concerning the same data items, in [19], authors proposed a distributed definition of rough sets to deal with the reduction of these information systems.

In this paper, and in contrast to the state-of-the-art methods, we mainly focus on the formalization of rough set theory in a distributed manner by using its granular concepts only, and without making use of any heuristics, e.g., evolutionary algorithms. We also focus on a single information system, i.e., a single big data set, which covers a single content/topic and which is characterized by a full and complete labeled data. Within this focus, and in the literature, a first attempt presenting a parallel rough set model was given in [8]. The main idea in [8] is to split the given big data set into several partitions, each with a smaller number of features which are all then processed in a parallel way. This is to minimize the computational effort of the RST computations when dealing with a very large number of features particularly. However, it is important to mention that the scalability of [8] was only validated in terms of sizeup and scaleup with a change in the standard metrics definitions (the standard definitions are given in Sect. 6.2). Actually, the used definition of these two metrics was based on the number of features per partition instead of the standard definition where the evaluation has to be based on the total number of features in the database used.

In this paper, we propose a redesign of rough set theory for feature selection by giving a better definition of the work presented in [8], specifically when it comes to the validation of the method (Sect. 6). Our work, which is an extension of [8], is based on a distributed partitioning procedure, within a Spark/MapReduce paradigm, that makes our proposed solution scalable and effective in dealing with big data. For the validation of our method, and in contrast to [8], we believe that using the overall number of attributes is a much more natural setup as it will give insights into the performance depending on the input data set rather than the partitions.

3 Rough sets for feature selection

Rough set theory (RST) [27, 28] is a formal approximation of the conventional set theory that supports approximations in decision making. This approach can extract knowledge from a problem domain in a concise way and retain the information content while reducing the involved amount of data [39]. This section focuses mainly on highlighting the fundamentals of RST for feature selection.

3.1 Preliminaries

In rough set theory, the training data set is called an information table or an information system. It is represented by a table where rows represent objects or instances and columns represent attributes or features. The information table can be defined as a tuple \(S = (U, A)\), where \(U = \{u_1, u_2, \ldots , u_N\}\) is a non-empty finite set of N instances (or objects), called the universe, and A is a non-empty set of \((n + k)\) attributes. The feature set \(A = C \cup D\) can be partitioned into two subsets, namely the conditional feature set \(C = \{a_1, a_2, \ldots , a_n\}\) consisting of n conditional attributes or predictors and the decision attribute set \(D = \{d_1, d_2, \ldots , d_k\}\) consisting of k decision attributes or output variables. Each feature \(a \in A \) is described with a set of possible values \(V_a\) named the domain of a.

For each non-empty subset of attributes \(P \subset C\), a binary relation called P-indiscernibility relation, which is the central concept of rough set theory, is defined as follows:

\[ IND(P) = \{(u_i, u_j) \in U \times U : \forall a \in P,\ a(u_i) = a(u_j)\} \]

where \(a(u_i)\) refers to the value of attribute a for the instance \(u_i\). This means if \((u_1, u_2) \in IND (P)\), then \(u_1\) is indistinguishable (indiscernible) from \(u_2\) by the attributes P. This relation is reflexive, symmetric and transitive.

The induced set of equivalence classes is denoted as \([u]_P\) where \(u \in U\), and it partitions U into different blocks denoted as U/P.
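As an illustration of how the partition U/P can be obtained in practice, the following minimal Python sketch groups objects by their values on the attributes in P. The toy information table and the function name `partition` are hypothetical and are only meant to make the definition concrete.

```python
from collections import defaultdict

def partition(table, attrs):
    """Return U/P: the blocks of objects that agree on every attribute in attrs."""
    blocks = defaultdict(set)
    for u, row in table.items():
        signature = tuple(row[a] for a in attrs)  # values of u on P
        blocks[signature].add(u)
    return list(blocks.values())

# Hypothetical toy information table: 4 objects, 2 conditional attributes.
table = {
    "u1": {"a1": 0, "a2": 1},
    "u2": {"a1": 0, "a2": 1},
    "u3": {"a1": 1, "a2": 1},
    "u4": {"a1": 1, "a2": 0},
}

blocks_a1 = partition(table, ["a1"])           # two blocks: {u1, u2} and {u3, u4}
blocks_a1_a2 = partition(table, ["a1", "a2"])  # three blocks: {u1, u2}, {u3}, {u4}
```

Adding an attribute to P can only split blocks, never merge them, which is why larger attribute subsets discern more objects.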

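The rough set approximates a concept or a target set of objects \(X \subseteq U\) using the equivalence classes induced using P as follows:

\[ {\underline{P}}(X) = \{u \in U : [u]_P \subseteq X\} \]

\[ {\overline{P}}(X) = \{u \in U : [u]_P \cap X \ne \emptyset \} \]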

where \({\underline{P}}(X)\) and \({\overline{P}}(X)\) denote the P-lower (certainly classified as members of X) and P-upper (possibly classified as members of X) approximations of X, respectively. The notation \(\cap \) denotes the intersection operation.

The concept that defines the set of instances that are not certainly, but can possibly be classified in a specific way is named the boundary region and is defined as the difference between the two approximations. X is a crisp set if the boundary region is an empty set, i.e., accurate approximation, \({\overline{P}}(X) = {\underline{P}}(X)\); otherwise, it is a rough set.
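The two approximations and the boundary region can be sketched in a few lines of Python. This is a minimal illustration on a hypothetical toy table; the helper names (`partition`, `lower_approx`, `upper_approx`) are assumptions made for the example.

```python
from collections import defaultdict

def partition(table, attrs):
    """Blocks of U/P: objects grouped by their values on attrs."""
    blocks = defaultdict(set)
    for u, row in table.items():
        blocks[tuple(row[a] for a in attrs)].add(u)
    return list(blocks.values())

def lower_approx(table, attrs, target):
    """Union of the blocks entirely contained in the target set X."""
    return set().union(*(b for b in partition(table, attrs) if b <= target))

def upper_approx(table, attrs, target):
    """Union of the blocks that share at least one object with X."""
    return set().union(*(b for b in partition(table, attrs) if b & target))

# Hypothetical toy table; u1 and u2 are indiscernible on {a1, a2}.
table = {
    "u1": {"a1": 0, "a2": 1},
    "u2": {"a1": 0, "a2": 1},
    "u3": {"a1": 1, "a2": 1},
    "u4": {"a1": 1, "a2": 0},
}
X = {"u1", "u3"}

lower = lower_approx(table, ["a1", "a2"], X)  # {u3}
upper = upper_approx(table, ["a1", "a2"], X)  # {u1, u2, u3}
boundary = upper - lower                      # {u1, u2}
```

Here X is a rough set with respect to {a1, a2}: the boundary is non-empty because u1 belongs to X while the indiscernible object u2 does not.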

To compare subsets of attributes, a dependency measure is defined. For instance, the dependency measure of an attribute subset Q on another attribute subset P is given as:

\[ \gamma _P(Q) = \frac{| POS _P(Q)|}{|U|} \]

where \(0 \le \gamma _P(Q) \le 1\), \(\cup \) denotes the union operation, | | denotes the set cardinality, and \( POS _P(Q)\) is defined as:

\[ POS _P(Q) = \bigcup _{X \in U/Q} {\underline{P}}(X) \]

\({POS}_P(Q)\) is the positive region of Q with respect to P and is the set of all elements of U that can be uniquely classified to blocks of the partition \([u]_Q\), by means of P. The closer \(\gamma _P(Q)\) is to 1, the more Q depends on P.
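The positive region and the dependency degree can be computed directly from the two partitions U/P and U/Q. The Python sketch below is a minimal illustration on a hypothetical decision table; the function names are assumptions made for the example.

```python
from collections import defaultdict

def partition(table, attrs):
    """Blocks of U/P: objects grouped by their values on attrs."""
    blocks = defaultdict(set)
    for u, row in table.items():
        blocks[tuple(row[a] for a in attrs)].add(u)
    return list(blocks.values())

def positive_region(table, P, Q):
    """Union of the P-lower approximations of every block X of U/Q."""
    p_blocks = partition(table, P)
    pos = set()
    for x in partition(table, Q):
        for b in p_blocks:
            if b <= x:  # block b is certainly classified into x
                pos |= b
    return pos

def dependency(table, P, Q):
    """gamma_P(Q) = |POS_P(Q)| / |U|."""
    return len(positive_region(table, P, Q)) / len(table)

# Hypothetical decision table with conditional attributes a1, a2 and decision d.
table = {
    "u1": {"a1": 0, "a2": 1, "d": "yes"},
    "u2": {"a1": 0, "a2": 1, "d": "no"},
    "u3": {"a1": 1, "a2": 1, "d": "yes"},
    "u4": {"a1": 1, "a2": 0, "d": "no"},
}

gamma_both = dependency(table, ["a1", "a2"], ["d"])  # 0.5  (POS = {u3, u4})
gamma_a1 = dependency(table, ["a1"], ["d"])          # 0.0
gamma_a2 = dependency(table, ["a2"], ["d"])          # 0.25 (POS = {u4})
```

The objects u1 and u2 have identical conditional values but different decisions, so they can never enter the positive region, and the dependency degree stays below 1.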

Based on these basics, RST defines two important concepts for feature selection which are the Core and the Reduct.

3.2 Reduction process

The theory of rough sets aims at finding the smallest subset of the conditional attribute set in a way that the resulting reduced database remains consistent with respect to the decision attribute. A database is considered to be consistent in case where for every set of objects, having identical feature values, the corresponding decision features are the same. To achieve this, the theory defines the Reduct concept and the Core concept.

Formally, in an information table, the unnecessary attributes can be categorized into either irrelevant features or redundant features. The point is to define a heuristic that provides a measure to evaluate the necessity of a feature. Nevertheless, it is not easy to define a heuristic based on these qualitative definitions of irrelevance and redundancy. Therefore, authors in [20] defined strong relevance and weak relevance of an attribute based on the probability of the target concept occurrence given this attribute. The set of the strong relevant attributes presents the indispensable features in the sense that they cannot be removed from the information table without causing a loss of the prediction accuracy. On the other hand, the set of the weak relevant features can in some cases contribute to the prediction accuracy. Based on these definitions, both the strong and the weak relevance concepts can provide good basics upon which the description of the importance of each feature can be defined. In the rough set terminology, the set of strong relevant attributes can be mapped to the Core concept while the Reduct concept defines a mixture of all strong relevant attributes and some weak relevant attributes.

To define these key concepts, RST sets the following formalizations: A subset \(R \subseteq C\) is said to be a reduct of C in the case where

\[ \gamma _R(D) = \gamma _C(D) \]

and there is no \(R' \subset R\) such that \( \gamma _{R^{'}}(D) = \gamma _R(D)\). Based on this formula, the Reduct can be defined as the minimal set of selected features that preserve the same dependency degree as the whole set of features.

In practice, from the given information table, it is possible that the theory generates a set of reducts: \( RED ^{F}_{C}(D)\). In this situation, any reduct in \( RED ^{F}_{C}(D)\) can be selected to describe the original information table.
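The definition of a reduct translates into a brute-force search, sketched below in Python on a hypothetical toy table. This is only an illustration of the definition under the stated assumptions, not the distributed algorithm proposed in this paper; it enumerates every attribute subset, which is exactly what becomes infeasible for large attribute sets.

```python
from collections import defaultdict
from itertools import combinations

def partition(table, attrs):
    """Blocks of U/P: objects grouped by their values on attrs."""
    blocks = defaultdict(set)
    for u, row in table.items():
        blocks[tuple(row[a] for a in attrs)].add(u)
    return list(blocks.values())

def positive_region_size(table, P, Q):
    """|POS_P(Q)|; comparing sizes is equivalent to comparing gamma values."""
    p_blocks = partition(table, P)
    q_blocks = partition(table, Q)
    return sum(len(b) for b in p_blocks if any(b <= x for x in q_blocks))

def all_reducts(table, cond, dec):
    """Exhaustively list the minimal subsets R of cond with gamma_R = gamma_C."""
    full = positive_region_size(table, cond, dec)
    found = []
    for size in range(1, len(cond) + 1):      # smallest subsets first
        for subset in combinations(cond, size):
            candidate = set(subset)
            if any(r <= candidate for r in found):
                continue                      # contains a smaller reduct: not minimal
            if positive_region_size(table, subset, dec) == full:
                found.append(candidate)
    return found

# Hypothetical table: a3 duplicates a1, and d is determined by a1 and a2 together.
table = {
    "u1": {"a1": 0, "a2": 0, "a3": 0, "d": 0},
    "u2": {"a1": 0, "a2": 1, "a3": 0, "d": 1},
    "u3": {"a1": 1, "a2": 0, "a3": 1, "d": 1},
    "u4": {"a1": 1, "a2": 1, "a3": 1, "d": 0},
}

reducts = all_reducts(table, ["a1", "a2", "a3"], ["d"])  # [{a1, a2}, {a2, a3}]
```

The minimality check relies on the fact that the positive region can only grow when attributes are added, so a candidate that contains an already found reduct cannot itself be minimal.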

The theory also defines the Core concept which is the set of features that are enclosed in all reducts. The Core concept is defined as

\[ CORE _C(D) = \bigcap RED ^{F}_{C}(D) \]

More precisely, the Core is defined as the set of features that cannot be omitted from the information table without inducing a collapse of the equivalence class structure. Thus, the Core is the most important subset of attributes, since none of its elements can be removed without affecting the classification power of attributes. This means that all the features which are in the Core are indispensable.
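Because every Core attribute is indispensable, the Core can also be found without enumerating the reducts: an attribute belongs to the Core exactly when removing it alone from C lowers the dependency degree. The Python sketch below illustrates this test on a hypothetical toy table; the function names are assumptions made for the example.

```python
from collections import defaultdict

def partition(table, attrs):
    """Blocks of U/P: objects grouped by their values on attrs."""
    blocks = defaultdict(set)
    for u, row in table.items():
        blocks[tuple(row[a] for a in attrs)].add(u)
    return list(blocks.values())

def positive_region_size(table, P, Q):
    """|POS_P(Q)|, which is proportional to the dependency degree gamma_P(Q)."""
    p_blocks = partition(table, P)
    q_blocks = partition(table, Q)
    return sum(len(b) for b in p_blocks if any(b <= x for x in q_blocks))

def core(table, cond, dec):
    """Attributes whose removal from cond shrinks the positive region."""
    full = positive_region_size(table, cond, dec)
    result = set()
    for a in cond:
        remaining = [c for c in cond if c != a]
        if positive_region_size(table, remaining, dec) < full:
            result.add(a)
    return result

# Hypothetical table: a3 duplicates a1, so either one can replace the other,
# while a2 is needed in every reduct.
table = {
    "u1": {"a1": 0, "a2": 0, "a3": 0, "d": 0},
    "u2": {"a1": 0, "a2": 1, "a3": 0, "d": 1},
    "u3": {"a1": 1, "a2": 0, "a3": 1, "d": 1},
    "u4": {"a1": 1, "a2": 1, "a3": 1, "d": 0},
}

core_attrs = core(table, ["a1", "a2", "a3"], ["d"])  # {a2}
```

On this table the reducts are {a1, a2} and {a2, a3}, and their intersection is indeed {a2}. The test needs only one dependency computation per attribute, in contrast to the exponential enumeration required to list all reducts.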

4 Parallel computing frameworks and the MapReduce programming model

In this section, we highlight the main solutions for big data processing. We also give a description of the MapReduce paradigm.

4.1 Parallel computing frameworks

With the dramatic increase of the amount of data, it has become crucial to implement a new set of technologies and tools that permit improved decision making and insight discovery. In this context, different techniques [33] have been developed to handle high dimensional data sets where most of these proposed tools are based on distributed processing, e.g., the Message Passing Interface (MPI) programming paradigm [37].

The encountered challenges in this concern are essentially linked to the access to the given big data, to the transparency of the development process of the software with respect to its prerequisites, as well as to the available programming paradigms [14]. For example, standard techniques require that all the given data should be loaded into the main machine’s memory. This obviously presents a technical issue in big data since the data, which is given as input, is usually stored in different locations causing an intensive communication in the network as well as some supplementary input and output costs. It is true that it is possible to afford this, but it is also important to mention that it will be crucial to afford an intensively large main memory to be able to retain all the pre-loaded given data for computing and processing purposes.

To overcome these serious limitations, a new set of highly efficient and fault-tolerant parallel frameworks has been developed and released. These distributed frameworks can be categorized with respect to the nature or type of the data they are able to process. Some frameworks can only process batch data. Within this schema, the parallel processing system operates over a high dimensional and static data set, and returns the output result(s) once all the computations have been completed. Among the well-known open-source distributed processing frameworks dedicated to batch processing, we mention Hadoop. Hadoop is based on simple programming paradigms that allow a highly scalable and reliable parallel processing of high-dimensional data sets. The framework offers a cost-effective solution to store and process different types of data such as structured, semi-structured and unstructured data without any specific format specifications. Technically, Hadoop works on top of the Hadoop distributed file system (HDFS) which duplicates the input data files in various storage machines (nodes). In this manner, the framework facilitates a fast transfer rate of the data among the nodes in the cluster and allows the system to operate without any interruption if one or a number of nodes fail. MapReduce is the core of the Hadoop framework. This paradigm offers an intensive scalability over a large number of nodes within a Hadoop cluster. The programming details of MapReduce as well as its basic concepts will be given in Sect. 4.2.

On the other hand, there are some other distributed frameworks that can only deal with streaming data. Within these frameworks’ design, the distributed calculations are performed over data (to each individual data item) at the time it enters the parallel framework. Apache Storm and Apache Samza are among the most popular stream processing frameworks.

A third category of distributed frameworks can be highlighted, namely hybrid systems. These frameworks are capable of processing not only batch data but also stream data. In these frameworks’ designs, similar or linked elements can be used for both types of data, which makes it much simpler to meet diverse processing requirements. Among the well-known hybrid processing frameworks, we mention Apache Spark and Apache Flink.

In this research, we focus on Apache Spark. This distributed open-source framework was initially developed in the UC Berkeley AMPLab for big data processing. Apache Spark is characterized by its effectiveness (achieved via an intensive use of memory), its efficiency, and its high transparency for users. These characteristics allow parallel processing in diverse application domains to be performed in a simple and easy way. More precisely, in Hadoop MapReduce, multiple jobs are chained together to build a data pipeline. At every level of that pipeline, MapReduce has to read the data from the disk and then write it back to the disk again. This process is ineffective, as all the data must be read from and written back to the disk at each level. Apache Spark addresses this issue. Based on the same MapReduce paradigm, the Spark framework could offer an immediate 10 times increase in the system’s performance. This is explained by the fact that the data need not be stored back to the disk at every stage of the process, as all activities remain in memory [36]. Spark thus processes data much faster than passing it through the repeated disk stages of Hadoop MapReduce. In addition, the key concept that Spark offers is the resilient distributed data set (RDD), which is a set of elements distributed across the nodes of the cluster that can be operated on in parallel. Spark also has a number of high-level libraries for stream processing, machine learning and graph processing, e.g., MLlib [18]. The choice of this specific framework to design our proposed algorithm based on rough sets for big data feature selection rests on several reasons: (1) it offers a general solution based on a hybrid parallel framework, (2) Apache Spark provides high-speed benefits with a trade-off in the usage of high memory, and (3) Spark is one of the well-known and certified distributed frameworks and also a mature hybrid system, specifically when compared to some other frameworks in the market. The latter are considered more niche in terms of their usage and, more importantly, are still in their initial periods of adoption.

4.2 The MapReduce paradigm

MapReduce [10] is one of the most popular processing techniques and programming models for distributed computing on big data. It was proposed by Google in 2004 and designed to easily scale data processing over multiple computing nodes. The MapReduce paradigm is composed of two main phases, namely the map phase and the reduce phase. At an abstract level, the map process takes a set of data as input and transforms it into a different set where each element is represented as a key/value pair, producing some intermediate results. Then, the reduce process takes the output of the map task as input and combines these key/value pairs into a smaller set of pairs to generate the final output. A representation of the MapReduce framework is given in Fig. 1.

Technically, the MapReduce paradigm is based on a specific data structure which is the (key, value) pair. More precisely, during the map phase, on each split of the data the map function gets a unique (key, value) tuple as an input and generates a set of intermediate (key\(^{\prime }\), value\(^{\prime }\)) pairs as output. This is represented as follows:

$$\begin{aligned} \text {map}(key, value) \rightarrow \{(key^{\prime }, value^{\prime })\} \end{aligned}$$

After that, the MapReduce paradigm groups all the intermediate (key\(^{\prime }\), value\(^{\prime }\)) pairs by key via the shuffling phase. Finally, the reduce function takes the aggregated (key\(^{\prime }\), value\(^{\prime }\)) pairs and generates a new (key\(^{\prime }\), value\(^{\prime \prime }\)) pair as output. This is defined as:

$$\begin{aligned} \text {reduce}(key^{\prime }, \{value^{\prime }\}) \rightarrow (key^{\prime }, value^{\prime \prime }) \end{aligned}$$
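The three phases described above can be sketched in a few lines of plain Python. This is only an illustrative, single-machine sketch: the helper name `map_reduce`, the toy records and the label-counting task are assumptions made for the example and are not part of any framework.

```python
from collections import defaultdict


def map_reduce(records, map_fn, reduce_fn):
    """Single-machine sketch of the map, shuffle and reduce phases."""
    # Map phase: every input (key, value) record yields intermediate pairs.
    intermediate = []
    for key, value in records:
        intermediate.extend(map_fn(key, value))

    # Shuffle phase: group all intermediate values by their key.
    groups = defaultdict(list)
    for inter_key, inter_value in intermediate:
        groups[inter_key].append(inter_value)

    # Reduce phase: one output pair per intermediate key.
    return {k: reduce_fn(k, values) for k, values in groups.items()}


# Hypothetical toy input: (record id, class label) pairs.
records = [(0, "yes"), (1, "yes"), (2, "no"), (3, "no")]

# Count how many records carry each label.
counts = map_reduce(
    records,
    map_fn=lambda record_id, label: [(label, 1)],
    reduce_fn=lambda label, ones: sum(ones),
)
print(counts)
```

In a real cluster the map and reduce calls run on different nodes and the shuffle moves data over the network; the sketch only mirrors the data flow.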

As discussed, a variety of open source parallel computing frameworks are available on the market, and in this section, we have highlighted the well-known ones. However, it is important to mention that the choice of a particular distributed framework always depends on the type of data that the system will process. The choice also depends on how time-bound the users’ requirements are, and on the types of output results that users are looking for. In this paper, we mainly focus on the use of Apache Spark.

5 The rough set distributed algorithm for big data feature selection

In this section, we will introduce our developed parallel rough set-based algorithm, that we name ‘Sp-RST,’ for big data pre-processing and specifically for feature selection. Sp-RST has a distributed architecture based on Apache Spark for a distributed and in-memory computation task. First, we will highlight the main motivation for developing the distributed Sp-RST algorithm by identifying the computational inefficiencies of the classical rough set theory which limit its application to small data sets only. Secondly, we will elucidate our Sp-RST solution as an efficient approach capable of performing big data feature selection without sacrificing performance.

5.1 Motivation and problem statement

Rough set theory for feature selection is an exhaustive search as the theory needs to compute every possible combination of attributes. The number of possible attribute subsets with m attributes from a set of N total attributes is \(\left( {\begin{array}{c}N\\ m\end{array}}\right) = \frac{N!}{m!(N - m)!}\) [17]. Thus, the total number of feature subsets to generate is \(\sum _{i=1}^N{\left( {\begin{array}{c}N\\ i\end{array}}\right) } = 2^N-1\). For example, for \(N=30\) we have roughly 1 billion combinations. This prevents us from using high-dimensional data sets, as the number of feature subsets grows exponentially in the total number of features N. Moreover, hardware constraints, specifically memory consumption, do not allow us to store a high number of entries. This is because the system has to store the entire training data set in memory, together with all the supplementary data computations as well as the generated results. All of this data can easily exceed the available RAM. These are the main motivations for our proposed Sp-RST solution, which makes use of parallelization.
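The growth of the search space can be checked numerically. The short sketch below sums the binomial coefficients and compares the result with the closed form \(2^N-1\); the function name `count_feature_subsets` is only an illustrative choice.

```python
from math import comb


def count_feature_subsets(n):
    """Number of non-empty subsets of n features: sum of C(n, i) for i = 1..n."""
    return sum(comb(n, i) for i in range(1, n + 1))


n = 30
total = count_feature_subsets(n)
print(total)              # number of subsets for N = 30
print(total == 2**n - 1)  # agrees with the closed form 2^N - 1
```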

5.2 The proposed solution

To overcome the standard RST inadequacy to perform feature selection in the context of big data, we propose our distributed Sp-RST solution. Technically, to handle a large set of data it is crucial to store all the given data set in a parallel framework and perform computations in a distributed way. Based on these requirements, we first partition the overall rough set feature selection process into a set of smaller and basic tasks that each can be processed independently. After that, we combine the generated intermediate outputs to finally build the sought result, i.e., the reduct set.

5.2.1 General model formalization

For feature selection, our learning problem aims to select a set of highly discriminating attributes from the initial large-scale input data set. The input base refers to the data stored in the distributed file system (DFS). To perform distributed tasks on the given DFS, a resilient distributed data set (RDD) is built. The latter can be formalized as a given information table that we name \(T_{ RDD }\). \(T_{ RDD }\) is defined via a universe \(U = \{x_1, x_2, \ldots , x_N\}\), which refers to the set of data instances (items), a large conditional feature set \(C = \{c_1, c_2, \ldots , c_V\}\) that includes all the features of the \(T_{ RDD }\) information table and finally via a decision feature D of the given learning problem. D refers to the label (also called class) of each \(T_{ RDD }\) data item and is defined as follows: \(D =\{d_1, d_2, \ldots , d_W\}\). C presents the conditional attribute pool from where the most significant attributes will be selected.

As explained in Sect. 5.1, the classical RST cannot deal with a very large number of features, which is defined as C in the \(T_{ RDD }\) information table. Thus, to ensure the scalability of our proposed algorithm when dealing with a large number of attributes, Sp-RST first partitions the input \(T_{ RDD }\) information table (the big data set) into a set of m data blocks based on splits from the conditional feature set C, i.e., m smaller data sets with a fewer number of features instead of using a single data block (\(T_{ RDD }\)) with an unmanageable C number of features that we note as \(T_{ RDD }(C)\). The key idea is to generate m smaller data sets that we name \(T_{ RDD _{(i)}}\), where \(i \in \{1, \ldots , m\}\), from the big \(T_{ RDD }\) data set, where each \(T_{ RDD _{(i)}}\) is defined via a manageable number of features r, where \(r \lll C = \{c_1, c_2, \ldots , c_V\}\) and \(r \in \{1, \ldots , V\}\). The definition of the parameter r will be further explained in what follows. We note the resulting data block as \(T_{ RDD _{(i)}}(C_r)\). This leads to the following formalization: \(T_{ RDD } = \bigcup _{i=1}^{m}T_{ RDD _{(i)}}(C_r)\), where \(r \in \{1, \ldots , V\}\). As mentioned above, r defines the number of attributes that will be considered to build every \(T_{ RDD _{(i)}}\) data block. Based on this, every \(T_{ RDD _{(i)}}\) is built using r random attributes which are selected from C. Each \(T_{ RDD _{(i)}}\) is constructed based on r distinct features as there are no common attributes between all the built \(T_{ RDD _{(i)}}\). This leads to the following formalization: \(\forall T_{ RDD _{(i)}}{:}\,\not \exists \{c_r\} = \bigcap _{i=1}^{m} T_{ RDD _{(i)}}\). Figure 2 presents this data partitioning phase.
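The partitioning of the feature set can be illustrated with the following sketch. It assumes that the features are shuffled and then cut into consecutive blocks of at most r features, which yields disjoint blocks whose union is C; the function name `partition_features`, the `seed` parameter and the equal block size are assumptions of this example rather than details taken from Algorithm 1.

```python
import random


def partition_features(features, r, seed=None):
    """Split the feature list into disjoint random blocks of at most r features."""
    rng = random.Random(seed)
    shuffled = list(features)
    rng.shuffle(shuffled)
    # Consecutive slices of the shuffled list never share a feature.
    return [shuffled[i:i + r] for i in range(0, len(shuffled), r)]


# Hypothetical feature pool c1..c10, split into blocks of at most 4 features.
features = [f"c{j}" for j in range(1, 11)]
blocks = partition_features(features, r=4, seed=0)
for i, block in enumerate(blocks, start=1):
    print(i, block)
```

Each block would then be paired with the decision attribute and the instance identifiers to form one \(T_{ RDD _{(i)}}(C_r)\).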

With respect to the parallel implementation design, the distributed Sp-RST algorithm will be applied to every \(T_{ RDD _{(i)}}(C_r)\) while gathering all the intermediate results from the distinct m created partitions; rather than being applied to the complete \(T_{ RDD }\) that encloses the whole set C of conditional features. Based on this design, we can ensure that the algorithm can perform its feature selection task on a computable number of attributes and therefore overcome the standard rough set computational inefficiencies. The pseudocode of our proposed distributed Sp-RST solution is highlighted in Algorithm 1.

To further guarantee the Sp-RST feature selection performance while avoiding any critical information loss, and to refine the result, Sp-RST runs N iterations over the m data blocks of \(T_{ RDD }\), i.e., N iterations on all the m built \(T_{ RDD _{(i)}}(C_r)\). In each of these N iterations, Sp-RST first randomly builds the m distinct \(T_{ RDD _{(i)}}(C_r)\) as explained above. Once this is achieved, the algorithm’s distributed tasks defined in Algorithm 1 (lines 5–10) are performed for each partition. Note that line 1 of Algorithm 1, which defines the initial Sp-RST parallel job, is performed outside the iteration loop. This job calculates the indiscernibility relation \( IND (D)\) of the decision class D. It is placed outside the loop because it is entirely independent of the m created partitions: its output depends on the labels of the data instances and not on the attribute set.

Once the loop has finished (line 12), the outcome of each created partition is either a single reduct \( RED _{i_{(D)}}(C_r)\) or a set (a family) of reducts \( RED _{i_{(D)}}^{F}(C_r)\). As previously highlighted in Sect. 3, any reduct among the \( RED _{i_{(D)}}^{F}(C_r)\) reducts can be selected to describe the \(T_{ RDD _{(i)}}(C_r)\) information table. Therefore, in the case where Sp-RST generates a single reduct for a specific \(T_{ RDD _{(i)}}(C_r)\) partition, the final output of this attribute selection phase is the set of features defined in \( RED _{i_{(D)}}(C_r)\). These attributes represent the most informative features among the \(C_r\) features and generate a new reduced \(T_{ RDD _{(i)}}\) defined as: \(T_{ RDD _{(i)}}(RED)\). This reduced base guarantees nearly the same data quality as its corresponding \(T_{ RDD _{(i)}} (C_r)\), which is based on the full attribute set \(C_r\). In the other case, where Sp-RST generates multiple reducts, the algorithm randomly selects a single reduct among the generated family of reducts \( RED _{i_{(D)}}^{F}(C_r)\) to describe the corresponding \(T_{ RDD _{(i)}}(C_r)\). This random selection is supported by the RST fundamentals, since all the reducts in \( RED _{i_{(D)}}^{F}(C_r)\) have the same level of importance. More precisely, any reduct included in the family of reducts \( RED _{i_{(D)}}^{F}(C_r)\) can be selected to replace the \(T_{ RDD _{(i)}}\,(C_r)\) attributes.

At this level, the output of every data block i is \( RED _{i_{(D)}}(C_r)\), which refers to the selected set of features. Nevertheless, since every \(T_{ RDD _{(i)}}\) is described using r distinct attributes and with respect to \(T_{ RDD } = \bigcup _{i=1}^{m} T_{ RDD _{(i)}}(C_r)\), a union operator on the generated selected attributes is needed to represent the original \(T_{ RDD }\). This is defined as \( Reduct _m = \bigcup _{i=1}^{m} RED _{i_{(D)}}(C_r)\) (Algorithm 1, lines 12–14). As previously highlighted, Sp-RST performs its distributed tasks over the N iterations, generating N sets \( Reduct _m\). Therefore, an intersection operator applied to all the obtained \( Reduct _m\) is finally required. This is defined as \(Reduct = \bigcap _{n=1}^{N} Reduct _m\). Sp-RST can thus reduce the dimensionality of the original data set from \(T_{ RDD }(C)\) to \(T_{ RDD }(Reduct)\) by removing irrelevant and redundant features at each computation level. Sp-RST can also simplify the learned model, speed up the overall learning process, and increase the performance of an algorithm, e.g., a classification algorithm, as will be discussed in the experimental setup section (Sect. 6). Figure 3 illustrates the global functioning of Sp-RST. In what follows, we will elucidate the different Sp-RST elementary distributed tasks.
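The combination of the partial results can be sketched with plain Python sets: a union over the m partitions inside one iteration, followed by an intersection over the N iterations. The function name `combine_reducts` and the feature names in the toy input are hypothetical, and the sketch assumes at least one iteration.

```python
def combine_reducts(reducts_per_iteration):
    """Union over partitions inside each iteration, then intersection over iterations.

    reducts_per_iteration[n][i] holds the reduct selected for partition i
    in iteration n.
    """
    per_iteration = [
        set().union(*partition_reducts)  # Reduct_m of one iteration
        for partition_reducts in reducts_per_iteration
    ]
    return set.intersection(*per_iteration)  # final Reduct


# Hypothetical outcome of N = 2 iterations with m = 2 partitions each.
iterations = [
    [{"c1", "c4"}, {"c7"}],   # iteration 1
    [{"c1"}, {"c7", "c9"}],   # iteration 2
]
print(combine_reducts(iterations))
```

Only features selected in every iteration survive the intersection, which is why additional iterations can only keep or shrink the final set.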

5.2.2 Algorithmic details

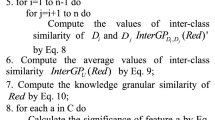

As previously highlighted, the elementary Sp-RST distributed tasks will be executed on every \(T_{ RDD _{(i)}}\) partition defined by its \(C_r\) features (\(T_{ RDD _{(i)}}(C_r)\)), except for the first step, Algorithm 1—line 1, which deals with the calculation of the indiscernibility relation for the decision class D: \( IND (D)\). Sp-RST performs seven main distributed jobs to generate the final output, i.e., Reduct.

Sp-RST starts by computing the indiscernibility relation for the decision class \(D = \{d_1, d_2, \ldots , d_W\}\). We define the indiscernibility relation as \( IND (D)\): \( IND (d_i)\), where \(i \in \{1, 2, \ldots , W\}\). Sp-RST calculates \( IND (D)\) for each decision class \(d_i\) by grouping the \(T_{ RDD }\) data items (instances) that are expressed in the universe \(U = \{x_1, \ldots , x_N\}\) and that belong to the same decision class \(d_i\).

To achieve this task, Sp-RST processes a first map transformation operation taking the data in its format of (\(id_i\) of \(x_i\), List of the features of \(x_i\), Class \(d_i\) of \(x_i\)) and transforming it to a \(\langle key, value \rangle \) pair: \(\langle \)Class \(d_i\) of \(x_i\), List of \(id_i\) of \(x_i\rangle \). Based on this transformation, the decision class \(d_i\) defines the key of the generated output and the data item identifiers \(id_i\) of \(x_i\) of the \(T_{ RDD }\) define the values. After that, the foldByKey() (Footnote 11) transformation operation is applied to merge all values of each key in the transformed RDD output. This is to represent the sought \( IND (D)\): \( IND (d_i)\). The pseudo-code related to this distributed job is highlighted in Algorithm 2.
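The map and merge steps of this job can be imitated in plain Python as follows. The helper `fold_by_key` is a local stand-in for the merging behaviour of a fold-by-key operation and not the Spark call itself; the labels reuse those of the working example in Sect. 5.3, while the empty feature tuples are placeholders because the features play no role in \( IND (D)\).

```python
def fold_by_key(pairs, zero, func):
    """Local stand-in for a fold-by-key: merge all values sharing a key."""
    merged = {}
    for key, value in pairs:
        merged[key] = func(merged.get(key, zero), value)
    return merged


def ind_decision(rows):
    """Group instance identifiers by decision class.

    rows: iterable of (instance id, feature values, class label).
    """
    # Map step: (id, features, label) -> <label, [id]>
    pairs = [(label, [row_id]) for row_id, _features, label in rows]
    # Merge step: concatenate the identifier lists of each label.
    merged = fold_by_key(pairs, [], lambda left, right: left + right)
    return {label: set(ids) for label, ids in merged.items()}


# Labels as in the working example; feature tuples are left empty on purpose.
rows = [(0, (), "yes"), (1, (), "yes"), (2, (), "no"),
        (3, (), "no"), (4, (), "yes"), (5, (), "yes")]
ind_d = ind_decision(rows)
print(ind_d)
```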

After that, within a specific partition i, where \(i \in \{1, 2, \ldots , m\}\) and m is the number of partitions, the algorithm generates the \( AllComb _{(C_r)}\) RDD, which contains all the possible combinations of the \(C_r\) set of attributes. This is done by transforming the \(C_r\) RDD into the \( AllComb _{(C_r)}\) RDD using the flatMap() (Footnote 12) transformation operation together with the combinations() operation. This is shown in Algorithm 3.
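As a local illustration of this step, all non-empty combinations of a small attribute set can be enumerated with the Python standard library. The function name `all_combinations` is an assumption of this sketch; the two attributes are those of the first partition in the working example of Sect. 5.3.

```python
from itertools import combinations


def all_combinations(features):
    """Every non-empty combination of the given features, smallest first."""
    return [
        combo
        for size in range(1, len(features) + 1)
        for combo in combinations(features, size)
    ]


all_comb = all_combinations(["Muscle-pain", "Temperature"])
for combo in all_comb:
    print(combo)
```

For r features this produces \(2^r-1\) combinations, which is why r has to stay small in every partition.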

In its third distributed job, Sp-RST calculates the indiscernibility relation \( IND ( AllComb _{(C_r)})\) for every created combination, i.e., the indiscernibility relation of every element in the output of Algorithm 3, which we name \( AllComb _{{(C_r)}_i}\). In this task, as described in Algorithm 4, the algorithm collects all the identifiers \(id_i\) of the data items \(x_i\) that have identical values for the combination of attributes extracted from \( AllComb _{(C_r)}\). To do so, a first map operation is applied taking the data in its format of (\(id_i\) of \(x_i\), List of the features of \(x_i\), Class \(d_i\) of \(x_i\)) and transforming it to a \(\langle key, value \rangle \) pair: \(\langle ( AllComb _{{(C_r)}_i}\), List of the features of \(x_i)\), List of \(id_i\) of \(x_i\rangle \). Based on this transformation, the combination of features together with the corresponding vector of feature values defines the key, and the identifiers \(id_i\) of the data items \(x_i\) define the value. After that, the foldByKey() operation is applied to merge all values of each key in the transformed RDD output, i.e., all the identifiers \(id_i\) of the data items \(x_i\) that have the same combination of features with the same vector of feature values \(( AllComb _{{(C_r)}_i}\), List of the features of \(x_i)\). This is to represent the sought \( IND ( AllComb _{(C_r)})\). With this third step, Sp-RST prepares the set of features that will be selected in the coming steps.
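The grouping described above can be sketched locally: for each attribute combination, instances are keyed by their values on that combination, and the identifiers sharing a key form one equivalence class. The function name `ind_combinations`, the dictionary-based row format and the toy data (features `a` and `b`) are hypothetical choices for this example.

```python
from collections import defaultdict
from itertools import combinations


def ind_combinations(rows, features):
    """Equivalence classes of instance ids for every feature combination.

    rows: iterable of (instance id, {feature: value}, class label).
    Returns {combination: [set of ids, ...]}.
    """
    result = {}
    for size in range(1, len(features) + 1):
        for combo in combinations(features, size):
            groups = defaultdict(set)
            for row_id, values, _label in rows:
                # Key: the instance's value vector restricted to the combination.
                key = tuple(values[f] for f in combo)
                groups[key].add(row_id)
            result[combo] = list(groups.values())
    return result


# Hypothetical toy table with two features.
rows = [
    (0, {"a": 1, "b": 0}, "yes"),
    (1, {"a": 1, "b": 1}, "yes"),
    (2, {"a": 0, "b": 1}, "no"),
    (3, {"a": 1, "b": 0}, "no"),
]
ind_comb = ind_combinations(rows, ["a", "b"])
for combo, classes in ind_comb.items():
    print(combo, classes)
```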

In a next stage, Sp-RST computes the dependency degrees \(\gamma ( AllComb _{(C_r)})\) of each attribute combination as described in Algorithm 5. For this task, the distributed job requires three input parameters which are the calculated indiscernibility relations \( IND (D)\), the \( IND ( AllComb _{(C_r)})\) and the set of all attribute combinations \( AllComb _{(C_r)}\).

For every element \( AllComb _{{(C_r)}_i}\) in \( AllComb _{(C_r)}\), and using the intersection() transformation, the job first tests whether the intersection of every \( IND (d_i)\) of \( IND (D)\) with each element \( IND ( AllComb _{{(C_r)}_i})\) in \( IND ( AllComb _{(C_r)})\) contains all the elements of the latter, i.e., whether that element is a subset of \( IND (d_i)\). This process refers to the calculation of the lower approximation as detailed in Sect. 3. We name the length of the resulting intersection LengthIntersect. If the condition is satisfied, then a score equal to the number of elements in the generated intersection, i.e., LengthIntersect, is assigned; otherwise a value of 0 is given.

After that a reduce function is applied over the different \( IND (D)\) elements together with a sum() function applied on the calculated scores which are based on the elements having the same \( IND (d_i)\). This operation is followed by a second reduce function which is applied over the different \( IND ( AllComb _{(C_r)})\) elements together with a sum() function applied on the previous calculated results which are indeed based on the elements having the same \( AllComb _{{(C_r)}_i}\).

The latter output refers to the dependency degrees: \(\gamma ( AllComb _{(C_r)})\). This distributed job generates two outputs namely the set of dependency degrees \(\gamma ( AllComb _{(C_r)})\) of the attribute combinations \( AllComb _{(C_r)}\) as well as their associated sizes \( Size _{( AllComb _{(C_r)})}\).
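The scoring rule can be illustrated with the following local sketch, which assumes, as in the working example of Sect. 5.3, that the dependency is reported as a count of instances (dividing by \(|U|\) would give the normalized dependency degree of Sect. 3). The function name `dependency_scores` and the toy inputs are hypothetical.

```python
def dependency_scores(ind_d, ind_comb):
    """Dependency score and size of every feature combination.

    ind_d:    {class label: set of instance ids}
    ind_comb: {combination: [set of instance ids, ...]}
    Returns {combination: (score, number of features)}.
    """
    scores = {}
    for combo, classes in ind_comb.items():
        score = 0
        for eq_class in classes:
            for decision_ids in ind_d.values():
                intersection = eq_class & decision_ids
                # Count the class only if it lies entirely in one decision class.
                if intersection == eq_class:
                    score += len(intersection)
        scores[combo] = (score, len(combo))
    return scores


# Hypothetical toy inputs.
ind_d = {"yes": {0, 1}, "no": {2, 3}}
ind_comb = {
    ("a",): [{0, 1, 3}, {2}],
    ("b",): [{0, 3}, {1, 2}],
    ("a", "b"): [{0, 3}, {1}, {2}],
}
scores = dependency_scores(ind_d, ind_comb)
print(scores)
```

Because the decision classes are disjoint, each equivalence class can contribute to at most one of them, so summing over both loops counts every instance of the positive region once.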

Once all the dependencies are calculated, in Algorithm 6, Sp-RST looks for the maximum value of the dependency among all the computed \(\gamma ( AllComb _{(C_r)})\) using the max() function operated on the given RDD input and which is referred to as RDD[\( AllComb _{(C_r)}\), \( Size _{( AllComb _{(C_r)})}\), \(\gamma ( AllComb _{(C_r)})\)]. Specifically, the max() function will be applied on the third argument of the given RDD, i.e., \(\gamma ( AllComb _{(C_r)})\).

Let us recall that based on the RST preliminaries (seen in Sect. 3), the maximum dependency refers to not only the dependency of the whole attribute set \((C_r)\) describing the \(T_{ RDD _i}(C_r)\) but also to the dependency of all the possible attribute combinations satisfying the following constraint: \(\gamma ( AllComb _{(C_r)})= \gamma (C_r)\). The maximum dependency MaxDependency reflects the baseline value for the feature selection task.

In a next step, Sp-RST performs a filtering process using the filter() function to only keep the set of all combinations which have the same dependency degrees, as the already selected dependency baseline value (MaxDependency), i.e., \(\gamma ( AllComb _{(C_r)}) = MaxDependency\). This is described in Algorithm 7. In fact, through these computations, the algorithm removes in each level the unnecessary attributes that may negatively influence the performance of any learning algorithm.

At a final stage, using the results generated from the previous step, which are the input of Algorithm 8, Sp-RST first applies the min() operator to look for the minimum number of features among all the \( Size _{( AllComb _{(C_r)})}\); specifically, the min() operator is applied to the second argument of the given RDD. Once this value, which we name minNbF, is determined, the algorithm applies a filter() method to only keep the set of combinations whose number of features equals minNbF. This satisfies the full reduct constraints highlighted in Sect. 3: \( \gamma ( AllComb _{(C_r)}) = \gamma (C_r)\) while there is no \( AllComb _{(C_r)}^{'} \subset AllComb _{(C_r)}\) such that \(\gamma ( AllComb _{(C_r)}^{'}) = \gamma ( AllComb _{(C_r)})\). Every combination that satisfies this constraint is evaluated as a possible minimum reduct set. The features defining the reduct set describe all concepts in the initial \(T_{ RDD _i}(C_r)\) training data set.
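The last three jobs (maximum dependency, filtering on it, then filtering on the minimum size) can be sketched locally as below. The function name `select_reducts` is an assumption, and the tuples follow the (combination, dependency, size) order and the values used for the first partition in the working example of Sect. 5.3.

```python
def select_reducts(scored):
    """Keep the smallest combinations that reach the maximum dependency.

    scored: list of (combination, dependency, size) tuples.
    """
    # Baseline: the maximum dependency over all combinations.
    max_dependency = max(dep for _combo, dep, _size in scored)
    # Keep only combinations reaching the baseline.
    candidates = [item for item in scored if item[1] == max_dependency]
    # Among those, keep the combinations with the fewest features.
    min_nb_f = min(size for _combo, _dep, size in candidates)
    return [combo for combo, _dep, size in candidates if size == min_nb_f]


scored = [
    (("Muscle-pain",), 1, 1),
    (("Temperature",), 4, 1),
    (("Muscle-pain", "Temperature"), 4, 2),
]
reducts = select_reducts(scored)
print(reducts)
```

When several combinations remain after the last filter, they form the family of reducts from which one is chosen at random.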

5.3 Sp-RST: a working example

We apply Sp-RST to an example of an information table, \(T_{ RDD }(C)\), which is presented in Table 1. Assuming that the considered \(T_{ RDD }(C)\) is a big data set, the information table is defined via a universe \(U = \{x_0, x_1, \ldots , x_5\}\) which refers to the set of data instances (items), a large conditional feature set C = {Headache, Muscle-pain, Temperature} that includes all the features of the \(T_{ RDD }(C)\) information table and finally via a decision feature Flu of the given learning problem. Flu refers to the label (or class) of each \(T_{ RDD }(C)\) data item and is defined as follows: \(Flu =\{yes, no\}\). C presents the conditional attribute pool from where the most significant attributes will be selected.

Independently from the set of conditional features C, Sp-RST starts first of all by computing the indiscernibility relation for the decision class Flu. We define the indiscernibility relation as \( IND (Flu)\): \( IND (Flu_i)\). Sp-RST will calculate \( IND (Flu)\) for each decision class \(Flu_i\) by associating the same \(T_{ RDD }(C)\) data items (instances) that are expressed in the universe U and that belong to the same decision class \(Flu_i\). Based on the Apache Spark framework and by applying Algorithm 2, line 1, we get the following outputs from the different Apache Spark data splits which are presented in Tables 2 and 3:

- From Split 1:
  - \(\langle \)yes, \(x_0\rangle \)
  - \(\langle \)yes, \(x_1\rangle \)
  - \(\langle \)no, \(x_2\rangle \)
- From Split 2:
  - \(\langle \)no, \(x_3\rangle \)
  - \(\langle \)yes, \(x_4\rangle \)
  - \(\langle \)yes, \(x_5\rangle \)

After that, and by applying Algorithm 2, line 2, we get the following output which refers to the indiscernibility relation of the class \( IND (Flu)\):

- \(yes, \{x_0, x_1, x_4, x_5\} \)
- \(no, \{x_2, x_3\}\)

In this example, we assume that we have two partitions, \(m = 2\). For the first partition (labeled \(m = 1\) below), a random number \(r = 2\) is selected to build the first \(T_{ RDD _{i=1}}(C_r)\). For the second partition (labeled \(m = 2\) below), a random number \(r = 1\) is selected to build the second \(T_{ RDD _{i=2}}(C_r)\). Based on these assumptions, the following partitions and splits based on Apache Spark are obtained (Tables 4, 5, 6, 7).

Based on the first partition \(m = 1\), and by applying Algorithm 3, which aims to generate all the \( AllComb _{(C_r)}\) possible combinations of the \(C_r\) set of attributes, the output from both Apache Spark splits is the following:

- Muscle-pain
- Temperature
- Muscle-pain, Temperature

In its third distributed job, Sp-RST calculates the indiscernibility relation \( IND ( AllComb _{(C_r)})\) for every created combination, i.e., the indiscernibility relation of every element in the output of the previous step (Algorithm 3). By applying Algorithm 4 and based on both Apache Spark splits, the output is the following:

- From \(m = 1\), Split 1:
  - Muscle-pain, \(\{x_0\}, \{x_1, x_2\}\)
  - Temperature, \(\{x_0\}, \{x_1, x_2\}\)
  - Muscle-pain, Temperature, \(\{x_0\}, \{x_1, x_2\} \)
- From \(m = 1\), Split 2:
  - Muscle-pain, \(\{x_3, x_4, x_5\}\)
  - Temperature, \(\{x_3\}, \{x_4\}, \{x_5\}\)
  - Muscle-pain, Temperature, \(\{x_3\}, \{x_4\}, \{x_5\}\)

In a next stage, and by using the previous output as well as \( IND (Flu)\), Sp-RST computes the dependency degrees \(\gamma ( AllComb _{(C_r)})\) of each attribute combination as described in Algorithm 5. This distributed job generates two outputs namely the set of dependency degrees \(\gamma ( AllComb _{(C_r)})\) of the attribute combinations \( AllComb _{(C_r)}\) as well as their associated sizes \( Size _{( AllComb _{(C_r)})}\). The output from both splits for \(m = 1\) is the following:

- Muscle-pain, 1, 1
- Temperature, 4, 1
- Muscle-pain, Temperature, 4, 2

Once all the dependencies are calculated, in Algorithm 6, Sp-RST looks for the maximum value of the dependency among all the computed \(\gamma ( AllComb _{(C_r)})\). The maximum dependency reflects the baseline value for the feature selection task. The output is the following:

- 4

In a next step, Sp-RST performs a filtering process to only keep the set of all combinations, which have the same dependency degrees, as the already selected dependency baseline value (\(MaxDependency = 4\)), i.e., \(\gamma ( AllComb _{(C_r)}) = MaxDependency = 4\). By applying Algorithm 7, the following output is obtained:

- Temperature, 4, 1
- Muscle-pain, Temperature, 4, 2

In fact, through these computations, the algorithm removes in each level the unnecessary attributes that may negatively influence the performance of any learning algorithm.

At a final stage, using the results generated from the previous step and applying Algorithm 8, Sp-RST looks for the minimum number of features among all the \( Size _{( AllComb _{(C_r)})}\). Once determined (\(minNbF = 1\)), the algorithm only keeps the set of combinations having the same minimum number of features as minNbF. The filtered selected features define the reduct set and describe all concepts in the initial \(T_{ RDD _i}(C_r)\) training data set. The output of Algorithm 8, which is the reduct for \(m = 1\), is the following:

- Temperature

Based on these calculations, for \(m = 1\), Sp-RST reduced the \(T_{ RDD _{i=1}}(C_{r=2})\) to \( Reduct _{m=1} = \{Temperature\}\).

The same calculations will be applied to \(m = 2\), and the output is \( Reduct _{m=2} = \{Headache\}\) (as the data is composed of a single feature).

At this stage, different reducts are generated from the different m partitions. With respect to Algorithm 1, lines 12–14, a union of the obtained results is required to represent the initial big information table \(T_{ RDD }(C)\), i.e., Table 1. The final output is \(Reduct = \{Headache, Temperature\}\).

In this example, we presented a single iteration of Sp-RST, i.e., \(N = 1\). Therefore, line 16 of Algorithm 1 is not covered in this example.

Sp-RST could reduce the big information table presented in Table 1 from \(T_{ RDD }(C)\) to \(T_{ RDD }(Reduct)\). The output is presented in Table 8.

6 Experimental setup

6.1 Benchmark

To validate the effectiveness of Sp-RST, we require a data set with a large number of attributes that is also defined by a large number of data instances. The Amazon Commerce reviews data set from the UCI machine learning repository [4] fulfills this requirement. The Amazon data set was initially built from several customer reviews on the Amazon commerce Web site. It was constructed by identifying the most active users, with the aim of performing authorship identification. The database contains a total of 1500 data instances which are described using 10,000 features (linguistic style such as punctuation, length of words, sentences, etc.) and 50 distinct classes (referring to authors). The Amazon data items are evenly distributed across the data set classes, i.e., for each class there are 30 items.

We demonstrate the scalability of our approach by considering subsets of this data set in terms of attributes. To be more precise, we have created five additional data sets by randomly choosing 1000, 2000, 4000, 6000, and 8000 out of the original 10,000 attributes. We use these sets to evaluate our proposed method as discussed in Sect. 6.2 and refer to them as Amazon1000, Amazon2000, ..., Amazon10,000 in the following.

6.2 Evaluation metrics

To evaluate the scalability of the parallel Sp-RST, we consider the standard metrics which are the speedup, the scaleup, and the sizeup from literature [42]. These are defined as follows:

- For the speedup, we keep the size of the data set constant (where size is measured by the number of features, i.e., we use the original data set with 10,000 features) and increase the number of nodes. For a system with m nodes, the speedup is defined as:

  $$\begin{aligned} \text {Speedup(m)} = \frac{\text {runtime on one node}}{\text {runtime on } m \text { nodes}} \end{aligned}$$

  An ideal parallel algorithm has linear speedup: The algorithm using m nodes solves the problem in the order of m times faster than the same algorithm using a single node. However, this is difficult to achieve in practice due to startup and communication cost as well as interference and skew [42] which may lead to a sub-linear speedup.

- The sizeup keeps the number of nodes constant and measures how much the runtime increases as the data set is increased by a factor of m:

  $$\begin{aligned} \text {Sizeup(m)} = \frac{\text {runtime for data set of size } m \cdot s}{\text {runtime for baseline data set of size } s} \end{aligned}$$

  To measure the sizeup, we use the smaller databases described in Sect. 6.1. We use 1000 features as a baseline and consider 2000, 4000, 6000, 8000, and 10,000 features, respectively. A parallel algorithm with a linear sizeup has a very good sizeup performance: Considering a problem that is m times larger than a baseline problem, the algorithm requires in the order of m times more runtime for the larger problem.

- The scaleup evaluates the ability to increase the number of nodes and the size of the data set simultaneously:

  $$\begin{aligned} \text {Scaleup(m)} = \frac{\text {runtime for data set of size } s \text { on 1 node}}{\text {runtime for data set of size } s \cdot m \text { on } m \text { nodes}} \end{aligned}$$

  Again, we use the sub-data set with 1000 features as a baseline. Here, a scaleup of 1 implies ‘linear’ scaleup, which similarly to linear speedup is difficult to achieve.
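The three ratios can be computed directly from measured runtimes. The sketch below only restates the definitions; the function names and the runtimes in the usage lines are made-up values for illustration and are not measurements from our experiments.

```python
def speedup(runtime_one_node, runtime_m_nodes):
    """Same data set, one node versus m nodes; m is the ideal (linear) value."""
    return runtime_one_node / runtime_m_nodes


def sizeup(runtime_scaled_data, runtime_baseline_data):
    """Same number of nodes, data set m times larger; m means linear sizeup."""
    return runtime_scaled_data / runtime_baseline_data


def scaleup(runtime_baseline_one_node, runtime_scaled_m_nodes):
    """Data set and nodes both scaled by m; 1 is the ideal (linear) value."""
    return runtime_baseline_one_node / runtime_scaled_m_nodes


# Hypothetical runtimes in seconds.
print(speedup(1200.0, 400.0))   # 3.0
print(sizeup(900.0, 300.0))     # 3.0
print(scaleup(300.0, 375.0))    # below 1, i.e., sub-linear scaleup
```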

As previously highlighted in Sect. 2, a preliminary version of our proposed solution was introduced in [8] as an attempt to deal with feature selection in the big data context. Yet, let us recall that in [8], both of the sizeup and scaleup were measured based on the number of features per partition, and hence, they are based on a modified definition of the standard metrics which are detailed above. However, we think that using the overall number of attributes is a much more natural setup as it will give insights into the performance depending on the input data set rather than the partitions, i.e., the proper definitions of the metrics are adopted in this paper.

To demonstrate that our distributed Sp-RST solution performs its feature selection task well without sacrificing performance, we perform model evaluation using a Naive Bayes and a random forest classifier. For the evaluation, we use the standard measures which are the precision, the recall, the accuracy and F1 score as well as the runtime (measured in seconds), to compare the quality of the feature set selected by Sp-RST with other feature selection methods as described in Sect. 6.3. The metrics definitions are as follows (where TP: True positive, TN: True negative, FP: False positive, and FN: False negative):

- Precision: measures the ratio of correctly predicted positive observations to the total predicted positive observations, and is defined as:

$$\begin{aligned} \text{Precision} = \frac{\text{TP}}{\text{TP} + \text{FP}} \end{aligned}$$

- Recall: measures the ratio of correctly predicted positive observations to all observations in the actual positive class, and is defined as:

$$\begin{aligned} \text{Recall} = \frac{\text{TP}}{\text{TP} + \text{FN}} \end{aligned}$$

- Accuracy: measures the ratio of correctly predicted observations to the total observations, and is defined as follows:

$$\begin{aligned} \text{Accuracy} = \frac{\text{TP} + \text{TN}}{\text{TP} + \text{FN} + \text{TN} + \text{FP}} \end{aligned}$$

- F1 score: is the harmonic mean of Precision and Recall, and is defined as follows:

$$\begin{aligned} \text{F1 score} = 2 \cdot \frac{\text{Recall} \cdot \text{Precision}}{\text{Recall} + \text{Precision}} \end{aligned}$$
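The four definitions above can be checked on a small example. The following is a minimal sketch; the function name `classification_metrics` and the counts are illustrative assumptions and not results from our experiments.

```python
def classification_metrics(tp, tn, fp, fn):
    """Compute precision, recall, accuracy and F1 from confusion-matrix counts."""
    # Guard against empty denominators (e.g., no positive predictions at all).
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"precision": precision, "recall": recall,
            "accuracy": accuracy, "f1": f1}


# Hypothetical counts, chosen only to illustrate the formulas.
m = classification_metrics(tp=40, tn=45, fp=10, fn=5)
```

With these counts, precision is 40/50, recall is 40/45, accuracy is 85/100, and the F1 score equals 2TP/(2TP + FP + FN) = 80/95.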

We remark that Sp-RST is a stochastic algorithm in which randomization is applied twice: first when partitioning the data set into m data blocks, and second when selecting one reduct among the generated set (family) of reducts. To diminish the effect of the first randomization, we perform several iterations of the main part of the algorithm (Algorithm 1) and keep only the attributes that are selected in all iterations. The second randomization is justified by the fundamentals of the theory of rough sets as presented in Sect. 3. We therefore analyze in depth the stability of the selected attribute sets by performing several runs of Algorithm 1, and report averages and standard deviations whenever appropriate.
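The interplay of the two randomization steps and the intersection over iterations can be sketched as follows. This is only an outline under stated assumptions, not the Scala/Spark implementation: `partition_features`, `stable_selection`, and in particular `toy_select_reduct` (a stand-in for the rough set reduct computation) are hypothetical names introduced for illustration.

```python
import random


def partition_features(features, block_size, rng):
    """First randomization: shuffle the features and cut them into blocks."""
    shuffled = list(features)
    rng.shuffle(shuffled)
    return [shuffled[i:i + block_size]
            for i in range(0, len(shuffled), block_size)]


def stable_selection(features, block_size, n_iterations, select_reduct, seed=0):
    """Keep only the features selected in every iteration."""
    rng = random.Random(seed)
    kept = set(features)
    for _ in range(n_iterations):
        selected = set()
        for block in partition_features(features, block_size, rng):
            # Second randomization happens inside select_reduct, which
            # picks one reduct for the block (placeholder, see below).
            selected |= set(select_reduct(block, rng))
        kept &= selected  # intersection across iterations
    return kept


# Toy stand-in for the reduct computation (NOT rough set theory):
# always keeps the hypothetical "core" features and one random other feature.
CORE = {0, 3, 7}


def toy_select_reduct(block, rng):
    chosen = [f for f in block if f in CORE]
    others = [f for f in block if f not in CORE]
    if others:
        chosen.append(rng.choice(others))
    return chosen


result = stable_selection(range(12), block_size=4, n_iterations=10,
                          select_reduct=toy_select_reduct)
```

In this toy setting, the features in `CORE` survive every iteration by construction, whereas the randomly chosen features tend to drop out as the number of iterations grows.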

To investigate the significance of any noticed variation in the classification performance when random forest and Naive Bayes are applied to the initial data set and to the reduced set generated by Sp-RST and other feature selection techniques, we perform Wilcoxon signed rank tests with Bonferroni correction.
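As an illustration of the correction step, the sketch below applies a Bonferroni adjustment to a list of p-values. The p-values, the significance level of 0.05, and the function name `bonferroni` are assumptions made for this example; in practice, the p-values would come from a Wilcoxon signed rank routine such as `scipy.stats.wilcoxon`.

```python
def bonferroni(p_values, alpha=0.05):
    """Return (adjusted p-value, is_significant) for each comparison.

    Each p-value is multiplied by the number of comparisons and capped at 1.
    """
    n = len(p_values)
    adjusted = [min(1.0, p * n) for p in p_values]
    return [(p_adj, p_adj < alpha) for p_adj in adjusted]


# Hypothetical p-values for three pairwise comparisons (illustration only).
corrected = bonferroni([0.001, 0.02, 0.04])
```

Here only the first comparison remains significant after correction, since the other two adjusted p-values exceed the assumed level of 0.05.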

6.3 Experimental environment

In the following, we conduct a detailed study of various parameters of Sp-RST to analyze how they affect the system’s runtime as well as the stability of the attribute selection task. We then apply a Naive Bayes and a random forest classifier to the original data set and to the reduced data sets produced by Sp-RST and other feature selection techniques. We use the scikit-learn random forest implementation (Footnote 13) with the following parameters: \(\text{n\_estimators}=1000\), \(\text{n\_jobs}=-1\), and \(\text{oob\_score}=\text{True}\). A stratified 10-fold cross-validator (Footnote 14) is used for all our experiments. Moreover, we use the Naive Bayes implementation from Weka 3.8.2 (Footnote 15).
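To make the idea of stratification concrete, the following standard-library sketch assigns instances to folds so that each fold preserves the class proportions as closely as possible. It is an illustrative assumption about how such a split can be built and is not the scikit-learn implementation; the name `stratified_folds` and the labels are hypothetical.

```python
import random
from collections import defaultdict


def stratified_folds(labels, n_folds=10, seed=0):
    """Assign instance indices to folds while preserving class proportions."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    folds = [[] for _ in range(n_folds)]
    offset = 0
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal the instances of this class to the folds in round-robin order,
        # continuing where the previous class stopped to balance fold sizes.
        for pos, idx in enumerate(indices):
            folds[(offset + pos) % n_folds].append(idx)
        offset += len(indices)
    return folds


# Hypothetical labels: 30 instances of class 0 and 20 of class 1.
labels = [0] * 30 + [1] * 20
folds = stratified_folds(labels, n_folds=10)
```

Each fold serves once as the test set while the remaining nine folds form the training set.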

The Sp-RST algorithm is implemented in Scala 2.11 within the Spark 2.1.1 framework. Our experiments for Sp-RST are performed on Grid5000 (Footnote 16), a large-scale testbed for experiment-driven research. Within this testbed, we used dual 8-core Intel Xeon E5-2630v3 CPUs and 128 GB memory. Since the study does not require a scalable version of the two classifiers, the classification experiments are run on a standard laptop configuration with an Intel(R) Core(TM) i7-7500U CPU, 16 GB RAM, and 64-bit Windows 10.

Preliminary results revealed that a maximum of 10 features per partition is the limit that can be processed by Sp-RST. We therefore perform experiments using 4, 5, 8, and 10 features per partition in Algorithm 1. We run all settings on 1, 2, 4, 8, 16, and 32 nodes on Grid5000. When considering scalability, we set the number of iterations in Algorithm 1 to 10 (based on preliminary experiments). However, we perform an additional analysis of the feature selection process across different iterations in Sect. 7.1.
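The trade-off behind these settings can be illustrated with a short calculation for a data set with 10,000 features. The sketch assumes, for illustration only, that the cost per partition is proportional to the number of non-empty attribute subsets (2^k - 1 for k features); the function name `partition_stats` is hypothetical.

```python
import math


def partition_stats(n_features, features_per_partition):
    """Return (number of partitions, non-empty attribute subsets per partition)."""
    n_partitions = math.ceil(n_features / features_per_partition)
    # Assumption: every non-empty subset of a partition's features is a candidate.
    subsets_per_partition = 2 ** features_per_partition - 1
    return n_partitions, subsets_per_partition


stats = {k: partition_stats(10_000, k) for k in (4, 5, 8, 10)}
# stats == {4: (2500, 15), 5: (2000, 31), 8: (1250, 255), 10: (1000, 1023)}
```

Going from 4 to 10 features per partition reduces the number of partitions by a factor of 2.5, while the number of candidate subsets per partition grows by a factor of roughly 68.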

To ensure a fair comparison, we restrict our comparison with other feature selection methods to filter techniques. These methods include both attribute and subset evaluation methods. For subset evaluation, we use a ‘Best First’ greedy search method. For attribute evaluation, we need to provide either a threshold or a number of features to be selected. We set the number of features to be selected to a value comparable with Sp-RST, i.e., the average number of features selected for each parameter setting considered, and additionally use 0 as a threshold. We determine the sets of features selected by these methods and then perform model evaluation with a Naive Bayes and a random forest classifier as discussed previously.
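The two ways of turning attribute scores into a feature set can be sketched as follows. The scores, the feature names, and the function names are hypothetical, and the strict comparison against the threshold is an assumption of this sketch rather than a statement about the Weka or Smile implementations.

```python
def select_top_k(scores, k):
    """Keep the k attributes with the highest evaluation score."""
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:k]


def select_above_threshold(scores, threshold=0.0):
    """Keep every attribute whose score is strictly above the threshold."""
    return [name for name, score in scores.items() if score > threshold]


# Hypothetical attribute scores (e.g., information gain), illustration only.
scores = {"a": 0.42, "b": 0.0, "c": 0.17, "d": 0.08}

top_two = select_top_k(scores, k=2)
above_zero = select_above_threshold(scores)
```

With a fixed number of features, the size of the selected set is controlled directly, whereas the threshold of 0 only removes attributes that carry no measurable information about the class.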

Subset selection:

- CfsSubsetEval, which considers the individual predictive ability of each feature along with the degree of redundancy between them.

- ConsistencySubsetEval, which considers the level of consistency in the class values when the training instances are projected onto the subset of attributes.

Attribute selection:

- Sum squares ratio, which measures the ratio of between-groups to within-groups sum of squares.

- Chi squared, which computes the value of the chi-squared statistic with respect to the class.

- Gain ratio, which measures the gain ratio with respect to the class.

- Information gain, which measures the information gain with respect to the class.

- Correlation, which measures the (Pearson’s) correlation between the attribute and the class.

- CV, which first creates a ranking of attributes based on the Variation value, then divides them into two groups, and finally uses a Verification method to select the best group.

- ReliefF, which repeatedly samples an instance and considers the value of the given attribute for the nearest instance of the same and different class.

- Significance, which computes the probabilistic significance as a two-way function (attribute-classes and classes-attribute association).

- Symmetrical uncertainty, which evaluates the symmetrical uncertainty with respect to the class.

For sum squares ratio, we have used the version implemented in Smile (Footnote 17), while for the other three techniques, we have used the implementation provided in Weka 3.8.2 (Footnote 18).

7 Discussion of results

We first examine how the feature selection process behaves over several iterations, since the number of iterations is a crucial parameter of Sp-RST (Sect. 7.1). Afterwards, we analyze the stability (Sect. 7.2) and scalability (Sect. 7.3) of our proposed feature selection approach. Finally, we compare its performance with other state-of-the-art feature selection techniques (Sect. 7.4).

7.1 Number of iterations in Sp-RST

We first have a closer look at one of the parameters of Sp-RST, i.e., the number of iterations (N in Algorithm 1). We perform four independent runs (or repetitions) of Sp-RST with 1, 2, ..., 20 iterations, and for each iteration record the selected features as well as the elapsed time. We plot the average and standard deviation of the number of remaining features after each iteration over these four runs and for different parameter settings in Fig. 4.

We also perform runs of Sp-RST with 1, 2, ..., 20 iterations on different numbers of nodes, i.e., 1, 4, 8, and 16 nodes. The corresponding runtimes split by the number of the nodes are shown in Fig. 5. Here, the average and standard deviation are taken across all runs with the same number of iterations.

From Fig. 4, we observe that, independently of the number of iterations, there is a clear ordering with respect to the number of selected features: the smaller the number of features per partition, the fewer features are selected by Sp-RST. The very small standard deviation (\(< 40\)) is hardly visible in the graphs and demonstrates the stability of Sp-RST with respect to the number of features selected in an iteration. Recall that Sp-RST returns the intersection of the reducts from all iterations performed. Thus, the number of selected features can only decrease or stay the same as the number of iterations grows.

As discussed before, the runtime of the rough set component of our method grows exponentially with the number of features per partition. Thus, the runtime behavior in Fig. 5 is not surprising and demonstrates that the number of features per partition should not grow too large. We also see that the runtime per iteration is quite stable, so that the overall runtime grows linearly with the number of iterations. Based on these experiments, we have decided to use a medium number of iterations for the remainder of our analysis, namely 10.

7.2 Stability of feature selection

To validate the stability of the feature selection of Sp-RST, we have a closer look at the concrete features selected. We perform two sets of experiments. First, we look at the features selected by Sp-RST with a single iteration (\(N=1\)). Second, we consider our standard parameter setting of 10 iterations (\(N=10\)) and perform several independent runs of Algorithm 1. We particularly look at two extreme cases: the number of features that are always selected and the number of features that are never selected over a given number of these runs or iterations.

7.2.1 Iterations

We first consider features selected during a single iteration (\(N=1\)). We perform 10, 20, 40, and 80 independent runs of Sp-RST with 1 iteration and for each run record the set of selected features. For each feature, we count the number of times it has been selected and depict the results in Fig. 6. Table 9 additionally shows the mean, standard deviation (SD), min and max of the number of features selected in a single iteration (over 80 runs performed independently).
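The counting procedure described above can be sketched as follows. The function name `selection_frequencies` and the three toy runs are illustrative assumptions; they are not taken from our experimental data.

```python
from collections import Counter


def selection_frequencies(runs, all_features):
    """Count how often each feature is selected over a list of runs."""
    counts = Counter(f for selected in runs for f in set(selected))
    always = {f for f in all_features if counts[f] == len(runs)}
    never = {f for f in all_features if counts[f] == 0}
    return counts, always, never


# Three hypothetical runs over features 0..6 (illustration only).
runs = [{1, 2, 3}, {1, 3, 4}, {1, 3, 5}]
counts, always, never = selection_frequencies(runs, range(7))
# always == {1, 3}, never == {0, 6}
```

The two extreme cases, features that are always selected and features that are never selected, are exactly the sets `always` and `never` returned here.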

From Fig. 6 and Table 9, we see that the number of features selected in a single iteration is very stable. However, depending on the number of features per partition, only about 45% (4, 5 features), 55% (8 features), and 70% (10 features) of these features are selected in all 10 iterations, and already for 20 iterations all features will have been selected at least once. The latter also holds for 40 and 80 iterations. This demonstrates that the set of ‘core’ features (defined in Sect. 3.2) that are reliably selected is much smaller than the set of features selected in a single iteration. This observation is the main motivation for using several iterations and only returning features that are always selected as the result of Sp-RST.

7.2.2 Complete algorithm

To confirm that using the intersection of several iterations improves the stability of the feature selection, we now consider the complete algorithm with 10 iterations. We run 6 independent repetitions of Sp-RST with 10 iterations and plot the number of features selected 0, 1, ..., 6 times in these 6 runs in Fig. 7.

From Fig. 7, it is obvious that most features are either always or never selected. This demonstrates that Sp-RST reliably selects the same features, or in other words is able to identify the most relevant (always selected) and least relevant (never selected) features. As before, we provide the number of features selected in a run of Sp-RST in Table 10 and observe that the number of features selected is again very stable.

We remark that it is not a weakness of our proposed method that the actual features selected differ from one run to another. As discussed earlier in Sect. 3.2, there can be more than one reduct, i.e., a family of reducts, and selecting an arbitrary one among these is appropriate. Only core features appear in every reduct of the generated family. The results presented in Fig. 7 support that our method is able to identify core features as well as features that are not in any of the resulting reducts.

7.3 Scalability

We measure the scalability of Sp-RST based on its speedup (Fig. 8), sizeup (Fig. 10) and scaleup (Fig. 12) as discussed in Sect. 6.2 and additionally plot the measured runtimes by the number of nodes and the different data sets (Figs. 9, 11). We see that the runtime increases considerably with the number of features per partition and decreases with the number of nodes used (Fig. 9). However, in the latter case, increasing the number of nodes from 1 to 2 or 4 has a much larger effect than increasing it further (Fig. 9). Moreover, the runtime increases with the size of the database (Fig. 11), where size is measured in terms of the number of features.

Fig. 8 Speedup for the six data sets discussed in Sect. 6.1

Fig. 9 Runtimes (in seconds) for the six data sets discussed in Sect. 6.1

In terms of speedup (Fig. 8), we see that the speedup for our smallest data set (Amazon1000) with 8 or 10 features per partition is approximately linear. However, in general and in particular for larger databases, the speedup is sub-linear. It is better for settings with more features per partition (with 8 and 10 features always being the best parameter settings). This implies that having more partitions is not beneficial with respect to the parallel running time, even though the runtime for a single partition grows exponentially in the number of its features. This indicates that settings with 4 and 5 features per partition produce sub-databases that are too small and therefore incur an unnecessarily large overhead due to high communication cost.

Figure 10 shows that Sp-RST has a very good sizeup performance; however, the more nodes are used, the more important the parameterization becomes. We see that 4 or 5 features per partition generally yield better sizeup than 8 or 10 features. The settings with 4 and 5 features show very similar sizeup performance, as do the settings with 8 and 10 features; for the latter, the sizeup deteriorates for \(m \ge 6\). This is in stark contrast to the speedup results discussed earlier, where we observed better speedups for more features per partition. Thus, depending on the size of the database in terms of features and the number of nodes available, different parameter settings will be more appropriate.

Figure 12 shows the scaleup for 1, 2, 4, and 8 nodes and the corresponding data sets Amazon1000, Amazon2000, Amazon4000, and Amazon8000. For up to 4 nodes, the scaleup is close to 1 for most parameter settings; however, it drops below 1 for 8 nodes. Therefore, we can conclude that using a very large number of nodes does not necessarily yield much better runtimes while we obtain large improvements with moderate parallelization. This is in line with the speedup results in Fig. 8 where the speedup was close to linear for most parameter settings for up to 4 or 8 nodes and only deteriorates quickly with more nodes.
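For reference, the three metrics discussed in this section can be computed from measured runtimes as sketched below. The formulas follow the usual definitions of speedup, sizeup and scaleup, which we assume here to coincide with those given in Sect. 6.2; the function names and all runtimes are hypothetical.

```python
def speedup(t_one_node, t_m_nodes):
    """Same data set: runtime on 1 node divided by runtime on m nodes."""
    return t_one_node / t_m_nodes


def sizeup(t_base_data, t_scaled_data):
    """Same number of nodes: runtime on the larger data set divided by
    runtime on the base data set."""
    return t_scaled_data / t_base_data


def scaleup(t_base_one_node, t_scaled_m_nodes):
    """Runtime on 1 node with the base data set divided by runtime on
    m nodes with an m-times larger data set (ideal value: 1)."""
    return t_base_one_node / t_scaled_m_nodes


# Hypothetical runtimes in seconds (illustration only).
s = speedup(t_one_node=800.0, t_m_nodes=250.0)
z = sizeup(t_base_data=100.0, t_scaled_data=450.0)
c = scaleup(t_base_one_node=100.0, t_scaled_m_nodes=125.0)
```

A speedup equal to the number of nodes and a scaleup of 1 correspond to ideal parallel behavior; values below these indicate overhead, for example due to communication cost.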

7.4 Comparison with other feature selection techniques

To demonstrate that our method is suitable with respect to classification, i.e., that Sp-RST performs its feature selection task well without sacrificing performance, we perform model evaluation and investigate the influence of Sp-RST on the classification performance of a Naive Bayes (Sect. 7.4.1) and a random forest (Sect. 7.4.2) classifier. We compare the results with those obtained on the original data set with 10,000 features and with other feature selection techniques (see Sect. 6.3).