Abstract

A common approach to controller synthesis for hybrid systems is to first establish a discrete-event abstraction and then to use methods from supervisory control theory to synthesise a controller. In this paper, we consider behavioural abstractions of hybrid systems with a prescribed discrete-event input/output interface. We discuss a family of abstractions based on so-called experiments, which consist of samples from the external behaviour of the hybrid system. The special feature of our setting is that the accuracy of the abstraction can be carefully adapted to suit the particular control problem at hand. Technically, this is implemented as an iteration in which we alternate trial control synthesis with abstraction refinement. While localising refinement to where it is intuitively needed, we can still formally establish that the overall iteration will solve the control problem, provided that an abstraction-based solution exists at all.


1 Introduction

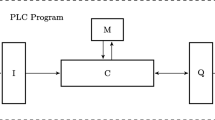

The analysis and the control of hybrid systems have become an important subject in modern control theory; see, e.g., Alur et al. (2000) and Tabuada (2009) and the references cited therein. A common approach is to construct a finite-state abstraction of the hybrid system under consideration and then to apply methods known from the domain of discrete-event systems, most notably model checking, reactive synthesis, or supervisory control.

A well-established framework to obtain a finite-state abstraction is to strategically construct a finite partition or a finite cover on the continuous state space and to thereby define symbolic dynamics associated with the hybrid system; see, e.g., Stiver et al. (1995), Tabuada (2009), Reissig et al. (2017), Pola and Tabuada (2009), Gol et al. (2014), Zamani et al. (2012), and Liu and Ozay (2016). For controller synthesis, this approach is particularly suited when the design of a discrete-event interface is considered part of the synthesis problem. In contrast, if the hybrid plant is equipped with a prescribed discrete-event interface, so-called behavioural abstractions are an adequate alternative. In this approach, one seeks to derive a finite-state abstraction directly in terms of the external signals. This is the situation we study in the present paper. Footnote 1

The behavioural abstractions proposed by Moor and Raisch (1999) and Moor et al. (2002) are based on the notion of l-completeness from Willems’ behavioural systems theory; see, e.g., Willems (1991). By definition, a discrete-time system is l-complete if its infinite-time behaviour can be exactly recovered from all length l samples, \(l\in \mathbb {N}_{0}\), taken from all infinite-length signals. For a system which does not exhibit this property, the strongest l-complete approximation is then introduced as the tightest behavioural over-approximation that is l-complete. In the following, whenever clear from the context, we simply refer to l-complete approximations when we mean strongest l-complete approximations. An l-complete approximation can be obtained by exhaustively taking samples of length l from the original behaviour and generating the abstraction by superposition of these samples. For the control of hybrid systems with discrete-valued input- and output-signals, l-complete approximations can be used to synthesise controllers that address inclusion-type specifications in these signals. In this situation, the finite external signal range of the hybrid system leads to a finite-state realisation of the l-complete approximation and a variant of Ramadge and Wonham’s supervisory control theory (Ramadge and Wonham 1987, 1989) is subsequently applied to synthesise a supervisory controller. It is shown by Moor and Raisch (1999) and Moor et al. (2002) that if the supervisor suitably restricts the behaviour of the l-complete approximation, it also accomplishes the control objective for the underlying hybrid system. The applicability of this approach has been demonstrated with case studies in the area of process engineering, including the start-up of a distillation column (Moor and Raisch 2002). 
Recent methodological extensions have been reported by Schmuck and Raisch (2014), Park and Raisch (2015), and Moor and Götz (2018) to address time-variant systems and partial observation.

The existence of an appropriate supervisor depends on the approximation accuracy, namely, if the abstract model is too coarse, no supervisor may exist that meets the specification. This leads to an iteration of trial synthesis and abstraction refinement, until either a solution to the control problem is established or computational resources are exhausted. Considering l-complete approximations, the construction of a finer abstraction effectively amounts to incrementing the length l of samples taken from the original hybrid system. This can be done uniformly for all samples as, e.g., proposed by Moor and Raisch (1999) or, more efficiently, in a non-uniform way tailored for the particular control problem at hand. For abstractions by symbolic dynamics, Clarke et al. (2003) introduce counterexample guided refinement for the verification of hybrid systems, with a further development to address synthesis by Stursberg (2006). For behavioural abstractions, Moor et al. (2006) introduce the notion of an experiment as a set of non-uniform length samples taken from the original behaviour, with a subsequent discussion that leads to abstractions obtained from experiments. Technically, the resulting abstractions are still l-complete and, hence, they can be safely utilised in an abstraction-based design.

In the present paper, we further develop abstraction-based synthesis by experiments on behaviours. While the study by Moor et al. (2006) is entirely set within Willems’ behavioural systems theory, we now make use of explicit state machine realisations reported by Moor and Götz (2018). By a more detailed discussion, we gain some relevant benefits. First, we can literally refer to supervisory control of sequential behaviours with a synthesis algorithm given by Thistle and Wonham (1994a). As a consequence, we can address more general liveness specifications with eventual task completion as a prototypical example. This extends the results from Moor et al. (2006), which are restricted to l-complete safety specifications. Second, the technically involved temporal decomposition of the control problem used by Moor et al. (2006) to guide abstraction refinement can now be replaced by the controllability prefix introduced by Thistle and Wonham (1994b). The latter is an intermediate result of the synthesis procedure and characterises winning states, from which on the control objective can be accomplished. In the case that the synthesis procedure fails and, thus, a refinement of the abstraction is required, intuitively, such a refinement does not need to address any winning states. Likewise, we can identify failing states, from which on no supervisor can possibly satisfy the specification. Again intuitively, this status is retained under refinement and, thus, no refinement should address the behaviour after a failing state. For the iteration of trial controller synthesis and abstraction refinement, it is therefore proposed to refine the experiment only by addressing states that are neither winning nor failing. Since this iteration intentionally generates only specific experiments, it may fail to generate a particular experiment for which controller synthesis would succeed. Here, our main technical result, Theorem 17, guarantees that a successful experiment will be generated provided that one exists.

A predecessor of this paper has been presented at the Workshop on Discrete Event Systems; see Yang et al. (2018). The present version has been extended (a) to address iterative refinements in contrast to a single local refinement, (b) to allow for specifications with a Büchi acceptance condition, and (c) to include a formal proof of our main technical result. The remainder of the paper is organised as follows. After introducing elementary notation in Section 2, we summarise the concept of experiments and the behavioural abstractions obtained therefrom in Section 3. Realisations of the hybrid plant and its abstractions are discussed in Section 4. In Section 5 we present the control problem under consideration and derive an abstraction-based solution procedure. Finally, we discuss the proposed abstraction refinement scheme in Section 6. A simple example is used throughout the entire discussion, illustrating the suggested ideas and demonstrating the applicability of the proposed strategy.

2 Notation

We denote the positive, respectively non-negative, integers by \(\mathbb {N}\), respectively \(\mathbb {N}_{0}\). The cardinality of a finite set A is denoted by \(|A|\in \mathbb {N}_{0}\).

Given a set W, referred to as a signal range, and \(l \in \mathbb {N}\), we denote by \(W^{l}:=\{\langle w_{1},\ldots ,w_{l}\rangle \,|\,\forall k, 1\le k\le l\colon w_{k}\in W\}\) the set of sequences over W of length l and we let \(W^{+}:= \cup \{W^{l}|l\in \mathbb {N}\}\). Introducing the empty sequence \(\epsilon \notin W\), we formally define \(W^{0}:=\{\epsilon \}\) and write \(W^{*}:=\cup \{W^{l}|l\in \mathbb {N}_{0}\}=\{\epsilon \}\cup W^{+}\) for the set of finite sequences over W. For a sequence \(s\in W^{l}\subseteq W^{*}\), its length l is denoted |s|. The set of all countably infinite sequences over W is denoted \(W^{\mathbb {N}_{0}}\), with \(\mathbf {w}\in W^{\mathbb {N}_{0}}\) commonly interpreted as a discrete-time signal \(\mathbf {w}:\mathbb {N}_{0}\rightarrow W\). Subsets \(S\subseteq W^{*}\) are referred to as ∗-languages, or languages of finite words, in contrast to ω-languages \({\mathscr{B}}\subseteq W^{\mathbb {N}_{0}}\), or languages of infinite words.

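For two finite sequences \(s=\langle w_{1},\ldots ,w_{l}\rangle \in W^{l}\) and \(r=\langle u_{1},\ldots ,u_{n}\rangle \in W^{n}\), the concatenation is defined by \(\langle s,r\rangle :=\langle w_{1},\ldots ,w_{l},u_{1},\ldots ,u_{n}\rangle \in W^{l+n}\). For the empty sequence, let \(\langle s,\epsilon \rangle :=s=:\langle \epsilon ,s\rangle \). The concatenation of the finite sequence \(s=\langle w_{1},\ldots ,w_{l}\rangle \in W^{l}\) with a signal \(\mathbf {w}\in W^{\mathbb {N}_{0}}\) is denoted \(\mathbf {v}=\langle s,\mathbf {w}\rangle \in W^{\mathbb {N}_{0}}\), with \(\mathbf {v}(k)=w_{k+1}\) for 0 ≤ k < l and \(\mathbf {v}(k)=\mathbf {w}(k-l)\) for k ≥ l. Again, for the empty sequence let \(\langle \epsilon ,\mathbf {w}\rangle :=\mathbf {w}\). For notational convenience, we write 〈⋅,⋅,⋅〉 for 〈⋅,〈⋅,⋅〉〉.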

A sequence \(r\in W^{*}\) is a prefix of \(s\in W^{*}\) if there exists \(t\in W^{*}\) such that 〈r,t〉 = s; we then write r ≤ s. If, in addition, r ≠ s, we say that r is a strict prefix of s and write r < s. Likewise, \(r\in W^{*}\) is a prefix of a signal \(\mathbf {w}\in W^{\mathbb {N}_{0}}\) if there exists \(\mathbf {v}\in W^{\mathbb {N}_{0}}\) such that \(\langle r,\mathbf {v}\rangle =\mathbf {w}\); we then write \(r<\mathbf {w}\). The set of all prefixes of a given sequence \(s\in W^{*}\) or a given signal \(\mathbf {w}\in W^{\mathbb {N}_{0}}\) is denoted \(\mathop {\text {pre}} s\subseteq W^{*}\) or \(\mathop {\text {pre}} \mathbf {w}\subseteq W^{*}\), respectively. A sequence \(t\in W^{*}\) is a suffix of \(s\in W^{*}\) if there exists \(r\in W^{*}\) such that 〈r,t〉 = s.

The left-shift operator \(\sigma ^{l}\), \(l\in \mathbb {N}_{0}\), is defined for signals \(\mathbf {w}\in W^{\mathbb {N}_{0}}\) by \(\sigma ^{l} \mathbf {w}\in W^{\mathbb {N}_{0}}\) with \((\sigma ^{l}\mathbf {w})(k):=\mathbf {w}(k+l)\) for all \(k\in \mathbb {N}_{0}\), and we let \(\sigma :=\sigma ^{1}\). For a signal \(\mathbf {w}\in W^{\mathbb {N}_{0}}\), the restriction to a finite integer interval \(D\subseteq \mathbb {N}_{0}\) is denoted \(\mathbf {w}|_{D}\) with, e.g., \(D=[k_{1},k_{2}):=\{k\in \mathbb {N}_{0}|k_{1}\le k < k_{2}\}\) and left-open and/or right-closed intervals defined likewise. When taking restrictions, we drop absolute time and reinterpret \(\mathbf {w}|_{D}\) as a finite sequence, i.e., we identify \(\mathbf {w}|_{[k_{1},k_{2})}\) with \(\langle \mathbf {w}(k_{1}),\ldots ,\mathbf {w}(k_{2}-1) \rangle \in W^{k_{2}-k_{1}}\).

Taking point-wise images, all operators and maps in this paper are identified with their respective extension to set-valued arguments; e.g., we write \(\sigma {\mathscr{B}}\) for the image \(\{\sigma \mathbf {w}|\mathbf {w}\in {\mathscr{B}}\}\) of the ω-language \({\mathscr{B}}\subseteq W^{\mathbb {N}_{0}}\) under the operator σ with domain \(W^{\mathbb {N}_{0}}\), and, likewise, \(\mathop {\text {pre}}{\mathscr{B}}\) for the image \(\{\mathop {\text {pre}}\mathbf {w}|\mathbf {w}\in {\mathscr{B}}\}\) of \({\mathscr{B}}\) under the operator \(\mathop {\text {pre}}\).

3 Behavioural abstractions

We present a general scheme of system abstraction that allows for a guided refinement, and we do so within Willems’ behavioural systems theory. In this framework, a dynamical system is defined as a triple \({\Sigma }=(T,W,{\mathscr{B}})\), where T is the time axis, W is the external signal range, and \({\mathscr{B}}\subseteq W^{T}:=\{\mathbf {w}|\mathbf {w}: T\rightarrow W\}\) is the behaviour, i.e., the set of all external signals that the system may generate. It is then proposed to discuss and to categorise dynamical systems in terms of their behaviours. For the present paper, we focus attention on time-invariant systems with an external discrete-event interface. Technically, we consider the discrete time axis \(T=\mathbb {N}_{0}\) and the finite external signal range W, \(|W|\in \mathbb {N}\), and we interpret ω-languages \({\mathscr{B}}\subseteq W^{\mathbb {N}_{0}}\) as behaviours. Regarding time invariance, we refer to the following definition from Willems (1991).

Definition 1

A behaviour \({\mathscr{B}}\subseteq W^{\mathbb {N}_{0}}\) is time invariant if \(\sigma {\mathscr{B}}\subseteq {\mathscr{B}}\). A system \({\Sigma }=(\mathbb {N}_{0},W,{\mathscr{B}})\) is time invariant if its behaviour \({\mathscr{B}}\) is time invariant.

Given a system \({\Sigma }=(\mathbb {N}_{0},W,{\mathscr{B}})\), a behavioural abstraction is a system \({\Sigma }^{\prime }=(\mathbb {N}_{0},W,{\mathscr{B}}^{\prime })\) with \({\mathscr{B}}\subseteq {\mathscr{B}}^{\prime }\), i.e., provided that the original system Σ accounts for all possible trajectories the actual phenomenon modelled by Σ can generate, then so does the abstraction \({\Sigma }^{\prime }\). Behavioural abstractions are commonly used for the verification and the synthesis of safety properties, with optional liveness properties being addressed by additional structural requirements. Considering a time invariant system, we ask for a time invariant system abstraction. We refer to Moor et al. (2006) for the following notion of an experiment which we will use to construct a rich family of time-invariant behavioural abstractions.

Definition 2

An experiment over W is a ∗-language \(S\subseteq W^{*}\). If there exists a uniform upper bound on the length of all sequences in S, we say that S is of bounded length. Moreover, \(S\subseteq W^{*}\) is an experiment on a behaviour \({\mathscr{B}}\subseteq W^{\mathbb {N}_{0}}\) if S accounts for each signal from \({\mathscr{B}}\) in terms of a prefix, i.e., if \((\mathop {\text {pre}}\mathbf {w})\cap S\neq \emptyset \) for all \(\mathbf {w}\in {\mathscr{B}}\).

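Given an experiment S on some behaviour \({\mathscr{B}}\), Moor et al. (2006) discuss abstractions \({\mathscr{B}}_{S}\), \({\mathscr{B}}\subseteq {\mathscr{B}}_{S}\), that can be obtained exclusively from S. To this end, we consider the candidate

$$ \mathcal{B}_{S}:=\{\mathbf{w}\in W^{\mathbb{N}_{0}}\,|\,\forall k\in \mathbb{N}_{0}\ \exists l\in \mathbb{N}_{0}\colon \mathbf{w}|_{[k,k+l)}\in S\}, \tag{1} $$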

i.e., \({\mathscr{B}}_{S}\) consists of all those trajectories which at any instance of time continue to evolve on some finite future sequence that matches S; see Fig. 1.

Fig. 1. Experiment S on \({\mathscr{B}}\) with associated abstraction \({\mathscr{B}}_{S}\). Here, the prefixes of behaviours are interpreted as infinite computational trees with the empty string as the root at the very left and progress of discrete time towards the right. In the sketch, the trees are abstractly shown as cones; i.e., \(\mathop {\text {pre}}{\mathscr{B}}\) in dark yellow and \(\mathop {\text {pre}}{\mathscr{B}}_{S}\) in light green. The bounded-length ∗-language S is shown as a dark green stripe. In particular, the sketch indicates that any signal from \({\mathscr{B}}\) must pass S, as required by Definition 2. Regarding the abstraction, the signal \(\mathbf {w}\in {\mathscr{B}}_{S}\) shown in the sketch at every instance of time k must have a finite-length future that matches S; see Eq. 1. This is illustrated by the transparent grey copy of S shifted such that the root matches \(\mathbf {w}(k)\) to indicate that \(\mathbf {w}|_{[k,k+l)}=\mathbf {w}|_{[k,k+l-1]}\in S\)
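The membership condition for \({\mathscr{B}}_{S}\) can be made concrete for eventually periodic signals. The following is a minimal sketch, assuming that the signal is given as a finite transient followed by a non-empty cycle that repeats forever and that S is a finite set of tuples; the names `window` and `in_abstraction` are hypothetical and introduced only for illustration.

```python
from typing import Set, Tuple

Word = Tuple[str, ...]


def window(prefix: Word, cycle: Word, k: int, l: int) -> Word:
    """Return w|[k,k+l) for the signal w = prefix . cycle^omega."""
    def at(i: int) -> str:
        if i < len(prefix):
            return prefix[i]
        return cycle[(i - len(prefix)) % len(cycle)]
    return tuple(at(i) for i in range(k, k + l))


def in_abstraction(prefix: Word, cycle: Word, S: Set[Word]) -> bool:
    """Check whether w = prefix . cycle^omega has, at every time k,
    some finite future w|[k,k+l) that is a sample in S."""
    if not cycle:
        raise ValueError("cycle must be non-empty")
    lengths = {len(s) for s in S}
    # After the transient, the future repeats with period len(cycle),
    # so one full period after the transient covers all time instances.
    horizon = len(prefix) + len(cycle)
    return all(
        any(window(prefix, cycle, k, l) in S for l in lengths)
        for k in range(horizon)
    )


S = {("a", "b"), ("b",)}
print(in_abstraction((), ("a", "b"), S))  # signal (ab)^omega
print(in_abstraction(("a",), ("a",), S))  # signal a^omega
```

The restriction to eventually periodic signals is an assumption of this sketch; it makes the quantification over all time instances finite.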

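It is immediate from the construction that \({\mathscr{B}}_{S}\) is time invariant and that S is an experiment on \({\mathscr{B}}_{S}\). Moreover, for any behaviour \(\tilde {{\mathscr{B}}}\subseteq W^{\mathbb {N}_{0}}\) we have the following implication:

$$ \tilde{\mathcal{B}} \text{ is time invariant and } S \text{ is an experiment on } \tilde{\mathcal{B}} \quad \Longrightarrow \quad \tilde{\mathcal{B}}\subseteq \mathcal{B}_{S}. \tag{2} $$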

It is shown in Moor et al. (2006), Proposition 7, that \({\mathscr{B}}_{S}\) is the unique smallest behaviour for which the above implication holds. Assuming that the original behaviour \({\mathscr{B}}\) is indeed time invariant, \({\mathscr{B}}_{S}\) is the smallest superset of \({\mathscr{B}}\) that can be characterised exclusively in terms of the experiment S. Therefore the system \({\Sigma }_{S}=(\mathbb {N}_{0},W,{\mathscr{B}}_{S})\) is referred to as the behavioural abstraction obtained from S under the assumption of time invariance, or in short as the abstraction obtained from S.

Moor et al. (2006) and Moor and Götz (2018) provide a comprehensive discussion regarding algebraic properties of experiments and the abstractions obtained therefrom. For the present paper, we pragmatically refer to Eq. 1 as the defining equation and point out some technical consequences relevant for the subsequent discussion. It is immediate from Eq. 1 that, regarding the associated abstraction, we may without loss of generality restrict our considerations to prefix-free experiments, i.e., to experiments that satisfy

$$ \forall s,r\in S\colon \quad r\le s\ \Rightarrow \ r=s. \tag{3} $$

A prefix-free experiment S is commonly interpreted as a tree with root \(\epsilon \in \mathop {\text {pre}}S\), nodes \(\mathop {\text {pre}}S\) and leaves S. Likewise, it is not restrictive to assume that the experiment is trim in the sense that every sequence s ∈ S contributes to the abstraction, i.e.,

$$ \forall s\in S\ \exists \mathbf{w}\in \mathcal{B}_{S}\colon \quad s<\mathbf{w}, \tag{4} $$

or, equivalently, \(S\subseteq \mathop {\text {pre}}{\mathscr{B}}_{S}\). Thus, whenever convenient, we may restrict our discussion to trim and prefix-free experiments.
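The reduction to prefix-free experiments can be sketched as follows: a sample that has a strict prefix in S is redundant, since any window matching the longer sample also contains a shorter window matching the prefix. The sketch assumes a finite experiment represented as a set of tuples; the function names are hypothetical. Trimness is not addressed here, since it refers to the abstraction itself.

```python
from typing import Set, Tuple

Word = Tuple[str, ...]


def is_prefix(r: Word, s: Word) -> bool:
    """True if r <= s, i.e. r is a (not necessarily strict) prefix of s."""
    return len(r) <= len(s) and s[:len(r)] == r


def is_prefix_free(S: Set[Word]) -> bool:
    """True if no sample in S is a strict prefix of another sample in S."""
    return not any(r != s and is_prefix(r, s) for r in S for s in S)


def prefix_free_core(S: Set[Word]) -> Set[Word]:
    """Keep exactly those samples that have no strict prefix in S."""
    return {s for s in S if not any(r != s and is_prefix(r, s) for r in S)}


S = {("a",), ("a", "b"), ("b", "a")}
core = prefix_free_core(S)
print(is_prefix_free(S), is_prefix_free(core))
print(sorted(core))
```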

For the special case of the experiment \(S={\mathscr{B}}|_{[0,l]}\) with samples with uniform length l + 1, \(l\in \mathbb {N}_{0}\), the abstraction \({\mathscr{B}}_{S}\) obtained from S amounts to the strongest l-complete approximation proposed by Moor and Raisch (1999) and Moor et al. (2002). The approximation-refinement scheme known from l-complete approximations amounts to incrementing the sample length. This concept of refinement generalises to experiments as follows.

Definition 3

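Given two experiments S and \(S^{\prime }\) over W, we say that \(S^{\prime }\) is a refinement of S if

$$ (\forall s\in S\ \exists s^{\prime}\in S^{\prime}\colon s\le s^{\prime})\quad \text{and}\quad (\forall s^{\prime}\in S^{\prime}\ \exists s\in S\colon s\le s^{\prime}). \tag{5} $$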

We then write \(S\le S^{\prime }\).

The first conjunct in Eq. 5 ensures that the refinement accounts for each sample s ∈ S from the original experiment by an extended sample \(s^{\prime }\in S^{\prime }\), \(s\le s^{\prime }\), including the trivial case of \(s=s^{\prime }\). The second conjunct ensures that no other samples are in the refinement than those obtained by (possibly trivially) extending samples from the original experiment. Figure 2 illustrates a prefix-free experiment S on \({\mathscr{B}}\) where the leaves s ∈ S form a “barrier” through which each trajectory \(\mathbf {w}\in {\mathscr{B}}\) must pass. In this view, a prefix-free refinement \(S^{\prime }\) of S is obtained by pushing the “barrier” to the right. The figure also indicates that a refinement is expected to lead to a tighter abstraction in that it more accurately encodes which trajectories are not within \({\mathscr{B}}\), e.g., \(\mathbf {v}\not \in {\mathscr{B}}\) is possibly in \({\mathscr{B}}_{S}\) but cannot be in \({\mathscr{B}}_{S^{\prime }}\). Technically, we obtain for two experiments S and \(S^{\prime }\) on \({\mathscr{B}}\) with \(S\le S^{\prime }\) that

$$ \mathcal{B}\subseteq \mathcal{B}_{S^{\prime}}\subseteq \mathcal{B}_{S} \tag{6} $$

as an immediate consequence of Eq. 1 and the second conjunct in Eq. 5; i.e., it is guaranteed that the abstraction does not become worse and we may optimistically expect it to become better.
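The two conjuncts of the refinement relation described above translate directly into a check on finite experiments. The following is a minimal sketch, assuming experiments represented as finite sets of tuples; `is_refinement` is a hypothetical name.

```python
from typing import Set, Tuple

Word = Tuple[str, ...]


def is_prefix(r: Word, s: Word) -> bool:
    """True if r <= s, i.e. r is a (not necessarily strict) prefix of s."""
    return len(r) <= len(s) and s[:len(r)] == r


def is_refinement(S: Set[Word], S_ref: Set[Word]) -> bool:
    """Check S <= S_ref in the sense of the refinement relation."""
    # First conjunct: every original sample is (possibly trivially) extended.
    every_sample_extended = all(
        any(is_prefix(s, t) for t in S_ref) for s in S
    )
    # Second conjunct: every refined sample extends some original sample.
    nothing_else_added = all(
        any(is_prefix(s, t) for s in S) for t in S_ref
    )
    return every_sample_extended and nothing_else_added


S = {("a",), ("b",)}
print(is_refinement(S, {("a", "a"), ("a", "b"), ("b",)}))
print(is_refinement(S, {("a", "a"), ("a", "b")}))
print(is_refinement(S, {("a", "a"), ("b",), ("c",)}))
```

In the second call, the sample ⟨b⟩ is not accounted for; in the third call, ⟨c⟩ does not extend any original sample.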

Fig. 2. Prefix-free experiment S on \({\mathscr{B}}\) with prefix-free refinement \(S^{\prime }\), \(S\le S^{\prime }\). As in Fig. 1, \(\mathop {\text {pre}}{\mathscr{B}}\) is interpreted as an infinite computational tree with the empty string as the root at the very left, graphically represented as a dark yellow cone. Both bounded length experiments S and \(S^{\prime }\) are shown as “barriers” and, as indicated in the sketch, any signal from \({\mathscr{B}}\) must pass both S and \(S^{\prime }\). Being a prefix-free refinement, the sequences in \(S^{\prime }\) are obtained by extending specific sequences from S, i.e., pushing the boundary to the right. Referring exclusively to the experiment S, both signals w and v could possibly belong to some behaviour on which S was conducted. Hence, we may expect \(\mathbf {v}\in {\mathscr{B}}_{S}\) although \(\mathbf {v}\not \in {\mathscr{B}}\). In contrast, we have \(\mathbf {v}|_{[0,l)}\not \in S^{\prime }\) for all \(l\in \mathbb {N}_{0}\) and, hence, \(\mathbf {v}\not \in {\mathscr{B}}_{S^{\prime }}\)

For the systematic construction of refinements, we propose to nominate a set \(R\subseteq S\) of refinement candidates and observe that

is indeed an experiment on \({\mathscr{B}}\) with \(S\le S^{\prime }\). For the special case of \(S={\mathscr{B}}|_{[0,l]}\) and R = S we obtain \(S^{\prime }={\mathscr{B}}|_{[0,l+1]}\), which coincides with the refinement of l-complete approximations.
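One way to build such a refinement can be sketched under explicit assumptions: each refinement candidate is replaced by those one-symbol extensions that still occur as prefixes of the behaviour, and all other samples are kept. The oracle `occurs`, which decides whether a finite sequence is a prefix of some signal of the behaviour, is hypothetical, as is the function name `refine`. The example reproduces the special case of uniform sample length for a behaviour in which the symbol b never occurs twice in a row.

```python
from typing import Callable, Set, Tuple

Word = Tuple[str, ...]


def refine(S: Set[Word], R: Set[Word], W: Set[str],
           occurs: Callable[[Word], bool]) -> Set[Word]:
    """Replace each candidate r in R by its one-symbol extensions that the
    behaviour exhibits (assumed reading); keep the samples in S - R."""
    if not R <= S:
        raise ValueError("refinement candidates must be samples from S")
    extended = {r + (w,) for r in R for w in W if occurs(r + (w,))}
    return (S - R) | extended


def no_double_b(s: Word) -> bool:
    """Hypothetical oracle: prefixes of signals without two consecutive b."""
    return all(not (x == "b" and y == "b") for x, y in zip(s, s[1:]))


W = {"a", "b"}
S = {("a",), ("b",)}           # all samples of length 1
S_uniform = refine(S, S, W, no_double_b)
S_local = refine(S, {("b",)}, W, no_double_b)
print(sorted(S_uniform))
print(sorted(S_local))
```

With R = S, every sample is extended and the result consists of all length-2 samples of the behaviour; with R = {⟨b⟩}, only the sample ⟨b⟩ is extended.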

4 State machine realisations

We further elaborate the proposed scheme of behavioural abstractions in the context of state realisations. Here, we account for a class of transition systems referred to as state machines, which we will utilise for realisations of the plant model, finite state abstractions, and the specification in the control problem under consideration.

Definition 4

A state machine is a quadruple \(P=(X,W,\delta ,X_{0})\), where X is the state set, W is the external signal range, \(\delta \subseteq X\times W\times X\) is the transition relation, and \(X_{0}\subseteq X\) is the set of initial states. The state machine P is called a finite state machine if \(|X|\in \mathbb {N}\).

We use the following terminology.

- Whenever convenient, we reinterpret the transition relation as a set-valued map, recursively defined for \((x,s)\in X\times W^{*}\) and w ∈ W by (a) δ(x,𝜖) := {x} and (b) \(\delta (x,sw):=\{x^{{\prime }{\prime }}|\exists x^{\prime }\in \delta (x,s)\colon (x^{\prime },w,x^{{\prime }{\prime }})\in \delta \}\); i.e., δ(x,s) denotes the set of states reachable from x by taking |s| transitions with labels as specified by s.

- Referring to the set-valued map interpretation of δ, the elements of \(\delta (X_{0},W^{*})\) are the reachable states, and P is said to be reachable if \(\delta (X_{0},W^{*})=X\). If δ(x,W) is non-empty for every reachable state \(x\in \delta (X_{0},W^{*})\), then P is termed deadlock-free.

- The state machine P induces the full behaviour

  $$ \mathcal{B}_{\text{full}}:=\{(\mathbf{w},\mathbf{x})| \forall k\in \mathbb{N}_{0} \colon (\mathbf{x}(k),\mathbf{w}(k),\mathbf{x}(k+1))\in\delta \text{\ \ and\ \ }\mathbf{x}(0)\in X_{0}\} \tag{8} $$

  and the state space system \({\Sigma }_{\text {full}}:=(\mathbb {N}_{0},W\times X,{\mathscr{B}}_{\text {full}})\).

- The external behaviour \({\mathscr{B}}_{\text {ex}}\) of \({\Sigma }_{\text {full}}\) is the projection of \({\mathscr{B}}_{\text {full}}\) onto \(W^{\mathbb {N}_{0}}\), i.e.,

  $$ \mathcal{B}_{\text{ex}}:=\mathcal{P}_{W} \mathcal{B}_{\text{full}}:= \{\mathbf{w}|\exists \mathbf{x} \colon (\mathbf{w},\mathbf{x})\in \mathcal{B}_{\text{full}}\}. \tag{9} $$

  If a state machine \(P^{\prime }\) induces the external behaviour \({\mathscr{B}}^{\prime }\) of a system \({\Sigma }^{\prime }\), \(P^{\prime }\) is termed a realisation of \({\Sigma }^{\prime }\), denoted \({\Sigma }^{\prime }\cong P^{\prime }\).

- If \(|\delta (X_{0},s)|\le 1\) for every \(s\in W^{*}\), then P is said to be past-induced; in automata theory, this is also referred to as deterministic. We then write \(\delta _{0}(s)\in X\) for \(s\in W^{*}\) with \(|\delta (X_{0},s)|=1\) to denote the unique state reachable from \(X_{0}\) via the external sequence s.
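For finite state machines, the terminology above can be sketched in code. The following is a minimal illustration, assuming a finite state set and a transition relation stored as a set of triples; the class `StateMachine` and its method names are hypothetical. The past-induced property is checked by exploring the sets δ(X0,s) reachable from the set of initial states.

```python
class StateMachine:
    """Finite state machine P = (X, W, delta, X0); delta is a set of triples."""

    def __init__(self, X, W, delta, X0):
        self.X, self.W = set(X), set(W)
        self.delta, self.X0 = set(delta), set(X0)

    def step(self, states, w):
        """States reachable from `states` by one transition labelled w."""
        return {x2 for (x1, a, x2) in self.delta if x1 in states and a == w}

    def run(self, states, s):
        """Set-valued map delta(states, s) for a finite sequence s."""
        current = set(states)
        for w in s:
            current = self.step(current, w)
        return current

    def reachable_states(self):
        """delta(X0, W*), computed as a fixed point."""
        seen, frontier = set(self.X0), set(self.X0)
        while frontier:
            successors = {x2 for w in self.W for x2 in self.step(frontier, w)}
            frontier = successors - seen
            seen |= frontier
        return seen

    def is_deadlock_free(self):
        """Every reachable state has at least one outgoing transition."""
        return all(
            any(self.step({x}, w) for w in self.W)
            for x in self.reachable_states()
        )

    def is_past_induced(self):
        """Check |delta(X0, s)| <= 1 for all s by exploring state sets."""
        start = frozenset(self.X0)
        seen, frontier = {start}, [start]
        while frontier:
            current = frontier.pop()
            if len(current) > 1:
                return False
            for w in self.W:
                nxt = frozenset(self.step(current, w))
                if nxt and nxt not in seen:
                    seen.add(nxt)
                    frontier.append(nxt)
        return True


delta = {(0, "a", 1), (1, "a", 1), (1, "b", 0)}
P = StateMachine({0, 1}, {"a", "b"}, delta, {0})
Q = StateMachine({0, 1}, {"a", "b"}, delta, {0, 1})
print(P.run(P.X0, ("a", "a", "b")), P.is_past_induced(), Q.is_past_induced())
```

The machine Q differs from P only in its unrestricted initial states, which is enough to lose the past-induced property.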

In our subsequent discussion of controller synthesis, we assume that the plant is given as a state machine \(P=(X,W,\delta ,X_{0})\) with unrestricted initial conditions, i.e., \(X_{0}=X\), and we note that this assumption implies time invariance for the induced full behaviour as well as for the induced external behaviour. Moreover, we consider the product W = U × Y as external signal space, where U and Y denote the ranges of input symbols and output symbols, respectively, and where we assume that

$$ \forall x\in X,\ u\in U\ \exists y\in Y,\ x^{\prime}\in X\colon \quad (x,(u,y),x^{\prime})\in \delta . \tag{10} $$

State machines with this property are called I/S/- machines. For the induced external behaviour, it can be seen that the input is free and the output does not anticipate the input, both technical terms defined within Willems’ behavioural framework; see Proposition 24 in Moor and Raisch (1999), which refers to Definitions VIII.1 and VIII.4 in Willems (1991). In particular, we will consider supervisory controllers which at any instance of time disable specific input symbols and which in turn accept any output symbol. Technically, all external symbols are organised as pairs w = (u,y) ∈ U × Y = W, with only the U-component considered controllable; this will be followed up in Section 5, including a formal definition of the corresponding control patterns (26). To this end, we address two remarks on how the requirement of a time-invariant I/S/- plant model can be relaxed by a preprocessing stage applicable in the context of controller synthesis with upper-bound behavioural-inclusion specifications.

Remark 5

Formally, Eq. 10 requires that every input symbol can be applied regardless of the current state of the plant. Nevertheless, if we are provided with a state machine P = (X,U × Y,δ,X) which fails to satisfy (10), we consider the substitute model \(P^{\prime }=(X,U\times Y^{\prime },\delta ^{\prime },X)\) with \(Y^{\prime }=Y\dot {\cup }\{\dagger \}\) where we define the transition relation \(\delta ^{\prime }\) to issue the distinguished output symbol †∉Y whenever an invalid input symbol was applied:

Clearly, \(P^{\prime }\) satisfies (10). The considered upper-bound behavioural-inclusion specification can then be used to prevent the distinguished output symbol †∉Y from occurring in the closed-loop configuration. Now assume that a controller has been designed for the plant substitute \(P^{\prime }\) such that † indeed does not occur in any external closed-loop signal. Whenever \(P^{\prime }\) attains a state x ∈ X such that there exists a transition \((x,(u,\dagger ),x)\in \delta ^{\prime }\) for some u ∈ U, this transition will be prevented by the controller. In our specific setting of \(W=U\times Y^{\prime }\), the controller can only directly restrict the U-component of the external symbol. Hence, the controller must at least effectively disable the external symbols

whenever the plant attains the state x ∈ X. For the remaining transitions, however, \(\delta ^{\prime }\) matches the original transition relation δ; i.e.,

Therefore, the supervisor designed for the substitute \(P^{\prime }\) will implement exactly the same closed-loop behaviour when applied to the actual plant P.
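For a finite state set, the construction of the substitute model in Remark 5 can be sketched as follows: whenever an input symbol has no transition from a state, a self-loop with the distinguished output symbol is added, and all original transitions are kept. The restriction to finite X, the string `FAULT` standing in for the distinguished output symbol, and the function names are assumptions of this sketch.

```python
FAULT = "fault"  # hypothetical stand-in for the distinguished output symbol


def has_input(delta, x, u):
    """True if some transition from state x carries the input symbol u."""
    return any(x1 == x and u1 == u for (x1, (u1, _y), _x2) in delta)


def is_input_enabled(X, U, delta):
    """Every input symbol can be applied in every state."""
    return all(has_input(delta, x, u) for x in X for u in U)


def make_input_enabled(X, U, delta):
    """Add a self-loop with output FAULT for each invalid (state, input)."""
    extra = {(x, (u, FAULT), x)
             for x in X for u in U if not has_input(delta, x, u)}
    return set(delta) | extra


X, U = {0, 1}, {"u1", "u2"}
delta = {(0, ("u1", "y"), 1), (1, ("u1", "y"), 0), (1, ("u2", "y"), 1)}
delta_sub = make_input_enabled(X, U, delta)
print(is_input_enabled(X, U, delta), is_input_enabled(X, U, delta_sub))
print(sorted(delta_sub - delta))
```

In the example, only the input u2 in state 0 is invalid, so exactly one self-loop is added.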

Remark 6

The situation of restricted initial states is addressed in a similar fashion. Given P = (X,U × Y,δ,X0) with \(X_{0}\subsetneq X\), we consider the substitute model \(P^{\prime }=(X,U^{\prime }\times Y^{\prime },\delta ^{\prime },X)\), \(U^{\prime }=U\dot {\cup }\{\ddagger \}\), \(Y^{\prime }=Y\dot {\cup }\{\dagger \}\). Here, the distinguished input symbol ‡ is introduced to test whether the state is within the original set of initial states, and, if so, this is confirmed by the distinguished output symbol †. Technically, we define the transition relation by

We then design a controller for \(P^{\prime }\) with a specification that requires (a) that ‡ appears exclusively as the unique first input symbol and (b) that any further closed-loop requirements are only imposed conditionally, subject to † being generated as the very first output symbol. In order to apply the resulting controller to the actual plant, we need an additional device that generates an adequate output symbol when the distinguished input symbol ‡ is applied, i.e., we need to implement the additional transitions introduced by \(\delta ^{\prime }\). However, by clause (a), this is only necessary for the very first transition taken and, since P has the initial state restricted to X0, the additional device is a-priori known to generate † and it does so without affecting any state. From a practical perspective, this can be implemented by intercepting the closed-loop interconnection to hide the very first input symbol ‡ from the plant and by injecting a fake response † to the controller. For any subsequent transitions, the actual plant P matches the substitute \(P^{\prime }\) as in our previous Remark 5. Hence, the supervisor will enforce the conditional specification (b) in the adapted closed-loop configuration with the actual plant.

For a discrete-time model of hybrid plant dynamics with a discrete external interface, we consider an I/S/- machine P = (X,U × Y,δ,X) with a state set \(X\subseteq D\times \mathbb {R}^{n}, |D|\in \mathbb {N}\), and with finite input- and output-ranges, i.e., |U|, \(|Y|\in \mathbb {N}\). This is a rather general setting and, for practical applications, one needs to formally derive the transition relation δ from a more detailed model. Since the literature provides a rich variety of models for hybrid dynamics, we demonstrate this step by example.

Example 1

Consider a physical system with linear continuous dynamics and a finite number of linear controllers to implement individual modes of operation. Discretising time by a regular sampling period, we obtain the switched affine system

where \(k\in \mathbb {N}_{0}\) denotes the discrete time; \(\mathbf {x}\colon \mathbb {N}_{0}\rightarrow \mathbb {R}^{n}\) is the sampled continuous state trajectory; the discrete signal \(\mathbf {d}\colon \mathbb {N}_{0}\rightarrow D\) selects the mode of operation d(k) at time k; and the square matrix \(A_{d}\in \mathbb {R}^{n\times n}\) and the column vector \(B_{d}\in \mathbb {R}^{n}\) are obtained by sampling the closed-loop configuration for mode of operation d ∈ D. We can either directly interpret d as our input signal, or encode additional discrete dynamics by

where \(f:D\times U\rightarrow D\) is a complete transition function and \(\mathbf {u}\colon \mathbb {N}_{0}\rightarrow U\) is the discrete input signal. As discrete output, we propose a mode-dependent finite partition of the continuous state space, i.e.,

with \(g:D\times \mathbb {R}^{n}\rightarrow Y\). The transition relation δ is then formally defined by

With W := U × Y, \(X:=D\times \mathbb {R}^{n}\) and unrestricted initial states \(X_{0}:=X\), the latter completes the construction of an I/S/- machine \(P=(X,W,\delta ,X_{0})\) with time-invariant induced external and internal behaviour, respectively.
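A single logic-time step of such a switched affine system can be sketched as follows. The sketch rests on several assumptions that are not fixed by the description above: the continuous update is taken as x(k+1) = A_{d(k)} x(k) + B_{d(k)}, the mode update as d(k+1) = f(d(k),u(k)), and the output as y(k) = g(d(k),x(k)). All numerical values, the mode names and the function names are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]
State = Tuple[str, Vector]  # (mode d, continuous state x)


def affine(A: Matrix, B: Vector, x: Vector) -> Vector:
    """Compute A x + B with plain lists."""
    return [sum(a * xi for a, xi in zip(row, x)) + b for row, b in zip(A, B)]


def step(state: State, u: str,
         A: Dict[str, Matrix], B: Dict[str, Vector],
         f: Callable[[str, str], str],
         g: Callable[[str, Vector], str]) -> Tuple[str, State]:
    """One transition ((d, x), (u, y), (d', x')) under the assumed timing."""
    d, x = state
    y = g(d, x)                    # output read from the current state
    x_next = affine(A[d], B[d], x)  # continuous update in the current mode
    d_next = f(d, u)               # input selects the next mode
    return y, (d_next, x_next)


# Hypothetical scalar example with two modes.
A = {"heat": [[0.5]], "cool": [[0.5]]}
B = {"heat": [2.0], "cool": [0.0]}
f = lambda d, u: u
g = lambda d, x: "high" if x[0] >= 1.0 else "low"

y1, s1 = step(("cool", [4.0]), "heat", A, B, f, g)
y2, s2 = step(s1, "cool", A, B, f, g)
print(y1, s1, y2, s2)
```

Since the update is a function of the current state and input, every input symbol can be applied in every state, in line with the I/S/- property.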

Example 2

In a similar way to the above example, hybrid automata address the situation of a finite number of modes of operation, each with specific continuous dynamics. However, and in contrast to the above example, the generation of events depends on the evolution of the continuous state and refers to so-called mode invariants and guard relations. A general and formal definition of hybrid automata semantics is quite involved and interested readers are referred to the literature; see, e.g., Henzinger (2000) or Chapter 7 in Tabuada (2009).

In the present study, we provide a simple practical example with hybrid automata semantics to which we will refer later in the context of abstraction-based controller design. To this end, consider a vehicle which we shall navigate within a rectangular area \(A:=[0,w]\times [0,h]\subseteq \mathbb {R}^{2}\); see Fig. 3.

The vehicle is equipped with low-level continuous controllers which implement the modes of operation U := {u_nw, u_ne,u_sw,u_se} to drive the vehicle in the respective direction, i.e., north-west, north-east, south-west or south-east. With each mode u ∈ U, we associate a differential inclusion \(\frac {\mathrm {d}}{\mathrm {d}t} \varphi (t)\in F_{u}\), where the constant right-hand-side \(F_{u}\subseteq \mathbb {R}^{2}\) is set up as the sum of the respective nominal velocity (−v,v), (v,v), (−v,−v) or \((v,-v)\in \mathbb {R}^{2}\) and a square with diameter \(d\in \mathbb {R}\), d > 0, as a bounded additive disturbance. Each mode of operation is associated with the entire rectangular area Iu := A as the mode invariant.

An output event y ∈ Y := {y_n,y_s,y_w,y_e} will be generated while the vehicle is inside a guard region, denoted \(G_{\mathsf {y\_n}}\), \(G_{\mathsf {y\_s}}\), \(G_{\mathsf {y\_w}}\), \(G_{\mathsf {y\_e}}\subseteq \mathbb {R}^{2}\), respectively. To avoid trivial Zeno-behaviour, guards are enabled and disabled according to the mode of operation, e.g., when driving north-west, only the north guard \(G_{\mathsf {y\_n}}\) and the west guard \(G_{\mathsf {y\_w}}\) are enabled.

For a discrete-time model of the vehicle, we consider the overall state set X := A. On this state set, we define the transition relation \(\delta \subseteq X\times (U\times Y)\times X\) by \((x,(u,y),x^{\prime })\in \delta \), if and only if (a) there exists τ ≥ 0 and a continuous state trajectory \(\varphi :[0,\tau ]\rightarrow I_{u}\), differentiable on (0,τ) with \(\frac {\mathrm {d}}{\mathrm {d}t} \varphi (t)\in F_{u}\) for all t ∈ (0,τ), with the initial state φ(0) = x and the final state \(x^{\prime }=\varphi (\tau )\); (b) \(x^{\prime }\) is within the guard Gy; and (c) the guard Gy is enabled by mode u ∈ U. To practically test whether a tuple \((x,(u,y),x^{\prime })\) satisfies the above conditions (a)–(c), we observe that the relevant sets Iu = A, Fu and Gy are convex closed polyhedra. This implies that the positions reachable by some qualifying continuous trajectory φ amount to the convex closed polyhedron V = {x + λv|v ∈ Fu,λ ≥ 0}∩ A; see, e.g., Halbwachs et al. (1997). Provided that the guard Gy is enabled by mode u, we have that \((x,(u,y),x^{\prime })\in \delta \) if and only if \(x^{\prime }\in V\cap G_{y}\). In this context, the transitions in δ are also referred to as logic-time transitions to contrast with the evolution of the continuous state with respect to physical time.

This completes the construction of the I/S/- machine P = (X,W,δ,X0) with W := U × Y and X0 = X = A.
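The membership test \(x^{\prime }\in V\cap G_{y}\) can be illustrated by a minimal Python sketch. It rests on several assumptions that are not taken from the example itself: the guards are hypothetical axis-aligned strips along the border of A, the disturbance square is axis-aligned with half-width R, the numerical values are arbitrary, and the guard enabling for modes other than u_nw is filled in by analogy. Under these assumptions, \(x^{\prime }\) is reachable from x if and only if \(x^{\prime }=x\) or some positive scaling of \(x^{\prime }-x\) lies in Fu.

```python
from typing import Dict, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (xmin, xmax, ymin, ymax)

# Hypothetical data: area A = [0,10] x [0,10], guards as border strips of width 1.
AREA: Box = (0.0, 10.0, 0.0, 10.0)
GUARDS: Dict[str, Box] = {
    "y_n": (0.0, 10.0, 9.0, 10.0), "y_s": (0.0, 10.0, 0.0, 1.0),
    "y_w": (0.0, 1.0, 0.0, 10.0), "y_e": (9.0, 10.0, 0.0, 10.0),
}
V, R = 1.0, 0.2  # nominal speed and disturbance half-width (assumed values)
NOMINAL = {"u_nw": (-V, V), "u_ne": (V, V), "u_sw": (-V, -V), "u_se": (V, -V)}
# F_u as an axis-aligned box around the nominal velocity.
VELOCITY_BOXES = {u: (vx - R, vx + R, vy - R, vy + R) for u, (vx, vy) in NOMINAL.items()}
# Guards enabled per mode; only the u_nw entry is stated in the text.
ENABLED = {"u_nw": {"y_n", "y_w"}, "u_ne": {"y_n", "y_e"},
           "u_sw": {"y_s", "y_w"}, "u_se": {"y_s", "y_e"}}


def in_box(p: Point, box: Box) -> bool:
    xmin, xmax, ymin, ymax = box
    return xmin <= p[0] <= xmax and ymin <= p[1] <= ymax


def scale_range(delta: float, lo: float, hi: float) -> Tuple[float, float]:
    """Interval of mu >= 0 with lo <= mu * delta <= hi (empty if lower > upper)."""
    if delta > 0:
        return max(lo / delta, 0.0), hi / delta
    if delta < 0:
        return max(hi / delta, 0.0), lo / delta
    # delta == 0: the product is 0 for every mu
    return (0.0, float("inf")) if lo <= 0.0 <= hi else (1.0, 0.0)


def is_transition(x, u, y, x_next, area, guards, velocity_boxes) -> bool:
    """Test conditions (a)-(c) for the tuple (x, (u, y), x_next)."""
    if y not in ENABLED[u]:                      # (c) guard enabled by mode
        return False
    if not (in_box(x, area) and in_box(x_next, area)):
        return False
    if not in_box(x_next, guards[y]):            # (b) final state within guard
        return False
    dx, dy = x_next[0] - x[0], x_next[1] - x[1]
    if dx == 0 and dy == 0:                      # tau = 0
        return True
    vx_lo, vx_hi, vy_lo, vy_hi = velocity_boxes[u]
    lo1, hi1 = scale_range(dx, vx_lo, vx_hi)
    lo2, hi2 = scale_range(dy, vy_lo, vy_hi)
    lo, hi = max(lo1, lo2), min(hi1, hi2)
    return lo <= hi and hi > 0                   # (a) some mu = 1/lambda > 0 exists
```

Since A is convex, the straight line from x to \(x^{\prime }\) stays within the mode invariant, so no separate invariant check along the trajectory is needed in this sketch. For sets of states rather than single points, a polyhedral library is the appropriate tool.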

Referring back to Definition 2, recall that an experiment S must account for all trajectories \(\mathbf {w}\in {\mathscr{B}}\) by some finite prefix \(s\in (\mathop {\text {pre}}\mathbf {w})\cap S\). Hence, the construction of an experiment practically amounts to the inspection of specific finite prefixes in \(\mathop {\text {pre}}{\mathscr{B}}\). For example, to set up an initial experiment by \(S:={\mathscr{B}}|_{[0,l]}\subseteq \mathop {\text {pre}}{\mathscr{B}}\) for some \(l\in \mathbb {N}\) we need an implementable test for whether or not \(s\in \mathop {\text {pre}}{\mathscr{B}}\) for all finite sequences s of length l + 1. Likewise, referring to Eq. 7, a refinement of an experiment S w.r.t. the candidates \(R\subseteq S\) requires us to test whether or not \(\langle s,w\rangle \in \mathop {\text {pre}}{\mathscr{B}}\) for all s ∈ R and w ∈ W. Given an I/S/- machine P = (X,W,δ,X) with the external behaviour \({\mathscr{B}}\), Moor et al. (2002) propose to base the required test on the following recursively defined sets of compatible states:

where r ∈ W∗, u ∈ U, y ∈ Y, and the right hand side of Eq. 20 is a one-step forward reachability operator applied to \(\mathcal {X}({r})\) with (u,y) as a constraint for the external symbols. By construction, \(\mathcal {X}({s})\subseteq X\) consists of all states the I/S/- machine can attain after generating the finite sequence of external symbols s ∈ W∗. Since I/S/- machines do not deadlock, this implies that \(s\in \mathop {\text {pre}}{\mathscr{B}}\) if and only if \(\mathcal {X}({s})\neq \emptyset \). Hence, for behaviours realised by I/S/- machines, setting up and/or refining experiments effectively amounts to a finite iteration of the one-step forward-reachability operator in Eq. 20. The latter type of reachability operator has been intensively investigated over the past two decades and the literature provides a variety of efficient computational methods addressing specific classes of hybrid systems; see, e.g., Alur et al. (2000), Alur et al. (1996), and Lafferriere et al. (2000) for the exact computation of sets of reachable states for a restricted class of continuous dynamics, or, e.g., Althoff et al. (2010), Chutinan and Krogh (1998), Frehse (2008), Henzinger et al. (2000), Maler and Dang (1998), Mitchell et al. (2005), and Reissig (2011) for safe over-approximations for richer classes of continuous dynamics.
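For a machine with finitely many states, the iteration of the one-step forward-reachability operator can be sketched in a few lines of Python. The sketch assumes that the recursion starts from the full set of initial states for the empty sequence; the toy transition relation and the names `compatible_states` and `in_prefix` are hypothetical and serve illustration only. For hybrid plants, the set comprehension would be replaced by a reachability computation on continuous state sets.

```python
def compatible_states(delta, initial_states, s):
    """States the machine can attain after generating the symbol sequence s.

    delta: set of triples (x, w, x_next); s: sequence of external symbols w = (u, y).
    """
    states = set(initial_states)  # assumed start of the recursion: all initial states
    for w in s:
        # one-step forward reachability, constrained to the external symbol w
        states = {x2 for (x1, sym, x2) in delta if x1 in states and sym == w}
    return states


def in_prefix(delta, initial_states, s):
    """For I/S/- machines: s is a prefix of the behaviour iff the set is non-empty."""
    return bool(compatible_states(delta, initial_states, s))


# Toy I/S/- machine with states {0, 1, 2}, inputs {a, b} and outputs {p, q}.
DELTA = {
    (0, ("a", "p"), 1), (0, ("b", "q"), 0),
    (1, ("a", "q"), 2), (1, ("b", "p"), 0),
    (2, ("a", "p"), 2), (2, ("b", "q"), 1),
}
X0 = {0, 1, 2}
```

In this toy machine, the sequence 〈(a,p),(a,q)〉 is compatible with state 2 only, whereas 〈(a,q),(a,q)〉 has no compatible state and is hence not a prefix of the external behaviour.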

Example 3

(cont.) For our vehicle navigation example, all relevant sets are convex closed polyhedra and the differential inclusions have a constant polyhedral right-hand-side. Sets of states reachable by one logic-time transition can hence be computed exactly, e.g., using the software Parma Polyhedra Library (PPL); see Bagnara et al. (2008). We outline the overall computational procedure that is used to construct an initial experiment and a refinement thereof.

Consider u_nw as the first input symbol being applied in the vehicle navigation example. Then the subsequent output symbol can be either y_n or y_w since the initial states are not restricted and since \(G_{\mathsf {y\_n}}\) and \(G_{\mathsf {y\_w}}\) are the only enabled guards. This implies \(\mathcal {X}((\mathsf {u\_nw},\mathsf {y\_s}))=\emptyset \) and \(\mathcal {X}((\mathsf {u\_nw},\mathsf {y\_e}))=\emptyset \). Under the additional hypothesis that we actually observe y_w, we conclude that the attained state must be within \(G_{\mathsf {y\_w}}\). Hence, we obtain \(\mathcal {X}((\mathsf {u\_nw},\mathsf {y\_w}))=G_{\mathsf {y\_w}}\) as compatible states; see Fig. 4. Likewise, we obtain \(\mathcal {X}((\mathsf {u\_nw},\mathsf {y\_n}))=G_{\mathsf {y\_n}}\). Repeating the above reasoning for all possible first input symbols, we establish that

and obtain our initial experiment \(S:={\mathscr{B}}|_{[0,0]}\). This experiment encodes the fact that for our vehicle navigation example, only relevant guards are enabled depending on the input symbol. This is expected to be insufficient for the design of a supervisor that solves typical navigation tasks like, e.g., to visit a specific guard region.

For illustration purposes, we consider \(R:=\{(\mathsf {u\_nw},\mathsf {y\_w})\}\subseteq S\) as our refinement candidate. Since \(\mathcal {X}((\mathsf {u\_nw},\mathsf {y\_w}))=G_{\mathsf {y\_w}}\) from the foregoing discussion, we now need to compute \(\mathcal {X}(\langle (\mathsf {u\_nw},\mathsf {y\_w}),(u,y)\rangle )\) for all u ∈ U and y ∈ Y by applying Eq. 20. Consider the case of u = u_ne, i.e., we apply the input symbol u_ne when the vehicle position x is initially in \(\mathcal {X}((\mathsf {u\_nw},\mathsf {y\_w}))\). The continuous time motion of the vehicle is then modelled by a trajectory \(\varphi :[0,\tau ]\rightarrow I_{\mathsf {u\_ne}}=A\) with φ(0) = x and \(\frac {\mathrm {d}}{\mathrm {d}t} \varphi (t)\in F_{\mathsf {u\_ne}}\) for all t ∈ (0,τ). Recall that in our example, all relevant sets are convex closed polyhedra. This implies that all positions reachable by the vehicle are given by

see also Fig. 4. Compatible states are then obtained by \(\mathcal {X}(\langle (\mathsf {u\_nw},\mathsf {y\_w}),(\mathsf {u\_ne},y)\rangle )= V\cap G_{y}\) with y ∈ Y and are again convex closed polyhedra. More specifically, we have \(\mathcal {X}(\langle (\mathsf {u\_nw},\mathsf {y\_w}),(\mathsf {u\_ne},\mathsf {y\_n})\rangle )\) as shown in Fig. 4 and \(\mathcal {X}(\langle (\mathsf {u\_nw},\mathsf {y\_w}),(\mathsf {u\_ne},y)\rangle )=\emptyset \) for y≠y_n. This procedure can be applied repeatedly for subsequent input symbols. To this end, Fig. 4 shows the sets of compatible states for 〈(u_nw,y_w),(u_ne,y_n),(u_se,y_s)〉 and 〈(u_nw,y_w),(u_ne,y_n),(u_se,y_e)〉. Coming back to the refinement, we apply the same analysis to \(\mathcal {X}((\mathsf {u\_nw},\mathsf {y\_w}))\) as above, but now for the remaining choices of the input symbol, i.e., u = u_nw, u = u_sw and u = u_se. As it turns out, the only extensions of our refinement candidate (u_nw,y_w) by one more pair of an input symbol and an output symbol with a non-empty set of compatible states are the following length-two sequences:

Referring to Eq. 7, the refined experiment \(S^{\prime }\) is obtained from \(S:={\mathscr{B}}|_{[0,0]}\), Eq. 21, by removing the refinement candidate (u_nw,y_w) and by including the above six extensions; for a tree representation of \(S^{\prime }\) see Fig. 5.

Once an experiment S on the external behaviour \({\mathscr{B}}\) of the plant P has been obtained, it can be used to set up a finite-state realisation of the corresponding abstraction ΣS. Roughly speaking, the realisation tracks the longest suffix of the signal generated so far that matches some prefix within S.

Theorem 7

(See Moor and Götz (2018), Lemma 14) Given a prefix-free trim experiment \(S\subseteq W^{*}\), 𝜖∉S≠∅, of bounded length, the abstraction \({\Sigma }_{S}=(\mathbb {N}_{0},W, {\mathscr{B}}_{S})\) obtained under the assumption of time invariance (see Eq. 1) is realised by the state machine PS = (ZS,W,δS,{𝜖}), where ZS := preS consists of the prefixes of S and where the transition relation δS is defined as follows: \((z,w,z^{\prime })\in Z_{S} \times W \times Z_{S}\) is in δS if and only if \(z^{\prime }=\langle z^{\triangleleft },w\rangle \) with z⊲ the longest suffix of z that is in ZS but not in S; i.e., if and only if z⊲ is the unique longest sequence in {r ∈ W∗|∃t ∈ W∗: 〈t,r〉 = z}∩{r ∈ ZS|r∉S}. Moreover, PS is reachable, deadlock-free and past-induced. Provided that W is a finite set, PS is a finite state machine.

Note that for the degenerated cases of 𝜖 ∈ S or S = ∅ we have \({\mathscr{B}}_{S}=W^{\mathbb {N}_{0}}\) or \({\mathscr{B}}_{S}=\emptyset \), respectively, with well known realisations. To provide some intuition regarding the transition relation given by the above theorem, we consider the initial state z0 := 𝜖 and a signal \(\mathbf {w}\in {\mathscr{B}}_{S}\). In particular, there exists \(l\in \mathbb {N}_{0}\) such that w|[0,l) ∈ S. By 𝜖∉S, this implies l ≥ 1. Moreover, since S is prefix-free, we have w|[0,k)∉S and \(w|_{[0,k)}\in \mathop {\text {pre}}S=Z_{S}\) for all k < l. Now let zk := w|[0,k) for all k ≤ l and observe, for all k < l, that zk is its own longest suffix z⊲ qualifying for z⊲∉S and z⊲ ∈ ZS, and, hence, (zk,w(k),zk+ 1) ∈ δS. In other words, the state records all past external symbols until zl ∈ S. However, once in state zl ∈ S, no additional external symbols can be recorded with the given state set unless one first drops a sufficient amount of symbols recorded earlier. Technically, we are asking for a suffix z⊲ of zl such that 〈z⊲,w(l)〉∈ ZS. To see that such a suffix exists, we consider the shortest suffix 𝜖 of zl and we refer to time invariance to obtain 〈𝜖,w(l)〉 = w(l) ∈ ZS. For the transition relation proposed in the theorem, we take the longest qualifying z⊲ to drop as little as possible of the recorded symbols and, as a conjecture, obtain (zl,w(l),zl+ 1) ∈ δS with zl+ 1 := 〈z⊲,w(l)〉. If we can continue this construction indefinitely and if the conjecture holds true at each stage, we obtain a state trajectory \(\mathbf {z}:\mathbb {N}_{0}\rightarrow Z_{S}\), z(k) := zk for all k, such that (w,z) is in the full behaviour induced by PS. Note that it is neither obvious that the construction actually can be continued indefinitely, nor, for the converse behavioural inclusion, that any trajectory generated by PS is within \({\mathscr{B}}_{S}\). The cited reference in Theorem 7 provides technical proofs for both claims and the above theorem.
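The transition relation of Theorem 7 can be made concrete by a short Python sketch. Sequences are represented as tuples of symbols; the function names and the toy experiment over a two-symbol alphabet are hypothetical, and the sketch does not check that its input is indeed a prefix-free trim experiment.

```python
def prefixes(S):
    """All prefixes of sequences in S, including the empty sequence ()."""
    return {s[:k] for s in S for k in range(len(s) + 1)}


def realise(S, W):
    """State set Z_S = pre S and transition relation delta_S as in Theorem 7."""
    S = set(S)
    Z = prefixes(S)
    delta = set()
    for z in Z:
        # Longest suffix of z that is in Z_S but not in S. Suffixes are tried
        # from longest to shortest; the empty suffix always qualifies because
        # S is non-empty and does not contain the empty sequence.
        z_tri = next(z[i:] for i in range(len(z) + 1)
                     if z[i:] in Z and z[i:] not in S)
        for w in W:
            z_next = z_tri + (w,)
            if z_next in Z:
                delta.add((z, w, z_next))
    return Z, delta


# Toy experiment over W = {a, b}; the initial state of the realisation is ().
W_TOY = {"a", "b"}
S_TOY = {("a",), ("b", "a"), ("b", "b")}
Z_TOY, DELTA_TOY = realise(S_TOY, W_TOY)
```

In the toy experiment, the leaf ("b","b") retains its suffix ("b",), so the subsequent symbol "a" leads to ("b","a"). The leaf ("b","a") has no qualifying non-empty suffix and restarts recording from the empty sequence.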

Example 4

(cont.) For the experiment S from Fig. 5, the realisation PS is obtained by the following construction. The nodes \(s\in \mathop {\text {pre}}S\) in Fig. 5 become the states of PS and the edges in Fig. 5 become transitions with an event label to match the most recent event of the respective target node; see Fig. 6, transitions given in black color. Then, per leaf z ∈ S, we: (a) drop the minimum prefix from the node label z ∈ S to obtain z⊲ ∈{r ∈ ZS|r∉S}; and (b) for all w ∈ W such that \(z^{\prime }:=\langle z^{\triangleleft },w\rangle \in Z_{S}\) insert a transition from z to \(z^{\prime }\) with label w. For the node z = 〈(u_nw,y_w),(u_nw,y_w)〉, we have z⊲ = 〈(u_nw,y_w)〉 with out-going transitions indicated in green color in Fig. 6. For all other nodes z ∈ S, z≠〈(u_nw,y_w),(u_nw,y_w)〉, we obtain z⊲ = 𝜖 and insert per w ∈ W with w ∈ ZS the transition (z,w,w) ∈ δS. This amounts to 12 × 8 = 96 transitions, which are omitted in Fig. 6.

Fig. 6 Fragment of the realisation PS of the experiment S from Fig. 5

We observe for the vehicle navigation example that not only the actual plant P but also the realisation PS of the abstraction is an I/S/- machine. This can always be achieved by suitable trimming, and we can without loss of generality restrict the discussion to experiments such that PS is an I/S/- machine.

Proposition 8

Consider an experiment \(S\subseteq W^{*}\), W = U × Y, on an external behaviour \({\mathscr{B}}\subseteq W^{\mathbb {N}_{0}}\) realised by an I/S/- machine P with input range U and output range Y. With PS = (ZS,W,δS,{𝜖}) from Theorem 7, let

and trim S by

Then \(S^{\prime }\subseteq S\) is an experiment on \({\mathscr{B}}\).

Proof

To show that \(S^{\prime }\) is an experiment on \({\mathscr{B}}\), we pick an arbitrary \(\mathbf {w}\in {\mathscr{B}}\). Since S is an experiment on \({\mathscr{B}}\), there exists s ∈ S with s < w. To show that \(s\in S^{\prime }\), we pick an arbitrary prefix z ≤ s and an arbitrary u ∈ U and establish the existence of y ∈ Y and \(z^{\prime }\in Z_{S}\) such that \((z,(u,y),z^{\prime })\in \delta _{S}\). For our choice, we refer to any state trajectory x such that (w,x) is in the full behaviour induced by P. Since P is an I/S/- machine, there exists another trajectory \((\mathbf {w^{\prime }},\mathbf {x^{\prime }})\) from the full behaviour such that \((\mathbf {w^{\prime }},\mathbf {x^{\prime }})|_{[0,l)}=(\mathbf {w},\mathbf {x})|_{[0,l)}\), \(\mathbf {w^{\prime }}(l)=(u,y)\) and \(\mathbf {x^{\prime }}(l)=\mathbf {x}(l)\) for l = |z| and some y ∈ Y. By \({\mathscr{B}}\subseteq {\mathscr{B}}_{S}\) we obtain the unique state trajectory \(\mathbf {z^{\prime }}\) such that \((\mathbf {w^{\prime }},\mathbf {z^{\prime }})\) is in the full behaviour induced by PS. By the definition of δS we have \(\mathbf {z^{\prime }}(k)=\mathbf {w^{\prime }}|_{[0,k)}\) for all k ≤ l. In particular, we have \(\mathbf {z^{\prime }}(l)=z\) and, hence, \((z, (u,y), \mathbf {z^{\prime }}(l+1))\in \delta _{S}\). □

Applying the above trimming procedure repeatedly generates a monotonically decreasing sequence \(S\supseteq S^{\prime }\supseteq S^{\prime \prime }\supseteq \cdots \) of experiments. For our situation of a finite external signal range and experiments of bounded length, S is a finite set. Hence, a fixpoint S∗ is attained after finitely many stages of trimming. Then, the definition of Zio implies that S∗ is indeed realised by an I/S/- machine. Moreover, the realisation by an I/S/- machine is retained under refinement by Eq. 7.

5 Supervisory Control

Given a plant model with discrete-event interface, we seek to design a controller that restricts the behaviour to satisfy a prescribed upper bound specification. This type of control problem is addressed by supervisory control theory, as introduced by Ramadge and Wonham (1987, 1989), albeit with regular ∗-languages and finite automata realisations as base models. For the present paper, we refer to an adaptation of supervisory control to ω-languages to address infinite-length signals as discussed by Thistle and Wonham (1994a, 1994b), and we propose to substitute the actual plant by a finite state abstraction obtained from an experiment.

Formally, a supervisor is defined as a causal feedback map f with domain W∗ that, at any instant of time \(k\in \mathbb {N}_{0}\), maps the present prefix s = w|[0,k) generated by the plant to a control pattern \(\gamma =f(s)\subseteq W\), with the effect that the subsequently generated symbol must satisfy w(k) ∈ γ, i.e., the plant is restricted to only generate symbols that match the respective control pattern. Most commonly the range Γ of all admissible control patterns is derived from partitioning W into controllable and uncontrollable events. However, to address I/S/- machines as plants, we refer to the product W = U × Y and define

as the range of the supervisor, i.e., \(f\colon W^{*}\rightarrow \varGamma \). By this choice, the supervisor imposes its restriction on the input symbol only and accepts any output symbol generated by the plant. Note also that, by construction, Γ is closed under unions of control patterns and, thus, our setting here is formally covered by the relevant references (Thistle and Wonham 1994a, 1994b).

In the more common setting with a partition into controllable and uncontrollable events, a supervisor could apply a control pattern such that the plant in its current state cannot generate any of the enabled symbols. This form of temporal blocking is undesired and, in general, needs to be addressed by the synthesis procedure. However, for the specific situation of I/S/- machines and our tailored choice of Γ in Eq. 26, temporal blocking is not an issue.

Definition 9

Given a deadlock-free state machine P = (X,W,δ,X0), a supervisor \(f\colon W^{*}\rightarrow \varGamma \) preserves liveness in closed-loop configuration with P if for all signals \((\mathbf {w},\mathbf {x})\in {\mathscr{B}}_{\text {full}}\) from the induced full behaviour that comply with f up to some time \(k\in \mathbb {N}_{0}\), i.e., w(κ) ∈ f(w|[0,κ)) for all κ < k, there exist w ∈ f(w|[0,k)) and \((\mathbf {w}^{\prime },\mathbf {x}^{\prime })\in {\mathscr{B}}_{\text {full}}\) such that \(\mathbf {x}^{\prime }|_{[0,k]} = \mathbf {x}|_{[0,k]}\), \(\mathbf {w}^{\prime }|_{[0,k)} = \mathbf {w}|_{[0,k)}\) and \(\mathbf {w}^{\prime }(k)=w\).

Proposition 10

Given an I/S/- machine P = (X,W,δ,X0) where W = U × Y with input range U and output range Y, any supervisor \(f\colon W^{*}\rightarrow \varGamma \) preserves liveness in closed-loop configuration with P.

Proof

Consider any \((\mathbf {w},\mathbf {x})\in {\mathscr{B}}_{\text {full}}\) compliant with f up to time \(k\in \mathbb {N}_{0}\). Then, at time k, the supervisor applies the control pattern γ := f(w|[0,k)) and P is in state x = x(k). By Eq. 26, γ≠∅ and we can pick a symbol w := (u,y) ∈ γ. Since P is an I/S/- machine, there exist \(y^{\prime }\in Y\) and \(x^{\prime }\in X\) such that \((x,(u,y^{\prime }),x^{\prime })\in \delta \). Referring again to Eq. 26, we obtain \(w^{\prime }:=(u,y^{\prime })\in \gamma \). To construct the signals \(\mathbf {x}^{\prime }\) and \(\mathbf {w}^{\prime }\), let \(\mathbf {x}^{\prime }(\kappa ):= \mathbf {x}(\kappa )\) for 0 ≤ κ ≤ k, \(\mathbf {x}^{\prime }(k+1)=x^{\prime }\), \(\mathbf {w}^{\prime }(\kappa ):= \mathbf {w}(\kappa )\) for 0 ≤ κ < k and \(\mathbf {w}^{\prime }(k)=w^{\prime }\). Observe that \((\mathbf {x}^{\prime }(\kappa ),\mathbf {w}^{\prime }(\kappa ),\mathbf {x}^{\prime }(\kappa +1))\in \delta \) for 0 ≤ κ ≤ k. Since P itself is deadlock-free, the signals can be extended to the entire time axis by taking arbitrary transitions from δ. We then obtain \((\mathbf {w}^{\prime },\mathbf {x}^{\prime })\in {\mathscr{B}}_{\text {full}}\) as required. □

Restricting consideration to I/S/- machines and the corresponding choice of Γ, Eq. 26, the problem of supervisory control is stated as follows.

Definition 11

Consider a plant \({\Sigma }=(\mathbb {N}_{0},W,{\mathscr{B}})\) realised by an I/S/- machine P = (X,W,δ,X0) with input range U and output range Y, and a specification \({\Sigma }_{\text {spec}}=(\mathbb {N}_{0},W,{\mathscr{B}}_{\text {spec}})\). For a supervisor \(f\colon W^{*}\rightarrow \varGamma \) with Γ from Eq. 26, the closed-loop behaviour is defined by

A supervisor \(f\colon W^{*}\rightarrow \varGamma \) solves the control problem if it enforces the specification, i.e., if \({\mathscr{B}}_{f}\subseteq {\mathscr{B}}_{\text {spec}}\).

The provided references (Thistle and Wonham 1994a, 1994b) present an algorithmic solution to the above problem for the case that the relevant behaviours are ω-regular and realised by past-induced finite state machines, extended by an acceptance condition. In the context of the present paper, we will substitute the actual plant by the abstraction ΣS obtained from some experiment S with past-induced finite state realisation PS = (ZS,W,δS,{𝜖}). Regarding the specification, we account for past-induced finite realisations with Büchi acceptance condition, i.e., we consider a state machine Pspec = (Xspec,W,δspec,{xspec0}) with a set of accepting states \(X_{\text {specM}}\subseteq X_{\text {spec}}\) and require that signals in the full behaviour visit accepting states infinitely often. The specification \({\Sigma }_{\text {spec}}=(\mathbb {N}_{0},W,{\mathscr{B}}_{\text {spec}})\) is then formally defined by

As a technical consequence of introducing an acceptance condition, it is not restrictive to assume that the transition relation δspec is full, i.e., for all χ ∈ Xspec and all w ∈ W there exists \(\chi ^{\prime }\in X_{\text {spec}}\) such that \((\chi ,w,\chi ^{\prime })\in \delta _{\text {spec}}\). For example, assume that we wish to exclude all closed loop trajectories that exhibit a certain string of symbols from W. We can encode this in a specification state machine with full transition relation where the occurrence of such a string leads into a “dump state”, from where no other state can be reached. The assumption of a full transition relation is common in automata theory and it simplifies the subsequent discussion.

Supervisory controller synthesis is conducted in the following two steps. First, we extend the abstraction state set to also encode the specification state by the product composition P× := PS × Pspec := (Q,W,λ,{q0}), where Q = ZS × Xspec, q0 = (𝜖,xspec0), and where \(\lambda \subseteq Q\times W\times Q\) is defined by \(((z,\chi ),w,(z^{\prime },\chi ^{\prime }))\in \lambda \) if and only if \((z,w,z^{\prime })\in \delta _{S}\) and \((\chi ,w,\chi ^{\prime })\in \delta _{\text {spec}}\). Since δspec is full, the induced behaviours of P× equal the respective behaviours induced by PS. Moreover, past-inducedness of both components PS and Pspec implies past-inducedness of the product P×. With \(Q_{\mathrm {M}}:=Z_{S}\times X_{\text {specM}}\subseteq Q\) we lift the acceptance condition of the specification accordingly. Note also that, since δspec is full and since we assume PS to be an I/S/- machine, P× is also an I/S/- machine and, hence, is deadlock-free. Regarding the acceptance condition, however, there can be live-locks; i.e., reachable states with no execution path to attain a state in QM thereafter. This is addressed by the second step of the synthesis approach, where we refer to the iteration proposed by Thistle and Wonham (1994a, 1994b) in order to identify states in P× which can be controlled to eventually visit some accepting states of QM and to do so infinitely often. The resulting state set Qwin is referred to as the set of winning states — once the closed-loop has generated a prefix that corresponds to a winning state q ∈ Qwin, a supervisor can be employed to enforce the specification from then on. In particular, the supervisory control problem has a solution if and only if the initial state of P× is a winning state. 
Addressing more general acceptance conditions for both the plant and the specification, Thistle and Wonham (1994a, 1994b) obtain the set of winning states by a five-nested fixpoint iteration, which for the specific situation in the present paper collapses to the following simplified algorithm.

Algorithm 12

Winning states Qwin of P× = (Q,W,λ,{q0}) w.r.t. the acceptance condition \(Q_{\mathrm {M}}\subseteq Q\) and control patterns Γ.

1) Initialise the target restriction with D := Q.

2) Initialise the winning states with Qwin := ∅.

3) Perform the following one-step controlled backward-reachability analysis: \( B =\{ q\in Q |\exists \gamma \in \varGamma \colon \emptyset \neq \lambda (q,\gamma )\subseteq (Q_{\mathrm {M}}\cap D)\cup Q_{\text {win}} \}. \)

4) If \(B\nsubseteq Q_{\text {win}}\), then update the winning states by Qwin := Qwin ∪ B and proceed with Step 3. Else, proceed with Step 5.

5) If \(Q_{\mathrm {M}}\cap D \nsubseteq Q_{\text {win}}\), then update the restriction by D := D ∩ Qwin and proceed with Step 2. Else, terminate and report Qwin as the result.

We provide some intuition on the above algorithm; see also Fig. 7. The inner loop over Steps 2–4 begins with Qwin = ∅ to accumulate in Qwin states that can be controlled to reach QM ∩ D within a finite number of steps. Since during the inner loop Qwin grows monotonically and the reachability analysis in Step 3 is monotone in the iterate Qwin, finiteness of the state set Q implies that the termination condition \(B\subseteq Q_{\text {win}}\) is satisfied after finitely many iterations. When proceeding with Step 5 for the first time, Qwin holds the states that can be controlled to reach QM at least once and, until then, to remain within Qwin. This is illustrated in Fig. 7 on the left, where the growth of Qwin occurs counter-clockwise. In the figure it is assumed that the top-most transition which does not go to the target QM can be disabled by a suitable control pattern. As indicated in the figure, we cannot expect \(Q_{\mathrm {M}}\subseteq Q_{\text {win}}\), i.e., so far there may be states in Qwin that can indeed only be controlled to reach QM once. Therefore, Step 5 restricts the effective target by intersecting D with Qwin; on the first pass, where D = Q, this amounts to D = Qwin. Repeating the inner loop again results in a set of winning states, but now they can all be controlled to reach QM at least twice. This is illustrated in Fig. 7 on the right, where the target has been restricted accordingly. By monotonicity, repeating the outer loop leads to a strictly decreasing restriction D and, by finiteness of Q, the termination condition must be satisfied after a finite number of iterations. At termination in Step 5, any state q ∈ Qwin can be controlled to reach QM ∩ D, and, by \(Q_{\mathrm {M}}\cap D\subseteq Q_{\text {win}}\), can be controlled to do so infinitely often.
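The two nested loops of Algorithm 12 can be sketched in Python for an explicitly enumerated finite state machine. This is a minimal sketch under stated assumptions rather than a reference implementation: the helper `control_patterns` assumes that Γ consists of all patterns U′ × Y with non-empty U′ ⊆ U, which is consistent with the properties of Γ stated above; the three-state example and all identifiers are hypothetical.

```python
from itertools import combinations


def control_patterns(U, Y):
    """All patterns U' x Y with non-empty U' subset of U (assumed form of Gamma)."""
    inputs = sorted(U)
    return [frozenset((u, y) for u in sub for y in Y)
            for r in range(1, len(inputs) + 1)
            for sub in combinations(inputs, r)]


def winning_states(Q, lam, Gamma, QM):
    """Winning states of (Q, W, lam) w.r.t. accepting states QM and patterns Gamma.

    lam is a set of triples (q, w, q_next) with symbols w = (u, y).
    """
    def post(q, gamma):
        return {q2 for (q1, w, q2) in lam if q1 == q and w in gamma}

    def controllable(q, target):
        # some pattern yields a non-empty successor set within the target
        return any(succ and succ <= target
                   for succ in (post(q, g) for g in Gamma))

    D = set(Q)                                   # Step 1
    while True:
        Qwin = set()                             # Step 2
        while True:
            target = (QM & D) | Qwin
            B = {q for q in Q if controllable(q, target)}   # Step 3
            if B <= Qwin:                        # Step 4: inner fixpoint reached
                break
            Qwin |= B
        if (QM & D) <= Qwin:                     # Step 5: outer fixpoint reached
            return Qwin
        D &= Qwin


# Hypothetical example: state 1 is accepting, state 2 is a trap.
U_EX, Y_EX = {"a", "b"}, {"y"}
LAM_EX = {
    (0, ("a", "y"), 1), (0, ("b", "y"), 2),
    (1, ("a", "y"), 0), (1, ("b", "y"), 0),
    (2, ("a", "y"), 2), (2, ("b", "y"), 2),
}
QWIN_EX = winning_states({0, 1, 2}, LAM_EX, control_patterns(U_EX, Y_EX), {1})
```

In the example, state 0 is winning because the pattern that enables only input a forces the transition to the accepting state 1, from where every input leads back to state 0. Recording one successful pattern per state in the final run of the inner loop would yield the map g discussed below.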

A supervisor can be obtained from the above algorithm by recording for all q ∈ Qwin an arbitrary successful control pattern from Step 3 of the last run of the inner loop. Technically, this defines a map \(g:Q_{\text {win}}\rightarrow \varGamma \). By past-inducedness of P×, each prefix \(s\in \mathop {\text {pre}}{\mathscr{B}}_{S}\) corresponds to exactly one state in P× which we denote λ0(s) ∈ Q. The supervisor f is then defined for s ∈ W∗ by f(s) = g(λ0(s)) if λ0(s) ∈ Qwin and, else, f(s) = γdummy ∈Γ with γdummy := ∪{γ ∈Γ}. By construction, this supervisor preserves liveness in closed-loop configuration with the abstraction PS (even if it was not an I/S/- machine) it was designed for, and it conditionally enforces the specification once a prefix \(s\in \mathop {\text {pre}}{\mathscr{B}}_{S}\) with λ0(s) ∈ Qwin has been generated; i.e., we have

for the closed-loop behaviour \({\mathscr{B}}_{S,f}\); see Definition 11. Since the empty string \(\epsilon \in \mathop {\text {pre}}\mathbf {w}\) corresponds to the initial state q0 = λ0(𝜖), the right-hand-side of the above inclusion collapses to \({\mathscr{B}}_{\text {spec}}\) if we have q0 ∈ Qwin. In this case, we indeed obtain a solution of the control problem for the abstraction. This immediately carries over to the actual plant \(P\cong {\Sigma }=(\mathbb {N}_{0},W,{\mathscr{B}})\): the supervisor f preserves liveness in closed-loop configuration with P by Proposition 10 and we obtain \({\mathscr{B}}_{f}\subseteq {\mathscr{B}}_{S,f} \subseteq {\mathscr{B}}_{\text {spec}}\) as an immediate consequence of \({\mathscr{B}}\subseteq {\mathscr{B}}_{S}\) and Definition 11. If, on the other hand, q0∉Qwin, it follows from the detailed study by Thistle and Wonham (1994a, 1994b) that the control problem has no solution for the abstraction PS at hand. In this case, we interpret Qwin as an intermediate result which in an overall synthesis approach can be used to guide a local refinement of the abstraction.

Example 5

(cont.) For the vehicle navigation example from the previous section, we consider the specification to navigate the vehicle eventually to the north guard \(G_{\mathsf {y\_n}}\). This can be expressed by a state machine Pspec with two states, where one is an accepting state and indicates that y_n has occurred at least once. For controller synthesis, we use the abstraction PS obtained from the experiment S shown in Fig. 5. All states (z,χ) ∈ Q, for which \(z\in Z_{S}=\mathop {\text {pre}}S\) includes a y_n symbol, are immediately identified as winning states. Also, states (z,χ) with z = 〈(u_nw,y_w)〉 turn out to be winning states, because one can apply the control pattern {(u_ne,y)|y ∈ Y } to enforce that the winning state 〈(u_nw,y_w),(u_ne,y_n)〉 is attained by the next transition. Likewise, the initial state is a winning state: by applying the control pattern {(u_nw,y)|y ∈ Y } we either have 〈(u_nw,y_n)〉 or 〈(u_nw,y_w)〉, both known to be winning states by our previous observations. Thus, the supervisory control problem can be solved based on the abstraction. In contrast, if the control objective was to eventually visit the west guard \(G_{\mathsf {y\_w}}\), the provided abstraction is too coarse for a positive result — although intuition suggests that the actual plant can be very well controlled accordingly.

6 Guided refinements of experiments

We now consider the situation where abstraction-based synthesis of a supervisor as discussed in the previous section has failed, i.e., we are given a plant \({\Sigma }=(\mathbb {N}_{0},W,{\mathscr{B}})\) realised by an I/S/- machine P and an abstraction \({\Sigma }_{S}=(\mathbb {N}_{0},W,{\mathscr{B}}_{S})\) obtained from an experiment S on \({\mathscr{B}}\), but applying Algorithm 12 shows that q0∉Qwin for the winning states \(Q_{\text {win}}\subseteq Q\) of the composed state machine P× = (Q,W,λ,{q0}). Provided that we are optimistic that the control problem for the actual plant has a solution, we propose to refine the abstraction and to repeat the synthesis procedure. Referring to Moor and Raisch (1999) and Moor et al. (2002), where the abstraction used is an l-complete approximation, i.e., \(S={\mathscr{B}}|_{[0,l]}\) for some \(l\in \mathbb {N}_{0}\), a refinement can be obtained by substituting l with l + 1. Effectively, this uniformly extends the sampled sequences in length by one more symbol. However, such an extension amounts to testing whether or not the extended sequence is in \(\mathop {\text {pre}}{\mathscr{B}}\), and this test is implemented as a one-step reachability analysis conducted on the original system. Since this is considered computationally expensive, we seek to identify specific sequences \(R\subseteq S\) that are worth the effort and use Eq. 7 to obtain a refinement of S tailored for the synthesis task at hand. The overall abstraction-based approach then becomes an iteration in which we alternate trial synthesis and abstraction refinement.

Algorithm 13

Iterative procedure to synthesise a supervisor for an I/S/- machine P = (X,W,δ,X0), W = U × Y, X = X0, and a past-induced specification Pspec = (Xspec,W,δspec,{xspec0}) with accepting states \(X_{\text {specM}}\subseteq X_{\text {spec}}\).

1) Initialise the experiment \(S\subseteq W^{*}\) by \(S:={\mathscr{B}}|_{[0,0]}\), where \({\mathscr{B}}\) denotes the external behaviour induced by P.

2) Referring to Theorem 7, set up PS to realise the abstraction obtained from S.

3) Run Algorithm 12 on the product P× = PS × Pspec to obtain the winning states Qwin.

4) If the initial state q0 of P× is within Qwin, report the corresponding supervisor and terminate the iteration.

5) Choose refinement candidates \(R\subseteq S\) to obtain a refinement by Eq. 7 to substitute S, and proceed with Step 2.
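The control flow of Steps 1 to 5 can be sketched as a loop over four callbacks. This is a minimal sketch only: the callback names, their signatures, and the `max_rounds` bound are assumptions introduced for illustration (the iteration itself has no such bound), and the toy callbacks at the bottom merely mimic a situation in which synthesis succeeds once all samples have length three.

```python
def iterate_synthesis(S, build_product, solve, choose_candidates, refine, max_rounds=20):
    """Alternate trial synthesis and refinement; S is the initial experiment (Step 1)."""
    for round_no in range(max_rounds):
        product = build_product(S)               # Step 2: abstraction composed with Pspec
        q0, win, supervisor = solve(product)     # Step 3: winning states
        if q0 in win:                            # Step 4: success
            return S, supervisor, round_no
        R = choose_candidates(S, product, win)   # Step 5: refinement candidates
        S = refine(S, R)
    return S, None, max_rounds                   # bound reached (assumed safeguard)


# Toy callbacks (hypothetical): synthesis succeeds once every sample has length >= 3.
def toy_solve(product):
    depth = min(len(s) for s in product)
    win = {"q0"} if depth >= 3 else set()
    return "q0", win, "toy supervisor"


def toy_refine(S, R):
    return (S - R) | {r + (w,) for r in R for w in ("a", "b")}


S_final, supervisor, rounds = iterate_synthesis(
    {("a",), ("b",)},
    build_product=lambda S: S,
    solve=toy_solve,
    choose_candidates=lambda S, product, win: set(S),
    refine=toy_refine,
)
print(rounds, supervisor, len(S_final))
```

Keeping the choice of candidates in a separate callback reflects that Step 5 is the only place where the iteration leaves freedom to the designer.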

In the case that the procedure terminates at Step 4, we refer to the discussion of the previous section and recall that the supervisor not only solves the synthesis problem for the abstraction PS but also for the actual plant P. Otherwise, the experiment is refined in Step 5 for the subsequent trial synthesis. The proposed iteration may fail to terminate regardless of the choice for the refinement in Step 5. This is to be expected: since the verification of language inclusion is known to be only semi-decidable even for restricted classes of hybrid systems, the synthesis problem cannot be decidable either. However, we will propose a refinement scheme for Step 5 that ensures termination under the hypothesis of the existence of some experiment for which synthesis succeeds. We are now left to set up sensible refinement candidates R to implement Step 5.

For our analysis, we inspect the composed system P× = (Q,W,λ,{q0}) := PS × Pspec, with lifted marked states \(Q_{\mathrm {M}}\subseteq Q\) for the specification acceptance condition and winning states \(Q_{\text {win}}\subseteq Q\) obtained by the synthesis algorithm. A refinement obtained by extending specific samples s ∈ S then corresponds to extending the transition relation λ at states q = (z,χ) ∈ ZS × Xspec = Q with z = s. Hence, our inspection of P× focuses attention on states in

\[Q_{\text {leaf}}:=\{(z,\chi )\in Q\,|\,z\in S\}, \tag{30}\]

and we will identify two classes of states that in turn characterise sequences s ∈ S that are not worth a refinement. For our formal argument, we consider two more experiments \(S^{\prime }\) and \(S^{\prime \prime }\) on \({\mathscr{B}}\) such that \(S\le S^{\prime }\le S^{\prime \prime }\). Here, we assume that \(S^{\prime \prime }\) is a successful refinement of S in the sense that there exists a supervisor \(f^{\prime \prime }\) such that the closed-loop \({\mathscr{B}}_{S^{\prime \prime },f^{\prime \prime }}\) satisfies the specification. We then construct \(S^{\prime }\) to refine S in the same way as \(S^{\prime \prime }\) except for avoiding refinement at a specific sequence s ∈ S, see Fig. 8. Technically, we let

\[S^{\prime }:=\{s\}\cup \{r\in S^{\prime \prime }\,|\,s\not \in \mathop {\text {pre}}r\} \tag{31}\]

to observe that \(S^{\prime }\) is indeed an experiment on \({\mathscr{B}}\) and that \(S\le S^{\prime \prime }\) implies \(S\le S^{\prime }\le S^{\prime \prime }\). We then show that \(S^{\prime }\) is also a successful refinement of S and thereby establish that the synthesis problem can be solved without refinements at the previously identified sequence s ∈ S.

Fig. 8 Intermediate experiment \(S^{\prime }\) with \(S\le S^{\prime }\le S^{\prime \prime }\) by not refining at s ∈ S. Both experiments S and \(S^{\prime \prime }\) are shown as “barriers” (dark green) in the computational tree \(\mathop {\text {pre}}{\mathscr{B}}\) (light green). The intermediate experiment \(S^{\prime }\) lies between S and \(S^{\prime \prime }\) and consists of (a) the sequence s and (b) all sequences \(r\in S^{\prime \prime }\) except for extensions of s.
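The construction described in the figure caption can be sketched with finite sets of tuples. This is a minimal sketch, assuming that "extension of s" means a sequence that has s as a prefix; the function names and the two-symbol example are hypothetical.

```python
def is_prefix(s, r):
    """True if the sequence s is a prefix of the sequence r."""
    return r[:len(s)] == s


def relaxed_refinement(S2, s):
    """Keep s unrefined: s itself plus all samples of S2 that do not extend s."""
    return {s} | {r for r in S2 if not is_prefix(s, r)}


S = {("a",), ("b",)}
S2 = {("a", "a"), ("a", "b"), ("b", "a"), ("b", "b")}   # plays the role of S''
S1 = relaxed_refinement(S2, ("a",))                        # plays the role of S'
print(sorted(S1))
```

In the example, the sample ("a",) is carried over from S unchanged, whereas ("b",) is refined exactly as in the finer experiment.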

For the remainder of this section, we refer to the synthesis problems based on the experiments \(S^{\prime }\) and \(S^{\prime \prime }\) by the same notational conventions as introduced for S, i.e., we denote the associated abstractions \({\Sigma }_{S^{\prime }}=(\mathbb {N}_{0},W,{\mathscr{B}}_{S^{\prime }})\) and \({\Sigma }_{S^{\prime \prime }}=(\mathbb {N}_{0},W,{\mathscr{B}}_{S^{\prime \prime }})\), the realisations thereof \(P_{S^{\prime }}=(Z_{S^{\prime }},W,\delta _{S^{\prime }},\{\epsilon \})\) and \(P_{S^{\prime \prime }}=(Z_{S^{\prime \prime }},W,\delta _{S^{\prime \prime }},\{\epsilon \})\), the composed state machines \(P^{\prime }_{\times }=(Q^{\prime },W,\lambda ^{\prime },\{q_{0}\})\) and \({P}^{\prime \prime }_{\times }=(Q^{\prime \prime },W,\lambda ^{\prime \prime },\{q_{0}\})\), the lifted marked states \(Q^{\prime }_{\mathrm {M}}\) and \({Q}^{\prime \prime }_{\mathrm {M}}\), and the winning states \(Q^{\prime }_{\text {win}}\) and \(Q^{\prime \prime }_{\text {win}}\) as obtained by Algorithm 12, respectively.

6.1 Winning states

Once the abstraction ΣS has generated a finite sequence s that drives the composed state machine P× to a winning state, there exists a supervisor that enforces the specification from then on. Intuitively, for such states, no refinement is necessary.

For a formal argument, fix any \(z=s\in S \subseteq Z_{S}\) such that

\[\{z\}\times X_{\text {spec},s}\subseteq Q_{\text {win}}, \tag{32}\]

where

Recall that we have synthesised a supervisor f that enforces the conditional specification (29) with \({\mathscr{B}}_{S}\) as the plant. Moreover, by hypothesis, there exists a supervisor \(f^{\prime \prime }\) for the refined abstraction \({\mathscr{B}}_{S^{\prime \prime }}\) such that the closed loop satisfies \({\mathscr{B}}_{S^{\prime \prime },f^{\prime \prime }}\subseteq {\mathscr{B}}_{\text {spec}}\). In order to establish the existence of a supervisor that enforces the specification for \({\mathscr{B}}_{S^{\prime }}\) with the relaxed refinement \(S^{\prime }\) in Eq. 31, we use the candidate \(f^{\prime }:W^{*} \rightarrow \varGamma \) defined by

\[f^{\prime }(r):=\begin{cases} f(\langle s,t\rangle ) & \text {if } r=\langle u,s,t\rangle \text { with } u,t\in W^{*} \text { and } |u| \text { minimal},\\ f^{\prime \prime }(r) & \text {else,}\end{cases} \tag{34}\]

i.e., \(f^{\prime }\) applies the same control patterns as \(f^{\prime \prime }\) until the sequence s has been observed and, from then on, behaves as f in ignorance of any symbols generated before s. The intuition here is that if the closed loop formed by \({\mathscr{B}}_{S^{\prime }}\) and \(f^{\prime }\) happens to not generate s, then it evolves within \({\mathscr{B}}_{S^{\prime \prime }}\) and, hence, \(f^{\prime \prime }\) enforces the specification. If, on the other hand, s is generated, this corresponds to a winning state of P× and, hence, f enforces the specification. We obtain the following lemma.
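The switching behaviour of the candidate supervisor can be sketched as a higher-order function. This is a minimal sketch under assumptions: supervisors are modelled as Python functions from tuples of symbols to control patterns, the names `combine` and `first_occurrence` are hypothetical, and the two toy supervisors only tag their argument so that the switch becomes visible.

```python
def first_occurrence(r, s):
    """Index of the first occurrence of s as a contiguous block in r, or None."""
    n = len(s)
    for i in range(len(r) - n + 1):
        if r[i:i + n] == s:
            return i
    return None


def combine(f, f2, s):
    """Behave as f2 until s has been observed; then behave as f on the past from s on."""
    def f_prime(r):
        i = first_occurrence(r, s)
        if i is None:
            return f2(r)
        return f(r[i:])  # symbols generated before s are ignored
    return f_prime


# Toy supervisors (hypothetical) that reveal which one was consulted.
f = lambda r: ("f", r)
f2 = lambda r: ("f2", r)
f_prime = combine(f, f2, s=("b",))
print(f_prime(("a", "a")), f_prime(("a", "b", "c")))
```

Using the first occurrence of s corresponds to picking the shortest sequence u such that 〈u,s〉 is a prefix of the observed past.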

Lemma 14

Consider three experiments \(S\le S^{\prime }\le S^{\prime \prime }\) over the finite signal range W = U × Y with the respective associated abstractions \({\Sigma }_{S}=(\mathbb {N}_{0},W,{\mathscr{B}}_{S})\), \({\Sigma }_{S^{\prime }}=(\mathbb {N}_{0},W,{\mathscr{B}}_{S^{\prime }})\) and \({\Sigma }_{S^{\prime \prime }}=(\mathbb {N}_{0},W,{\mathscr{B}}_{S^{\prime \prime }})\), and with respective past-induced realisations PS, \(P_{S^{\prime }}\) and \(P_{S^{\prime \prime }}\) given by Theorem 7. Assume that \(S^{\prime }\) relates to S and \(S^{\prime \prime }\) as in Eq. 31 for some s ∈ W∗ that complies with Eq. 32. If there exists a supervisor \(f^{\prime \prime }\) such that \({\mathscr{B}}_{S^{\prime \prime },f^{\prime \prime }}\subseteq {\mathscr{B}}_{\text {spec}}\), then there also exists a supervisor \(f^{\prime }\) such that \({\mathscr{B}}_{S^{\prime },f^{\prime }}\subseteq {\mathscr{B}}_{\text {spec}}\).

Proof

We prove the existence by the candidate supervisor \(f^{\prime }\) given in Eq. 34. To show \({\mathscr{B}}_{S^{\prime },f^{\prime }}\subseteq {\mathscr{B}}_{\text {spec}}\), pick any \(\mathbf {w}\in {\mathscr{B}}_{S^{\prime },f^{\prime }}\). We distinguish two cases.

First, assume that \(\langle u, s\rangle \not \in \mathop {\text {pre}}\mathbf {w}\) for all u ∈ W∗. We then have \(f^{\prime }(r)=f^{\prime \prime }(r)\) for all \(r\in \mathop {\text {pre}}\mathbf {w}\). Now pick arbitrary \(k\in \mathbb {N}_{0}\) and refer to \(\mathbf {w}\in {\mathscr{B}}_{S^{\prime }}\) for the choice of \(l\in \mathbb {N}_{0}\) such that \((\sigma ^{k}\mathbf {w})|_{[0,l)}\in S^{\prime }\). Here, the case hypothesis implies \((\sigma ^{k}\mathbf {w})|_{[0,l)}\neq s\) and, hence, \((\sigma ^{k}\mathbf {w})|_{[0,l)}\in S^{\prime \prime }\). Since \(k\in \mathbb {N}_{0}\) was arbitrary, we obtain \(\mathbf {w}\in {\mathscr{B}}_{S^{\prime \prime }}\) to conclude with \(\mathbf {w}\in {\mathscr{B}}_{S^{\prime \prime },f^{\prime \prime }}\subseteq {\mathscr{B}}_{\text {spec}}\).

For the second case, we pick the shortest sequence u ∈ W∗ such that \(\langle u, s\rangle \in \mathop {\text {pre}}\mathbf {w}\). We then have \((\sigma ^{|u|}\mathbf {w})(k) = \mathbf {w}(|u|+k)\in f^{\prime }(\mathbf {w}|_{[0,|u|+k)})=f((\sigma ^{|u|}\mathbf {w})|_{[0,k)})\) for all k ≥|s|. By \(\mathbf {w}\in {\mathscr{B}}_{S^{\prime },f^{\prime }}\subseteq {\mathscr{B}}_{S}\) there uniquely exist state trajectories \(\mathbf {z}\colon \mathbb {N}_{0}\rightarrow Z_{S}\) and \(\mathbf {x}\colon \mathbb {N}_{0}\rightarrow X_{\text {spec}}\) such that (w,(z,x)) is in the full behaviour induced by P×. In particular, we have that \(\mathbf {x}(|u|+|s|)\in X_{\text {spec},s}\). By time invariance, we obtain \(\mathbf {w^{\prime }}:=\sigma ^{|u|}\mathbf {w}\in \sigma ^{|u|} {\mathscr{B}}_{S}\subseteq {\mathscr{B}}_{S}\) and denote by \(\mathbf {z^{\prime }}\colon \mathbb {N}_{0}\rightarrow Z_{S}\) the unique state trajectory such that \((\mathbf {w^{\prime }},\mathbf {z^{\prime }})\) is in the full behaviour induced by PS. Here, we observe that \(\mathbf {z^{\prime }}(|s|)=z=s\). With \(\mathbf {x^{\prime }}:=\sigma ^{|u|}\mathbf {x}\), we obtain a state trajectory \((\mathbf {z^{\prime }},\mathbf {x^{\prime }})\) with \(((\mathbf {z^{\prime }}(k),\mathbf {x^{\prime }}(k)),\mathbf {w^{\prime }}(k),(\mathbf {z^{\prime }}(k+1),\mathbf {x^{\prime }}(k+1)))\in \lambda \) for all \(k\in \mathbb {N}_{0}\). By the choice of s in Eq. 32, we observe \((\mathbf {z^{\prime }}(|s|),\mathbf {x^{\prime }}(|s|))\in Q_{\text {win}}\) and, referring to the case hypothesis, we also have \(\mathbf {w^{\prime }}(k)\in f(\mathbf {w^{\prime }}|_{[0,k)})\) for all k > |s|. Therefore, there exist infinitely many k > |s| such that \((\mathbf {z^{\prime }}(k),\mathbf {x^{\prime }}(k))\in Q_{\mathrm {M}}\) and, hence, \(\mathbf {x}(|u|+k)=\mathbf {x^{\prime }}(k)\in X_{\text {specM}}\). This implies \(\mathbf {w}\in {\mathscr{B}}_{\text {spec}}\) and concludes the proof of \({\mathscr{B}}_{S^{\prime },f^{\prime }}\subseteq {\mathscr{B}}_{\text {spec}}\). □

6.2 Failing states

Denote \(Q_{\text {fail}}\subseteq Q_{\text {leaf}}\) the set of failing states, i.e., states from which the accepting states QM are not reachable:

\[Q_{\text {fail}}:=\{q\in Q_{\text {leaf}}\,|\,Q_{\mathrm {M}}\text { is not reachable from }q\text { in }P_{\times }\}. \tag{35}\]

Obviously, a state q ∈ Qfail cannot be a winning state and, intuitively, it cannot become a winning state in any refinement. Therefore, a refinement at a failing state is not expected to be relevant for any solution of the control problem.
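Failing states can be identified by a backward reachability search from the accepting states. The following is a minimal sketch under assumptions: the transition relation is given as a dictionary from (state, symbol) to successor, an accepting state counts as reaching itself in zero steps, and the state names form a hypothetical toy structure rather than an abstraction from the paper.

```python
def failing_states(leaf_states, marked, trans):
    """Leaf states from which no marked state is reachable via `trans`."""
    # predecessor map of the transition relation
    pred = {}
    for (q, _symbol), p in trans.items():
        pred.setdefault(p, set()).add(q)
    # backward search from the marked states
    can_reach = set(marked)
    stack = list(marked)
    while stack:
        p = stack.pop()
        for q in pred.get(p, ()):
            if q not in can_reach:
                can_reach.add(q)
                stack.append(q)
    return {q for q in leaf_states if q not in can_reach}


# Hypothetical toy structure: "stuck" can never reach the accepting state "goal".
trans = {
    ("init", ("u_nw", "y_n")): "goal",
    ("init", ("u_nw", "y_w")): "west",
    ("init", ("u_ne", "y_e")): "stuck",
    ("west", ("u_ne", "y_n")): "goal",
    ("west", ("u_nw", "y_w")): "west",
    ("stuck", ("u_nw", "y_e")): "stuck",
}
fail = failing_states(leaf_states={"west", "stuck"}, marked={"goal"}, trans=trans)
print(fail)
```

Since the search ignores controllability altogether, a state reported here is lost irrespective of the control patterns applied, which matches the intuition that refining at such a state cannot help.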

For our formal argument, fix any \(z=s\in S\subseteq Z_{S}\) with

\[\{z\}\times X_{\text {spec},s}\subseteq Q_{\text {fail}}, \tag{36}\]

where Xspec,s is defined in Eq. 33. By the following proposition, we associate with s a set of failing states in the composed state machine \({P}^{\prime \prime }_{\times }\) based on the refinement \(S^{\prime \prime }\).

Proposition 15

Consider two experiments \(S\le S^{\prime \prime }\) over W = U × Y with the respective associated abstractions ΣS and \({\Sigma }_{S^{\prime \prime }}\), and with the respective past-induced realisations PS and \(P_{S^{\prime \prime }}\) given by Theorem 7. Referring to the composed state machine \({P}^{\prime \prime }_{\times } = P_{S^{\prime \prime }}\times P_{\text {spec}}\), let

\[Q^{\prime \prime }_{\text {fail},s}:=\{(\langle s,t\rangle ,\chi )\in Q^{\prime \prime }\,|\,t\in W^{*},\ \chi \in X_{\text {spec}}\} \tag{37}\]

for some s ∈ W∗ that complies with Eqs. 35 and 36. Then any trajectory \((\mathbf {w^{\prime \prime }},\mathbf {x^{\prime \prime }})\) of the full behaviour induced by \({P}^{\prime \prime }_{\times }\) that passes \(Q^{\prime \prime }_{\text {fail},s}\) does not satisfy the specification, i.e., if there exists \(k\in \mathbb {N}_{0}\) with \(\mathbf {x^{\prime \prime }}(k)\in Q^{\prime \prime }_{\text {fail},s}\) then \(\mathbf {w^{\prime \prime }}\not \in {\mathscr{B}}_{\text {spec}}\).

Proof

Consider a state \(\xi \in Q^{\prime \prime }_{\text {fail},s}\), i.e., we have ξ = (〈s,t〉,χ) for some t ∈ W∗ and some χ ∈ Xspec. For a contradiction, assume that there exists a trajectory \((\mathbf {w^{\prime \prime }},\mathbf {x^{\prime \prime }})\) from the full behaviour induced by \({P}^{\prime \prime }_{\times }\) that first passes ξ and thereafter passes \({Q}^{\prime \prime }_{\mathrm {M}}\). We can then find u, v ∈ W∗, v≠𝜖, such that \(\langle u,s,t,v\rangle \in \mathop {\text {pre}}\mathbf {w^{\prime \prime }}\), \(\lambda ^{\prime \prime }_{0}(\langle u,s,t\rangle )=\xi \), and \(\lambda ^{\prime \prime }_{0}(\langle u,s,t,v\rangle )\in {Q}^{\prime \prime }_{\mathrm {M}}\). We denote the respective specification components of the state by χu = δspec,0(u), χus = δspec,0(〈u,s〉), χust = δspec,0(〈u,s,t〉) = χ and χustv = δspec,0(〈u,s,t,v〉) ∈ XspecM. By \(\mathbf {w^{\prime \prime }}\in {\mathscr{B}}_{S^{\prime \prime }}\subseteq {\mathscr{B}}_{S}\), we observe χus ∈ Xspec,s. By \(\sigma ^{k}{\mathscr{B}}_{S^{\prime \prime }}\subseteq {\mathscr{B}}_{S^{\prime \prime }}\subseteq {\mathscr{B}}_{S}\), for any \(k\in \mathbb {N}_{0}\), we conclude that \(\mathbf {w}:=\sigma ^{|u|}\mathbf {w^{\prime \prime }}\in {\mathscr{B}}_{S}\). Hence, we can choose \(\mathbf {z}:\mathbb {N}_{0}\rightarrow Z_{S}\) such that (w,z) is in the full behaviour induced by PS. Since the transition relation δspec is full, we can use w to generate a state trajectory for any initial state. In particular, there exists \(\mathbf {x}:\mathbb {N}_{0}\rightarrow X_{\text {spec}}\) such that x(0) = χu, x(|s|) = χus, x(|s| + |t|) = χust, x(|s| + |t| + |v|) = χustv and (x(k),w(k),x(k + 1)) ∈ δspec for all \(k\in \mathbb {N}_{0}\). Therefore, (z(|s| + |t| + |v|),x(|s| + |t| + |v|)) is reachable from (z(|s|),x(|s|)) by transitions from λ. We observe z(|s|) = s = z, and, hence, (z(|s|),x(|s|)) = (z,χus) ∈ Qfail. This constitutes a contradiction with x(|s| + |t| + |v|) = χustv ∈ XspecM. Therefore, no trajectory \((\mathbf {w^{\prime \prime }},\mathbf {x^{\prime \prime }})\) from the full behaviour induced by \({P}^{\prime \prime }_{\times }\) that passes ξ can pass \({Q}^{\prime \prime }_{\mathrm {M}}\) thereafter. □