Abstract

We study a pricing problem with finite inventory and semi-parametric demand uncertainty. Demand is a price-dependent Poisson process whose mean is the product of buyers’ arrival rate, which is a constant \(\lambda \), and buyers’ purchase probability \(q(p)\), where p is the price. The seller observes arrivals and sales, and knows neither \(\lambda \) nor \(q\). Based on a non-parametric maximum-likelihood estimator of \((\lambda ,q)\), we construct an estimator of mean demand and show that as the system size and number of prices grow, it is asymptotically more efficient than the maximum likelihood estimator based only on sale data. Based on this estimator, we develop a pricing algorithm paralleling (Besbes and Zeevi in Oper Res 57:1407–1420, 2009) and study its performance in an asymptotic regime similar to theirs: the initial inventory and the arrival rate grow proportionally to a scale parameter n. If \(q\) and its inverse function are Lipschitz continuous, then the worst-case regret is shown to be \(O((\log n / n)^{1/4})\). A second model considered is the one in Besbes and Zeevi (2009, Section 4.2), where no arrivals are involved; we modify their algorithm and improve the worst-case regret to \(O((\log n / n)^{1/4})\). In each setting, the regret order is the best known, and is obtained by refining their proof methods. We also prove an \(\Omega (n^{-1/2})\) lower bound on the regret. Numerical comparisons to state-of-the-art alternatives indicate the effectiveness of our arrivals-based approach.

Similar content being viewed by others

1 Introduction

1.1 Background

Pricing and revenue management are important problems in many industries. Talluri and van Ryzin (2005) discuss instances of this problem that range over many industries, including fashion and retail, air travel, hospitality, and leisure. Early literature assumes the relationship between the mean demand and the price is known to the seller (Gallego and van Ryzin 1994). In practice, decision makers seldom have such knowledge. Pricing and demand learning is a stream of literature concerned with pricing under incomplete knowledge of the demand process, which is estimated. A standard model is that whenever the price is set at p, the demand is a Poisson process of rate \(\Lambda (p)\), which is called the demand function (Besbes and Zeevi 2009, 2012; Wang et al. 2014).

Estimation methods are broadly divided into parametric and non-parametric. The former assume a certain functional form and carry mis-specification risk, while the latter make weaker assumptions and tend to alleviate this risk (Besbes and Zeevi 2009). For the single-product problem with finite inventory, prominent works are Besbes and Zeevi (2009) and Wang et al. (2014). In Besbes and Zeevi (2009), a learning phase during which certain test prices are applied allows estimating the demand function via the realized demand (sales). This estimate leads to a “good” fixed-price policy that is applied during the remainder of the selling horizon, the so-called exploitation phase. Performance is measured by the worst-case regret across a class of demand functions, where regret is the expected revenue loss relative to that achievable by acting optimally under full knowledge of the demand function.

The following setting is the main focus of this paper. Potential buyers arrive according to a Poisson process of rate \(\lambda \), regardless of the price on offer; and whenever the price is p, an arriving customer purchases with probability \(q(p)\) independently of everything else. The pair \((\lambda ,q)\) are unknown to the seller.

1.2 Overview of the main contributions

A high-level summary of our contribution is as follows:

-

1.

Starting from an arrival rate \(\lambda \) and a purchase probability (function) \(q(\cdot )\) we define a class of demand models such that whenever the price is p, the demand is a Poisson process of rate \(\lambda q(p)\), which matches the concept of demand function in the standard model. The essential deviation from the standard model is that we postulate the existence of the scaling factor \(\lambda \) that is unknown in addition to \(q(\cdot )\).

-

2.

Assuming that both arrivals and sales are observed, we construct a maximum-likelihood estimator (MLE) of \((\lambda ,\varvec{q})\), where \(\varvec{q}=(q(p_1),\ldots ,q(p_{\kappa }))\) for arbitrary (distinct) prices \(p_1,\ldots ,p_{\kappa }\). The product of estimated \(\lambda \) and \(\varvec{q}\) is our estimator of mean demand, and it is shown to be asymptotically more efficient than the maximum-likelihood estimator based only on sale data (sales-only estimator) (Besbes and Zeevi 2009; Wang et al. 2014; Lei et al. 2014). This development focuses primarily on mean-square estimation efficiency.

-

3.

We work with a model class \(\mathcal{L}\) whose essential requirement is that \(q(\cdot )\) and its inverse are Lipschitz continuous. Besbes and Zeevi (2009, Section 4.2) work with a class \(\mathcal{L}_{BZ}\) where the demand function and its inverse are Lipschitz continuous. Wang et al. (2014) and Lei et al. (2014) work with a smaller class, \(\mathcal{L}_{W}\), where the demand function is twice differentiable. Class \(\mathcal{L}\) is smaller than \(\mathcal{L}_{BZ}\), but it includes models outside \(\mathcal{L}_{W}\). Allowing an inventory constraint, we develop a policy based on the estimated \(\lambda \) and \(\varvec{q}\), and a counterpart based on the sales-only estimator. These policies’ worst-case regret is shown to be \(O(\log n/n)^{1/4}\), against \(\mathcal{L}\) (Theorem 1) and against \(\mathcal{L}_{BZ}\) (Theorem 2), respectively. The convergence rate improves slightly that in Besbes and Zeevi (2009), and is the best known in each case.

-

4.

We provide a lower bound on regret (Theorem 3). It is closely related to existing ones under the standard model (i.e., without an arrival rate); specifically, Wang et al. (2014, Theorem 2), and to a lesser extent Besbes and Zeevi (2012, Theorem 2) (discrete finite price set) and Broder and Rusmevichientong (2012, Theorem 3.1) (discrete-time pricing). Our bound does not immediately follow from any of them because their set of admissible policies does not contain ours, due to our allowing the price to depend on the arrival history (in addition to the sale history).

The remainder of this paper Section 1.3 reviews related literature. Section 2 introduces our model and formulates the problem. Section 3 presents the estimation and pricing methods. Section 4 analyzes the estimation problem. Section 5 analyzes the (worst-case) regret. Section 5.3 contains the lower bound on regret. Section 6 compares numerically against alternative methods. Section 8 contains selected proofs.

1.3 Related literature

The literature on pricing strategies is vast. We refer to Bitran and Caldentey (2003) and Talluri and van Ryzin (2005) for comprehensive reviews, and to den Boer (2015) for a more recent survey. Gallego and van Ryzin (1994) characterizes optimal pricing policies and develops an upper bound to the optimal revenue via a full-information deterministic relaxation, all under the assumption that the demand function is known. More recent literature addresses pricing problems with unknown demand function. In many works, the demand process is known up to a finite number of parameters. In Lin (2006), Araman and Caldentey (2009), and Farias and Van Roy (2010), there is a single unknown parameter representing the market size. den Boer and Keskin (2019) allow a discontinuous demand function, but they restrict it parametrically in each continuity interval.

Well-studied is the case of a single product and no inventory constraint (Broder and Rusmevichientong 2012; den Boer and Zwart 2014; Besbes and Zeevi 2015). Besbes and Zeevi (2015) show that pricing algorithms based on a mis-specified linear model of the demand function can perform well, under conditions. Keskin and Zeevi (2014) provide general sufficient conditions for a pricing policy to achieve asymptotic regret optimality when the demand function is linear. The coordination of price and inventory decisions is a related area; Chen et al. (2019) employ non-parametric demand learning methods that bear resemblance to ours, but notable restrictions are that unsatisfied demand is backlogged and the replenishment lead time is negligible.

Closely related to our work are Besbes and Zeevi (2009), Wang et al. (2014) and Lei et al. (2014). Besbes and Zeevi (2009, Section 4.2) define a model class \(\mathcal{L}_{BZ}\) where the demand function and its inverse are Lipschitz continuous, and achieve worst-case regret \(O(n^{-1/4}(\log n)^{1/2})\) against this class; they also prove an \(\Omega (n^{-1/2})\) lower bound on the regret of any admissible policy. Wang et al. (2014) and Lei et al. (2014) work with a smaller classes in which the demand function is smooth (twice-differentiable everywhere). Wang et al. (2014) iterate over shrinking price intervals, all of which contain the optimal static price under the deterministic relaxation (Gallego and van Ryzin 1994); the time spent learning and the number of test prices are controlled carefully as functions of the iteration count; the worst-case regret is \(O(n^{-1/2} (\log n)^{4.5})\). Lei et al. (2014) develop iterative algorithms that also control carefully key parameters; their worst-case regret is \(O(n^{-1/2})\). These methods first estimate the order relation between the unconstrained maximizer of the revenue-rate function and the clearance price (Gallego and van Ryzin 1994); this guides the onward choice of parameters. By excluding a non-differentiable demand function, these methods are not directly comparable to ours.

This paper has some connections to the continuum-armed bandit literature [e.g., Auer et al. (2002), Kleinberg (2004), Cope (2009)], but a clear distinguishing feature here is the presence of an inventory constraint. A recent work that addresses the inventory constraint is Babaioff et al. (2015).

2 Problem formulation and background

Model of Demand and Basic Assumptions A monopolist sells a single product. The selling horizon (period) is \(T > 0\); after this point sales are discontinued, and any unsold products have no value. Product demand is as follows:

Assumption 1

-

(a)

Customers arrive according to a Poisson process of rate \(\lambda \). Whenever the price is p, an arriving customer purchases with probability \(q(p) \in [0,1]\) independently of everything else.

-

(b)

The seller observes arrivals and sales throughout the selling period.

The primitives \((\lambda ,q)\) are unknown to the seller. The set of feasible prices is \([\underline{p},\overline{p}] \cup p_{\infty }\), where \(0< \underline{p}< \overline{p}< \infty \), and \(p_{\infty }> 0\) is a price that “turns off” demand, i.e., \(q(p_{\infty })=0\). The purchase probability function \(q(p)\) is assumed to be non-increasing and have an inverse. The revenue rate function per arrival, \(r(p) := pq(p)\), is assumed to be concave. Clearly, the demand process generated through any pair \((\lambda ,q)\) is Poisson, with rate function \(\Lambda (p) = \lambda q(p)\) whenever the price is p. Gallego and van Ryzin (1994), Besbes and Zeevi (2009), Wang et al. (2014) impose similar assumptions directly on the Poisson rate function (which is then called a regular demand function); we impose them on \(q\) instead. In analyzing the regret, we need:

Assumption 2

For some finite positive constants \(\underline{\lambda }\), \(\overline{\lambda }\), \(\underline{M}\), \(\overline{M}\), \(m_a\):

-

(a)

The arrival rate is bounded away from zero and infinity: \(\underline{\lambda }\le \lambda \le \overline{\lambda }\).

-

(b)

The purchase probability and its inverse are both Lipschitz functions. Specifically, for all \(p,p'\in [\underline{p},\overline{p}]\), we have \(\underline{M}|p-p'| \le |q(p) - q(p')| \le \overline{M}|p-p'|\).

-

(c)

The revenue rate can be made positive: \(\max \{pq(p): p\in [\underline{p},\overline{p}]\} \ge m_a\).

Let \(\mathcal{L}= \mathcal{L}(\overline{\lambda },\underline{\lambda },\underline{M},\overline{M},m)\) be the class of demand models satisfying Assumptions 1(a) and 2. Any bounded demand function \(\Lambda \) is representable in \(\mathcal{L}\) as a pair (\(\overline{\Lambda },\Lambda (\cdot )/\overline{\Lambda }\)) for any \(\overline{\Lambda }\ge \sup _p \Lambda (p)\); moreover, smoothness properties of \(q\) and \(\overline{\Lambda }q\) are identical.

Besbes and Zeevi (2009) (Assumption 1) define a model class \(\mathcal{L}_{BZ}\) requiring Lipschitz continuity of the demand function and its inverse; Wang et al. (2014) define a smaller class \(\mathcal{L}_{W}\) requiring, additionally, twice-differentiability.

Class \(\mathcal{L}\) is strictly smaller than \(\mathcal{L}_{BZ}\), and obeys no inclusion relation to \(\mathcal{L}_{W}\). Indeed, if \((\lambda ,q)\) and \((\lambda ',q')\) satisfy \(\lambda ' \ne \lambda \) and \(\lambda 'q'(p) = \lambda q(p)\) for all p, and if these pairs cover the selling horizon non-trivially (each applying a positive time period), then they induce the time-invariant demand function \(\lambda q(p)\); this model is not representable in \(\mathcal{L}\), and yet is representable in \(\mathcal{L}_{BZ}\) and \(\mathcal{L}_{W}\) under suitable conditions on \(q\), \(q'\). On the other hand, a pair \((\lambda , q)\) such that \(q\) is Lipschitz but not twice-differentiable everywhere induces a model with demand function \(\lambda q\) that is inside \(\mathcal{L}\) and outside \(\mathcal{L}_{W}\), since \(\lambda q\) inherits the non-differentiability of \(q\).

Estimation problem In studying estimation efficiency, we need:

Assumption 3

No stock out occurs during the estimation (learning) phase.

This assumption, which is commonly made (Broder and Rusmevichientong 2012; den Boer and Zwart 2014; Besbes and Zeevi 2015; Keskin and Zeevi 2014), can probably be relaxed, or by-passed by showing that a stock-out is a rare event. On this point, in Lemma 1 we show that the probability of a stock-out vanishes fast when the system size increases and the fraction of time spent learning vanishes sufficiently fast. Since the efficiency analysis is lengthy even under Assumption 3, we do not attempt to relax this assumption.

The efficiency of the MLE-based demand estimator relative to the sales-only counterpart (based on the same learning time and number of test prices) is studied on a sequence of estimation problems indexed by n, with arrival rate \(\lambda _n\), learning time unity, and number of test prices \(\kappa _n\). We assume:

That is, \(r_n\), the mean per-price-arrival-count, or less formally the “information per price” grows large. The second condition is aimed at keeping the bias (present in the MLE only) negligible relative to variance. In studying the efficiency in estimating the function \(q(\cdot )\) (not merely \(q(p)\) for fixed p) we need:

Assumption 4

The purchase-probability \(q(\cdot )\) is continuous almost everywhere on \([\underline{p},\overline{p}]\); that is, the set of its discontinuities has measure zero.

Pricing problem Let \(x > 0\) denote the level of inventory at the start of the selling period. Let \((p_t: 0 \le t\le T)\) denote the price process (assumed to take values in \([\underline{p},\overline{p}] \cup p_{\infty }\) and to have right-continuous paths with left limits). Let \(N= (N(t): t \ge 0 )\) be a unit-rate Poisson process. Then, the cumulative demand up to time t has the representation \( D_t = N\big ( \lambda \int _0^t q(p_s) \mathrm{d}s \big ) \) by Assumption 1(a). A process \((p_t)\) is said to be non-anticipating if its value at any time t is only allowed to depend on past prices \(\{p_u: u\in [0,t)\}\), past arrival counts \(\{A_u: u\in [0,t)\}\), and past demand counts \(\{D_u: u\in [0,t)\}\). In other words, the process \((p_t)\) is adapted to the filtration \(\mathcal{F}_t = \sigma (p^\pi _u, A_u, D_u: 0 \le u < t)\). A pricing policy \(\pi \) is a method of constructing a non-anticipating price process, which we then denote \((p^\pi _t: 0\le t\le T)\). In particular, \( D^\pi _t := N\big ( \lambda \int _0^t q(p^\pi _s) \mathrm{d}s \big ) \) is the cumulative demand up to time t under the policy \(\pi \). A policy \(\pi \) is said to be admissible if the induced price process \(p^\pi \) is non-anticipating and \( \int _0^T \mathrm{d}D^\pi _s \le x \ \text{ with } \text{ probability } \text{1. } \) Let \(\mathcal{P}\) denote the set of admissible policies. The seller’s problem is: choose \(\pi \in \mathcal{P}\) to maximize the mean revenue \( J^\pi = J^\pi (x,T|\lambda ,q) := {\mathbb E}_{\lambda ,q} \int _0^T p^\pi _s \mathrm{d}D^\pi _s , \) where \({\mathbb E}_{\lambda ,q}\) denotes expectation with respect to \((\lambda ,q)\).

Full-information deterministic relaxation Here the stochastic elements above are replaced by their means, giving rise to the problem

In line with the literature, our motivation is that the optimal solution to (2) performs well for the original (stochastic) problem (Gallego and van Ryzin 1994, Theorem 3). The solution prices at \(p^D:= \max \{p^u,p^c\}\) (while inventory is positive), where \(p^u:= \text {arg max\;}_{p}\{pq(p)\}\) is the unconstrained maximizer; and \(p^c:= \text {arg min\;}_p \left| \lambda q(p)-x/T\right| \) is the clearance price. We define the regret of \(\pi \) as \(1 - J^\pi /J^D\); this is conservative, since \(\sup _{\pi \in \mathcal{P}} J^\pi (x,T|\lambda ,q) \le J^D(x,T|\lambda ,q)\) (Gallego and van Ryzin 1994, [Theorem 1]).

Large-system performance analysis In the pricing problem, given a primitive \((\lambda ,q) \in \mathcal{L}\), we consider a sequence of problems indexed by \(n=1,2,\ldots \) so that in the n-th problem, the initial inventory is \(x_n = n x\); the arrival rate is \(\lambda _n = n\lambda \); the purchase-probability function \(q\) and the horizon T are fixed (same for all n). Our main objective is to study the regret as \(n\rightarrow \infty \).

Model discussion Our assumption of existence of a price-independent arrival rate is quite restrictive; in e-commerce, for example, many customers are channeled to a store through a price-comparison service, and thus one would expect the arrival rate to increase as the price is lowered. On the other hand, it may be reasonable for some physical (brick and mortar) stores when customers exhibit loyalty, that is, the preference to shop at a particular store (e.g., a local grocery or convenience store). In this case, customers might arrive at a fairly constant rate regardless of price, and, once in store, their purchase decision could be price-dependent. For example, a convenience store selling a fixed inventory of beer (having a “use by” or “best by” date rendering the product worthless beyond that date), could postulate a price-independent arrival rate.

Our formulation deviates from Besbes and Zeevi (2009), Besbes and Zeevi (2012), Broder and Rusmevichientong (2012), Wang et al. (2014) in that the seller has additional information, namely the arrival counts. Consequently, correspondingly different is our notion of non-anticipation, and by consequence the notion of admissibility. Specifically, their definition of admissibility requires that the price process \((p_t)\) is adapted to the filtration \(\sigma (p^\pi _u, D_u: 0 \le u < t)\), which contains less information than our \(\mathcal{F}_t\). The pricing policy we propose naturally involves the past arrival counts and therefore does not belong to the set of admissible policies they consider.

Notation Statements “\(x := y\)” and “\(y =: x\)” define x through y. When A is a set, \(\mathbb {1}_{\left[ {A} \right] }\) is the indicator function; \({A}^c\) is the complement; and |A| is the cardinality. When x is real, \(\lfloor {x}\rfloor \) is its floor, \(\lceil {x}\rceil \) is its ceiling, and \(x^+\) is \(\max \{0,x\}\). When \(a_n\) and \(b_n\) are nonnegative sequences, \(a_n = O(b_n)\) means that \(a_n/b_n\) is bounded from above; \(a_n = \Omega (b_n)\) means that \(a_n/b_n\) is bounded from below; \(a_n \asymp b_n\) means that \(a_n/b_n\) is bounded from both above and below; \(a_n \sim b_n\) means that \(\lim _{n\rightarrow \infty } a_n/b_n = 1\); and \(a_n = o(b_n)\) means that \(\limsup _{n\rightarrow \infty } a_n/b_n = 0\). When X is a random variable, “\(X \sim \cdot \)” indicates the probability law of X, and “\(X \Rightarrow \cdot \)” denotes convergence in distribution.

3 Estimation and pricing methods

The estimation method in the presence of an arrival rate is as follows:

Method A (\(\tau , \kappa \)) Let \(p_1,\ldots ,p_{\kappa }\) be any \(\kappa \) distinct prices in \([\underline{p},\overline{p}]\), i.e., \(p_i \ne p_j\) whenever \(i\ne j\). Set the learning interval as \([0,\tau ]\), and set \(\Delta = \tau /\kappa \). For \(i=1,2,\ldots ,\kappa \), during the time interval \([(i-1)\Delta , i\Delta ]\), price at \(p_i\), and let \(A_i\) and \(S_i\) be the count of arrivals and sales, respectively. If a stock-out occurs before time \(\tau \), then price at \(p_{\infty }\) and stop sales. Put

as a joint estimator of \(\lambda \) and the \(q(p_i)\).

The pricing policy in the presence of an arrival rate is as follows.

Algorithm AS or \(\pi (\tau ,\kappa )\).

Step 1 Initialization:

-

(a)

Let the learning time be \(\tau \) and the number of test prices be \(\kappa \). Put \(\Delta =\tau /\kappa \).

-

(b)

Divide \([\underline{p},\overline{p}]\) into \(\kappa \) equally spaced intervals. Let \(\{p_i,i=1,\ldots ,\kappa \}\) be the mid-points (or left-endpoints or right-endpoints) of these intervals.

Step 2 Learning:

-

(a)

For \(i=1,\ldots ,\kappa \), and provided inventory is positive, apply price \(p_i\) during the time interval \([t_{i-1}, t_i]\), where \(t_i:=i\Delta \). If inventory is zero at any time, apply \(p_{\infty }\) until time T and stop.

-

(b)

Let \(A_i\) and \(S_i\) be the number of arrivals and sales, respectively, during \([t_{i-1},t_i)\). Compute

$$\begin{aligned} \widehat{\lambda }= \frac{\sum _{i=1}^\kappa A_i}{\tau }, \quad \widehat{q}(p_i) := \frac{S_i}{A_i} \mathbb {1}_{\left[ {A_i>0} \right] } \end{aligned}$$(4)

Step 3 Compute \(\widehat{p}^u=\text {arg max\;}_{1\le i\le \kappa } \{p_i\widehat{q}(p_i)\}; \widehat{p}^c=\text {arg min\;}_{1\le i\le \kappa } |\widehat{\lambda }\widehat{q}(p_i) - x/T|; \) and set \(\widehat{p}= \max \{\widehat{p}^u,\widehat{p}^c\}\).

Step 4 Pricing: On the interval \((\tau ,T]\) apply price \(\widehat{p}\) as long as inventory is positive. If inventory is zero at any time, apply \(p_{\infty }\) until time T and stop.

4 Results on estimation

4.1 Estimator of arrival rate and purchase probabilities

From Assumptions 1 and 3 there follow two properties:

-

P1.

\(A_1,\ldots ,A_\kappa \) are independent Poisson(\(\lambda \tau /\kappa \)) random variables.

-

P2.

Given \(\mathbf{A}:= (A_1,A_2,\ldots ,A_\kappa )\), the conditional law of \((S_1, S_2, \ldots S_\kappa )\) consists of the independent marginals \(S_i \sim \mathrm{Binomial}(A_i,q(p_i))\) for all i.

The following result is elementary and thus given without proof.

Proposition 1

Let Assumptions 1 and 3 hold. Let \(A_i\) and \(S_i\) be the count of arrivals and sales, respectively, when price \(p_i\) applies during Method A\((\tau ,\kappa )\). Then, a maximum-likelihood estimator of \(\big (\lambda ,q(p_1),\ldots ,q(p_{\kappa })\big )\) is given in (3).

4.2 Estimator of mean demand

Here we estimate the demand vector \((\lambda q(p_1),\ldots ,\lambda q(p_{\kappa }))\) for finite \(\kappa \). The invariance property of Maximum Likelihood Estimation (Bickel and Doksum 1977, Section 4.5) gives the following.

Proposition 2

For any \(p_1,\ldots ,p_{\kappa }\), the vector \(\big (\widehat{\lambda }\widehat{q}(p_1),\ldots ,\widehat{\lambda }\widehat{q}(p_{\kappa })\big )\), with \(\widehat{\lambda }\) and \(\widehat{q}(p_1),\ldots ,\widehat{q}(p_{\kappa })\) as in (3), is a maximum likelihood estimator of \(\big (\lambda q(p_1),\ldots ,\lambda q(p_{\kappa })\big )\).

We also refer to this estimator as the arrivals-and-sales estimator.

4.2.1 Bias and mean square error

For any price p, put \(f(p) := q(p)[1-q(p)]\) and define the relative bias \(B:= {\mathbb E}[\widehat{\lambda }\widehat{q}(p)]/(\lambda q(p)) - 1\) (shown below to not depend on p).

Proposition 3

Let Assumptions 1 and 3 hold. Denote the i-th price applied during Method \(A(\tau ,\kappa )\) as \(p=p_i\); denote \(A_i\) the associated arrival count, and let \(\mathbf{A}=(A_1,\ldots ,A_\kappa )\). Define \(\sigma _1(p) := {\mathbb E}[{\mathbb Var}(\widehat{\lambda }\widehat{q}(p)|\mathbf{A})]\) and \(\sigma _2(p) := {\mathbb E}[{\mathbb E}^2[\widehat{\lambda }\widehat{q}(p)|\mathbf{A}]] - \lambda ^2q^2(p) (1 + 2 B)\). Let \(r := \lambda \tau /\kappa \); and, with \(X_{r} \sim \text {Poisson}(r)\), let \( \rho := {\mathbb P}(X_{r}>0) = 1 - e^{-r} \) and \( h(r):= {\mathbb E}[ X_{r}^{-1} \mathbb {1}_{\left[ {X_{r}>0} \right] }]. \) Then

Remark 1

For any estimator \(\theta \) we have \(\mathrm{MSE}(\theta ) = {\mathbb E}[{\mathbb Var}(\theta |\mathbf{A})] + {\mathbb Var}({\mathbb E}[\theta |\mathbf{A}]) + \mathrm{Bias}^2(\theta )\), where \(\mathrm{Bias}\) and \(\mathrm{MSE}\) denote bias and mean square error, respectively. Thus \(\sigma _{2}(p) = {\mathbb Var}({\mathbb E}[\widehat{\lambda }\widehat{q}(p)|\mathbf{A}]) + [\lambda q(p)B]^2 \ge 0\); this term aggregates variance (of the conditional expectation) and bias squared.

Proof of Proposition 3

Proof of (5). Based on Properties P1 and P2 (Sect. 4.1), we have:

where (8) and (9) follow immediately from Property P2; step (a) uses (8) and that \(\widehat{\lambda }=\tau ^{-1}\sum _i A_i\); step (b) uses: the independence of the \(A_i\); \({\mathbb P}(A_i>0) = \rho \); and \({\mathbb E}[A_i] = r\). This proves the left part of (5). For the right part of (5), put \(X=\widehat{\lambda }\widehat{q}(p_i)\), \(\mu = \lambda q(p_i)\), and \(B=({\mathbb E}[X]-\mu )/\mu \); now use the identities \({\mathbb E}[(X-\mu )^2] = {\mathbb E}[X^2] - \mu ^2 (1+2B)\); \({\mathbb E}[X^2] = {\mathbb E}[{\mathbb E}[X^2|\mathbf{A}]]\); and \({\mathbb E}[X^2|\mathbf{A}] = {\mathbb Var}(X|\mathbf{A}) + {\mathbb E}^2[X|\mathbf{A}]\).

Proof of (6). In view of (9), we have \(\sigma _1(p) = q(p_i)(1-q(p_i)) {\mathbb E}[\widehat{\lambda }^2 A_i^{-1} \mathbb {1}_{\left[ {A_i>0} \right] }].\) It suffices to verify that \({\mathbb E}[\widehat{\lambda }^2 A_i^{-1} \mathbb {1}_{\left[ {A_i>0} \right] }]\) equals \(\tau ^{-2}\) times the expression in curly braces in (6); this follows immediately by writing \( \widehat{\lambda }^2 = \tau ^{-2} [(\sum _{j:j\ne i} A_j)^2 + 2A_i \sum _{j:j\ne i} A_j+ A_i^2] \) and using Property P1 to resolve the expectation.

Proof of (7). Observe that \( {\mathbb E}[{\mathbb E}^2[\widehat{\lambda }\widehat{q}(p)|\mathbf{A}]] = q^2(p) {\mathbb E}[\widehat{\lambda }^2 \mathbb {1}_{\left[ {A_i>0} \right] }] \) by (8). It suffices to verify that \({\mathbb E}[\widehat{\lambda }^2 \mathbb {1}_{\left[ {A_i>0} \right] }]\) equals \(\tau ^{-2}\) times the expression in curly braces in (7); this follows immediately by expanding \(\widehat{\lambda }^2\) as above and using Property P1 to resolve the expectation. \(\square \)

4.2.2 Asymptotic efficiency relative to the sales-only estimator

Consider the scale-n instance and any price p. Let \(\widehat{\lambda }_n \widehat{q}_n(p)\) denote the MLE of mean demand, \(\lambda _n q(p)\), and let \(\widehat{\lambda q}_n(p)\) denote the sales-only estimator, each based on applying any \(\kappa _n\) prices over \(1/\kappa _n\) time units each. In particular,

where S(p) is the sale count during a period of length \(1/\kappa _n\) during which price p is applied; thus, S(p) is Poisson(\(r_n q(p))\).

To analyze the MLE, let \(\mathbf{A}_n=(A_{1,n},\ldots ,A_{\kappa _n,n})\) denote the arrival counts observed under Method A (\(1, \kappa _n\)), and define scale-n analogs of B, \(\sigma _1(p)\) and \(\sigma _2(p)\) (Proposition 3) as follows: \(B_n := {\mathbb E}[\widehat{\lambda }_n\widehat{q}_n(p)]/[\lambda _nq(p)] - 1\); \(\sigma _{1,n}(p) := {\mathbb E}[{\mathbb Var}(\widehat{\lambda }_n\widehat{q}_n(p)|\mathbf{A}_n)]\); and \(\sigma _{2,n}(p) := {\mathbb E}[{\mathbb E}^2[\widehat{\lambda }_n\widehat{q}_n(p)|\mathbf{A}_n]] - \lambda _n^2q^2(p) (1 + 2 B_n)\).

The scale n will affect the mean square error through the multiplicative factor \(s_n := r_n \kappa _n^2 = \lambda _n \kappa _n = \lambda _n^2 r_n^{-1}\). Moreover, the set \(\mathcal{I}:= \{p: p \in [\underline{p},\overline{p}], f(p) > 0\}\) will be needed.

Proposition 4

Let (1) hold and let Assumption 3 hold for all \(n \ge \tilde{n}\) and some finite \(\tilde{n}\).

-

(a)

We have

$$\begin{aligned} \lim _{n\rightarrow \infty } \sup _{p \in \mathcal{I}} \left| \frac{\sigma _{1,n}(p)}{r_n\kappa _n^2} - f(p) \right| = 0 , \quad \lim _{n\rightarrow \infty } \sup _{p \in \mathcal{I}} \left| \frac{\sigma _{2,n}(p)}{r_n\kappa _n} - q^2(p) \right| = 0 \end{aligned}$$(10) -

(b)

(Pointwise efficiency) If \(\lim _{n\rightarrow \infty } \kappa _n = \infty \), then

$$\begin{aligned} \lim _{n\rightarrow \infty } \sup _{p \in \mathcal{I}} \left| \frac{{\mathbb E}[ (\widehat{\lambda }_n \widehat{q}_n(p) - \lambda _n q(p))^2]}{r_n\kappa _n^2} - f(p) \right| = 0, \end{aligned}$$(11)and for any price p such that \(q(p) > 0\),

$$\begin{aligned} \lim _{n\rightarrow \infty } \frac{{\mathbb E}[(\widehat{\lambda q}_n(p) - \lambda _nq(p))^2]}{{\mathbb E}[(\widehat{\lambda }_n\widehat{q}_n(p) - \lambda _nq(p))^2]} = \left\{ \begin{array}{ll} [1-q(p)]^{-1} &{}\quad \text{ if }\; q(p) < 1\\ \infty &{}\quad \text{ if }\; q(p) = 1 \end{array} \right. \end{aligned}$$ -

(c)

(Global efficiency) For each \(n \ge 1\), let the two estimators be employed at the prices \(p_{i,n} = \underline{p}+ (i-1/2)\ell _n\), \(i=1,\ldots ,\kappa _n\), where \(\ell _n := (\overline{p}-\underline{p})/\kappa _n\) and where \(\lim _{n\rightarrow \infty } \kappa _n = \infty \). If Assumption 4 holds, then

$$\begin{aligned} \lim _{n\rightarrow \infty } \frac{{\mathbb E}[\sum _{i=1}^{\kappa _n}(\widehat{\lambda q}_n(p_{i,n}) - \lambda _nq(p_{i,n}))^2]}{{\mathbb E}[\sum _{i=1}^{\kappa _n}(\widehat{\lambda }_n\widehat{q}_n(p_{i,n}) - \lambda _nq(p_{i,n}))^2]} = \frac{\int _\mathcal{I}q(p)\mathrm{d}p}{\int _\mathcal{I}f(p) \mathrm{d}p} =: {{\mu }_{\infty }}\ge 1 . \end{aligned}$$(12)

Proof of Proposition 4

All limits are meant as \(n\rightarrow \infty \).

Proof of (a) Let \(p \in \mathcal{I}\), i.e., \(f(p) > 0\) (hence \(q^2(p)>0\)). All terms in Proposition 3 and its proof are denoted by appending the scale n as a rightmost subscript. Observe:

Step (a) is (6) re-arranged so that the right side does not depend on p. Step (b) uses: (i) \(h(r_n) = r_n^{-1} (1+o(1))\), using Lemma 4 and \(r_n \rightarrow \infty \); and (ii) \(\rho _n = 1 - e^{-r_n} = 1 + o(1)\). Step (c) notes that \((\kappa _n-1)/(r_n\kappa _n^2)\rightarrow 0\), due to \(r_n\rightarrow \infty \); this proves the first part of (a). Now observe:

Step (a) is (7) re-arranged so that the right side does not depend on p. Step (b) uses that \(\rho _n = 1+o(1)\) and collects terms (into \(r_n^2\kappa _n^2\)). Step (c) notes a cancellation (due to \(r_n\kappa _n = \lambda _n\)). Step (d) uses that \(B_n=-e^{-r_n}(\kappa _n-1)/\kappa _n\) and thus \(r_n\kappa _n B_n = o(1)\), by the second condition in (1). This proves the second part of (a).

Proof of (b). Result (11) follows immediately from part (a) and the assumption \(\kappa _n \rightarrow \infty \) (i.e., \(r_n\kappa _n\) vanishes relative to \(r_n\kappa _n^2\)). The sales-only estimator has \({\mathbb E}[(\widehat{\lambda q}_n(p) - \lambda _nq(p))^2] = {\mathbb Var}(S(p))\kappa _n^2 = q(p) r_n \kappa _n^2\), and the second part of (b) follows from (11), since \(f(p)/q(p) = 1-q(p)\).

Proof of (c). Recall that \(s_n = r_n\kappa _n^2 = \lambda _n\kappa _n\). Put \(f_n(p) := s_n^{-1} {\mathbb E}[ (\widehat{\lambda }_n \widehat{q}_n(p) - \lambda _n q(p))^2]\), \(I_n := \sum _{i=1}^{\kappa _n} f(p_{i,n}) \ell _n\), and observe: \( \left| \sum _{i=1}^{\kappa _n} f_n(p_{i,n}) \ell _n - \int _\mathcal{I}f(p) \mathrm{d}p \right| \le \left| \sum _{i=1}^{\kappa _n} f_n(p_{i,n}) \ell _n - I_n \right| + \left| I_n - \int _\mathcal{I}f(p) \mathrm{d}p\right| . \) Each (absolute difference) term on the right is arbitrarily small for all n sufficiently large. For the first term, this uses the uniform convergence of \(f_n(\cdot )\) to \(f(\cdot )\) shown in (11) (note \(\sum _{i=1}^{\kappa _n} \ell _n = \overline{p}-\underline{p}< \infty \)); for the second term, this uses that f is Riemann integrable on \([\underline{p},\overline{p}]\) [recall that Riemann integrability is equivalent to almost-everywhere continuity (Rudin 1976, Theorem 11.33 (b))] and that the partition defined by the \(p_{i,n}\) is arbitrarily fine for n large enough; that is, putting \(p_{0,n}:=\underline{p}\) and \(p_{\kappa _n+1,n}:=\overline{p}\), we have \(\max _{1\le i \le \kappa _n+1} (p_{i,n}-p_{i-1,n}) = \ell _n \rightarrow 0\). This shows that \( \lim _{n\rightarrow \infty } \sum _{i=1}^{\kappa _n} f_n(p_{i,n}) \ell _n = \int _\mathcal{I}f(p) \mathrm{d}p, \), i.e., the denominator in (12) equals \(s_n \ell _n^{-1} \int _\mathcal{I}f(p) \mathrm{d}p (1+o(1))\). An analogous argument shows that the enumerator equals \(s_n\ell _n^{-1} \int _\mathcal{I}q(p) \mathrm{d}p (1+o(1))\), and the proof is complete. \(\square \)

Remark 2

The condition \(q(p) > 0\) in (b) excludes only the trivial case \(q(p)=0\); here, the mean square error is zero for both estimators.

Remark 3

To explain the efficiency gain intuitively, we first decompose the \(\mathrm{MSE}\) of the sales-only estimator as was done for the MLE and then compare. As earlier, \(\mathbf{A}_n=(A_{1,n},\ldots ,A_{\kappa _n,n})\) is the vector of price-specific arrival counts observed under Method A (\(1, \kappa _n\)), which are independent Poisson(\(r_n\)). Without loss of generality, let \(A_{1,n}\) and \(S_{1,n}\) be the arrival and sale count associated to (any) target price p. For the sales-only \(\sigma _2\), observe: \({\mathbb E}[\widehat{\lambda q}_n(p)|\mathbf{A}_n] = {\mathbb E}[S_{1,n}/(1/\kappa _n)|\mathbf{A}_n] {\mathop {=}\limits ^{(a)}} {\mathbb E}[S_{1,n}/(1/\kappa _n)|A_{1,n}] = \kappa _n A_{1,n}q(p)\), with step (a) due to the independence in Property P2. Thus (note the bias is zero): \(\sigma _{2,n}^S(p) := {\mathbb Var}({\mathbb E}[\widehat{\lambda q}_n(p)|\mathbf{A}_n]) = {\mathbb Var}(\kappa _n A_{1,n}q(p)) = r_n \kappa _n^2 q^2(p)\) which is asymptotically \(\kappa _n\) times larger than the MLE analog, in view of (14). To see this more intuitively, note that Assumption 1 implies that all the arrival data \(\mathbf{A}_n\) contain information about the arrival rate; the sales-only estimator’s conditional expectation given \(\mathbf{A}_n\), that is \(\kappa _n A_{1,n}q(p)\), uses just one of them (\(A_{1,n}\) occurs over \(1/\kappa _n\) time units, hence the scaling up by \(\kappa _n\)); in contrast, the MLE analog, that is \(\widehat{\lambda }_n \mathbb {1}_{\left[ {A_{1,n}>0} \right] } q(p)\), uses all of them, and due to this its variance is asymptotically \(\kappa _n\) times smaller (the MLE’s bias is asymptotically negligible; see (14)). For the sales-only \(\sigma _1\), observe: \({\mathbb Var}(\widehat{\lambda q}_n(p)|\mathbf{A}_n) {\mathop {=}\limits ^{(a)}} {\mathbb Var}(\widehat{\lambda q}_n(p)|A_{1,n}) = f(p) A_{1,n} \kappa _n^2\), with step (a) again due to the independence in Property P2; thus \(\sigma _{1,n}^S(p) := {\mathbb E}[{\mathbb Var}(\widehat{\lambda q}_n(p)|\mathbf{A}_n)] = f(p) r_n\kappa _n^2\), and \(\sigma _{1,n}(p)/\sigma _{1,n}^S(p) \sim 1\), in view of (13); the MLE provides no benefit here as a consequence of the conditional independence of sale counts (at distinct prices) given \(\mathbf{A}_n\) (Property P2).

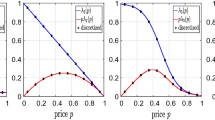

Example 1

Let \(q(p) = a - bp\), \(p \in [0,1]\), where \(a > 0\), \(b \ge 0\), and one requires \(q(0) = a \le 1\) and \(q(1) =a-b \ge 0\). By (12), \({{\mu }_{\infty }}={{\mu }_{\infty }}(a,b) = 1/[1- c - b^2/(12c)] > 1\), where \(c=a-b/2 > 0\). For example, \(q(\underline{p})=1\) and \(q(\overline{p})=0\) gives \({{\mu }_{\infty }}=3\), while \(q(\underline{p})=1\) and \(q(\overline{p})=1/2\) gives \({{\mu }_{\infty }}=9/2\).

5 Results on pricing

5.1 Regret upper bound in the presence of an arrival rate (the class \(\mathcal{L}\))

For the n-th problem, we put \(J^\pi _n := J^\pi (nx,T|n\lambda ,q)\) for the expected revenue under policy \(\pi \) (Algorithm AS); and we put \(J^D_n := J^D(nx,T|n\lambda ,q)\), which is easily seen to be \(n J^D(x,T|\lambda ,q)\). Our main result follows.

Theorem 1

Define \(\pi _n := \pi (\tau _n,\kappa _n)\) by Algorithm AS. If \( \tau _n \asymp (\log (n)/n)^{1/4} \) and \( \kappa _n \asymp (n/\log (n))^{1/4} \), then for some constant \(K_0\) and some finite \(\underline{n}\),

For each n and \(i=1,\ldots ,\kappa _n\), let \(p=p_{i,n}\) denote the i-th price applied in the learning step of Algorithm \(\pi (\tau _n,\kappa _n)\). Let \({\overline{A}_n} \sim \text {Poisson}(\lambda n\tau _n)\); \(A_{i,n} \sim \text {Poisson}(\lambda r_n)\), where \(r_n := n \tau _n /\kappa _n\); and \(S_{i,n} | A_{i,n} \sim \text {Binomial}(A_{i,n}, q(p))\) independently of \(A_{i,n}\). The estimates of \(\lambda _n\) and \(q(p)\) have the representation \(\widehat{\lambda }_n = \mathbb {1}_{\left[ {{\overline{A}_n} < nx} \right] } {\overline{A}_n}/(n\tau _n) + \mathbb {1}_{\left[ {{\overline{A}_n} \ge nx} \right] } Z\) and \(\widehat{q}_{n}(p) = I S_{i,n}A_{i,n}^{-1} + (1-I) Z\) respectively, where \(I=\mathbb {1}_{\left[ {{\overline{A}_n} < nx, A_{i,n} > 0} \right] }\) and Z is a suitable random variable in each case.

A key intermediate result is Lemma 1 below; it bounds the probability of certain estimation errors and is based on the following.

Condition 1

Let \(\lim _{n\rightarrow \infty }\kappa _n = \infty \). Moreover assume: (a) For some \(\underline{c}_\tau , \overline{c}_\tau > 0\) and \(0< \psi _1\le \psi _2< 1\), \(\underline{c}_\tau n^{\psi _1} \le n\tau _n \le \overline{c}_\tau n^{\psi _2}\) for all \(n\ge 1\); and (b) For some \(\underline{c}_r> 0\) and \(\beta > 0\), \(r_n := n\tau _n/\kappa _n \ge \underline{c}_rn^\beta \) for all \(n\ge 1\).

Lemma 1

Fix \(\eta \ge 2\), define \(r_n := n \tau _n/\kappa _n\), and let Condition 1 hold. Let \(\delta _n = \delta _n(\lambda ) = \left[ 4\eta \lambda \log n/(n\tau _n)\right] ^{1/2}\); and \(l_n= l_n(\lambda ) = r_n (\lambda - \zeta _n)\), where \(\zeta _n = \zeta _n(\lambda ) = \left[ 2\eta \lambda \log (n)/r_n\right] ^{1/2}\). Put \(\mathcal{C}=[\underline{p},\overline{p}]\). Then

-

(a)

For any finite \(\lambda > 0\), there exists a finite \(n_0=n_0(\lambda )\) such that for all \(n \ge n_0(\lambda )\) and for \(\epsilon _n = \epsilon _n(\lambda ) = \left[ \eta \log n/(2 l_n)\right] ^{1/2}\), we have

$$\begin{aligned} \inf _{p\in \mathcal{C}} {\mathbb P}\{ |\widehat{\lambda }_n \widehat{q}_{n}(p)- \lambda q(p)| \le \delta _n + \lambda \epsilon _n\} \ge 1 - C_1n^{-\eta } \end{aligned}$$(16)where \(C_1= C_1(\lambda ) := 2C_0(\lambda ) + 3\), where \(C_0(\lambda ) = \max \{1, [4\eta /(\lambda \beta e)]^{\eta /\beta }\}\).

-

(b)

Put \(C_1= C_1(\underline{\lambda })\). For any \(\alpha > 0\), there exists a finite \(\underline{n}=\underline{n}(\alpha )\) such that for all \(n \ge \underline{n}\),

$$\begin{aligned} \sup _{(\lambda ,q) \in \mathcal{L}} \sup _{p \in \mathcal{C}} {\mathbb P}\left\{ |\widehat{\lambda }_n \widehat{q}_{n}(p)-\lambda q(p)| > (1+\alpha ) \left( \frac{\overline{\lambda }\eta \log n}{2 r_n}\right) ^{1/2}\right\}\le & {} C_1n^{-\eta } ,\nonumber \\ \end{aligned}$$(17)$$\begin{aligned} \sup _{(\lambda ,q) \in \mathcal{L}} \sup _{p\in \mathcal{C}} {\mathbb P}\left\{ |\widehat{q}_{n}(p)-q(p)| > (1+\alpha ) \left( \frac{\eta \log n}{2 \underline{\lambda }r_n}\right) ^{1/2} \right\}\le & {} C_1n^{-\eta }.\nonumber \\ \end{aligned}$$(18)

Proof of Lemma 1

First, we construct \(n_1(\lambda )\) and \(n_2(\lambda )\) such that

Since \(r_n \ge \underline{c}_rn^\beta \), the condition \(\log (n)/n^\beta < \underline{c}_r^{-1} \lambda /(2\eta )\) implies \(l_n > 0\). Since \(\log (n)/n^\beta \) is maximized at \(x^*=e^{1/\beta }\) and decreases to zero for \(x \in [x^*,\infty )\), we may set \(n_1(\lambda ):=\min \{n: n\ge e^{1/\beta },\ \log (n)/n^\beta<\underline{c}_r^{-1}\lambda /(2\eta )\} < \infty \). By simple calculation, \( n_2(\lambda ) := \lceil \{\overline{c}_\tau (\lambda + [4\eta \lambda /(e\psi _1\underline{c}_\tau )]^{1/2} )/x\}^{1/(1-\psi _2)} \rceil < \infty \) suffices.

Proof of (a). Let p denote the i-th price applied during learning, i.e., \(p=p_{i,n}\) for some \(i \in \{1,\ldots ,\kappa _n\}\). Define the events

Put \(G_n := U_n \cap L_n \cap D_n\). Then

for \(n\ge n_0(\lambda )\), where \(n_0(\lambda ):=\max \{n_1(\lambda ),n_2(\lambda )\} < \infty \). Step (a) above uses that \({\overline{A}_n} \le n\tau _n(\lambda +\delta _n) < nx\) and \(l_n > 0\), which exclude a stock-out and a no-arrivals event, respectively; hence on the set \(G_n\) we have \(|\widehat{\lambda }_n-\lambda |=|{\overline{A}_n}/(n\tau _n)-\lambda |\le \delta _n\) and \(|\widehat{q}_{n}(p)-q(p)| = |S_{i,n}A_{i,n}^{-1}-q(p)|\le \epsilon _n\). Step (b) above uses the triangle inequality \( |\widehat{\lambda }_n \widehat{q}_{n}(p) - \lambda q(p)| \le |\widehat{\lambda }_n - \lambda | + \lambda |\widehat{q}_{n}(p) - q(p)| \). Now:

where (23) uses Lemma 2 (Poisson large-deviation bound); and step (a) in (24) uses Lemma 3 (Hoeffding’s inequality; the number of summands is \(A_{i,n} \ge \max (l_n, 1)\) since \(A_{i,n} \ge l_n> 0\) is integer-valued). From (22), (23), and (24), we obtain \({\mathbb P}({G_n}^c) \le (2C_0(\lambda )+3) n^{-\eta }\), and note that the same \(n_0(\lambda )\) in (21) suffices for any \(p\in \mathcal{C}\). Thus, part (a) is proven.

Proof of (b). Letting \(\theta _n := \sup _{(\lambda ,q) \in \mathcal{L}} \left[ \delta _n(\lambda ) + \lambda \epsilon _n(\lambda )\right] \), (16) gives

for all \(n \ge m\), where \(m := \max \{\sup _{\lambda \in \mathcal{L}} n_1(\lambda ), \sup _{\lambda \in \mathcal{L}} n_2(\lambda )\} = \max \{n_1(\underline{\lambda }), n_2(\overline{\lambda })\}\). Now

where step (a) uses that the first term vanishes relative to the second one (since \(n\tau _n/r_n = k_n \rightarrow \infty \)); and that \((1 - \sqrt{(2\eta \log n ) / (\lambda r_n)})^{-1/2} \sim 1\). This proves (17). Result (18) follows by an analogous argument. \(\square \)

Proof of Theorem 1

By construction, there exist positive \(\underline{c}_\tau , \overline{c}_\tau , \underline{c}_{\kappa }, \overline{c}_{\kappa }\) such that \(\underline{c}_\tau (\log n / n)^{1/4} \le \tau _n \le \overline{c}_\tau (\log n / n)^{1/4}\) and \(\underline{c}_{\kappa }(n/\log n)^{1/4} \le \kappa _n \le \overline{c}_{\kappa }(n/\log n)^{1/4}\) for all \(n \ge 1\). Condition 1 is in force: in part (a) use \(\psi _1=3/4\) and \(\psi _2> 3/4\); in part (b) use \(\beta =1/2\). Thus, Lemma 1 is in force. Let \(\underline{n}=\underline{n}(\alpha )\) be as in Lemma 1(b). The proof now parallels that of Besbes and Zeevi (2009, Proposition 1), but the regret will be bounded by

whose order \(O((\log n/n)^{1/4})\) is smaller than theirs. Lemma 1 replaces Besbes and Zeevi (2009, Online Companion, Lemma 2). Put \(X_n^{(L)}= \lambda n\tau _n\kappa _n^{-1} \sum _{i=1}^{\kappa _n} q(p_i)\) and \(X_n^{(P)}= \lambda q(\widehat{p}) n (T-\tau _n)\); put \(Y_n^{(L)}= N(X_n^{(L)})\), \(Y_n^{(P)}= N(X_n^{(P)})\), and \(Y_n= N(X_n^{(L)}+ X_n^{(P)})\) (\(Y_n^{(L)}\), \(Y_n^{(P)}\), and \(Y_n\) are the would-be sales during learning, pricing, and overall, respectively, if inventory were infinite).

Step 1 The revenue achieved by \(\pi _n\) is bounded below by the revenue achieved in the pricing phase; during this phase, the number of units sold is \(\min \{Y_n^{(P)}, (nx-Y_n^{(L)})^+\}\). It follows that

Step 2 We separate two cases: \(\Lambda (\overline{p}) \le x/T\) and \(\Lambda (\overline{p}) > x/T\).

Case 1 \(\Lambda (\overline{p}) \le x/T\). We will show that \(J_n^{\pi _n}\) is at least \(n J^D\) minus an \(O(u_n)\) term. Since \(\min \{Y_n^{(P)}, (nx-Y_n^{(L)})^+\} \ge Y_n^{(P)}- (Y_n-nx)^+\), (26) gives

using \(\widehat{p}\le \overline{p}\) in the first step and \({\mathbb E}[\widehat{p}Y_n^{(P)}]={\mathbb E}[r(\widehat{p})] \lambda n(T-\tau _n)\) in the second step. Lemma 7 shows that \({\mathbb E}[r(\widehat{p})] \ge r(p^D) - Ru_n - R_2/n^{\eta -1}\) for positive constants \(R\), \(R_2\) and for \(n \ge \underline{n}\). Moreover, Lemma 9 shows that \({\mathbb E}[(Y_n-nx)^+] \le K_En u_n\) for some constant \(K_E> 0\) and all \(n\ge \underline{n}\). Thus

for a constant \(K_1\). By Assumption 2(c), \(J^D \ge m^D\) for some \(m^D>0\), and thus

Case 2 \(\Lambda (\overline{p}) > x/T\). Here, \(p^D= \overline{p}\) and \(J_n^D = n \overline{p}x\). Lemma 10 shows that \(\min \{Y_n^{(P)}, (nx-Y_n^{(L)})^+\}\) (a lower bound on the quantity sold during the pricing phase) is close to nx and also \(\widehat{p}\) is close to \(p^D\), with high probability. Specifically, put \(\mathcal{E}:= \{\omega : \min \{Y_n^{(P)}, (nx-Y_n^{(L)})^+\} \ge nx - \tilde{K}_Yn u_n, |\widehat{p}- p^D| \le K_cu_n \}\) where \(\tilde{K}_Y\) and \(K_c\) are constants defined in Lemma 10. The mean revenue generated by \(\pi _n\) is bounded as follows:

where both (a) and (b) follow from the definition of \(\mathcal{E}\) and Lemma 10, and where \(C_2\) and \(K_2\) are suitable constants. We conclude that

Step 3 The result (15) now follows from (29) and (30). \(\square \)

Remark 4

We now provide nearly-explicit formulae for \(n_1(\lambda )\) and \(n_2(\lambda )\) in (19). In view of Theorem 1, it is optimal to set \(\tau _n = c_{\tau }(\log n/n) ^{1/4}\) and \(\kappa _n \sim c_{\kappa }(n / \log n)^{1/4}\) for some positive \(c_{\tau }, c_{\kappa }\); for simplicity, we now ignore the integrality of \(\kappa _n\) and equate it to \(c_{\kappa }(n / \log n)^{1/4}\). Substituting into \(\delta _n\) and \(r_n\), it is easy to see that \(n_1(\lambda ):=\min \{n:n\ge e,\ \log n / n < [(c_{\kappa }/c_{\tau })^{-1}\lambda /(2\eta )]^{2}\}\) suffices. Moreover, since \(\delta _n\) is decreasing for \(n\ge e\), it is easy to see that \(n_2(\lambda ):=\min \{n: n\ge e, (\log n / n) < {x/[c_{\tau }(\lambda +\delta _n)]}^4\}\) suffices; a simpler but weaker formula for \(n_2(\lambda )\) replaces \(\delta _n\) by \(\sup _n \delta _n = (4\eta \lambda c_{\tau }^{-1} e^{-3/4})^{1/2}\).

5.2 Besbes and Zeevi (2009) revisited: an improved convergence rate

We consider the setting in Besbes and Zeevi (2009). The demand function \(\Lambda (p)\) is postulated directly, without reference to arrivals.

Theorem 2

Let \(\mathcal{L}_{BZ}\) be the demand class defined in Besbes and Zeevi (2009, Section 4.2), and let \(\pi '_n\) be given by Algorithm \(\pi (\tau ,\kappa )\) in Section 4.1 there, with \(\tau =\tau _n\) and \(\kappa =\kappa _n\). If \( \tau _n \asymp (\log (n)/n)^{1/4} \) and \( \kappa _n \asymp (n/\log (n))^{1/4}, \) then there exists a constant \(K_0'\) such that for all \(n\ge 1\),

Proof of Theorem 2

In analogy to Theorem 1, probability bounds are obtained for events defined via deviations proportional to a \(u_n\) defined as in (25) and such that \(u_n \asymp (\log n / n)^{1/4}\). For example, to bound the error \(|\widehat{p}^c-p^c|\), let the demand function \(\Lambda (\cdot )\) satisfy \(\underline{M}_{\Lambda }' |p_1-p_2| \le |\Lambda (p_1)-\Lambda (p_2)| \le \overline{M}_{\Lambda }'|p_1-p_2|\) for all \(p_1\), \(p_2\) in the price domain \([\underline{p},\overline{p}]\); put \(M=\sup _p \Lambda (p)\) and \(K_c' = 4 \underline{M}_{\Lambda }'^{-1} \max \{c_1, c_2\}\), where: \(c_1:=\overline{M}_{\Lambda }'(\overline{p}-\underline{p})/2\) and \(c_2:=2(\eta M)^{1/2}\), for some \(\eta \ge 2\). The proof proceeds as in Lemma 6; in step (e) the Poisson large-deviation bound (Lemma 2) replaces (18); we obtain, for all \(n\ge 1\), \({\mathbb P}\{|\widehat{p}^c-p^c| > K_c' u_n\} \le C_0(M) n^{-\eta +1}\). We proceed as follows: the constants multiplying \(u_n\) to form the deviation events in Lemmata 5, 6, 7, 8, 9, and 10 are increased, when necessary, by a factor no larger than \(2\sqrt{2}\); these deviations are no less than a positive constant times \((\log n/r_n)^{1/2}\) (by \((\log n/r_n)^{1/2} \asymp (\log n/n)^{1/4}\)); then, by Lemma 2, these deviation events have probability at most a constant times \(n^{-\eta +1}\) for all n. The proof then closely parallels that of Theorem 1. \(\square \)

In comparison to Besbes and Zeevi (2009, Proposition 1), \(\tau _n\) is larger by a factor \((\log n)^{1/4}\); \(\kappa _n\) is smaller by a factor \((\log n)^{-1/4}\); and the order of the regret upper bound is smaller, by the factor \((\log n)^{-1/4}\).

5.3 Lower bound on regret

Example 2

Let \(\underline{p}=1/2\), \(\overline{p}=3/2\), \(x=2\) and \(T=1\). For any \(\lambda _0\in [\underline{\lambda },\overline{\lambda }]\), define \(\mathcal{M}\) as the family of demand models as in Assumption 1 with arrival rate \(\lambda _0\) and purchase probability \(q(p) = q(p;z) = 1/2 + p(1-z)\), where z is a parameter taking values in \(Z=[\underline{z},\overline{z}]=[1/3,2/3]\).

For any z, \(r(p; z) := p q(p;z)\) is the revenue rate per arrival, and \(p^D(z)\) is the optimal price under z. For any z and any admissible policy \(\pi \) (\(\pi \in \mathcal{P}\)), the regret for the scale-n problem is abbreviated as \(\mathcal{R}^{\pi }_n(z) := 1 - J_n^\pi (z)/(nJ^D(z))\), where \(J_n^\pi (z)\) and \(nJ^D(z)\) are the scale-n expected revenue under \(\pi \), and that of the deterministic relaxation, respectively, under z.

Theorem 3

(Lower-bound example)

-

(a)

The family \(\mathcal{M}\) is contained in \(\mathcal{L}\), i.e., \(\mathcal{M}\subseteq \mathcal{L}\).

-

(b)

Let the n-th problem instance have arrival rate \(\lambda _n = n \lambda _0\) for all \(n\ge 1\). For any admissible pricing policy \(\pi \) and all \(n \ge 1\),

$$\begin{aligned} \sup _{z \in Z} \mathcal{R}^{\pi }_n(z) \ge \frac{K_3}{\sqrt{n}} \end{aligned}$$

where \(K_3\) is a positive constant that may depend on \(\lambda _0\).

Proof of Theorem 3

The proof of (a) is a simple verification that we omit, so we now focus on proving (b). Consider an arbitrary admissible policy \(\pi \) and let \(\psi _t\) denote the associated price at time t. By its admissibility, the stochastic process \(\{\psi _t\}\) is adapted to \(\mathcal{F}_t\). Let \(z_1,z_2\) be any elements of Z and let \(t \in [0,T]\). For \(i=1,2\), let \({\mathbb P}_{z_i}^{\pi (t)}\) denote the probability measure induced by \(\pi \) up to time t (i.e., describing the process \(\{(A_s,D_s): 0 \le s \le t\}\)) when \(z=z_i\) and \({\mathbb E}_{z_i}^{\pi (t)}\) denote the corresponding expectation. Putting

(these are stochastic processes adapted to \(\mathcal{F}_t\)), the Kullback-Leibler (KL) divergence between the two measures \({\mathbb P}_{z_1}^{\pi (t)}\) and \({\mathbb P}_{z_2}^{\pi (t)}\) is

where \(\mathrm{d}{\mathbb P}_{z_1}^{\pi (t)} / \mathrm{d}{\mathbb P}_{z_2}^{\pi (t)}\) is the Radon–Nikodym derivative of \({\mathbb P}_{z_1}^{\pi (t)}\) with respect to \({\mathbb P}_{z_2}^{\pi (t)}\), and the last step uses that \({{\mathbb E}_{z_1}^{\pi (t)}} \int _0^t \log {\xi }_s \mathrm{d}D_s = {{\mathbb E}_{z_1}^{\pi (t)}} \int _0^t (\log {\xi }_s) n\lambda _0Q_{1,s} \mathrm{d}s\); see Brémaud (1981) for background. When \(t=T\), we write \({\mathbb P}_{z}^{\pi }\) instead of \({\mathbb P}_{z}^{\pi (t)}\).

Claim 1. For \(z_1 = 1/2\), any \(z_2 \in Z\), and any policy \(\pi \in \mathcal{P}\) we have

Proof of Claim 1. Observe that \({\xi }_s = 1+\epsilon ,\) where \(\epsilon =\epsilon _s=(z_1-z_2)(1-\psi _s) Q_{2,s}^{-1}\). Note that \( I_s = 1-(1+\epsilon )+(1+\epsilon )\log (1+\epsilon ) \le -\epsilon +(1+\epsilon )\epsilon = \epsilon ^2, \) where the inequality uses \(\underline{q}:= \inf _z \inf _p q(p;z)= 1/6\); \(|\epsilon | \le |z_1-z_2| \cdot |1-\psi _s| \cdot \underline{q}^{-1} \le (1/6) \cdot (1/2) \cdot 6 = 1/2\); and \(\log (1+\epsilon )\le \epsilon \). Now we bound the integrand in (31): \(Q_{2,s} I_s \le Q_{2,s} \epsilon ^2 = Q_{2,s}^{-1}(z_1-z_2)^2(1-\psi _s)^2 \le \underline{q}^{-1}(z_1-z_2)^2(1-\psi _s)^2\); thus

Moreover, when z equals \(z_1=1/2\), the optimal price is \(p^D:=p^D(z_1)=1\), and

step (a) follows from the fact that the inventory constraint is being relaxed; step (b) uses that \(r(p^D;z)-r(p;z)\ge (1/2) K (p^D-p)^2\) for any p and z, where \(K := \inf _z\inf _p |r''(p;z)| = 2/3\); this follows from Taylor’s theorem with order-two Lagrange remainder (since \(r'(p^D;z)=0\) and \(r''(p;z)=-2z\) for all p and z, with primes denoting derivatives with respect to p); step (b) also notes that \(r(p^D;z_1)=1/2\). This and (33) prove Claim 1, which is analogous to Wang et al. (2014, Lemma 9). The proof onwards is fully analogous to that of Wang et al. (2014, Lemma 10 and Theorem 2) and omitted. \(\square \)

Remark 5

The KL divergence in (31) involves a change of intensity for a Poisson process. Below we motivate this formula; note that Besbes and Zeevi (2012), Wang et al. (2014) start with a KL formula claimed from Brémaud (1981), but no specific reference is given. The fixed arrival rate \(\lambda _0\) implies that the Radon-Nikodym derivative (likelihood ratio) with respect to the arrival element in the sample space, \(\{A_s: 0\le s \le t\}\), contributes nothing (the log-likelihood ratio is zero). The change of intensity is thus captured through the paths of the demand process only, \(\{D_s: 0 \le s \le t\}\), with \(q(\cdot ;z_1)\) and \(q(\cdot ;z_2)\) appearing exactly as in these works, while the intensity of the base measure \({\mathbb P}_{z_1}^{\pi (t)}\) brings in \(\lambda _0\) as multiplier.

Remark 6

In Claim 1, the constant 9 improves the constant of Wang et al. (2014, Lemma 9), which is 24.

Theorem 3 is parallel to Wang et al. (2014, Theorem 2) and Broder and Rusmevichientong (2012, Theorem 3.1); it replaces their demand function by our purchase probability function; their insights carry over here. Specifically, the functions \(q\) in \(\mathcal{M}\) take the same value at the price \(p=1\): \(q(1;z) = 1/2\) for all \(z \in Z\). Such a price is called an uninformative price (Broder and Rusmevichientong 2012). Whenever one prices at this uninformative price, there is no gain in information about \(q\). In order to learn the function \(q\) (i.e., the parameter z) and determine the optimal price, \(p^D\), one must (for at least some time) set a price other than the uninformative one; on the other hand, when \(p^D\) and the uninformative price coincide (i.e., z equals \(z_1=1/2\)), pricing anywhere other than at \(p^D\) incurs revenue losses. This tension is reflected in the lower bound, which reflects lower bounds on error probabilities in hypothesis testing (Tsybakov 2009) that are fully analogous to those in Besbes and Zeevi (2012), Broder and Rusmevichientong (2012), Wang et al. (2014).

6 Numerical results

We compare policies that we index as follows: (1) our policy (Algorithm AS) (AS); (2) the policy in Besbes and Zeevi (2009, Section 4.1) (BZ); two variants of the policy in Wang et al. (2014, Section 7.1) with respect to the price interval that initializes their step 3: (3) the interval from step 2 (W0); (4) the interval \([\underline{p},\overline{p}]\) (W1); and (5) policy BZ modified as in Theorem 2 (BZ-M).

In constructing test prices, the midpoints of relevant intervals are used; these work slightly better then left- or right-endpoints.

We revisit Wang et al. (2014, Table 1) so that regret numbers are comparable. Thus, we fix the initial inventory \(x=20\); selling horizon \(T=1\); and feasible price set \([\underline{p},\overline{p}]=[0.1, 10]\). A pair \((\lambda ,q)\) (in \(\mathcal{L}\)) and a corresponding demand function \(\Lambda (p)=\lambda q(p)\) are drawn randomly from one of two families:

-

Linear: \(\Lambda (p) = \lambda -\alpha p\) and \(q(p) = 1-(\alpha /\lambda ) p\), with \(\lambda \in [20,30]\), \(\alpha \in [2, 10]\).

-

Exponential: \(\Lambda (p)= \lambda e^{-\beta p}\) and \(q(p) = e^{-\beta p}\), with \(\lambda \in [40, 80]\), \(\beta \in [1/3, 1]\).

The probability law is uniform for each parameter; to match their law in the linear case, uniform sampling applies to \(\lambda \) and \(\alpha \), not to \(\alpha /\lambda \).

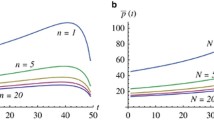

Existing theory describes an optimal growth function for each policy parameter (phase duration, number of test prices, etc.); for example, for AS, \(\tau _n \asymp f(n)\) where \(f(n) = (\log n / n)^{1/4}\). Let \(c>0\) be any scaling (constant), fixed for all n. Note \(f(cn) \sim c^{-1/4} f(n)\) as \(n\rightarrow \infty \); for each parameter, a similar asymptotic applies, so that its growth order (and that of the regret) is unchanged when n is replaced by cn. We find that (mean-regret) sensitivity to c is substantial. Therefore, each parameter is set as \(f(c_i x_n\)), where \(c_i>0\) is a variable; \(x_n=20n\) is the n-th initial inventory; and f is the optimal growth function for that parameter; see our theorems and Besbes and Zeevi (2009), Wang et al. (2014). Figure 1 shows the (estimated mean) regret as \(c_i\) varies relative to a reference value \(c^*_i\) found as the near-minimizer of the regret for \(n=10^6\) (for that case). We use: \(c^*_1=c^*_5=100\); \(c^*_2=5\); \(c^*_3=c^*_4=1\) (for linear family); and \(c^*_3=c^*_4=1/4\) (for exponential family). We see that suboptimal scaling affects policies W0 and W1 more than the others. The result \((c^*_1/c^*_2)^{-1/4} \approx 47\%\) indicates a learning-time ratio (policies AS to BZ) reduction relative to \(c_1=c_2\), and is consistent with policy AS having higher estimation efficiency. Table 1 shows the near-optimal regret (\(c_i= c^*_i\)); standard errors are \(<2\%\) for policies W0 and W1 for \(n=10^6\); and \(<1\%\) otherwise.

Our main findings follow. Policies W0 and W1 are superior for large systems, but they risk being inferior if the scaling is far from the optimal one (Fig. 1); we think the latter can happen in practice. With all policies scaled near-optimally, there is a system-size threshold n in \([10^3,10^4]\) such that policy AS is (modestly) superior below it; as the size increases above this threshold, policy W1 dominates by a margin that increases. This point persists when we focus on policy W0 and take its performance from Wang et al. (2014) (they use near-optimal scaling). Policies BZ and BZ-M perform nearly identically, despite the latter’s theoretically faster convergence.

7 Conclusion

Our estimation results apply independently of a pricing problem: start with a Poisson process N of rate \(\lambda \) on some finite interval and a probability \(q(p)\), where p is a control. Setting the control at p thins the process N (each event of N is accepted with probability \(q(p)\)), inducing a thinned process \(N'\). We showed that the empirical rate of N multiplied by the empirical thinning probabilities is a more efficient estimator of the rate of \(N'\), that is \(\lambda q(p)\), than the empirical rate of \(N'\). In the pricing problem, our regret upper bound improves that in Besbes and Zeevi (2009) by the factor \((\log n)^{-1/4}\) and is the result of refined bounding. Numerically, our method performs better than Wang et al. (2014) for systems that are not very large, and dominates Besbes and Zeevi (2009) regardless of system size; its superior estimation efficiency is behind this. In future work, the arrivals-and-sales estimator could replace the sales-only one commonly used in the literature; indeed, experience with the demand families in Sect. 6 suggests that this reduces the mean regret of the algorithm of Wang et al. (2014) (but not the convergence rate).

8 Proofs

Lemma 2

Let \(N(\cdot )\) be a unit-rate Poisson process. Suppose that \(\lambda \in [0,M]\) and \(r_n \ge n^{\beta }\) with \(\beta > 0\). Let \(\eta > 0\), \(\epsilon _n = 2 \eta ^{1/2}M^{1/2}(\log n/r_n)^{1/2}\), and \(C_P=C_P(M) := [4\eta /(M\beta e)]^{\eta /\beta }\). Define the events \(\mathcal{A}_n := \{N(\lambda r_n) - \lambda r_n \ge r_n \epsilon _n\}\) and \(\mathcal{B}_n := \{N(\lambda r_n) - \lambda r_n < -r_n \tilde{\epsilon }_n\}\), where \(\tilde{\epsilon }_n := \epsilon _n/\sqrt{2}\). Then for all \(n\ge 1\),

so \({\mathbb P}(\mathcal{A}_n) \le C_0n^{-\eta }\) for all \(n \ge 1\), where \(C_0:= \max \{1,C_P\}\). Moreover,

Remark 7

Lemma 2 parallels Besbes and Zeevi (2009, Online Companion, Lemma 2) but corrects their constant leading the \(n^{-\eta }\) term.

Proof of Lemma 2

For any nonnegative sequence \(\{\epsilon _n\}\),

where \(f_*(x; \lambda ) := x\log (x/\lambda ) + \lambda - x\), with \(x\ge 0\), is the Fenchel-Legendre transform of the logarithmic moment-generating function of the Poisson\((\lambda )\) law. Step (a) is Cramér’s Theorem (Dembo and Zeitouni 1998, Theorem 2.2.3); step (b) notes the exponent’s derivative in \(\lambda \), i.e., \(-r_n[\log (1+x/\lambda )-x/\lambda ]\), with \(x=\epsilon _n\) in (36) and with \(x=-\epsilon _n\) in (37), is non-negative. Now a second-order Taylor expansion of \(f_*(x;M)\) in x is used; note \(f_*'(x;M):= \mathrm{d}f_*(x;M)/\mathrm{d}x = \log (x/M)\); \(f_*''(x;M):= \mathrm{d}^2 f_*(x;M)/\mathrm{d}x^2 = 1/x\); and \(f_*(M;M)=f_*'(M;M)=0\). Thus, for some \(\xi =\xi _n\) in \([0,\epsilon _n]\), \(f_*(M + \epsilon _n;M) = [2(M + \xi )]^{-1} \epsilon _n^2.\)

Proof of (34). Case 1: \(\epsilon _n < M\). Since \(\xi \in [0, \epsilon _n]\), we have \(\xi < M\) and \([2(M+\xi )]^{-1} > 1/(4M)\); now (36) implies \({\mathbb P}(\mathcal{A}_n) \le e^{-r_n\epsilon _n^2/(4M)}\); equating this to \(n^{-\eta }\) and taking logarithms gives \(-r_n\epsilon _n^2/(4M) = -\eta \log (n)\), i.e., \(\epsilon _n = 2 \eta ^{1/2}M^{1/2}(\log n/r_n)^{1/2}\), as assumed. This proves (34) for \(\epsilon _n < M\).

Case 2: \(\epsilon _n \ge M\). We have \([2(M+\xi )]^{-1}\epsilon _n^2 {\mathop {\ge }\limits ^{}} [2(M+\epsilon _n)]^{-1}\epsilon _n^2 {\mathop {\ge }\limits ^{}} M/4\), where the first step uses that \(\xi \le \epsilon _n\) and the second step uses that the left side is minimized at \(\epsilon _n = M\). Thus, (36) gives \({\mathbb P}(\mathcal{A}_n) \le e^{-r_n M/4} \le e^{-n^\beta M/4}\). A simple calculation shows that \(e^{-n^\beta M/4} \le c n^{-\eta }\) for \(c = [4\eta /(M\beta e)]^{\eta /\beta }\) and all \(n\ge 1\). This proves (34) for \(\epsilon _n \ge M\).

Proof of (35). For \(\tilde{\epsilon }_n \ge M\), the result holds trivially. For \(\tilde{\epsilon }_n < M\), a Taylor expansion gives \( f_*(M - \tilde{\epsilon }_n;M) = [2(M - \xi )]^{-1} \tilde{\epsilon }_n^2 \) for some \(\xi =\xi _n\) in \([0,\tilde{\epsilon }_n]\); note \(0 < M-\xi \le M\) and thus \([2(M-\xi )]^{-1} \tilde{\epsilon }_n^2 \ge [2M]^{-1}\tilde{\epsilon }_n^2\). Now (37) implies \({\mathbb P}(\mathcal{B}_n) \le e^{-r_n\tilde{\epsilon }_n^2/(2M)}\), and the result follows. \(\square \)

The following result is known as Hoeffding’s inequality.

Lemma 3

Let \(\{I_n\}\) be independent Bernoulli(q) with \(q \in (0,1)\), and let \(S_n := \sum _{i=1}^n I_i\). Then for any nonnegative sequence \(\{\epsilon _n\}\) and all \(n \ge 1\), \(\max \big \{ {\mathbb P}\big (S_n - nq \ge n\epsilon _n\big ) , {\mathbb P}\big (S_n - nq \le -n\epsilon _n\big ) \big \} \le e^{-2n \epsilon _n^2}. \)

8.1 Auxiliary results and their proofs

8.1.1 Results supporting section 4

Lemma 4

Let \(X_n\) be a Poisson random variable with mean n, and let \(Z_n = (X_{n}/n)^{-1} \mathbb {1}_{\left[ {X_{n}>0} \right] }\). Then \(\lim _{n\rightarrow \infty } {\mathbb E}[Z_n] = 1\).

Proof

We claim: (a) \(Z_n {\mathop {\Rightarrow }\limits ^{}} 1\) as \(n\rightarrow \infty \); and (b) \(\lim _{\alpha \rightarrow \infty } \sup _n {\mathbb E}[Z_n \mathbb {1}_{\left[ {|Z_n| \ge \alpha } \right] } ] {\mathop {=}\limits ^{}} 0\); then, the result follows from Theorem 25.12 of Billingsley (1986). Condition (a) follows from: (i) \(X_n/n \Rightarrow 1\); (ii) \(Z_n = f(X_n/n)\), where \(f(x) := x\mathbb {1}_{\left[ {x>0} \right] }\) is continuous at \(x=1\); and (iii) the Continuous Mapping Theorem (Billingsley 1986, Theorem 29.2). To verify condition (b), note: \( {\mathbb E}[Z_n\mathbb {1}_{\left[ {|Z_n|\ge \alpha } \right] }] {\mathop {=}\limits ^{}} {\mathbb E}[n X_n^{-1} \mathbb {1}_{\left[ {X_n \le n/\alpha } \right] }] {\mathop {=}\limits ^{}} n \sum _{k=1}^{\lfloor n/\alpha \rfloor } p(k;n) / k, \) where the first step uses that \(\{|Z_n| \ge \alpha \} = \{X_n \le n/\alpha \}\); and the second step puts \(p(k;n) := {\mathbb P}(X_n = k) = e^{-n}n^k/k!\). The sum has at most \(n/\alpha \) summands, and each of them is at most \( \max _{1\le k\le n/\alpha } p(k,n) \le e^{-n}n^{n/\alpha }/\lfloor n/\alpha \rfloor ! \sim c_{\alpha }^n (\alpha /(2\pi n))^{1/2} =: u_n(\alpha ) \) where \(c_{\alpha } := \left( \alpha ^{1/\alpha } e^{-1+1/\alpha }\right) \) and the “\(\sim \)” step uses Stirling’s formula. Thus \(\lim _{\alpha \rightarrow \infty } \sup _n {\mathbb E}[Z_n\mathbb {1}_{\left[ {Z_n\ge \alpha } \right] }]\) is at most \(\lim _{\alpha \rightarrow \infty } \sup _n n \frac{n}{\alpha } u_n(\alpha ) = 0\), which follows from \(\lim _{\alpha \rightarrow \infty } c_{\alpha } =e^{-1} < 1\). \(\square \)

8.1.2 Results supporting section 5.1

In this section, the notation and conditions used in Theorem 1 are in force; in particular, \(\eta \) satisfies \(\eta \ge 2\); \(r_n = n\tau _n/\kappa _n\); and \(u_n\), defined in (25), satisfies

for some \(\underline{c}_u>0\) and \(\overline{c}_u> 0\). By Assumption 2 and \(\Lambda (p) := \lambda q(p)\),

for any \(p_1,\ p_2, \lambda \), where \(\overline{M}_{\Lambda }:= \overline{\lambda }\overline{M}\) and \(\underline{M}_{\Lambda }:= \underline{\lambda }\underline{M}\). Moreover, the same assumption gives \(|p_1q(p_1)-p_2q(p_2)| \le \overline{p}\overline{M}|p_1-p_2| + \overline{q}|p_1-p_2|\) for any \(p_1,\ p_2\), where \(\overline{q}:=\sup _p q(p)=q(\underline{p})\). Thus \(r(\cdot )\) is \(\overline{M}_{r}\)-Lipschitz with \(\overline{M}_{r}:=\overline{q}+\overline{M}\overline{p}\).

Lemma 5

(Unconstrained maximizer) Let \(\eta \ge 2\), \({\alpha }> 0\), and define \(R_u:= 4\max \{c_1, c_2\}\), where \(c_1:=\overline{M}_{r}(\overline{p}-\underline{p})/2\), \(c_2:=\overline{p}\eta ^{1/2}(2\underline{\lambda })^{-1/2}(1+{\alpha })\). Then \( {\mathbb P}\{ r(p^u) - r(\widehat{p}^u) \ge R_uu_n\} \le C_1/n^{\eta -1} \) for all \(n\ge \underline{n}\), with \(C_1\) and \(\underline{n}\) as in Lemma 1(b).

Proof of Lemma 5

Recall that \(p_i\) is a short form for \(p_{i,n}\). Put \(\widehat{q}(p_i)=\widehat{q}_n(p_i)\) and \(\widehat{r}(p_i) = p_i \widehat{q}_n (p_i)\), and let j be the interval \((p_{j-1},p_j]\) that contains \(p^u\) (we drop the dependence on n to lighten the notation). Now

since \(|r(p^u)-r(p_j)| \le \overline{M}_{r}(\overline{p}-\underline{p})\kappa _n^{-1}\) (since \(r(\cdot )\) is \(\overline{M}_{r}\)-Lipschitz and \(|p^u-p_j| \le (\overline{p}-\underline{p})\kappa _n^{-1}\)); \(\widehat{r}(p_j) - \widehat{r}(\widehat{p}^u) \le 0\) (since \(\widehat{p}^u= \text {arg max\;}_{1\le j\le \kappa _n} p_i\widehat{q}(p_i)\)); and the other two terms’ absolute value is at most \(\max _{1\le i\le \kappa _n} |r(p_i)-\widehat{r}(p_i)|\). Now

by construction of \(R_u\). Now

where step (a) uses (40); step (b) uses a union bound; step (c) uses (41); and step (d) uses Lemma 1(b) and that \(\kappa _n = o(n)\). \(\square \)

Lemma 6

(Clearance price) Let \(\eta \ge 2\), \({\alpha }> 0\), \(K_c:= 4 \underline{M}_{\Lambda }^{-1} \max \{c_1, c_2(\alpha )\}\), where \(c_1:=\overline{M}_{\Lambda }(\overline{p}-\underline{p})/2\) and \(c_2:=c_2(\alpha )=(\eta \overline{\lambda }/2)^{1/2}(1+{\alpha })\), and \(\overline{M}_{r}:=\overline{q}+\overline{M}\overline{p}\). Let \(C_1\) and \(\underline{n}=\underline{n}(\alpha )\) be as in Lemma 1(b). For all \(n\ge \underline{n}\),

Proof of Lemma 6

Put \(\widehat{\Lambda }(p_i) := \widehat{\lambda }_n\widehat{q}_n(p_i)\) and let j be the interval \((p_{j-1},p_j]\) that contains \(p^c\) (we drop the dependence on n to lighten the notation). Now

where (a) uses the triangle inequality; (b) uses that \(\widehat{p}^c= \text {arg min\;}_{1\le i\le \kappa _n} |\widehat{\Lambda }(p_i) - x/T| = \text {arg min\;}_{1\le i\le \kappa _n} |\widehat{\Lambda }(p_i) - \Lambda (p^c)|\); and (c) uses the triangle inequality; that \(\Lambda (\cdot )\) is \(\overline{M}_{\Lambda }\)-Lipschitz (see (39)); and that \(|p_j-p^c| \le (\overline{p}-\underline{p}) \kappa _n^{-1}\). Now

where step (a) uses (39); step (b) uses that \( |\Lambda (\widehat{p}^c) - \Lambda (p^c)| \le 2\max _{1\le i\le \kappa _n} |\widehat{\Lambda }(p_i)-\Lambda (p_i)| + \overline{M}_{\Lambda }(\overline{p}-\underline{p})/\kappa _n, \) which follows from (44); step (c) uses that \(K_cu_n/2 - c_1\kappa _n^{-1} \ge (\eta \overline{\lambda }/2)^{1/2} (1+\alpha )(\log n /r_n)^{1/2}\) (by construction of \(K_c\)); step (d) uses a union bound; and step (e) uses Lemma 1(b) and that \(\kappa _n = o(n)\). The left part of (43) is proven, and the right part follows since \(r(\cdot )\) is \(\overline{M}_{r}\)-Lipschitz. \(\square \)

Lemma 7

(Revenue rate) Let \({\alpha }> 0\). Put \(R= \max \{2R_u, \overline{M}_{r}^{-1}K_c, 2\overline{M}_{r}K_c\}\), with \(R_u\) as in Lemma 5 and \(K_c\) as in Lemma 6. Let \(C_1\) and \(\underline{n}=\underline{n}(\alpha )\) be as in Lemma 1(b). Then \( {\mathbb E}[r(\widehat{p})] \ge r(p^D) -Ru_n - 2 r(p^D) C_1/n^{\eta -1}\) for all \(n \ge \underline{n}\).

Proof of Lemma 7

The proof is fully parallel to Besbes and Zeevi (2009, Electronic Companion, Lemma 4, Step 3), except that: Lemmata 5 and 6 replace their analogous results; our \(u_n\) in (25) and revenue-rate per arrival, \(r(p)=pq(p)\), replace their \(u_n\) and revenue rate per time, \(r(\cdot )\), respectively. \(\square \)

Lemma 8

(Sales during learning) For \(M := \overline{\lambda }\overline{q}\), \(K_{L}= M + 2\eta ^{1/2}M^{1/2}\), and \(C_0\) as in Lemma 2, we have \( {\mathbb P}( Y_n^{(L)}> K_{L}n u_n ) \le C_0/ n^{\eta -1}\) for all \(n \ge 1\) and \(\eta \ge 2\).

Proof of Lemma 8

We have \( {\mathbb P}( Y_n^{(L)}> K_{L}n u_n ) = {\mathbb P}\big (\sum _{i=1}^{\kappa _n} N(\lambda q(p_i) r_n)> K_{L}n u_n \big ) \le \sum _{i=1}^{\kappa _n} {\mathbb P}\big ( N(\lambda q(p_i) r_n) > K_{L}n u_n/\kappa _n \big ),\) and thus

where step (a) uses that \(\lambda q(p_i) \le M\); step (b) uses that \( - M r_n + K_{L}n u_n / \kappa _n = - M n\tau _n/\kappa _n + K_{L}n u_n/\kappa _n \ge n/\kappa _n (K_{L}-M) u_n \ge 2\eta ^{1/2}M^{1/2} (\log n/r_n)^{1/2},\) which uses that \(u_n \ge \max \{ \tau _n, (\log n / r_n)^{1/2}\}\); \(n/\kappa _n \ge 1\); and the definition of \(K_{L}\); and step (c) uses Lemma 2 and that \(\kappa _n=o(n)\). \(\square \)

Lemma 9

(Mean overshoot) Let \(\Lambda (\overline{p}) \le x/T\). Take \(\underline{n}=\underline{n}(\alpha )\) as in Lemma 1. Then \( {\mathbb E}[(Y_n - nx)^+] \le K_En u_n\) for some positive \(K_E\) and all \(n\ge \underline{n}\).

Proof of Lemma 9

Let \(K_{\Lambda }= \overline{M}_{\Lambda }K_c\), where \(K_c\) is defined in Lemma 6. Put \(K_m= x + K_{\Lambda }T \sup _{n\ge 1} u_n < \infty \). Let \(K_Y= 2\max \{K_{L}, K_{\Lambda }T + 2\underline{c}_u^{-1}\eta ^{1/2}K_m^{1/2}e^{-1/4}\}\), with \(K_{L}\) as in Lemma 8, \(\underline{c}_u\) as in (38), and \(\eta \ge 2\). Since \(Y_n= Y_n^{(L)}+ Y_n^{(P)}\), we have

where step (a) uses Lemma 8 and \(K_Y/2 \ge K_{L}\); and \(C_0\) comes from Lemma 2. To bound the last term in (46), we note that \(p^c- \widehat{p}= p^c- \max (\widehat{p}^u, \widehat{p}^c) \le p^c- \widehat{p}^c\). By Lemma 6, \( {\mathbb P}(p^c- \widehat{p}> K_cu_n) \le {\mathbb P}(p^c- \widehat{p}^c> K_cu_n) \le C_1n^{-\eta +1} \) for all \(n \ge \underline{n}\). This and the fact that \(\Lambda ()\) is decreasing and \(\overline{M}_{\Lambda }\)-Lipschitz imply

Outside the event above, \(\Lambda (\widehat{p})n(T-\tau _n)\) (the mean \(Y_n^{(P)}\)) is at most \(n v_n\), where

where the inequality uses \(\Lambda (p^c) \le x/T\), which follows from \(\Lambda (\overline{p}) \le x/T\). Now

for all \(n \ge \underline{n}\), where step (a) uses (47); and step (b) applies Lemma 2 with \(r_n=n\) and \(M=K_m\ge \sup _n v_n\); the lemma applies because \( nx + K_Yn u_n/2 - n v_n {\mathop {\ge }\limits ^{(b1)}} nx + K_Yn u_n/2 - n (x + K_{\Lambda }T u_n) {\mathop {\ge }\limits ^{(b2)}} 2 (\eta K_mn \log n)^{1/2},\) where step (b1) uses (48); and step (b2) uses that \(u_n \ge \underline{c}_u(\log n/n)^{1/4}\) and \(K_Y/2 - K_{\Lambda }T \ge 2\underline{c}_u^{-1}\eta ^{1/2}K_m^{1/2}\sup _{n\ge 1} (\log n/n)^{1/4}\), by construction of \(K_Y\). Now

for all \(n\ge \underline{n}\) and some constant \(K_E\), where step (a) uses that for a Poisson random variable Z with mean \(\mu \), \({\mathbb E}[Z|Z>a] \le a+1+\mu \) (Besbes and Zeevi 2009, Online Companion, Lemma 5); and it also uses \( {\mathbb P}( Y_n- nx > K_Yn u_n ) \le (2C_0+C_1)/n^{\eta -1}, \) which follows from (46) and (49). \(\square \)

Lemma 10

Let \(\Lambda (\overline{p}) > x/T\). Let \(\tilde{K}_Y= \max \{ K_{L}, \Lambda (\overline{p})+2\underline{c}_u^{-1} [\eta \Lambda (\overline{p}) T]^{1/2} e^{-1/4}\}\), with \(K_{L}\) as in Lemma 8, \(\underline{c}_u\) as in (38), and \(\eta \ge 2\). Put \( \mathcal{A}:= \{\omega : \min \{Y_n^{(P)}, (nx-Y_n^{(L)})^+\} \ge nx - \tilde{K}_Yn u_n, |\widehat{p}- p^D| \le K_cn u_n \} \), with \(K_c\) as in Lemma 6. Then \( {\mathbb P}(\mathcal{A}) \ge 1 - (2C_0+C_1)/n^{\eta -1} \) for all \(n \ge \underline{n}\), with \(C_0\) as in Lemma 2 and \(C_1\), \(\underline{n}\) as in Lemma 1.

Proof of Lemma 10

where (a) uses a union bound; step (b) uses Lemma 8 and that \(|\widehat{p}-p^D| \le |\widehat{p}^c-p^c|\); and step (c) uses Lemma 6. Now, putting \(\gamma _n := n\Lambda (\overline{p})(T-\tau _n)\),

where step (a) uses that \(\Lambda (\widehat{p}) \ge \Lambda (\overline{p})\) and \(x \le \Lambda (\overline{p}) T\); and step (b) applies Lemma 2 with \(r_n=n\) and \(M=\Lambda (\overline{p})T\); the lemma applies because \( n (\tilde{K}_Yu_n - \Lambda (\overline{p}) \tau _n) {\mathop {\ge }\limits ^{}} (\tilde{K}_Y- \Lambda (\overline{p})) \underline{c}_un^{3/4}\log ^{1/4} n {\mathop {\ge }\limits ^{}} 2 [\eta \Lambda (\overline{p}) T n \log n]^{1/2}\), where the last step uses \(\tilde{K}_Y- \Lambda (\overline{p}) \ge 2\underline{c}_u^{-1}[\eta \Lambda (\overline{p})T]^{1/2}\sup _{n\ge 1} (\log n/n)^{1/4}\), by construction of \(\tilde{K}_Y\). This proves (51); this and (50) give the result. \(\square \)

9 List of notation

Table 2 lists the symbols that are essential to all formal statements (definitions, assumptions, conditions, claims, lemmata, propositions, theorems). Each symbol is accompanied by a description in English, or an expression, or definition, via other symbols in the table.

References

Araman VF, Caldentey R (2009) Dynamic pricing for nonperishable products with demand learning. Oper Res 57:1169–1188

Auer P, Cesa-Bianchi N, Fischer P (2002) Finite-time analysis of the multiarmed bandit problem. Mach Learn 47:235–256

Babaioff M, Dughmi S, Kleinberg R, Slivkins A (2015) Dynamic pricing with limited supply. ACM Trans Econ Comput 3(1):1–26

Besbes O, Zeevi A (2009) Dynamic pricing without knowing the demand function: risk bounds and near-optimal algorithms. Oper Res 57:1407–1420

Besbes O, Zeevi A (2012) Blind network revenue management. Oper Res 60:1537–1550

Besbes O, Zeevi A (2015) On the (surprising) sufficiency of linear models for dynamic pricing with demand learning. Manag Sci 61:723–739

Bickel PJ, Doksum KA (1977) Mathematical statistics: basic ideas and selected topics. Holden-Day Company, Oakland

Billingsley P (1986) Probability and measure, 2nd edn. Wiley, New York

Bitran G, Caldentey R (2003) An overview of pricing models for revenue management. Manuf Serv Oper Manag 5:203–229

Brémaud P (1981) Point processes and queues: martingale dynamics, vol 50. Springer, Berlin

Broder J, Rusmevichientong P (2012) Dynamic pricing under a general parametric choice model. Oper Res 60:965–980

Chen B, Chao X, Ahn H-S (2019) Coordinating pricing and inventory replenishment with nonparametric demand learning. Oper Res 67:1035–1052

Cope E (2009) Regret and convergence bounds for a class of continuum-armed bandit problems. IEEE Trans Autom Control 54:1243–1253

Dembo A, Zeitouni O (1998) Large deviation techniques and applications. Springer, Berlin

den Boer AV (2015) Dynamic pricing and learning: historical origins, current research, and new directions. Surv Oper Res Manag Sci 20:1–18

den Boer AV, Keskin NB (2019) Discontinuous demand functions: estimation and pricing. Manag Sci. https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3003984

den Boer AV, Zwart B (2014) Simultaneously learning and optimizing using controlled variance pricing. Manag Sci 60:770–783

Farias VF, Van Roy B (2010) Dynamic pricing with a prior on market response. Oper Res 58:16–29

Gallego G, van Ryzin G (1994) Optimal dynamic pricing of inventories with stochastic demand over finite horizons. Manag Sci 40:999–1020

Keskin NB, Zeevi A (2014) Dynamic pricing with an unknown demand model: asymptotically optimal semi-myopic policies. Oper Res 62:1142–1167

Kleinberg RD (2004) Nearly tight bounds for the continuum-armed bandit problem. In: Proceedings of the 17th international conference on neural information processing systems, pp 697–704

Lei Y, Jasin S, Sinha A (2014) Near-optimal bisection search for nonparametric pricing with inventory constraint. https://deepblue.lib.umich.edu/handle/2027.42/108717

Lin KY (2006) Dynamic pricing with real-time demand learning. Eur J Oper Res 174:522–538

Rudin W (1976) Principles of mathematical analysis, 3rd edn. McGraw-Hill, New York

Talluri K, van Ryzin G (2005) Theory and practice of revenue management. Springer, Berlin

Tsybakov AB (2009) Introduction to nonparametric estimation. Springer, New York