Abstract

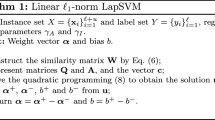

Laplacian support vector machine (LapSVM), which is based on the semi-supervised manifold regularization learning framework, performs better than the standard SVM, especially when supervised information is insufficient. However, the use of the hinge loss makes LapSVM sensitive to noise around the decision boundary. To enhance the performance of LapSVM, we present a novel semi-supervised SVM with the asymmetric squared loss (asy-LapSVM), which deals with the expectile distance and is less sensitive to noise-corrupted data. We further present a simple and efficient functional iterative method to solve the proposed asy-LapSVM, and we establish the convergence of this method both theoretically and experimentally. Numerical experiments on a number of commonly used datasets with noise of different variances demonstrate the validity of the proposed asy-LapSVM and the feasibility of the presented functional iterative method.
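To make the two ingredients named above concrete, here is a minimal Python sketch, not the authors' implementation: it evaluates the asymmetric squared (expectile) loss next to the hinge loss, and a manifold-regularized objective for a linear model f(x) = w·x + b. Several choices are assumptions for illustration only: the loss parameterization (weight p on positive residuals u = 1 - y f(x) and 1 - p on negative ones, as in asymmetric least squares SVMs), the fully connected heat-kernel graph, the 1/n² scaling of the Laplacian term, and all function and parameter names (`asym_squared_loss`, `objective`, `gamma_a`, `gamma_i`, `sigma`). The functional iterative solver from the paper is not reproduced here.

```python
import math
from typing import List, Sequence


def asym_squared_loss(u: float, p: float) -> float:
    """Asymmetric squared (expectile) loss of the residual u = 1 - y * f(x).

    Assumed parameterization: weight p on u >= 0 and 1 - p on u < 0, with 0 < p < 1.
    """
    return p * u * u if u >= 0 else (1.0 - p) * u * u


def hinge_loss(u: float) -> float:
    """Standard hinge loss written in terms of the same residual u = 1 - y * f(x)."""
    return max(0.0, u)


def heat_kernel_weights(X: Sequence[Sequence[float]], sigma: float) -> List[List[float]]:
    """Fully connected graph with heat-kernel edge weights (an assumed graph construction)."""
    n = len(X)
    W = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                d2 = sum((a - b) ** 2 for a, b in zip(X[i], X[j]))
                W[i][j] = math.exp(-d2 / (2.0 * sigma ** 2))
    return W


def laplacian_penalty(f: Sequence[float], W: Sequence[Sequence[float]]) -> float:
    """f^T L f with L = D - W, computed as 0.5 * sum_ij W_ij (f_i - f_j)^2 for symmetric W."""
    n = len(f)
    return 0.5 * sum(W[i][j] * (f[i] - f[j]) ** 2 for i in range(n) for j in range(n))


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def objective(
    w: Sequence[float],
    b: float,
    X_lab: Sequence[Sequence[float]],
    y: Sequence[float],
    X_all: Sequence[Sequence[float]],
    p: float,
    gamma_a: float,
    gamma_i: float,
    sigma: float,
) -> float:
    """Hypothetical manifold-regularized objective for a linear model:

    mean asymmetric loss on labeled points + gamma_a * ||w||^2
    + gamma_i / n^2 * f^T L f over all n (labeled + unlabeled) points.
    """
    f_lab = [dot(w, x) + b for x in X_lab]
    data_term = sum(asym_squared_loss(1.0 - yi * fi, p) for yi, fi in zip(y, f_lab)) / len(y)
    f_all = [dot(w, x) + b for x in X_all]
    n = len(X_all)
    smooth_term = laplacian_penalty(f_all, heat_kernel_weights(X_all, sigma)) / n ** 2
    return data_term + gamma_a * dot(w, w) + gamma_i * smooth_term


if __name__ == "__main__":
    X_lab = [[-1.0, 0.0], [1.0, 0.0]]
    y = [-1.0, 1.0]
    X_unl = [[-0.9, 0.2], [0.8, -0.1], [0.1, 0.0]]
    X_all = X_lab + X_unl
    for u in (-0.5, 0.5, 1.5):
        print(u, hinge_loss(u), asym_squared_loss(u, p=0.8))
    print(objective([1.0, 0.0], 0.0, X_lab, y, X_all, p=0.8, gamma_a=0.1, gamma_i=1.0, sigma=1.0))
```

The hinge loss is zero once y f(x) ≥ 1, whereas the asymmetric squared loss also penalizes points with y f(x) > 1 (negative residuals), with p controlling how strongly each side is weighted; p = 0.5 gives half of the ordinary squared loss.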

Acknowledgements

The authors gratefully acknowledge the helpful comments and suggestions of the reviewers, which have improved the presentation.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Pei, H., Lin, Q., Yang, L. et al. A novel semi-supervised support vector machine with asymmetric squared loss. Adv Data Anal Classif 15, 159–191 (2021). https://doi.org/10.1007/s11634-020-00390-y

DOI: https://doi.org/10.1007/s11634-020-00390-y