An Improved Boundary-Aware Perceptual Loss for Building Extraction from VHR Images

Abstract

1. Introduction

- We propose an improved boundary-aware perceptual (BP) loss to refine the building extraction performance of CNN models in boundary areas. The proposed BP loss consists of a loss network and transfer loss functions. Unlike other approaches, we design a simple but efficient loss network, named CycleNet, to learn structural information embeddings; the learned structural information is then transferred into the building extraction network through the proposed transfer loss functions. The BP loss lets the CNN model learn semantic and structural information simultaneously, without additional per-pixel loss functions such as cross-entropy loss. This property avoids conflicts between different loss functions and leads to better building extraction performance.

- We design easy-to-use and efficient learning schedules to train the loss network and apply the BP loss to refine the building extraction network.

- We conduct validation experiments on the popular WHU aerial dataset [31] and the INRIA dataset [32]. Using two representative semantic segmentation models (UNet and PSPNet), we analyze how the proposed BP loss teaches the building extraction networks through the structural information embeddings. On both datasets, the experimental results show that the BP loss effectively refines representative CNN architectures, with clear performance improvements in boundary areas.
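As a rough illustration of the first contribution, the sketch below shows the general shape of a perceptual-style transfer loss: a frozen loss network embeds both the predicted mask and the ground-truth mask, and the loss is the distance between the two embeddings. The CycleNet layers and the exact transfer loss functions are not given in this excerpt, so everything here is an assumption: the two fixed 3x3 kernels (`EDGE_KERNEL`, `SMOOTH_KERNEL`) are hypothetical stand-ins for learned, frozen loss-network weights, and the L1 distance is one plausible choice of transfer loss.

```python
import numpy as np

# Hypothetical fixed kernels standing in for learned, frozen loss-network weights.
EDGE_KERNEL = np.array([[0, -1, 0], [-1, 4, -1], [0, -1, 0]], dtype=np.float64)
SMOOTH_KERNEL = np.full((3, 3), 1.0 / 9.0)


def conv2d_same(x, kernel):
    """Naive single-channel 2-D cross-correlation with zero padding ('same' size)."""
    kh, kw = kernel.shape
    h, w = x.shape
    padded = np.pad(x, ((kh // 2, kh // 2), (kw // 2, kw // 2)))
    out = np.zeros((h, w), dtype=np.float64)
    for i in range(kh):
        for j in range(kw):
            out += kernel[i, j] * padded[i:i + h, j:j + w]
    return out


def embed(mask, kernels):
    """Stand-in loss network: one convolution followed by ReLU per kernel."""
    mask = np.asarray(mask, dtype=np.float64)
    return [np.maximum(conv2d_same(mask, k), 0.0) for k in kernels]


def transfer_loss(pred, target, kernels=(EDGE_KERNEL, SMOOTH_KERNEL)):
    """Mean L1 distance between the embeddings of prediction and ground truth."""
    pred_feats = embed(pred, kernels)
    target_feats = embed(target, kernels)
    return float(np.mean([np.abs(p - t).mean()
                          for p, t in zip(pred_feats, target_feats)]))


# Usage: a perfect prediction gives zero loss, a shifted one gives a positive loss.
target = np.zeros((8, 8))
target[2:6, 2:6] = 1.0
shifted = np.roll(target, 1, axis=1)
loss_same = transfer_loss(target, target)
loss_shifted = transfer_loss(shifted, target)
```

Because the loss is computed on embeddings rather than on raw pixels, a prediction whose boundary is displaced is penalized through the edge-sensitive features, which is the intuition behind supervising structure without a separate per-pixel term.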

2. Proposed Method

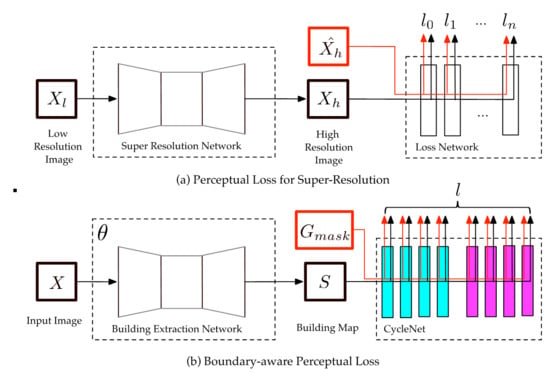

2.1. Perceptual Loss

2.2. CycleNet Architecture

| Algorithm 1 Training the CycleNet |

| Training Model: CycleNet. Input: Ground Truth Building Mask; Ground Truth Building Boundary. Output: Parameters of CycleNet. for epoch in Total Epoch: End for |

2.3. Transfer Loss Functions

| Algorithm 2 Structural Information Embedding |

| Training Model: Pipeline Network. Input: VHR Remote Sensing Image; Ground Truth Building Mask; Ground Truth Building Boundary. Output: Parameters of Pipeline Network. for epoch in Total Epoch: Generate the balance weight: End for |

3. Experiments and Analysis

3.1. Study Materials

3.1.1. Datasets

3.1.2. Boundary Generation

| Algorithm 3 Boundary Generation |

| Input: Ground Truth Building Mask. Output: Ground Truth Building Boundary. Define for in : if any End for |
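The symbols of Algorithm 3 did not survive in this copy, so the following is only a minimal sketch of one common way to derive a boundary map from a binary building mask, consistent with the surviving "for ... if any ..." structure: a building pixel is marked as boundary if any of its neighbours is background. The 8-neighbourhood, the one-pixel boundary width, the edge padding at the image border, and the function name `generate_boundary` are all assumptions, not details confirmed by the paper.

```python
import numpy as np


def generate_boundary(mask):
    """Return a uint8 map that is 1 where a building pixel touches background.

    Assumptions: 8-connected neighbourhood, one-pixel-wide boundary, and edge
    padding so that the image border itself does not count as background.
    """
    mask = np.asarray(mask).astype(bool)
    h, w = mask.shape
    padded = np.pad(mask, 1, mode="edge")
    all_neighbours_building = np.ones((h, w), dtype=bool)
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            if dy == 0 and dx == 0:
                continue  # skip the pixel itself
            all_neighbours_building &= padded[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]
    # Boundary: building pixels with at least one background neighbour.
    return (mask & ~all_neighbours_building).astype(np.uint8)


# Usage: a 3x3 building in a 5x5 tile yields a ring of 8 boundary pixels.
tile = np.zeros((5, 5), dtype=np.uint8)
tile[1:4, 1:4] = 1
boundary = generate_boundary(tile)
```

The vectorized shifts replace an explicit per-pixel loop; a wider boundary could be obtained by applying the same test over a larger neighbourhood.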

3.1.3. Evaluation Metrics
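The result tables report Acc, IoU, Precision, Recall and F1. Assuming these follow the standard pixel-wise definitions for binary segmentation (the paper's own formulas are not reproduced in this copy), they can be computed from a predicted mask and a ground-truth mask as sketched below. The function name `binary_metrics` and the choice to return 0.0 when a denominator is zero are illustrative assumptions.

```python
import numpy as np


def _ratio(numerator, denominator):
    """Safe division; returns 0.0 for an empty denominator (an assumption)."""
    return float(numerator) / float(denominator) if denominator > 0 else 0.0


def binary_metrics(pred, target):
    """Pixel-wise Acc, IoU, Precision, Recall and F1 for binary masks."""
    pred = np.asarray(pred).astype(bool)
    target = np.asarray(target).astype(bool)
    tp = int(np.sum(pred & target))    # building predicted as building
    fp = int(np.sum(pred & ~target))   # background predicted as building
    fn = int(np.sum(~pred & target))   # building predicted as background
    tn = int(np.sum(~pred & ~target))  # background predicted as background
    precision = _ratio(tp, tp + fp)
    recall = _ratio(tp, tp + fn)
    return {
        "acc": _ratio(tp + tn, tp + fp + fn + tn),
        "iou": _ratio(tp, tp + fp + fn),
        "precision": precision,
        "recall": recall,
        "f1": _ratio(2 * precision * recall, precision + recall),
    }


# Usage on a 2x2 toy example with one pixel of each outcome type.
scores = binary_metrics([[1, 1], [0, 0]], [[1, 0], [1, 0]])
```

Restricting `pred` and `target` to boundary pixels before calling the function would give a boundary-only variant of the same scores.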

3.2. Ablation Evaluation

3.2.1. Structural Information Embedding of the Boundary-Aware Perceptual Loss

3.2.2. Adaptation of Boundary-Aware Perceptual Loss

3.3. Boundary Analysis

3.4. SOTA Comparison

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Huang, H.; Xu, K. Combing Triple-Part Features of Convolutional Neural Networks for Scene Classification in Remote Sensing. Remote Sens. 2019, 11, 1687.

- Zhu, R.; Yan, L.; Mo, N.; Liu, Y. Attention-Based Deep Feature Fusion for the Scene Classification of High-Resolution Remote Sensing Images. Remote Sens. 2020, 12, 742.

- Cui, B.; Zhang, Y.; Yan, L.; Wei, J.; Wu, H. An Unsupervised SAR Change Detection Method Based on Stochastic Subspace Ensemble Learning. Remote Sens. 2019, 11, 1314.

- Li, L.; Wang, C.; Zhang, H.; Zhang, B.; Wu, F. Urban Building Change Detection in SAR Images Using Combined Differential Image and Residual U-Net Network. Remote Sens. 2019, 11, 1091.

- Mahdavi, S.; Salehi, B.; Huang, W.; Amani, M.; Brisco, B. A PolSAR Change Detection Index Based on Neighborhood Information for Flood Mapping. Remote Sens. 2019, 11, 1854.

- Chen, C.; Gong, W.; Chen, Y.; Li, W. Object Detection in Remote Sensing Images Based on a Scene-Contextual Feature Pyramid Network. Remote Sens. 2019, 11, 339.

- Pan, X.; Yang, F.; Gao, L.; Chen, Z.; Zhang, B.; Fan, H.; Ren, J. Building Extraction from High-Resolution Aerial Imagery Using a Generative Adversarial Network with Spatial and Channel Attention Mechanisms. Remote Sens. 2019, 11, 917.

- Zhang, Y.; Gong, W.; Sun, J.; Li, W. Web-Net: A Novel Nest Networks with Ultra-Hierarchical Sampling for Building Extraction from Aerial Imageries. Remote Sens. 2019, 11, 1897.

- Neuville, R.; Pouliot, J.; Poux, F.; Billen, R. 3D Viewpoint Management and Navigation in Urban Planning: Application to the Exploratory Phase. Remote Sens. 2019, 11, 236.

- Khanal, N.; Uddin, K.; Matin, M.; Tenneson, K. Automatic Detection of Spatiotemporal Urban Expansion Patterns by Fusing OSM and Landsat Data in Kathmandu. Remote Sens. 2019, 11, 2296.

- Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Ibtehaz, N.; Rahman, M.S. MultiResUNet: Rethinking the U-Net architecture for multimodal biomedical image segmentation. Neural Netw. 2020, 121, 74–87.

- Zhao, J.; He, X.; Li, J.; Feng, T.; Ye, C.; Xiong, L. Automatic Vector-Based Road Structure Mapping Using Multibeam LiDAR. Remote Sens. 2019, 11, 1726.

- Huang, J.; Zhang, X.; Xin, Q.; Sun, Y.; Zhang, P. Automatic building extraction from high-resolution aerial images and LiDAR data using gated residual refinement network. ISPRS J. Photogramm. Remote Sens. 2019, 151, 91–105.

- Sun, G.; Huang, H.; Zhang, A.; Li, F.; Zhao, H.; Fu, H. Fusion of Multiscale Convolutional Neural Networks for Building Extraction in Very High-Resolution Images. Remote Sens. 2019, 11, 227.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention, Part III; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Springer: Cham, Switzerland, 2015; Volume 9351, pp. 234–241.

- Peng, D.; Zhang, Y.; Guan, H. End-to-End Change Detection for High Resolution Satellite Images Using Improved UNet++. Remote Sens. 2019, 11, 1382.

- Yue, K.; Yang, L.; Li, R.; Hu, W.; Zhang, F.; Li, W. TreeUNet: Adaptive Tree convolutional neural networks for subdecimeter aerial image segmentation. ISPRS J. Photogramm. Remote Sens. 2019, 156, 1–13.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Zhou, Z.W.; Siddiquee, M.M.R.; Tajbakhsh, N.; Liang, J.M. UNet++: A Nested U-Net Architecture for Medical Image Segmentation. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support, DLMIA 2018; Stoyanov, D., Taylor, Z., Carneiro, G., Syeda-Mahmood, T., Eds.; Springer: Cham, Switzerland, 2018; Volume 11045, pp. 3–11.

- Wu, G.; Shao, X.; Guo, Z.; Chen, Q.; Yuan, W.; Shi, X.; Xu, Y.; Shibasaki, R. Automatic Building Segmentation of Aerial Imagery Using Multi-Constraint Fully Convolutional Networks. Remote Sens. 2018, 10, 407.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6230–6239.

- Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

- Krähenbühl, P.; Koltun, V. Efficient Inference in Fully Connected CRFs with Gaussian Edge Potentials. Available online: http://papers.nips.cc/paper/4296-efficient-inference-in-fully-connected-crfs-with-gaussian-edge-potentials.pdf (accessed on 8 April 2020).

- Zheng, S.; Jayasumana, S.; Romera-Paredes, B.; Vineet, V.; Su, Z.; Du, D.; Huang, C.; Torr, P.H.S. Conditional Random Fields as Recurrent Neural Networks. In Proceedings of the 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1529–1537.

- Bertels, J.; Eelbode, T.; Berman, M.; Vandermeulen, D.; Maes, F.; Bisschops, R.; Blaschko, M.B. Optimizing the Dice score and Jaccard index for medical image segmentation: Theory and practice. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Cham, Switzerland, 2019; pp. 92–100.

- Iglovikov, V.; Shvets, A. TernausNet: U-Net with VGG11 Encoder Pre-Trained on ImageNet for Image Segmentation. arXiv 2018, arXiv:1801.05746.

- Johnson, J.; Alahi, A.; Li, F.-F. Perceptual Losses for Real-Time Style Transfer and Super-Resolution. In Computer Vision-ECCV 2016, Part II; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer: Cham, Switzerland, 2016; Volume 9906, pp. 694–711.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Chen, Y.; Dapogny, A.; Cord, M. SEMEDA: Enhancing Segmentation Precision with Semantic Edge Aware Loss. arXiv 2019, arXiv:1905.01892.

- Ji, S.; Wei, S.; Lu, M. Fully Convolutional Networks for Multisource Building Extraction From an Open Aerial and Satellite Imagery Data Set. IEEE Trans. Geosci. Remote Sens. 2018, 57, 574–586.

- Maggiori, E.; Tarabalka, Y.; Charpiat, G.; Alliez, P. Can semantic labeling methods generalize to any city? The Inria aerial image labeling benchmark. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 3226–3229.

- Sobel, I. History and Definition of the Sobel Operator. Retrieved from the World Wide Web 2014, 1505. Available online: https://www.researchgate.net/publication/239398674_An_Isotropic_3x3_Image_Gradient_Operator (accessed on 8 April 2020).

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Liu, P.; Liu, X.; Liu, M.; Shi, Q.; Yang, J.; Xu, X.; Zhang, Y. Building Footprint Extraction from High-Resolution Images via Spatial Residual Inception Convolutional Neural Network. Remote Sens. 2019, 11, 830.

- Liu, H.; Luo, J.; Huang, B.; Hu, X.; Sun, Y.; Yang, Y.; Xu, N.; Zhou, N. DE-Net: Deep Encoding Network for Building Extraction from High-Resolution Remote Sensing Imagery. Remote Sens. 2019, 11, 2380.

- Bischke, B.; Helber, P.; Folz, J.; Borth, D.; Dengel, A. Multi-task learning for segmentation of building footprints with deep neural networks. arXiv 2017, arXiv:1709.05932.

- Mou, L.; Zhu, X.X. RiFCN: Recurrent network in fully convolutional network for semantic segmentation of high resolution remote sensing images. arXiv 2018, arXiv:1805.02091.

| Block | Boundary IoU (%) | Mask IoU (%) |
|---|---|---|
| Boundary Block (Sigmoid+BCE) | 97.94 | - |
| Boundary Block (ReLU+BCE) | 99.96 | - |
| Boundary Block (ReLU+L1) | 99.69 | - |
| Mask Block (Sigmoid+BCE) | - | 16.46 |
| Mask Block (ReLU+BCE) | - | 12.77 |
| Mask Block (ReLU+L1) | - | 9.83 |
| CycleNet (Sigmoid+BCE) | 96.64 | 98.10 |
| CycleNet (ReLU+BCE) | 99.83 | 99.72 |
| CycleNet (ReLU+L1) | 99.56 | 99.58 |

| Loss (Architecture) | Acc (%) | Mask IoU (%) | Time (fps) |
|---|---|---|---|
| BP Loss (16, 16, 1) | 98.80 (+0.09) | 89.77 (+0.83) | 2.04 |
| BP Loss (16, 32, 1) | 98.81 (+0.10) | 89.81 (+0.87) | 1.95 |
| BP Loss (16, 16, 16, 1) | 98.85 (+0.14) | 90.11 (+1.17) | 1.88 |
| BP Loss (16, 32, 16, 1) | 98.86 (+0.15) | 90.27 (+1.33) | 1.71 |
| L1 Loss | 98.68 (-0.03) | 88.57 (-0.14) | 2.31 |

| UNet-Res34 | |||||

| BCE Loss | ✓ | ✓ | ✓ | ✓ | ✓ |

| Jaccard Loss | ✓ | ✓ | |||

| Dice Loss | ✓ | ✓ | |||

| SEMEDA Loss | ✓ | ✓ | |||

| Acc (%) | 98.71 | 98.73 | 98.74 | 98.75 | 98.77 |

| IoU (%) | 88.94 | 89.11 | 89.18 | 89.29 | 89.37 |

| UNet-Res34+BP Loss | |||||

| Acc (%) | 98.85 | 98.84 | 98.86 | 98.81 | 98.89 |

| IoU (%) | 90.11 | 90.07 | 90.21 | 89.81 | 90.40 |

| Metric | UNet | UNet+BP Loss |
|---|---|---|
| Acc (%) | 98.47 | 98.62 |
| IoU (%) | 53.55 | 56.84 |
| SA (%) | 78.42 | 79.86 |

| Method | Acc (%) | IoU (%) | Precision (%) | Recall (%) | F1 (%) |
|---|---|---|---|---|---|
| SegNet [19] | - | 86.58 | 92.55 | 93.06 | 92.80 |
| PSPNet [22] | 98.61 | 88.14 | 94.42 | 92.99 | 93.70 |
| UNet [16] | 98.74 | 89.31 | 93.85 | 94.87 | 94.35 |
| SiU-Net [31] | - | 88.40 | 93.80 | 93.90 | - |
| SRINet [35] | - | 89.09 | 95.21 | 93.28 | 94.23 |
| DE-Net [36] | - | 90.12 | 95.00 | 94.60 | 94.80 |
| PSPNet+BP Loss | 98.69 | 88.78 | 95.02 | 93.14 | 94.07 |
| UNet+BP Loss | 98.84 | 90.78 | 95.06 | 94.89 | 94.97 |

| Methods | Metric | Austin | Chicago | Kitsap County | Western Tyrol | Vienna | Overall |
|---|---|---|---|---|---|---|---|
| SegNet (Single-Loss) [37] | IoU | 74.81 | 52.83 | 68.06 | 65.68 | 72.90 | 70.14 |
| | Acc. | 92.52 | 98.65 | 97.28 | 91.36 | 96.04 | 95.17 |
| SegNet (Multi-Task Loss) [37] | IoU | 76.76 | 67.06 | 73.30 | 66.91 | 76.68 | 73.00 |
| | Acc. | 93.21 | 99.25 | 97.84 | 91.71 | 96.61 | 95.73 |
| RiFCN [38] | IoU | 76.84 | 67.45 | 63.95 | 73.19 | 79.18 | 74.00 |
| | Acc. | 96.50 | 91.76 | 99.14 | 97.75 | 93.95 | 95.82 |
| UNet [16] | IoU | 78.51 | 68.53 | 66.36 | 77.48 | 80.26 | 75.58 |
| | Acc. | 97.05 | 92.69 | 99.29 | 98.30 | 94.56 | 96.38 |
| PSPNet [22] | IoU | 75.05 | 69.93 | 61.09 | 73.32 | 78.06 | 73.99 |
| | Acc. | 96.48 | 93.11 | 99.11 | 97.88 | 93.90 | 96.10 |
| UNet+BP Loss | IoU | 79.59 | 70.06 | 68.04 | 78.97 | 80.84 | 76.62 |
| | Acc. | 97.20 | 92.90 | 99.33 | 98.42 | 94.76 | 96.52 |
| PSPNet+BP Loss | IoU | 76.80 | 71.51 | 62.51 | 73.90 | 80.17 | 75.68 |
| | Acc. | 96.82 | 93.58 | 99.16 | 98.05 | 94.65 | 96.45 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, Y.; Li, W.; Gong, W.; Wang, Z.; Sun, J. An Improved Boundary-Aware Perceptual Loss for Building Extraction from VHR Images. Remote Sens. 2020, 12, 1195. https://doi.org/10.3390/rs12071195
