Abstract

Handling large-scale software variability is still a challenge for many organizations. After decades of research on variability management concepts, many industrial organizations have introduced techniques known from research, but still lament that pure textbook approaches are not applicable or efficient. For instance, software product line engineering—an approach to systematically develop portfolios of products—is difficult to adopt given the high upfront investments; and even when adopted, organizations are challenged by evolving their complex product lines. Consequently, the research community now mainly focuses on re-engineering and evolution techniques for product lines; yet, understanding the current state of adoption and the industrial challenges for organizations is necessary to conceive effective techniques. In this multiple-case study, we analyze the current adoption of variability management techniques in twelve medium- to large-scale industrial cases in domains such as automotive, aerospace or railway systems. We identify the current state of variability management, emphasizing the techniques and concepts they adopted. We elicit the needs and challenges expressed for these cases, triangulated with results from a literature review. We believe our results help to understand the current state of adoption and shed light on gaps to address in industrial practice.


1 Introduction

Companies often need to engineer a portfolio of software variants instead of one-size-fits-all solutions. Creating variants allows tailoring systems towards varying stakeholder requirements—different functionalities, but also non-functional requirements, such as performance or power consumption. Variant-rich systems are especially common in traditional engineering domains including automotive, industrial automation, and telecommunication. In addition, recent trends, such as the Internet of Things (IoT) (Atzori et al. 2010), cyber-physical systems (Krüger et al. 2017; Romero et al. 2015) or robotics (Garcia et al. 2019), further increase the need for customization.

Software product line engineering (SPLE) aims at effectively engineering a variant-rich system—a software product line—in an application domain. SPLE advocates establishing an integrated software platform from which individual variants can be derived, typically in an automated, configuration-driven process. SPLE provides a range of dedicated concepts, including processes, modeling techniques or design patterns, supported by commercial and open-source tools, such as pure::variants (Beuche 2004), Gears (Krueger 2007) or FeatureIDE (Kästner et al. 2009).

Recognizing the benefits, especially the radically decreased time-to-market for new variants, industry has adopted SPLE concepts (Jepsen and Beuche 2009; Flores et al. 2012; Berger et al. 2013a; Mohagheghi and Conradi 2007; Chen and Ali Babar 2010; Bastos et al. 2017; Thörn 2010) at different levels of maturity (Bosch 2002). Yet, many organizations still lament an adoption barrier. In fact, most organizations create variants in ad hoc ways (Berger et al. 2013a), such as clone & own, which is simple and cheap (Dubinsky et al. 2013; Businge et al. 2018; Stanciulescu et al. 2015; Staples and Hill 2004), but does not scale with the number of variants and then requires product-line migration efforts (Assunção et al. 2017; Faust and Verhoef 2003a; Jepsen et al. 2007b; Debbiche et al. 2019; Akesson et al. 2019; Fenske et al. 2014). When adopted, a product line can also limit flexibility, since evolving the platform affects many variants (Melo et al. 2016). To improve this situation, we need to improve our empirical understanding of the state of product-line adoption and the needs for improvement in industrial practice.

Such an updated empirical understanding of industrial needs helps steer the scope of research efforts. Consider the many SPLE concepts that have been conceived by researchers. By 2011, a survey had already identified 91 variability management approaches (Chen and Babar 2011), most of which rely on feature modeling to specify variability information (Kang et al. 1990; Czarnecki et al. 2012; Acher et al. 2013a; Nesic et al. 2019). Another trend was to build hundreds of dedicated analyses for product lines, typically by lifting single-system analyses (e.g., model checking) (Midtgaard et al. 2014; Liebig et al. 2013; Sattler et al. 2018). A recent survey (Thüm et al. 2014) identified 123 analyses from the literature, including lifted type-checking, static-analysis, and model-checking techniques. As early as 2010, a survey (Benavides et al. 2010) identified 30 different feature-model analyses in 53 papers. Other analyses check the consistency between feature models and implementation artifacts or analyze code properties. Notably, more recent work (Mukelabai et al. 2018b) shows that industrial needs substantially deviate from the state of the art, while most analyses are not applicable in industrial contexts. Likewise, traceability of features across a product line’s lifecycle is another challenge (Vale et al. 2017), where the state of the art and the state of the practice differ significantly, and the low industrial relevance of solutions proposed in the literature prevents a wider adoption of traceability for product lines in industry.

We present a study on the state of adoption of variability-management concepts and remaining practical challenges in twelve industrial cases from different organizations engineering variant-rich systems. We used document analysis, semi-structured interviews, and focus groups on cases that cover a wide range of domains and development scales, from a rather small web-application case to ultra-large software engineering for automotive or industrial component production. In addition, we conducted a lightweight literature review on relevant SPLE adoption case studies, experience reports, surveys, and meta-studies, supporting the formulation and synthesis of challenges we present.

Our industrial cases primarily represent the development in a small part of a company, such as a single division or development team. We refer to these as use cases in the remainder. We also investigated cases provided by tool vendors who are looking to integrate product-line engineering concepts into their tools, referred to as tool cases. For our study, we combined eliciting structured case descriptions with focus-group interviews to identify the concepts that were adopted, as well as variability drivers and variable assets.

We report our cases’ drivers of variability, the SPLE concepts they adopted, as well as remaining challenges to be addressed by the research community and tool vendors.

We believe our results support practitioners and researchers. Practitioners can use our results as a baseline to compare their own organization’s capabilities and understand which concepts are at their disposal for future development. Researchers obtain the current state of adoption of SPLE concepts in industry and learn about remaining challenges.

2 Background

We briefly discuss strategies and important concepts for engineering variant-rich systems, gradually from ad hoc strategies to more advanced variant management strategies and concepts, partly inspired by the levels proposed by Antkiewicz et al. (2014).

Clone & own

An organization creates variants by copying and adapting existing variants to new requirements. Assets are propagated in an ad hoc way among the variants. No platform or any kind of systematic variability management exists. Clone & own is a simple and readily available (Dubinsky et al. 2013; Duc et al. 2014; Businge et al. 2018; Stanciulescu et al. 2015) strategy for developing variants, but does not scale with the number of variants and easily causes maintenance overheads.

Clone Management

Enhancing the governance, an organization could adopt a clone-management framework. Such frameworks (Rubin et al. 2012, 2013a, ??; Pfofe et al. 2016; Antkiewicz et al. 2014) have been proposed, but have not found any documented adoption. They allow managing clones by using features as the main entities of reuse (instead of code assets) and record meta-data about the clones.

Configuration

An organization can introduce configuration mechanisms to reduce redundancies. Such a mechanism is an implementation technique to realize calibration or variation. In the latter case, it is commonly referred to as a variability mechanism (Van Gurp et al. 2001; Berger et al. 2014c), ranging from simple conditional compilation (e.g., #ifdef) via control-flow conditional statements (e.g., IF), build systems (Berger et al. 2010a; Dietrich et al. 2012), and component frameworks to so-called feature modules (Apel and Kästner 2009) or delta modules (Schaefer et al. 2010), or combinations thereof (Behringer et al. 2017; Mukelabai et al. 2018a). The configuration options (a.k.a., calibration parameters or just parameters) and their constraints are typically declared in a model, such as a feature model (explained shortly). Using such a model, an interactive configurator tool can support the configuration process, guiding users by propagating choices or resolving configuration conflicts.
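The distinction between configuration options, their constraints, and a variability mechanism can be illustrated with a minimal sketch. It assumes three hypothetical options (`logging`, `encryption`, `cipher`) that are not taken from any of the studied cases, declares one constraint between them, and realizes the variation points with control-flow conditionals, the run-time counterpart of an `#ifdef`.

```python
from dataclasses import dataclass
from typing import List


# Hypothetical configuration options; all names are illustrative assumptions.
@dataclass(frozen=True)
class Config:
    logging: bool = False
    encryption: bool = False
    cipher: str = "none"  # calibration parameter: "none", "aes", ...


def check(config: Config) -> List[str]:
    """Return the violated constraints of a configuration (empty if valid)."""
    violations = []
    if config.encryption and config.cipher == "none":
        violations.append("encryption requires a cipher")
    if not config.encryption and config.cipher != "none":
        violations.append("cipher is only allowed with encryption")
    return violations


def send(message: str, config: Config) -> List[str]:
    """Variation points realized as control-flow conditionals."""
    steps = []
    if config.logging:            # optional behavior
        steps.append(f"log:{message}")
    if config.encryption:         # optional behavior with a parameter
        steps.append(f"encrypt[{config.cipher}]:{message}")
    else:
        steps.append(f"plain:{message}")
    return steps
```

A configurator tool would run a check like `check` after every user choice; an interactive one would additionally propagate choices, for example by preselecting a cipher once `encryption` is enabled.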

Platform

Further scaling the development, an organization can adopt an integrated platform. There, instead of cloning, all variants are integrated into one software platform. By exploiting the commonalities among the variants, redundancies are removed while variability among the variants is represented by variation points within the platform. Variability is typically described in terms of features declared in a feature model, as follows.

Feature

Using the notion of feature is core to scaling the development, for instance, when adopting a platform. A feature represents end-to-end functionality of a system (Berger et al. 2015). Features are intuitive entities that can be understood by different roles, including marketing experts, project leads, and customers. Features abstractly represent assets and are tracked, for instance, in a database or, more formally, in a feature model. Many features also serve as a configuration option (a.k.a., optional feature).

Feature Model

To keep an overview of features, organizations should create a feature model, an intuitive, tree-like representation of features and their constraints (Kang et al. 1990; Czarnecki et al. 2012; Acher et al. 2013a; Nesic et al. 2019). The typical graphical notation is shown in Fig. 1, representing a small excerpt of the Linux kernel’s variability model. For brevity, we refer to related work (Berger et al. 2010b, 2013b, 2014c) for an introduction to feature models and this particular example.

Fig. 1 Feature model example (Berger et al. 2014c)
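To make the notion of a tree of features with constraints more tangible, the following is a minimal sketch of a feature model and a validity check for a configuration. It does not reproduce the Linux kernel excerpt of Fig. 1; the feature names (`Editor`, `Storage`, and so on), the `Feature` class, and the `is_valid` function are hypothetical and only cover mandatory and optional features, or/xor groups, and requires/excludes constraints.

```python
from dataclasses import dataclass, field
from typing import List, Sequence, Set, Tuple


@dataclass
class Feature:
    name: str
    mandatory: bool = False   # must be selected whenever its parent is selected
    group: str = "and"        # relation among the children: "and", "or", "xor"
    children: List["Feature"] = field(default_factory=list)


def is_valid(root: Feature, selected: Set[str],
             requires: Sequence[Tuple[str, str]] = (),
             excludes: Sequence[Tuple[str, str]] = ()) -> bool:
    """Check a configuration (set of selected feature names) against the model."""

    def check(feature: Feature) -> bool:
        chosen = [c for c in feature.children if c.name in selected]
        if feature.name not in selected:
            # Children of a deselected feature must be deselected as well.
            return not chosen and all(check(c) for c in feature.children)
        if feature.group == "xor" and len(chosen) != 1:
            return False
        if feature.group == "or" and not chosen:
            return False
        if any(c.mandatory and c.name not in selected for c in feature.children):
            return False
        return all(check(c) for c in feature.children)

    tree_ok = root.name in selected and check(root)
    requires_ok = all(b in selected for a, b in requires if a in selected)
    excludes_ok = not any(a in selected and b in selected for a, b in excludes)
    return tree_ok and requires_ok and excludes_ok


# Hypothetical model: a mandatory xor group plus two optional features.
model = Feature("Editor", children=[
    Feature("Storage", mandatory=True, group="xor",
            children=[Feature("LocalFile"), Feature("Cloud")]),
    Feature("SpellCheck"),
    Feature("Sync"),
])
requires = [("Sync", "Cloud")]  # cross-tree constraint: Sync requires Cloud

print(is_valid(model, {"Editor", "Storage", "Cloud", "Sync"}, requires))      # True
print(is_valid(model, {"Editor", "Storage", "LocalFile", "Sync"}, requires))  # False
```

Industrial-strength tools typically translate such a model into a propositional formula and delegate the check to a solver; the recursive check here is only meant to show what the tree and the constraints express.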

SPLE Process

For an organization to effectively engineer a platform, textbook SPLE methods (Apel et al. 2013; Pohl et al. 2005; Czarnecki and Eisenecker 2000) introduce two main processes: domain engineering, which aims at engineering the platform, and application engineering, which aims at deriving individual variants from the platform. Each process comprises typical software-engineering tasks, such as requirements engineering, design, implementation and quality assurance, with domain engineering also containing scoping (determining and prioritizing platform features).

Product-Line Quality Assurance

Enhancing the quality assurance, organizations can adopt analyses of product lines (Thüm et al. 2014; Benavides et al. 2010; Mukelabai et al. 2018b), which differ conceptually from single-system analyses. The latter, especially dynamic analyses such as testing, can only be used for individual variants. While they can be beneficial to optimize individual variants, single-system analyses are usually insufficient when a product-line platform has been adopted and errors related to all possible variants should be found. For instance, unwanted feature interactions can occur for certain variants based on the combination of features in the variant. Applying single-system analyses for finding such errors requires configuration sampling (Cohen et al. 2007; Perrouin et al. 2010).
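Configuration sampling can be sketched as follows. The example is a naive greedy pairwise sampler, not the algorithms of Cohen et al. (2007) or Perrouin et al. (2010): it enumerates all valid configurations, which only works for a handful of features, and then picks configurations until every pair of feature values that can occur together is covered. The feature names and the constraint are hypothetical.

```python
from itertools import combinations, product
from typing import Callable, Dict, List, Set, Tuple

Configuration = Dict[str, bool]
Pair = Tuple[Tuple[str, bool], Tuple[str, bool]]


def pairs_of(config: Configuration) -> Set[Pair]:
    """All pairwise feature-value combinations exercised by one configuration."""
    return set(combinations(sorted(config.items()), 2))


def pairwise_sample(features: List[str],
                    valid: Callable[[Configuration], bool]) -> List[Configuration]:
    """Greedily pick valid configurations until every pair that occurs in
    some valid configuration is covered (naive: enumerates 2^n candidates)."""
    candidates = [dict(zip(features, values))
                  for values in product([False, True], repeat=len(features))]
    candidates = [c for c in candidates if valid(c)]
    uncovered: Set[Pair] = set().union(*(pairs_of(c) for c in candidates))
    sample: List[Configuration] = []
    while uncovered:
        # Take the configuration that covers the most still-uncovered pairs.
        best = max(candidates, key=lambda c: len(pairs_of(c) & uncovered))
        sample.append(best)
        uncovered -= pairs_of(best)
    return sample


# Hypothetical product line: compression and encryption exclude each other.
features = ["logging", "encryption", "compression"]
valid = lambda c: not (c["compression"] and c["encryption"])
sample = pairwise_sample(features, valid)
for config in sample:
    print(config)
```

Each sampled configuration can then be built and tested with ordinary single-system techniques. The sample exercises every feasible pairwise combination, so interactions between two features are covered, while interactions among three or more features may still be missed.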

3 Methodology

Our study aims to “collect and summarize evidence from a large representative sample of the overall population” (Molléri et al. 2016) and can therefore be constructed as a survey. We follow the well-known guidelines by Kitchenham and Pfleeger (2002) to structure this section, and we rely on the simplified 7-step process of Linåker et al. (2015).

3.1 Aims and objectives

As laid out in Section 1, the motivation for our study was to understand which variability management concepts are adopted in substantial industrial cases, and the challenges that industrial practitioners perceive in their daily work, determining the concepts that are still needed. Coming from an academic perspective, we also aimed to identify to what extent the academic literature on SPLE takes industrial circumstances into account and to what extent the solutions offered by academia correspond to the practical challenges. Our discussions with practitioners, and our lightweight literature review, supported our view that there is a disconnect between SPLE practice and theory. We formulated three research questions:

- RQ1: What are drivers for variability in our cases?
- RQ2: Which SPLE concepts are adopted in our cases?
- RQ3: What concepts are missing for our cases and for cases previously reported in the literature?

3.2 Planning, scheduling, and designing the study

Based on the research questions, we planned a descriptive study using qualitative data. The study was planned to be conducted in several iterations where data was collected repeatedly, then analyzed, and finally followed up. The data collection itself ran during 2018. In addition, we conducted a lightweight literature review on industrial case studies and experience reports on adopting SPLE, to triangulate with the challenges from our twelve cases.

Our personal network gave us access to companies that engineer variant-rich systems and intend to invest in improving their engineering. We therefore employed purposive sampling, in particular expert sampling (Etikan et al. 2016), in which we identified those experts who deal with the problems we set out to investigate. We created a list of potential candidates and narrowed it down by excluding those with insufficient resources to participate. We also selected companies that would provide a specific viewpoint and tried to achieve heterogeneity in terms of company size, age, size of the system, and implementation technology. We also achieved a good geographical distribution by including companies from five different countries in the European Union.

The final list consisted of twelve companies, nine of which create a variant-rich system and three of which are tool vendors selling software-engineering tools that want to integrate variability mechanisms into their existing tools. To ensure anonymity, we report on the cases we studied and provide only high-level information about the companies and the meetings we had with them.

3.3 Data collection

The primary data collection method was a case description provided by the companies that describes the case for product line engineering. It relied on a template that included the product and market context, the technology context, a description of existing processes and automation techniques, goals, and key performance indicators, the variability management practices currently in use, and the principal assets, their reuse and management. The template was piloted with one of the organizations and subsequently refined. The organizations involved in this study iteratively improved the case descriptions to homogenize them and to ensure that relevant information was included.

Based on the description, we conducted a preliminary analysis to identify open questions. This resulted in an interview guide for each of the cases that was used in semi-structured interviews or in small focus groups. While the interviews were conducted with experts from individual companies, the focus groups included representatives from several companies. We chose to use the latter format when cases were similar to each other and we needed to understand the differences and similarities better, and when resources allowed such a meeting. Each of these occasions included one or two researchers and at least two industrial participants. In all cases, the industrial participants were engineers working actively with software product lines. Each interview or focus group lasted between 30 and 60 minutes and was conducted by the researchers in person. Data was collected in the form of extensive notes and shared among all authors. The semi-structured format allowed us to explore additional aspects that were not covered in the original case descriptions. Specifically, we had the following number of small focus-group meetings or interviews: power electronics (3 meetings, 4 participants), traffic control (1 meeting, 1 participant), chip modeling (2 meetings, 3 participants), modeling platform (2 meetings, 2 participants), railway (8 meetings, 3 participants), aerospace (3 meetings, 4 participants), truck manufacturing (2 meetings, 3 participants), web application (1 meeting, 2 participants), automotive firmware (2 meetings, 3 participants), requirements engineering (1 meeting, 1 participant).

On two occasions, we held larger focus group meetings with a majority of the involved researchers and companies. This assured that we met representatives of all companies at least once. We discussed adopted concepts and needs, providing another opportunity to explore differences and similarities.

We also conducted a lightweight literature study where we collected and inspected meta-studies, surveys, exploratory studies, industrial case studies, and experience reports. We know from experience that the large majority of these publications focus on praising the benefits of SPLE (e.g., cost savings and shorter time to market) while not providing sufficiently detailed data on the adopted concepts and on challenges (i.e., remaining challenges for SPLE research, as opposed to those that were solved in the case study). Nevertheless, we decided to systematically collect those publications and triangulate our synthesized challenges with those from these cases. To collect publications, we used our own expertise and consulted the SPLE community’s “Hall of Fame,” a book with a collection of successful SPLE cases (van der Linden et al. 2007), and the Software Engineering Institute’s catalog of case studies (Software Engineering Institute 2008).

3.4 Data Analysis

We analyzed the data in several iterations. The first iteration, based on the use case descriptions, was mainly targeted at identifying which aspects of the current product line approach in the cases remained unclear, so that we could follow up with our industrial contacts. For this purpose, we transformed each use case into a narrative as presented in Section 6. This allowed us to structure the information and find aspects that needed clarification. We then derived questions for semi-structured interviews and small focus groups from this. The results also guided the discussion in the larger focus groups. We met regularly to discuss the open questions and to gain an overall understanding of the issues.

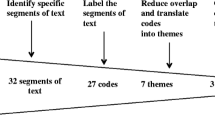

We then merged information from the interviews and the small and large focus groups in the common narrative. In addition, we performed coding on the available data. The codes focused on the current practices and the challenges stated by the companies. We pre-defined codes based on variability management concepts and known challenges from the literature, and complemented these with emergent codes that were based on the first analysis round and the information from the interviews and focus groups. Once a stable set of codes emerged, we conducted a coding workshop to harmonize and refine the codes. Again, all information available at this point was used to validate the codes, to join codes with a high degree of similarity, and to refine the codes, in particular with respect to their concrete formulation. If questions arose during the data analysis or the researchers disagreed on the data, we used personal contacts within the organizations to clarify issues quickly before misunderstandings could arise or bias could manifest.

Once the concepts were identified and the authors agreed on the adopted concepts, we forwarded the information to our contacts for member checking. We also asked clarifying questions about the overall study and the long-term perspective of the cases.

For the literature review, we analyzed the collected publications by reading through each paper, specifically searching for challenges related to variability management concepts that were not solved within the respective case. We mapped those challenges to our challenges, enriching the challenge descriptions. Of course, the validity of reporting challenges from other cases, most of which are at least one or two decades old, is lower than that of the challenges we extracted from our companies. Still, they substantially enhance the relevance and richness of our reported challenges.

4 Literature review

In our lightweight literature review we identified the following related meta-studies, surveys, case studies, and experience reports.

4.1 Meta studies

Marimuthu and Chandrasekaran (2017) conduct a tertiary study of systematic studies on variability management. They provide detailed bibliometrics, but do not extract any challenges that might be reported in their identified studies. Bastos et al. (2017) investigate product-line adoption in small and medium-scale companies via a multi-method approach comprising a mapping study, a case study, and a survey among experts. They mainly elicit success factors and practices, but no challenges. Chen et al. (2009) conduct a literature review on variability management and, among other results, list as challenges evolution (“systematic approach to provide a comprehensive support for variability evolution is not available”), scalability of techniques, as well as testing and quality assurance in general. In another study, Chen and Babar (2011) examine the state of evaluation of variability management techniques, concluding that the lack of proper evaluations is a challenge that researchers should address. Finally, Mohagheghi and Conradi (2007) conduct a literature study on the benefits of software reuse (not limited to reuse via variability management), emphasizing that: “For industry, evaluating reuse of COTS or OSS components, integrating reuse activities in software processes, better data collection and evaluating return on investment are major challenges.”

4.2 Exploratory studies

Chen and Ali Babar (2010) present an exploratory study relying on focus-group research investigating the perceived challenges of variability management using eleven participants from organizations that do consulting or in-house SPLE. With respect to variability modeling, the focus group reports, among others, the following challenges: visualization of features, evolution and maintenance of models (in particular dependency management), and variability modeling being not very user-friendly. In general, the study questions academic techniques. It also points out challenges when migrating to SPLE (cf. Section 7.3), specifically that clone detection techniques are not applicable to multiple systems. Furthermore, while structural variability is well supported, behavioral and timing aspects are not.

4.3 Surveys

A survey of variability modeling in industrial practice by Berger et al. (2013a) lists specific challenges for variability modeling, including visualization, model evolution, and traceability. Thörn (2010) surveys variability management in small and medium-scale companies in Sweden; however, the reported challenges are rather general and not specific to variability-management concepts.

4.4 Experience reports and case studies

Over the last decades, practitioners and researchers have published a large number of case studies and experience reports, the majority between the end of the 1990s and the beginning of the 2000s. We now list all cases we identified. When available, we provide all references that describe the case in detail. However, some cases were described in the respective source only, not as part of a publication on its own.

All the cases in the book of van der Linden et al. (2007) describe successful adoptions of product lines, where usually the typical concepts are adopted (platform, feature modeling, automated product derivation, automated testing); for each, the obstacles and limitations in SPLE are also described. We inspected all the cases: AKVAsmart, Bosch (Tischer et al. 2011; Steger et al. 2004; Thiel et al. 2001), DNV Software, MarketMaker (Verlage and Kiesgen 2005), Nokia Mobile Phones, Nokia Networks, Philips Consumer Electronics Software for Televisions, Philips Medical Systems, Siemens Medical Solutions, and Telvent. Furthermore, the book also referenced the following cases, each of which we inspected as well: Salion (Buhrdorf et al. 2003; Clements and Northrop 2002), Testo (Schmid et al. 2005), Axis and Ericsson (Svahnberg and Bosch 1999), Axis and Securitas (Bosch 1999a, 1999b), and RPG Games (Zhang and Jarzabek 2005).

From the SPLE community’s “Hall of Fame” we identified and inspected: Boeing (Sharp 1998), CelsiusTech Systems AB (Bass et al. 2003; Brownsword and Clements 1996), Cummins (Clements and Northrop 2001b), Ericsson Telecommunications Switches, Fiscan Security Inspection Systems (Li and Chang 2009), Hewlett Packard’s printer firmware Owen (Toft et al. 2000), HomeAway (Krueger et al. 2008), Lockheed Martin, LSI Logic (Hetrick et al. 2006), Lucent, Siemens Healthcare (Bartholdt and Becker 2011), Toshiba (Matsumoto 2007), U.S. Army (Lanman et al. 2013), U.S. Naval Research Laboratory (Bass et al. 2003), General Motors (Flores et al. 2012), and Danfoss (Jepsen and Beuche 2009; Jepsen et al. 2007b; Fogdal et al. 2016). The cases Salion, Bosch, MarketMaker, Nokia, and Philips (Medical Systems and Software for Television Sets) were already contained in the book of van der Linden et al. (2007), explained above.

We inspected all cases of the Software Engineering Institute’s catalog of case studies (Software Engineering Institute 2008): US Army’s Common Avionics Architecture System (CAAS) (Clements and Bergey 2005), CCT (Control Channel Toolkit) (Clements et al. 2001a), Naval Underwater Warfare Center (Cohen et al. 2002, 2004a, 2004b), Argon (Bergey et al. 2004), ABB (Ganz and Layes 1998; Rösel 1998; Pohl et al. 2005; Stoll et al. 2009), Deutsche Bank (Faust and Verhoef 2003b), Dialect Solutions (Staples and Hill 2004), E-COM (Liang et al. 2005), Ericsson (Mohagheghi and Conradi 2008; Andersson and Bosch 2005), Enea (Andersson and Bosch 2005), Eurocopter (Dordowsky and Hipp 2009; Hess and Dordowsky 2008), Hitachi (Takebe et al. 2009), LG (Pohl et al. 2005), Lufthansa (Chastek et al. 2011), MSI (Sellier et al. 2007), NASA (Ganesan et al. 2009), NASA JPL (Gannod et al. 2001), Nortel (Dikel et al. 1997), ORisk Consulting (Quilty and Cinneide 2011), Overwatch Textron Systems (Jensen 2007a), Ricoh (Kolb et al. 2005), Rockwell Collins (Faulk 2001), Rolls-Royce (Habli and Kelly 2007), TomTom (Slegers 2009), and Wikon (Pech et al. 2009). The cases CelsiusTech, Salion (Clements and Northrop 2002), Axis (Bosch 2000), Boeing, Cummins, Danfoss, and DNV Software were already contained in one of the other sources above.

Finally, we also included some cases that we know, from our experience, are neither contained in the book of van der Linden et al., the SPLE community’s “Hall of Fame” nor the Software Engineering Institute’s catalog. These were: six German SMEs (including MarketMaker from above) (John et al. 2001), a telecommunication system known as Terrestrial Trunked Radio (TETRA) (Pohjalainen 2011), Volvo Cars and Scania (Eklund and Gustavsson 2013; Gustavsson and Eklund 2010), Audi (Hardung et al. 2004), and Daimler (Dziobek et al. 2008; Bayer et al. 2006).

4.5 Summary

While we will report the identified challenges from the literature together with our challenges in Section 7, we learned that the majority of publications do not report challenges that pertain specifically to variability management or that have not been resolved in the course of the respective case. Most case studies report practices or lessons learned that contributed to the success, but not challenges. In particular, all are about successful adoption and primarily report the perceived benefits (some also quantified) that SPLE brought. Negative experiences, shortcomings of tooling, or actual challenges for the SPLE community are largely missing. For most of the case studies and experience reports, we conjecture that these are biased, since the case study authors primarily want to show success stories instead of problems and failed attempts. As such, most of these publications primarily report the benefits that were achieved and the success factors that the authors deemed relevant for SPLE. When reporting about the specific product line, the predominant focus is on the product-line architecture, followed by organizational and process aspects. Interestingly, some publications even have “challenges” in the title, but those challenges are usually experiences and hindrances that the organization faced before adopting SPLE or that occurred during the case and that were addressed.

Furthermore, it is apparent that some challenges mentioned in previous case studies have been addressed nowadays. For instance, for Bosch (Tischer et al. 2011; Steger et al. 2004) and MarketMaker (Verlage and Kiesgen 2005), the publications emphasize the lack of proper, industry-strength SPLE tooling, including feature modeling, as the main challenge, which is addressed with commercial and open-source tools nowadays. Also, Chen and Ali Babar (2010) report that variability modeling is not very user-friendly, which can be seen as a solved challenge with the commercial and open-source feature-modeling tooling that exists nowadays.

Finally, we also observed that the extent and level of detail in which challenges relevant for SPLE researchers are reported is not sufficient. Some authors provide information about adopted concepts, for instance, as van der Linden et al. (2007) point out for their collection of cases: “Most architectures are based on a platform, supporting the requirements of present and future products. Often there are several similar products that are combined in the product line to improve the benefit of reuse. The development of a common, variable platform is often considered as the basis for introducing the product line in the organisation. Plug-in mechanisms and the definition of the right interfaces seem to be crucial.” The majority of publications do not provide a finer level of detail.

5 Variability drivers and variable artifacts in our cases

We begin the report on our results by discussing the factors driving the variability in our cases in Section 5.1. These variability drivers affect various types of artifacts, which we discuss in Section 5.2. This data has been derived from the data we collected in the case descriptions, interviews, and focus groups.

5.1 Primary variability drivers

For our cases, a number of different variability drivers were reported, as shown in Table 1. The most prominent drivers are markets and hardware. Being able to place products on different markets with different regulations and to ensure that the products are able to adapt to new market needs is crucial. Hardware is a relevant driver, since many of our cases concern systems, and the software needs to be able to work with a variety of different target hardware. In many cases, it is the customer who can select certain hardware, and the software needs to be able to run on the hardware configuration chosen by the customer. This is one form of end-user customization, another important variability driver.

An increase in variability through a number of forces was also reported. One case of firmware for power electronics, for instance, sees an increased need for variability driven by the more widespread use of different types of multi-core processors in their products (hardware) and increased industrial digitalization (markets, operating environments). The importance of the market and its growth as the prime driver of variability is also mentioned for the automotive firmware and traffic control cases. The latter also emphasizes that innovation is an important driver for variability: the company needs to be able to deliver innovative solutions while at the same time being able to maintain the existing products in the portfolio. For our modeling platform case, the organization explained that the product needs to compete with software-as-a-service (SaaS) offers, where customization is seen as an advantage. Furthermore, IoT is a new, core driver of variability, as prominently mentioned for the cases power electronics (more precisely, Industrial IoT) and chip modeling.

Another interesting driver is simulation. In our aerospace case, a simulator resembles the real aircraft, but has more variability through the use of models at different levels of fidelity. For instance, verifying a specific sub-system might require a detailed high-fidelity model, while for real-time simulation, the model needs to be replaced with a lower-fidelity model (which might use interpolation) due to limits in computation capability.

5.2 Variable artifact types

Not surprisingly, the most frequently mentioned variable artifact is source code, as shown in Table 1. Many companies use conditional compilation with preprocessor directives to include variability information in the source code. Custom descriptors are also relatively common, for instance, as the foundation for code generation. These are often expressed using domain-specific languages. We found little evidence for variability in tests and requirements. Only one company explicitly reports using components as variable assets, but we expect that many companies do this implicitly.

A use case that is somewhat neglected in research is variability in models used for code generation. Five of our subjects write application logic in Simulink and then generate code. Apparently, common variability-management techniques on the code level are not applicable; instead, variability modeling concepts, especially variation-point support, are needed in the models and need to be supported by the modeling tools.

6 Adoption of variability management concepts in our cases

We now introduce our use and tool cases by describing core characteristics and the adopted variability management concepts. An overview can be found in Table 2, which also shows the near-term adoption goals. The particular challenges faced by the organizations will be presented thereafter, in Section 7. Each case description follows a common format: context, variability drivers, variability strategy, additional capabilities (e.g., traceability or testing), and product derivation.

6.1 Power electronics use case

This case concerns the production of, among others, motor controllers (drives) for electric motors. Around 300 million drives are in industrial use worldwide and used in mining, ski lifts, big industry automation processes, and turbines (e.g., solar and wind turbines). The development is characterized as agile through clone & own.

The diversity in hardware and usage scenarios is the primary driver of variability. In addition, country-specific regulations contribute to the number of required variants. The company expects a further increase in variability through trends such as multi-core processing and increased industrial digitalization, as well as adding more software features to the drives.

This use case primarily relies on clone & own. Yet, configuration mechanisms in individual variants also exist. The drive software is written in C/C++ and a substantial part of the code is generated from XML files with an in-house generator. The source code contains preprocessor statements controlled by configuration options. Automatic testing of individual variants is in place through a continuous integration system using Jenkins with automated, nightly tests. The connection between customer adaptations and features is tracked in a database to ensure long-term maintainability. Likewise, rationales for variability decisions are recorded.

The specific variants are built through a Python-based build system that allows component selection.
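As an illustration of how generated code and preprocessor-controlled variation points can interact in such a setup, the following minimal sketch turns an XML description of configuration options into a C header with macros. The XML schema, the option names, and the function `generate_header` are assumptions made for this example; the in-house generator of the case is not described in detail and may work differently.

```python
import xml.etree.ElementTree as ET

# Hypothetical XML description of configuration options.
# The schema and all option names are invented for illustration.
CONFIG_XML = """
<drive name="demo">
  <option name="HAS_ENCODER" enabled="true"/>
  <option name="HAS_FIELDBUS" enabled="false"/>
  <option name="MAX_CURRENT_A" value="16"/>
</drive>
"""


def generate_header(xml_text: str) -> str:
    """Emit a C header whose macros can steer #ifdef blocks in hand-written code."""
    root = ET.fromstring(xml_text.strip())
    lines = [f"/* generated for {root.get('name')} */"]
    for opt in root.findall("option"):
        name = opt.get("name")
        if opt.get("value") is not None:
            # Valued options become macros with a value.
            lines.append(f"#define {name} {opt.get('value')}")
        elif opt.get("enabled") == "true":
            # Enabled Boolean options become plain macros.
            lines.append(f"#define {name}")
        # Disabled options emit nothing, so `#ifdef NAME` blocks are skipped.
    return "\n".join(lines)


print(generate_header(CONFIG_XML))
```

A build system could call such a generator once per variant and place the resulting header on the include path, which is one plausible way to connect component selection with configuration options.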

6.2 Truck manufacturing use case

This case comprises the software development of a large truck manufacturer. All of its products (80,000 trucks per year) come from the same platform.

Almost every product shipped has a unique configuration. The main differentiator of the brand on the global market is full product customizability.

The truck manufacturing case relies on an integrated platform to manage variability and employs separate domain and application engineering. All configuration options are realised with configurable values (i.e., without #ifdef or similar constructs).

Features are maintained in a feature database that contains traceability links to the code. Assets (different levels of specifications and source code) are stored in separate databases. Consistency is checked when a system is made ready for release.

On release, all relevant information about a system, including trace links and variability information (presence conditions), is released to the product data management (PDM) system. When deriving a product from the PDM, a specialized configurator selects the relevant components and derives source code parameters to generate the source code variant that implements the desired functionality, using the possible values for configuration parameters and the constraints as input.
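The derivation step can be pictured with a small sketch in which presence conditions are Boolean predicates over a configuration. All component names, parameters, and constraints below are hypothetical and only serve to show the principle; they do not describe the manufacturer's configurator.

```python
from typing import Callable, Dict, List

Config = Dict[str, object]

# Hypothetical components, each guarded by a presence condition.
PRESENCE_CONDITIONS: Dict[str, Callable[[Config], bool]] = {
    "cruise_control": lambda c: bool(c["cruise"]),
    "trailer_brake": lambda c: c["axles"] >= 3,
    "eco_mode": lambda c: bool(c["cruise"]) and c["engine"] == "diesel",
}

# Hypothetical constraints restricting the possible parameter values.
CONSTRAINTS: List[Callable[[Config], bool]] = [
    lambda c: c["axles"] in (2, 3, 4),
    lambda c: c["engine"] in ("diesel", "electric"),
]


def derive(config: Config) -> List[str]:
    """Return the components whose presence condition holds for a valid configuration."""
    if not all(check(config) for check in CONSTRAINTS):
        raise ValueError("configuration violates a constraint")
    return sorted(name for name, cond in PRESENCE_CONDITIONS.items() if cond(config))


print(derive({"cruise": True, "axles": 3, "engine": "diesel"}))
```

Because the selection is computed from data rather than from preprocessor directives, the same mechanism fits a setup in which all options are realized as configurable values.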

6.3 Aerospace use case

This case is related to the development of an aircraft simulator for a full, configurable aircraft. Both the simulator and the aircraft software can be seen as product lines.

Variability is driven by differences in equipment and software between aircraft. Assets are primarily developed for the aircraft product line and then propagated to the simulator product line via clone & own of the entire product line. For the simulator, multiple variants target different scenarios ranging from simple computer-screen-based simulators to realistic simulators with actual cockpit hardware. We focus on the simulator.

The simulator is an integrated platform with features that can be mandatory, optional, or a special type of optional features that need to be deletable without a trace to protect customer interests. Each of the latter is completely modularized, meaning that the complete module can be left out and that none of these features is cross-cutting. Another type of feature, so-called “role change equipment,” covers customer-configurable features that are placeholders for future development. This means that configurations can be partial and are selected with component/module selection at checkout time. The organization maintains an informal feature model as a spreadsheet with a hierarchy, but no explicitly modeled dependencies, as well as manifest files describing components. In addition, calibration parameters refine components.

To derive a product, these parameters are set at build time. Preprocessor directives are prohibited.

6.4 Automotive firmware use case

This case concerns a complex product line for electronic control units (ECUs). Around 2,000 variants are delivered per year; each deliverable is a distinct configuration. Each variant can comprise over 100 function packages and up to 2,000 functional components; the latter are updated regularly (every three months).

Variability arises from the need to customize ECUs to different vehicle types and customers. An integrated platform was defined to reduce time-to-market of variants for customers. It is combined with a limited version of clone & own, since developers can choose to create a new branch for a new feature based on guidelines that take longevity and complexity of the feature into account. Packages are developed as part of the platform (domain engineering), but variant- and customer-specific packages can exist (application engineering). The functional components can be configured via static parameters, which are feature-like entities used within variation points relying on conditional compilation (e.g., with #ifdefs).

These parameters are arranged in a relatively flat hierarchy stored in a distributed feature database and allow feature-to-code traceability. Rationales are recorded by tracing feature information to requirements. An informal feature model maps the high-level features and the static parameters. Constraints are defined over the static parameters. Interestingly, these parameters represent both variations and versions, since some of them map to preprocessor macros, and the version-control system directly supports checking out variants. As such, variability and version management are to some extent unified (like in variation control systems; Linsbauer et al. 2017; Stanciulescu et al. 2016; Berger et al. 2019a).

Also, dynamic (calibration) parameters for late binding exist, some of which can be defined by the customers after delivery. These parameters, including preconditions, are stored in separate databases upon which experts configure customer adaptations using in-house tools. Configurations are recorded in a special database.

Various analyses are run on the distributed feature database, including internal feature consistency checking. An SPLE tool is increasingly used to complement the existing cross-database consistency analyses with rules and predicates. Single-system performance analysis is done for the products shipped to customers.

6.5 Railway use case

This case concerns the development of signalling systems for urban transport networks for many cities in the world. Each city has specific needs for the signalling system, which is reflected in the variants.

The specific signalling system for each city is created from a different city’s variant using clone & own by creating branches in the software repository. The variants are composed by component selection, where components represent modules for different sensors and functionality. In addition, each variant also contains a set of internal configuration options based on C preprocessor directives.

The software was refactored and broadly re-architected in at least one branch. Due to development constraints (e.g., time, budget, separate responsibilities), the company never had time to integrate these branches. Customer adaptations are tracked, since each client is represented by a specific branch.

6.6 Web application use case

This case focuses on the creation of web applications that, among others, allow companies to manage media campaigns. Each client receives a customized variant.

Different variants are created with clone & own. Each application consists of frontend and backend code and most variability is in the user-facing frontend part of the system, realized using JavaScript and AngularJS. Features are recorded in a highly informal feature model. Otherwise, the company relies on the knowledge of an expert engineer who knows the distribution of features across branches. The system is implemented using different services where a final product is a composition of different services from a core library, product-specific code, and third-party services. The core services can be adapted with calibration parameters in configuration files to achieve specific behavior for each product. Component selection is performed on the service level.

This architecture allows customer adaptations through the services and provides limited traceability between features and code, since features are mapped to services.

6.7 Modeling platform use case

This case concerns a commercial model-driven engineering tool for business architects, system architects, and developers. The tool is component-based and either comes pre-packaged for one of these target audiences or can be assembled based on customer wishes. It is also possible that specific features are developed for a certain customer. Additionally, different license schemes can be employed (e.g., commercial and open source versions), and six different operating systems versions are supported, further driving variability. There are twelve solutions, with two major releases per year, that include more than 50 modules and three meta-models. More than 30 modules with variations can be obtained from a store to fit specific needs. The store also contains scripts and model components for different versions of the platform.

To this end, an integrated software platform based on Eclipse plug-ins is used. All provided solutions share a common set of modules. Because features are localized in specific plug-ins, feature-to-code traceability is available. Currently, the variability in terms of packaging is not managed in a tool-supported way.

Variants are composed by component selection, relying on the Eclipse P2 packaging system, which considers constraints between features and plugins, and uses a limited feature model. The configurator defines which modules are part of a package and has limited support to set configuration options for the individual plug-ins.

6.8 Imaging technology use case

This case comprises camera software that is packaged for individual customers based on customer requirements. This packaging includes configuring sensor parameters, prototyping and testing different sensor configurations, integrating onto the hardware for sensor validation and exporting sensor configuration parameters for use in a production software system.

An integrated platform along with a home-grown configurator tool is used to create these packages. Assets are currently managed via repositories (e.g., Git) and file-based storage. To create a product, module selection is used to package the correct firmware and driver software. In addition, calibration parameters are used to define the correct sensor and software configuration.

6.9 Traffic control use case

This case concerns the development of several generations of integrated road traffic control and surveillance solutions, including custom sensors along with embedded software. Backend software is produced to process the data sent by these sensors. Different variants of the product are created for different customers and different regional markets, in particular if certification is necessary.

Ad hoc reuse via clone & own is present, relying on branching in the version-control system. The company considers this bad practice and strives for an integrated platform. If software needs certification, it can become a permanent branch in the software repository. Calibration parameters are used to configure the software for specific sensors. Some of these are considered features that are exposed and sold to customers. Component selection happens at compile time, where source code modules are statically linked, or at runtime via dynamic linking.

6.10 Requirements engineering tool case

This case is our first tool case, concerning a tool for improving the quality of requirements. It allows defining an ontology of the application domain. Based on the ontology, clients can write semi-natural language requirements. The company also develops pattern-based extractors to populate the ontology from semi-structured documents (conceptually similar to techniques that offer languages for defining patterns that can be used to extract requirements from semi-structured documents; Rauf et al. 2011). The clients of the requirements engineering tool produce safety-critical software-intensive systems, mostly in the transportation and defense industries.

Currently, the tool customers apply clone & own of the requirements specification. The tool does not offer variability features out-of-the-box. However, one stated use case is the extraction of variability information (including a vocabulary and ontology of variability and assets) from requirements about an existing product-line platform. In other words, the requirements describe variation points and variants using dedicated terminology to be extracted by pattern-based extractors.

6.11 Hardware modeling tool case

This case concerns the development of a tool that allows assembling system models (e.g., about automotive suspensions) from pre-defined building blocks and simulating them. The tool interfaces with other tools such as the company’s own product-lifecycle management tool. The variability in the considered models concerns the blocks that vary, e.g., among automotive suspensions.

The current tool uses an ad hoc representation of variability only visible in terms of component selection inside the architecture models. The modeling language allows realizing variability encoding (von Rhein et al. 2016), that is, using built-in conditionals relying on configuration options, which enables this component selection. The tool is already able to explore the design space by creating all possible architecture models that are supported by the selected components. Nevertheless, the current tool chain does not support product line concepts explicitly. In particular, the concept of feature is not supported and variability is only managed within the design space without any knowledge about the problem space.
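The design-space exploration mentioned above can be sketched as an enumeration of all combinations of building blocks, filtered by a compatibility check. The slots, alternatives, and the constraint are assumptions chosen for this example and are not taken from the tool.

```python
from itertools import product
from typing import Dict, Iterator, List

# Hypothetical building blocks for a suspension model; each slot offers alternatives.
SLOTS: Dict[str, List[str]] = {
    "spring": ["coil", "air"],
    "damper": ["passive", "adaptive"],
    "stabilizer": ["none", "bar"],
}


def compatible(model: Dict[str, str]) -> bool:
    # Assumed example constraint: adaptive dampers are only combined with air springs.
    return not (model["damper"] == "adaptive" and model["spring"] != "air")


def explore(slots: Dict[str, List[str]]) -> Iterator[Dict[str, str]]:
    """Yield every architecture model that satisfies the compatibility check."""
    names = list(slots)
    for combo in product(*(slots[name] for name in names)):
        model = dict(zip(names, combo))
        if compatible(model):
            yield model


models = list(explore(SLOTS))
print(len(models), "valid architecture models")
```

Note that such an enumeration stays entirely in the solution space: nothing in it says which customer-visible feature a combination realizes, which is the gap the paragraph above describes.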

6.12 Chip modeling tool case

This case concerns a tool for designing Systems-on-Chips (SoC) and the reusable hardware component designs from which these SoCs are assembled. These designs are passed to machines that produce the corresponding integrated circuits. The company believes that emerging applications with huge growth potential, such as SoC for IoT, will lead to a combinatorial explosion of variants, with features being related via complex trade-off constraints.

The variants differ by: their provided functions, execution performance of functions, packaging of SoC inside a circuit, power consumption, safety level (typically determined by standards), and life-cycle durations.

The modeling tool is based on the IP-XACT standard (IEEE 1685) and extensions for modeling registers and memory. The integrated software platform currently does not support any specific variability management facilities, nor is it seamlessly integrated with an SPLE tool. Consequently, the tool users are currently restricted to clone & own, specifically, using branching and merging facilities of the tool’s built-in version-control facilities. Also, there is no centralized variability representation.

7 Variability management challenges

We now synthesize and discuss the challenges faced among our cases. We observed that our cases cover a wide range of maturity levels with respect to the adoption of variability management concepts. Consequently, we structure the challenges according to these maturity levels, providing the context in which the challenge occurs and in which it should be addressed by researchers or tool builders.

We first present two general challenges that affect any maturity level. We then discuss those related to support for clone management, which are encountered in organizations that use clone & own as their main technique to derive new products. We then identify challenges that occur when migrating to an integrated platform, that is, when clone & own starts to be complemented by feature and asset management. Next, we discuss challenges that exist once migration is more or less complete and working with an integrated platform becomes the focus of work, followed by challenges that appear when the product line is modernized and evolved.

7.1 General challenges

Challenge 1, Model-Driven Engineering

A common challenge we observed for any maturity level is model-driven engineering (MDE) and code generation. While source code is still the most frequently mentioned variable artifact (cf. Section 5.2), we observed that often code is generated from domain-specific languages (DSLs). While the relation between DSLs and SPLE has been studied (Völter and Visser 2011), the DSLs used in our cases do not support SPLE concepts. As seen in our power electronics, aerospace, and truck manufacturing cases, embedded systems organizations often rely on MDE using Simulink with code generation. Such a setup challenges not only handling cloned variants (e.g., when trying to trace features), but also adopting, working with, and evolving an integrated platform (Kolassa et al. 2015). This observation is substantiated by our three tool cases striving to integrate variability mechanisms into the modeling tools.

Furthermore, the experience reports on Danfoss (Fogdal et al. 2016), General Motors (Flores et al. 2012), and CCT (Clements et al. 2001a) also mention this challenge. They request a better integration of SPLE concepts, specifically variability mechanisms, with modeling tools. Notably, the experience report on Daimler (Dziobek et al. 2008) focuses on handling variability in Simulink models; specifically, it describes how to represent variation points in models. Nokia Networks (van der Linden et al. 2007) laments missing support for reusing systems engineering assets, which is in line with the experienced needs of customers of our two tool cases chip modeling (Section 6.12) and hardware modeling (Section 6.11).

Challenge 2, Tool Integration

These elaborations from our cases and the literature illustrate a more general problem: tool integration. Since variability is a cross-cutting concern, variability-related tooling usually needs to be integrated with other engineering tools, as named, for instance, by our automotive firmware and aerospace cases that exhibit overall high maturity. This tool integration challenge was also expressed in the literature for Danfoss (Fogdal et al. 2016), General Motors (Flores et al. 2012), Siemens Medical Solutions (van der Linden et al. 2007), Fiscan (Li and Chang 2009), Argon (Bergey et al. 2004), and CCT (Clements et al. 2001a), who not only request integrated tool chains, but more mature variability-related tooling in general. General Motors (Flores et al. 2012) suggests integrating SPLE concepts with product lifecycle management (PLM) concepts. Argon (Bergey et al. 2004) requests the integration of variability in version-control systems. In summary, this challenge is further supported by a recent study on product-line analyses (Mukelabai et al. 2018b), which found that adopting such analyses is, among others, a tool-integration problem.

7.2 Clone management

As seen in Table 2, seven of our use cases exercise clone & own for managing variants—while for two of our tool cases, the customers’ variant management also relies on clone & own. The other tool vendor’s modeling tool (cf. Section 6.11) offers variability encoding (von Rhein et al. 2016) using built-in conditionals relying on configuration options. While clone & own is the most common strategy for engineering variants, we observed substantial awareness among our subjects of the problems connected to it.

Challenge 3, Visualize and Track Variability

A need expressed by all cases is keeping an overview of cloned variants, since understanding the content and purpose of individual variants is challenging without more abstract representations of the codebases.

Feature-Orientation

Adding the notion of features and feature locations to clone & own would enhance the practice. When features are present, stakeholders can know what is in the branches, as opposed to trying to understand the difference between variants through asset differences, which is challenging. In addition to recording features, recording their location, for instance, through lightweight code annotations (Ji et al. 2015; Andam et al. 2017; Abukwaik et al. 2018; Entekhabi et al. 2019; Krueger et al. 2019b) also helps with maintenance. As such, this challenge is about keeping clone & own, but giving developers more control and a better overview, which will help with maintenance (e.g., developers know the potentially scattered (Passos et al. 2015, 2018) locations of a feature when cloning it to another variant).

In addition, better visualization and support for code propagation, ideally based on features, is needed. Especially for the power electronics case, better support for localization and propagation of features across cloned variants is requested. In summary, introducing some notion of feature-orientation to facilitate a more abstract understanding (abstracting over code-level structures) of variants, is an expressed need for our cases.
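To make the idea of recording feature locations with lightweight annotations more concrete, the following sketch extracts the line ranges of annotated features from a source file. The marker syntax (`//&begin[...]` and `//&end[...]`), the feature names, and the function `feature_locations` are assumptions for illustration; the cited approaches may define their markers differently.

```python
import re
from collections import defaultdict
from typing import Dict, List, Tuple

# Assumed marker syntax for embedded feature annotations.
BEGIN = re.compile(r"//\s*&begin\[(\w+)\]")
END = re.compile(r"//\s*&end\[(\w+)\]")


def feature_locations(source: str) -> Dict[str, List[Tuple[int, int]]]:
    """Map each feature name to the (first_line, last_line) ranges it annotates."""
    open_at: Dict[str, int] = {}
    ranges: Dict[str, List[Tuple[int, int]]] = defaultdict(list)
    for number, line in enumerate(source.splitlines(), start=1):
        if (m := BEGIN.search(line)):
            open_at[m.group(1)] = number
        elif (m := END.search(line)) and m.group(1) in open_at:
            ranges[m.group(1)].append((open_at.pop(m.group(1)), number))
    return dict(ranges)


# Hypothetical annotated C snippet.
SAMPLE = """\
void step(void) {
  //&begin[Logging]
  log_state();
  //&end[Logging]
  update();
  //&begin[Safety]
  check_limits();
  //&end[Safety]
}
"""

print(feature_locations(SAMPLE))
```

Running such an extraction on every cloned variant would give a feature-level overview per branch, which could then support propagating a feature from one variant to another.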

The existing case studies on Axis and Securitas (Bosch 1999a, 1999b) emphasize the lack of architectural abstractions (features) in programming languages, and the exploratory study of Chen and Ali Babar (2010) confirms this challenge.

Record and Analyze Variability Decisions

Several of our cases stated that they need a way to record and later analyze variability decisions (railway, imaging technology, and traffic control case), such as rationales for introducing variability. It was also stated that recording information about refactorings would be helpful, preferably using embedded annotations (Ji et al. 2015; Andam et al. 2017; Abukwaik et al. 2018; Seiler and Paech 2017; Krueger et al. 2018a, 2019a). The recording should explicitly relate refactorings to either the introduction of new functionality (features) or to maintenance. This information otherwise needs to be recovered when later propagating code across variants or migrating towards a platform (e.g., when assessing architectural mismatches). Specifically, for the railway and traffic control case, there is a need to reconstruct the architectural and functional evolution of the product line. While architectural evolution is mainly represented by typical refactorings (for the railway case, eight typical refactorings from Fowler (1999) are mentioned), functional evolution (i.e., the implementation of new functionality, bug fixes, and so on) needs to be distinguished.

Challenge 4, Cloning in Combination with Variability

An interesting observation is that none of our subjects exercises pure clone & own, but that variants already use variation points. This challenge is also mentioned in existing experience reports about Danfoss (Fogdal et al. 2016), AKVAsmart (van der Linden et al. 2007), Philips Consumer Electronics Software for Televisions (van der Linden et al. 2007), Philips Medical Systems (van der Linden et al. 2007), and Dialect Solutions (Staples and Hill 2004), as well as the case studies on Axis and Ericsson (Svahnberg and Bosch 1999). Interestingly, Staples and Hill (2004) point out for Dialect Solutions, and Fogdal et al. (2016) for Danfoss, that using variability mechanisms also avoids merge conflicts during clone & own and allows more isolated feature development.

Limitations of Clone-Management Techniques

This challenge complicates using clone-management frameworks proposed in the literature (Pfofe et al. 2016; Rubin et al. 2012, 2013a, 2013b; Antkiewicz et al. 2014) and techniques for integrating variants (Fischer et al. 2014; Martinez et al. 2015), since existing variability needs to be taken into account.

In fact, as explained for the railway case (cf. Section 6.5), the integration is done by experts who should rather realize new functionality instead of recovering information about variability in cloned variants and re-engineering code. Furthermore, most of our use cases applying clone & own also have an integrated platform, that is, a project with common assets. We are only aware of one work in this direction (Lillack et al. 2019), which should further be complemented with methodologies and tools.

Clone Management of Whole Product Lines

Approaches to enable product lines of product lines have been investigated in the literature (Kästner et al. 2012; Rosenmüller and Siegmund 2010; Krueger 2006). However, what our cases require is clone management at the product-line level. A previous experience report on Philips Medical Systems (van der Linden et al. 2007) also emphasizes the reuse of components across product lines.

Our aerospace case exercises clone & own for two highly complex product lines. The primary product line controls a real aircraft, and the cloned product line the simulator. The case strives to improve the currently manual and laborious synchronization between the aircraft and the simulator product line. Specifically, it requests guidance for creating variability models (e.g., whether one or separate, but largely redundant models should be created; how they should be decomposed to reflect the architecture) and modeling constraints. This should help define an aircraft or a simulator configuration, including matching an aircraft configuration to a suitable simulator configuration for a particular test activity.

7.3 Migration to an integrated platform

Our three use cases that want to establish an integrated platform, as well as the customers of our two tool cases that aim to establish a platform, practice clone & own. Recall the core motivation for our railway case to free experienced developers from performing migrations (cf. Section 6.5). As such, migrations should be semi-automated, supporting less experienced developers or enabling domain experts to perform them. We observed the following challenges.

Challenge 5, Platform Migration Process and Tools

For two of our use cases (power electronics and railway), the need for a dedicated migration process was expressed. Such a process should be lightweight and should guide engineers through the whole migration. Almost all of our use cases expressed the need for commonality and variability analysis. Furthermore, such a process should guide through the identification and location of features (potentially integrated with manual feature-location techniques; Krüger et al. 2018b) or through creating the target architecture (potentially supported by feature-model composition techniques; Acher et al. 2010). According to our railway and aerospace cases, such a process should also support engineers in creating variation points with an appropriate variability mechanism (cf. Section 2), in other words, prescribing the introduction of variation points. This challenge is also mentioned by previous experience reports on Bosch (Tischer et al. 2011), MSI (Sellier et al. 2007), and Siemens Healthcare (Bartholdt and Becker 2011), both requesting a process for incremental migration, the latter even during running projects, without disrupting the development.
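At its simplest, a commonality and variability analysis compares which features occur in which cloned variants. The sketch below assumes that a feature list per variant is already available, which in practice is the hard part; the variant and feature names are invented for illustration.

```python
from typing import Dict, List, Set, Tuple


def commonality_variability(
    variants: Dict[str, Set[str]]
) -> Tuple[Set[str], Dict[str, List[str]]]:
    """Split features into those shared by all variants and those that vary.

    Returns the common features and, for each variable feature,
    the sorted list of variants that contain it.
    """
    feature_sets = [set(features) for features in variants.values()]
    common = set.intersection(*feature_sets)
    variable = set.union(*feature_sets) - common
    owners = {
        feature: sorted(name for name, fs in variants.items() if feature in fs)
        for feature in sorted(variable)
    }
    return common, owners


# Hypothetical cloned variants, one per city branch.
VARIANTS = {
    "city_a": {"interlocking", "axle_counter", "cbtc"},
    "city_b": {"interlocking", "track_circuit"},
    "city_c": {"interlocking", "axle_counter"},
}

common, owners = commonality_variability(VARIANTS)
print("common:", sorted(common))
print("variable:", owners)
```

The common set is a candidate for the platform core, while each variable feature marks a place where a variation point would have to be introduced during migration.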

Diffing of Cloned Variants

We observed the need for higher-level diffing support for cloned variants. Specifically, as pointed out for our railway case, there should be means to express historical additions, suppressions, and modifications at the highest level of abstraction, specifically distinguishing architectural and functional evolutions. As such, practically usable, dedicated diffing techniques, which support existing variation points (e.g., #ifdef), are needed, potentially building upon recent techniques such as intention-based clone integration (Lillack et al. 2019). Enhanced visualization capabilities should also support highly scattered features (Passos et al. 2015, 2018), as explained for the web application and power electronics case. Furthermore, the visualization should be guided by extracting the structure of the underlying configuration management base (including branching and revision structures), as pointed out for our railway case.
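One small step towards diffing that respects existing variation points is to label every changed line with the `#ifdef` conditions enclosing it. The sketch below uses Python's standard `difflib` module for the line diff. It is deliberately limited: it only understands `#ifdef` and `#endif` (no `#if`, `#else`, or `#elif`), and the function names and sample code are assumptions for illustration.

```python
import difflib
import re
from typing import List, Tuple

IFDEF = re.compile(r"^\s*#\s*ifdef\s+(\w+)")
ENDIF = re.compile(r"^\s*#\s*endif")

Context = Tuple[str, ...]


def annotate(lines: List[str]) -> List[Tuple[Context, str]]:
    """Pair each line with the macros of the #ifdef blocks that enclose it."""
    stack: List[str] = []
    out: List[Tuple[Context, str]] = []
    for line in lines:
        if ENDIF.match(line) and stack:
            stack.pop()
        out.append((tuple(stack), line))
        if (m := IFDEF.match(line)):
            stack.append(m.group(1))
    return out


def feature_diff(old: str, new: str) -> List[Tuple[str, Context, str]]:
    """Return changed lines as (sign, enclosing macros, stripped line)."""
    a, b = old.splitlines(), new.splitlines()
    ctx_a, ctx_b = annotate(a), annotate(b)
    changes: List[Tuple[str, Context, str]] = []
    for tag, i1, i2, j1, j2 in difflib.SequenceMatcher(None, a, b).get_opcodes():
        if tag in ("delete", "replace"):
            changes += [("-", ctx, line.strip()) for ctx, line in ctx_a[i1:i2]]
        if tag in ("insert", "replace"):
            changes += [("+", ctx, line.strip()) for ctx, line in ctx_b[j1:j2]]
    return changes


# Hypothetical variants of the same function in two branches.
OLD = "int f() {\n#ifdef LOG\n  log();\n#endif\n  return 1;\n}"
NEW = "int f() {\n#ifdef LOG\n  log();\n  flush();\n#endif\n  return 2;\n}"

for change in feature_diff(OLD, NEW):
    print(change)
```

Grouping the resulting changes by their enclosing macros separates modifications inside an optional feature from modifications to unconditional code, which is a first approximation of the feature-level view requested by the cases.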

Notably, this challenge is also expressed for Ricoh (Kolb et al. 2005), and the exploratory study of Chen and Ali Babar (2010) reports that clone detection techniques are not applicable to software variants.

From a tool-vendor perspective, expressed for the chip modeling case, the organization strives to provide automated commonality and variability analyses to customers of the tool, so that existing cloned models can be migrated. As such, the challenge is to adopt automated analyses conceived by researchers, ideally adhering to standards, which are almost non-existent for variability management.

Asset Integration at Code and Model Level

Integrating assets into a platform is challenging. Such an integration differs from integrating assets during clone & own in the sense that platform integration needs to consider many more variants—those derived from the platform—which might make it more challenging. Understanding and characterizing both kinds of integration is subject to future work. For our cases, better merge-refactoring techniques taking existing variability (e.g., #ifdef in the cloned variants) into account are necessary. For instance, our railway case needs such techniques for automatically proposing integration strategies for features from variants, that is, focusing on identifying functional (feature-based) evolution from the branching history, ignoring refactorings and other non-functional evolutions. Finally, asset integration is challenged (Challenge 1) when MDE techniques or code generation are used (e.g., in the power electronics case). This requires focusing on the migration (i.e., integration) of models, while not ignoring customized code.

Training, Certification, and Budgeting

Other issues to be addressed by a process are to (i) establish a common understanding among the stakeholders about product-line engineering concepts (requirements engineering case), calling for training support in a migration process; (ii) establish certification support, as requested by an experience report on Rolls-Royce (Habli and Kelly 2007); and (iii) assist in budgeting, since, as expressed for our relatively small web application case, there is no dedicated budget to develop the platform.

Definition of a Target Architecture

A core issue when adopting an integrated platform is architectural degradation, and therefore architectural mismatches among the cloned variants, which need to be resolved. Architectures of the cloned variants need to be compared and a target architecture (Sinkala et al. 2018; Acher et al. 2011b) defined, which is expressed as a core challenge (e.g., for our railway case).

Specifically, expressed for the imaging technology case, the target architecture should be layered and modularized, to be maintainable. The goal is to enable fully automated variant derivation without programming effort (i.e., developing adaptations). Another challenge expressed is to create documentation (or generate such) about the architecture itself (chip modeling case), and about using such an architecture for variant derivation in parallel.

In our literature survey, we found that most of the case studies focus on describing the architecture of the resulting product line. For instance, for RPG Games (Zhang and Jarzabek 2005), the authors discuss the architecture development and necessary adaptations of cloned variants (e.g., changes of variable types and refactorings) to obtain a common product-line architecture. Recently, Debbiche et al. (2019) and Akesson et al. (2019) provided datasets and experiences from migrating cloned Java and Android game variants to such a common architecture.

Challenge 6, Migration Decision Support

A migration process should also provide decision support about the migration itself, based on the expected costs and benefits (Ali et al. 2009; Krüger et al. 2016). Specifically, for our power electronics case, the suggestion was made to enhance feature identification and location with indicators about the cost of the extraction and re-engineering of relevant assets for making them reusable or integrating into a platform.

Cost/Benefit Estimation

The need for effective cost/benefit estimation is further supported by existing experience reports on Deutsche Bank (Faust and Verhoef 2003b), on Philips Medical Systems (van der Linden et al. 2007), on Hitachi (Takebe et al. 2009), on Ericsson (Mohagheghi and Conradi 2008; Andersson and Bosch 2005), on Axis and Ericsson (Svahnberg and Bosch 1999), as well as on Axis and Securitas (Bosch 1999a, 1999b). Specifically, for Philips Medical Systems (van der Linden et al. 2007), clone & own sometimes appeared to be beneficial (close to the break-even point), which led to tensions to develop outside the platform. Axis and Ericsson (Svahnberg and Bosch 1999) also need cost estimation for decision making. They explain that, when a new product is added, rewriting the components is sometimes easier than adapting them, depending on the extent of the changes. The challenge is also described in other case studies on Axis and Securitas (Bosch 1999a, 1999b), among others, demanding decision-making support for: “Deciding to include or exclude a product in the product-line [...] Guidelines or methods for making more objective decisions would be valuable to technical managers.”
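The break-even reasoning mentioned above can be made concrete with a deliberately simple cost model. The following sketch is an illustration only and is not taken from any of the cited reports: it assumes a one-time platform investment and constant per-variant costs, and all names and figures are hypothetical.

```python
import math
from typing import Optional


def break_even_variants(platform_investment: float,
                        cost_per_clone: float,
                        cost_per_derived: float) -> Optional[int]:
    """Smallest number of variants n at which the platform is no more
    expensive than clone & own, under an assumed linear cost model:

        clone & own:  n * cost_per_clone
        platform:     platform_investment + n * cost_per_derived
    """
    saving_per_variant = cost_per_clone - cost_per_derived
    if saving_per_variant <= 0:
        return None  # in this model the platform never pays off
    return math.ceil(platform_investment / saving_per_variant)


# Hypothetical figures in person-days.
n = break_even_variants(platform_investment=600,
                        cost_per_clone=100,
                        cost_per_derived=40)
print(n)  # prints 10
```

If fewer variants than this number are expected, clone & own looks cheaper in such a model, which is one way to read the tension to develop outside the platform. Real estimations would need measured costs rather than constants.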

Measurement

Effective techniques to measure the costs and benefits of reuse are a prerequisite for cost/benefit estimation. Previous experience reports on Bosch (Thiel et al. 2001), Testo (Schmid et al. 2005), Argon (Bergey et al. 2004), Overwatch Textron Systems (Jensen 2007a), Wikon (Pech et al. 2009), and CCT (Clements et al. 2001a) emphasize such a measurement technique as an important challenge. Generally, in a case study on Axis (Bosch 1999a), the lack of economic models is lamented.

Challenge 7, Continuous Integration

Six of our cases (web application, traffic control, aerospace, automotive firmware, railway) explicitly point out the need for supporting continuous integration. Most of them have sets of automated unit tests (grown incrementally, typically with the discovery of bugs), UI tests, and integration tests. We found typical tools used for continuous integration, such as Jenkins, Maven, BuildBot, Git, and Jira. While some approaches exist to automate, e.g., interaction testing in continuous integration (Johansen et al. 2012), the larger challenge is to obtain a feature-oriented and configurable architecture that supports continuous integration, ideally with the tools mentioned.

This challenge is implicitly expressed in the cases for Philips Consumer Electronics Software for Televisions (van der Linden et al. 2007), for Overwatch Textron Systems (Jensen 2007a), and for CelsiusTech (Bass et al. 2003; Brownsword and Clements 1996), where balancing between domain and application engineering is a core challenge, striving to bring both closer together. In addition, CCT (Clements et al. 2001a) demands a process for integration testing.

7.4 Working with an integrated platform

We observed the following challenges expressed for our use cases and tool cases when dealing with an integrated platform.

Challenge 8, Representation of Variability

A need expressed by all cases is a centralized (and ideally unified (Berger et al. 2019a)) representation of variability. For instance, for the aerospace and automotive firmware cases, an overall view providing a unified description of the variability was demanded, ideally in the form of a feature model declared in an established SPLE tool. Specifically, in one of these two cases, the variability information is scattered across different representations, including a distributed feature database and configuration files, preventing a coherent view of all relevant product line information. Also recall that many different types of artifacts (e.g., requirements, architecture, design models, source code) are used in the cases (cf. Section 5.2), and that each artifact type has its own technical representation of variation points, which challenges obtaining a global view of variability. This challenge is closely related to Challenges 1 and 2, requiring variability support in modeling languages, tool integrations, and traceability support.

Missing Standards

Standardisation efforts towards unified representations for variability and variation points exist, such as CVL (Haugen et al. 2013a) and VEL (Schulze and Hellebrand 2015). However, CVL was never adopted as a standard and VEL is explicitly aimed at providing an exchange language between tools rather than a fully integrated variability representation to be edited directly. Recently, a new initiative (Footnote 3) was launched, aiming again at establishing a common feature modeling language, supporting common, community-agreed usage scenarios for such a language (Berger and Collet 2019b).

Representation of Behavioral Variability

Furthermore, the cases from the literature CCT (Clements et al. 2001a), ENEA (Andersson and Bosch 2005), and the study of Chen and Ali Babar (2010) request the support for quality properties when representing variability. Specifically, “structural variability is well supported, behavioral and timing aspects are not” (Chen and Ali Babar 2010).

Representation of Topological Variability

We observed the need for representing topological variability in the five cases that use design models (hardware modeling, modeling platform, chip modeling, aerospace, and power electronics). These are often XML-based domain-specific modeling languages (DSMLs) using graphs as their underlying structure. As opposed to feature-oriented (switch on/off features) variability, topological variability requires dedicated modeling languages. Typically, organizations create their own domain-specific languages (DSLs) for this reason, given limitations of established variability modeling languages (Berger et al. 2014d; Fantechi 2013; Behjati et al. 2014). A challenge is that topological variability can hardly be expressed using variability annotations and preprocessors, but typically requires more flexible code generators.

Representation of User-Interface Variability

An interesting need was explained for the web application case, where the prime driver of variability is the customization of user interfaces. The current web frameworks do not offer any facilities for customization, so the company needs to rely on clone & own. UI variability has several peculiarities compared to traditional source code, as UIs encapsulate human computer interaction (HCI) assets (e.g., dialog models, context models, and aesthetic concerns). This challenge is related to Challenge 1 (MDE and code generation), given that abstract HCI models are sometimes used before the generation of concrete HCI implementations in target languages (Martinez et al. 2017). Also note that web applications as cases are rather rare among the existing literature. Exceptions are HomeAway (Krueger et al. 2008) and MarketMaker (Verlage and Kiesgen 2005); however, none of the publications describes the realization of variability in the web-based user interfaces of these web applications.

Challenge 9, Feature Modeling

In the light of obtaining a unified representation of variability, almost all of our subjects (9 of 12) strive to adopt feature modeling. For the power electronics case, a feature model would aim at visualizing the variant space and keeping the variants manageable. For the automotive firmware case, a feature model would be the basis for a configurator with intelligent configuration facilities, which should support product derivation using defaults, choice propagation, and conflict resolution, easing the configuration (and avoiding having to decide all features). Likewise, for the modeling platform case, an improved product derivation through an intelligent configurator tool is needed. In the imaging technology case, a more intuitive representation of configuration knowledge is needed, where even configuration profiles (partial configurations) or a feature hierarchy is seen as beneficial. In fact, as explained for the automotive firmware case, the feature hierarchy is considered the most valuable information. Furthermore, the experience report by Audi (Hardung et al. 2004) also requests modeling guidelines. A first step in this direction are probably the feature modeling principles of Nesic et al. (2019).
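To illustrate what defaults, choice propagation, and conflict detection mean for a configurator, the following minimal sketch may help. It is not the tooling of any of the cases: the `FeatureModel` structure, the function names, and the firmware features are hypothetical, and only parent-child, requires, and excludes relations are assumed.

```python
from dataclasses import dataclass, field


@dataclass
class FeatureModel:
    features: list[str]
    parent: dict[str, str] = field(default_factory=dict)           # child -> parent
    requires: dict[str, set[str]] = field(default_factory=dict)    # feature -> needed
    excludes: list[frozenset[str]] = field(default_factory=list)   # exclusive pairs
    defaults: dict[str, bool] = field(default_factory=dict)


def propagate(model, choices):
    """Derive all decisions implied by `choices`; raise ValueError on conflict."""
    config = dict(choices)
    pending = list(config)

    def decide(feature, value, reason):
        if feature in config:
            if config[feature] != value:
                raise ValueError(f"conflict on {feature}: {reason}")
            return
        config[feature] = value
        pending.append(feature)

    while pending:
        f = pending.pop()
        if config[f]:
            if f in model.parent:
                decide(model.parent[f], True, f"{f} needs its parent")
            for needed in sorted(model.requires.get(f, ())):
                decide(needed, True, f"{f} requires {needed}")
            for pair in model.excludes:
                if f in pair:
                    (other,) = pair - {f}
                    decide(other, False, f"{f} excludes {other}")
        else:
            for child, parent in model.parent.items():
                if parent == f:
                    decide(child, False, f"parent {f} is deselected")
            for dependent, needed in model.requires.items():
                if f in needed:
                    decide(dependent, False, f"{dependent} requires {f}")
    return config


def configure(model, choices):
    """Propagate user choices, then fill undecided features from defaults."""
    config = propagate(model, choices)
    for feature in model.features:
        if feature in config:
            continue
        preferred = model.defaults.get(feature, False)
        try:
            config = propagate(model, {**config, feature: preferred})
        except ValueError:  # default is inconsistent, try the opposite value
            config = propagate(model, {**config, feature: not preferred})
    return config


# Hypothetical firmware feature model.
model = FeatureModel(
    features=["Firmware", "WiFi", "Bluetooth", "OTAUpdate", "LowPower"],
    parent={f: "Firmware" for f in ["WiFi", "Bluetooth", "OTAUpdate", "LowPower"]},
    requires={"OTAUpdate": {"WiFi"}},
    excludes=[frozenset({"LowPower", "WiFi"})],
    defaults={"Bluetooth": True},
)
print(configure(model, {"OTAUpdate": True}))
```

Here the user decides a single feature, and the remaining four decisions follow from propagation and defaults. Selecting both `OTAUpdate` and `LowPower` raises a `ValueError`, which a real configurator would turn into guided conflict resolution.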

Feature-Modeling Process

As such, a feature modeling process is needed that supports obtaining a feature model from a diverse set of variability information sources (Bécan et al. 2016; Davril et al. 2013; Krueger et al. 2019a), integrated with feature-model management, merging, and synthesis techniques (Acher et al. 2010, 2013a, 2013b; She et al. 2011, 2014). Our cases express that functional evolution is well reflected in commit messages (e.g., bug fix information, new feature implementations, new branches), which confirms some insights that commit messages are a good source for feature identification (Krueger et al. 2018a, 2019a; Zhou et al. 2018).
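As a rough illustration of how commit messages could feed feature identification, the sketch below counts feature candidates named in non-bug-fix commits. The keyword patterns and the example messages are assumptions made for this illustration; they are neither the heuristics of the cited work nor data from our cases.

```python
import re
from collections import Counter

# Assumed keyword heuristics; real histories need project-specific patterns.
BUGFIX = re.compile(r"\b(?:fix(?:es|ed)?|bug|defect)\b", re.IGNORECASE)
FEATURE = re.compile(
    r"\b(?:add|implement|introduce)(?:s|d|ed)?\s+(?:the\s+)?(?:feature\s+)?"
    r"([A-Za-z][\w-]*)",
    re.IGNORECASE,
)


def feature_candidates(messages):
    """Count names that follow an 'add/implement/introduce' verb,
    skipping commits that look like bug fixes."""
    counts = Counter()
    for message in messages:
        if BUGFIX.search(message):
            continue
        match = FEATURE.search(message)
        if match:
            counts[match.group(1).lower()] += 1
    return counts


# Hypothetical commit history.
history = [
    "Add feature CruiseControl for variant B",
    "Fix null pointer in CruiseControl",
    "Implement CruiseControl speed limiter",
    "Introduce the LaneAssist module",
    "Merge branch 'variant-b'",
]
print(feature_candidates(history))
```

The resulting candidates would still need to be reviewed by domain experts before entering a feature model.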

Variation-Point Methodology

Another issue pertaining to such a process is a variation-point methodology (expressed for the aerospace case). It should guide, upon the decision to introduce a feature (originating from some scoping process), the flow of variation points into requirements, code, models, and other affected artifact types.

Feature-Oriented Authorization

For the chip modeling, aerospace, and requirements engineering cases, the need for user access control and authorization mechanisms was explicitly mentioned. Users have different access rights for different parts (features) of the product line. Especially the aerospace case needs to control visibility of variation points, where certain variation points or variants even need to be completely invisible (i.e., no trace should be visible that a variation point exists) for unauthorized personnel. Unfortunately, beyond work by Fægri and Hallsteinsen (2006), this challenge has not yet been recognized or received attention in the research community.
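The visibility requirement can be sketched as a projection of the variability model onto what a user is allowed to see. The following is a minimal illustration under assumed conventions: numeric clearance levels, the class names, and the example variation points are hypothetical and do not describe the access model of any case.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Variant:
    name: str
    clearance: int  # minimum clearance needed to see this variant (assumed scheme)


@dataclass(frozen=True)
class VariationPoint:
    name: str
    variants: tuple[Variant, ...]


def visible_view(points, user_clearance):
    """Return only what the user may see. A variation point without any
    visible variant is dropped entirely, so no trace of it remains."""
    view = []
    for point in points:
        allowed = tuple(v for v in point.variants if v.clearance <= user_clearance)
        if allowed:
            view.append(VariationPoint(point.name, allowed))
    return view


# Hypothetical variation points.
points = [
    VariationPoint("Navigation", (Variant("StandardGPS", 0), Variant("EncryptedGPS", 2))),
    VariationPoint("SecureDatalink", (Variant("TypeA", 2),)),
]
print(visible_view(points, user_clearance=0))
```

A user with clearance 0 sees only `Navigation` with `StandardGPS`. Filtering the model is only one part of the problem, since artifacts such as code and requirements would have to be projected consistently as well.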

Feature-Oriented Views and Synchronization

For nine of our twelve cases, the need for better visualizations, especially establishing feature-oriented views (Acher et al. 2011a; Andam et al. 2017; Montalvillo and Díaz 2015, 2017; Passos et al. 2013; Martinez et al. 2014), was expressed. In one of our cases it is planned to reverse-engineer feature constraints from the codebase and distributed feature databases, including identifying the model hierarchy (Nadi et al. 2014, 2015), and to synthesize a feature model. This challenge is also reported by the exploratory study of Chen and Ali Babar (2010), and by the survey of Berger et al. (2013a).
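One simple heuristic for recovering hierarchy candidates from a codebase is to treat the nesting of #ifdef blocks as a hint that the inner feature depends on the outer one. The sketch below illustrates only this idea under strong simplifications (line-based parsing, direct nesting only); it is not the technique of the cited work, and the function name and the C snippet are hypothetical.

```python
import re

OPEN = re.compile(r"#\s*(ifdef|ifndef|if)\b\s*(\w+)?")
ELSE = re.compile(r"#\s*(else|elif)\b")
CLOSE = re.compile(r"#\s*endif\b")


def nesting_constraints(source):
    """Return (child, parent) pairs for every '#ifdef child' that is
    directly nested in an '#ifdef parent' block."""
    stack = []          # enclosing blocks; None means "not a plain #ifdef"
    constraints = set()
    for raw in source.splitlines():
        line = raw.strip()
        opened = OPEN.match(line)
        if opened:
            kind, name = opened.groups()
            feature = name if kind == "ifdef" else None
            if feature and stack and stack[-1]:
                constraints.add((feature, stack[-1]))
            stack.append(feature)
        elif ELSE.match(line):
            if stack:
                stack[-1] = None  # the else branch is not guarded by the feature
        elif CLOSE.match(line):
            if stack:
                stack.pop()
    return constraints


# Hypothetical C fragment.
code = """
#ifdef NETWORK
void connect(void);
#ifdef TLS
void handshake(void);
#endif
#ifndef LEGACY
#ifdef IPV6
#endif
#endif
#endif
#ifdef LOGGING
#endif
"""
print(nesting_constraints(code))  # prints {('TLS', 'NETWORK')}
```

Each pair is only a candidate for a child-parent relation or an implication in the synthesized feature model and would need validation against the feature databases and expert knowledge.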