Abstract

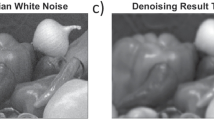

The study of non-convex penalties has recently received considerable attentions in sparse approximation. The existing non-convex penalties are proposed on the principle of seeking for a continuous alternative to the ℓ0-norm penalty. In this paper, we come up with a cubic spline penalty (CSP) which is also continuous but closer to ℓ0-norm penalty compared to the existing ones. As a result, it produces the weakest bias among them. Wavelet tight frames are efficient for sparse approximation due to its redundancy and fast implementation algorithm. We adopt a tight frame balanced model with our proposed cubic spline penalty since the balanced model takes the advantages of both analysis and synthesis model. To solve the non-convex CSP penalized problem, we employ a proximal local linear approximation (PLLA) algorithm and prove the generated sequence converges to a stationary point of the model if it is bounded. Under additional conditions, we find that the limit point behaves as well as the oracle solution, which is obtained by using the exact support of the ground truth signal. The efficiency of our cubic spline penalty are further demonstrated in applications of variable selection and image deblurring.

Similar content being viewed by others

References

Anderson, S. R., Auquier, A., Hauck, W. W., Oakes, D., Vandaele, W., Weisberg, H. I.: Statistical methods for comparative studies: techniques for bias reduction, vol. 170. Wiley, New York (2009)

Antoniadis, A.: Wavelets in statistics: a review. J Italian Stat Soc 6(2), 97 (1997)

Attouch, H., Bolte, J., Redont, P., Soubeyran, A.: Proximal alternating minimization and projection methods for nonconvex problems: an approach based on the Kurdyka-Łojasiewicz inequality. Math. Oper. Res. 35(2), 438–457 (2010)

Attouch, H., Bolte, J., Svaiter, B. F.: Convergence of descent methods for semi-algebraic and tame problems: Proximal algorithms, forward-backward splitting, and regularized Gauss-Seidel methods. Math. Program. 137(1-2), 91–129 (2013)

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2(1), 183–202 (2009)

Bolte, J., Daniilidis, A., Lewis, A.: The Łojasiewicz inequality for nonsmooth subanalytic functions with applications to subgradient dynamical systems. SIAM J. Optim. 17(4), 1205–1223 (2006)

Bolte, J. B., Sabach, S., Teboulle, M.: Proximal alternating linearized minimization for nonconvex and nonsmooth problems. Math. Program. 146(1-2), 459–494 (2014)

de Boor, C.: A Practical Guide to Splines (revised edition). Springer, Berlin (2001)

Boyd, S., Parikh, N., Chu, E., Peleato, B., Eckstein, J.: Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learn. 3(1), 1–122 (2011)

Breiman, L.: Heuristics of instability and stabilization in model selection. Ann. Statist. 24(6), 2350–2383 (1996)

Cai, J. F., Chan, R. H., Shen, Z.: A framelet-based image inpainting algorithm. Appl. Comput. Harmon. Anal. 24(2), 131–149 (2008)

Cai, J. F., Dong, B., Osher, S., Shen, Z.: Image restoration: total variation, wavelet frames, and beyond. J. Amer. Math. Soc. 25(4), 1033–1089 (2012)

Chai, A., Shen, Z.: Deconvolution: a wavelet frame approach. Numer. Math. 106(4), 529–587 (2007)

Daubechies, I.: Ten lectures on wavelets society for industrial and applied mathematics (1992)

Daubechies, I., Han, B., Ron, A., Shen, Z.: Framelets: MRA-based constructions of wavelet frames. Appl. Comput. Harmon. Anal. 14(1), 1–46 (2003)

Davis, G., Mallat, S., Avellaneda, M.: Adaptive greedy approximations. Constr. Approx. 13(1), 57–98 (1997)

Dong, B., Shen, Z.: MRA-based wavelet frames and applications. In: Mathematics in Image Processing, IAS/Park City Mathematics Series. American Mathematical Society and and IAS/Park City Mathematics Institute, vol. 19, pp 7–158 (2013)

Dong, B., Shen, Z.: Image restoration: a data-driven perspective. In: Proceedings of the International Cogress on Industrial and Applied Mathematics (ICIAM) (2015)

Donoho, D. L., Johnstone, J. M.: Ideal spatial adaptation by wavelet shrinkage. Biometrika 81(3), 425–455 (1994)

Elad, M., Starck, J.L., Querre, P., Donoho, D.: Simultaneous cartoon and texture image inpainting using morphological component analysis (MCA). Appl. Comput. Harmon. Anal. 19(3), 340–358 (2005). Computational Harmonic Analysis - Part 1

Fan, J., Li, R.: Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 96(456), 1348–1360 (2001)

Fan, J., Xue, L., Zou, H.: Strong oracle optimality of folded concave penalized estimation. Ann. Statist. 42(3), 819 (2014)

Figueiredo, M. A. T., Nowak, R. D.: An em algorithm for wavelet-based image restoration. IEEE Trans. Image Process. 12(8), 906–916 (2003)

Figueiredo, M. A. T., Nowak, R. D.: A bound optimization approach to wavelet-based image deconvolution. In: IEEE International Conference on Image Processing 2005, vol. 2, pp II–782–5 (2005)

Friedman, J., Hastie, T., Tibshirani, R.: Regularization paths for generalized linear models via coordinate descent. J. Stat. Softw. 33(1), 1–22 (2010)

Hsu, D., Kakade, S. M., Zhang, T.: A tail inequality for quadratic forms of subgaussian random vectors. Electron. Commun. Probab. 17(25), 1–6 (2011)

Kurdyka, K.: On gradients of functions definable in o-minimal structures. Ann. Inst. Fourier (Grenoble) 48(3), 769–783 (1998)

Liu, H., Yao, T., Li, R., Ye, Y.: Folded concave penalized sparse linear regression: sparsity, statistical performance, and algorithmic theory for local solutions. Math. Program. 166(1-2), 207–240 (2017)

Łojasiewicz, S.: Une propriété topologique des sous-ensembles analytiques réels. In: Les Équations Aux Dérivées Partielles (Paris, 1962). Éditions Du Centre National De La Recherche Scientifique, Paris, pp 87–89 (1963)

Rockafellar, R. T., Wets, R. J. B.: Variational Analysis. Grundlehren der Mathematischen Wissenschaften, vol. 317. Springer, Berlin (1998)

Ron, A., Shen, Z.: Affine systems in \(l_{2}(\mathbb {R}^{d})\): The analysis of the analysis operator. J. Funct. Anal. 148(2), 408–447 (1997)

Shen, Z., Toh, K., Yun, S.: An accelerated proximal gradient algorithm for frame-based image restoration via the balanced approach. SIAM J. Imaging Sci. 4 (2), 573–596 (2011)

Starck, J.L., Elad, M., Donoho, D.L.: Image decomposition via the combination of sparse representations and a variational approach. IEEE Trans. Image Process. 14(10), 1570–1582 (2005)

Tibshirani, R.: Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society. Series B (Methodological) 58(1), 267–288 (1996)

Zhang, C.H.: Nearly unbiased variable selection under minimax concave penalty. Ann. Statist. 38(2), 894–942 (2010)

Zou, H.: The adaptive lasso and its oracle properties. J Am Stat Assoc 101 (476), 1418–1429 (2006)

Zou, H., Li, R.: One-step sparse estimates in nonconcave penalized likelihood models. Ann. Statist. 36(4), 1509–1533 (2008)

Acknowledgments

The work of the second author was supported by National Natural Science Foundation of China (Grants 11301289, 11531013 and 11871035), Recruitment Program of Global Young Expert, and the Fundamental Research Funds for the Central Universities. The work of the third author was supported by Chinese Scholarship Council and PHD Program 52XB2013 of Tianjin Normal University. The authors are grateful to the editor and the anonymous referees for their valuable suggestions and comments, which helped improve this paper.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by: Yuesheng Xu

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

We provide some related definitions and results about Kurdyka–Łojasiewicz (KL) property in this section.

Definition 1 (Subdifferentials 30)

Let \(h: \mathbb {R}^{p} \to \mathbb {R}\cup \{+\infty \}\) be a proper, lower semicontinuous function.

-

(i)

The regular subdifferential of h at \(\bar {z} \in \text{dom } h = \{z \in \mathbb {R}^{p}: h(z) < +\infty \}\) is defined as follows:

$$ \widehat{\partial}h(\bar{z} ) := \left\{ v \in\mathbb{R}^{p}:\underset{z \neq \bar{z}}{\liminf\limits_{z \to \bar{z}}} \frac{h(z)-h(\bar{z})- \langle v,z -\bar{z} \rangle}{\|z-\bar{z}\|}\geq 0 \right\}; $$ -

(ii)

The (limiting) subdifferential of h at \(\bar {z} \in \text{dom } h \) is defined as follows:

$$ \partial h(\bar{z} ):=\left\{ v \in\mathbb{R}^{p}: \exists z^{(k)} \to \bar{z} , h(z^{(k)}) \to h(z), v^{(k)}\in \widehat{\partial} h(z^{(k)}), v^{(k)} \to v \right\}. $$

In [27, 29], Łojasiewicz and Kurdyka established the foundational works on the Kurdyka–Łojasiewicz (KL) property. The development of the application of KL property in optimization theory can be found in [3, 4, 6, 7] and reference therein.

Definition 2 (KL property 3)

A proper function h is said to have the KL property at \(\bar {z} \in \text{dom } \partial h = \{z \in \mathbb {R}^{p}: \partial h(z) \neq \emptyset \}\) if there exist \(\zeta \in (0, +\infty ]\), a neighborhood U of \(\bar {z}\), and a continuous concave function \(\varphi : [0, \zeta ) \to \mathbb {R}_{+}\) such that

-

(i)

φ(0) = 0.

-

(ii)

φ(0) is C1 on (0,ζ).

-

(iii)

For all s ∈ (0,ζ), \(\varphi ^{\prime }(s) > 0\).

-

(iv)

For all z ∈ U satisfying \(h(\bar {z}) < h(z) < h(\bar {z}) + \zeta \), the KL inequality holds:

$$ \varphi^{\prime}(h(z) - h(\bar{z})) \text{dist } (0,\partial h(z)) \geq 1. $$where \(\text{dist } (0,\partial h(z)) = \min \limits \{\| v \|: v \in \partial h(z) \}\).

For a proper, lower semi-continuous function h, we say it a KL function if it satisfies the KL property at all points in dom ∂h. Examples of KL functions can be referred to [3, 4, 7]. It is known that a proper closed semi-algebraic function is a KL function.

Definition 3

[7] A subset \(\mathcal {X}\) of \(\mathbb {R}^{p}\) is a real semi-algebraic set if it can be represented as follows:

where \(g_{i,j},h_{i,j}: \mathbb {R}^{p} \to \mathbb {R}\) are real polynomial functions. A function \(h :\mathbb {R}^{p} \to \mathbb {R}\cup \{+\infty \} \) is called semi-algebraic if its graph

is a semi-algebraic set.

We present some known basic properties of semi-algebraic sets and semi-algebraic functions below, which help identify semi-algebraic functions [7].

-

Finite intersections and unions of semi-algebraic sets are semi-algebraic.

-

The complementation of a semi-algebraic set is semi-algebraic.

-

Cartesian products of semi-algebraic sets are semi-algebraic.

-

Indicator functions of semi-algebraic sets are semi-algebraic.

-

Finite sums and products of semi-algebraic functions are semi-algebraic.

-

The composition of semi-algebraic functions is semi-algebraic.

Rights and permissions

About this article

Cite this article

Pang, T., Wu, C. & Liu, Z. A cubic spline penalty for sparse approximation under tight frame balanced model. Adv Comput Math 46, 36 (2020). https://doi.org/10.1007/s10444-020-09786-y

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10444-020-09786-y