Cloud Computation Using High-Resolution Images for Improving the SDG Indicator on Open Spaces

Abstract

1. Introduction

2. Study Area and Input Data

2.1. Overview of the Study Area

2.2. Input Data

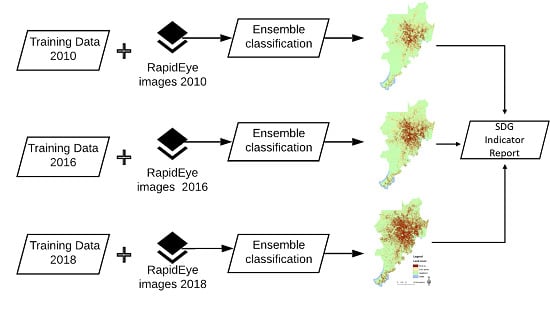

3. Methodology

3.1. Assessment of the GHSL for the Study Area

- Taking 50% built-up area (building footprints) as the threshold for classifying a cell as built-up area.

- Taking 10% built-up area (building footprints) as the threshold for classifying a cell as built-up area.

- Taking more than 0% built-up area (building footprints) as the threshold for classifying a cell as built-up area.
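
The three thresholds above can be illustrated with a minimal sketch. It assumes that the share of each cell covered by building footprints has already been computed; the function name, the sample fractions, and the choice to treat the 50% and 10% thresholds as inclusive are assumptions made for illustration, not details taken from the study.

```python
import numpy as np

def classify_built_up(footprint_fraction, threshold, strict=False):
    """Flag cells as built-up from their building-footprint share (0..1).

    strict=True uses '>' (for the "more than 0%" case);
    strict=False uses '>=' (an assumption for the 50% and 10% cases).
    """
    frac = np.asarray(footprint_fraction, dtype=float)
    return frac > threshold if strict else frac >= threshold

# Hypothetical footprint shares for six cells
fractions = np.array([0.0, 0.04, 0.10, 0.35, 0.50, 0.90])

at_50 = classify_built_up(fractions, 0.50)
at_10 = classify_built_up(fractions, 0.10)
above_0 = classify_built_up(fractions, 0.0, strict=True)

for name, mask in [("50%", at_50), ("10%", at_10), (">0%", above_0)]:
    print(name, int(mask.sum()), "of", mask.size, "cells built-up")
```

Lowering the threshold can only add built-up cells, which is why the three variants form nested sets.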

3.2. Calculation of the BuiltOpen Indicator

3.2.1. Ensemble Classification

3.2.2. Consistency of Changes

- The classified mosaic of 2016 was taken as the reference because it achieved the highest classification accuracy.

- Pixel values in the 2016 mosaic were replaced by the corresponding pixel values in the 2010 mosaic where the 2010 pixels belonged to built-up areas or gray spaces.

- Pixel values in 2010 were replaced by gray spaces as visual inspection confirmed that these pixels were wrongly classified as water, mainly being areas of shadows (e.g., along roads).

- Pixel values in the 2018 mosaic were substituted by the pixel value of the classified mosaic in the previous reference year, i.e., the classified mosaic for 2016.
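
A minimal sketch of the carry-forward idea behind these rules follows. The class codes, the function name, and the toy arrays are hypothetical. The rule for 2018 does not state its condition here, so the sketch assumes the same one as for 2016 (the earlier pixel is built-up or gray space); the reassignment of misclassified water pixels in 2010 is not covered.

```python
import numpy as np

# Hypothetical class codes, chosen only for this illustration
BUILT_UP, GRAY, GREEN, WATER = 1, 2, 3, 4

def carry_forward(previous, current, persistent=(BUILT_UP, GRAY)):
    """Keep the earlier class wherever the earlier pixel is built-up or gray.

    Assumption: built-up and gray pixels do not revert to other classes,
    so a different label in the later mosaic is treated as an error.
    """
    previous = np.asarray(previous)
    current = np.asarray(current)
    keep = np.isin(previous, persistent)
    return np.where(keep, previous, current)

# Toy 2 x 2 classified mosaics
c2010 = np.array([[BUILT_UP, GREEN], [GRAY, GREEN]])
c2016 = np.array([[GREEN, BUILT_UP], [GRAY, GREEN]])
c2018 = np.array([[GREEN, GREEN], [BUILT_UP, GRAY]])

c2016_fixed = carry_forward(c2010, c2016)
c2018_fixed = carry_forward(c2016_fixed, c2018)
print(c2016_fixed)
print(c2018_fixed)
```

Chaining the calls means that a correction made for 2016 also propagates into 2018.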

4. Results

4.1. The Strengths and Weaknesses of the GHSL for Built-Up and Open Space Mapping

4.2. Image Classification and BuiltOpen Indicator

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A. Textural Feature Formulas

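- $p(i,j)$ is the $(i,j)$th entry in a normalized gray tone matrix,

- $p_x(i) = \sum_{j=1}^{N_g} p(i,j)$, is the $i$th entry in the marginal probability matrix computed by summing the rows of $p(i,j)$ for fixed $i$,

- $p_y(j) = \sum_{i=1}^{N_g} p(i,j)$, is the $j$th entry in the marginal probability matrix computed by summing the columns of $p(i,j)$ for fixed $j$,

- $N_g$ is the number of distinct gray levels in the quantized image,

- $p_{x+y}(k) = \sum_{i=1}^{N_g} \sum_{j=1}^{N_g} p(i,j)$ with $i + j = k$, for $k = 2, 3, \ldots, 2N_g$, and $p_{x-y}(k) = \sum_{i=1}^{N_g} \sum_{j=1}^{N_g} p(i,j)$ with $\lvert i - j \rvert = k$, for $k = 0, 1, \ldots, N_g - 1$.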

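| Name | Formula |
|---|---|
| Angular Second Moment | $f_1 = \sum_i \sum_j p(i,j)^2$ |
| Contrast | $f_2 = \sum_{n=0}^{N_g-1} n^2 \left\{ \sum_i \sum_j p(i,j) \right\}$, with $\lvert i - j \rvert = n$ |
| Correlation | $f_3 = \frac{\sum_i \sum_j (ij)\, p(i,j) - \mu_x \mu_y}{\sigma_x \sigma_y}$, where $\mu_x$, $\mu_y$, $\sigma_x$, and $\sigma_y$ are the means and standard deviations of $p_x$ and $p_y$ |
| Variance | $f_4 = \sum_i \sum_j (i - \mu)^2 p(i,j)$ |
| Inverse Difference Moment | $f_5 = \sum_i \sum_j \frac{1}{1 + (i - j)^2}\, p(i,j)$ |
| Sum Average | $f_6 = \sum_{i=2}^{2N_g} i\, p_{x+y}(i)$ |
| Sum Variance | $f_7 = \sum_{i=2}^{2N_g} (i - f_8)^2 p_{x+y}(i)$ |
| Sum Entropy | $f_8 = -\sum_{i=2}^{2N_g} p_{x+y}(i) \log p_{x+y}(i)$ |
| Entropy | $f_9 = -\sum_i \sum_j p(i,j) \log p(i,j)$ |
| Difference Variance | $f_{10} =$ variance of $p_{x-y}$ |
| Difference Entropy | $f_{11} = -\sum_{i=0}^{N_g-1} p_{x-y}(i) \log p_{x-y}(i)$ |
| Information Measures of Correlation 1 | $f_{12} = \frac{HXY - HXY1}{\max\{HX, HY\}}$, where $HX$ and $HY$ are entropies of $p_x$ and $p_y$ |
| Information Measures of Correlation 2 | $f_{13} = \left(1 - \exp[-2(HXY2 - HXY)]\right)^{1/2}$, where $HXY = -\sum_i \sum_j p(i,j) \log p(i,j)$, $HXY1 = -\sum_i \sum_j p(i,j) \log(p_x(i)\, p_y(j))$, and $HXY2 = -\sum_i \sum_j p_x(i)\, p_y(j) \log(p_x(i)\, p_y(j))$ |
| Maximal Correlation Coefficient | $f_{14} = (\text{second largest eigenvalue of } Q)^{1/2}$, where $Q(i,j) = \sum_k \frac{p(i,k)\, p(j,k)}{p_x(i)\, p_y(k)}$ |
| Dissimilarity | $\sum_i \sum_j \lvert i - j \rvert\, p(i,j)$ |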

- $s(i,j,\delta,T)$ is the $(i,j)$th entry in a normalized Gray Level Co-occurrence Matrix, equivalent to $p(i,j)$,

- $T$ represents the region and shape used to estimate the second order probabilities, and

- $\delta = (\Delta x, \Delta y)$ is the displacement vector.
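
To make the notation concrete, the following sketch builds a symmetric, normalized co-occurrence matrix for one displacement vector and evaluates a handful of the listed features with NumPy. It is an illustration under stated assumptions rather than the implementation used in the study: the function names are hypothetical, gray levels are indexed from 0, the matrix is made symmetric by counting each pixel pair in both directions, and only five features are computed.

```python
import numpy as np

def glcm(image, levels, dx=1, dy=0):
    """Symmetric, normalized co-occurrence matrix p(i, j) for displacement (dx, dy)."""
    img = np.asarray(image)
    rows, cols = img.shape
    counts = np.zeros((levels, levels), dtype=float)
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = img[r, c], img[r2, c2]
                counts[i, j] += 1  # pair counted in both directions
                counts[j, i] += 1
    return counts / counts.sum()

def texture_features(p):
    """A few textural features computed from a normalized matrix p."""
    n = p.shape[0]
    i, j = np.indices((n, n))
    nonzero = p[p > 0]  # skip empty entries so log() is defined
    return {
        "asm": float(np.sum(p ** 2)),
        "contrast": float(np.sum((i - j) ** 2 * p)),
        "idm": float(np.sum(p / (1.0 + (i - j) ** 2))),
        "entropy": float(-np.sum(nonzero * np.log(nonzero))),
        "dissimilarity": float(np.sum(np.abs(i - j) * p)),
    }

# Small 4 x 4 test image with four gray levels (0..3)
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]
p = glcm(image, levels=4, dx=1, dy=0)
features = texture_features(p)
print(features)
```

Contrast is written here as a direct sum over $(i - j)^2 p(i,j)$, which is the same quantity as the grouped sum over $n = \lvert i - j \rvert$.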

| Year | Data Source | Criteria | Total Points |
|---|---|---|---|
| 2010 | Municipal Map City of Kampala | Built-up: buildings with an area >100 m²; Centre line of roads: 4 m buffer around roads; Vegetation: natural vegetation areas; Water: water bodies | 3996 |
| 2016 | ESA Africa Land cover map of 2016 | Built-up 2010 (check for changes): buildings with an area >100 m²; Centre line of roads 2010 (check for changes): 4 m buffer around roads; ESA vegetation: grass, greenfield, forest, garden, brownfield, greenspace; Water: water bodies | 3931 |
| 2018 | OSM | Built-up: buildings with an area >100 m²; Centre line of roads: 4 m buffer around roads; Vegetation: grass, greenfield, forest, garden, brownfield, greenspace; Water bodies | 3893 |

| Features | Features per Image |
|---|---|
| Spectral image bands | 5 |
| Indices | 9 |
| GLCM-based textural features | 180 |
| Total | 194 |

| Indices | Formula |
|---|---|
| Enhanced Vegetation Index (EVI) | 2.5 × (NIR − R)/(NIR + 6 × R − 7.5 × B + 1) |
| Transformed Chlorophyll Absorption in Reflectance Index (TCARI) | 3 × ((RE − R) − 0.2 × (RE − G) × (RE/R)) |
| Soil Adjusted Vegetation Index (SAVI) | (1 + L) × (NIR − R)/(NIR + R + L), where L = 0.5 |
| Modified Soil Adjusted Vegetation Index (MSAVI) | (2 × NIR + 1 − √((2 × NIR + 1)² − 8 × (NIR − R)))/2 |
| Visible Atmospherically Resistant Index (VARI) | (G − R)/(G + R − B) |
| Green Leaf Index (GLI) | (2 × G − R − B)/(2 × G + R + B) |
| Normalized Difference Vegetation Index (NDVI) | (NIR − R)/(NIR + R) |
| Normalized Difference Water Index with green band (NDWIGreen) | (G − NIR)/(G + NIR) |
| Normalized Difference Water Index with red band (NDWIRed) | (R − NIR)/(R + NIR) |
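
As a sketch, the band-ratio indices in the table can be computed directly from reflectance arrays. The function name and the sample reflectance values are hypothetical, and B, G, R, RE, and NIR are assumed to be the blue, green, red, red-edge, and near-infrared bands. MSAVI is left out to keep the example short.

```python
import numpy as np

def spectral_indices(b, g, r, re, nir, L=0.5):
    """Compute eight of the tabulated indices from band reflectances."""
    b, g, r, re, nir = (np.asarray(x, dtype=float) for x in (b, g, r, re, nir))
    return {
        "EVI": 2.5 * (nir - r) / (nir + 6 * r - 7.5 * b + 1),
        "TCARI": 3 * ((re - r) - 0.2 * (re - g) * (re / r)),
        "SAVI": (1 + L) * (nir - r) / (nir + r + L),
        "VARI": (g - r) / (g + r - b),
        "GLI": (2 * g - r - b) / (2 * g + r + b),
        "NDVI": (nir - r) / (nir + r),
        "NDWI_green": (g - nir) / (g + nir),
        "NDWI_red": (r - nir) / (r + nir),
    }

# Hypothetical reflectances for a single vegetated pixel
indices = spectral_indices(b=0.05, g=0.08, r=0.06, re=0.15, nir=0.40)
for name, value in indices.items():
    print(f"{name}: {float(value):.4f}")
```

Because the inputs are converted to NumPy arrays, the same function works unchanged on whole image bands. Note that NDWIRed is simply the negative of NDVI, so the two carry the same information with opposite sign.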

| | (1) Building Level | (2) 50% Built-up | (3) 10% Built-up | (4) >0% Built-up |
|---|---|---|---|---|
| Overall Accuracy | 0.354 | 0.268 | 0.711 | 0.836 |
| Kappa | 0.075 | 0.022 | 0.395 | 0.564 |
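
For readers unfamiliar with the two measures, the sketch below shows how overall accuracy and Cohen's kappa are conventionally derived from a confusion matrix. The matrix is hypothetical and does not reproduce the values in the table.

```python
import numpy as np

def overall_accuracy_and_kappa(confusion):
    """Overall accuracy and Cohen's kappa from a square confusion matrix."""
    m = np.asarray(confusion, dtype=float)
    total = m.sum()
    observed = np.trace(m) / total  # share of correctly labeled samples
    # Agreement expected by chance, from the row and column marginals
    expected = np.sum(m.sum(axis=0) * m.sum(axis=1)) / total ** 2
    kappa = (observed - expected) / (1.0 - expected)
    return observed, kappa

# Hypothetical 2-class matrix (rows: reference, columns: prediction)
confusion = [[40, 10],
             [5, 45]]
oa, kappa = overall_accuracy_and_kappa(confusion)
print(f"overall accuracy = {oa:.3f}, kappa = {kappa:.3f}")
```

Kappa discounts the agreement expected by chance, which is why it can stay close to zero even when overall accuracy looks moderate.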

| Feature\Year | 2010 | 2016 | 2018 |
|---|---|---|---|
| Image bands | b2, b5 | b1, b3, b4, b5 | b1, b5 |
| Indices | NDWIRed, VARI | NDWIGreen, TCARI, VARI | GLI, NDWIRed, TCARI, VARI |
| Textural (3 × 3) | | b3_inertia, b3_shade, b5_dvar, b5_imcorr1 | b1_sent, b2_prom, b3_savg, b4_savg, b5_var |
| Textural (5 × 5) | b2_dvar, b3_inertia, b3_savg, b3_var, b4_contrast, b4_dvar, b4_var, b5_contrast, b5_savg, b5_dvar, b5_corr | b3_dvar, b3_savg, b1_savg, b2_idm, b4_dent, b4_dvar, b5_asm, b5_contrast, b5_corr, b5_dent, b5_dvar, b5_ent, b5_idm, b5_imcorr1, b5_prom, b5_savg, b5_sent, b5_svar | b1_asm, b1_shade, b2_dvar, b2_savg, b2_shade, b3_savg, b3_shade, b4_inertia, b4_shade, b5_asm, b5_corr, b5_dent, b5_diss, b5_idm, b5_imcorr1, b5_prom, b5_savg, b5_sent, b5_svar |
| Total | 15 | 29 | 30 |

| Year | 2010 | 2016 | 2018 |
|---|---|---|---|
| OA 4 classes | 0.78 | 0.83 | 0.73 |
| OA 3 classes | 0.84 | 0.88 | 0.86 |

| Year | 2010 | 2016 | 2018 |
|---|---|---|---|
| Built-up area | 9087.40 | 14,698.49 | 24,374.61 |
| Green space | 63,608.26 | 54,998.31 | 48,984.30 |
| Gray space | 18,263.67 | 21,230.23 | 17,600.43 |
| BuiltOpen | 9.00 | 5.19 | 2.73 |
| BuiltOpen per capita in m² | 294.97 | 212.80 | 170.15 |
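
The BuiltOpen row appears consistent with the ratio of open space (green plus gray space) to built-up area. This reading is inferred from the tabulated numbers rather than stated in the table, so the sketch below should be taken as an arithmetic check under that assumption; the function and variable names are illustrative.

```python
# Areas copied from the table (units as reported there)
areas = {
    2010: {"built_up": 9087.40, "green": 63608.26, "gray": 18263.67},
    2016: {"built_up": 14698.49, "green": 54998.31, "gray": 21230.23},
    2018: {"built_up": 24374.61, "green": 48984.30, "gray": 17600.43},
}

def built_open(built_up, green, gray):
    """Assumed definition: open space (green + gray) divided by built-up area."""
    return (green + gray) / built_up

ratios = {year: built_open(**values) for year, values in areas.items()}
for year, ratio in ratios.items():
    print(year, round(ratio, 2))
```

Under this assumption the computed ratios agree with the reported 9.00, 5.19, and 2.73 to within about 0.01, and they fall steadily as the built-up area grows while green space shrinks.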

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Aguilar, R.; Kuffer, M. Cloud Computation Using High-Resolution Images for Improving the SDG Indicator on Open Spaces. Remote Sens. 2020, 12, 1144. https://doi.org/10.3390/rs12071144