A Color Consistency Processing Method for HY-1C Images of Antarctica

Abstract

1. Introduction

2. Data Preprocessing and Analysis

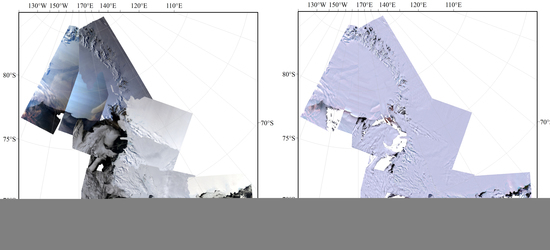

2.1. Study Dataset

2.2. Data Analysis

2.2.1. Uneven Lighting

2.2.2. Inconsistent Colors

3. Consistency Processing Method

3.1. Color Consistency Framework

3.1.1. Single-View Consistency Process

- Feature map extraction

- Uneven light removal

- Calculation of artificial lighting information

- Information overlay
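The step names above suggest a dodging-style correction, in which a low-frequency lighting estimate is removed from a single view and replaced by a uniform one. The following is a minimal sketch under that assumption only; it is not taken from the article. The function names `box_blur` and `even_out_lighting`, the mean filter used as the lighting estimate, and the `radius` parameter are all hypothetical choices made for illustration.

```python
def box_blur(image, radius):
    """Mean filter whose window is truncated at the image borders.

    Assumption: a simple low-pass filter stands in for the lighting estimate.
    """
    height, width = len(image), len(image[0])
    blurred = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            rows = range(max(0, y - radius), min(height, y + radius + 1))
            cols = range(max(0, x - radius), min(width, x + radius + 1))
            window = [image[j][i] for j in rows for i in cols]
            blurred[y][x] = sum(window) / len(window)
    return blurred


def even_out_lighting(image, radius):
    """Subtract the estimated uneven lighting and add back a uniform level."""
    # Low-frequency background, read here as the "uneven light" component.
    background = box_blur(image, radius)
    # Uniform replacement level (assumed to be the mean of the background).
    pixel_count = len(image) * len(image[0])
    target = sum(sum(row) for row in background) / pixel_count
    # Overlay: remove the background, then add the uniform level.
    return [
        [pixel - light + target for pixel, light in zip(row, light_row)]
        for row, light_row in zip(image, background)
    ]


# Hypothetical toy input: a single band that brightens from left to right.
ramp = [[10.0 * x for x in range(6)] for _ in range(4)]
corrected = even_out_lighting(ramp, radius=1)
```

On this toy ramp the brightness gradient is flattened in the interior columns, while the border columns keep a small residual because the filter window is truncated there.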

3.1.2. Multi-View Consistency Process

- Conversion to color space

- Feature calculation

- Feature normalization

- Results visualization
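The feature calculation and normalization steps listed above can be read as statistics matching between views: per-channel statistics are computed for each image, and one image is rescaled toward a reference. The following is a minimal sketch under that assumption, using the mean and standard deviation as the features; it is not the article's implementation. The color-space conversion is omitted because the outline does not name the space, and the names `channel_stats`, `match_channel`, and `match_image` are hypothetical.

```python
from statistics import fmean, pstdev


def channel_stats(channel):
    """Return (mean, population standard deviation) of a flat list of pixels."""
    return fmean(channel), pstdev(channel)


def match_channel(source, reference):
    """Shift and scale `source` so its mean and std match `reference`."""
    src_mean, src_std = channel_stats(source)
    ref_mean, ref_std = channel_stats(reference)
    # A constant channel has zero std; leave its contrast unchanged.
    gain = ref_std / src_std if src_std > 0 else 1.0
    return [(value - src_mean) * gain + ref_mean for value in source]


def match_image(source, reference):
    """Match each channel of `source` to the same channel of `reference`.

    Images are lists of channels; each channel is a flat list of pixel values.
    """
    return [match_channel(s, r) for s, r in zip(source, reference)]


# Hypothetical two-channel toy views of the same scene.
dark_view = [[10.0, 20.0, 30.0, 40.0], [5.0, 5.0, 15.0, 15.0]]
bright_view = [[110.0, 130.0, 150.0, 170.0], [50.0, 60.0, 70.0, 80.0]]
adjusted = match_image(dark_view, bright_view)
```

After matching, each channel of `adjusted` has the same mean and standard deviation as the corresponding channel of `bright_view`, which is the sense in which the two views become consistent in this sketch.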

4. Results and Discussion

4.1. Single-View Consistency Evaluation

4.1.1. Subjective Evaluation

4.1.2. Quantitative Evaluation

4.2. Multi-View Color Consistency Evaluation

4.2.1. Subjective Evaluation

4.2.2. Quantitative Evaluation

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, Z.; Zhu, H.; Zhou, C.; Cao, L.; Zhong, Y.; Zeng, T.; Liu, J. A Color Consistency Processing Method for HY-1C Images of Antarctica. Remote Sens. 2020, 12, 1143. https://doi.org/10.3390/rs12071143
