1. Introduction

In the modern world, the internet has become integrated into people’s daily lives. While online information enriches people’s lives, it also increases the probability that children are exposed to violent and gory content. As a result, school bullying is becoming increasingly common. School bullying is a violent event that takes various forms, such as physical violence, verbal bullying, and the destruction of personal property. Physical violence is considered to be the most harmful to teenagers. According to the report “Campus Violence and Bullying” released by UNESCO (United Nations Educational, Scientific and Cultural Organization) in 2017, 32.5% of students worldwide, 243 million in total, suffer from campus bullying. Therefore, school bullying prevention is an urgent but timeless topic.

Studies on school bullying prevention have been conducted since the 1960s. The methods for school bullying prevention used to be human-driven, i.e., when a school bullying event happened, bystanders would report the event to the teachers. However, bystanders might fear retaliation from the bullies and thus often do not report the event. As smartphones have become increasingly popular, antibullying applications have been developed for victims to use. However, these applications are also human-driven. When school bullying happens, the victim must operate the application to send an alarm message, which may enrage the bully and lead to further harm.

As artificial intelligence techniques develop, automatic methods are being developed for school bullying detection. In our previous work [1, 2, 3, 4], we used movement sensors to detect physical violence. These movement sensors collected 3D acceleration data and 3D gyro data from the user. Then, the algorithms extracted time-domain features and frequency-domain features from the raw data. Feature selection algorithms, such as Relief-F [5] and Wrapper [6], were used to exclude useless features. PCA (Principal Component Analysis) [7] and LDA (Linear Discriminant Analysis) [8] further decreased the feature dimensionality. We developed several classifiers for different features and different activities, such as FMT (Fuzzy Multi-Thresholds) [1], PKNN (Proportional K-Nearest Neighbor) [2], BPNN (Back Propagation Neural Network) [3], and DT–RBF (Decision Tree–Radial Basis Function) [4], ultimately achieving an average accuracy of 93.7%. These algorithms detect physical violence from the perspective of the victims. In case bullies remove the movement sensors from the victims, this paper proposes an alternative school bullying detection method based on campus surveillance cameras.

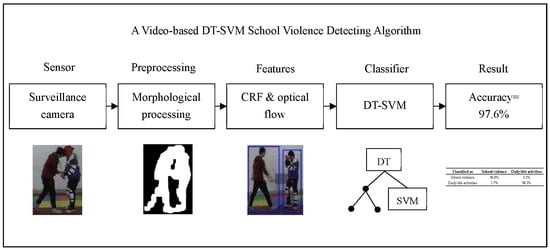

Figure 1 illustrates the structure of this violence detecting system. The surveillance cameras used are conventional security cameras that only capture images of the campus. All the recognition procedures are performed on a computer. Once a violent event has been detected, alarms are sent to the teacher and/or parents by either short messages or other social media.

Figure 2 shows the flow chart of the proposed detection method. There can be several cameras on campus, each of which monitors a certain area. Each camera captures images of its monitored area, and the system first detects whether there are moving targets. If there are moving targets, the KNN (K-Nearest Neighbors) algorithm then extracts the foreground targets. We use morphological processing methods to preprocess the detected targets and propose a circumscribed rectangular frame integrating method to optimize the detected moving target. According to the differences between physical violence and daily-life activities, we extract circumscribed rectangular frame features, such as aspect ratio, and optical-flow features, and then reduce the feature dimensionality with Relief-F and Wrapper. Using box plots, we determined that some features can accurately distinguish physical violence from daily-life activities, so we designed a DT–SVM two-layer classifier. The first layer is a Decision Tree that takes advantage of such features, and the second layer is an SVM that uses the remaining features for classification. According to the simulation results, the accuracy reaches 97.6%, and the precision reaches 97.2%.
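The two-layer idea described above can be sketched as a simple cascade. This is a minimal illustration under stated assumptions, not the classifier built in Section 5: the rule format, the feature name `max_area_variation`, the threshold `5000.0`, and the `svm_stub` function are all hypothetical, and the second layer is represented by an arbitrary callable standing in for a trained SVM.

```python
from typing import Callable, Dict, List, Optional, Tuple

Features = Dict[str, float]
# One rule: (feature name, threshold, label returned when feature > threshold).
Rule = Tuple[str, float, str]


def first_layer(features: Features, rules: List[Rule]) -> Optional[str]:
    """Threshold rules standing in for the Decision Tree layer.

    Returns a label when a rule fires, or None to defer to the second layer.
    """
    for name, threshold, label in rules:
        if features[name] > threshold:
            return label
    return None


def classify(
    features: Features,
    rules: List[Rule],
    second_layer: Callable[[Features], str],
) -> str:
    """Use the first-layer decision if there is one, otherwise ask the second layer."""
    label = first_layer(features, rules)
    return label if label is not None else second_layer(features)


# Hypothetical rule and threshold, for illustration only.
example_rules: List[Rule] = [("max_area_variation", 5000.0, "violence")]


def svm_stub(features: Features) -> str:
    """Placeholder for a trained SVM's prediction on the remaining features."""
    return "daily-life"


print(classify({"max_area_variation": 8000.0}, example_rules, svm_stub))
print(classify({"max_area_variation": 10.0}, example_rules, svm_stub))
```

The appeal of such a cascade is that samples with a clearly separating feature are decided by a cheap threshold test, so the SVM only has to handle the ambiguous remainder.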

The remainder of this paper is organized as follows: Section 2 explores some related works on video-based activity recognition, Section 3 shows the procedures for data gathering and data preprocessing, Section 4 describes the feature extraction and feature selection methods, Section 5 constructs the 2-layer DT–SVM classifier, Section 6 analyzes the simulation results, and Section 7 presents the conclusions.

2. Video-Based Activity Recognition

Activity recognition is a popular topic in the areas of artificial intelligence [9, 10] and smart cities [11, 12]. Most related research focuses on daily-life activity or sports recognition. Sun et al. [13] studied action recognition based on the kinematic representation of video data. The authors proposed a kinematic descriptor named Static and Dynamic feature Velocity (SDEV), which models the changes of both static and dynamic information with time for action recognition. They tested their algorithm on some sport activities and achieved an average accuracy of 89.47% for UCF (University of Central Florida) sports and 87.82% for Olympic sports. Wang et al. [14] studied action recognition using nonnegative action component representation and sparse basis selection. The authors proposed a context-aware spatial temporal descriptor, action learning units using the graph regularized nonnegative matrix factorization, and a sparse model. For the UCF sports dataset, they achieved an average accuracy of 88.7%. Tu et al. [15] studied action-stage emphasized spatiotemporal VLAD for video action recognition. The authors proposed an action-stage emphasized spatiotemporal vector of locally aggregated descriptors (Actions-ST-VLAD) method to aggregate informative deep features across the entire video according to adaptive video feature segmentation and adaptive segment feature sampling (AVFS-ASFS). Furthermore, they exploited an RGBF modality to capture motion salient regions in the RGB images corresponding to action activities. They achieved an average accuracy of 97.9% for the UCF101 dataset, 80.9% for the HMDB51 (human motion database) dataset, and 90.0% for ActivityNet.

In recent years, researchers have begun to pay attention to violence detection. A.S. Keçeli et al. [16] studied violent activity detection with the transfer learning method. The authors designed a transfer learning-based violence detector and tested it on three datasets. For violent-flow (videos downloaded from YouTube) they achieved an average accuracy of 80.90%, for hockey they achieved 94.40%, and for movies they achieved 96.50%. Ha et al. [17] studied violence detection for video surveillance systems using irregular motion information. The authors estimated the motion vector using the Combined Local-Global approach with Total Variation (CLG-TV) after target detection, and detected violent events by analyzing the characteristics of the motion vectors generated in the region of the object by using the Motion Co-occurrence Feature (MCF). They used the CAVIAR database but did not give a numerical result for the average accuracy. Tahereh Zarrat Ehsan et al. [18] studied violence detection in indoor surveillance cameras using motion trajectory and a differential histogram of the optical flow. They extracted the motion trajectory and spatiotemporal features and then used an SVM for classification. They also used the CAVIAR dataset and achieved an average accuracy of 91%.

This paper studies the detection of school violence, a kind of physical violence that occurs on campus. The following sections describe the dataset used and the proposed algorithms in detail.

6. Experimental Results

In this experiment, we captured a total of 24,896 frames of activities, including 12,448 school violence frames, 9963 daily-life activity frames, and 2485 static frames. After feature selection, 10 features were used for SVM classification: maximum width, maximum width variation, maximum height, maximum height variation, maximum area, maximum area variation, maximum aspect ratio, the maximum centroid distance variation of circumscribed rectangular frames, the sum of the areas of the detected targets, and the state of the detected targets. These features and their corresponding thresholds used for DT are given in Section 5.2.
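As a rough illustration of how several of the rectangle-based features listed above could be computed, the following sketch takes one circumscribed rectangle per frame and derives maxima and frame-to-frame variations. It rests on assumptions not stated in this section: that "maximum" is taken over the frames in a window, that "variation" means the absolute change between consecutive frames, and that aspect ratio is width divided by height. The function and key names are hypothetical.

```python
from typing import Dict, List, Tuple

# Circumscribed rectangle of the detected target in one frame: (x, y, width, height).
Box = Tuple[float, float, float, float]


def max_variation(values: List[float]) -> float:
    """Largest absolute change between consecutive frames (assumed definition)."""
    return max((abs(b - a) for a, b in zip(values, values[1:])), default=0.0)


def rectangle_features(boxes: List[Box]) -> Dict[str, float]:
    """Compute window-level features from a sequence of per-frame rectangles."""
    if not boxes:
        raise ValueError("at least one rectangle is required")
    widths = [w for _, _, w, _ in boxes]
    heights = [h for _, _, _, h in boxes]
    areas = [w * h for w, h in zip(widths, heights)]
    # Aspect ratio assumed to be width / height; zero-height boxes are skipped.
    ratios = [w / h for w, h in zip(widths, heights) if h > 0]
    return {
        "max_width": max(widths),
        "max_width_variation": max_variation(widths),
        "max_height": max(heights),
        "max_height_variation": max_variation(heights),
        "max_area": max(areas),
        "max_area_variation": max_variation(areas),
        "max_aspect_ratio": max(ratios, default=0.0),
    }


window = [(0, 0, 10, 20), (0, 0, 14, 20), (0, 0, 12, 30)]
features = rectangle_features(window)
print(features)
```

The remaining features in the list (centroid distance variation, sum of areas, and target state) depend on definitions given in other sections and are left out of this sketch.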

We define school violence as positive and daily-life activity as negative. Thus, TP (True Positive) means that school violence is recognized as school violence, FP (False Positive) means that daily-life activity is recognized as school violence (also known as a false alarm), TN (True Negative) means that daily-life activity is recognized as daily-life activity, and FN (False Negative) means that school violence is recognized as daily-life activity (also known as a missed alarm). Four metrics are used to evaluate the classification performance: accuracy, precision, recall, and F1-Score.
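Assuming the standard definitions are the ones intended, the four metrics (accuracy, precision, recall, and F1-Score) can be written in terms of TP, FP, TN, and FN as:

$$
\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad
\text{Precision} = \frac{TP}{TP + FP},
$$

$$
\text{Recall} = \frac{TP}{TP + FN}, \qquad
\text{F1-Score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}.
$$

With school violence as the positive class, precision falls as false alarms increase, and recall falls as missed alarms increase.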

First, only the SVM was used for classification, with RBF as the kernel function and five-fold cross validation. Table 6 shows the confusion matrix of the SVM classification: accuracy = 89.6%, precision = 94.4%, recall = 81.5%, and F1-Score = 87.5%.

Then, we used the DT–SVM for classification. Table 7 shows the confusion matrix of the DT–SVM classification: accuracy = 97.6%, precision = 97.2%, recall = 98.3%, and F1-Score = 97.8%. It can be seen that the designed Decision Tree significantly improves the recall, i.e., the proportion of school violence that is correctly recognized, from 81.5% to 98.3%. The proposed school violence detecting algorithm also reports a higher accuracy than existing violence detecting algorithms (e.g., 96.50% in [16] and 91% in [18]), although those results were obtained on different datasets.
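As a quick consistency check on the reported figures, the F1-Score can be recomputed from the reported precision and recall, assuming the standard harmonic-mean definition. The function name `f1_score` is only illustrative, and the inputs are the rounded percentages quoted above rather than the underlying confusion-matrix counts.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (standard F1 definition)."""
    return 2 * precision * recall / (precision + recall)


# Rounded values reported for the SVM alone and for the DT-SVM.
svm_f1 = f1_score(0.944, 0.815)
dt_svm_f1 = f1_score(0.972, 0.983)

print(f"SVM F1    = {svm_f1:.3f}")
print(f"DT-SVM F1 = {dt_svm_f1:.3f}")
```

The SVM value comes out at about 0.875, matching the reported 87.5%. The DT–SVM value comes out at about 0.977, which agrees with the reported 97.8% to within the rounding of the inputs.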

7. Discussions and Conclusions

School violence is a common social problem that does harm to teenagers. Fortunately, there are now several methods that can detect school violence, such as methods that use movement sensors and those that use cameras. We used movement sensors to detect school violence in our previous work, but in this paper, we chose a camera-based method to cover the case in which the movement sensors are removed by the bullies. The camera was used to take pictures of the monitoring area and detect moving targets. We proposed a circumscribed rectangular frame integrating method to optimize the detected foreground target. Then, the rectangular frame features and optical-flow features were extracted to describe the differences between school violence and daily-life activities. Relief-F and Wrapper were used to reduce feature dimensionality, and a DT–SVM classifier was built for classification. The accuracy reached 97.6%, and the precision reached 97.2%. By analyzing the simulation results, we determined that images with large changes in light and shadow and violent actions with small amplitudes are easily misclassified. This work shows promise for campus violence monitoring. In future work, we intend to include more complex activities, such as group bullying/fighting, and more complex scenes with both nearby and distant objects, and to improve the recognition performance on the misclassified samples.