Abstract

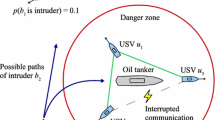

The advancement of hardware and software technology makes multiple cooperative unmanned surface vehicles (USVs) utilized in maritime escorting with low cost and high efficiency. USVs can work as edge computing devices to locally and cooperatively perform heavy computational tasks without dependence of remote cloud servers. As such, we organize a team of USVs to escort a high value ship (e.g., mother ship) in a complex maritime environment with hostile intruder ships, where the significant challenge is to learn cooperation of USVs and assign each USV tasks to achieve optimal performance. To this end, in this paper, a hierarchical scheme is proposed for the USV team to guard a valuable ship, which belongs to problems of sparse rewards and long-time horizons in multi-agent reinforcement learning. The core idea utilized in the proposed scheme is centralized training with decentralized execution, where USVs learn policies to guard a high-value ship with extra shared environmental data from other USVs through communication. Specifically, the ships are divided into two groups, i.e., high-level ship and low-level USVs. The high-level ship optimizes decision-level policy to predict intercept points, while each low-level USV utilizes multi-agent reinforcement learning to learn task-level policy to intercept intruders. The hierarchical task guidance is exploited in maritime escorting, whereby high-level ship’s decision-level policy guides the training and execution of task-level policies of USVs. Simulation results and experiment analysis show that the proposed hierarchical scheme can efficiently execute the escort mission.

Similar content being viewed by others

References

Liu Z, Zhang Y, Yu X, Yuan C (2016) Unmanned surface vehicles: an overview of developments and challenges. Annual Reviews in Control 41:71

Meng W China’s first use of unmanned boats for comprehensive geological survey of coastal zones, Xinhua, October 23, 2017. Accessed October 23, 2017, http://www.xinhuanet.com/tech/2017-10/23/c_1121844724.htm

Xu QC, Su Z, Yang Q (2019) Blockchain-based trustworthy edge caching scheme for mobile cyber physical system IEEE Internet of Things Journal. https://doi.org/10.1109/JIOT.2019.2951007

Xu QC, Su Z, Dai MH, Yu S (2019) APIS: privacy-preserving incentive for sensing task allocation in cloud and edge-cooperation mobile internet of things with SDN. IEEE Internet of Things Journal. https://doi.org/10.1109/JIOT.2019.2954380

Li JL, Xing R, Su Z, Zhang N, Hui YL, Luan TH, Shan HG (2019) Trust based secure content delivery in vehicular networks: a bargaining game approach. IEEE Trans Veh Technol. https://doi.org/10.1109/TVT.2020.2964685

Mnih V, Kavukcuoglu K, Silver D, Rusu AA, Veness J, Bellemare MG, Graves A, Riedmiller M, Fidjeland AK, Ostrovski G, et al (2015) Human-level control through deep reinforcement learning. Nature 518(7540):529

Peng P, Yuan Q, Wen Y, Yang Y, Tang Z, Long H, Wang J (2017) Multiagent bidirectionally-coordinated nets for learning to play starcraft combat games. arXiv:1703.10069 2

Silver D, Huang A, Maddison C J, Guez A, Sifre L, Van Den Driessche G, Schrittwieser J, Antonoglou I, Panneershelvam V, Lanctot M, et al. (2016) Mastering the game of Go with deep neural networks and tree search. Nature 529(7587):484

Xu Z, Wang Y, Tang J, Wang J, Gursoy MC (2017) A deep reinforcement learning based framework for power-efficient resource allocation in cloud RANs. In: 2017 IEEE international conference on communications (ICC). IEEE, pp 1–6

Isele D, Rahimi R, Cosgun A, Subramanian K, Fujimura K (2018) Navigating occluded intersections with autonomous vehicles using deep reinforcement learning. In: 2018 IEEE international conference on robotics and automation (ICRA). IEEE, pp 2034–2039

Cheng Y, Zhang W (2018) Concise deep reinforcement learning obstacle avoidance for underactuated unmanned marine vessels. Neurocomputing 272:63

Zhang R, Tang P, Su Y, Li X, Yang G, Shi C (2014) An adaptive obstacle avoidance algorithm for unmanned surface vehicle in complicated marine environments. IEEE/CAA Journal of Automatica Sinica 1(4):385

Russell SJ, Norvig P (2016) Artificial intelligence: A modern approach. Pearson Education Limited, Malaysia

Wu Y, Ni K, Zhang C, Qian LP, Tsang DH (2018) NOMA-assisted multi-access mobile edge computing: a joint optimization of computation offloading and time allocation. IEEE Trans Veh Technol 67(12):12244–12258

Duarte MAF (2016) Engineering evolutionary control for real-world robotic systems. Ph.D. thesis, ISCTE-Instituto Universitario de Lisboa (Portugal)

Qin Z, Lin Z, Yang D, Li P (2017) A task-based hierarchical control strategy for autonomous motion of an unmanned surface vehicle swarm. Applied Ocean Research 65:251

Simetti E, Turetta A, Casalino G, Storti E, Cresta M (2010) Protecting assets within a civilian harbour through the use of a team of usvs: Interception of possible menaces. In: IARP workshop on robots for risky interventions and environmental surveillance-maintenance (RISE’10), Sheffield, UK

Raboin E, Švec P, Nau DS, Gupta SK (2015) Model-predictive asset guarding by team of autonomous surface vehicles in environment with civilian boats. Autonomous Robots 38(3):261

Savkin AV, Marzoughi A (2017) Distributed control of a robotic network for protection of a region from intruders. In: 2017 IEEE international conference on robotics and biomimetics (ROBIO). IEEE, pp 804–808

Fang F, Jiang AX, Tambe M (2013) Designing optimal patrol strategy for protecting moving targets with multiple mobile resources. In: International workshop on optimisation in multi-agent systems (OPTMAS)

Foerster JN, Farquhar G, Afouras T, Nardelli N, Whiteson S (2018) Counterfactual multi-agent policy gradients. In: Thirty-second AAAI conference on artificial intelligence

Lowe R, Wu Y, Tamar A, Harb J, Abbeel OP, Mordatch I (2017) Multi-agent actor-critic for mixed cooperative-competitive environments. In: Advances in neural information processing systems, pp 6379–6390

Oliehoek FA, Spaan MT, Vlassis N (2008) Optimal and approximate Q-value functions for decentralized POMDPs. J Artif Intell Res 32:289

Nantogma S, Ran W, Yang X, Xiaoqin H (2019) Behavior-based genetic fuzzy control system for multiple USVs cooperative target protection. In: 2019 3rd international symposium on autonomous systems (ISAS). IEEE, pp 181–186

Kuyer L, Whiteson S, Bakker B, Vlassis N (2008) Multiagent reinforcement learning for urban traffic control using coordination graphs. In: Joint European conference on machine learning and knowledge discovery in databases. Springer, pp 656–671

Bakker B, Whiteson S, Kester L, Groen FC (2010) Traffic light control by multiagent reinforcement learning systems. In: Interactive collaborative information systems. Springer, pp 475–510

Wiering M (2000) Multi-agent reinforcement learning for traffic light control. In: Machine learning: Proceedings of the seventeenth international conference (ICML’2000), pp 1151–1158

Mason K, Mannion P, Duggan J, Howley E (2016) Applying multi-agent reinforcement learning to watershed management. In: Proceedings of the adaptive and learning agents workshop (at AAMAS 2016)

Buṡoniu L, Babuška R, De Schutter B (2010) Multi-agent reinforcement learning: An overview. In: Innovations in multi-agent systems and applications-1. Springer, pp 183–221

Palmer G, Tuyls K, Bloembergen D, Savani R (2018) Lenient multi-agent deep reinforcement learning. In: Proceedings of the 17th international conference on autonomous agents and multiagent systems (International foundation for autonomous agents and multiagent systems), pp 443–451

Sukhbaatar S, Fergus R, et al (2016) Learning multiagent communication with backpropagation. In: Advances in neural information processing systems, pp 2244–2252

Yang Y, Luo R, Li M, Zhou M, Zhang W, Wang J (2018) Mean field multi-agent reinforcement learning. arXiv:1802.05438

Omidshafiei S, Pazis J, Amato C, How JP, Vian J (2017) Deep decentralized multi-task multi-agent reinforcement learning under partial observability. In: Proceedings of the 34th international conference on machine learning-volume 70. JMLR. org, pp 2681–2690

Bishop G, Welch G, et al (2001) An introduction to the kalman filter. Proc of SIGGRAPH, Course 8 (27599–23175):41

Sutton RS, Barto AG (2018) Reinforcement learning: An introduction. MIT press, Cambridge

Lu X (2012) Multi-agent reinforcement learning in games. Ph.D. thesis, Carleton University

Mordatch I, Abbeel P (2018) Emergence of grounded compositional language in multi-agent populations. In: Thirty-scond AAAI conference on artificial intelligence

Acknowledgements

Research supported by National Natural Science Foundation of China (grant no 61625304)and Project of Shanghai Municipal Science and Technology Commission (grant no 17DZ1205000)

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This article is part of the Topical Collection: Special Issue on Emerging Trends on Data Analytics at the Network Edge

Guest Editors: Deyu Zhang, Geyong Min, and Mianxiong Dong

Rights and permissions

About this article

Cite this article

Xie, J., Luo, J., Peng, Y. et al. Data driven hybrid edge computing-based hierarchical task guidance for efficient maritime escorting with multiple unmanned surface vehicles. Peer-to-Peer Netw. Appl. 13, 1788–1798 (2020). https://doi.org/10.1007/s12083-019-00857-6

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12083-019-00857-6