Abstract

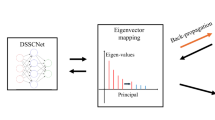

Robustness to outliers, noise, and corruption has received increasing attention as a way to improve linear feature extraction and image classification. Low-rank representation (LRR), one of the most effective subspace learning methods, improves robustness by exploiting the global representative structure among samples. However, traditional LRR cannot use supervised information to project the training samples into a low-dimensional subspace. In this paper, we therefore integrate LRR with supervised dimensionality reduction to obtain an optimal low-rank subspace and a discriminative projection simultaneously. To this end, we propose a novel model named Discriminative Low-Rank Projection (DLRP). DLRP also overcomes the small-class problem, in which the number of projections is bounded by the number of classes. The model is solved by alternating between the linearized alternating direction method with adaptive penalty (LADMAP) and the singular value decomposition (SVD). We also analyze the differences between DLRP and previous related models. Extensive experiments on various contaminated databases confirm the superiority of the proposed method.
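The abstract names the singular value decomposition as one ingredient of the solver. As a minimal sketch, and not the DLRP algorithm itself, the block below shows singular value thresholding, the standard SVD-based step that low-rank solvers commonly use to handle a nuclear-norm term. The function name `singular_value_threshold`, the threshold `tau`, and the toy data are assumptions chosen for illustration; none of them come from the paper.

```python
import numpy as np


def singular_value_threshold(M, tau):
    """Shrink every singular value of M by tau and clip at zero.

    This is the proximal operator of tau * (nuclear norm), a generic
    building block of low-rank solvers. It is an illustration only and
    is not claimed to be the update rule used by DLRP.
    """
    U, s, Vt = np.linalg.svd(M, full_matrices=False)
    s_shrunk = np.maximum(s - tau, 0.0)
    # Scale the columns of U by the shrunken singular values, then recombine.
    return (U * s_shrunk) @ Vt


# Hypothetical toy data: a rank-2 matrix plus small dense noise.
rng = np.random.default_rng(0)
clean = rng.standard_normal((20, 2)) @ rng.standard_normal((2, 15))
noisy = clean + 0.01 * rng.standard_normal((20, 15))

# Thresholding suppresses the small singular values introduced by the noise.
denoised = singular_value_threshold(noisy, tau=0.5)
```

Larger values of `tau` remove more singular values and so yield a lower-rank output; in a full solver this threshold would typically be tied to the penalty parameter of the current iteration.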

Acknowledgements

This work was supported by the Natural Science Foundation of China (Grant Nos. 61976145, 61802267, 61732011), in part by the Shenzhen Municipal Science and Technology Innovation Council (Grant Nos. JCYJ20180305124834854, JCYJ20160429182058044), and in part by the Natural Science Foundation of Guangdong Province (Grant No. 2017A030313367).

Cite this article

Lai, Z., Bao, J., Kong, H. et al. Discriminative low-rank projection for robust subspace learning. Int. J. Mach. Learn. & Cyber. 11, 2247–2260 (2020). https://doi.org/10.1007/s13042-020-01113-7

DOI: https://doi.org/10.1007/s13042-020-01113-7