Abstract

We study measures \(\mu \) on the plane with two independent Alberti representations. It is known, due to Alberti, Csörnyei, and Preiss, that such measures are absolutely continuous with respect to Lebesgue measure. The purpose of this paper is to quantify the result of A–C–P. Assuming that the representations of \(\mu \) are bounded from above, in a natural way to be defined in the introduction, we prove that \(\mu \in L^{2}\). If the representations are also bounded from below, we show that \(\mu \) satisfies a reverse Hölder inequality with exponent 2, and is consequently in \(L^{2 + \epsilon }\) by Gehring’s lemma. A substantial part of the paper is also devoted to showing that both results stated above are optimal.

1 Introduction

Before stating any results, we need to define a few key concepts.

Definition 1.1

(Cones and \({\mathcal {C}}\)-graphs) A cone stands for a subset of \({\mathbb {R}}^{d}\) of the form

where \(e \in S^{d - 1}\) and \(0 < \theta \le 1\). Given a cone \({\mathcal {C}}\subset {\mathbb {R}}^{d}\), a \({\mathcal {C}}\)-graph is any set \(\gamma \subset {\mathbb {R}}^{d}\) such that

A \({\mathcal {C}}\)-graph \(\gamma \) is called maximal if the orthogonal projection \(\pi _{e} :\gamma \rightarrow {\text {span}}(e)\) is surjective. The family of all maximal \({\mathcal {C}}\)-graphs is denoted by \(\Gamma _{{\mathcal {C}}}\). We record here that if \(\gamma \) is a \({\mathcal {C}}(e,\theta )\)-graph with \(\theta > 0\), then the orthogonal projection \(\pi _{e} :\gamma \rightarrow {\text {span}}(e)\) is a bilipschitz map. Also, if \(\gamma \) is maximal, then \({\mathcal {H}}^{1}|_{\gamma }\) is a 1-regular measure on \(\gamma \). In other words, there exist constants \(0< c \le C < \infty \) such that \(cr \le {\mathcal {H}}^{1}(\gamma \cap B(x,r)) \le Cr\) for all \(x \in \gamma \) and \(r > 0\).

We say that two cones \({\mathcal {C}}_{1},{\mathcal {C}}_{2}\) are independent if they are angularly separated as follows:

Definition 1.3

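(Alberti representations) Let \({\mathcal {C}}\subset {\mathbb {R}}^{d}\) be a cone. Let \((\Omega ,\Sigma ,{\mathbb {P}})\) be a measure space with \({\mathbb {P}}(\Omega ) < \infty \), and let \(\gamma :\Omega \rightarrow \Gamma _{{\mathcal {C}}}\) be a map such that

\[ \omega \mapsto {\mathcal {H}}^{1}(\gamma (\omega ) \cap B) \text { is } \Sigma \text {-measurable} \tag{1.4} \]

for all Borel sets \(B \subset {\mathbb {R}}^{d}\). Then, the formula

\[ \nu (B) = \nu _{(\Omega ,{\mathbb {P}},\gamma )}(B) := \int _{\Omega } {\mathcal {H}}^{1}(\gamma (\omega ) \cap B) \, d{\mathbb {P}}(\omega ) \tag{1.5} \]
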
makes sense for all Borel sets \(B \subset {\mathbb {R}}^{d}\), and evidently \(\nu (K) < \infty \) for all compact sets \(K \subset {\mathbb {R}}^{d}\). We extend the definition to all sets \(A \subset {\mathbb {R}}^{d}\) via the usual procedure of setting first \(\nu ^{*}(A) := \inf \{\nu (B) : A \subset B \text { Borel}\}\). This process yields a Radon measure \(\nu ^{*}\) which agrees with \(\nu \) on Borel sets. In the sequel, we just write \(\nu \) in place of \(\nu ^{*}\).

If \(\mu \) is another Radon measure on \({\mathbb {R}}^{d}\), we say that \(\mu \) is representable by \({\mathcal {C}}\)-graphs if there is a triple \((\Omega ,{\mathbb {P}},\gamma )\) as above such that \(\mu \ll \nu _{(\Omega ,{\mathbb {P}},\gamma )} =: \nu \). In this case, the quadruple \((\Omega ,{\mathbb {P}},\gamma ,\tfrac{d\mu }{d\nu })\) is an Alberti representation of \(\mu \) by \({\mathcal {C}}\)-graphs. The representation is

bounded above (BoA) if \(\tfrac{d\mu }{d\nu } \in L^{\infty }(\nu )\),

bounded below (BoB) if \((\tfrac{d\mu }{d\nu })^{-1} \in L^{\infty }(\nu )\).

We also consider local versions of these properties: the representation is BoA (resp. BoB) on a Borel set \(B \subset {\mathbb {R}}^{2}\) if \(\tfrac{d\mu }{d\nu } \in L^{\infty }(B,\nu )\) (resp. \((\tfrac{d\mu }{d\nu })^{-1} \in L^{\infty }(B,\nu )\)). Two Alberti representations of \(\mu \) by \({\mathcal {C}}_{1}\)- and \({\mathcal {C}}_{2}\)-graphs are independent if the cones \({\mathcal {C}}_{1},{\mathcal {C}}_{2}\) are independent in the sense of (1.2).

Representations of this kind first appeared in Alberti’s paper [1] on the rank-1 theorem for BV-functions. It has been known for some time that planar measures with two independent Alberti representations are absolutely continuous with respect to Lebesgue measure; this fact is due to Alberti, Csörnyei, and Preiss, see [2, Proposition 8.6], but a closely related result is already contained in Alberti’s original work, see [1, Lemma 3.3]. The argument in [2] is based on a decomposition result for null sets in the plane, [2, Theorem 3.1]. Inspecting the proof, the following statement can be easily deduced: if \(\mu \) is a planar measure with two independent BoA representations, then \(\mu \in L^{2,\infty }\). The proof of [1, Lemma 3.3], however, seems to point towards \(\mu \in L^{2}\), and the first statement of Theorem 1.6 below asserts that this is the case. Our argument is short and very elementary, see Sect. 2.1. The main work in the present paper concerns measures with two independent representations which are both BoA and BoB. In this case, Theorem 1.6 asserts an \(\epsilon \)-improvement over the \(L^{2}\)-integrability.

Theorem 1.6

Let \(\mu \) be a Radon measure on \({\mathbb {R}}^{2}\) with two independent Alberti representations. If both representations are BoA, then \(\mu \in L^{2}({\mathbb {R}}^{2})\). If both representations are BoA and BoB on B(2), then there exists a constant \(C \ge 1\) such that \(\mu \) satisfies the reverse Hölder inequality

As a consequence, \(\mu \in L^{2 + \epsilon }(B(\tfrac{1}{2}))\) for some \(\epsilon > 0\).

The final conclusion follows easily from Gehring’s lemma, see [5, Lemma 2].

1.1 Sharpness of the main theorem

We now discuss the sharpness of Theorem 1.6. For illustrative purposes, we make one more definition. Let \(\mu \) be a Radon measure on \({\mathbb {R}}^{2}\). We say that \((\Omega ,{\mathbb {P}},\gamma ,\tfrac{d\mu }{d\nu })\) is an axis-parallel representation of \(\mu \) if \(\Omega = {\mathbb {R}}\), and \(\gamma :\Omega \rightarrow {\mathcal {P}}({\mathbb {R}}^{2})\) is one of the two maps \(\gamma _{x}(\omega ) = \{\omega \} \times {\mathbb {R}}\) or \(\gamma _{y}(\omega ) = {\mathbb {R}}\times \{\omega \}\). Note that two axis-parallel representations \(({\mathbb {R}},{\mathbb {P}}_{1},\gamma _{1},\tfrac{d\mu }{d\nu _{1}})\) and \(({\mathbb {R}},{\mathbb {P}}_{2},\gamma _{2},\tfrac{d\mu }{d\nu _{2}})\) are independent if and only if \(\{\gamma _{1},\gamma _{2}\} = \{\gamma _{x},\gamma _{y}\}\).

The following example shows that two independent BoA representations, even axis-parallel ones, do not guarantee anything more than \(L^{2}\):

Example 1.8

Fix \(r > 0\) and consider the measure \(\mu _{r} = \tfrac{1}{r} \cdot {\mathbf {1}}_{[0,r]^{2}}\). Note that \(\Vert \mu _{r}\Vert _{L^{p}}^{p} = r^{2 - p}\) for \(p \ge 1\), so \(\mu _{r} \in L^{p}\) uniformly in \(r \in (0,1]\) if and only if \(p \le 2\). On the other hand, consider the probability \({\mathbb {P}}:= \tfrac{1}{r} \cdot {\mathcal {L}}^{1}|_{[0,r]}\) on \(\Omega = {\mathbb {R}}\), and the maps \(\gamma _{1} := \gamma _{x}\) and \(\gamma _{2} := \gamma _{y}\), as above. Writing \(\nu _{j} := \nu _{(\Omega ,{\mathbb {P}},\gamma _{j})}\) for \(j \in \{1,2\}\), it is easy to check that

So, \(\mu _{r}\) has two independent axis-parallel BoA representations with constants uniformly bounded in \(r > 0\). After this, it is not difficult to produce a single measure \(\mu \) with two independent axis-parallel BoA representations which is not in \(L^{p}\) for any \(p > 2\): simply place disjoint copies of \(c_{j}\mu _{r_{j}}\) along the diagonal \(\{(x,y) : x = y\}\), where \(\sum c_{j} = 1\) and \(r_{j} \rightarrow 0\) rapidly.
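The divergence for \(p > 2\) can be checked numerically. The following is a minimal sketch, not part of the original argument: the weights \(c_{j} = 2^{-j}\) and side lengths \(r_{j} = 2^{-j^{2}}\) are illustrative choices (an assumption; the text only asks for \(\sum c_{j} = 1\) and \(r_{j} \rightarrow 0\) rapidly), and the function names are hypothetical. Since the copies have disjoint supports, \(\Vert \mu \Vert _{L^{p}}^{p} = \sum _{j} c_{j}^{p} r_{j}^{2 - p}\).

```python
def lp_norm_pow_block(c: float, r: float, p: float) -> float:
    """Integral of (c * mu_r)^p, where mu_r = (1/r) * indicator of [0, r]^2."""
    # The density equals c / r on a square of area r^2.
    return (c / r) ** p * r ** 2


def partial_sums(p: float, terms: int) -> list[float]:
    """Partial sums of ||mu||_p^p for mu = sum_j c_j * mu_{r_j} (disjoint supports).

    Illustrative choice (an assumption): c_j = 2^-j and r_j = 2^-(j^2).
    Keep `terms` small (about 12) to stay within floating point range.
    """
    total, sums = 0.0, []
    for j in range(1, terms + 1):
        total += lp_norm_pow_block(2.0 ** -j, 2.0 ** -(j * j), p)
        sums.append(total)
    return sums


if __name__ == "__main__":
    print("p = 2.0:", partial_sums(2.0, 12)[-1])
    print("p = 2.5:", partial_sums(2.5, 12)[-1])
```

With these choices the partial sums for \(p = 2\) converge (to \(\sum _{j} 4^{-j} = 1/3\)), while for \(p = 2.5\) the individual terms \(2^{j^{2}/2 - 5j/2}\) already grow without bound.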

The situation where both representations are (locally) both BoA and BoB is more interesting. We start by recording the following simple proposition, which shows that Theorem 1.6 is far from sharp for axis-parallel representations:

Proposition 1.9

Let \(\mu \) be a finite Radon measure on \({\mathbb {R}}^{2}\) with two independent axis-parallel representations, both of which are BoA and BoB on \([0,1)^{2}\). Then there exist constants \(0< c \le C < \infty \), depending only on the BoA and BoB constants, such that \(\mu |_{[0,1)^{2}} = f \, d{\mathcal {L}}^{2}|_{[0,1)^{2}}\), where \(0< c \le f(x) \le C < \infty \) for \({\mathcal {L}}^{2}\) almost every \(x \in [0,1)^{2}\).

We give the easy details in the “Appendix”. In the light of the proposition, the following theorem is perhaps a little surprising:

Theorem 1.10

Let \(0< \alpha < 1\). The measure \(\mu = f\, d{\mathcal {L}}^{2}\), where

has two independent Alberti representations which are both BoA and BoB on B(1).

The representations are, of course, not axis-parallel. For a picture, see Fig. 4. Since

this shows that \(L^{2 + \epsilon }\)-integrability claimed in Theorem 1.6 is sharp.
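For the reader's convenience, here is the polar-coordinate computation behind this kind of claim. It is a sketch under the assumption that the weight in Theorem 1.10 is \(f(x) = |x|^{-\alpha }\), the weight named in Remark 1.12; the symbols \(\rho \) and \(\vartheta \) are introduced here for illustration only.

```latex
\[
\int_{B(1)} f(x)^{p} \, dx
  = \int_{0}^{2\pi} \int_{0}^{1} \rho^{-\alpha p} \, \rho \, d\rho \, d\vartheta
  = 2\pi \int_{0}^{1} \rho^{1 - \alpha p} \, d\rho
  = \begin{cases}
      \dfrac{2\pi}{2 - \alpha p}, & p < 2/\alpha, \\[1ex]
      \infty, & p \ge 2/\alpha.
    \end{cases}
\]
```

Under this assumption \(f \in L^{p}(B(1))\) exactly when \(p < 2/\alpha \), and \(2/\alpha \searrow 2\) as \(\alpha \nearrow 1\), so no fixed exponent above 2 works for every \(\alpha \in (0,1)\).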

Remark 1.12

The localisation in Theorem 1.10 is necessary: for \(0< \alpha < 1\), the weight \(\mu = |x|^{-\alpha } \, dx\) has no BoA representations in the sense of Definition 1.3, where we require that \({\mathbb {P}}(\Omega ) < \infty \). Indeed, let \({\mathcal {C}}= {\mathcal {C}}(e,\theta )\) be an arbitrary cone, and assume that \((\Omega ,{\mathbb {P}},\gamma ,\tfrac{d\mu }{d\nu })\) is an Alberti representation of \(\mu \) by \({\mathcal {C}}\)-graphs. Let \(e' \perp e\), and let T be a strip of width 1 around \({\text {span}}(e')\). Then \(\mu (T) = \infty \). However, \({\mathcal {H}}^{1}(\gamma \cap T) \lesssim _{\theta } 1\) for all \(\gamma \in \Gamma _{{\mathcal {C}}}\), and hence \(\nu (T) \lesssim _{\theta } {\mathbb {P}}(\Omega ) < \infty \). This implies that \(\tfrac{d\mu }{d\nu } \notin L^{\infty }(\nu )\).
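The claim \(\mu (T) = \infty \) can be verified by a one-line estimate. In the sketch below we use coordinates \(s = x \cdot e'\) and \(t = x \cdot e\) (notation introduced here, with \(|e'| = 1\)), and we read "strip of width 1" as \(T = \{x : |x \cdot e| \le \tfrac{1}{2}\}\), which is an assumption about the intended normalisation.

```latex
\[
\mu(T)
  \ge \int_{|s| \ge 1} \int_{|t| \le 1/2} \bigl( s^{2} + t^{2} \bigr)^{-\alpha/2} \, dt \, ds
  \ge \int_{|s| \ge 1} (2|s|)^{-\alpha} \, ds
  = 2^{1 - \alpha} \int_{1}^{\infty} s^{-\alpha} \, ds
  = \infty,
\]
```

where the second inequality uses \(|x| \le |s| + \tfrac{1}{2} \le 2|s|\) on the domain of integration, and the last integral diverges because \(\alpha < 1\).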

Notation 1.13

For \(A,B > 0\), the notation \(A \lesssim B\) will signify that there exists a constant \(C \ge 1\) such that \(A \le CB\). This is typically used in a context where one or both of A, B are functions of some variable “x”: then \(A(x) \lesssim B(x)\) means that \(A(x) \le CB(x)\) for some constant \(C \ge 1\) independent of x. Sometimes it is worth emphasising that the constant C depends on some parameter “p”, and we will signal this by writing \(A \lesssim _{p} B\).

1.2 Higher dimensions, and connections to PDEs

The problems discussed above have natural, but harder, generalisations to higher dimensions. A collection of d cones \({\mathcal {C}}_{1},\ldots ,{\mathcal {C}}_{d} \subset {\mathbb {R}}^{d}\) is called independent if \(|\mathrm {det} (v_{1},\ldots ,v_{d})| \ge \tau > 0\) for any choices \(v_{j} \in {\mathcal {C}}_{j} \cap S^{d - 1}\), \(1 \le j \le d\). With this definition in mind, one can discuss Radon measures on \({\mathbb {R}}^{d}\) with d independent Alberti representations. It follows from the recent breakthrough work of De Philippis and Rindler [4] that such measures are absolutely continuous with respect to Lebesgue measure. It is tempting to ask for more quantitative statements, similar to the ones in Theorem 1.6. Such statements do not appear to follow easily from the strategy in [4].

Question 1

If \(\mu \) is a Radon measure on \({\mathbb {R}}^{d}\) with d independent BoA representations, then is \(\mu \in L^{p}\) for some \(p > 1\)?

In the case of independent axis-parallel representations, \(\mu \in L^{d/(d - 1)}\), see the next paragraph. This is the best exponent, as can be seen by a variant of Example 1.8. In general, we do not know how to prove \(\mu \in L^{p}\) for any \(p > 1\). Some results of this nature will likely follow from work in progress recently announced by Csörnyei and Jones.

Question 1 is closely connected with the analogue of the multilinear Kakeya problem for thin neighbourhoods of \({\mathcal {C}}\)-graphs. A near-optimal result on this variant of the multilinear Kakeya problem is contained in the paper [6] of Guth, see [6, Theorem 7]. We discuss this connection explicitly in [3, Section 5]. It seems that the “\(S^{\epsilon }\)-factor” in [6, Theorem 7] makes it inapplicable to Question 1, and it does not even imply the qualitative absolute continuity of \(\mu \) established in [4]. On the other hand, the analogue of [6, Theorem 7] without the \(S^{\epsilon }\)-factor would imply a positive answer to Question 1 with \(p = d/(d - 1)\), see the proof of [3, Lemma 5.2]. We do not know if this is a plausible strategy, but it certainly works for the axis-parallel case: the analogue of [6, Theorem 7] for neighbourhoods of axis-parallel lines is simply the classical Loomis-Whitney inequality (see [7] or [6, Theorem 3]), where no \(S^{\epsilon }\)-factor appears.
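The classical inequality mentioned at the end of this paragraph has a simple discrete form that can be tested directly. The sketch below checks \(|S|^{2} \le |\pi _{xy}(S)| \cdot |\pi _{yz}(S)| \cdot |\pi _{xz}(S)|\) for finite sets \(S \subset {\mathbb {Z}}^{3}\), which is the case \(d = 3\) of the Loomis-Whitney inequality for counting measure. The function name and the random test sets are illustrative assumptions and are not taken from [6] or [7].

```python
import random


def loomis_whitney_holds(points: set[tuple[int, int, int]]) -> bool:
    """Check |S|^2 <= |P_xy S| * |P_yz S| * |P_xz S| for a finite set S in Z^3."""
    xy = {(x, y) for x, y, z in points}  # projection along the z-axis
    yz = {(y, z) for x, y, z in points}  # projection along the x-axis
    xz = {(x, z) for x, y, z in points}  # projection along the y-axis
    return len(points) ** 2 <= len(xy) * len(yz) * len(xz)


# Random finite subsets of {0,...,5}^3 with a fixed seed, for reproducibility.
rng = random.Random(0)
samples = [
    {
        (rng.randrange(6), rng.randrange(6), rng.randrange(6))
        for _ in range(rng.randrange(1, 80))
    }
    for _ in range(200)
]

# A full cube is an extremal case: 27^2 = 9 * 9 * 9.
cube = {(x, y, z) for x in range(3) for y in range(3) for z in range(3)}

if __name__ == "__main__":
    print(all(loomis_whitney_holds(s) for s in samples), loomis_whitney_holds(cube))
```

Taking square roots gives \(|S| \le (\prod _{j} |\pi _{j}(S)|)^{1/2}\), which is the counting analogue of the exponent \(d/(d - 1) = 3/2\) in the axis-parallel case.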

As mentioned above, the main results in this paper, and Question 1, are related to the recent work of De Philippis and Rindler [4] on \({\mathcal {A}}\)-free measures. Introducing the notation of [4] would be a long detour, but let us briefly explain some connections, assuming familiarity with the terminology of [4].

The qualitative absolute continuity result, mentioned above Question 1, follows from [4, Corollary 1.12] after realising that, for each Alberti representation of \(\mu \), (1.5) may be used to construct a normal 1-current \(T_i = \vec {T_i}\Vert T_i\Vert \) on \({\mathbb {R}}^{d}\), \(1 \le i \le d\), such that \(\mu \ll \Vert T_i\Vert \). The independence of the representations translates into the statement

One may view the d-tuple of normal currents \({\mathbf {T}} = (T_{1},\ldots ,T_{d})\) as an \({\mathbb {R}}^{d \times d}\)-valued measure \({\mathbf {T}}= \vec {{\mathbf {T}}} \Vert {\mathbf {T}}\Vert \), where \(|\vec {{\mathbf {T}}}| \equiv 1\), and \(\Vert {\mathbf {T}}\Vert \) is a finite positive measure. Since each \(T_i\) is normal, \(\mathrm {div}\,{\mathbf {T}}\) is also a finite measure, and this is the key point relating our situation with the work of De Philippis and Rindler. If the Alberti representations of \(\mu \) are BoA, then \(d\mu /d\Vert {\mathbf {T}}\Vert \in L^{\infty }(\Vert {\mathbf {T}}\Vert )\), and \(\mu \in L^{2}({\mathbb {R}}^{2})\) by Theorem 1.6. As far as we know, PDE methods do not yield the same conclusion. However, if in addition the Jacobian of \(\vec {{\mathbf {T}}}\) is uniformly bounded from below \(\Vert {\mathbf {T}}\Vert \) almost everywhere, PDE methods look more promising. We formulate the following question, which is parallel to Question 1:

Question 2

Let \({\mathbf {T}}= \vec {{\mathbf {T}}} \Vert {\mathbf {T}}\Vert \) be a finite \({\mathbb {R}}^{d \times d}\)-valued measure, whose divergence is also a finite (signed) measure such that the Jacobian of \(\vec {{\mathbf {T}}}\) is uniformly bounded from below in absolute value \(\Vert {\mathbf {T}}\Vert \) a.e. Is it true that \(\Vert {\mathbf {T}}\Vert \in L^{p}({\mathbb {R}}^d)\) for some \(p > 1\)?

2 Proof of the main theorem

We prove Theorem 1.6 in two parts, first considering representations which are only BoA, and then representations which are both BoA and BoB at the same time.

2.1 BoA representations

The first part of Theorem 1.6 easily follows from the next, more quantitative, statement:

Theorem 2.1

Assume that \(\mu \) is a Radon measure on \({\mathbb {R}}^{2}\) with two independent BoA representations \((\Omega _{1},{\mathbb {P}}_{1},\gamma _{1},\tfrac{d\mu }{d\nu _{1}})\) and \((\Omega _{2},{\mathbb {P}}_{2},\gamma _{2},\tfrac{d\mu }{d\nu _{2}})\). Then

where the implicit constant only depends on the opening angles \(\theta _{1},\theta _{2}\) and angular separation \(\tau \) of the cones \({\mathcal {C}}_{1} = {\mathcal {C}}(e_{1},\theta _{1})\) and \({\mathcal {C}}_{2} = {\mathcal {C}}(e_{2},\theta _{2})\).

Proof

It suffices to show that the restriction of \(\mu \) to any dyadic square \(Q_{0} \subset {\mathbb {R}}^{2}\) is in \(L^{2}\), with norm bounded (independently of \(Q_{0}\)) as in (2.2). For notational simplicity, we assume that \(Q_{0} = [0,1)^{2}\). Let \({\mathcal {D}}_{n} := {\mathcal {D}}_{n}([0,1)^{2})\), \(n \in {\mathbb {N}}\), be the family of dyadic sub-squares of \([0,1)^{2}\) of side-length \(2^{-n}\). Fix \(n \in {\mathbb {N}}\), pick \(Q \in {\mathcal {D}}_{n}\), and write

Note that \(\{\omega \in \Omega _{j} : {\mathcal {H}}^{1}(\gamma (\omega ) \cap Q) > 0\} \in \Sigma _{j}\) for \(j \in \{1,2\}\) by (1.4), so \(\Omega (Q)\) lies in the \(\sigma \)-algebra generated by \(\Sigma _{1} \times \Sigma _{2}\). We start by showing that

To prove (2.3), it suffices to fix a pair \((\gamma _{1},\gamma _{2}) \in \Gamma _{1} \times \Gamma _{2}\), where \(\Gamma _{j} := \Gamma _{{\mathcal {C}}_{j}}\), and show that there are \(\lesssim _{\tau } 1\) squares \(Q \in {\mathcal {D}}_{n}\) with \(\gamma _{1} \cap Q \ne \varnothing \ne \gamma _{2} \cap Q\). So, fix \((\gamma _{1},\gamma _{2}) \in \Gamma _{1} \times \Gamma _{2}\), and assume that there is at least one square Q such that \(\gamma _{1} \cap Q \ne \varnothing \ne \gamma _{2} \cap Q\), see Fig. 1. To simplify some numerics, assume that \(Q = [0,2^{-n})^{2}\). Pick \(x_{1} \in \gamma _{1} \cap Q\) and \(x_{2} \in \gamma _{2} \cap Q\), and note that

since \(\gamma _{1} \in \Gamma _{{\mathcal {C}}_{1}}\) and \(\gamma _{2} \in \Gamma _{{\mathcal {C}}_{2}}\). It follows that whenever \(Q' \in {\mathcal {D}}_{n}\) is another square with \(\gamma _{1} \cap Q' \ne \varnothing \ne \gamma _{2} \cap Q'\), we can find points

which then satisfy \({\text {dist}}(x_{j}',{\mathcal {C}}_{j}) \lesssim 2^{-n}\) for \(j \in \{1,2\}\), because \(|x_{j}| \lesssim 2^{-n}\). Consequently,

But the independence assumption (1.2) implies that \({\text {dist}}(y,{\mathcal {C}}_{1}) \gtrsim _{\tau } |y|\) or \({\text {dist}}(y,{\mathcal {C}}_{2}) \gtrsim _{\tau } |y|\) for any \(y \in {\mathbb {R}}^{2}\), and in particular for the centre of \(Q'\). Hence, (2.4) shows that \({\text {dist}}(Q',Q) \le {\text {dist}}(Q',0) \lesssim 2^{-n}\), and (2.3) follows.

Now, we can finish the proof of the theorem. Given any square \(Q \in {\mathcal {D}}_{n}\), we note that \({\mathcal {H}}^{1}(Q \cap \gamma ) \lesssim _{\theta _{1},\theta _{2}} 2^{-n}\) for all \(\gamma \in \Gamma _{1} \cup \Gamma _{2}\), whence

Observe that

Denoting the Lebesgue measure of Q by |Q|, and combining (2.5) with (2.3) gives

This inequality shows that the \(L^{2}\)-norms of the measures

are uniformly bounded by the right hand side of (2.2). The proof can then be completed by standard weak convergence arguments. \(\square \)
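The geometric fact behind (2.3), that two graphs from independent cones can meet only boundedly many common dyadic squares at any scale, is easy to observe numerically in the simplest case of two straight lines. The sketch below is an illustration under stated assumptions: the slopes and intercepts are arbitrary sample values, the helper names are hypothetical, and lines are only a special case of the \({\mathcal {C}}\)-graphs in the proof.

```python
import math


def cells_met(slope: float, intercept: float, n: int, steep: bool = False) -> set[tuple[int, int]]:
    """Dyadic cells (column, row) of side 2**-n in [0,1]^2 met by a line.

    steep=False: the line y = slope * x + intercept (a graph over the x-axis).
    steep=True:  the line x = slope * y + intercept (a graph over the y-axis).
    """
    size = 2 ** n
    h = 1.0 / size
    cells = set()
    for i in range(size):  # i indexes the strip [i*h, (i+1)*h] in the free variable
        a = slope * (i * h) + intercept
        b = slope * ((i + 1) * h) + intercept
        lo, hi = min(a, b), max(a, b)
        first = max(0, math.floor(lo / h))
        last = min(size - 1, math.floor(hi / h))
        for j in range(first, last + 1):
            cells.add((j, i) if steep else (i, j))
    return cells


def common_cells(n: int) -> int:
    """Number of dyadic cells of generation n met by both sample lines."""
    flat = cells_met(0.3, 0.2, n)                 # direction close to the x-axis
    steep = cells_met(-0.2, 0.6, n, steep=True)   # direction close to the y-axis
    return len(flat & steep)


counts = [common_cells(n) for n in range(1, 11)]

if __name__ == "__main__":
    print(counts)
```

The number of cells met by each line separately grows like \(2^{n}\), but the number of common cells stays bounded as \(n\) increases, in line with the \(\lesssim _{\tau } 1\) bound used in the proof.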

2.2 Representations which are both BoA and BoB

Before finishing the proof of Theorem 1.6, we need to record a few geometric observations.

Lemma 2.6

Let \({\mathbf {n}} := (0,1)\), \(\tau > 0\), and let \(v = (e_{1},e_{2}) \in S^{1}\) with \(e_{2} \le -\tau < 0\). Then, the following holds for \(\epsilon := \min \{\tau ^{4},10^{-4}\}\):

Proof

Write \(\kappa := \min \{\tau ,10^{-1}\}\), so that \(\epsilon = \kappa ^{4}\) and \(\kappa ^{2} = \sqrt{\epsilon }\). Then, fix \(x \in B({\mathbf {n}},\epsilon )\) and \(t \in (\kappa ^{2},2\kappa ^{2})\). Noting that \({\mathbf {n}} \cdot v = e_{2}\), \(|x|^{2} \le 1 + 3\epsilon \), and \(|x - {\mathbf {n}}| \le \epsilon \), we compute that

because (using first that \(e_{2} < 0\) and \(\kappa ^{2}< t < 2\kappa ^{2}\), and then that \(e_{2} \le -\tau \) and \(\kappa = \min \{\tau ,10^{-1}\}\))

This completes the proof. \(\square \)

In the next corollary, we write

for \(x \in {\mathbb {R}}^{2}\) and \(0< r< R < \infty \). Also, if \({\mathcal {C}}= {\mathcal {C}}(e,\theta ) \subset {\mathbb {R}}^{2}\) is a cone, we write

for the corresponding “one-sided” cones.

Corollary 2.7

Let \({\mathcal {C}}_{1},{\mathcal {C}}_{2} \subset {\mathbb {R}}^{2}\) be two cones with

Then, the following holds for \(\epsilon := \min \{|\tau /100|^{4},10^{-4}\}\), and for any \(x \in {\mathbb {R}}^{2}\), \(r > 0\), and \(n \in \partial B(x,r)\). There exists \(j \in \{1,2\}\) and a sign \(\star \in \{-,+\}\) (depending only on x, n) such that

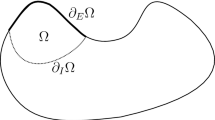

The statement is best illustrated by a picture, see Fig. 2.

The scenario in Corollary 2.7. One of the four half-cones always has large intersection with B(x, r)

Proof of Corollary 2.7

After rescaling, translation, and rotation, we may assume that

Write \(\pi _{y}(x,y) := y\). We start by noting that

for either \(j = 1\) or \(j = 2\). If this were not the case, we could find \(x_{1} \in {\mathcal {C}}_{1} \cap S^{1}\) and \(x_{2} \in {\mathcal {C}}_{2} \cap S^{1}\) such that \(|\mathrm {sin} \, \angle (x_{j},(1,0))| = |\pi _{y}(x_{j})| \le \tau /100\) for \(j \in \{1,2\}\). Then either \(\angle (x_{1},x_{2}) < \tau \) or \(\angle (x_{1},-x_{2}) < \tau \). Both contradict the definition of \(\tau \), given that also \(-x_{2} \in {\mathcal {C}}_{2}\). This proves (2.10).

Fix \(j \in \{1,2\}\) such that (2.10) holds, and write, for \(\star \in \{-,+\}\),

Then \(J_{j}^{+} = -J_{j}^{-}\), and consequently \(\pi _{y}(J_{j}^{+}) = -\pi _{y}(J_{j}^{-})\). It follows from this, (2.10), and the fact that \(\pi _{y}(J_{j}^{\star })\) is an interval, that either \(\pi _{y}(v) < -\tau /100\) for all \(v \in J_{j}^{+}\) or \(\pi _{y}(v) < -\tau /100\) for all \(v \in J_{j}^{-}\). We pick \(\star \in \{-,+\}\) such that this conclusion holds. In other words, the y-coordinate of every point \(v \in {\mathcal {C}}^{\star }_{j} \cap S^{1}\) is \(< -\tau /100\). It follows from the previous lemma, and the choice of \(\epsilon \), that

which is equivalent to (2.8) (recalling (2.9)). \(\square \)

For the rest of the section, we assume that \(\mu \) is a Radon measure on \({\mathbb {R}}^{2}\) with \(\mu (B(1)) > 0\), and that \(\mu \) has two independent Alberti representations which are both BoA and BoB on B(2). Thus, there exists a constant \(C \ge 1\) such that

for all Borel sets \(A \subset B(2)\). By Theorem 2.1, we already know that \(\mu \in L^{2}(B(1))\). We next aim to show that \(B(1) \subset {\text {spt}}\mu \), and \(\mu \) is a doubling weight on B(1) in the following sense:

After this, it will be easy to complete the proof of the reverse Hölder inequality (1.7).

Lemma 2.13

Let \(\mu \) be a measure as above. Then \(\mu \) is doubling on B(1) in the sense of (2.12), where the constants only depend on C from (2.11) and \(\tau \) from (1.2). In particular, \(B(1) \subset {\text {spt}}\mu \).

Remark 2.14

To prove the “in particular” statement, recall that \(\mu (B(1)) > 0\), so \(B(1) \cap {\text {spt}}\mu \ne \varnothing \). Hence, if \({\text {spt}}\mu \subsetneq B(1)\), one could find a ball \(B(x,r) \subset B(1)\) such that \(\mu (B(x,r)) = 0\), but \(\partial B(x,r) \cap {\text {spt}}\mu \ne \varnothing \). This would immediately violate (2.12).

Proof of Lemma 2.13

Let \(0< \epsilon < 1/10\) be the parameter given by Corollary 2.7, applied with the angular separation constant \(\tau > 0\) of the cones \({\mathcal {C}}_{1},{\mathcal {C}}_{2}\). It suffices to argue that

Cover the annulus \(B(x,(1 + \tfrac{\epsilon }{2})r) \, {\setminus } \, B(x,r)\) by a minimal number of balls \(B_{1},\ldots ,B_{N}\) of radius \(\epsilon r\) centred on \(\partial B(x,r)\), and let \(B := B_{i} = B(x_{i},\epsilon r)\) be the ball maximising \(B_{i} \mapsto \mu (B_{i})\). Since \(N \lesssim 1/\epsilon \), we have

and consequently it suffices to show that \(\mu (B(x,r)) \gtrsim _{C,\epsilon } \mu (B)\). Recalling (2.11), and noting that \(B(x,r) \cup B \subset B(2)\), this will follow once we manage to show that

for either \(j = 1\) or \(j = 2\).

For \(y \in {\mathbb {R}}^{2}\), write A(y) for the annulus

Recall the half-cones \({\mathcal {C}}_{j}^{\star }\), \(\star \in \{-,+\}\), defined above Corollary 2.7. By Corollary 2.7, there exist choices of \(j \in \{1,2\}\) and \(\star \in \{-,+\}\), depending only on x and \(x_{i} \in \partial B(x,r)\) (i.e. the centre of B), such that

Consequently,

Define

We observe that if \(\omega \in \Omega _{j}(B)\), then \({\mathcal {H}}^{1}(G \cap \gamma _{j}(\omega )) \sim _{\epsilon } r\). Indeed, if \(\omega \in \Omega _{j}(B)\), then \(\gamma _{j}(\omega )\) contains a point \(y \in B\), and one half of the graph \(\gamma _{j}(\omega )\) is contained in \(y + {\mathcal {C}}_{j}^{\star }\). This half intersects A(y) in length \(\sim _{\epsilon } r\), and the intersection is contained in G by definition. It follows that

Since \(G \subset B(x,r)\), this yields (2.15) and completes the proof. \(\square \)
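The covering step in the proof above uses the bound \(N \lesssim 1/\epsilon \). As a sanity check, the following sketch computes an explicit upper bound for \(N\) by placing equally spaced centres on \(\partial B(x,r)\). This is an illustration only: the equally spaced configuration is an assumption (it gives an admissible cover, not necessarily a minimal one), and the function name is hypothetical.

```python
import math


def covering_number(eps: float) -> int:
    """Number of equally spaced balls of radius eps*r, centred on the circle of
    radius r, that suffice to cover the annulus r <= |y - x| <= (1 + eps/2) * r.
    The answer does not depend on r, by scaling."""
    # Worst case: a point at radius (1 + eps/2) r, halfway in angle between two centres.
    # Law of cosines with angle phi to the nearest centre:
    #   dist^2 / r^2 = (eps/2)^2 + 2 (1 + eps/2) (1 - cos(phi)),
    # and we need dist <= eps * r, i.e. 1 - cos(phi) <= 3 eps^2 / (8 (1 + eps/2)).
    one_minus_cos = 3.0 * eps ** 2 / (8.0 * (1.0 + eps / 2.0))
    delta = 2.0 * math.acos(1.0 - one_minus_cos)  # admissible angular spacing
    return math.ceil(2.0 * math.pi / delta)


if __name__ == "__main__":
    for eps in (0.1, 0.01, 0.001):
        n_balls = covering_number(eps)
        print(eps, n_balls, n_balls * eps)
```

The product \(N \epsilon \) stays bounded as \(\epsilon \rightarrow 0\), which is all that the pigeonhole step \(\mu (B) \ge \tfrac{1}{N} \mu (\bigcup B_{i})\) requires.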

We can now complete the proof of the reverse Hölder inequality (1.7).

Concluding the proof of Theorem 1.6

Fix a ball \(B := B(x,r) \subset B(1)\), and consider the restrictions of the measures \({\mathbb {P}}_{1},{\mathbb {P}}_{2}\) to the sets

Writing \({\mathbb {P}}_{j}^{B} := ({\mathbb {P}}_{j})|_{\Omega _{j}(B)}\), the restriction \(\mu ^{B} := \mu |_{B}\) has two independent Alberti representations \(\{\Omega _{j}(B),{\mathbb {P}}_{j}^{B},\gamma _{j},\tfrac{d\mu ^{B}}{d\nu _{j}}\}\), \(j \in \{1,2\}\). Evidently \(\Vert \mu ^{B}\Vert _{L^{\infty }(\nu _{j})} \le C\) for \(j \in \{1,2\}\), where \(C \ge 1\) is the constant from (2.11), so we may deduce from Theorem 2.1 that

It remains to prove that

since the reverse Hölder inequality (1.7) is equivalent to \(\Vert \mu \Vert _{L^{2}(B(x,r))} \lesssim _{C,\tau } r^{-1} \cdot \mu (B(x,r))\). To see this, we note that

for \(j \in \{1,2\}\), because any \(\gamma \in \Gamma _{{\mathcal {C}}_{j}}\) meeting B satisfies \({\mathcal {H}}^{1}(B(x,\tfrac{3}{2}r) \cap \gamma ) \sim r\). Taking a geometric average over \(j \in \{1,2\}\), this implies (2.16) with \(\mu (B(x,\tfrac{3}{2}r))\) on the right hand side. But since \(B(x,r) \subset B(1)\), Lemma 2.13 yields \(\mu (B(x,\tfrac{3}{2}r)) \lesssim _{C,\tau } \mu (B(x,r))\). This completes the proofs of (2.16) and Theorem 1.6. \(\square \)
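The equivalence between the reverse Hölder inequality and the \(L^{2}\)-bound used in this proof is a matter of normalisation. The display below is a sketch which assumes that (1.7) is stated with averages normalised by \(r^{2}\); normalising by \(|B(x,r)| = \pi r^{2}\) instead only changes the constant.

```latex
\[
\Bigl( \frac{1}{r^{2}} \int_{B(x,r)} \mu(y)^{2} \, dy \Bigr)^{1/2}
  \le \frac{C}{r^{2}} \int_{B(x,r)} \mu(y) \, dy
\quad \Longleftrightarrow \quad
\|\mu\|_{L^{2}(B(x,r))} \le C \, r^{-1} \cdot \mu(B(x,r)).
\]
```

Indeed, the left-hand side of the first inequality is \(r^{-1} \Vert \mu \Vert _{L^{2}(B(x,r))}\) and the right-hand side is \(C r^{-2} \mu (B(x,r))\), so multiplying through by \(r\) gives the second form.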

3 Sharpness of the reverse Hölder exponent

The purpose of this section is to prove Theorem 1.10. The statement is repeated below:

Theorem 3.1

Let \(0< \alpha < 1\). The measure \(\mu = f\, d{\mathcal {L}}^{2}\), where

has two independent Alberti representations which are both BoA and BoB on \([-1,1]^{2}\).

Remark 3.3

It may be worth pointing out that, in the construction below, the BoA and BoB constants stay uniformly bounded for \(\alpha \in (0,1)\). However, the independence constant of the two representations (that is, the constant “\(\tau \)” from (1.2)) tends to zero as \(\alpha \nearrow 1\). In this section, the constants hidden in the “\(\sim \)” and “\(\lesssim \)” notation will not depend on \(\alpha \).

We have replaced B(1) by \([-1,1]^{2}\) for technical convenience; since \(B(1) \subset [-1,1]^{2}\), the result is technically stronger than Theorem 1.10. The two representations will be denoted by \(\{\Omega _{1},{\mathbb {P}}_{1},\gamma _{1},\tfrac{d\mu }{d\nu _{1}}\}\) and \(\{\Omega _{2},{\mathbb {P}}_{2},\gamma _{2},\tfrac{d\mu }{d\nu _{2}}\}\). We will first construct one representation of \(\mu \) restricted to \([0,1]^{2}\), as in Fig. 3, and eventually extend that representation to \([-1,1]^{2}\), as on the left hand side of Fig. 4. We set

and we let \({\mathbb {P}}={\mathbb {P}}_{j} := {\mathcal {H}}^{1}|_{\Omega _{j}}\). The main challenge is of course to construct the graphs \(\gamma _{j}(\omega )\), \(\omega \in \Omega _{j}\). A key feature of f is that \(f(r,t) = f(t,r)\) for \((r,t) \in [-1,1]^{2}\). Hence, as we will argue carefully later, it suffices to construct a single representation by \({\mathcal {C}}\)-graphs, where \({\mathcal {C}}\) is a cone around the y-axis, with opening angle strictly smaller than \(\pi /2\); such a representation is depicted on the left hand side of Fig. 3. We remark that, as the picture suggests, every \({\mathcal {C}}\)-graph associated to the representation can be expressed as a countable union of line segments. The second representation is eventually acquired by rotating the first representation by \(\pi /2\), see the right hand side of Fig. 4.

Now we construct certain graphs \(\gamma (\omega )\) for \(\omega \in \Omega := [0,1] \times \{1\} \subset \Omega _{1}\). The idea is that eventually \(\gamma _{1}(\omega ) \cap [0,1]^{2} = \gamma (\omega )\) for \(\omega \in \Omega \). The graphs \(\gamma (\omega )\) will be constructed so that

The right idea to keep in mind is that the graph \(\gamma (\omega )\) “starts from \(\omega \in \Omega = [0,1] \times \{1\}\), travels downwards, and ends somewhere on \([0,1] \times \{0\}\)”. We will ensure that \([0,1]^{2}\) is foliated by the graphs \(\gamma (\omega )\), \(\omega \in \Omega \).

Start by fixing a point \(p \in \Omega \) whose x-coordinate lies in (1/2, 1), see Fig. 3. The relationship between p and the exponent \(\alpha \) in (3.2) will be specified under (3.6). Let

We can now specify the graphs \(\gamma (\omega )\) with \(\omega \in I_{1}\). Each of them consists of two line segments: the first one connects \(I_{1}\) to \(((\tfrac{1}{2},\tfrac{1}{2}),(1,\tfrac{1}{2})]\), and the second one is vertical, connecting \(((\tfrac{1}{2},\tfrac{1}{2}),(1,\tfrac{1}{2})]\) to \([1/2,1] \times \{0\}\), see Fig. 3. We also require that the graphs \(\gamma (\omega )\) foliate the yellow pentagon \(R_{0}\) in Fig. 3. This description still gives some freedom on how to choose the first segments, but if the choice is made in a natural way, we will find that

for all balls \(B \subset R_{0}\). Here, and in the sequel, \(|\cdot |\) stands for 1-dimensional Hausdorff measure. The implicit constant of course depends on the length of \(I_{1}\) (and hence p, and eventually \(\alpha \)).

We then move our attention to defining the graphs \(\gamma (\omega )\) with \(\omega \in I_{0}\). Look again at Fig. 3 and note the green trapezoidal regions, denoted by \(T_{j}\), \(j \ge 0\). To be precise, \(T_{0}\) is the convex hull of \(I_{0} \cup [(0,\tfrac{1}{2}),(\tfrac{1}{2},\tfrac{1}{2})]\), and

For \(j \ge 0\), we also define

Then \(\Omega ^{j}\) is the bottom edge of the trapezoid \(T_{j - 1}\), and \(I_{0}^{j}\) is the top edge of the trapezoid \(T_{j}\) for \(j \ge 1\). Also,

We point out that \(\Omega ^{0} = \Omega \), \(I_{0}^{0} = I_{0}\) and \(I_{1}^{0} = I_{1}\).

We then construct initial segments of the graphs \(\gamma (\omega )\), \(\omega \in I_{0}\), as follows. Define the map \(\sigma _{0} :I_{0} \rightarrow \Omega ^{1}\) by

\[ \sigma _{0}(x,1) := (\beta x,\tfrac{1}{2}), \]

where \(\beta = \beta (p) \in (\tfrac{1}{2},1)\) is chosen so that \(\sigma _{0}(I_{0}) = \Omega ^{1}\), that is,

\[ \beta \cdot |I_{0}| = \tfrac{1}{2}. \tag{3.6} \]
Note that as p varies in \(((\tfrac{1}{2},1),(1,1))\), the number \(\beta (p)\) takes all values in \((\tfrac{1}{2},1)\). In particular, we may choose \(\beta (p) = 2^{-\alpha }\), where \(\alpha \in (0,1)\) is the exponent in (3.2).

Now, we connect every \(\omega \in I_{0}\) to \(\sigma _{0}(\omega )\) by a line segment, see Fig. 3; this is an initial segment of \(\gamma (\omega )\). We record that if \(I \subset \Omega ^{1}\) is any horizontal segment (or even a Borel set), then

\[ |\{\omega \in I_{0} : \gamma (\omega ) \cap I \ne \varnothing \}| = \beta ^{-1}|I|. \tag{3.7} \]
Now, we have defined the intersections of the curves \(\gamma (\omega )\) with \(T_{0} \cup R_{0}\). In particular, the following set families are well-defined:

The graphs in \(\Gamma (R_{0})\) are already complete in the sense that they connect \(\Omega \) to \([0,1] \times \{0\}\). The graphs in \(\Gamma (T_{0})\) are evidently not complete, and they need to be extended. To do this, we define \(R_{j} := 2^{-j}R_{0}\) for \(j \ge 1\), see Fig. 3, and we define the set families

for \(j \ge 1\). In other words, the sets in \(\Gamma (R_{j})\) are obtained by rescaling the graphs in \(\Gamma (R_{0})\) so they fit inside, and foliate, \(R_{j}\). We note that the sets in \(\Gamma (R_{j})\) connect points in \(I_{1}^{j}\) to \([0,1] \times \{0\}\) for \(j \ge 0\).

Finally, we define the complete graphs \(\gamma (\omega )\), \(\omega \in I_{0}\) as follows. Fix \(\omega \in I_{0}\), and note that \(\gamma _{0} := \gamma (\omega ) \cap T_{0}\) has already been defined, and the intersection \(\gamma _{0} \cap \Omega ^{1}\) contains a single point \(z = \sigma _{0}(\omega )\), which lies in either \(I_{0}^{1}\) or \(I_{1}^{1}\). If \(z \in I_{1}^{1} \subset R_{1}\), then there is a unique set \(\gamma _{1} \in \Gamma (R_{1})\) with \(z \in \gamma _{1}\). Then we define
\[ \gamma (\omega ) := \gamma _{0} \cup \gamma _{1}. \]
In this case \(\gamma (\omega )\) is now a complete graph, and the construction of \(\gamma (\omega )\) terminates. Before proceeding with the case \(z \in I_{0}^{1}\), we pause for a moment to record a useful observation. If \(B \subset R_{1}\) is a ball, consider

Since all the graphs \(\gamma \in \Gamma \) entering \(R_{1}\) can be written as \(\gamma _{0} \cup \gamma _{1}\) with \(\gamma _{0}\) terminating at \(I_{1}^{1}\) and \(\gamma _{1} \in \Gamma (R_{1})\), the set \(\Omega ^{1}(B)\) can be rewritten as

Then, recalling that \(I_{1}^{1} = 2^{-1}I_{1}\), and \(\Gamma (R_{1}) = 2^{-1}\Gamma (R_{0})\), and noting that \(2B \subset R_{0}\), we see that

using (3.5). The main point here is that the implicit constant is the same (absolute constant) as in (3.5). We remark that here \(2B = \{2x : x \in B\}\) is the honest dilation of B (and not a ball with the same centre and twice the radius as B).

We then consider the case \(z = \sigma _{0}(\omega ) \in I_{0}^{1} \subset T_{1}\). We define a map \(\sigma _{1} :I_{0}^{1} \rightarrow \Omega ^{2}\) by
\[ \sigma _{1}(x,\tfrac{1}{2}) := (\beta x,\tfrac{1}{4}), \]
and then connect every point \((x,\tfrac{1}{2}) \in I_{0}^{1}\) to \(\sigma _{1}(x,\tfrac{1}{2}) \in \Omega ^{2}\) by a line segment. In particular, this gives us the definition of \(\gamma (\omega )\) in \(T_{0} \cup T_{1}\): namely, \(\gamma (\omega ) \cap [T_{0} \cup T_{1}]\) is a union of two line segments, the first connecting \(\omega \) to \(\sigma _{0}(\omega ) = z\), and the second connecting z to \(\sigma _{1}(z)\). We note that if \(\omega = (x,1) \in I_{0}\), then \(\gamma (\omega ) \cap \Omega ^{2}\) consists of the point \(\sigma _{1}(\sigma _{0}(\omega )) = (\beta ^{2}x,\tfrac{1}{4})\). For any Borel set \(I \subset \Omega ^{2}\), this gives

which is an analogue of (3.7) for subsets of \(\Omega ^{2}\).

It is now clear how to proceed inductively, assuming that \(\gamma (\omega ) \cap [T_{0} \cup \ldots \cup T_{k}]\) has already been defined for some \(k \ge 1\), and then considering separately the cases

In the case \(\gamma (\omega ) \cap \Omega ^{k + 1} \subset I_{1}^{k + 1}\), we extend \(\gamma (\omega )\) to a complete graph contained in \(T_{0} \cup \ldots \cup T_{k} \cup R_{k + 1}\) by concatenating \(\gamma (\omega ) \cap [T_{0} \cup \ldots \cup T_{k}]\) with a set from \(\Gamma (R_{k + 1})\). Arguing as in (3.8), we have in this case the following estimate for all balls \(B \subset R_{k + 1}\):

where the implicit constant is the same as in (3.5). Indeed, the set on the left hand side of (3.10) is equal to a translate of \(2^{-(k + 1)}\{\omega \in I_{1} : \gamma (\omega ) \cap 2^{k + 1}B \ne \varnothing \}\).

In the case \(\gamma (\omega ) \cap \Omega ^{k + 1} \subset I_{0}^{k + 1}\), we define the map \(\sigma _{k + 1} :I_{0}^{k + 1} \rightarrow \Omega ^{k + 2}\) as before:
\[ \sigma _{k + 1}(x,2^{-(k + 1)}) := (\beta x,2^{-(k + 2)}), \]
and connect the points \(z \in I_{0}^{k + 1}\) to \(\sigma _{k + 1}(z) \in \Omega ^{k + 2}\) by line segments. Arguing as in (3.7) and (3.9), we find that

This completes the definition of the graphs in \(\Gamma \). It is easy to check inductively that graphs in \(\Gamma \) foliate \((0,1]^{2}\). Moreover, the (partially defined) graph \(\gamma (0,1)\) never leaves \(\{0\} \times [0,1]\) during the construction, so we can simply agree that (0, 0) is the endpoint of \(\gamma (0,1)\), thus completing the foliation of \([0,1]^{2}\).
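The inductive part of the construction inside the regions \(T_{k}\) can be made concrete with a short sketch. It assumes, as the formula \(\sigma _{1}(\sigma _{0}(\omega )) = (\beta ^{2}x,\tfrac{1}{4})\) suggests, that each \(\sigma _{k}\) scales the first coordinate by \(\beta \) and halves the height, and that \(I_{0}^{k} = [0,2^{-k}/(2\beta )] \times \{2^{-k}\}\), which is what \(\sigma _{0}(I_{0}) = \Omega ^{1} = [0,\tfrac{1}{2}] \times \{\tfrac{1}{2}\}\) would require. The function name `trace_vertices`, the cap `max_levels`, and the treatment of endpoints are our own choices, not taken from the text.

```python
def trace_vertices(x, beta, max_levels=50):
    """Vertices of the polyline part of gamma((x, 1)) inside T_0, T_1, ...

    Assumptions (illustrative, not verbatim from the paper):
      * sigma_k(u, 2**-k) = (beta * u, 2**-(k + 1));
      * I_0^k = [0, 2**-k / (2 * beta)] x {2**-k}, endpoints included.
    Returns (vertices, level): the path reaches I_1^level and would continue
    inside R_level, unless level == max_levels (the cap was hit).
    """
    if not 0.5 < beta < 1.0:
        raise ValueError("beta must lie in (1/2, 1)")
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must lie in [0, 1]")
    u, level = x, 0
    vertices = [(u, 1.0)]
    # Keep applying sigma_level while the current point lies in I_0^level.
    while level < max_levels and u <= 2.0 ** -level / (2.0 * beta):
        u *= beta
        level += 1
        vertices.append((u, 2.0 ** -level))
    return vertices, level


vertices, level = trace_vertices(0.5, beta=0.75)
print(vertices, level)  # [(0.5, 1.0), (0.375, 0.5)] 1
```

Under these assumptions the point \((\beta ^{k}x,2^{-k})\) lies in \(I_{0}^{k}\) exactly when \((2\beta )^{k + 1}x \le 1\). Since \(2\beta > 1\), the loop stops after finitely many steps for every \(x > 0\), while \(x = 0\) never exits, in line with the remark on \(\gamma (0,1)\).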

The sets in \(\Gamma \) are clearly (non-maximal) \({\mathcal {C}}\)-graphs with respect to some cone of the form \({\mathcal {C}}= {\mathcal {C}}((0,1),\theta )\). As long as \(p \ne (1,1)\), the opening angle of \({\mathcal {C}}\) is strictly smaller than \(\pi /2\), or in other words \(\theta > \sin (\tfrac{\pi }{4})\). We then extend the graphs \(\gamma (\omega ) \in \Gamma \) to maximal \({\mathcal {C}}\)-graphs \(\gamma _{1}(\omega )\), \(\omega \in \Omega \), as follows (see Fig. 4 for an idea of what is happening). For \(\omega \in \Omega = [0,1] \times \{1\}\), let \(\gamma (\omega ) \subset [0,1]^{2}\) be the graph constructed above, and let

be the reflections over the x-axis and y-axis, respectively. First concatenate \(\gamma (\omega )\) with a vertical half-line starting from \(\omega \) and travelling upwards. Denoting this “half-maximal” graph by \({\tilde{\gamma }}(\omega )\), we let

Noting that \(\gamma (\omega )\) has one endpoint on \([0,1] \times \{0\}\), this procedure defines a maximal \({\mathcal {C}}\)-graph \(\gamma _{1}(\omega )\). Finally, recalling that \(\Omega \) was only the right half of \(\Omega _{1} = [-1,1] \times \{1\}\), we define

This completes the definition of the triple \((\Omega _{1},{\mathbb {P}}_{1},\gamma _{1})\). We then consider the measure

Recall the measure \(\mu = f \, d{\mathcal {L}}^{2}|_{[-1,1]^{2}}\) defined in (3.2). We will next show that

In other words, the Alberti representation \((\Omega _{1},{\mathbb {P}}_{1},\gamma _{1},\tfrac{d\mu }{d\nu _{1}})\) of \(\mu \) by \({\mathcal {C}}\)-graphs is both BoA and BoB on \([-1,1]^{2}\). Noting that \(f \circ X = f \circ Y = f\), and \(X(\nu _{1}) = Y(\nu _{1}) = \nu _{1}\), it suffices to compare \(\mu \) and \(\nu _{1}\) on \([0,1]^{2}\). Moreover, it suffices to show that the Radon-Nikodym derivative \((d\nu _{1}/d{\mathcal {L}}^{2})(z)\) at \({\mathcal {L}}^{2}\) almost every interior point z of one of the regions \(T_{k}\) or \(R_{k}\) is comparable to f(z).

Assume first that \(k \ge 0\) and \(z \in {\text {int}} T_{k}\), and fix \(r > 0\) so small that \(B := B(z,r) \subset T_{k}\). Then

We write \({\mathbb {P}}(B) := {\mathbb {P}}\{\omega \in \Omega : \gamma (\omega ) \cap B \ne \varnothing \}\), and we claim that

This follows easily from (3.11), since every curve \(\gamma \in \Gamma \) meeting either B or B/2 also intersects \(\Omega ^{k}\). In fact, the set

is a segment of length \(\sim {\text {diam}}(B)\) (and the same holds for B/2), so

by (3.11). Since moreover

\({\mathcal {H}}^{1}(\gamma (\omega ) \cap B) \lesssim {\text {diam}}(B)\) for all \(\omega \in \Omega \), and

\({\mathcal {H}}^{1}(\gamma (\omega ) \cap B) \gtrsim {\text {diam}}(B)\) for all \(\omega \in \Omega \) with \(\gamma (\omega ) \cap (B/2) \ne \varnothing \),

we infer from (3.13) that

Writing \(z = (s,t)\), we observe that \(2^{-k - 1} \le t \le 2^{-k}\) whenever \(z \in T_{k}\) (simply because this holds for \(k = 0\), and \(T_{k} = 2^{-k}T_{0}\)). Also, \(f(z) \sim t^{-\alpha }\), or more precisely
\[ 2^{-\alpha /2}t^{-\alpha } \le f(z) \le t^{-\alpha }, \]
since \(t \ge s\) on \(T_{k}\). Note that \(2^{-\alpha /2} \in [1/2,1]\) for \(\alpha \in [0,1]\), so the implicit constant in \(f(z) \sim t^{-\alpha }\) can really be chosen independent of \(\alpha \). Now, recalling the choice \(\beta = \beta (p) = 2^{-\alpha }\) from under (3.6), we find from (3.15) that

All the implicit constants can, again, be chosen independently of \(\alpha \in (0,1)\).
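The passage between powers of \(\beta \) and powers of t in this step rests on an elementary comparison, which we spell out as a sketch using only the choice \(\beta = 2^{-\alpha }\) and the bounds \(2^{-k - 1} \le t \le 2^{-k}\) valid on \(T_{k}\):

\[
\beta ^{-k} = 2^{k\alpha } \le t^{-\alpha } \le 2^{(k + 1)\alpha } = 2^{\alpha }\beta ^{-k} \le 2\beta ^{-k}, \qquad \alpha \in (0,1).
\]

Thus \(\beta ^{-k}\) and \(t^{-\alpha }\) agree up to a factor of 2, uniformly in \(k \ge 0\) and \(\alpha \in (0,1)\).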

Next, we fix \(k \ge 0\) and \(z \in {\text {int}} R_{k}\). Again, we choose \(r > 0\) so small that \(B := B(z,r) \subset R_{k}\), and we observe that (3.13) holds. The main task is again to find upper and lower bounds for \({\mathbb {P}}(B)\). Note that, by construction, every graph \(\gamma (\omega ) \in \Gamma \) intersecting \(B \subset R_{k}\) also intersects \(I_{1}^{k} \subset \Omega ^{k}\) (with the convention \(I_{1}^{0} = I_{1}\) and \(\Omega ^{0} = \Omega \)). Hence, defining \(\Omega ^{k}(B)\) as before, in (3.14), we find that

using (3.11) in the last estimate. Combining this with (3.10), we find that

This implies (3.15) as before. Finally, we write \(z = (s,t)\), and observe that \(2^{-k - 1} \le s \le 2^{-k}\) for all \(z \in R_{k}\), and also that \(f(s,t) \sim s^{-\alpha }\) for all \((s,t) \in R_{k}\) (because \(s \ge t/2\)). Consequently,

This completes the proof of (3.12).

It remains to produce the second representation \((\Omega _{2},{\mathbb {P}}_{2},\gamma _{2},\tfrac{d\mu }{d\nu _{2}})\) for \(\mu \), which is independent of the first one. Let \(M :{\mathbb {R}}^{2} \rightarrow {\mathbb {R}}^{2}\) be a rotation by \(\pi /2\) (clockwise, say), and consider the push-forward measures \(M(\mu )\) and \(M(\nu _{1})\). Note that \(f \circ M = f\), so \(M(\mu ) = \mu \). It follows from this and (3.12) that

On the other hand,

where \(\gamma _{2}(\omega ) := M(\gamma _{1}(\omega ))\). So, we find that \((\Omega _{2},{\mathbb {P}}_{2},\gamma _{2},\tfrac{d\mu }{d\nu _{2}})\) is the desired second representation of \(\mu \). The proof of Theorem 3.1 is complete.
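The symmetry facts used for the two representations, namely \(f \circ X = f \circ Y = f\) and \(f \circ M = f\), can be sanity-checked numerically. The sketch below assumes a radial density \(|z|^{-\alpha }\) as a hypothetical stand-in for the function f of (3.2), which is not restated here. The helper names `f`, `X`, `Y`, `M` mirror the notation of the text, but their implementation is ours.

```python
import math


def f(z, alpha):
    """Hypothetical radial density |z|**(-alpha), standing in for (3.2)."""
    return math.hypot(z[0], z[1]) ** (-alpha)


def X(z):
    """Reflection over the x-axis."""
    return (z[0], -z[1])


def Y(z):
    """Reflection over the y-axis."""
    return (-z[0], z[1])


def M(z):
    """Clockwise rotation by pi/2."""
    return (z[1], -z[0])


z, alpha = (0.3, 0.7), 0.5
for T in (X, Y, M):
    # A radial density is invariant under each of the three isometries.
    assert math.isclose(f(T(z), alpha), f(z, alpha))

# M carries the cone axis (0, 1) of the first representation to (1, 0).
assert M((0.0, 1.0)) == (1.0, 0.0)
```

Since M maps the axis \((0,1)\) to \((1,0)\), the rotated graphs \(\gamma _{2}(\omega )\) are graphs over a cone around the horizontal axis, which is the source of the independence of the two representations.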

Acknowledgements

We would like to thank Vesa Julin for many useful conversations on the topics of the paper. We also thank the anonymous referee for helpful suggestions leading to Question 2.

Additional information

Communicated by C. De Lellis.

D.B. is supported by the Academy of Finland via the project Projections, densities and rectifiability: new settings for classical ideas, Grant No. 308510. T.O. is supported by the Academy of Finland via the project Quantitative rectifiability in Euclidean and non-Euclidean spaces, Grant No. 309365.

Appendix A. The case of two independent axis-parallel representations

Here we prove Proposition 1.9. The statement is repeated below:

Proposition A.1

Let \(\mu \) be a Radon measure on \({\mathbb {R}}^{2}\) which has two independent axis-parallel representations \(({\mathbb {R}},{\mathbb {P}}_{1},\gamma _{x},\tfrac{d\mu }{d\nu _{1}})\) and \(({\mathbb {R}},{\mathbb {P}}_{2},\gamma _{y},\tfrac{d\mu }{d\nu _{2}})\). If both of them are BoA and BoB on \([0,1)^{2}\), then \(\mu |_{[0,1)^{2}} \ll {\mathcal {L}}^{2}\) with \(\mu (x) \sim \mu ([0,1)^{2})\) for \({\mathcal {L}}^{2}\) almost every \(x \in [0,1)^{2}\).

Proof

Note that \(\mu |_{[0,1)^{2}} \in L^{2}\) by Theorem 1.6. Let \(Q_{1},Q_{2} \in {\mathcal {D}}_{n}([0,1)^{2})\) be dyadic sub-squares of \([0,1)^{2}\) of side-length \(2^{-n}\), \(n \ge 0\). Write \(Q_{1} := I_{1} \times J_{1}\) and \(Q_{2} := I_{2} \times J_{2}\). Then also \(Q^{*} := I_{1} \times J_{2} \in {\mathcal {D}}_{n}([0,1)^{2})\), and

Similarly \(\mu (Q_{2}) \sim \mu (Q^{*})\), so \(\mu (Q_{1})/{\mathcal {L}}^{2}(Q_{1}) \sim \mu (Q_{2})/{\mathcal {L}}^{2}(Q_{2})\), and consequently

for all \(Q \in {\mathcal {D}}_{n}([0,1)^{2})\) and \(n \ge 0\). The claim now follows from the Lebesgue differentiation theorem. \(\square \)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bate, D., Orponen, T. Quantitative absolute continuity of planar measures with two independent Alberti representations. Calc. Var. 59, 72 (2020). https://doi.org/10.1007/s00526-020-1714-x

DOI: https://doi.org/10.1007/s00526-020-1714-x