Abstract

Iterative Hessian sketch (IHS) is an effective sketching method for modeling large-scale data. It was originally proposed by Pilanci and Wainwright (J Mach Learn Res 17(1):1842–1879, 2016) using randomized sketching matrices. However, it is computationally intensive because the data must be sketched anew at every iteration. In this paper, we analyze the IHS algorithm in the unconstrained least squares setting and then propose a deterministic approach for improving IHS via A-optimal subsampling. Our contributions are threefold: (1) an initial estimator based on A-optimal design is proposed; (2) a novel ridged preconditioner is developed for repeated sketching; and (3) an exact line search method is proposed for choosing the step length adaptively. Extensive experiments demonstrate that the proposed A-optimal IHS algorithm outperforms existing accelerated IHS methods.
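The loop below is a minimal NumPy sketch of the ingredients listed above for unconstrained least squares: a deterministic subsample, a least-squares initial estimator computed on that subsample, a fixed ridged preconditioner \(\varvec{M}=\frac{n}{m}\sum _{i=1}^n\delta _i\varvec{x}_i\varvec{x}_i^T+\lambda \varvec{I}_d\), and an exact line search for the step length. It is an illustration under stated assumptions, not the authors' implementation. In particular, `select_rows` (keep the \(m\) rows with the largest squared norms) is a hypothetical stand-in for A-optimal subsampling, and the names `ihs_ridged_line_search`, `lam`, and `iters` are illustrative.

```python
import numpy as np


def select_rows(X, m):
    # HYPOTHETICAL stand-in for A-optimal subsampling: keep the m rows with the
    # largest squared norms (a cheap O(nd) score, not the paper's selection rule).
    scores = np.einsum("ij,ij->i", X, X)
    # argpartition is a partial selection, so no full O(n log n) sort is needed.
    return np.argpartition(scores, -m)[-m:]


def ihs_ridged_line_search(X, y, m, lam=1.0, iters=20):
    """Preconditioned iterations for min_beta 0.5 * ||y - X beta||^2."""
    n, d = X.shape
    idx = select_rows(X, m)
    Xs, ys = X[idx], y[idx]

    # Initial estimator: least squares on the selected subsample only.
    beta, *_ = np.linalg.lstsq(Xs, ys, rcond=None)

    # Ridged preconditioner M = (n/m) * Xs^T Xs + lam * I_d, factored once (M = L L^T).
    M = (n / m) * (Xs.T @ Xs) + lam * np.eye(d)
    L = np.linalg.cholesky(M)

    r = X @ beta - y  # residual X beta - y, updated incrementally below
    for _ in range(iters):
        g = X.T @ r  # full gradient of the least-squares loss
        # Search direction d = -M^{-1} g via two triangular solves.
        direction = -np.linalg.solve(L.T, np.linalg.solve(L, g))
        Xd = X @ direction
        denom = Xd @ Xd
        if denom == 0.0:  # zero gradient: already at the minimizer
            break
        # Exact line search on the quadratic loss: alpha = -(g^T d) / ||X d||^2.
        alpha = -(g @ direction) / denom
        beta = beta + alpha * direction
        r = r + alpha * Xd
    return beta
```

Because the subsample, and hence \(\varvec{M}\), stays fixed in this sketch, the preconditioner is factored once and each iteration needs only two passes over the full data (one for the gradient, one for \(\varvec{X}\varvec{d}\)). The closed-form step length is available only because the objective is quadratic.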

Notes

The data are available at https://www.icpsr.umich.edu/icpsrweb/ICPSR/studies/21960#.

References

Benzi, M.: Preconditioning techniques for large linear systems: a survey. J. Comput. Phys. 182(2), 418–477 (2002)

Boutsidis, C., Gittens, A.: Improved matrix algorithms via the subsampled randomized Hadamard transform. SIAM J. Matrix Anal. Appl. 34(3), 1301–1340 (2013)

Clarkson, K.L., Woodruff, D.P.: Low rank approximation and regression in input sparsity time. In: Proceedings of the Forty-Fifth Annual ACM Symposium on Theory of Computing, pp. 81–90. ACM (2013)

Drineas, P., Mahoney, M.W., Muthukrishnan, S.: Sampling algorithms for ℓ2 regression and applications. In: Proceedings of the Seventeenth Annual ACM-SIAM Symposium on Discrete Algorithms, pp. 1127–1136. Society for Industrial and Applied Mathematics (2006)

Drineas, P., Mahoney, M.W., Muthukrishnan, S., Sarlós, T.: Faster least squares approximation. Numer. Math. 117(2), 219–249 (2011)

Drineas, P., Magdon-Ismail, M., Mahoney, M.W., Woodruff, D.P.: Fast approximation of matrix coherence and statistical leverage. J. Mach. Learn. Res. 13(Dec), 3475–3506 (2012)

Gonen, A., Orabona, F., Shalev-Shwartz, S.: Solving ridge regression using sketched preconditioned SVRG. In: International Conference on Machine Learning, pp. 1397–1405 (2016)

Horn, R.A., Johnson, C.R.: Matrix Analysis. Cambridge University Press, Cambridge (2012)

Johnson, W.B., Lindenstrauss, J.: Extensions of Lipschitz mappings into a Hilbert space. Contemp. Math. 26, 189–206 (1984)

Knyazev, A.V., Lashuk, I.: Steepest descent and conjugate gradient methods with variable preconditioning. SIAM J. Matrix Anal. Appl. 29(4), 1267–1280 (2007)

Lu, Y., Dhillon, P., Foster, D.P., Ungar, L.: Faster ridge regression via the subsampled randomized Hadamard transform. In: Advances in Neural Information Processing Systems, pp. 369–377 (2013)

Ma, P., Mahoney, M.W., Yu, B.: A statistical perspective on algorithmic leveraging. J. Mach. Learn. Res. 16(1), 861–911 (2015)

Mahoney, M.W., et al.: Randomized algorithms for matrices and data. Found. Trends® Mach. Learn. 3(2), 123–224 (2011)

Martínez, C.: Partial quicksort. In: Proceedings of the 6th ACM-SIAM Workshop on Algorithm Engineering and Experiments and 1st ACM-SIAM Workshop on Analytic Algorithmics and Combinatorics, pp. 224–228 (2004)

McWilliams, B., Krummenacher, G., Lucic, M., Buhmann, J.M.: Fast and robust least squares estimation in corrupted linear models. In: Advances in Neural Information Processing Systems, pp. 415–423 (2014)

Nocedal, J., Wright, S.J.: Numerical Optimization. Springer, Berlin (2006)

Pilanci, M., Wainwright, M.J.: Iterative Hessian sketch: fast and accurate solution approximation for constrained least-squares. J. Mach. Learn. Res. 17(1), 1842–1879 (2016)

Pukelsheim, F.: Optimal Design of Experiments, vol. 50. SIAM, Philadelphia (1993)

Seber, G.A.: A Matrix Handbook for Statisticians, vol. 15. Wiley, New York (2008)

Tropp, J.A.: Improved analysis of the subsampled randomized Hadamard transform. Adv. Adapt. Data Anal. 3(1–2), 115–126 (2011)

Wang, D., Xu, J.: Large scale constrained linear regression revisited: faster algorithms via preconditioning. In: Thirty-Second AAAI Conference on Artificial Intelligence (2018)

Wang, J., Lee, J.D., Mahdavi, M., Kolar, M., Srebro, N., et al.: Sketching meets random projection in the dual: a provable recovery algorithm for big and high-dimensional data. Electron. J. Stat. 11(2), 4896–4944 (2017)

Wang, H., Yang, M., Stufken, J.: Information-based optimal subdata selection for big data linear regression. J. Am. Stat. Assoc. 114(525), 393–405 (2019)

Woodruff, D.P., et al.: Sketching as a tool for numerical linear algebra. Found. Trends® Theor. Comput. Sci. 10(1–2), 1–157 (2014)

Appendices

Appendix A: Extra results with different ridged preconditioners

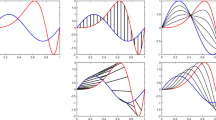

We further compare the ridged preconditioner \(\varvec{M}=\frac{n}{m}\sum _{i=1}^n\delta _i\varvec{x}_i\varvec{x}_i^T+\lambda \varvec{I}_d\) with each of its two components used on its own: the non-ridged term \(\frac{n}{m}\sum _{i=1}^n\delta _i\varvec{x}_i\varvec{x}_i^T\) and the scaled identity matrix. The three preconditioners are evaluated by \(\mathrm{MSE}_2\) within our proposed algorithmic framework. For the scaled identity we consider only \(\varvec{M}= \varvec{I}\), since any scaling multiplier \(\lambda \) in \(\varvec{M}= \lambda \varvec{I}\) cancels out in the update of \({\hat{\varvec{\beta }}}_t\): the exactly line-searched step length absorbs it. The results support the view that ridging can improve the performance of the preconditioner (Fig. 7).
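The cancellation of \(\lambda \) in \(\varvec{M}= \lambda \varvec{I}\) can be checked numerically. The NumPy sketch below assumes an update of the form \({\hat{\varvec{\beta }}}_{t+1}={\hat{\varvec{\beta }}}_t+\alpha _t\varvec{d}_t\) with \(\varvec{d}_t=-\varvec{M}^{-1}\varvec{g}_t\), where \(\varvec{g}_t\) is the least-squares gradient and \(\alpha _t\) comes from an exact line search. Rescaling \(\varvec{M}\) by \(c\) divides \(\varvec{d}_t\) by \(c\) and multiplies \(\alpha _t\) by \(c\), so the iterate is unchanged. The function names, the random data, and the subsample (the first 50 rows) are hypothetical illustrations, not the paper's setup.

```python
import numpy as np


def ridged_preconditioner(X, idx, lam):
    """M = (n/m) * sum_{i in S} x_i x_i^T + lam * I_d for the selected row set S."""
    n, d = X.shape
    Xs = X[idx]
    return (n / len(idx)) * (Xs.T @ Xs) + lam * np.eye(d)


def line_search_step(X, y, beta, M):
    """One step beta + alpha * d with d = -M^{-1} g and exact line search.

    The objective is 0.5 * ||y - X beta||^2, so the optimal alpha has the
    closed form alpha = -(g^T d) / ||X d||^2.
    """
    g = X.T @ (X @ beta - y)
    direction = -np.linalg.solve(M, g)
    Xd = X @ direction
    alpha = -(g @ direction) / (Xd @ Xd)
    return beta + alpha * direction


rng = np.random.default_rng(1)
X = rng.standard_normal((500, 5))
y = rng.standard_normal(500)
beta0 = np.zeros(5)

# Scaling M = I by 37 shrinks d by 1/37 but multiplies alpha by 37,
# so the updated beta is the same for M = I and M = 37 * I.
step_identity = line_search_step(X, y, beta0, np.eye(5))
step_scaled = line_search_step(X, y, beta0, 37.0 * np.eye(5))
print(np.allclose(step_identity, step_scaled))  # True

# The ridged preconditioner is not a multiple of I, so in general it changes
# the search direction itself, not just its length.
idx = np.arange(50)  # hypothetical subsample: the first 50 rows
step_ridged = line_search_step(X, y, beta0, ridged_preconditioner(X, idx, lam=1.0))
```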

Appendix B: Extra results with the same proposed initial estimator

In this section, we report additional experiments in which all methods are initialized with our proposed A-optimal estimator. The subsample size is fixed at \(m=1000\). These experiments further indicate that the proposed Aopt-IHS method generally converges faster than the benchmark methods (Figs. 8, 9).

Cite this article

Zhang, A., Zhang, H. & Yin, G. Adaptive iterative Hessian sketch via A-optimal subsampling. Stat Comput 30, 1075–1090 (2020). https://doi.org/10.1007/s11222-020-09936-8

DOI: https://doi.org/10.1007/s11222-020-09936-8