Abstract

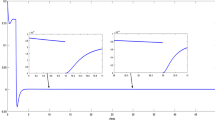

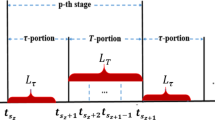

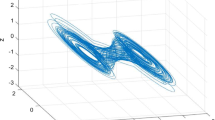

This paper addresses the state estimation problem for T–S fuzzy Markovian generalized neural networks (GNNs) with reaction–diffusion terms. An estimator-based nonfragile time-varying proportional retarded sampled-data controller, which tolerates norm-bounded uncertainty and contains a time-varying delay, is designed to guarantee the asymptotic stability of the error system. A less conservative stability criterion is derived by constructing a novel Lyapunov–Krasovskii functional that includes positive indefinite terms and discontinuous terms, and by combining the reciprocally convex combination method, Jensen’s inequality and the Wirtinger inequality. Moreover, the principle governing the number of variables selected in deriving the main results is analyzed. Finally, two numerical examples demonstrate the validity and advantages of the proposed results.

References

Zhang X, Han Q (2011) Global asymptotic stability for a class of generalized neural networks with interval time-varying delays. IEEE Trans Neural Netw 22(8):1180

Saravanakumar R, Syed AM, Ahn CK, Karimi HR, Shi P (2017) Stability of Markovian jump generalized neural networks with interval time-varying delays. IEEE Trans Neural Netw Learn Syst 28(8):1840–1850

Samidurai R, Manivannan R, Ahn CK, Karimi HR (2016) New criteria for stability of generalized neural networks including Markov jump parameters and additive time delays. IEEE Trans Syst Man Cybern Syst 48(4):485–499

Chen G, Xia J, Zhuang G (2016) Delay-dependent stability and dissipativity analysis of generalized neural networks with Markovian jump parameters and two delay components. J Frankl Inst 353(9):2137–2158

Rajchakit G, Saravanakumar R (2018) Exponential stability of semi-Markovian jump generalized neural networks with interval time-varying delays. Neural Comput Appl 29(2):483–492

Dharani S, Balasubramaniam P (2019) Delayed impulsive control for exponential synchronization of stochastic reaction–diffusion neural networks with time-varying delays using general integral inequalities. Neural Comput Appl. https://doi.org/10.1007/s00521-019-04223-8

Song X, Man J, Fu Z, Wang M, Lu J (2019) Memory-based state estimation of T–S fuzzy Markov jump delayed neural networks with reaction–diffusion terms. Neural Process Lett 50(3):2529–2546

Zeng D, Zhang R, Park JH, Pu Z, Liu Y (2019) Pinning synchronization of directed coupled reaction–diffusion neural networks with sampled-data communications. IEEE Trans Neural Netw Learn Syst. https://doi.org/10.1109/TNNLS.2019.2928039

Wei H, Chen C, Tu Z, Li N (2018) New results on passivity analysis of memristive neural networks with time-varying delays and reaction–diffusion term. Neurocomputing 275:2080–2092

Song X, Wang M, Song S, Wang Z (2019) Intermittent pinning synchronization of reaction–diffusion neural networks with multiple spatial diffusion couplings. Neural Comput Appl 31(12):9279–9294

Huang Y, Hou J, Yang E (2019) General decay anti-synchronization of multi-weighted coupled neural networks with and without reaction–diffusion terms. Neural Comput Appl. https://doi.org/10.1007/s00521-019-04313-7

Jiang B, Karimi HR, Kao Y, Gao C (2019) Takagi–Sugeno model based event-triggered fuzzy sliding mode control of networked control systems with semi-Markovian switchings. IEEE Trans Fuzzy Syst. https://doi.org/10.1109/TFUZZ.2019.2914005

Ali MS, Gunasekaran N, Zhu Q (2017) State estimation of T–S fuzzy delayed neural networks with Markovian jumping parameters using sampled-data control. Fuzzy Sets Syst 306:87–104

Zhang Y, Shi P, Agarwal RK, Shi Y (2015) Dissipativity analysis for discrete time-delay fuzzy neural networks with Markovian jumps. IEEE Trans Fuzzy Syst 24(2):432–443

Arunkumar A, Sakthivel R, Mathiyalagan K, Park JH (2014) Robust stochastic stability of discrete-time fuzzy Markovian jump neural networks. ISA Trans 53(4):1006–1014

Ali MS, Gunasekaran N, Saravanakumar R (2018) Design of passivity and passification for delayed neural networks with Markovian jump parameters via non-uniform sampled-data control. Neural Comput Appl 30(2):595–605

Liu Y, Tong L, Lou J, Lu J, Cao J (2019) Sampled-data control for the synchronization of Boolean control networks. IEEE Trans Cybern 49(2):726–732

Li L, Yang Y, Lin G (2016) The stabilization of BAM neural networks with time-varying delays in the leakage terms via sampled-data control. Neural Comput Appl 27(2):447–457

Chen W, Luo S, Zheng W (2017) Generating globally stable periodic solutions of delayed neural networks with periodic coefficients via impulsive control. IEEE Trans Cybern 47(7):1590–1603

Wang Y, Shen H, Duan D (2017) On stabilization of quantized sampled-data neural-network-based control systems. IEEE Trans Cybern 47(10):3124–3135

Wu Z, Xu Z, Shi P, Chen MZ, Su H (2018) Nonfragile state estimation of quantized complex networks with switching topologies. IEEE Trans Neural Netw Learn Syst 29(10):5111–5121

Yue D, Han Q (2005) Delayed feedback control of uncertain systems with time-varying input delay. Automatica 41(2):233–240

Zhang C, He Y, Jiang L, Wu Q, Wu M (2017) Delay-dependent stability criteria for generalized neural networks with two delay components. IEEE Trans Neural Netw Learn Syst 25(7):1263–1276

Chen W, Zheng W (2010) Robust stability analysis for stochastic neural networks with time-varying delay. IEEE Trans Neural Netw 21(3):508–514

Li T, Ye X (2010) Improved stability criteria of neural networks with time-varying delays—an augmented LKF approach. Neurocomputing 73(4–6):1038–1047

Zuo Z, Yang C, Wang Y (2010) A new method for stability analysis of recurrent neural networks with interval time-varying delay. IEEE Trans Neural Netw 21(2):339–344

Wu Z, Lam J, Su H, Chu J (2012) Stability and dissipativity analysis of static neural networks with time delay. IEEE Trans Neural Netw Learn Syst 23(2):199–210

Li T, Song A, Fei S, Wang T (2010) Delay-derivative-dependent stability for delayed neural networks with unbound distributed delay. IEEE Trans Neural Netw 21(8):1365

Liu Y, Park JH, Guo B, Shu Y (2018) Further results on stabilization of chaotic systems based on fuzzy memory sampled-data control. IEEE Trans Fuzzy Syst 26(2):1040–1045

Liu Y, Guo B, Park JH, Lee SM (2018) Nonfragile exponential synchronization of delayed complex dynamical networks with memory sampled-data control. IEEE Trans Neural Netw Learn Syst 29(1):118–128

Zhang R, Zeng D, Park JH, Liu Y, Zhong S (2018) A new approach to stabilization of chaotic systems with nonfragile fuzzy proportional retarded sampled-data control. IEEE Trans Cybern 49(9):3218–3229

Park PG, Ko JW, Jeong C (2011) Reciprocally convex approach to stability of systems with time-varying delays. Automatica 47(1):235–238

Wu Z, Shi P, Su H, Chu J (2013) Stochastic synchronization of Markovian jump neural networks with time-varying delay using sampled data. IEEE Trans Cybern 43(6):1796–1806

Xu Z, Su H, Shi P, Lu R, Wu Z (2016) Reachable set estimation for Markovian jump neural networks with time-varying delays. IEEE Trans Cybern 47(10):3208–3217

Ma Y, Zheng Y (2018) Delay-dependent stochastic stability for discrete singular neural networks with Markovian jump and mixed time-delays. Neural Comput Appl 29(1):111–122

Xiao Q, Huang T, Zeng Z (2018) Passivity and passification of fuzzy memristive inertial neural networks on time scales. IEEE Trans Fuzzy Syst 26(6):3342–3355

Liu Y, Wang Z, Liu X (2006) Global exponential stability of generalized recurrent neural networks with discrete and distributed delays. Neural Netw 19(5):667–675

Shen H, Huang X, Zhou J, Wang Z (2012) Global exponential estimates for uncertain Markovian jump neural networks with reaction–diffusion terms. Nonlinear Dyn 69(1–2):473–486

Ali MS, Gunasekaran N (2018) Sampled-data state estimation of Markovian jump static neural networks with interval time-varying delays. J Comput Appl Math 343(C):217–229

Wu Z, Shi P, Su H, Chu J (2014) Sampled-data fuzzy control of chaotic systems based on a T–S fuzzy model. IEEE Trans Fuzzy Syst 22(1):153–163

Rakkiyappan R, Dharani S (2017) Sampled-data synchronization of randomly coupled reaction–diffusion neural networks with Markovian jumping and mixed delays using multiple integral approach. Neural Comput Appl 28(3):1–14

Ali MS, Arik S, Saravanakumar R (2015) Delay-dependent stability criteria of uncertain Markovian jump neural networks with discrete interval and distributed time-varying delays. Neurocomputing 158:167–173

Huang D, Jiang M, Jian J (2017) Finite-time synchronization of inertial memristive neural networks with time-varying delays via sampled-date control. Neurocomputing 266:527–539

Guo Z, Gong S, Huang T (2018) Finite-time synchronization of inertial memristive neural networks with time delay via delay-dependent control. Neurocomputing 293:100–107

Gu K, Chen J, Kharitonov V (2003) Stability of time-delay systems. Birkhauser Boston, Inc., Secaucus

Guojun L (2008) Global exponential stability and periodicity of reaction–diffusion delayed recurrent neural networks with Dirichlet boundary conditions. Chaos Solitons Fractals 35(1):116–125

Wang Y, Xie L, De Souza CE (1992) Robust control of a class of uncertain nonlinear systems. Syst Control Lett 19(2):139–149

Seuret A, Gouaisbaut F (2013) Wirtinger-based integral inequality: application to time-delay systems. Automatica 49(9):2860–2866

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Nos. 61976081, U1604146) and the Foundation for the University Technological Innovative Talents of Henan Province (No. 18HASTIT019).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest regarding this work, and no commercial or associative interest that represents a conflict of interest in connection with the submitted work.

Appendix

1.1 Appendix 1: Crucial Lemmas

Lemma 1

[45] If there exists a matrix \(X \in {{\mathbb {R}}^{n \times n}}\), \(X = {X^\mathrm{T}} > 0\) and \(c \le s \le d\), then one has
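The displayed inequality is not reproduced in this copy. The form of Jensen's inequality usually attributed to [45] is \((d - c)\int_c^d {\omega ^\mathrm{T}}(s)X\omega (s)\,\mathrm{d}s \ge {\left( \int_c^d \omega (s)\,\mathrm{d}s \right) ^\mathrm{T}}X\left( \int_c^d \omega (s)\,\mathrm{d}s \right)\) for a vector function \(\omega\) on \([c,d]\); the paper's own notation may differ. The sketch below checks this assumed form numerically. The test signal, the matrix \(X\) and the helper names `trapezoid` and `jensen_gap` are illustrative choices, not taken from the paper.

```python
import numpy as np


def trapezoid(y, x):
    """Trapezoidal rule along the first axis (works for scalars or vectors)."""
    dx = np.diff(x)
    return np.tensordot(dx, (y[1:] + y[:-1]) / 2.0, axes=(0, 0))


def jensen_gap(c=0.0, d=2.0, n=3, samples=4001, seed=0):
    """Return LHS - RHS of the assumed Jensen inequality (should be >= 0)."""
    rng = np.random.default_rng(seed)
    G = rng.standard_normal((n, n))
    X = G @ G.T + n * np.eye(n)              # symmetric positive definite
    s = np.linspace(c, d, samples)
    f = np.arange(1, n + 1)
    # Hypothetical test signal omega(s), one column per component.
    w = np.sin(np.outer(s, f)) + 0.3 * s[:, None]
    quad = np.einsum("si,ij,sj->s", w, X, w)  # omega(s)^T X omega(s)
    lhs = (d - c) * float(trapezoid(quad, s))
    v = trapezoid(w, s)                       # integral of omega over [c, d]
    rhs = float(v @ X @ v)
    return lhs - rhs


if __name__ == "__main__":
    print("Jensen gap:", jensen_gap())
```

Because the trapezoidal weights are nonnegative and sum to \(d - c\), the discretized inequality holds for any sampled signal, so the printed gap should be nonnegative regardless of the signal chosen.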

Lemma 2

[46] Let \(\Omega\) be a cube \(\left| {{x_k}} \right| < {\tilde{l}_k}(k = 1,2,\ldots ,m)\), \(\nu (x)\) be a real-valued function belonging to \({C^1}(\Omega )\) which satisfies \(\nu (x)\left| {_{\partial \Omega }} \right. = 0\). Then
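The conclusion of this lemma is missing here. In [46] it is commonly stated as \(\int_\Omega {\nu ^2}(x)\,\mathrm{d}x \le \tilde{l}_k^2\int_\Omega {\left| \partial \nu /\partial {x_k} \right| ^2}\,\mathrm{d}x\); treat that form as an assumption about what the lost display contained. A minimal one-dimensional check (\(m = 1\), \(\Omega = ( - l,l)\)) is sketched below, with a hypothetical test function that vanishes on the boundary.

```python
import numpy as np


def trapezoid(y, x):
    """Trapezoidal rule for samples y on the grid x."""
    dx = np.diff(x)
    return float(np.dot(dx, (y[1:] + y[:-1]) / 2.0))


def boundary_inequality_gap(l=1.5, samples=20001):
    """Return l^2 * int(nu'^2) - int(nu^2) on (-l, l); should be >= 0."""
    x = np.linspace(-l, l, samples)
    # Hypothetical nu(x) with nu(-l) = nu(l) = 0 (Dirichlet boundary).
    nu = (l ** 2 - x ** 2) * np.exp(0.5 * x)
    # Analytic derivative of nu by the product rule.
    dnu = -2.0 * x * np.exp(0.5 * x) + 0.5 * nu
    lhs = trapezoid(nu ** 2, x)
    rhs = l ** 2 * trapezoid(dnu ** 2, x)
    return rhs - lhs


if __name__ == "__main__":
    print("gap:", boundary_inequality_gap())
```

In the proof this type of bound is what allows the reaction–diffusion term to be replaced by a quadratic term in the state, which is why the scalars \({l_k}\) appear in the final conditions.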

Lemma 3

[32] Let \({g_1},{g_2},\ldots ,{g_N}:{{\mathbb {R}}^m} \rightarrow {{\mathbb {R}}^1}\) have positive values in an open subset E of \({{\mathbb {R}}^m}\). Then, the reciprocally convex combination of \({g_i}\) over E satisfies

subject to
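The minimization identity and its constraint set are not reproduced in this copy. In delay analysis the lemma of [32] is typically applied in the following two-term matrix form, which is stated here as an assumption about how it is used: if \(R > 0\) and a matrix \(S\) satisfy \(\left[ {\begin{array}{cc} R & S \\ {S^\mathrm{T}} & R \end{array}} \right] \ge 0\), then for every \(\alpha \in (0,1)\), \(\frac{1}{\alpha }{x^\mathrm{T}}Rx + \frac{1}{1 - \alpha }{y^\mathrm{T}}Ry \ge {\left[ x;y \right] ^\mathrm{T}}\left[ {\begin{array}{cc} R & S \\ {S^\mathrm{T}} & R \end{array}} \right] \left[ x;y \right]\). The sketch below samples random vectors and weights to check this bound; all matrices are hypothetical.

```python
import numpy as np


def reciprocally_convex_min_gap(n=3, trials=200, seed=1):
    """Smallest observed value of LHS - RHS over random samples (>= 0)."""
    rng = np.random.default_rng(seed)
    G = rng.standard_normal((n, n))
    R = G @ G.T + np.eye(n)                     # R > 0
    L = np.linalg.cholesky(R)                   # R = L L^T
    M = rng.standard_normal((n, n))
    C = 0.9 * M / np.linalg.norm(M, 2)          # contraction, ||C||_2 = 0.9
    S = L @ C @ L.T                             # makes [[R, S], [S^T, R]] >= 0
    block = np.block([[R, S], [S.T, R]])
    assert np.linalg.eigvalsh(block).min() >= -1e-9

    worst = np.inf
    for _ in range(trials):
        x = rng.standard_normal(n)
        y = rng.standard_normal(n)
        alpha = rng.uniform(0.01, 0.99)
        lhs = x @ R @ x / alpha + y @ R @ y / (1.0 - alpha)
        z = np.concatenate([x, y])
        rhs = z @ block @ z
        worst = min(worst, lhs - rhs)
    return float(worst)


if __name__ == "__main__":
    print("smallest gap:", reciprocally_convex_min_gap())
```

The point of the bound is that the right-hand side no longer depends on \(\alpha\), so a delay-dependent weight such as \((h(t) - {h_1})/({h_2} - {h_1})\) can be eliminated from the stability condition.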

Lemma 4

[47] Given real matrices A, B and D of appropriate dimensions with \({D^\mathrm{T}}D \le I\) and a scalar \(\varepsilon > 0\), the following inequality holds for any vectors \(x,y \in {{\mathbb {R}}^n}\):
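The inequality itself is missing from this copy. A standard form consistent with the stated hypotheses is \(2{x^\mathrm{T}}ADBy \le {\varepsilon ^{ - 1}}{x^\mathrm{T}}A{A^\mathrm{T}}x + \varepsilon {y^\mathrm{T}}{B^\mathrm{T}}By\); it follows from Young's inequality applied to \({A^\mathrm{T}}x\) and \(DBy\), together with \({D^\mathrm{T}}D \le I\). Assuming that form, the sketch below checks it on random data. The matrices are hypothetical and \(D\) is scaled to unit spectral norm so that the hypothesis holds.

```python
import numpy as np


def norm_bounded_min_gap(n=4, trials=200, seed=2):
    """Smallest observed RHS - LHS of the assumed bound (should be >= 0)."""
    rng = np.random.default_rng(seed)
    A = rng.standard_normal((n, n))
    B = rng.standard_normal((n, n))
    M = rng.standard_normal((n, n))
    D = M / np.linalg.norm(M, 2)      # spectral norm 1, hence D^T D <= I

    worst = np.inf
    for _ in range(trials):
        x = rng.standard_normal(n)
        y = rng.standard_normal(n)
        eps = rng.uniform(0.1, 10.0)
        lhs = 2.0 * (x @ A @ D @ B @ y)
        rhs = (x @ A @ A.T @ x) / eps + eps * (y @ B.T @ B @ y)
        worst = min(worst, rhs - lhs)
    return float(worst)


if __name__ == "__main__":
    print("smallest gap:", norm_bounded_min_gap())
```

This is the step that removes the unknown norm-bounded term from the conditions: the uncertain factor \(D\) disappears from the right-hand side at the cost of the free scalar \(\varepsilon\).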

Lemma 5

[48] For any matrix \(\mathcal{M} \in {{\mathbb {R}}^{n \times n}}\), \(\mathcal{M} = {\mathcal{M}^\mathrm{T}} > 0\), the integrable function \(\dot{\omega }(x)\) in \([a,b]\rightarrow {{\mathbb {R}}^n}\) satisfies:

where
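Both the inequality and the definition that follows "where" are absent from this copy. The Wirtinger-based integral inequality of [48] is usually written as \(\int_a^b {\dot{\omega }^\mathrm{T}}(s)\mathcal{M}\dot{\omega }(s)\,\mathrm{d}s \ge \frac{1}{b - a}{\Delta ^\mathrm{T}}\mathcal{M}\Delta + \frac{3}{b - a}{\Xi ^\mathrm{T}}\mathcal{M}\Xi\) with \(\Delta = \omega (b) - \omega (a)\) and \(\Xi = \omega (b) + \omega (a) - \frac{2}{b - a}\int_a^b \omega (s)\,\mathrm{d}s\). The symbols \(\Delta\) and \(\Xi\) are introduced here for illustration only. Under that assumption, the sketch below compares the integral with the Wirtinger bound and with the weaker Jensen-type bound (the first term alone) for a hypothetical signal.

```python
import numpy as np


def trapezoid(y, x):
    """Trapezoidal rule along the first axis (works for scalars or vectors)."""
    dx = np.diff(x)
    return np.tensordot(dx, (y[1:] + y[:-1]) / 2.0, axes=(0, 0))


def wirtinger_bounds(a=0.0, b=2.0, n=3, samples=20001, seed=3):
    """Return (integral, Wirtinger bound, Jensen bound) for a test signal."""
    rng = np.random.default_rng(seed)
    G = rng.standard_normal((n, n))
    M = G @ G.T + np.eye(n)                         # M = M^T > 0
    s = np.linspace(a, b, samples)
    f = np.arange(1, n + 1)
    # Hypothetical omega(s) and its analytic derivative.
    w = np.sin(np.outer(s, f)) + (s ** 2)[:, None]
    dw = f * np.cos(np.outer(s, f)) + (2.0 * s)[:, None]

    integral = float(trapezoid(np.einsum("si,ij,sj->s", dw, M, dw), s))
    delta = w[-1] - w[0]
    xi = w[-1] + w[0] - 2.0 / (b - a) * trapezoid(w, s)
    jensen = float(delta @ M @ delta) / (b - a)
    wirtinger = jensen + 3.0 * float(xi @ M @ xi) / (b - a)
    return integral, wirtinger, jensen


if __name__ == "__main__":
    integral, wirtinger, jensen = wirtinger_bounds()
    print(integral, wirtinger, jensen)
```

The extra \(\Xi\) term is nonnegative, so the Wirtinger bound is never looser than the Jensen bound; this is the usual source of the reduced conservatism claimed for criteria built on it.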

1.2 Appendix 2: Proof of Theorem 1

For simplicity, the following vector notation is used:

We choose the following LKF candidate:

where

Then, it can be deduced that for each \(\alpha \in \mathcal{S}\),

where

For \({h_1}< h(t) < {h_2}\), the following inequalities can be deduced by employing Lemmas 1 and 3:

where

In addition,

From Assumption 1, the following inequalities hold for n-dimensional positive definite diagonal matrices \({\Theta _{o}}(o = 1,2,3)\):

According to the error system (6), one has

Then, using Lemma 4, we get

Combining (24) and Lemma 2, one can easily derive that

where

and \({l_k} > 0\) are given scalars.

Let \({\hat{K}_{1j}} = \Gamma {K_{1j}}, {\hat{K}_{2j}} = \Gamma {K_{2j}}\), then, for \({t_m} \le t < {t_{m + 1}}\), by combining (18)–(25), we get

where

and \({\Sigma _{1ij}}\), \({\Sigma _2}\), \({\Sigma _3}\) have been defined in (8) and (9). It is obvious that if (8)–(11) hold, \({\mathcal{S}_{1ij}} = {\bar{\Sigma }_{1ij}} + {\varsigma _m}{\Sigma _2} < 0\) and \({\mathcal{S}_{2ij}} = {\bar{\Sigma }_{1ij}} + {\varsigma _m}{\Sigma _3} < 0\), then \(\mathcal{L}V({y_\mu },t) < 0\). As a result, the error system (6) is asymptotically stable.
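Checking only the two matrices \({\mathcal{S}_{1ij}}\) and \({\mathcal{S}_{2ij}}\) is sufficient if the bound on \(\mathcal{L}V\) depends affinely on the position of \(t\) inside the sampling interval. The displayed bound is missing from this copy, so that affine structure is an assumption here: with \(\theta \in [0,1]\) denoting the normalized position, the matrix \({\bar{\Sigma }_{1ij}} + {\varsigma _m}\left[ (1 - \theta ){\Sigma _2} + \theta {\Sigma _3} \right] = (1 - \theta ){\mathcal{S}_{1ij}} + \theta {\mathcal{S}_{2ij}}\) is a convex combination of the two endpoint matrices and is therefore negative definite whenever both are. The sketch below illustrates this with hypothetical matrices.

```python
import numpy as np


def max_eig(S):
    """Largest eigenvalue of a symmetric matrix."""
    return float(np.linalg.eigvalsh(S).max())


def vertex_check(n=4, varsigma=0.5, grid=101, seed=4):
    """Return (lambda_max(S1), lambda_max(S2), worst lambda_max in between)."""
    rng = np.random.default_rng(seed)

    def unit_sym():
        # Random symmetric matrix scaled to spectral norm 1 (hypothetical data).
        A = rng.standard_normal((n, n))
        A = (A + A.T) / 2.0
        return A / np.linalg.norm(A, 2)

    G = rng.standard_normal((n, n))
    sigma_bar = -(G @ G.T) - 2.0 * np.eye(n)   # stands in for Sigma-bar
    sigma2, sigma3 = unit_sym(), unit_sym()    # stand in for Sigma_2, Sigma_3

    S1 = sigma_bar + varsigma * sigma2         # endpoint theta = 0
    S2 = sigma_bar + varsigma * sigma3         # endpoint theta = 1
    interior = max(
        max_eig(sigma_bar + varsigma * ((1.0 - th) * sigma2 + th * sigma3))
        for th in np.linspace(0.0, 1.0, grid)
    )
    return max_eig(S1), max_eig(S2), interior


if __name__ == "__main__":
    print(vertex_check())
```

The largest eigenvalue is a convex function of a symmetric matrix, so its value along the segment never exceeds the larger of the two endpoint values.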

Additionally, \({K_{1j}} = {\Gamma ^{ - 1}}{\hat{K}_{1j}}, \, {K_{2j}} = {\Gamma ^{ - 1}}{\hat{K}_{2j}}\). This completes the proof. \(\square\)
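Once a solver returns \(\Gamma\) and \({\hat{K}_{1j}}\), \({\hat{K}_{2j}}\), the gains can be recovered numerically. A minimal sketch follows, assuming \(\Gamma\) is nonsingular; the numerical values and the function name `recover_gain` are hypothetical and do not come from the paper's examples. Solving the linear system \(\Gamma K = \hat{K}\) avoids forming \({\Gamma ^{ - 1}}\) explicitly.

```python
import numpy as np


def recover_gain(Gamma, K_hat):
    """Return K solving Gamma @ K = K_hat, i.e. K = Gamma^{-1} K_hat."""
    return np.linalg.solve(Gamma, K_hat)


if __name__ == "__main__":
    # Hypothetical solver outputs, for illustration only.
    Gamma = np.array([[2.0, 0.3],
                      [0.3, 1.5]])
    K1_hat = np.array([[-1.2, 0.4],
                       [0.1, -0.9]])
    K1 = recover_gain(Gamma, K1_hat)
    print(K1)
    print(np.allclose(Gamma @ K1, K1_hat))
```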

Cite this article

Song, X., Man, J., Song, S. et al. State estimation of T–S fuzzy Markovian generalized neural networks with reaction–diffusion terms: a time-varying nonfragile proportional retarded sampled-data control scheme. Neural Comput & Applic 32, 14639–14653 (2020). https://doi.org/10.1007/s00521-020-04817-7

DOI: https://doi.org/10.1007/s00521-020-04817-7