Abstract

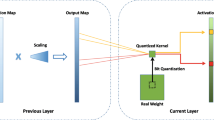

Deep neural networks (DNNs) have become ubiquitous in artificial intelligence applications, including image processing, speech processing and natural language processing. However, DNNs are both computationally and memory intensive, which makes them difficult to deploy on embedded systems with limited hardware resources and power budgets. To address this limitation, we introduce a new quantization method based on a mixed data structure, together with BSHIFT, a bit-shifting broadcast accelerator architecture. Together, they reduce the storage requirement of neural network models from 32 bits to 5 bits without affecting accuracy. We implement BSHIFT in a TSMC 16 nm technology node, and in our experiments it achieves an energy efficiency of 64 TOPS/W.
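The abstract does not spell out BSHIFT's encoding, so the sketch below only illustrates the general idea that a bit-shifting datapath suggests. If each weight is quantized to a signed power of two, multiplying an activation by that weight reduces to a shift. A 5-bit code then suffices, a 6.4× reduction relative to 32 bits. The 1-sign-bit plus 4-bit-exponent layout, the Q16 fixed-point activations and the helper names `quantize_pow2`, `dequantize` and `shift_dot` are hypothetical choices made for illustration. They are not the paper's mixed data structure or broadcast architecture.

```python
import math

# Hypothetical 5-bit code (illustrative assumption, not BSHIFT's actual format):
#   bit 4     : sign (1 = negative)
#   bits 3..0 : field f; f == 0 encodes exact zero,
#               f in 1..15 encodes magnitude 2**-(f-1), i.e. 1, 1/2, ..., 2**-14
SIGN_BIT = 0b10000
FIELD_MASK = 0b01111


def quantize_pow2(w: float) -> int:
    """Round a weight to the nearest power of two (in the log domain) and pack it into 5 bits."""
    mag = abs(w)
    if mag < 2.0 ** -15:            # too small to represent: flush to zero
        return 0
    sign = SIGN_BIT if w < 0 else 0
    k = round(-math.log2(mag))      # nearest exponent so that mag ~= 2**-k
    k = min(max(k, 0), 14)          # clamp to the representable range 0..14
    return sign | (k + 1)


def dequantize(code: int) -> float:
    """Recover the power-of-two weight represented by a 5-bit code."""
    field = code & FIELD_MASK
    if field == 0:
        return 0.0
    mag = 2.0 ** -(field - 1)
    return -mag if code & SIGN_BIT else mag


def shift_dot(codes, acts):
    """Dot product of packed weights with integer activations using only shifts and adds."""
    acc = 0
    for code, x in zip(codes, acts):
        field = code & FIELD_MASK
        if field == 0:              # zero weight contributes nothing
            continue
        term = x >> (field - 1)     # x * 2**-(field-1), rounded toward -infinity
        acc += -term if code & SIGN_BIT else term
    return acc


if __name__ == "__main__":
    weights = [0.5, -0.25, 0.0, 0.126, -1.0]
    codes = [quantize_pow2(w) for w in weights]
    # Activations 1, 3, 7, 2, 1 stored in Q16 fixed point (value * 2**16).
    acts = [1 << 16, 3 << 16, 7 << 16, 2 << 16, 1 << 16]
    print(codes)                    # [2, 19, 0, 4, 17]
    print(shift_dot(codes, acts))   # -65536, i.e. -1.0 in Q16
```

Here 0.126 is rounded to 0.125, and the shift-and-add result matches the floating-point dot product of the quantized weights. Python's `>>` on a negative integer rounds toward negative infinity, so a shifted term can differ from exact multiplication by one least-significant bit. A hardware datapath would choose its own rounding rule.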



Acknowledgements

This work is partially supported by the National Key Research and Development Program of China (under Grants 2017YFA0700902 and 2017YFB1003101), the NSF of China (under Grants 61472396, 61432016, 61473275, 61522211, 61532016, 61521092, 61502446, 61672491, 61602441, 61602446, 61732002, and 61702478), the 973 Program of China (under Grant 2015CB358800) and the National Science and Technology Major Project (2018ZX01031102).


About this article

Cite this article

Yu, Y., Zhi, T., Zhou, X. et al. BSHIFT: A Low Cost Deep Neural Networks Accelerator. Int J Parallel Prog 47, 360–372 (2019). https://doi.org/10.1007/s10766-018-00624-9


DOI: https://doi.org/10.1007/s10766-018-00624-9