Abstract

This paper studies mean maximization and variance minimization problems in finite horizon continuous-time Markov decision processes. The state and action spaces are assumed to be Borel spaces, while reward functions and transition rates are allowed to be unbounded. For the mean problem, we design a method called successive approximation, which enables us to prove the existence of a solution to the Hamilton-Jacobi-Bellman (HJB) equation, and then the existence of a mean-optimal policy under some growth and compact-continuity conditions. For the variance problem, using the first-jump analysis, we succeed in converting the second moment of the finite horizon reward to a mean of a finite horizon reward with new reward functions under suitable conditions, based on which the associated HJB equation for the variance problem and the existence of variance-optimal policies are established. Value iteration algorithms for computing mean- and variance-optimal policies are proposed.

References

Bäuerle N, Rieder U (2011) Markov decision processes with applications to finance. Universitext. Springer, Heidelberg

Boucherie RJ, van Dijk NM (2017) Markov decision processes in practice. Springer, Switzerland

Ghosh MK, Saha S (2012) Continuous-time controlled jump Markov processes on the finite horizon. In: Optimization, control, and applications of stochastic systems. Birkhäuser, New York

Guo XP, Song XY (2009) Mean-variance criteria for finite continuous-time Markov decision processes. IEEE Trans Automat Control 54:2151–2157

Guo XP, Hernández-Lerma O (2009) Continuous-time Markov decision processes. Springer, New York

Guo XP, Ye L, Yin G (2012a) A mean-variance optimization problem for discounted Markov decision processes. European J Oper Res 220:423–429

Guo XP, Huang YH, Song XY (2012b) Linear programming and constrained average optimality for general continuous-time Markov decision processes in history-dependent policies. SIAM J Control Optim 50:23–47

Guo XP, Huang XX, Huang YH (2015a) Finite horizon optimality for continuous-time Markov decision processes with unbounded transition rates. Adv Appl Probab 47:1064–1087

Guo XP, Huang XX, Zhang Y (2015b) On the first passage g-mean-variance optimality for discounted continuous-time Markov decision processes. SIAM J Control Optim 53:1406–1424

Hernández-Lerma O, Lasserre JB (1999) Further topics on discrete-time Markov control processes. Springer, New York

Hernández-Lerma O, Vega-Amaya O, Carrasco G (1999) Sample-path optimality and variance-minimization of average cost Markov control processes. SIAM J Control Optim 38:79–93

Huang YH, Guo XP (2015) Mean-variance problems for finite horizon semi-Markov decision processes. Appl Math Optim 72:233–259

Jacod J (1975) Multivariate point processes: predictable projection, Radon-Nikodym derivatives, representation of martingales. Z Wahrscheinlichkeitstheorie und Verw Gebiete 31:235–253

Kitaev MY (1985) Semi-Markov and jump Markov controllable models: average cost criterion. SIAM Theory Probab Appl 30:272–288

Mannor S, Tsitsiklis JN (2013) Algorithmic aspects of mean-variance optimization in Markov decision processes. European J Oper Res 231:645–653

Mendoza-Pérez AF, Hernández-Lerma O (2012) Variance-minimization of Markov control processes with pathwise constraints. Optimization 61:1427–1447

Miller BL (1968) Finite state continuous time Markov decision processes with a finite planning horizon. SIAM J Control 6:266–280

Piunovskiy A, Zhang Y (2011) Discounted continuous-time Markov decision processes with unbounded rates: the convex analytic approach. SIAM J Control Optim 49:2032–2061

Pliska SR (1975) Controlled jump processes. Stoch Process Appl 3:259–282

Prieto-Rumeau T, Hernández-Lerma O (2009) Variance minimization and the overtaking optimality approach to continuous-time controlled Markov chains. Math Meth Oper Res 70:527–540

Piunovskiy A, Zhang Y (2014) Discounted continuous-time Markov decision processes with unbounded rates and randomized history-dependent policies: the dynamic programming approach. 4OR-Q J Oper Res 12:49–75

Xia L (2016) Optimization of Markov decision processes under the variance criterion. Automatica 73:269–278

Yushkevich AA (1977) Controlled Markov models with countable state space and continuous time. SIAM Theory Probab Appl 22:215–235

Yushkevich AA (1980) On reducing a jump controllable Markov model to a model with discrete time. SIAM Theory Probab Appl 25:58–69

Acknowledgments

This work was supported by NSFC (No. 11471341).

Appendix

This appendix provides the proofs of the results stated in Sections 3 and 4.

A.1 Proofs of related results in Section 3

Proof of Lemma 3.1

From Lemma 2.1(b) and Assumption 3.1, it follows that

which implies that \(V^{\pi}\) is in \(\mathbb {B}_{w}([0,T] \times E)\). □

Proof of Lemma 3.2

By properties (2.3)–(2.4), we see that

Thus, \(V^{f}\) satisfies (3.1). Now, multiplying both sides of the above equality by \( e^{-{{\int }_{0}^{t}} q(v,x,f(v,x))dv}\) yields

Differentiating both sides of the above equality with respect to t, we have

Dividing both sides of the above equality by \( e^{-{{\int }_{0}^{t}} q(v,x,f(v,x))dv}\) yields

The proof is complete. □
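The three steps above follow a standard integrating-factor pattern. The following is a hedged sketch only, since the exact form of (3.1) is not reproduced here. It assumes the shorthand \(q_{x}(v) := q(v,x,f(v,x))\), the convention \(q(\{x\}|v,x,a) = -q(v,x,a)\), and a first-jump representation of the form shown in the first line; these are assumptions chosen to be consistent with the factor \( e^{-{{\int }_{0}^{t}} q(v,x,f(v,x))dv}\) used in the proof. Writing \(R(s) := r(s,x,f(s,x)) + {\int }_{E\setminus \{x\}} V^{f}(s,y)q(dy|s,x,f(s,x))\), the argument could read:

$$\begin{array}{@{}rcl@{}} V^{f}(t,x) &=& e^{-{\int}_{t}^{T} q_{x}(v)dv} g(T,x) + {\int}_{t}^{T} e^{-{\int}_{t}^{s} q_{x}(v)dv} R(s) ds, \\ e^{-{\int}_{0}^{t} q_{x}(v)dv} V^{f}(t,x) &=& e^{-{\int}_{0}^{T} q_{x}(v)dv} g(T,x) + {\int}_{t}^{T} e^{-{\int}_{0}^{s} q_{x}(v)dv} R(s) ds, \\ e^{-{\int}_{0}^{t} q_{x}(v)dv} \left[ V^{f}_{t}(t,x) - q_{x}(t) V^{f}(t,x) \right] &=& - e^{-{\int}_{0}^{t} q_{x}(v)dv} R(t) \quad \text{for a.e. } t, \\ V^{f}_{t}(t,x) + r(t,x,f(t,x)) + {\int}_{E} V^{f}(t,y) q(dy|t,x,f(t,x)) &=& 0 \quad \text{for a.e. } t. \end{array} $$

The last line uses \(-q_{x}(t)V^{f}(t,x) + {\int }_{E\setminus \{x\}} V^{f}(t,y)q(dy|t,x,f(t,x)) = {\int }_{E} V^{f}(t,y)q(dy|t,x,f(t,x))\), which holds under the assumed sign convention, and it agrees in form with the equation displayed in the proof of Lemma 4.2(b).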

Proof of Theorem 3.1

Under Assumptions 2.1 and 3.2, using an argument similar to the proof of Dynkin’s formula in Guo et al. (2015a) for denumerable states, we can show that

and

On the other hand, it follows from Lemma 2.1(c) that, for almost every s > t ≥ 0,

Thus, by Eqs. A.1–A.3, Fubini’s theorem, and integration by parts, we have

which yields the result. □

Proof of Theorem 3.2

(a) By Theorem 3.1, we have

$$\begin{array}{@{}rcl@{}} & & \mathbb{E}_{(t,x)}^{\pi} \left[ u(T,X_{T}) \right] -u(t,x)\\ &=& \mathbb{E}_{(t,x)}^{\pi} \left[{\int}_{t}^{T}\left( u_{t}(s, X_{s}) + {\int}_{E} u(s,y)q(dy|s, X_{s}, W_{s})\right)ds \right] \\ & \leq & -\mathbb{E}_{(t,x)}^{\pi} \left[{{\int}_{t}^{T}} r(s, X_{s}, W_{s})ds \right], \end{array} $$

and so

$$\begin{array}{@{}rcl@{}} \mathbb{E}_{(t,x)}^{\pi} \left[{\int}_{t}^{T} r(s, X_{s}, W_{s})ds +g(T, X_{T}) \right] \leq u(t,x) \ \ \forall (t,x)\in [0,T] \times E, \end{array} $$

which implies part (a).

(b) From Lemma 3.2, we see that \(V^{f}(t,x)\) is a solution in \(\mathbb {B}_{w}([0,T] \times E)\) to the differential equation and is differentiable in t for almost every t ∈ [0, T]. To show that \(V^{f}(t,x)\) is in \(\mathbb {C}_{w, \bar {w}}^{0,1}([0,T] \times E)\), it remains to verify that \({V^{f}_{t}}\) is \(\bar {w}\)-bounded. Indeed, by Lemma 3.2 and Assumptions 2.1, 3.1 and 3.2, we have

$$\begin{array}{@{}rcl@{}} | V^f_t(t,x)| &\leq & M w(x) + \| V^f \|_w {\int}_E w(y) |q(dy|t,x,f(t,x))| \\ &\leq & M w(x) + \| V^f \|_w \left[ {\int}_E w(y) q(dy|t,x,f(t,x)) + 2 q^{*}(x) w(x) \right] \\ &\leq & M L_1 \bar{w}(x) + \| V^f \|_w \left[ c_0 L_1 \bar{w}(x)+b_0 + 2 L_1 \bar{w}(x) \right] \\ &\leq & \bar{w}(x) \left[ M L_1 + \| V^f \|_w (c_0 L_1+ b_0 + 2 L_1) \right].\end{array} $$

Now, if u(t, x) is also a solution in \(\mathbb {C}_{w, \bar {w}}^{0,1}([0,T] \times E)\) of the differential equation, then by part (a), we must have \(V^{f}(t,x) = u(t,x)\) for all (t, x) ∈ [0, T] × E.

□

Proof of Theorem 3.3

(a) The monotonicity of the sequence \(\{u_{n}\}_{n \geq 0}\) is proved by induction. We first show that \(u_{1} \geq u_{0}\). Indeed, under Assumptions 2.1 and 3.1, for every (t, x) ∈ [0, T] × E, a direct calculation gives

$$\begin{array}{@{}rcl@{}} && u_{1}(t,x)\\ &\!\geq& - M w(x) e^{-m(x)(T-t)} - M w(x){\int}_{0}^{T-t}e^{-m(x)s}d s +{\int}_{0}^{T-t}e^{-m(x)s} \sup\limits_{a\in A(t+s,x)} \\ &&\times\left[{\int}_{E}u_{0}(t+s,y)q(dy|t+s,x,a)+m(x)u_{0}(t+s,x)\right]d s \\ &\!\geq & - M w(x) e^{-m(x)(T-t)} - M w(x){\int}_{0}^{T-t}e^{-m(x)s}d s \\ & & \!- \frac{M}{c_{0}} {\int}_{0}^{T-t}e^{-m(x)s} \left[ (c_{0}e^{c_{0}(T-t-s)}+ e^{c_{0}(T-t-s)}-1)(c_{0} w(x)+ b_{0}) \right] ds + \frac{M}{c_{0}} {\int}_{0}^{T\!-t}\\ &&\times \left[ (c_{0}e^{c_{0}(T-t-s)}+e^{c_{0}(T-t-s)}-1) (w(x)+ \frac{b_{0}}{c_{0}}) - b_{0} (T-t-s + 1)\right] d e^{-m(x)s} \\ &\!=& - M w(x) e^{-m(x)(T-t)} - M w(x){\int}_{0}^{T-t}e^{-m(x)s}d s \\ & & - \frac{M}{c_{0}} {\int}_{0}^{T-t}e^{-m(x)s} \left[ (c_{0}e^{c_{0}(T-t-s)}+ e^{c_{0}(T-t-s)}-1)(c_{0} w(x)+ b_{0}) \right] ds\\ && +\frac{M}{c_{0}} e^{-m(x)(T-t)} \left[c_{0} (w(x)+ \frac{b_{0}}{c_{0}}) - b_{0} \right] \\ && -\frac{M}{c_{0}} \left[ (c_{0}e^{c_{0}(T-t)}+e^{c_{0}(T-t)}-1) (w(x)+ \frac{b_{0}}{c_{0}}) - b_{0} (T-t + 1)\right] \\ && -\frac{M}{c_{0}} {\int}_{0}^{T-t} e^{-m(x)s} \left[ (-{c_{0}^{2}} e^{c_{0}(T-t-s)} -c_{0} e^{c_{0}(T-t-s)}) (w(x)+ \frac{b_{0}}{c_{0}}) + b_{0} \right] d s \\ &\!=& -\frac{M}{c_{0}} \left[ (c_{0}e^{c_{0}(T-t)}+e^{c_{0}(T-t)}-1) (w(x)+ \frac{b_{0}}{c_{0}}) - b_{0} (T-t + 1)\right] = u_{0}(t,x). \end{array} $$

Now, assume that \(u_{n+1} \geq u_{n}\) for some n ≥ 0. Then the monotonicity of the operator G yields \(Gu_{n+1} \geq Gu_{n}\), i.e., \(u_{n+2} \geq u_{n+1}\). Thus, by induction, \(u_{n+1} \geq u_{n}\) for all n ≥ 0. This implies the existence of the pointwise limit \(u^{*}\).

Moreover, by a calculation similar to that in the proof of \(u_{1} \geq u_{0}\) and an induction argument, one can show that

which indicates that \(u^{*}\) is in \(\mathbb {B}_{w}([0,T]\times E)\).

(b) On the one hand, by the monotonicity of G, \(Gu^{*} \geq Gu_{n} = u_{n+1}\) for all n ≥ 0. Hence, \(Gu^{*} \geq u^{*}\). On the other hand, for all \((z,x,a)\in \mathbb {K}\), the monotone convergence theorem yields

$$\begin{array}{@{}rcl@{}} & & \lim\limits_{n\rightarrow \infty}\sup\limits_{a\in A(z,x)}\left[ r(z,x,a) + m(x) {\int}_{E}u_n(z,y)Q(dy|z,x,a)\right]\\ &\geq& r(z,x,a) + m(x) {\int}_{E}u^{*}(z,y)Q(dy|z,x,a), \end{array} $$

which gives

$$\begin{array}{@{}rcl@{}} & & \lim\limits_{n\rightarrow \infty}\sup\limits_{a\in A(z,x)}\left[ r(z,x,a) + m(x) {\int}_{E}u_n(z,y)Q(dy|z,x,a)\right]\\ &\geq& \sup_{a\in A(z,x)}\left[ r(z,x,a) + m(x) {\int}_{E}u^{*}(z,y)Q(dy|z,x,a)\right]. \end{array} $$

Thus, using the monotone convergence theorem again, we obtain

$$\begin{array}{@{}rcl@{}} & & u^{*}(t,x) \\ &=& \lim\limits_{n\rightarrow\infty} u_{n + 1}(t,x) \\ &=& e^{-m(x)(T-t)}g(T,x) + \lim\limits_{n\rightarrow\infty} {\int}_{0}^{T-t}e^{-m(x)s} \sup\limits_{a\in A(t+s,x)} \left[ r(t+s,x,a) \right.\\ & & \left.+ m(x) {\int}_{E} u_n(t+s,y)Q(dy|t+s,x,a)\right]d s \\ &\geq& e^{-m(x)(T-t)}g(T,x) + {\int}_{0}^{T-t}e^{-m(x)s} \sup\limits_{a\in A(t+s,x)} \left[ r(t+s,x,a)\right.\\ && \left.+ m(x) {\int}_{E} u^{*}(t+s,y)Q(dy|t+s,x,a)\right]d s \end{array} $$

for all (t, x) ∈ [0, T] × E, which gives the reverse inequality \(u^{*} \geq Gu^{*}\). This shows that \(Gu^{*} = u^{*}\). Further, using an argument similar to that in the proof of Lemma 3.2, one can verify that \(u^{*}\) satisfies the HJB equation (3.3). The last statement follows from part (a), Eq. 3.3, and Assumptions 2.1, 3.1, and 3.2.

□
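For reference, the recursion behind the sequence in this proof can be read off from the last display of part (b). The following restates it in operator form; it is a restatement rather than a quotation of the paper's definition of G, and the identity relating \(Q\) to \(q\) in the second line is an assumption inferred from comparing that display with the first display of part (a).

$$\begin{array}{@{}rcl@{}} (Gu)(t,x) &=& e^{-m(x)(T-t)}g(T,x) + {\int}_{0}^{T-t}e^{-m(x)s} \sup\limits_{a\in A(t+s,x)} \left[ r(t+s,x,a) + m(x) {\int}_{E} u(t+s,y)Q(dy|t+s,x,a)\right]d s, \\ m(x) {\int}_{E} u(s,y)Q(dy|s,x,a) &=& {\int}_{E} u(s,y)q(dy|s,x,a) + m(x) u(s,x), \\ u_{n+1} &=& G u_{n}, \qquad n \geq 0. \end{array} $$

If \(Q(\cdot |s,x,a)\) is a nonnegative kernel, as this form suggests, then \(u \leq v\) pointwise gives \(Gu \leq Gv\), which is the monotonicity used in the induction step of part (a) and in the inequality \(Gu^{*} \geq Gu_{n}\) of part (b).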

Proof of Theorem 3.4

We prove (a) and (b) together. By Theorem 3.3, we know that \(u^{*}\) satisfies the HJB equation

This implies that for all a ∈ A(t, x),

which together with Theorem 3.2(a) yields that

Thus, \(V^{*} \leq u^{*}\).

On the other hand, under Assumption 3.3, the measurable selection theorem ensures the existence of \(f^{*} \in \mathbb {F}\) satisfying

for every (t, x) ∈ [0, T) × E. Therefore, we obtain

It then follows from Theorem 3.2(b) that \(u^{*}(t,x)=V^{f^{*}}(t,x)\leq V^{*}(t,x)\), which together with \(V^{*} \leq u^{*}\) yields that

Since \(u^{*}\) is in \(\mathbb {C}_{w, \bar {w}}^{0,1}([0,T] \times E)\), \(V^{*}\) is also in \(\mathbb {C}_{w, \bar {w}}^{0,1}([0,T] \times E)\). □
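The chain of relations in this proof can be summarized as follows. This is a hedged reconstruction, inferred from the inequality used in the proof of Theorem 3.2(a) and from the equation displayed in the proof of Lemma 4.2(b); it is not a quotation of Eq. 3.3, and the terminal condition in the first line is an assumption.

$$\begin{array}{@{}rcl@{}} u^{*}_{t}(t,x) + \sup\limits_{a\in A(t,x)} \left[ r(t,x,a) + {\int}_{E} u^{*}(t,y)q(dy|t,x,a) \right] &=& 0, \qquad u^{*}(T,x) = g(T,x), \\ u^{*}_{t}(t,x) + r(t,x,a) + {\int}_{E} u^{*}(t,y)q(dy|t,x,a) &\leq& 0 \qquad \forall a \in A(t,x), \\ u^{*}_{t}(t,x) + r(t,x,f^{*}(t,x)) + {\int}_{E} u^{*}(t,y)q(dy|t,x,f^{*}(t,x)) &=& 0, \\ V^{f^{*}}(t,x) = u^{*}(t,x) &=& V^{*}(t,x). \end{array} $$

Under this reading, the second line is what Theorem 3.2(a) needs to give \(V^{\pi } \leq u^{*}\) for every policy, the third line says that \(f^{*}\) attains the supremum, and the fourth line is the conclusion that \(f^{*}\) is mean-optimal.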

A.2 Proofs of related results in Section 4

Proof of Theorem 4.1

(a) Using the Cauchy–Schwarz inequality, Assumptions 3.1 and 4.1, and Lemma 4.1, we have

$$\begin{array}{@{}rcl@{}} & & S^{\pi}(t,x) \\ &\leq & M^{2} \mathbb{E}_{(t,x)}^{\pi}\left[{{\int}_{t}^{T}} w(X_{s})d s + w(X_{T}) \right]^{2} \\ &\leq& 2 M^{2} \mathbb{E}_{(t,x)}^{\pi}\left[{{\int}_{t}^{T}} w(X_{s})d s \right]^{2} + 2 M^{2}\mathbb{E}_{(t,x)}^{\pi}\left[w^{2}(X_{T}) \right] \\ &\leq & 2 M^{2} \mathbb{E}_{(t,x)}^{\pi}\left[ (T-t) {{\int}_{t}^{T}} w^{2}(X_{s})d s \right]+ 2M^{2} \left[ e^{c_{2}(T-t)}w^{2}(x) + \frac{b_{2}}{c_{2}}(e^{c_{2}(T-t)} -1) \right] \\ &\leq & 2 M^{2} (T-t) {{\int}_{t}^{T}} \mathbb{E}_{(t,x)}^{\pi} \left[w^{2}(X_{s}) \right]d s + 2 M^{2} e^{c_{2}(T-t)}(w^{2}(x) + \frac{b_{2}}{c_{2}})\\ &\leq & 2 M^{2} (T-t)^{2} e^{c_{2}(T-t)}(w^{2}(x) + \frac{b_{2}}{c_{2}}) + 2 M^{2} e^{c_{2}(T-t)}(w^{2}(x) + \frac{b_{2}}{c_{2}})\\ &\leq & 2M^{2} [(T-t)^{2} + 1] e^{c_{2}(T-t)}(1 + \frac{b_{2}}{c_{2}})w^{2}(x), \end{array} $$

which indicates that \(S^{\pi}\) is in \(\mathbb {B}_{w^{2}}([0,T] \times E)\) for each π ∈ Π.

(b)

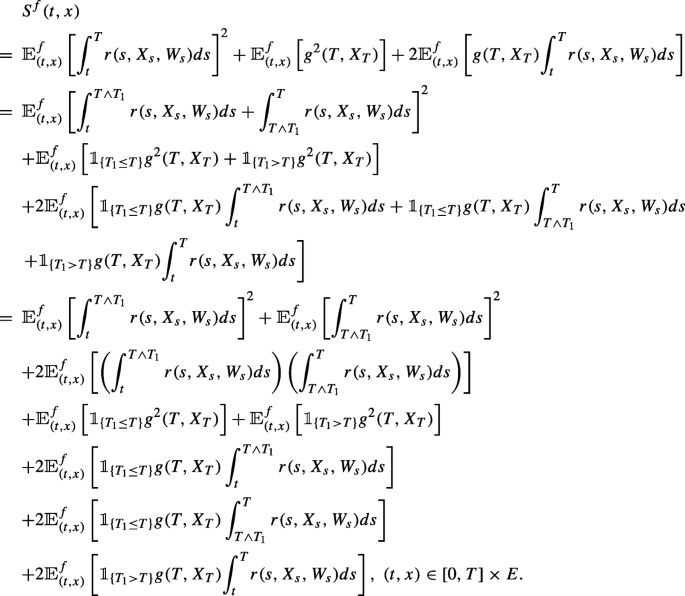

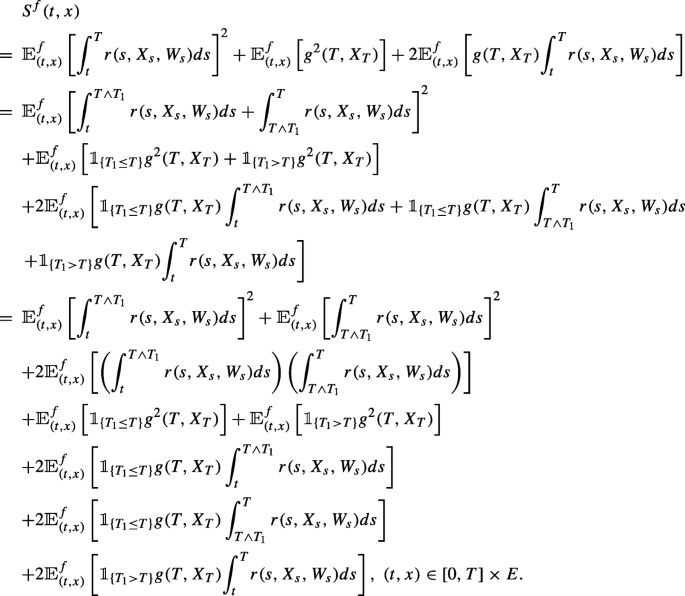

We rewrite \(S^{f}\) in the following form

For simplicity of notation, let

We next compute \(L_{1}, L_{2}, \ldots , L_{8}\). First, since \(X_{s} = Z_{0} = x\) for all \(s < T_{1}\), we obtain

Second, properties (2.3) and (2.4) yield

Third, it follows again from properties (2.3) and (2.4) that

Thus, for every (t, x) ∈ [0, T] × E, we have

Multiplying both sides of the above equality by \( e^{-{{\int }_{0}^{t}} q(v,x,f(v,x))dv}\) yields

Differentiating both sides of the above equality with respect to t, we have

Dividing both sides of the above equality by \( e^{-{{\int }_{0}^{t}} q(v,x,f(v,x))dv}\) and using Lemma 3.2 yields

Hence, we obtain the formula

Clearly, for every \(f \in \mathbb {F}_{h}\), \(S^{f}\) satisfies the differential equation

On the other hand, it is easy to verify that \(S^{f}\) is in \(\mathbb {C}_{w^{2}, \hat {w}}^{0,1}([0,T] \times E)\) under Assumptions 2.1, 3.1, 4.1 and 4.2.

Finally, note that \(C_{h}(t,x,a) := 2r(t,x,a)h(t,x)\) is \(w^{2}\)-bounded under Assumptions 2.1 and 3.1. With \(w^{2}\) and \(\hat {w}\) in lieu of w and \(\bar {w}\) in Theorem 3.2, respectively, it follows from Assumptions 4.1 and 4.2 that

is the unique solution in \(\mathbb {C}_{w^{2}, \hat {w}}^{0,1}([0,T] \times E)\) to the equation

Hence, we must have \(S^{f}(t,x)= \mathbb {E}_{(t,x)}^{f} \left [{{\int }_{t}^{T}} C_{h}(s,X_{s}, f(s,X_{s}))ds +g^{2}(T,X_{T}) \right ]\), for every (t, x) ∈ [0, T] × E and \( f \in \mathbb {F}_{h}\). □
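Two elementary inequalities carry most of the chain of estimates in part (a) of this proof. They are spelled out here as a short worked note. The first two lines are standard facts; the third line is the form that Lemma 4.1 appears to take, inferred from the third step of the display in part (a) rather than quoted from the lemma.

$$\begin{array}{@{}rcl@{}} (a+b)^{2} &\leq& 2a^{2} + 2b^{2}, \qquad a, b \in \mathbb{R}, \\ \left[{\int}_{t}^{T} 1 \cdot w(X_{s})d s \right]^{2} &\leq& \left[{\int}_{t}^{T} 1^{2} d s \right] \left[{\int}_{t}^{T} w^{2}(X_{s})d s \right] = (T-t) {\int}_{t}^{T} w^{2}(X_{s})d s, \\ \mathbb{E}_{(t,x)}^{\pi}\left[w^{2}(X_{s}) \right] &\leq& e^{c_{2}(s-t)}w^{2}(x) + \frac{b_{2}}{c_{2}}(e^{c_{2}(s-t)} -1), \qquad t \leq s \leq T. \end{array} $$

The first line splits the square of the sum of the running and terminal terms, and the second is the Cauchy–Schwarz inequality on [t, T]. If Lemma 4.1 has the stated form with \(c_{2} > 0\), each expectation is at most \(e^{c_{2}(T-t)}(w^{2}(x) + \frac{b_{2}}{c_{2}})\), and integrating over [t, T] produces the factor \((T-t)^{2}\) in the final bound.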

Proof of Lemma 4.2

(a) Using an argument similar to the proof of Lemma 8.3.7 in Hernández-Lerma and Lasserre (1999), part (a) follows from Assumption 3.3(c) and Assumption 4.3.

(b) Fix (t, x) ∈ [0, T] × E. To show that \(A_{h}(t,x)\) is compact, it suffices to prove that \(A_{h}(t,x)\) is closed, because \(A_{h}(t,x) \subset A(t,x)\) and A(t, x) is compact. Indeed, let \(\{a_{n}\} \subset A_{h}(t,x)\) be such that \(a_{n} \rightarrow a \in A(t,x)\). Then, for each n, we have

$$\begin{array}{@{}rcl@{}} h_{t}(t,x)+r(t,x,a_{n})+ {\int}_{E} h(t,y)q(dy|t,x,a_{n}) = 0. \end{array} $$

Since \(h \in \mathbb {B}_{w}([0,T] \times E)\) under Assumptions 2.1 and 3.1, by Assumption 3.3, \({\int }_{E} h(t,y) q(dy|t,x,a)\) is continuous in a ∈ A(t, x). Thus, letting n → ∞ in the above equality, we obtain

$$\begin{array}{@{}rcl@{}} h_{t}(t,x)+r(t,x,a)+ {\int}_{E} h(t,y)q(dy|t,x,a) = 0, \end{array} $$

which implies that \(a \in A_{h}(t,x)\).

□

Proof of Theorem 4.3

We prove (a) and (b) together. First, note that under Assumptions 2.1, 3.1 and 3.2, Theorem 3.2(b) implies that a policy \(f \in \mathbb {F}\) is in \(\mathbb {F}_{h}\) if and only if \(f(t,x) \in A_{h}(t,x)\) for all (t, x) ∈ [0, T) × E. Now, by Theorem 4.2, we know that \(v^{*}\) satisfies the HJB equation

Hence, for all \(f \in \mathbb {F}_{h}\) and (t, x) ∈ [0, T) × E,

Under Assumptions 4.1 and 4.2, Dynkin’s formula in Theorem 3.1 also holds for functions \(u \in \mathbb {C}_{w^{2}, \hat {w}}^{0,1}([0,T] \times E)\). Since \(v^{*}\) is in \(\mathbb {C}_{w^{2}, \hat {w}}^{0,1}([0,T] \times E)\) by Theorem 4.2, using Eq. A.4, Dynkin’s formula and Theorem 4.1 yields that

Thus, \(S^{*}(h,t,x) \geq v^{*}(t,x)\).

On the other hand, under Assumptions 2.1, 3.1, 3.3 and 4.3, the measurable selection theorem and Lemma 4.2 ensure the existence of \(f^{*}_{h} \in \mathbb {F}_{h}\) satisfying

It then follows from Theorem 4.1(b) that \(v^{*}(t,x)=S^{f^{*}_{h}}(t,x)\geq S^{*}(h,t,x)\), which together with \(S^{*}(h,t,x) \geq v^{*}(t,x)\) yields that

Since \(v^{*}\) is in \(\mathbb {C}_{w^{2}, \hat {w}}^{0,1}([0,T] \times E)\), \(S^{*}(h)\) is also in \(\mathbb {C}_{w^{2}, \hat {w}}^{0,1}([0,T] \times E)\). □

Cite this article

Huang, Y. Finite horizon continuous-time Markov decision processes with mean and variance criteria. Discrete Event Dyn Syst 28, 539–564 (2018). https://doi.org/10.1007/s10626-018-0273-1

DOI: https://doi.org/10.1007/s10626-018-0273-1