Abstract

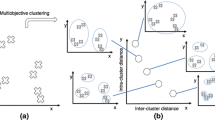

A variety of general strategies have been applied to enhance the performance of multi-objective optimization algorithms on many-objective optimization problems (those with more than three objectives). One of these strategies is to partition the solutions into clusters that cover different regions of the search space and to apply an optimizer to each region, with the aim of producing more diverse solutions and a better-distributed approximation of the Pareto front. However, the effectiveness of clustering in this context depends on several factors, including the characteristics of the objective functions. In this paper we show how the choice of clustering strategy can greatly influence the behavior of an optimizer. We investigate the relation between the characteristics of a multi-objective optimization problem and the efficiency of a given clustering combination (clustering space, metric) in solving that problem. Using the Iterated Multi-swarm (I-Multi) algorithm, a recently introduced multi-objective particle swarm optimization algorithm, as a case study, we scrutinize the impact that clustering in different spaces (variables, objectives, and a combination of both) can have on the approximations of the Pareto front. Furthermore, using two difficult multi-objective benchmark suites with problems of up to 20 objectives, we evaluate the effect of different metrics for determining the similarity between solutions during the clustering process. Our results confirm that the clustering strategy has an important effect on the behavior of multi-objective optimizers. Moreover, we present evidence that some problem characteristics can be used to select the most effective clustering strategy, significantly improving the quality of the Pareto front approximations produced by I-Multi.
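To make the notion of a clustering combination (clustering space, metric) concrete, the following is a minimal sketch and not the authors' implementation. It assumes a plain Lloyd-style k-means loop, a Minkowski-type dissimilarity with exponent `p`, and unnormalized features; all function names (`minkowski`, `features`, `cluster`) are hypothetical and chosen only for illustration. The mean-based centroid update is strictly appropriate only for the Euclidean case (`p = 2`) and is kept here for simplicity.

```python
from typing import Callable, List, Sequence, Tuple

Vector = Sequence[float]
# A solution is assumed to be a pair: (decision variables, objective values).
Solution = Tuple[Vector, Vector]


def minkowski(p: float) -> Callable[[Vector, Vector], float]:
    """Build a Minkowski-type dissimilarity.

    p = 2 is Euclidean, p = 1 is Manhattan; 0 < p < 1 gives a
    'fractional' dissimilarity, which is not a true metric.
    """
    def dist(a: Vector, b: Vector) -> float:
        return sum(abs(x - y) ** p for x, y in zip(a, b)) ** (1.0 / p)
    return dist


def features(solution: Solution, space: str) -> List[float]:
    """Project a solution onto the chosen clustering space."""
    variables, objectives = solution
    if space == "decision":
        return list(variables)
    if space == "objective":
        return list(objectives)
    if space == "both":
        # Plain concatenation; in practice the two parts may need rescaling.
        return list(variables) + list(objectives)
    raise ValueError(f"unknown clustering space: {space}")


def cluster(solutions: Sequence[Solution], k: int, space: str,
            dist: Callable[[Vector, Vector], float],
            iters: int = 20) -> List[int]:
    """Lloyd-style clustering; returns one cluster label per solution.

    Assumption: the first k points serve as initial centroids so that the
    sketch is deterministic. Real implementations usually randomize this.
    """
    points = [features(s, space) for s in solutions]
    centroids = [list(pt) for pt in points[:k]]
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: nearest centroid under the chosen dissimilarity.
        labels = [min(range(k), key=lambda j: dist(pt, centroids[j]))
                  for pt in points]
        # Update step: centroid becomes the mean of its members.
        for j in range(k):
            members = [pt for pt, lab in zip(points, labels) if lab == j]
            if members:
                centroids[j] = [sum(col) / len(members)
                                for col in zip(*members)]
    return labels


# Toy data: neighbors in decision space are far apart in objective space.
sols: List[Solution] = [
    ((0.0, 0.0), (0.0, 10.0)),
    ((0.1, 0.0), (10.0, 0.0)),
    ((5.0, 5.0), (0.1, 10.0)),
    ((5.1, 5.0), (10.0, 0.1)),
]
by_decision = cluster(sols, k=2, space="decision", dist=minkowski(2))
by_objective = cluster(sols, k=2, space="objective", dist=minkowski(1))
print(by_decision, by_objective)
```

On this toy data the two spaces yield different partitions of the same four solutions: decision-space clustering groups the first two solutions together, whereas objective-space clustering groups the first with the third. Each sub-optimizer would therefore be handed a different subset depending on the clustering space chosen.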

Additional information

Olacir R. Castro Jr. and Roberto Santana are supported by CNPq (National Council for Scientific and Technological Development, Brazil), Program Science Without Borders No. 200040/2015-4. Aurora Pozo is supported by CNPq productivity grant No. 306103/2015-0. Roberto Santana and Jose A. Lozano are supported by the Basque Government Research Groups IT-609-13 2013-2018 program and by the Spanish Ministry of Economy and Competitiveness MINECO: TIN2016-78365-R. Jose A. Lozano is also supported by the Basque Government through the BERC 2014-2017 program and by the Spanish Ministry of Economy and Competitiveness MINECO: BCAM Severo Ochoa excellence accreditation SEV-2013-0323.

Appendix

This appendix contains a summary table of the related work. It also includes tables with the ranked results per algorithm, problem, and number of objectives, together with the Kruskal–Wallis test results.

Cite this article

Castro, O.R., Pozo, A., Lozano, J.A. et al. An investigation of clustering strategies in many-objective optimization: the I-Multi algorithm as a case study. Swarm Intell 11, 101–130 (2017). https://doi.org/10.1007/s11721-017-0134-9

DOI: https://doi.org/10.1007/s11721-017-0134-9