Abstract

A set of k points that optimally summarize a distribution is called a set of k-principal points, which is a generalization of the mean from one point to multiple points and is useful especially for multivariate distributions. This paper discusses the estimation of principal points of multivariate distributions. First, an optimal estimator of principal points is derived for multivariate distributions of location-scale families. In particular, an optimal principal points estimator of a multivariate normal distribution is shown to be obtained by using principal points of a scaled multivariate t-distribution. We also study the case of multivariate location-scale-rotation families. Numerical examples are presented to compare the optimal estimators with maximum likelihood estimators.

1 Introduction

An underlying principle of many statistical methods is to find a useful summarization of a distribution (empirical or otherwise). A common approach in the realm of classification is to determine a set of points that optimally represent a distribution (e.g., cluster analysis). Mean squared error is perhaps the most common optimality criterion in practice. The set of k points that optimally represent a distribution in terms of mean squared error is called a set of k-principal points. This paper presents results on optimal estimators of principal points for a wide class of multivariate distributions, namely multivariate distributions of location-scale and location-scale-rotation families.

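Formally, suppose that \(\varvec{X}\) is a p-dimensional random vector with finite second moments. The k-principal points (Flury 1990) of the distribution of \(\varvec{X}\) are defined as a set \(\{ \varvec{\gamma }_{1}^{*},\dots ,\varvec{\gamma }_{k}^{*}\}\) that minimizes the mean squared distance (MSD)

\[ E\Big[ \min _{j=1,\dots ,k} \Vert \varvec{X}-\varvec{\gamma }_{j} \Vert ^{2} \Big] \tag{1.1} \]

over all sets \(\{ \varvec{\gamma }_{1},\dots ,\varvec{\gamma }_{k}\} \subset \mathfrak {R}^p\).
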
The 1-principal point is the mean \(E[\varvec{X}]\) and represents the optimal 1-point summarization of a distribution in terms of mean squared error. A set of k-principal points with \(k\ge 2\) can be seen as a generalization of the mean of the distribution from one point to multiple points.
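
The MSD (1.1) can be approximated by simulation. The short Python sketch below (NumPy assumed; the helper name `monte_carlo_msd` is ours, not from the paper) averages the squared distance to the nearest candidate point over draws of \(X\sim N(0,1)\). It illustrates that the mean, the 1-principal point, has MSD equal to the variance, and that the 2-principal points \(\pm \sqrt{2/\pi }\) of N(0,1) (a standard result) give the smaller MSD \(1-2/\pi \).

```python
import numpy as np


def monte_carlo_msd(points, sample):
    """Approximate the MSD (1.1), E[min_j ||X - gamma_j||^2], by averaging over the
    rows of `sample`, which are assumed to be independent draws of X."""
    points = np.atleast_2d(np.asarray(points, dtype=float))     # shape (k, p)
    sq_dist = ((sample[:, None, :] - points[None, :, :]) ** 2).sum(axis=2)
    return float(sq_dist.min(axis=1).mean())                     # nearest point, then average


rng = np.random.default_rng(0)
x = rng.standard_normal((200_000, 1))                            # X ~ N(0, 1), so p = 1

msd_mean = monte_carlo_msd([[0.0]], x)                           # 1-principal point = mean
c = np.sqrt(2 / np.pi)
msd_two = monte_carlo_msd([[-c], [c]], x)                        # 2-principal points +/- c
print(msd_mean, msd_two, 1 - 2 / np.pi)
```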

Theoretical aspects and applications of principal points of univariate and multivariate distributions have been studied in many papers. Tarpey et al. (1995) showed that when \(\{ \varvec{\gamma }_{1}^{*},\dots ,\varvec{\gamma }_{k}^{*}\}\) is a set of k-principal points of the distribution of \(\varvec{X}\), it holds for any \(a\in \mathfrak {R}\), \(\varvec{b}\in \mathfrak {R}^p\), and \(\varvec{G}\in O_p\) that \(\{ a\varvec{G}'\varvec{\gamma }_{1}^{*}+\varvec{b},\dots ,a\varvec{G}'\varvec{\gamma }_{k}^{*}+\varvec{b} \}\) is a set of k-principal points of the distribution of \(a\varvec{G}'\varvec{X}+\varvec{b}\), where \(O_p\) denotes the set of \(p\times p\) orthogonal matrices. Patterns of principal points and their symmetry and uniqueness have been discussed extensively in the literature, e.g., Trushkin (1982), Li and Flury (1995), Tarpey (1995), Tarpey (1998), Yamamoto and Shinozaki (2000a), Gu and Mathew (2001), and Mease and Nair (2006). Connections between principal points and principal subspaces (i.e., subspaces spanned by the first several principal eigenvectors of the covariance matrix) have also been investigated (Tarpey et al. 1995; Yamamoto and Shinozaki 2000b; Kurata 2008; Kurata and Qiu 2011; Matsuura and Kurata 2010, 2011, 2014; Tarpey and Loperfido 2015). Principal points have been studied mainly for continuous distributions, but also for discrete distributions (Gu and Mathew 2001; Yamashita et al. 2017; Yamashita and Goto 2017) and for random functions (Tarpey and Kinateder 2003; Shimizu and Mizuta 2007; Bali and Boente 2009). In medical research, principal points have been applied to classification, e.g., to distinguish between drug and placebo responses (Tarpey et al. 2003, 2010; Petkova and Tarpey 2009; Tarpey and Petkova 2010). Ruwet and Haesbroeck (2013) studied the performance of classification using 2-principal points.
Vector quantization, studied in the signal processing literature, is closely related to principal points and shares much of the same mathematics (Gersho and Gray 1992; Graf and Luschgy 2000).
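
The equivariance result of Tarpey et al. (1995) has an exact empirical counterpart that is easy to check. In the sketch below (NumPy assumed; the function name `lloyd` is ours), Lloyd's k-means iterations, which return self-consistent points of an empirical distribution and may stop at a local optimum, are run on a sample and on its image under \(\varvec{x}\mapsto a\varvec{G}'\varvec{x}+\varvec{b}\), started from correspondingly transformed initial points. The two outputs agree up to the same transformation. The values of a, b, and G are arbitrary illustrative choices.

```python
import numpy as np


def lloyd(sample, init, max_iter=200):
    """Lloyd's (k-means) iterations on the empirical distribution of `sample`.
    Returns a self-consistent set of points, which may be only a local optimum."""
    centers = np.array(init, dtype=float)
    labels = None
    for _ in range(max_iter):
        d2 = ((sample[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        new_labels = d2.argmin(axis=1)
        if labels is not None and np.array_equal(new_labels, labels):
            break                                   # partition unchanged: converged
        labels = new_labels
        centers = np.array([sample[labels == j].mean(axis=0) if np.any(labels == j)
                            else centers[j] for j in range(len(centers))])
    return centers


rng = np.random.default_rng(1)
x = rng.standard_normal((2_000, 2)) * np.array([2.0, 1.0])
init = x[:4].copy()

# Transformation X -> a G'X + b; with observations stored as rows this is x -> a x G + b.
a, b, theta = 1.5, np.array([3.0, -1.0]), 0.7
G = np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])

pts = lloyd(x, init)
pts_t = lloyd(a * x @ G + b, a * init @ G + b)
print(np.allclose(pts_t, a * pts @ G + b))
```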

In addition to their theoretical properties, applications of principal points typically require methods for estimating them. If \(\varvec{X}\) is assumed to belong to some parametric family, we may estimate the parameters of \(\varvec{X}\) from a random sample and use these parameter estimates to obtain estimates of the k-principal points of \(\varvec{X}\). A simple alternative is to estimate k-principal points by computing the k-principal points of the empirical distribution of the random sample, which gives a nonparametric estimator of k-principal points. However, as others have shown (Flury 1993; Tarpey 1997, 2007; Stampfer and Stadlober 2002), the maximum likelihood estimator of k-principal points (i.e., the k-principal points of the distribution in which the unknown parameters are replaced by their maximum likelihood estimates obtained from the random sample) performs better than the nonparametric estimator.

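Recently, as an alternative to the maximum likelihood estimator, Matsuura et al. (2015) proposed a minimum expected mean squared distance (EMSD) estimator of k-principal points that minimizes

\[ E\Big[ \min _{j=1,\dots ,k} \Vert \varvec{X}-\hat{\varvec{\gamma }}_{j} \Vert ^{2} \Big] , \tag{1.2} \]

where \(\{ \hat{\varvec{\gamma }}_{1},\dots ,\hat{\varvec{\gamma }}_{k} \}\) denotes an estimator of k-principal points of the distribution of \(\varvec{X}\) computed from a random sample that is independent of \(\varvec{X}\), and the expectation is taken with respect to both \(\varvec{X}\) and the sample.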
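
To make the EMSD (1.2) concrete, the following Monte Carlo sketch (NumPy assumed; the function name is ours) estimates it for the maximum likelihood estimator \(\hat{\mu }\pm \sqrt{2/\pi }\,\hat{\sigma }\) of the 2-principal points of a univariate normal distribution. We simulate with \(\mu =0\) and \(\sigma =1\), so values are in units of \(\sigma ^2\). For any given sample, the estimated points cannot have smaller MSD than the true principal points, so each EMSD exceeds the known-parameter MSD \(1-2/\pi \); the gap shrinks as n grows.

```python
import numpy as np


def emsd_mle_two_points(n, reps, rng):
    """Monte Carlo EMSD (1.2) of the ML estimator of the 2-principal points of N(mu, sigma^2), p = 1.
    Simulated with mu = 0 and sigma = 1, so the value is in units of sigma^2."""
    samples = rng.standard_normal((reps, n))        # one sample of size n per replicate
    x_new = rng.standard_normal(reps)               # X, independent of the sample
    mu_hat = samples.mean(axis=1)
    sigma_hat = samples.std(axis=1)                 # ddof=0 gives the MLE of sigma
    c = np.sqrt(2 / np.pi)                          # 2-principal points of N(0, 1) are +/- c
    gamma_hat = np.stack([mu_hat - c * sigma_hat, mu_hat + c * sigma_hat], axis=1)
    return float(((x_new[:, None] - gamma_hat) ** 2).min(axis=1).mean())


rng = np.random.default_rng(2)
emsd = {n: emsd_mle_two_points(n, 200_000, rng) for n in (2, 5, 20)}
print(emsd, "MSD with known parameters:", 1 - 2 / np.pi)
```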

With point estimation, optimality is typically measured using a loss function that measures how “close” parameter estimates are to the true parameter values, e.g., a mean squared error loss of the form \(E[\Vert \hat{\varvec{\theta }}-\varvec{\theta } \Vert ^2]\), where \(\varvec{\theta }\) is a parameter and \(\hat{\varvec{\theta }}\) is an estimator of \(\varvec{\theta }\). However, when the goal is to estimate a set of points that best represents the distribution generating the data, a natural criterion, considered both in this paper and in Matsuura et al. (2015), is the EMSD (1.2), which measures the expected squared distance between the random vector and the nearest of the estimated points.

Matsuura et al. (2015) studied the minimum EMSD estimation of principal points, mainly in the case of univariate distributions of location-scale families. Although they discussed an extension to the multivariate case, their results are restricted to the case where principal points are collinear (i.e., principal points are on a line).

This paper presents a minimum EMSD estimator of k-principal points of multivariate distributions of location-scale families. In particular, we show that a minimum EMSD estimator of k-principal points of a multivariate normal distribution is obtained by using k-principal points of a scaled multivariate t-distribution, which corresponds to the result on the univariate case given by Matsuura et al. (2015). In addition, we give an extension to the case of multivariate distributions of location-scale-rotation families. Several numerical examples are also presented.

2 Minimum EMSD estimator of principal points for multivariate location-scale families

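Suppose that the distribution of \(\varvec{X}\) belongs to a location-scale family: its probability density function (pdf) is of the form

\[ f(\varvec{x};\varvec{\mu },\sigma ) = \frac{1}{\sigma ^{p}}\, g\Big( \frac{\varvec{x}-\varvec{\mu }}{\sigma }\Big) , \tag{2.1} \]

where \(\varvec{\mu }\in \mathfrak {R}^p\) is a location parameter and \(\sigma >0\) is a scale parameter.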

Let \(\varvec{\delta }_{1}^{*},\dots ,\varvec{\delta }_{k}^{*}\) be k-principal points of the distribution with pdf \(g(\varvec{x})\) (i.e., the distribution of \(\frac{\varvec{X}-\varvec{\mu }}{\sigma }\)). Then, k-principal points \(\varvec{\gamma }_{1}^{*},\dots ,\varvec{\gamma }_{k}^{*}\) of the distribution of \(\varvec{X}\) are given by

\[ \varvec{\gamma }_{j}^{*} = \varvec{\mu } + \sigma \varvec{\delta }_{j}^{*}, \quad j=1,\dots ,k. \tag{2.2} \]

Suppose that the values of \(\varvec{\mu }\) and \(\sigma \) are unknown and the function g is known (hence, the covariance matrix of \(\varvec{X}\) is known up to a multiplicative constant). Let \(\varvec{X}_1,\dots ,\varvec{X}_n\) be a random sample of size n drawn from the distribution of \(\varvec{X}\) and let \(\hat{\varvec{\mu }}\) and \(\hat{\sigma }\) be the maximum likelihood estimators of \(\varvec{\mu }\) and \(\sigma \) obtained from the sample \(\varvec{X}_1,\dots ,\varvec{X}_n\), respectively. Throughout this paper, we assume that the maximum likelihood estimators exist.

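Since k-principal points of the distribution of \(\varvec{X}\) are expressed as (2.2), we consider a principal points estimator of the following form:

\[ \hat{\varvec{\gamma }}_{j} = \hat{\varvec{\mu }} + \hat{\sigma }\varvec{a}_{j}, \quad j=1,\dots ,k, \]

where \(\varvec{a}_{1},\dots ,\varvec{a}_{k}\in \mathfrak {R}^p\) are constant vectors.
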
If we set \(\varvec{a}_{j}=\varvec{\delta }_{j}^{*}, \ j=1,\dots ,k\), then \(\{ \hat{\varvec{\gamma }}_{1},\dots ,\hat{\varvec{\gamma }}_{k} \}\) is the maximum likelihood estimator of k-principal points of the distribution of \(\varvec{X}\).

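Here, we instead seek the set of constants \(\{ \varvec{a}_{1},\dots ,\varvec{a}_{k} \}\) that minimizes the EMSD (1.2) of this estimator, namely

\[ E\Big[ \min _{j=1,\dots ,k} \Vert \varvec{X}-\hat{\varvec{\mu }}-\hat{\sigma }\varvec{a}_{j} \Vert ^{2} \Big] . \tag{2.3} \]
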
As preparation, let \(\varvec{X}\) and \(\varvec{X}_1,\dots ,\varvec{X}_n\) be independent and identically distributed with pdf (2.1), and define \(\varvec{U}\equiv \frac{\varvec{X}-\hat{\varvec{\mu }}}{\sigma }\) and \(W \equiv \frac{\hat{\sigma }}{\sigma }\). A straightforward extension of Antle and Bain (1969) shows that the joint distribution of (\(\varvec{U},W\)) does not depend on the unknown parameters \(\varvec{\mu }\) and \(\sigma \). We also let \(\psi (\varvec{u}|W)\) be the pdf of \(\varvec{U}|W\) (the pdf of the conditional distribution of \(\varvec{U}\) given W). Then, the following theorem presents a minimum EMSD estimator of k-principal points of the distribution of \(\varvec{X}\).

Theorem 1

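Let

\[ h(\varvec{z}) = \frac{E[ W^{p+2} \psi (\varvec{z}W|W) ]}{E[W^{2}]}, \quad \varvec{z}\in \mathfrak {R}^p. \tag{2.4} \]
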
Then, the values of \(\{ \varvec{a}_1,\dots ,\varvec{a}_k\}\) minimizing (2.3) are given by k-principal points of the distribution with pdf (2.4).

Proof

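First, we can easily show that the function (2.4) is a pdf: in fact, the change of variables \(\varvec{u}=\varvec{z}W\) (so that \(d\varvec{u}=W^{p}d\varvec{z}\)) gives

\[ \int _{\mathfrak {R}^p} h(\varvec{z})\,d\varvec{z} = \frac{E\big[ W^{2}\int \psi (\varvec{z}W|W)\,W^{p}\,d\varvec{z}\big] }{E[W^{2}]} = \frac{E\big[ W^{2}\int \psi (\varvec{u}|W)\,d\varvec{u}\big] }{E[W^{2}]} = 1. \]

Then, it follows that

\[ \begin{aligned} E\Big[ \min _{j} \Vert \varvec{X}-\hat{\varvec{\mu }}-\hat{\sigma }\varvec{a}_{j} \Vert ^{2} \Big] &= \sigma ^{2} E\Big[ \min _{j} \Vert \varvec{U}-W\varvec{a}_{j} \Vert ^{2} \Big] = \sigma ^{2} E\Big[ W^{2}\min _{j} \Big\Vert \frac{\varvec{U}}{W}-\varvec{a}_{j} \Big\Vert ^{2} \Big] \\ &= \sigma ^{2} E\Big[ \int W^{2}\min _{j} \Big\Vert \frac{\varvec{u}}{W}-\varvec{a}_{j} \Big\Vert ^{2} \psi (\varvec{u}|W)\,d\varvec{u}\Big] \\ &= \sigma ^{2} \int E\big[ W^{p+2}\psi (\varvec{z}W|W)\big] \min _{j} \Vert \varvec{z}-\varvec{a}_{j} \Vert ^{2}\,d\varvec{z} \\ &= \sigma ^{2} E[W^{2}] \int h(\varvec{z}) \min _{j} \Vert \varvec{z}-\varvec{a}_{j} \Vert ^{2}\,d\varvec{z} = \sigma ^{2} E[W^{2}]\, E\Big[ \min _{j} \Vert \varvec{Z}-\varvec{a}_{j} \Vert ^{2} \Big] , \end{aligned} \]

where in the last line we let \(\varvec{Z}\) be a p-dimensional random vector with pdf (2.4). Hence, we see that the values of \(\{ \varvec{a}_1,\dots ,\varvec{a}_k \}\) minimizing (2.3) are given by k-principal points of the distribution with pdf (2.4). \(\square \)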

We note that since the joint distribution of \(\varvec{U}\equiv \frac{\varvec{X}-\hat{\varvec{\mu }}}{\sigma }\) and \(W \equiv \frac{\hat{\sigma }}{\sigma }\) does not depend on the unknown parameters \(\varvec{\mu }\) and \(\sigma \), k-principal points of the distribution with pdf (2.4) also do not depend on the unknown parameters \(\varvec{\mu }\) and \(\sigma \). This theorem is an extension of Matsuura et al. (2015, Theorem 3), which gave a minimum EMSD estimator of principal points of univariate distributions of location-scale families. In fact, if \(p=1\), then \(h(\varvec{z})=\frac{E[ W^{p+2} \psi (\varvec{z}W|W) ]}{E[W^{2}]}\) reduces to an equivalent form of h(z) given in Matsuura et al. (2015, Theorem 3).
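
The proof of Theorem 1 also suggests a simulation route to the constants \(\varvec{a}_j\): because \(E[W^2\min _j\Vert \varvec{U}/W-\varvec{a}_j\Vert ^2]=E[W^2]\int h(\varvec{z})\min _j\Vert \varvec{z}-\varvec{a}_j\Vert ^2d\varvec{z}\), minimizing a weighted k-means criterion over simulated draws of \(\varvec{Z}=\varvec{U}/W\) with weights \(W^2\) approximates the k-principal points of pdf (2.4). The sketch below (NumPy assumed; all names are ours) illustrates this for the normal model with known \(\varvec{\Psi }\), where \((\varvec{U},W)\) is easy to simulate; any location-scale family whose maximum likelihood estimators can be computed could be handled the same way. Lloyd's iterations return a local optimum, so several restarts are advisable in practice.

```python
import numpy as np


def simulate_u_w(n, psi, reps, rng):
    """Draw `reps` copies of (U, W) for N(mu, sigma^2 Psi) with Psi known.
    Their joint law does not depend on mu and sigma, so we simulate with mu = 0, sigma = 1."""
    p = psi.shape[0]
    chol = np.linalg.cholesky(psi)
    psi_inv = np.linalg.inv(psi)
    sample = rng.standard_normal((reps, n, p)) @ chol.T      # X_1, ..., X_n in each replicate
    x_new = rng.standard_normal((reps, p)) @ chol.T          # X, independent of the sample
    mu_hat = sample.mean(axis=1)
    dev = sample - mu_hat[:, None, :]
    quad = np.einsum("rip,pq,riq->r", dev, psi_inv, dev)
    sigma_hat = np.sqrt(quad / (n * p))                      # ML estimator of sigma
    return x_new - mu_hat, sigma_hat                         # U and W (sigma = 1)


def weighted_lloyd(z, weights, init, max_iter=500):
    """Lloyd iterations for the weighted criterion sum_i w_i min_j ||z_i - a_j||^2."""
    centers = np.array(init, dtype=float)
    for _ in range(max_iter):
        labels = ((z[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2).argmin(axis=1)
        new = np.array([np.average(z[labels == j], axis=0, weights=weights[labels == j])
                        if np.any(labels == j) else centers[j] for j in range(len(centers))])
        if np.allclose(new, centers):
            break
        centers = new
    return centers


rng = np.random.default_rng(3)
n, psi = 5, np.diag([4.0, 1.0])
u, w = simulate_u_w(n, psi, 50_000, rng)
z, weights = u / w[:, None], w ** 2                 # Z = U / W, weighted by W^2
a_hat = weighted_lloyd(z, weights, init=z[:4])      # approximate minimizers of (2.3)
```

For the normal model, Proposition 2 below gives the target distribution in closed form, so this route mainly serves as a cross-check or for families in which h has no convenient closed form.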

Example

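(Multivariate normal distribution). Let \(\varvec{X}\) be distributed as \(N(\varvec{\mu },\sigma ^{2}\varvec{\Psi })\), where \(\varvec{\mu }\) and \(\sigma \) are unknown and \(\varvec{\Psi }\) is known. Let \(\varvec{X}_1,\dots ,\varvec{X}_n\) be a random sample of size n drawn from \(N(\varvec{\mu },\sigma ^{2}\varvec{\Psi })\). Then, the maximum likelihood estimators of \(\varvec{\mu }\) and \(\sigma \) are given as follows:

\[ \hat{\varvec{\mu }} = \bar{\varvec{X}} = \frac{1}{n}\sum _{i=1}^{n}\varvec{X}_i, \qquad \hat{\sigma } = \sqrt{\frac{\sum _{i=1}^{n}(\varvec{X}_i-\bar{\varvec{X}})'\varvec{\Psi }^{-1}(\varvec{X}_i-\bar{\varvec{X}})}{np}}. \]
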
Let

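Then, we see from the following proposition and Theorem 1 that the values of \(\{ \varvec{a}_1,\dots ,\varvec{a}_k \}\) minimizing (2.3) are given by k-principal points of \(\sqrt{\frac{(n+1)p}{(n-1)p+2}}\varvec{t}_{(n-1)p+2}(\varvec{0},\varvec{\Psi })\), where \(\varvec{t}_{\nu }(\varvec{0},\varvec{\Psi })\) is the multivariate t-distribution with \(\nu \) degrees of freedom, location vector \(\varvec{0}\), and scale matrix \(\varvec{\Psi }\), with pdf

\[ f_{\nu }(\varvec{z}) = \frac{\Gamma \big( \frac{\nu +p}{2}\big) }{\Gamma \big( \frac{\nu }{2}\big) (\nu \pi )^{p/2}|\varvec{\Psi }|^{1/2}} \Big( 1+\frac{\varvec{z}'\varvec{\Psi }^{-1}\varvec{z}}{\nu }\Big) ^{-\frac{\nu +p}{2}}. \]
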
Proposition 2

Let \(\varvec{X}\) and \(\varvec{X}_1,\dots ,\varvec{X}_n\) be independent and identically distributed as \(N(\varvec{\mu },\sigma ^{2}\varvec{\Psi })\). Let \(\hat{\varvec{\mu }}=\bar{\varvec{X}}={1\over n}\sum _{i=1}^{n}{\varvec{X}_i}\) and \(\hat{\sigma }=\sqrt{\frac{\sum _{i=1}^{n}(\varvec{X}_i-\bar{\varvec{X}})'\varvec{\Psi }^{-1}(\varvec{X}_i-\bar{\varvec{X}})}{np}}\). Define \(\varvec{U}\equiv \frac{\varvec{X}-\hat{\varvec{\mu }}}{\sigma }\) and \(W \equiv \frac{\hat{\sigma }}{\sigma }\). Let \(\psi (\varvec{u}|W)\) be the pdf of \(\varvec{U}|W\). Then, the function (2.4) is the pdf of \(\sqrt{\frac{(n+1)p}{(n-1)p+2}}\varvec{t}_{(n-1)p+2}(\varvec{0},\varvec{\Psi })\).

Proof

It is well known that \(\hat{\varvec{\mu }} \sim N(\varvec{\mu },\frac{\sigma ^{2}}{n}\varvec{\Psi })\), \(\frac{np\hat{\sigma }^2}{\sigma ^{2}} \sim \chi ^2((n-1)p)\), and \(\hat{\varvec{\mu }}\) and \(\hat{\sigma }\) are independent. Hence, it holds that \(\varvec{U} \sim N(\varvec{0},\frac{n+1}{n}\varvec{\Psi })\), \(\sqrt{np}W \sim \chi ((n-1)p)\), and \(\varvec{U}\) and W are independent. We see that \(\varvec{U}|W \sim N(\varvec{0},\frac{n+1}{n}\varvec{\Psi })\) and

\[ \psi (\varvec{u}|W) = \frac{1}{(2\pi )^{p/2}\big| \frac{n+1}{n}\varvec{\Psi }\big| ^{1/2}} \exp \Big( -\frac{n}{2(n+1)}\varvec{u}'\varvec{\Psi }^{-1}\varvec{u}\Big) . \]

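We also see that \(E[W^2] = \frac{(n-1)p}{np}=\frac{n-1}{n}\) and the pdf of \(\sqrt{np}W\) is

\[ \frac{v^{(n-1)p-1}e^{-v^{2}/2}}{2^{(n-1)p/2-1}\Gamma \big( \frac{(n-1)p}{2}\big) }, \quad v>0. \]
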
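Hence,

\[ h(\varvec{z}) = \frac{E[ W^{p+2} \psi (\varvec{z}W|W) ]}{E[W^{2}]} = \frac{\Gamma \big( \frac{np+2}{2}\big) }{\Gamma \big( \frac{(n-1)p+2}{2}\big) \{ (n+1)p\pi \} ^{p/2}|\varvec{\Psi }|^{1/2}} \Big( 1+\frac{\varvec{z}'\varvec{\Psi }^{-1}\varvec{z}}{(n+1)p}\Big) ^{-\frac{np+2}{2}}. \tag{2.5} \]
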
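We note that the pdf of \(\varvec{t}_{(n-1)p+2}(\varvec{0},\varvec{\Psi })\) is

\[ \frac{\Gamma \big( \frac{np+2}{2}\big) }{\Gamma \big( \frac{(n-1)p+2}{2}\big) \{ ((n-1)p+2)\pi \} ^{p/2}|\varvec{\Psi }|^{1/2}} \Big( 1+\frac{\varvec{z}'\varvec{\Psi }^{-1}\varvec{z}}{(n-1)p+2}\Big) ^{-\frac{np+2}{2}}, \]

and the pdf of \(\sqrt{\frac{(n+1)p}{(n-1)p+2}}\varvec{t}_{(n-1)p+2}(\varvec{0},\varvec{\Psi })\) is given as

\[ \frac{\Gamma \big( \frac{np+2}{2}\big) }{\Gamma \big( \frac{(n-1)p+2}{2}\big) \{ (n+1)p\pi \} ^{p/2}|\varvec{\Psi }|^{1/2}} \Big( 1+\frac{\varvec{z}'\varvec{\Psi }^{-1}\varvec{z}}{(n+1)p}\Big) ^{-\frac{np+2}{2}}. \]
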
Thus, we see that (2.5) is the pdf of \(\sqrt{\frac{(n+1)p}{(n-1)p+2}}\varvec{t}_{(n-1)p+2}(\varvec{0},\varvec{\Psi })\). \(\square \)
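
Proposition 2 can also be checked numerically. The sketch below (Python standard library only; the function names are ours) evaluates (2.4) by a midpoint rule over the chi density of \(\sqrt{np}W\) and compares the result with the closed-form density of the scaled t-distribution. Both depend on \(\varvec{z}\) only through \(q=\varvec{z}'\varvec{\Psi }^{-1}\varvec{z}\) and on \(\varvec{\Psi }\) only through its determinant, so those are the inputs; the demonstration uses \(|\varvec{\Psi }|=4\), as for \(\varvec{\Psi }=\mathrm{diag}(2^2,1^2)\).

```python
import math


def chi_pdf(v, m):
    """Density of the chi distribution with m degrees of freedom (law of sqrt(np) W)."""
    return math.exp((m - 1) * math.log(v) - v * v / 2
                    - (m / 2 - 1) * math.log(2) - math.lgamma(m / 2))


def h_by_quadrature(q, n, p, det_psi, grid=20_000):
    """Evaluate (2.4), E[W^{p+2} psi(zW | W)] / E[W^2], by the midpoint rule over
    v = sqrt(np) W, where q = z' Psi^{-1} z."""
    m = (n - 1) * p
    c = (n + 1) / n                         # U | W ~ N(0, c Psi)
    v_max = math.sqrt(m) + 12.0             # the chi density is negligible beyond this
    dv = v_max / grid
    total = 0.0
    for i in range(grid):
        v = (i + 0.5) * dv
        w = v / math.sqrt(n * p)
        normal_pdf = (2 * math.pi * c) ** (-p / 2) / math.sqrt(det_psi) * math.exp(-w * w * q / (2 * c))
        total += w ** (p + 2) * normal_pdf * chi_pdf(v, m) * dv
    return total / ((n - 1) / n)            # divide by E[W^2] = (n - 1) / n


def scaled_t_pdf(q, n, p, det_psi):
    """Density of sqrt((n+1)p/((n-1)p+2)) * t_{(n-1)p+2}(0, Psi) at z, with q = z' Psi^{-1} z."""
    nu = (n - 1) * p + 2
    s2 = (n + 1) * p / nu                   # squared scale factor
    log_const = (math.lgamma((nu + p) / 2) - math.lgamma(nu / 2)
                 - (p / 2) * math.log(nu * math.pi * s2) - 0.5 * math.log(det_psi))
    return math.exp(log_const - (nu + p) / 2 * math.log1p(q / (s2 * nu)))


for n, p, q in [(2, 2, 0.5), (5, 2, 3.0), (3, 1, 1.0), (10, 3, 0.0)]:
    print(n, p, q, h_by_quadrature(q, n, p, 4.0), scaled_t_pdf(q, n, p, 4.0))
```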

Since the covariance matrix of \(\varvec{t}_{\nu }(\varvec{0},\varvec{\Psi })\) is \(\frac{\nu }{\nu -2}\varvec{\Psi }\) for \(\nu >2\), and since \(\frac{(n+1)p}{(n-1)p+2}\cdot \frac{(n-1)p+2}{(n-1)p}=\frac{n+1}{n-1}\), we see that, for any dimension p of the distribution, the minimum EMSD estimator is based on k-principal points of a scaled multivariate t-distribution with mean \(\varvec{0}\) and covariance matrix \(\frac{n+1}{n-1}\varvec{\Psi }\). We also see that, as the sample size n goes to infinity, this scaled t-distribution approaches \(N(\varvec{0},\varvec{\Psi })\), so the constants of the minimum EMSD estimator tend to the k-principal points of \(N(\varvec{0},\varvec{\Psi })\). These results correspond to the results of Matsuura et al. (2015, Section 4.2) that gave a minimum EMSD estimator of the best k collinear points.
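
The covariance statement reduces to an identity in n and p that can be checked with exact rational arithmetic. In the sketch below (standard library only; the function name is ours), the covariance of the scaled t-distribution is returned as a multiple of \(\varvec{\Psi }\).

```python
from fractions import Fraction


def scaled_t_cov_factor(n, p):
    """Covariance of sqrt((n+1)p/nu) * t_nu(0, Psi), nu = (n-1)p + 2, as a multiple of Psi.
    Uses Cov[t_nu(0, Psi)] = nu / (nu - 2) * Psi (valid because nu > 2 for n >= 2)."""
    nu = (n - 1) * p + 2
    squared_scale = Fraction((n + 1) * p, nu)
    return squared_scale * Fraction(nu, nu - 2)


for n in (2, 3, 5, 7, 10):
    print(n, scaled_t_cov_factor(n, 2), Fraction(n + 1, n - 1))
```

For \(p=1\) the squared scale factor \(\frac{(n+1)p}{(n-1)p+2}\) equals 1, so the target distribution is simply \(\varvec{t}_{n+1}(\varvec{0},\varvec{\Psi })\).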

Numerical example

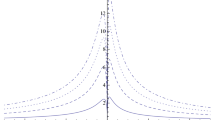

We assume that \(\varvec{X}\sim N\left( \varvec{\mu },\sigma ^{2} {2^2 \ 0\atopwithdelims ()\ 0 \ \ 1^2} \right) \) and consider the case in which 4-principal points of the distribution of \(\varvec{X}\) are estimated from a random sample of size n. The actual 4-principal points of \(\varvec{X}\) are expressed by

and the MSD (1.1) value is \(1.4180\sigma ^{2}\).

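Here, we suppose that \(\varvec{\mu }\) and \(\sigma \) are unknown and compare the maximum likelihood estimator and the minimum EMSD estimator of 4-principal points. The maximum likelihood estimator of 4-principal points is simply given by

\[ \hat{\varvec{\gamma }}_{j} = \hat{\varvec{\mu }} + \hat{\sigma }\varvec{\delta }_{j}^{*}, \quad j=1,\dots ,4, \]

where \(\varvec{\delta }_{1}^{*},\dots ,\varvec{\delta }_{4}^{*}\) are the 4-principal points of \(N(\varvec{0},\varvec{\Psi })\),
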
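while the minimum EMSD estimator of 4-principal points is expressed by

\[ \hat{\varvec{\gamma }}_{j} = \hat{\varvec{\mu }} + \hat{\sigma }\varvec{a}_{j}^{*}, \quad j=1,\dots ,4, \]
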
where \(\varvec{a}_{1}^{*},\varvec{a}_{2}^{*},\varvec{a}_{3}^{*},\varvec{a}_{4}^{*}\) are 4-principal points of \(\sqrt{\frac{(n+1)p}{(n-1)p+2}}\varvec{t}_{(n-1)p+2}(\varvec{0},\varvec{\Psi })\) with \(p=2\) and \(\varvec{\Psi }={2^2 \ 0\atopwithdelims ()\ 0 \ \ 1^2}\).
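
The entries of Table 1 are not reproduced here, but the constants \(\varvec{a}_{j}^{*}\) can be approximated along the following lines. This sketch (NumPy assumed; the function names are ours) draws from the scaled t-distribution via its normal/chi-square mixture representation and runs Lloyd's algorithm from several random starts, keeping the configuration with the lowest empirical MSD. We use \(n=10\) for illustration; for \(n=2\) the t-distribution has only \(\nu =4\) degrees of freedom and the Monte Carlo error is larger. Because both the cross and the line configurations can be self-consistent, restarts reduce, but do not remove, the risk of reporting a local optimum.

```python
import numpy as np


def sample_scaled_t(n, psi, size, rng):
    """Draws from sqrt((n+1)p/nu) * t_nu(0, Psi), nu = (n-1)p + 2, via the mixture
    representation t = N(0, Psi) / sqrt(chi2_nu / nu)."""
    p = psi.shape[0]
    nu = (n - 1) * p + 2
    normal = rng.standard_normal((size, p)) @ np.linalg.cholesky(psi).T
    t = normal / np.sqrt(rng.chisquare(nu, size) / nu)[:, None]
    return np.sqrt((n + 1) * p / nu) * t


def lloyd_best_of(sample, k, rng, restarts=5, max_iter=300):
    """Lloyd's algorithm from several random starts; keeps the lowest empirical MSD."""
    best, best_msd = None, np.inf
    for _ in range(restarts):
        centers = sample[rng.choice(len(sample), size=k, replace=False)]
        for _ in range(max_iter):
            labels = ((sample[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2).argmin(axis=1)
            new = np.array([sample[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                            for j in range(k)])
            if np.allclose(new, centers):
                break
            centers = new
        msd = ((sample[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2).min(axis=1).mean()
        if msd < best_msd:
            best, best_msd = centers, msd
    return best, best_msd


rng = np.random.default_rng(4)
psi = np.diag([4.0, 1.0])
z = sample_scaled_t(n=10, psi=psi, size=50_000, rng=rng)
a_star, msd = lloyd_best_of(z, k=4, rng=rng)       # approximate a_1*, ..., a_4* for n = 10
```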

Table 1 shows the results obtained by numerical computations for the maximum likelihood estimator and the minimum EMSD estimator of 4-principal points and their EMSD (2.3) values when the sample size is \(n=2,3,5,7,10\). We see from Table 1 that the minimum EMSD estimator gives much smaller EMSD values than the maximum likelihood estimator, especially for small sample sizes. It is interesting to note that, as Tarpey (1998) showed, the 4-principal points of \(N\left( \varvec{\mu },\sigma ^2 {\tau ^2 \ 0\atopwithdelims ()\ 0 \ \ 1^2} \right) \) form a “cross” pattern for \(1\le \tau \le 2.15\) (approximately) and a line pattern (i.e., the 4 points lie on the first principal component axis) for \(\tau > 2.15\). Hence, since we set \(\tau =2\) in this example, the actual 4-principal points form a cross pattern and the maximum likelihood estimator also forms a cross pattern; however, the minimum EMSD estimator forms a line pattern when \(n=2,3,5\). It is also interesting to note that in the cases where both the maximum likelihood estimator and the minimum EMSD estimator form cross patterns (\(n=7\) and 10 in Table 1), the minimum EMSD estimator points are spread out more than the maximum likelihood estimator points, i.e., in order to minimize the EMSD, the points need to move further away from the mean. It is also worth noting that nonparametric estimators of principal points obtained by the k-means algorithm (Hartigan and Wong 1979) are not available when \(k>n\) and are extremely unstable when \(k\approx n\).

3 Extension to multivariate location-scale-rotation families

In this section, we extend Theorem 1 to multivariate location-scale-rotation families.

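The distribution of \(\varvec{X}\) belongs to a location-scale-rotation family if its pdf is of the form

\[ f(\varvec{x};\varvec{\mu },\sigma ,\varvec{H}) = \frac{1}{\sigma ^{p}}\, g\Big( \frac{\varvec{H}'(\varvec{x}-\varvec{\mu })}{\sigma }\Big) , \tag{3.1} \]
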
where \(\varvec{\mu }\in \mathfrak {R}^p\) is a location parameter, \(\sigma >0\) is a scale parameter, and \(\varvec{H}\in O_p\) is a rotation parameter.

Let \(\varvec{\delta }_{1}^{*},\dots ,\varvec{\delta }_{k}^{*}\) be k-principal points of the distribution with pdf \(g(\varvec{x})\). Then, k-principal points \(\varvec{\gamma }_{1}^{*},\dots ,\varvec{\gamma }_{k}^{*}\) of the distribution of \(\varvec{X}\) are given by

\[ \varvec{\gamma }_{j}^{*} = \varvec{\mu } + \sigma \varvec{H}\varvec{\delta }_{j}^{*}, \quad j=1,\dots ,k. \tag{3.2} \]

Suppose that \(\varvec{\mu }\), \(\sigma \), and \(\varvec{H}\) are unknown and the function g is known. Let \(\varvec{X}_1,\dots ,\varvec{X}_n\) be a random sample of size n drawn from the distribution of \(\varvec{X}\) and let \(\hat{\varvec{\mu }}\), \(\hat{\sigma }\), and \(\hat{\varvec{H}}\) be the maximum likelihood estimators of \(\varvec{\mu }\), \(\sigma \), and \(\varvec{H}\) obtained from the sample \(\varvec{X}_1,\dots ,\varvec{X}_n\), respectively.

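Since k-principal points of the distribution of \(\varvec{X}\) are expressed as (3.2), the following form of a principal points estimator is considered:

\[ \hat{\varvec{\gamma }}_{j} = \hat{\varvec{\mu }} + \hat{\sigma }\hat{\varvec{H}}\varvec{a}_{j}, \quad j=1,\dots ,k. \]
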
Before deriving a minimum EMSD estimator of k-principal points, we present some preparatory results. We let \(\varvec{X}\) and \(\varvec{X}_1,\dots ,\varvec{X}_n\) be independent and identically distributed with pdf (3.1), and define \(\varvec{U}_{Rot}\equiv \frac{\hat{\varvec{H}}'(\varvec{X}-\hat{\varvec{\mu }})}{\sigma }\) and \(W \equiv \frac{\hat{\sigma }}{\sigma }\). We first show the following lemma, which is a straightforward extension of Antle and Bain (1969).

Lemma 1

The joint distribution of \(\varvec{U}_{Rot}\equiv \frac{\hat{\varvec{H}}'(\varvec{X}-\hat{\varvec{\mu }})}{\sigma }\) and \(W \equiv \frac{\hat{\sigma }}{\sigma }\) does not depend on \(\varvec{\mu }\), \(\sigma \), and \(\varvec{H}\).

Proof

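We let \(\varvec{Y}\equiv \frac{\varvec{H}'(\varvec{X}-\varvec{\mu })}{\sigma }\), \(\varvec{Y}_i \equiv \frac{\varvec{H}'(\varvec{X}_i-\varvec{\mu })}{\sigma }, \ i=1,\dots ,n\), \(\hat{\varvec{u}}\equiv \frac{\varvec{H}'(\hat{\varvec{\mu }}-\varvec{\mu })}{\sigma }\), \(\hat{w} \equiv \frac{\hat{\sigma }}{\sigma }\), and \(\hat{\varvec{V}}\equiv \varvec{H}'\hat{\varvec{H}}\). It is obvious that the distributions of \(\varvec{Y}\) and \(\varvec{Y}_i, \ i=1,\dots ,n\) (whose pdfs are all \(g(\varvec{y})\)) do not depend on \(\varvec{\mu }\), \(\sigma \), and \(\varvec{H}\). Since the maximum likelihood estimators are equivariant, i.e., with \(\varvec{X}_i=\varvec{\mu }+\sigma \varvec{H}\varvec{Y}_i\),

\[ \hat{\varvec{\mu }}(\varvec{X}_1,\dots ,\varvec{X}_n)=\varvec{\mu }+\sigma \varvec{H}\hat{\varvec{\mu }}(\varvec{Y}_1,\dots ,\varvec{Y}_n),\quad \hat{\sigma }(\varvec{X}_1,\dots ,\varvec{X}_n)=\sigma \hat{\sigma }(\varvec{Y}_1,\dots ,\varvec{Y}_n),\quad \hat{\varvec{H}}(\varvec{X}_1,\dots ,\varvec{X}_n)=\varvec{H}\hat{\varvec{H}}(\varvec{Y}_1,\dots ,\varvec{Y}_n), \]

we see that

\[ \hat{\varvec{u}}=\hat{\varvec{\mu }}(\varvec{Y}_1,\dots ,\varvec{Y}_n),\quad \hat{w}=\hat{\sigma }(\varvec{Y}_1,\dots ,\varvec{Y}_n),\quad \hat{\varvec{V}}=\hat{\varvec{H}}(\varvec{Y}_1,\dots ,\varvec{Y}_n). \]
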
Hence, the joint distribution of (\(\hat{\varvec{u}},\hat{w},\hat{\varvec{V}}\)) does not depend on \(\varvec{\mu }\), \(\sigma \), and \(\varvec{H}\). Since \(\varvec{U}_{Rot}\equiv \frac{\hat{\varvec{H}}'(\varvec{X}-\hat{\varvec{\mu }})}{\sigma } =\hat{\varvec{V}}'(\varvec{Y}-\hat{\varvec{u}})\) and \(W \equiv \frac{\hat{\sigma }}{\sigma }=\hat{w}\), the joint distribution of (\(\varvec{U}_{Rot},W\)) does not depend on \(\varvec{\mu }\), \(\sigma \), and \(\varvec{H}\).

We also let \(\psi _{Rot}(\varvec{u}|W)\) be the pdf of \(\varvec{U}_{Rot}|W\). Then, a minimum EMSD estimator of principal points is given as the following theorem.

Theorem 3

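Let

\[ h_{Rot}(\varvec{z}) = \frac{E[ W^{p+2} \psi _{Rot}(\varvec{z}W|W) ]}{E[W^{2}]}, \quad \varvec{z}\in \mathfrak {R}^p. \tag{3.3} \]
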
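Then, the values of \(\{ \varvec{a}_1,\dots ,\varvec{a}_k \}\) minimizing

\[ E\Big[ \min _{j=1,\dots ,k} \Vert \varvec{X}-\hat{\varvec{\mu }}-\hat{\sigma }\hat{\varvec{H}}\varvec{a}_{j} \Vert ^{2} \Big] \tag{3.4} \]
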
are given by k-principal points of the distribution with pdf (3.3).

Proof

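Noting that \(\Vert \varvec{x} \Vert = \Vert \varvec{G}'\varvec{x}\Vert \) holds for any orthogonal matrix \(\varvec{G}\), we see that

\[ E\Big[ \min _{j} \Vert \varvec{X}-\hat{\varvec{\mu }}-\hat{\sigma }\hat{\varvec{H}}\varvec{a}_{j} \Vert ^{2} \Big] = E\Big[ \min _{j} \Vert \hat{\varvec{H}}'(\varvec{X}-\hat{\varvec{\mu }})-\hat{\sigma }\varvec{a}_{j} \Vert ^{2} \Big] = \sigma ^{2} E\Big[ \min _{j} \Vert \varvec{U}_{Rot}-W\varvec{a}_{j} \Vert ^{2} \Big] . \]
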
The remainder of the proof follows exactly as in the proof of Theorem 1 and is thus omitted. \(\square \)

We note that Theorem 3 treats a wider model (location-scale-rotation family) than Theorem 1 and Proposition 2 (location-scale family): The rotation parameter \(\varvec{H}\) is assumed to be known in Sect. 2, while \(\varvec{H}\) is unknown and is estimated in this section. This means that if \(\varvec{\Sigma }=\sigma ^2\varvec{\Psi }=\sigma ^2\varvec{H}\varvec{\Lambda }\varvec{H}'\) denotes a spectral decomposition of the covariance matrix \(\varvec{\Sigma }\), then Theorem 1 and Proposition 2 need \(\varvec{\Psi }\) to be known but Theorem 3 needs only \(\varvec{\Lambda }\) to be known. Hence, we can reduce the number of parameters assumed to be known for location-scale families from the entire covariance matrix (up to a scalar factor) to just needing to know the eigenvalues \(\varvec{\Lambda }\) (up to a scalar factor).

Numerical example

Here is a simple numerical illustration. Assume that \(\varvec{X}\sim N\left( \varvec{\mu },\sigma ^{2}\varvec{H} {\lambda ^2 \ 0\atopwithdelims ()\ 0 \ \ 1^2} \varvec{H}' \right) \) with \(\lambda > 1\) and \(\varvec{H}\in O_2\), and consider the case in which 2-principal points are estimated from a random sample of size \(n=2\). The actual 2-principal points are given by

\[ \varvec{\gamma }_{1}^{*},\varvec{\gamma }_{2}^{*} = \varvec{\mu } \pm \sqrt{2/\pi }\,\sigma \lambda \varvec{H}{1\atopwithdelims ()0}, \]

and the MSD (1.1) value is \(\{ (1-2/\pi )\lambda ^2 + 1\} \sigma ^{2}\).
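
These expressions can be checked by simulation. The sketch below (NumPy assumed; the values of \(\varvec{\mu }\), \(\sigma \), \(\lambda \), and the rotation angle are arbitrary illustrative choices) evaluates the MSD of \(\varvec{\mu }\pm \sqrt{2/\pi }\,\sigma \lambda \varvec{h}_1\), where \(\varvec{h}_1\) is the first column of \(\varvec{H}\), and compares it with \(\{ (1-2/\pi )\lambda ^2 + 1\} \sigma ^{2}\).

```python
import numpy as np

rng = np.random.default_rng(5)
lam, sigma, theta = 2.0, 1.5, 0.4                       # illustrative values (lam > 1)
mu = np.array([1.0, -2.0])
H = np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])
cov = sigma ** 2 * H @ np.diag([lam ** 2, 1.0]) @ H.T
x = rng.multivariate_normal(mu, cov, size=400_000)

# Candidate 2-principal points: mu +/- sqrt(2/pi) * sigma * lam * (first column of H).
offset = np.sqrt(2 / np.pi) * sigma * lam * H[:, 0]
gamma = np.stack([mu - offset, mu + offset])
msd_mc = ((x[:, None, :] - gamma[None, :, :]) ** 2).sum(axis=2).min(axis=1).mean()
msd_formula = ((1 - 2 / np.pi) * lam ** 2 + 1) * sigma ** 2
print(msd_mc, msd_formula)
```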

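Suppose that \(\varvec{\mu }\), \(\sigma \), and \(\varvec{H}\) are unknown and \(\lambda \) is known. Then, the maximum likelihood estimators of \(\varvec{\mu }\), \(\sigma \), and \(\varvec{H}\) obtained from the random sample \(\varvec{X}_1,\varvec{X}_2\) of size \(n=2\) are given as follows:

\[ \hat{\varvec{\mu }} = \frac{\varvec{X}_1+\varvec{X}_2}{2}, \qquad \hat{\sigma } = \frac{\Vert \varvec{X}_1-\varvec{X}_2 \Vert }{2\sqrt{2}\lambda }, \qquad \hat{\varvec{H}} = (\hat{\varvec{h}}_1,\hat{\varvec{h}}_2), \]

where

\[ \hat{\varvec{h}}_1 = \frac{\varvec{X}_1-\varvec{X}_2}{\Vert \varvec{X}_1-\varvec{X}_2 \Vert } \]

and \(\hat{\varvec{h}}_2\) is a unit vector orthogonal to \(\hat{\varvec{h}}_1\) (each column is determined only up to sign).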
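
For \(n=2\) the maximization can be carried out by hand. Writing \(\varvec{e}=(\varvec{X}_1-\varvec{X}_2)/2\) and \(\varvec{\Lambda }=\mathrm{diag}(\lambda ^2,1)\), the estimator \(\hat{\varvec{\mu }}=(\varvec{X}_1+\varvec{X}_2)/2\) does not depend on \(\varvec{H}\), and for fixed \(\varvec{H}\) the profile estimate is \(\hat{\sigma }^2(\varvec{H})=2\,\varvec{e}'\varvec{H}\varvec{\Lambda }^{-1}\varvec{H}'\varvec{e}/(np)\). Since \(|\varvec{H}\varvec{\Lambda }\varvec{H}'|=\lambda ^2\) does not depend on \(\varvec{H}\), the likelihood is maximized by minimizing \(\hat{\sigma }^2(\varvec{H})\), which puts the first column of \(\hat{\varvec{H}}\) along \(\varvec{X}_1-\varvec{X}_2\) and gives \(\hat{\sigma }=\Vert \varvec{X}_1-\varvec{X}_2\Vert /(2\sqrt{2}\lambda )\). The sketch below (NumPy assumed; this derivation and the function name `mle_n2` are ours) checks the closed form against a brute-force search over rotation angles.

```python
import numpy as np


def mle_n2(x1, x2, lam):
    """Closed-form MLEs (mu_hat, sigma_hat, H_hat) for n = 2 in N(mu, sigma^2 H diag(lam^2, 1) H'),
    lam > 1 known (our own derivation, checked numerically below)."""
    d = x1 - x2
    r = np.linalg.norm(d)
    h1 = d / r                                   # first column of H_hat: direction of X1 - X2
    h2 = np.array([-h1[1], h1[0]])               # unit vector orthogonal to h1
    return (x1 + x2) / 2, r / (2 * np.sqrt(2) * lam), np.column_stack([h1, h2])


rng = np.random.default_rng(6)
lam = 2.5
x1, x2 = rng.normal(size=2), rng.normal(size=2)
mu_hat, sigma_hat, H_hat = mle_n2(x1, x2, lam)

# Brute force over H(theta): profile MLE of sigma^2 is sum_i (x_i - xbar)' Psi^{-1} (x_i - xbar) / (n p).
e = (x1 - x2) / 2                                # x1 - xbar (and x2 - xbar = -e)
thetas = np.linspace(0.0, np.pi, 200_001)
c1 = np.cos(thetas) * e[0] + np.sin(thetas) * e[1]       # first coordinate of H(theta)' e
c2 = -np.sin(thetas) * e[0] + np.cos(thetas) * e[1]      # second coordinate
sigma2_profile = 2 * (c1 ** 2 / lam ** 2 + c2 ** 2) / (2 * 2)
print(sigma_hat ** 2, sigma2_profile.min())
```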

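Here, we compare the following three estimators of 2-principal points. One is to use the maximum likelihood estimator of 2-principal points:

\[ \hat{\varvec{\gamma }}_{1},\hat{\varvec{\gamma }}_{2} = \hat{\varvec{\mu }} \pm \sqrt{2/\pi }\,\hat{\sigma }\lambda \hat{\varvec{H}}{1\atopwithdelims ()0}. \]
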
This is simple but not optimal. Another estimator is to use the location-scale-family-based minimum EMSD estimator presented in Sect. 2 by substituting \(\hat{\varvec{H}} {\lambda ^2 \ 0\atopwithdelims ()\ 0 \ \ 1^2} \hat{\varvec{H}}'\) for \(\varvec{\Psi }\), that is, to use

\[ \hat{\varvec{\gamma }}_{j} = \hat{\varvec{\mu }} + \hat{\sigma }\varvec{b}_{j}^{\dagger }, \quad j=1,2, \tag{3.5} \]

where \(\varvec{b}_{1}^{\dagger },\varvec{b}_{2}^{\dagger }\) are 2-principal points of \(\sqrt{\frac{(n+1)p}{(n-1)p+2}}\varvec{t}_{(n-1)p+2}(\varvec{0},\varvec{\tilde{\Psi }})\) with \(n=2\), \(p=2\), and \(\varvec{\tilde{\Psi }}=\hat{\varvec{H}} {\lambda ^2 \ 0\atopwithdelims ()\ 0 \ \ 1^2} \hat{\varvec{H}}'\). If \(\varvec{H}\) is known and \(\varvec{\tilde{\Psi }}=\varvec{H} {\lambda ^2 \ 0\atopwithdelims ()\ 0 \ \ 1^2} \varvec{H}'\) is used, then this approach is optimal. However, when \(\varvec{H}\) is unknown and \(\hat{\varvec{H}}\) is used, this approach is suboptimal. We note that (3.5) is equivalent to

\[ \hat{\varvec{\gamma }}_{j} = \hat{\varvec{\mu }} + \hat{\sigma }\hat{\varvec{H}}\varvec{a}_{j}^{\dagger }, \quad j=1,2, \]

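where \(\varvec{a}_{1}^{\dagger },\varvec{a}_{2}^{\dagger }\) are 2-principal points of \(\sqrt{\frac{(n+1)p}{(n-1)p+2}}\varvec{t}_{(n-1)p+2}\left( \varvec{0},{\lambda ^2 \ 0\atopwithdelims ()\ 0 \ \ 1^2}\right) \) with \(n=2\) and \(p=2\) (so that \(\varvec{b}_{j}^{\dagger }=\hat{\varvec{H}}\varvec{a}_{j}^{\dagger }\) by the result of Tarpey et al. (1995) quoted in Sect. 1). The third option is to use the location-scale-rotation-family-based minimum EMSD estimator presented in this section:

\[ \hat{\varvec{\gamma }}_{j} = \hat{\varvec{\mu }} + \hat{\sigma }\hat{\varvec{H}}\varvec{a}_{j}^{*}, \quad j=1,2, \]
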
where \(\varvec{a}_{1}^{*},\varvec{a}_{2}^{*}\) are 2-principal points of the distribution with pdf (3.3), which is the optimal procedure in this case.

Table 2 shows the results obtained by numerical computations for the maximum likelihood estimator (denoted by MLE), the location-scale-family-based minimum EMSD estimator (denoted by LSF-MEMSDE), and the location-scale-rotation-family-based minimum EMSD estimator (denoted by LSRF-MEMSDE) for \(\lambda =1.5,2,2.5,3\). The EMSD (3.4) values of the three estimators are also shown. Table 2 indicates that the LSRF-MEMSDE gives much smaller EMSD values than the MLE. The difference between the LSF-MEMSDE and the LSRF-MEMSDE is relatively small, but may not be negligible especially when \(\lambda \) is large. In this example, the matrix of eigenvectors \(\varvec{H}\) of the covariance matrix plays the role of the rotation parameter but we note that Theorem 3 applies to any rotation matrix in the location-scale-rotation family.
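
The entries of Table 2 are not reproduced here, but Lemma 1 and the proof of Theorem 3 suggest how \(\varvec{a}_{1}^{*},\varvec{a}_{2}^{*}\) can be approximated: simulate \((\varvec{U}_{Rot},W)\) under \(\varvec{\mu }=\varvec{0}\), \(\sigma =1\), \(\varvec{H}=\varvec{I}\), and minimize the weighted criterion \(E[W^2\min _j\Vert \varvec{U}_{Rot}/W-\varvec{a}_j\Vert ^2]\), which equals the EMSD (3.4) in units of \(\sigma ^2\). The sketch below (NumPy assumed; all names are ours) does this for \(n=2\) and \(\lambda =2\), using closed-form \(n=2\) maximum likelihood estimators for the normal model (our own derivation): the first column of \(\hat{\varvec{H}}\) along \(\varvec{X}_1-\varvec{X}_2\) and \(\hat{\sigma }=\Vert \varvec{X}_1-\varvec{X}_2\Vert /(2\sqrt{2}\lambda )\). Lloyd's iterations started at the maximum likelihood constants \(\pm \sqrt{2/\pi }\lambda (1,0)'\) never increase the simulated criterion, so the final comparison shows the direction of the improvement; the result is only a local optimum and carries Monte Carlo error.

```python
import numpy as np


def simulate_rot(lam, reps, rng):
    """Draw (U_Rot, W) for n = 2 under mu = 0, sigma = 1, H = I (allowed by Lemma 1),
    using closed-form n = 2 MLEs of the normal location-scale-rotation model."""
    scale = np.array([lam, 1.0])
    x1, x2, x = (rng.standard_normal((reps, 2)) * scale for _ in range(3))
    d = x1 - x2
    r = np.linalg.norm(d, axis=1)
    h1 = d / r[:, None]                                       # first column of H_hat
    h2 = np.stack([-h1[:, 1], h1[:, 0]], axis=1)              # second column of H_hat
    dev = x - (x1 + x2) / 2                                   # X - mu_hat
    u_rot = np.stack([(dev * h1).sum(axis=1), (dev * h2).sum(axis=1)], axis=1)  # H_hat'(X - mu_hat)
    w = r / (2 * np.sqrt(2) * lam)                            # W = sigma_hat (sigma = 1)
    return u_rot, w


def weighted_objective(z, weights, centers):
    """Sample version of E[W^2 min_j ||U/W - a_j||^2], i.e. the EMSD (3.4) in units of sigma^2."""
    d2 = ((z[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return float(np.sum(weights * d2.min(axis=1)) / len(z))


def weighted_lloyd(z, weights, init, max_iter=500):
    """Lloyd iterations for the weighted criterion sum_i w_i min_j ||z_i - a_j||^2."""
    centers = np.array(init, dtype=float)
    for _ in range(max_iter):
        labels = ((z[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2).argmin(axis=1)
        new = np.array([np.average(z[labels == j], axis=0, weights=weights[labels == j])
                        if np.any(labels == j) else centers[j] for j in range(len(centers))])
        if np.allclose(new, centers):
            break
        centers = new
    return centers


rng = np.random.default_rng(7)
lam = 2.0
u_rot, w = simulate_rot(lam, 100_000, rng)
z, weights = u_rot / w[:, None], w ** 2
a_mle = np.array([[-1.0, 0.0], [1.0, 0.0]]) * np.sqrt(2 / np.pi) * lam   # MLE constants
a_opt = weighted_lloyd(z, weights, init=a_mle)                             # Lloyd started there
obj_mle = weighted_objective(z, weights, a_mle)
obj_opt = weighted_objective(z, weights, a_opt)
print(obj_mle, obj_opt, a_opt)
```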

4 Conclusion and remark

Cluster analysis applications occur mostly in multivariate settings. Efficiency in estimating cluster means can be improved by incorporating parametric information, namely, using information on the principal points of the underlying parametric distribution.

In this paper, we have discussed the parametric estimation of principal points for a wide class of multivariate distributions. More specifically, we have derived optimal estimators of principal points minimizing the expected mean squared distance (EMSD) for location-scale and location-scale-rotation families. Numerical results have suggested that the minimum EMSD estimators given in this paper enable substantial improvements over conventional maximum likelihood estimators.

A possible extension of our results will be to the case when the covariance matrix is fully unknown. Different approaches may be needed to study the optimality of principal points estimators in this important and interesting case.

Finally, we remark that the proofs of Theorems 1 and 3 use the maximum likelihood estimators only through their equivariance with respect to the transformation \(\varvec{X}_i \rightarrow a\varvec{X}_i + \varvec{b}\) (in Theorem 1) or \(\varvec{X}_i \rightarrow a\varvec{C}\varvec{X}_i + \varvec{b}\) (in Theorem 3), with \(a > 0\), \(\varvec{b} \in \mathfrak {R}^p\), and \(\varvec{C}\in O_p\) (\(i = 1,2,\dots ,n\)); e.g., \(\hat{\varvec{\mu }} \rightarrow a\varvec{C}\hat{\varvec{\mu }}+\varvec{b}\), \(\hat{\sigma } \rightarrow a\hat{\sigma }\), and \(\hat{\varvec{H}} \rightarrow \varvec{C}\hat{\varvec{H}}\) when \(\varvec{X}_i \rightarrow a\varvec{C}\varvec{X}_i + \varvec{b}\). These equivariance properties hold in general under a very mild condition (Eaton 1983, Section 7.4). Hence, our results remain valid for a class of equivariant estimators of \(\varvec{\mu }\), \(\sigma \) (and \(\varvec{H}\)).

References

Antle CE, Bain LJ (1969) A property of maximum likelihood estimators of location and scale parameters. SIAM Rev 11(2):251–253

Bali JL, Boente G (2009) Principal points and elliptical distributions from the multivariate setting to the functional case. Stat Probab Lett 79(17):1858–1865

Eaton ML (1983) Multivariate statistics: a vector space approach. Wiley, New York

Flury B (1990) Principal points. Biometrika 77(1):33–41

Flury B (1993) Estimation of principal points. J R Stat Soc Ser C 42(1):139–151

Gersho A, Gray RM (1992) Vector quantization and signal compression. Kluwer Academic Publishers, Boston

Graf L, Luschgy H (2000) Foundations of quantization for probability distributions. Springer, Berlin

Gu XN, Mathew T (2001) Some characterizations of symmetric two-principal points. J Stat Plann Inference 98(1–2):29–37

Hartigan JA, Wong MA (1979) A \({K}\)-means clustering algorithm. J R Stat Soc Ser C 28(1):100–108

Kurata H (2008) On principal points for location mixtures of spherically symmetric distributions. J Stat Plann Inference 138(11):3405–3418

Kurata H, Qiu D (2011) Linear subspace spanned by principal points of a mixture of spherically symmetric distributions. Commun Stat 40(15):2737–2750

Li L, Flury B (1995) Uniqueness of principal points for univariate distributions. Stat Probab Lett 25(4):323–327

Matsuura S, Kurata H (2010) A principal subspace theorem for 2-principal points of general location mixtures of spherically symmetric distributions. Stat Probab Lett 80(23–24):1863–1869

Matsuura S, Kurata H (2011) Principal points of a multivariate mixture distribution. J Multivar Anal 102(2):213–224

Matsuura S, Kurata H (2014) Principal points for an allometric extension model. Stat Pap 55(3):853–870

Matsuura S, Kurata H, Tarpey T (2015) Optimal estimators of principal points for minimizing expected mean squared distance. J Stat Plann Inference 167:102–122

Mease D, Nair VN (2006) Unique optimal partitions of distributions and connections to hazard rates and stochastic ordering. Stat Sin 16(4):1299–1312

Petkova E, Tarpey T (2009) Partitioning of functional data for understanding heterogeneity in psychiatric conditions. Stat Interface 2(4):413–424

Ruwet C, Haesbroeck G (2013) Classification performance resulting from a 2-means. J Stat Plann Inference 143(2):408–418

Shimizu N, Mizuta M (2007) Functional clustering and functional principal points. In: Apolloni B, Howlett RJ, Jain LC (eds) Knowledge-based intelligent information and engineering systems. Lecture notes in computer science, vol 4693, pp 501–508

Stampfer E, Stadlober E (2002) Methods for estimating principal points. Commun Stat 31(2):261–277

Tarpey T (1995) Principal points and self-consistent points of symmetric multivariate distributions. J Multivar Anal 53(1):39–51

Tarpey T (1997) Estimating principal points of univariate distributions. J Appl Stat 24(5):499–512

Tarpey T (1998) Self-consistent patterns for symmetric multivariate distributions. J Classif 15(1):57–79

Tarpey T (2007) A parametric \(k\)-means algorithm. Comput Stat 22(1):71–89

Tarpey T, Kinateder K (2003) Clustering functional data. J Classif 20(1):93–114

Tarpey T, Li L, Flury B (1995) Principal points and self-consistent points of elliptical distributions. Ann Stat 23(1):103–112

Tarpey T, Loperfido N (2015) Self-consistency and a generalized principal subspace theorem. J Multivar Anal 133:27–37

Tarpey T, Petkova E (2010) Principal point classification: applications to differentiating drug and placebo responses in longitudinal studies. J Stat Plann Inference 140(2):539–550

Tarpey T, Petkova E, Lu Y, Govindarajulu U (2010) Optimal partitioning for linear mixed effects models: applications to identifying placebo responders. J Am Stat Assoc 105(491):968–977

Tarpey T, Petkova E, Ogden RT (2003) Profiling placebo responders by self-consistent partitions of functional data. J Am Stat Assoc 98(464):850–858

Trushkin A (1982) Sufficient conditions for uniqueness of a locally optimal quantizer for a class of convex error weighting functions. IEEE Trans Inf Theory 28(2):187–198

Yamamoto W, Shinozaki N (2000a) On uniqueness of two principal points for univariate location mixtures. Stat Probab Lett 46(1):33–42

Yamamoto W, Shinozaki N (2000b) Two principal points for multivariate location mixtures of spherically symmetric distributions. J Jpn Stat Soc 30(1):53–63

Yamashita H, Goto M (2017) The analysis based on principal matrix decomposition for 3-mode binary data. Asian J Manag Sci Appl 3(1):24–37

Yamashita H, Matsuura S, Suzuki H (2017) Estimation of principal points for a multivariate binary distribution using a log-linear model. Commun Stat 46(2):1136–1147

Acknowledgements

We are grateful to the Editors for considering our paper and to anonymous reviewers for their thoughtful and helpful comments.

Cite this article

Matsuura, S., Tarpey, T. Optimal principal points estimators of multivariate distributions of location-scale and location-scale-rotation families. Stat Papers 61, 1629–1643 (2020). https://doi.org/10.1007/s00362-018-0995-z

DOI: https://doi.org/10.1007/s00362-018-0995-z