Abstract

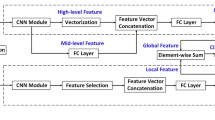

Emotion recognition based on facial expressions is a challenging research topic that has attracted a great deal of attention in recent years. This paper presents a novel method that uses a multi-modal strategy to extract emotion features from facial expression images. The basic idea is to combine a low-level empirical feature and a high-level self-learning feature into a multi-modal feature. The 2-dimensional coordinates of facial key points are extracted as the low-level empirical feature, and the high-level self-learning feature is extracted by Convolutional Neural Networks (CNNs). To reduce the number of free parameters of the CNNs, small filters are used in all convolutional layers: a stack of small filters covers the same receptive field as a single large filter while requiring fewer parameters to learn. In addition, label-preserving transformations are used to enlarge the dataset artificially, addressing over-fitting and data imbalance when training the deep neural networks. The two kinds of modal features are then fused linearly to form the facial expression feature. Extensive experiments are conducted on the extended Cohn–Kanade (CK+) dataset. For comparison, three kinds of feature vectors are evaluated: the low-level facial key point feature vector, the high-level self-learning feature vector, and the multi-modal feature vector. The experimental results show that the multi-modal strategy achieves encouraging recognition results compared with the single-modal strategy.
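The pipeline summarized above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not the authors' implementation: the function names, the centroid/RMS normalization of the key points, horizontal flipping as the example label-preserving transformation, and L2-normalized weighted concatenation (weight `alpha`) as the form of linear fusion are all assumptions. The parameter count assumes stride-1 convolutions without bias, for which a stack of n layers of size k x k covers the same receptive field as one layer of size (n(k-1)+1) x (n(k-1)+1).

```python
"""Hypothetical sketch of the multi-modal pipeline summarised in the abstract.

All names, the key point normalisation, the flip-based augmentation and the
fusion weights are illustrative assumptions, not the authors' code.
"""
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Image = List[List[float]]


def conv_params(kernel: int, in_ch: int, out_ch: int) -> int:
    """Number of weights in one kernel x kernel conv layer (bias ignored)."""
    return kernel * kernel * in_ch * out_ch


def stacked_small_vs_large(channels: int, n_small: int, small_k: int = 3) -> Tuple[int, int]:
    """Weights of n stacked small_k x small_k layers vs. one layer with the same receptive field.

    Assumes stride-1 convolutions and equal input/output channel counts.
    """
    large_k = n_small * (small_k - 1) + 1  # receptive field of the stride-1 stack
    stacked = n_small * conv_params(small_k, channels, channels)
    single = conv_params(large_k, channels, channels)
    return stacked, single


def keypoint_feature(points: Sequence[Point]) -> List[float]:
    """Low-level modality: flattened (x1, y1, x2, y2, ...) key point coordinates.

    Assumption: translation and scale are removed (centroid / RMS distance)
    so that faces of different position and size are comparable.
    """
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    centred = [(x - cx, y - cy) for x, y in points]
    scale = math.sqrt(sum(x * x + y * y for x, y in centred) / n) or 1.0
    return [c / scale for point in centred for c in point]


def hflip_sample(image: Image, points: Sequence[Point], mirror_idx: Sequence[int],
                 label: str) -> Tuple[Image, List[Point], str]:
    """One example of a label-preserving transformation: horizontal flip.

    mirror_idx[i] is the original landmark that fills slot i after flipping
    (e.g. left and right eye corners swap). Coordinates are pixel indices.
    The expression label is returned unchanged.
    """
    width = len(image[0])
    flipped_image = [list(reversed(row)) for row in image]
    mirrored = [((width - 1) - x, y) for x, y in points]
    flipped_points = [mirrored[j] for j in mirror_idx]
    return flipped_image, flipped_points, label


def l2_normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit Euclidean length (zero vectors are left as-is)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def fuse(keypoint_feat: Sequence[float], cnn_feat: Sequence[float],
         alpha: float = 0.5) -> List[float]:
    """Assumed linear fusion: weighted concatenation of the L2-normalised modalities."""
    k = [alpha * x for x in l2_normalize(keypoint_feat)]
    c = [(1.0 - alpha) * x for x in l2_normalize(cnn_feat)]
    return k + c


if __name__ == "__main__":
    print(stacked_small_vs_large(channels=64, n_small=2))  # (73728, 102400)
    landmarks = [(10.0, 20.0), (30.0, 20.0), (20.0, 40.0)]
    cnn_activations = [0.2, 0.0, 0.9, 0.4]  # placeholder for a CNN feature vector
    fused = fuse(keypoint_feature(landmarks), cnn_activations)
    print(len(fused))  # 10
```

With 64 input and output channels, two stacked 3x3 layers need 73,728 weights versus 102,400 for a single 5x5 layer, and three stacked 3x3 layers need 110,592 versus 200,704 for one 7x7 layer; this is the kind of parameter saving the abstract refers to. Normalizing each modality before concatenation keeps one feature vector from dominating the other merely because of its scale.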

Acknowledgements

This work was supported by the National Natural Science Foundation of China (No. 61573066, No. 61327806).

Cite this article

Wei, W., Jia, Q., Feng, Y. et al. Multi-modal facial expression feature based on deep-neural networks. J Multimodal User Interfaces 14, 17–23 (2020). https://doi.org/10.1007/s12193-019-00308-9

DOI: https://doi.org/10.1007/s12193-019-00308-9