Abstract

Session search, the task of document retrieval for a series of queries in a session, has been receiving increasing attention from the information retrieval research community. Session search exhibits the properties of rich user-system interactions and temporal dependency. These properties lead to our proposal of using a partially observable Markov decision process (POMDP) to model session search. On the basis of a design choice schema for states, actions and rewards, we evaluate different combinations of these choices over the TREC 2012 and 2013 session track datasets. According to the experimental results, practical design recommendations for using POMDP in session search are discussed.


1 Introduction

Session search is the task of document retrieval for a series of queries in a session. It has been receiving increasing attention from the Information Retrieval (IR) research community (Guan et al. 2013; Kotov et al. 2011; Luo et al. 2014; Raman et al. 2013; Kanoulas et al. 2013). For instance, the Text Retrieval Conference (TREC) Session Tracks (Kanoulas et al. 2012, 2013) have greatly promoted research in session search. A session usually starts when a user writes a query according to his/her information need, submits the query to a search engine, and then receives a ranked list of documents from the search engine. After viewing the titles and snippets of documents in the list, the user may click on some documents and read them for a certain time period, which is called “dwell time”. The user’s cognitive focus and understanding of the information need may change as the user gains more information from the documents. Oftentimes, the user reformulates the query and submits it to the search engine again. We term the process from one query formulation to the next a search iteration. A session may last for several search iterations until the user stops the session either because the information need has been satisfied (Kanoulas et al. 2013) or because the search is abandoned (Chilton and Teevan 2011).

For instance, in the TREC 2013 Session Track (Kanoulas et al. 2013) session #12, the session proceeds as follows:

- Information Need I: “You’re planning a trip to Kansas city, MO. You want to know what Kansas city is famous for ...What hotels are near the Kansas city airport ...”

- The user writes the first query \(q_1\) = “wiki kansas city tourism”, and receives a ranked document set \(D_1\) from a search engine.

- The user clicks on some documents from \(D_1\). The clicked documents, along with clicking order and dwell time, are stored in \(C_1\). Through reading the documents, the user gains useful travel information about Kansas city.

- A new query \(q_2\) = “kansas city hotels airport” is submitted to the search engine for another sub information need in I. The session keeps going until the user ends the session.

Fig. 1 Interactions between user and search engine in session search. The subscripts index the search iterations. \(D_i\) is the set of documents retrieved for query \(q_i\), and \(C_i\) is the set of clicked documents. I stands for the information need. The dotted lines indicate invisibility of some interactions. n is the number of queries in a session, i.e., the session length

Session search demonstrates interesting properties that differ from ad-hoc search. First, there are rich forms of interactions when searching in a session. In a session, the user interacts frequently with the search engine by various forms of interactions, including query formulation, document clicks, document examination, eye movements, mouse movements, etc. These interactions provide abundant signals to describe the dynamic search process. The research challenge is how to use them effectively to improve search accuracy for the session.

Second, there is temporal dependency when searching in a session. As we mentioned previously, a session consists of several search iterations. In early search iteration(s), the user learned from the retrieved documents, especially from those clicked documents that he/she has examined. This information influences the user’s behavior, such as query formulation and document clicks, in the current search iteration. This temporal dependency between the current search iteration and the previous one(s) suggests the presence of the Markov property in session search. Figure 1 illustrates the interactions between the user and the search engine in session search.

It may seem convenient to use classic feedback models for session search. However, these feedback models, such as Rocchio (Joachims 1997), use relevance judgements from no more than one search iteration. They may no longer be adequate for session search, which is more complex than ad-hoc retrieval.

Another seemingly appealing idea would be to use learning-to-rank algorithms for session search. Note that the effectiveness of such a solution rests mainly on the assumption that query logs provide enough repeated query sequences. Due to the diversity of natural language, this assumption does not hold in reality even when the query log is large. Table 1 lists the repeated adjacent query pairs in the Yandex search logs. Only 2.68% of the adjacent query pairs appear in the same user’s or other users’ search history in WSCD 2013 and 5.54% in WSCD 2014.

The unique challenges presented in session search call for new statistical retrieval models. In addition, the inadequacy of the existing technologies motivates us to look for an ideal model for session search. We think that such an ideal model should be able to:

- Model user interactions, which means it needs to contain parameters encoding actions;

- Model information need hiding behind user queries and other interactions;

- Set up a reward mechanism to guide the entire search algorithm to adjust its retrieval strategies; and

- Support Markov properties to handle the temporal dependency.

In this paper, we suggest that the Partially Observable Markov Decision Process (POMDP) is the most suitable model for the task. We lay out the available options for the elements of a POMDP, including states, actions, and rewards, when modeling session search, and experiment with combinations of these options over the TREC Session 2012 and 2013 datasets. We report our findings on the impacts of various settings in terms of search accuracy and efficiency. Based on the findings, we recommend design choices for using POMDP in session search.

The remainder of this paper is organized as follows: Sect. 2 reviews the Markov models. Section 3 discusses the related work in IR. Section 4 proposes the design choices of POMDP and Sect. 5 presents the solution framework. Section 6 experiments with various design options. Section 7 makes recommendations for a practical session search task. Section 8 concludes the paper.

2 Review of Markov models

This section presents the review of the Markov models. Based on the comparisons, we point out why POMDP fits best for session search.

2.1 Markov chains (MC)

A very basic model that respects the Markov property is the Markov Chain (Norris 1998). A Markov chain can be defined as (S, M), where

- A set of environment states \(S=\{s_1,\ldots ,s_n\}\): The state of the environment can be directly observed.

- The state transition matrix M: An element \(m_{ij}\) in \(M=[m_{ij}]_{n \times n}\) denotes the probability of transition from state \(s_i\) to state \(s_j\). More generally, the transition matrix M could be a transition function.

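The dynamic process of MC starts from an initial probability distribution \(x^{(0)}\) over S at iteration 0. Then it iterates according to

\[x^{(k+1)} = x^{(k)} M,\]

where \(x^{(k)}\) is the distribution over S, written as a row vector, at iteration k.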

MC can converge to an equilibrium distribution when M satisfies certain conditions.

The widely used PageRank (Altman and Tennenholtz 2005) algorithm is an example of MC being applied to Web surfing. In PageRank, each Web page is a state. The process starts from a uniform distribution over all the Web pages. The transition probability from page i to page j is

The equilibrium state distribution of such an MC gives the PageRank scores, which are used to rank Web pages. The assumption in PageRank is that a popular document will be relevant; however, this is not always true.
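As a rough illustration of how a Markov chain reaches an equilibrium distribution, the sketch below runs power iteration on a toy three-page graph. The damped random-surfer transition matrix, the damping factor `d = 0.85`, the toy link structure, and all function names are assumptions made for illustration only; they are not taken from the formulation in this section.

```python
def transition_matrix(links, d=0.85):
    """Build a row-stochastic matrix for a toy web graph.

    Assumption: a damped random surfer who follows an out-link with
    probability d and jumps to a uniformly random page otherwise.
    """
    n = len(links)
    M = []
    for i in range(n):
        out = links[i]
        row = []
        for j in range(n):
            if out:
                follow = d / len(out) if j in out else 0.0
            else:
                follow = d / n  # dangling page: treat as linking everywhere
            row.append((1.0 - d) / n + follow)
        M.append(row)
    return M


def power_iteration(M, tol=1e-10, max_iter=1000):
    """Iterate x <- x M from a uniform start until the change is below tol."""
    n = len(M)
    x = [1.0 / n] * n
    for _ in range(max_iter):
        nxt = [sum(x[i] * M[i][j] for i in range(n)) for j in range(n)]
        if max(abs(a - b) for a, b in zip(nxt, x)) < tol:
            return nxt
        x = nxt
    return x


# Hypothetical graph: page 0 links to 1 and 2, page 1 to 2, page 2 to 0.
links = {0: [1, 2], 1: [2], 2: [0]}
M = transition_matrix(links)
scores = power_iteration(M)
```

In this toy graph, page 2 receives links from both other pages and ends up with the highest score, which reflects the popularity assumption discussed above.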

What is missing here for session search is that MC has no placeholders to model actions (interactions between the user and the search engine). In addition, there is no clear reward mechanism to capture the match between the information need and the retrieved documents.

2.2 Hidden Markov models (HMM)

HMM has been extensively used in speech recognition and natural language processing, particularly for three main types of applications: evaluation, decoding, and learning (Rabiner 1989). An HMM (Baum and Petrie 1966) can be described as a tuple \((S, M, O, \varTheta )\).

- A set of environment states S: Unlike in MC, S can no longer be directly observed, and thus becomes hidden.

- The state transition matrix M: An element \(m_{ij}\) in \(M=[m_{ij}]_{n \times n}\) denotes the probability of transition from state \(s_i\) to state \(s_j\).

- A set of observable symbols O: These are also referred to as observations, which can be directly observed and are emitted according to the hidden states.

- Observation function \(\varTheta\): \(\varTheta _{ij}\) in \(\varTheta =[\varTheta _{ij}]_{n \times n}\) is the probability of \(o_j\) being observed at state \(s_i\).

HMM has also been used for single query document retrieval. Miller et al. (1999) constructed one HMM for each document, where each HMM has two states: a document state and a general English (GE) state. The observations are terms. Miller et al. calculated the document-to-query relevance P(D|q) by

\[P(D|q) = \prod _{t \in q}\left( a_0 P(t|GE) + a_1 P(t|D)\right) ,\]

where \(a_0\) and \(a_1\) are transition probabilities estimated by the Expectation Maximization algorithm, where

\[P(t|D) = \frac{tf_{t,D}}{|D|}\]

and

\[P(t|GE) = \frac{\sum _{D \in C} tf_{t,D}}{\sum _{D \in C} |D|},\]

where tf is the term frequency and C is the corpus. This approach can be viewed as a kind of vector space model if \(a_0\) and \(a_1\) are constants.
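The two-state scoring idea can be sketched in a few lines. The sketch assumes that each query term is emitted either by the document state or by the general English state, that both emission probabilities are maximum-likelihood estimates from term frequencies, and that \(a_0\) and \(a_1\) are fixed constants rather than values estimated by Expectation Maximization. The function name, the default weights, and the toy corpus are hypothetical.

```python
from collections import Counter


def hmm_query_score(query_terms, doc_terms, corpus_docs, a0=0.3, a1=0.7):
    """Score a document as a product of per-term two-state mixtures.

    a0 weights the general English (corpus) state and a1 the document
    state; both are fixed here by assumption instead of being learned.
    """
    doc_tf = Counter(doc_terms)
    corpus_tf = Counter(t for d in corpus_docs for t in d)
    corpus_len = sum(len(d) for d in corpus_docs)
    score = 1.0
    for t in query_terms:
        p_doc = doc_tf[t] / len(doc_terms)   # term frequency in the document
        p_ge = corpus_tf[t] / corpus_len     # term frequency in the corpus
        score *= a0 * p_ge + a1 * p_doc
    return score


# Hypothetical three-document corpus, already tokenized.
corpus = [
    ["kansas", "city", "hotels"],
    ["kansas", "city", "tourism"],
    ["airport", "parking"],
]
query = ["kansas", "hotels"]
scores = [hmm_query_score(query, d, corpus) for d in corpus]
```

Because the general English state gives every corpus term a nonzero probability, a document that misses a query term is penalized but not scored as zero.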

HMM’s advantage over MC lies in that it supports hidden states, which makes it more suitable for modeling session search. However, HMM does not support formulating interactions between users and the search engine. Moreover, it does not set up a long-term goal to guide the search engine throughout the session. Therefore, HMM is still not adequate to model session search.

2.3 Markov decision processes (MDP)

A Markov Decision Process (Blumenthal and Getoor 1968) is a tuple: \((S, M, A, R, \gamma )\), where

- A set of environment states S: The state of the environment can be directly observed.

- The state transition function M: \(M(s'|s,a)\) denotes the probability of transition from state s to state \(s'\) triggered by an action a.

- A set of agent actions A. The agent can take some action \(a \in A\).

- Reward function R: A reward R(s, a) is given to the agent after the agent takes action a at s.

- \(\gamma \in [0, 1]\) is a discount factor for future rewards.

The goal of MDP is to find an optimal policy, i.e., the best sequence of actions \(a_{i}, a_{i+1}, \dots\) that maximizes \(\sum _{t=0}^{\infty }\gamma ^tR(s_{i+t}, a_{i+t})\), the long-term accumulated reward which sums up all (discounted) rewards from the beginning to the end of the process. MDP can be solved by dynamic programming using the Bellman equation (Bellman 1957):

\[V^{*}(s) = \max _{a \in A}\left[ R(s, a) + \gamma \sum _{s' \in S} M(s'|s,a) V^{*}(s')\right]\]

Guan et al. (2013) modeled the procedure of session search by a modified MDP named the query change retrieval model (QCM). QCM uses queries as states. The user’s query changes are treated as user actions. Unlike the classic MDP, where future rewards are discounted, QCM discounts the past rewards. The accumulated rewards are used to rank documents for a given query. The immediate reward in each search iteration is measured by query-document relevance.

MDP brings actions into the family of stochastic Markov models. Hence it is able to model interactions between the user and the search engine. Its reward function also provides a goal for the search engine to make decisions in the long term and leads the entire information seeking process to eventually meet the user’s information need. MDP can definitely be used in situations where the states are completely observable. In the case where states are hidden, for instance if we model information need as states, MDP becomes inadequate.
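To make the dynamic-programming solution of a fully observable MDP concrete, the following sketch runs value iteration, i.e., repeated Bellman backups, on a toy two-state problem. The state names, action names, transition probabilities, and rewards are hypothetical and chosen only for illustration; they do not correspond to QCM or to any experiment in this paper.

```python
def value_iteration(states, actions, M, R, gamma=0.9, tol=1e-8):
    """Solve a small, fully observable MDP by repeated Bellman backups."""

    def backup(s, a, V):
        # Immediate reward plus discounted expected value of the next state.
        return R[(s, a)] + gamma * sum(M[(s, a)][s2] * V[s2] for s2 in states)

    V = {s: 0.0 for s in states}
    while True:
        new_V = {s: max(backup(s, a, V) for a in actions) for s in states}
        delta = max(abs(new_V[s] - V[s]) for s in states)
        V = new_V
        if delta < tol:
            break
    # Greedy policy with respect to the converged value function.
    policy = {s: max(actions, key=lambda a: backup(s, a, V)) for s in states}
    return V, policy


# Toy problem; every number below is hypothetical.
states = ["relevant", "nonrelevant"]
actions = ["keep", "expand"]
M = {
    ("relevant", "keep"): {"relevant": 0.9, "nonrelevant": 0.1},
    ("relevant", "expand"): {"relevant": 0.5, "nonrelevant": 0.5},
    ("nonrelevant", "keep"): {"relevant": 0.1, "nonrelevant": 0.9},
    ("nonrelevant", "expand"): {"relevant": 0.8, "nonrelevant": 0.2},
}
R = {
    ("relevant", "keep"): 1.0,
    ("relevant", "expand"): 1.0,
    ("nonrelevant", "keep"): 0.0,
    ("nonrelevant", "expand"): 0.0,
}
V, policy = value_iteration(states, actions, M, R)
```

The sketch relies on the agent knowing which state it is in, which is exactly the assumption that breaks down when the state is a hidden information need.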

2.4 Multi-armed bandits (MAB)

The Multi-Armed Bandit (MAB) problem (Katehakis and Veinott 1987) was originally described as the decision problem of a gambler who wants to obtain the maximum reward by operating n one-armed slot machines. When he pulls the arm of a machine \(B_i\), a random reward \(R_i\) is generated from distribution \(P_i\). The goal is to find a series of actions \(a(0), a(1), \cdots\) that maximizes the expected total reward

where \(S_{a(i)}(i)\) is the state of the machine that the gambler selects at round i.

Radlinski et al. (2008) proposed an online learning approach named the Ranked Bandits Algorithm for ad-hoc search. It sets up an MAB instance at each rank position. The algorithm updates its rewards iteratively. In each iteration, a list of documents retrieved by all the MAB instances is presented to the user. If the user clicks on a document, the corresponding MAB instance receives some reward. Through many such interactions, the MAB instances receive rewards from different users. Different users prefer different documents, so diversity in document ranking is captured.

Note that there is no notion of a session in the context of online learning. Moreover, an MAB does not necessarily possess the Markov property. Its state space is the cross-product of the state spaces of the n machines. If the state transition of each one-armed machine has the Markov property, we may consider all n machines to form one Markov process. An MAB can thus be viewed as an MDP. Hence, it bears the same weakness as an MDP does in the context of session search: it cannot encode hidden information needs into the model.
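The gambler's trade-off between trying new machines and exploiting the best one so far can be illustrated with an epsilon-greedy strategy. This is a generic bandit heuristic swapped in for illustration, not the Ranked Bandits Algorithm; the Bernoulli reward probabilities, the exploration rate `eps`, and the function name are assumptions.

```python
import random


def epsilon_greedy(probs, rounds=5000, eps=0.1, seed=0):
    """Play Bernoulli arms with an epsilon-greedy strategy.

    probs[i] is the (hidden) success probability of arm i. With
    probability eps a random arm is explored; otherwise the arm with the
    highest estimated mean reward so far is pulled.
    """
    rng = random.Random(seed)
    counts = [0] * len(probs)
    means = [0.0] * len(probs)
    for _ in range(rounds):
        if rng.random() < eps:
            arm = rng.randrange(len(probs))
        else:
            arm = max(range(len(probs)), key=lambda i: means[i])
        reward = 1.0 if rng.random() < probs[arm] else 0.0
        counts[arm] += 1
        # Incremental update of the running mean reward for this arm.
        means[arm] += (reward - means[arm]) / counts[arm]
    return counts, means


counts, means = epsilon_greedy([0.2, 0.5, 0.8])
best_arm = max(range(len(means)), key=lambda i: means[i])
```

Each arm's reward here is observed directly after a pull, so nothing in the sketch accounts for a hidden information need.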

2.5 Partially observable Markov decision processes (POMDP)

POMDP has been widely applied to Artificial Intelligence (AI) and recently has been applied to IR tasks. For instance, Yuan and Wang (2012) applied POMDP to ad recommendation. Jin et al. (2013) and Zhang et al. (2014) provided two different POMDP models for document re-ranking. The theoretical model of a POMDP (Sondik 1978) can be described as a tuple \((S, M, A, R, \gamma , O, \varTheta , B)\). In this model, there are two roles, the environment and the agent. All the elements in this tuple characterize the interaction between the environment and the agent altogether.

- A set of environment states \(S=\{s_1,\ldots ,s_n\}\): The state of the environment cannot be directly observed, and thus becomes hidden.

- A set of agent actions A. The agent can take some action \(a \in A\).

- The state transition function M: \(M(s, a, s')\) denotes the probability of transition from state s to state \(s'\) triggered by an action a.

- A set of observable symbols O: The environment emits an observable symbol \(o \in O\) according to the current hidden state. o is also called an observation.

- Observation function \(\varTheta\): \(\varTheta (s', a, o)\) denotes the probability that the agent observes o at a state \(s'\) after taking an action a.

- Reward function R: A reward R(s, a) is given to the agent after the agent takes action a at s.

The interaction procedure of POMDP evolves from an initial state \(s \in S\). When the agent takes some action a, a reward R(s, a) is given. Afterwards, the environment changes its state stochastically according to M. Then a symbol o is emitted according to \(\varTheta\) and the new state. The interactive procedure iterates in this way for a finite or infinite number of steps. Figure 2 illustrates an example of the POMDP interaction procedure.

In such a procedure, the agent maintains its belief \(b \in B\) over the hidden states. It is a probability distribution over S and is updated in each iteration. The goal of POMDP is to find an optimal policy \(\pi\) mapping from B to A. According to \(\pi\), the best sequence of actions \(a_0, a_1, \dots\) can be generated for the purpose of maximizing the expected discounted long-term reward \(\sum _{t=0}^{\infty }\gamma ^t r(b_t, a_t)\), where \(\gamma \in [0, 1]\) is a discount factor for future rewards, and \(r(b_t, a_t)=\sum _{s \in S}b_t(s)R(s, a_t)\).

Let us use s, a, o and b for the state, action, observation and belief in the current iteration, and use \(s'\), \(o'\) and \(b'\) for the state, observation and belief in the next iteration. The solution to a POMDP can be expressed in an iterative form of the value function V(b) using the Bellman equation (Kaelbling et al. 1998):

\[V(b) = \max _{a \in A}\left[ r(b, a) + \gamma \sum _{o' \in O} P(o'|a, b) V(b')\right]\]

In this equation, the maximal value of the expected discounted long-term reward on current belief state b is represented by V(b), where V is usually referred to as the value function. This function takes the maximum value over all the possible actions. The total reward is decomposed into the current immediate reward \(r\left( b, a\right)\) for taking action a at b and the future estimated reward discounted by \(\gamma\). The future reward is estimated on the basis of the belief state \(b'\) in the next iteration and is an expectation of the value function over probability distribution \(P\left( O| a, b\right)\). Here, we use \(P\left( o'| a, b\right)\) to denote the probability of observing \(o'\) in the next iteration after taking action a at b. The next belief state \(b'\) is obtained by updating b as follows.

\[b'(s') = P(s'|o',a,b) = \frac{\varTheta (s',a,o') \sum _{s\in S}{M(s, a, s')b(s)}}{P(o'|a,b)} \qquad (3)\]

In Eq. 3, \(P(s'|o',a,b)\) is the probability of the system being in state \(s'\) when observing \(o'\) after taking action a at belief b. The observation probability is \(\varTheta (s',a,o')=P(o'|s',a)=P(o'|s',a,b)\), and \(\sum _{s\in S}{M(s, a, s')b(s)}\) is \(P(s'|a,b)\). The new belief therefore follows directly from Bayes’ rule.
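The Bayesian belief update can be sketched directly from these definitions: predict the next-state distribution with M, weight it by the observation probability \(\varTheta\), and normalize by \(P(o'|a,b)\). The dictionary-based representation, the two toy states, the single action, and all probabilities below are hypothetical.

```python
def belief_update(b, a, o, states, M, Theta):
    """Return the next belief b' after taking action a and observing o.

    M[(s, a, s2)] is the transition probability M(s, a, s') and
    Theta[(s2, a, o)] is the observation probability Theta(s', a, o').
    """
    unnormalized = {}
    for s2 in states:
        predicted = sum(M[(s, a, s2)] * b[s] for s in states)  # P(s'|a,b)
        unnormalized[s2] = Theta[(s2, a, o)] * predicted
    norm = sum(unnormalized.values())                          # P(o'|a,b)
    if norm == 0.0:
        raise ValueError("observation has zero probability under this belief")
    return {s2: v / norm for s2, v in unnormalized.items()}


# Toy model; every number below is hypothetical.
states = ["R", "N"]  # relevant / nonrelevant
M = {
    ("R", "retrieve", "R"): 0.8, ("R", "retrieve", "N"): 0.2,
    ("N", "retrieve", "R"): 0.3, ("N", "retrieve", "N"): 0.7,
}
Theta = {
    ("R", "retrieve", "click"): 0.7, ("R", "retrieve", "skip"): 0.3,
    ("N", "retrieve", "click"): 0.2, ("N", "retrieve", "skip"): 0.8,
}
b0 = {"R": 0.5, "N": 0.5}
b1 = belief_update(b0, "retrieve", "click", states, M, Theta)
```

In this toy model, observing a click shifts the belief towards the relevant state, from 0.5 to roughly 0.81.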

If we define the Q-function (Kaelbling et al. 1998) in the following recursive form,

\[Q(b,a) = r(b,a) + \gamma \sum _{o' \in O} P(o'|a,b) \max _{a'}{Q(b',a')}\]

then \(V(b)=\max \limits _{a}{Q(b,a)}\) holds and the optimal action \(a^{*}\) can be selected according to the following equation.

\[a^{*} = \arg \max \limits _{a}{Q(b,a)}\]

Standard algorithms for solving POMDPs include the Witness algorithm, QMDP, and MC-POMDP (Kaelbling et al. 1998). Reinforcement learning is often used as an effective technique for solving MDPs and POMDPs.
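Of the algorithms listed, QMDP is the simplest to sketch: it solves the underlying fully observable MDP for \(Q_{MDP}(s,a)\) and then approximates \(Q(b,a)\) by \(\sum _{s} b(s) Q_{MDP}(s,a)\), ignoring the value of gathering information. The toy states, actions, transition probabilities, and rewards below are hypothetical, and the fixed iteration count is an assumption that is sufficient only for a problem this small.

```python
def mdp_q_values(states, actions, M, R, gamma=0.9, iters=500):
    """Q-values of the underlying MDP, computed by repeated backups."""
    Q = {(s, a): 0.0 for s in states for a in actions}
    for _ in range(iters):
        V = {s: max(Q[(s, a)] for a in actions) for s in states}
        Q = {
            (s, a): R[(s, a)]
            + gamma * sum(M[(s, a, s2)] * V[s2] for s2 in states)
            for s in states
            for a in actions
        }
    return Q


def qmdp_action(b, states, actions, Q):
    """QMDP rule: pick the action maximizing the belief-weighted Q-value."""
    return max(actions, key=lambda a: sum(b[s] * Q[(s, a)] for s in states))


# Toy model; every number below is hypothetical.
states = ["relevant", "nonrelevant"]
actions = ["keep", "expand"]
M = {
    ("relevant", "keep", "relevant"): 0.9,
    ("relevant", "keep", "nonrelevant"): 0.1,
    ("relevant", "expand", "relevant"): 0.5,
    ("relevant", "expand", "nonrelevant"): 0.5,
    ("nonrelevant", "keep", "relevant"): 0.1,
    ("nonrelevant", "keep", "nonrelevant"): 0.9,
    ("nonrelevant", "expand", "relevant"): 0.8,
    ("nonrelevant", "expand", "nonrelevant"): 0.2,
}
R = {
    ("relevant", "keep"): 1.0, ("relevant", "expand"): 1.0,
    ("nonrelevant", "keep"): 0.0, ("nonrelevant", "expand"): 0.0,
}
Q = mdp_q_values(states, actions, M, R)
a_confident = qmdp_action({"relevant": 0.9, "nonrelevant": 0.1}, states, actions, Q)
a_doubtful = qmdp_action({"relevant": 0.1, "nonrelevant": 0.9}, states, actions, Q)
```

The selected action depends only on the belief, so the same code chooses different actions as the belief shifts between the two hidden states.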

To use POMDP in session search, we can model hidden information via hidden states. Any visible signals in the process could be treated as observations. The interaction between the user and the search engine can be formalized by actions. POMDP also supports reward functions.

2.6 Relationships of POMDP with other Markov models

Figure 3 elaborates the relationships among these Markov models. Models in the left part of Fig. 3, including MAB, MC, and MDP, have completely observable states. By contrast, models in the right part, including HMM and POMDP, have partially observable states, which means they support hidden states. Given an MC, if we make its states hidden and its observable symbols O emitted with the probability specified by \(\varTheta\), then the MC becomes an HMM. The relationship between POMDP and MDP goes the same way, where POMDP is a partially observable version of MDP. The functionalities of actions and rewards in MAB are similar to those in MDP. An MAB is not necessarily Markovian. However, it can be converted into an MDP by enforcing the Markov property. In this respect, MAB is still a kind of fully observable decision process.

Models in the upper part of Fig. 3, including MC and HMM, do not have placeholders for actions and rewards, while models in the lower part, including MDP and POMDP, do. MDP is an extended version of MC augmented with actions and rewards, and POMDP extends HMM in the same way.

POMDP can be viewed as a combination of MDP and HMM, which supports a wide range of desirable features for session search, including the Markov property, hidden states, actions, and rewards. The characteristics in POMDP meet the requirements for session search well, especially when we want to incorporate the hidden information need into a theoretical model.

3 Related work in IR

In this section, we review research on applying MDP, POMDP and reinforcement learning approaches to the IR domain. Although some approaches mentioned here are not based on POMDP or are not designed exactly for session search, they all share the idea of using states, actions, and rewards, and provide useful experience for the development of our POMDP framework.

Guan et al. (2013) proposed the query change model (QCM), an MDP approach for session search, where all the queries in a session were used as states. The idea was based on the intuitive assumption that the retrieved result in each search iteration could be inferred from the queries from the first to the current iteration. Because queries were formulated as states, state transitions were changes between two adjacent queries. They assumed that state transitions arose from search engine actions, which were formalized as adjustments to the weights of search terms. The cumulative score of document relevance was treated as the reward guiding the whole search session.

Researchers have also attempted to model session search with hidden states. This led to the use of POMDP. For instance, Luo et al. (2014) formulated session search as a dual agent stochastic game. They identified two agents in the dynamic interactive search procedure, the user agent and the search engine agent. The agents took turns acting to meet the hidden information need. They designed a small set of four hidden states on two dimensions. One dimension was query-document relevance from the view of the search engine. The other dimension was the “exploration” or “exploitation” search status from the view of the user agent. Both agents updated their belief states based on observations of the current retrieval results. Actions were selected from a fixed set of search methods, termed search technologies, according to the current state of the game resulting from the other agent’s action. This approach used nDCG scores as estimates of future rewards.

Jin et al. (2013) provided another POMDP model for document re-ranking over multiple result pages in order to improve the online diversity of search results by considering implicit user feedback in the exploration search phase. Similar to session search, the task is a kind of interactive search for a single intent. The main difference between this task and session search is that it targets a sequence of search pages for a single query, rather than a series of queries. The hidden states in this model were the probability distribution of document relevance, and the beliefs were derived from a multivariate Gaussian distribution. This approach assumed that document relevance to a single query is not affected by the interactions between the user and the search engine during multi-page re-ranking and is invariant across search pages. Therefore, there was no state transition in its model. This is one major difference between Jin et al. (2013) and other typical POMDP models, including Luo et al. (2014). The search engine actions that they used were ranked lists of documents, which were termed portfolios. The nDCG metric was regarded as a measure of reward in this approach.

Similarly, Zhang et al. (2014) used portfolios as actions when re-ranking documents in session search by using POMDP. In order to reduce the large number of possible actions, they grouped these actions into four high-level action strategies. For the same purpose, a small fixed set of two hidden states was defined to indicate document relevance. Such a state definition could be viewed as a subset of the states in Luo et al. (2014). Zhang et al.’s approach considered both global rewards and local rewards. The former was a measure of the relevance of a document based on multiple users and multiple sessions, and could be estimated from the sessions recorded in the query log. The latter was a measure of document relevance based on the current session.

Prior to these recent MDP and POMDP approaches, earlier work presented similar ideas. Shen et al. (2005) proposed a user model to infer a user’s interest from the search context, such as previous queries and click data in the same search session, for the task of personalized search. The focus of this task is the user, instead of a single query or a series of queries. A user model is maintained for a specific user and all the documents are re-ranked immediately after the model is updated. Under a decision-theoretic framework, they treated Web search as a decision process with user actions, search engine responses to actions, and a user model based on Bayesian decision theory. In order to choose an optimal system response, the framework introduces a loss function defined on the space of responses and user models. The loss function depends on the responses and is thus inevitably application-dependent. This model can detect search session boundaries automatically. However, there are no states and no transitions defined in this model.

In addition to using short-term session context to improve retrieval accuracy, which is the goal of our study, session interaction data has been analyzed for different purposes. Mitra (2015) used embeddings learnt by the convolutional latent semantic model to predict query reformulation behaviours within search sessions. Mitsui et al. (2016) conducted a user study to extract searchers’ intentions from session data. Some other studies focus on modeling and predicting user satisfaction, user struggling, and user behavior after search success (Zhuang et al. 2016; Hassan et al. 2014; Odijk et al. 2015). In another line of research, aspect-based selection of feedback terms in pseudo-relevance feedback has been investigated, which may be suitable for updating the ranked lists in session search (Singh and Croft 2016).

In the following sections, we will discuss the available design choices for using POMDP in session search based on a detailed study on the above closely related approaches.

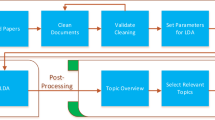

4 Design choices in POMDP

Section 2 suggests that POMDP is the most suitable model for session search among the Markov models. Among all parameters of a POMDP, S, A, and R are the three basic elements. All the other parameters can be easily set up once S, A and R are defined. Although the definitions of these three elements are quite flexible, some principles must be followed to design a robust POMDP model:

- \({\textit{States}}\) should reflect the dynamic changes in the environment during the whole process;

- \({\textit{Actions}}\) must result in changes on the environment state;

- \({\textit{Rewards}}\) can guide the agent to act towards a long term goal as effectively and efficiently as possible.

According to the above principles, we will develop design choices about states, actions, and rewards in our POMDP framework for session search. Experiments in Sect. 6 will evaluate the impact of different choices of these three elements on the search accuracy and search efficiency (Table 2).

4.1 State definition: an art

State definition is essential in the POMDP modeling of session search. As we can see, related research on similar tasks has proposed a variety of state definitions. They include queries (Guan et al. 2013; Hofmann et al. 2011), document relevance (Jin et al. 2013; Zhang et al. 2014), and relevance versus exploration decision making states (Luo et al. 2014). We divide them into two categories as follows.

- (\(S_1\)) Fixed number of states. One tractable way to characterize the search status is using a fixed number of predefined states. For instance, Zhang et al. (2014) used a binary relevance state, “relevant” or “irrelevant”. Luo et al. (2014) defined a set of four hidden states about document relevance and user search status: Relevant exploRation (RR), Relevant exploiTation (RT), NonRelevant exploRation (NRR), and NonRelevant exploiTation (NRT). Intuitively, such a design leads to lower computational cost due to the small size of the state space.

- (\(S_2\)) Varying number of states. Another way to characterize the search status is employing a state space whose size varies through the session, or even an infinite state space. For instance, both Hofmann et al. (2011) and Guan et al. (2013) modeled queries as states, where the number of states changes according to the number of queries in a session. Jin et al. (2013) used the true document relevance distribution as the hidden states. This abstract state space consists of a varying number of real-valued quantities and is therefore infinite.

The large differences in design options for states suggest that state definition could be an art. Under the POMDP framework, researchers can design the states flexibly and creatively to fit into their problems. Some state definitions come from natural observations, e.g., the queries. Others are rather abstract, e.g., the relevance score space.

However, there are some general design guidelines shared by existing approaches. The state definitions are similar to each other because they all try to model the status of information need satisfaction at a certain point of time: right after the user has formulated the current query and before the search engine starts to retrieve documents. Therefore, all state definitions can be unified as an abstract definition of current estimation of search status by the search engine. A particular state definition remains an art, though.
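The two categories of state definitions can be contrasted with a small data-structure sketch. The enumeration below encodes the four hidden states named for \(S_1\), and the list of queries stands in for a query-as-state design under \(S_2\). The uniform initial belief, the class name, and the helper function are assumptions made for illustration.

```python
from enum import Enum


class SearchState(Enum):
    """Fixed set of four hidden states (relevance x search status)."""
    RR = "relevant exploration"
    RT = "relevant exploitation"
    NRR = "nonrelevant exploration"
    NRT = "nonrelevant exploitation"


def uniform_belief(states):
    """Assumed starting point: equal probability for every hidden state."""
    states = list(states)
    return {s: 1.0 / len(states) for s in states}


# S1: the state space has the same size in every session.
fixed_belief = uniform_belief(SearchState)

# S2: with queries as states, the state space grows with the session.
session_queries = ["wiki kansas city tourism", "kansas city hotels airport"]
query_states = list(enumerate(session_queries, start=1))
```

With a fixed state space the belief is always a vector of four numbers, whereas with queries as states the number of states equals the session length so far.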

4.2 Actions: your search algorithm

In one session, there are two autonomous agents, the user and the search engine. Typical user actions include: adding query terms; removing query terms; keeping query terms; clicking on documents; and SAT-clicking on documents (clicking and staying on the page for long enough) (Kim et al. 2014). Typical search engine actions include: increasing, decreasing, or keeping term weights; switching query expansion on or off; adjusting the number of top documents used in Pseudo Relevance Feedback (PRF); and treating the ranked list itself as an action. In this paper, our focus lies on the actions of the search engine. The above search engine actions can be grouped into three categories.

- (\(A_1\)) Technology Selection. Actions can be defined at a meta level. In other words, the decision at each search iteration is to choose among multiple search methods, which we call search technologies. A search technology could be query expansion, pseudo relevance feedback (PRF), spam detection, duplicate detection, etc. An action in this category is to select one technology from a set given in advance, or to adjust the parameters of a technology. The approach presented in Luo et al. (2014) falls in this category. For instance, a parameter that is selected in that approach is the number of top retrieved documents to be included in PRF.

- (\(A_2\)) Term Weight Adjustment. Another feasible idea is to adjust individual term weights directly. The weighting schemes could be increasing term weights, decreasing term weights, or keeping term weights unchanged. A realistic example is Guan et al. (2013), which chooses actions from four types of term weighting schemes (for theme terms, novel added terms, previously retrieved added terms, and removed terms) according to the query changes between adjacent search iterations.

- (\(A_3\)) Portfolio. One straightforward way to model actions is treating the entire ranked list of documents, which is named a portfolio in Jin et al. (2013), as an action. The phrase “ranked list” tends to treat each document as an individual item. By contrast, the term “portfolio” considers all the ranked documents as a whole, so we use this term here. Under such a design, taking an action implies an exhaustive comparison of different portfolios (document lists). The enumeration over all the portfolios often makes the computation infeasible. A more realistic way is to perform the comparison on a sample of possible portfolios.

It is worth noting that action definitions may affect the search efficiency. The above three action options differ in computational complexity. The efficiency of Technology Selection depends on how many technology candidates are included and how efficient each of them is. Term Weight Adjustment requires certain calculations to decide how much weight to adjust. Portfolio could be computationally demanding because it requires a comparison across all possible rankings. Sampling techniques are thus used as a remedy. For instance, Jin et al. (2013) applied Monte Carlo sampling to reduce the computational complexity and approximated the solution in polynomial time. In this sense, the actions are essentially the search algorithm itself.
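To make the cost of the Portfolio option concrete, the following is a minimal sketch that counts the ranked lists of a given length and draws a small random sample of them instead of enumerating all of them. The function names and the uniform sampling scheme are illustrative assumptions; they are not the Monte Carlo procedure of Jin et al. (2013).

```python
import math
import random


def count_portfolios(num_docs: int, list_len: int) -> int:
    """Number of distinct ranked lists of length list_len drawn from num_docs documents."""
    return math.perm(num_docs, list_len)


def sample_portfolios(doc_ids, list_len, num_samples, seed=0):
    """Draw num_samples random ranked lists (hypothetical uniform sampling)."""
    rng = random.Random(seed)
    # rng.sample returns list_len distinct documents in random order,
    # i.e. one candidate portfolio.
    return [tuple(rng.sample(doc_ids, list_len)) for _ in range(num_samples)]


if __name__ == "__main__":
    # Even a short list over a modest candidate set has a huge action space.
    print(count_portfolios(2000, 10))
    print(sample_portfolios(list(range(20)), list_len=5, num_samples=3))
```

Comparing only the sampled portfolios keeps the number of candidate actions fixed at `num_samples`, at the price of possibly missing the best ranking.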

4.3 Reward is the key

In this section, we will discuss how to select among different action candidates based on Rewards. The reward R, which can be viewed as the loss function or risk function in supervised learning, provides the optimization objective to guide the POMDP throughout the entire dynamic process. As a task of document retrieval, the goal of session search is related to document relevance. This is a basis to set up a long term reward for session search.

Existing approaches have employed nDCG (Järvelin and Kekäläinen 2002) or user clicks as their reward functions. The former assesses document relevance explicitly. The latter gives implicit evaluations on relevance. Therefore, we design two categories of rewards as follows.

-

(\(R_1\)) Explicit Feedback. This kind of reward is generated from relevance assessments given by the user directly. Such rewards explicitly need annotation information on documents from users or experts. For instance, both Jin et al. (2013) and Luo et al. (2014) defined rewards using nDCG, which measures the document relevance for a ranked document list.

-

(\(R_2\)) Implicit Feedback. By contrast, this kind of reward is calculated from the interaction data between the user and the search engine, such as dwell time on a document. In this case, no explicit annotation is needed. As an example, Hofmann et al. (2011) used clicks as the rewards in their reinforcement learning style online algorithm.

In the original POMDP, it is necessary to calculate the expectation of accumulated discounted future rewards in order to make an optimal decision at the current iteration. However, when employing POMDP in session search, it is usually difficult to know an accurate reward function in advance. To handle this, we can use the rewards obtained from previous iterations in the same search session or utilize the historical query log to estimate future rewards. Guan et al. (2013) have successfully used past rewards to calculate the long term reward. They claimed that this backwards model better represents user behaviors in session search. However, using future rewards is also possible as long as we can estimate them from other search sessions in the query logs.
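As an illustration of a backward-looking long term reward, the sketch below accumulates rewards already observed in the current session, discounting older iterations more heavily. The function name, the discount factor and the exact form of the sum are assumptions made for illustration; this is not the formulation used by Guan et al. (2013).

```python
def backward_discounted_reward(past_rewards, gamma=0.9):
    """Discounted sum of past rewards, ordered from oldest to most recent.

    The most recent reward has weight 1, the one before it gamma,
    the one before that gamma**2, and so on (hypothetical scheme).
    """
    total = 0.0
    for reward in past_rewards:
        # Each step ages everything seen so far by one iteration.
        total = gamma * total + reward
    return total


if __name__ == "__main__":
    # Three past iterations with per-iteration rewards (e.g. nDCG@10 values).
    print(backward_discounted_reward([0.2, 0.4, 0.3], gamma=0.9))
```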

4.4 Combinations of options

We propose to summarize existing research work (Sect. 3) using a \({\textit{State}}\)/\({\textit{Action}}\)/\({\textit{Reward}}\) design schema. Here we show two options for states, three for actions, and two for reward, which result in a total of \(2\times 3 \times 2=12\) combinations. For example, the search system proposed by Luo et al. (2014) uses a combination of \(S_1A_1R_1\), which means “Fixed number of states”, “Technology Selection” as the actions, and “Explicit Feedback” as the reward. Section 6 examines the design options and reports our findings on their search accuracy and efficiency.
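The design space can be enumerated mechanically. The short sketch below lists the 12 codes; the dictionary and function names are illustrative, while the option labels are those used in this paper.

```python
from itertools import product

STATES = {"S1": "Fixed number of states", "S2": "Varying number of states"}
ACTIONS = {
    "A1": "Technology Selection",
    "A2": "Term Weight Adjustment",
    "A3": "Portfolio",
}
REWARDS = {"R1": "Explicit Feedback", "R2": "Implicit Feedback"}


def design_combinations():
    """Return every State/Action/Reward code, e.g. 'S1A1R1'."""
    return ["".join(codes) for codes in product(STATES, ACTIONS, REWARDS)]


if __name__ == "__main__":
    for code in design_combinations():
        s, a, r = code[:2], code[2:4], code[4:]
        print(code, "=", STATES[s], "+", ACTIONS[a], "+", REWARDS[r])
```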

5 Solution framework for POMDP

The remaining elements in a POMDP are rather standard. We present them as a solution framework for POMDP.

5.1 Observations

Observations O could be any observable data emitted according to the hidden states. \(\varTheta (s,a,o)\) is the observation function, which calculates the probability of observing o at state s after taking action a, i.e. P(o|s, a). The observation function \(\varTheta (\cdot ,\cdot ,\cdot )\) can be defined according to the context of IR applications or estimated based on sample datasets.

Let us use a toy example to demonstrate the idea. Suppose we have only two hidden states, “exploration” and “exploitation”. “Exploration” means a user would like to explore new information in the next search iteration, whereas “exploitation” means the user would like to exploit the current search topic and know more details in the next iteration. For every query in the search log, we can judge whether the user is “exploring” or “exploiting” by reviewing query change and dwell time.

For instance, the first three queries in TREC 2013 session #17 are “jp morgan data centers”, “jp morgan data” and “jp morgan data centers investment”. The first search iteration is annotated with \(s^{(0)}\)=“exploration” since the user is always exploring at the beginning. The corresponding observation \(o^{(0)}\) could be identified by “added terms”, because it has no previous query. The second iteration should be annotated with \(s^{(1)}\)=“exploration” because the second query is more general than the first query. The observation \(o^{(1)}\) is “deleted terms” since the second query is formed by removing “centers” from its previous query. By reviewing the search log, it can be seen that the user did not click on any documents listed for his second query. This indicates that he tends to abandon the exploration. The user’s third query is more specific than the previous two queries. It confirms that he is in \(s^{(2)}\)=“exploitation” state in the third iteration. The observation \(o^{(2)}\) is “added terms”. For the sake of completeness, observations neither “added terms” nor “deleted terms” could be identified as “others”.

After annotating the session search log, an MLE estimate of \(P(o|s)=\frac{\#{\textit{iters}}(s,o)}{\#{\textit{iters}}(s)}\) can be calculated. \(\#{\textit{iters}}(s,o)\) is the number of iterations with hidden state s and observation o. \(\#{\textit{iters}}(s)\) is the number of iterations with hidden state s. We can let this MLE estimate be the observation function \(\varTheta (s,a,o)\). Here, for simplicity, we ignore the action parameter a in the observation function. If we want to consider actions in the estimation of P(o|s, a), we can annotate each search iteration with the action whose result best approximates the returned document list in the search log.
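The counting procedure can be sketched as follows, assuming the annotated log is available as a list of (state, observation) pairs. The function name and the data layout are hypothetical; the three example pairs are the annotations of TREC 2013 session #17 given above.

```python
from collections import Counter


def estimate_observation_function(annotated_iters):
    """MLE of P(o|s) = #iters(s, o) / #iters(s), ignoring the action a."""
    pairs = list(annotated_iters)
    pair_counts = Counter(pairs)                  # #iters(s, o)
    state_counts = Counter(s for s, _ in pairs)   # #iters(s)
    return {
        (s, o): count / state_counts[s]
        for (s, o), count in pair_counts.items()
    }


if __name__ == "__main__":
    session_17 = [
        ("exploration", "added terms"),
        ("exploration", "deleted terms"),
        ("exploitation", "added terms"),
    ]
    for (s, o), p in estimate_observation_function(session_17).items():
        print(f"P({o!r} | {s!r}) = {p:.2f}")
```

With only three iterations the estimates are of course very coarse; in practice the counts would be taken over the whole annotated log.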

5.2 Belief updates

Belief is often set up as the probability distribution over hidden states. The agent needs to update its belief about the hidden states according to the observations and the actions. In a POMDP, the standard formula for belief update is Eq. 3.

For session search, the transition probability \(M(s, a, s')\) in Eq. 3 should be given in advance or learned from training samples. One learning scheme is its MLE estimate \(\frac{\#{\textit{Trans}}(s, a, s')}{\#{\textit{Trans}}(s, a)}\). \(\#{\textit{Trans}}(s, a, s')\) is the number of transitions from s to \(s'\) by taking action a. \(\#{\textit{Trans}}(s, a)\) is the number of transitions of taking action a at s. Based on the manual annotations of hidden states and actions in the session logs, these two quantities can be counted. For instance, in TREC 2013 session #17, if the transition from s=“exploration” to \(s'\)=“exploration” is activated by some action a, it should be counted into \(\#{\textit{Trans}}\)(“exploration”, a) and \(\#{\textit{Trans}}\)(“exploration”, a, “exploration”). By counting transitions in the entire search log, \(M(s, a, s')\) can be calculated. Having the observation function and the transition probabilities, the belief can be updated by Eq. 3.
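The following sketch puts the two pieces together. It assumes that Eq. 3 takes the standard Bayes-rule form \(b'(s') \propto \varTheta (s',a,o)\sum _{s} M(s,a,s')\,b(s)\) and, as in Sect. 5.1, that the observation function ignores the action. The function names and the dictionary-based data layout are hypothetical.

```python
from collections import Counter


def estimate_transition_function(annotated_transitions):
    """MLE of M(s, a, s') = #Trans(s, a, s') / #Trans(s, a)."""
    triples = list(annotated_transitions)
    triple_counts = Counter(triples)
    pair_counts = Counter((s, a) for s, a, _ in triples)
    return {
        (s, a, s_next): count / pair_counts[(s, a)]
        for (s, a, s_next), count in triple_counts.items()
    }


def update_belief(belief, action, observation, transition, observation_fn):
    """One Bayes-rule belief update over the hidden states.

    belief:         {state: probability}
    transition:     {(s, a, s'): probability}
    observation_fn: {(s', o): probability}, action ignored (assumption)
    """
    unnormalized = {}
    for s_next in belief:
        # Prediction step: probability of landing in s_next after the action.
        predicted = sum(
            transition.get((s, action, s_next), 0.0) * p
            for s, p in belief.items()
        )
        # Correction step: weight by the likelihood of the observation.
        unnormalized[s_next] = (
            observation_fn.get((s_next, observation), 0.0) * predicted
        )
    norm = sum(unnormalized.values())
    if norm == 0.0:
        # The observation is impossible under the model; keep the old belief.
        return dict(belief)
    return {s: p / norm for s, p in unnormalized.items()}


if __name__ == "__main__":
    M = estimate_transition_function([
        ("exploration", "a", "exploration"),
        ("exploration", "a", "exploitation"),
    ])
    theta = {
        ("exploration", "added terms"): 0.5,
        ("exploitation", "added terms"): 1.0,
    }
    b0 = {"exploration": 1.0, "exploitation": 0.0}
    print(update_belief(b0, "a", "added terms", M, theta))
```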

Equation 3 is the regular method to update belief with the Bayes rule when applying POMDP to session search or, more generally, to IR. In real applications, if some model parameters, such as \(\varTheta\) or M, are not known to us, Eq. 3 is no longer available. In such a case, we can replace it with a self-defined procedure or algorithm specific to the application to update the agent’s belief over hidden states. Details of such procedures are beyond the scope of this paper. We will investigate them in future work.

6 Experiments

6.1 Task and experiment setup

We evaluate a number of systems, each of which represents a combination of design choices as we mentioned in Sect. 4.4. The session search task is to retrieve 2000 relevant documents for the last query in a session using only the previous interaction data in that session: the queries prior to the current one, the ranked lists of documents, the clicked documents and the time spent on each clicked document. In particular, we focus on the RL4 task in TREC session track 2012 (Kanoulas et al. 2012), and the RL2 task in TREC session track 2013 (Kanoulas et al. 2013). Session logs, including queries, retrieved URLs, Web page titles, snippets, clicks, and dwell time, were generated by the following process. Search topics were provided to the user. The user was then asked to create queries and perform search using a standard search engine provided by TREC. An example search topic is “You just learned about the existence of long-term care insurance. You want to know about it: costs/premiums, companies that offer it, types of policies, ...” (TREC 2013 Session #6).

The dataset used for TREC 2012 is ClueWeb09 CatB, which contains 50 million English Web pages crawled in 2009. The dataset used for TREC 2013 is ClueWeb12 CatB, which contains 50 million English Web pages crawled in 2012. We filter out spam documents in both collections whose Waterloo spam scores (Cormack et al. 2011) are less than 70. All duplicated documents are also removed from the corpora. Table 3 shows the statistics of the TREC 2012 and 2013 Session Track datasets.

For the experiments using SAT clicks, we follow the definition proposed in Fox et al. (2005) and consider a click whose dwell time equals or exceeds 30 seconds as a SAT-click. A more recent study (Kim et al. 2014) showed that dwell time depends on query-click attributes, such as query type and page content. Based on these features, the authors trained a binary classification model to predict SAT-clicks. We leave the use of this model to estimate SAT-clicks for future work.
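The 30-second rule is simple enough to state directly. The sketch below assumes clicks are available as (document id, dwell time in seconds) pairs; the names and the data layout are hypothetical.

```python
SAT_DWELL_SECONDS = 30.0  # threshold following Fox et al. (2005), as stated above


def is_sat_click(dwell_seconds: float) -> bool:
    """A click is a SAT-click if its dwell time equals or exceeds the threshold."""
    return dwell_seconds >= SAT_DWELL_SECONDS


def sat_clicked_docs(clicks):
    """Return the set of document ids that received at least one SAT-click."""
    return {doc_id for doc_id, dwell in clicks if is_sat_click(dwell)}


if __name__ == "__main__":
    session_clicks = [("doc-a", 12.0), ("doc-b", 30.0), ("doc-c", 95.5)]
    print(sorted(sat_clicked_docs(session_clicks)))
```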

We use the evaluation scripts and the ground truth provided by TREC to evaluate the runs. The TREC metrics are mainly about search accuracy, including nDCG@10, nERR@10, nDCG, and MAP (Kanoulas et al. 2013). We also report the retrieval efficiency in Wall Clock Time, CPU cycles and the Big O notation.

6.2 Systems

In this paper, we aim to examine the design choices for using POMDP in session search. Among the 12 combinations mentioned in Sect. 4.4, we have not yet found a realistic way to implement some of them. They include \(S_1A_2R_2\), \(S_1A_3R_1\), \(S_2A_1R_2\), \(S_2A_2R_2\) and \(S_2A_3R_2\). The codes are explained in Table 2. We evaluate the remaining seven choices. We also re-implement UCAIR system (Shen et al. 2005) for comparison. In total, we implement eight systems:

-

\({S_1A_1R_1}\) (win–win) This is a re-implementation of Luo et al.’s system (Luo et al. 2014). Its configuration is “\(S_1\) Fixed number of states” + “\(A_1\) Technology Selection” + “\(R_1\) Explicit Feedback”. Its search engine actions include six retrieval technologies: (1) increasing weights of the added query terms by a factor of \(x \in\) {1.05, 1.10, 1.15, 1.20, 1.25, 1.5, 1.75 or 2}; (2) decreasing weights of the added query terms by a factor of \(y\in\) {0.5, 0.57, 0.67, 0.8, 0.83, 0.87, 0.9 or 0.95}; (3) QCM (Guan et al. 2013); (4) PRF (Pseudo Relevance Feedback) (Salton and Buckley 1997) with the number of top documents p ranging from 1 to 20; (5) LastQuery, which uses the last query in a session to perform document retrieval using Multinomial Language Modeling and Dirichlet Smoothing (Zhai and Lafferty 2002); and (6) AllQuery, which equally weighs and combines all unique query terms in a session and then performs document retrieval as in Zhai and Lafferty (2002). The system employs 20 search engine actions in total and uses nDCG@10 as the reward.

-

\({S_1A_1R_2}\) This is a variation of \(S_1A_1R_1\)(win–win). Its configuration is “\(S_1\) Fixed number of states” + “\(A_1\) Technology Selection” + “\(R_2\) Implicit Feedback”. This system also uses 20 actions. Unlike win–win, its rewards are SAT Clicks (satisfactory clicked documents (Fox et al. 2005)).

-

\({S_1A_2R_1}\) This system’s configuration is “\(S_1\) Fixed number of states” + “\(A_2\) Term Weight Adjustment” + “\(R_1\) Explicit Feedback”. Here we use two states, “Exploitation” and “Exploration” as in the toy example in Sect. 5. The term weights are adjusted similarly to Guan et al. (2013). For example, if the user is currently under “Exploitation” and adds terms to the current query, we let the search engine take an action to increase the weights for the added terms by a factor \(\alpha\), which is empirically set to 1.05.

-

\({S_1A_3R_2}\) This system’s configuration is “\(S_1\) Fixed number of states” + “\(A_3\) Portfolio” + “\(R_2\) Implicit Feedback”. It contains a single state, which is the current query. It uses the last query in a session to retrieve the top X documents as in Zhai and Lafferty (2002) and then re-ranks them to boost the ranks of the SAT Clicked documents. The actions are portfolios, i.e., all possible rankings for the X documents. For each ranked list \(D_i\), the system calculates a reward and selects the ranked list with the highest reward. The reward function is \({\textit{Reward}}(D_i) = \sum _{d \in D_i } \frac{{\textit{Rel}}_{d,{\textit{LM}}} + {\textit{Rel}}_{d,{\textit{LOCAL}}}}{\log _2(1+{\textit{Rank}}_d)}\), where \({\textit{Rel}}_{d,{\textit{LM}}}\) is document d’s relevance score calculated as in Zhai and Lafferty (2002). \({\textit{Rel}}_{d,{\textit{LOCAL}}}\) is the relevance assessment score decided by SAT Clicks: if document d is a SAT Clicked document in the session, then \({\textit{Rel}}_{d,{\textit{LOCAL}}}=2\); otherwise 0. \({\textit{Rank}}_d\) is d’s rank in \(D_i\). \({\textit{Reward}}(D_i)\) suggests that if a document is SAT Clicked in the early part of a session, its rank in the final ranked list should be boosted. The number of actions, i.e. the number of possible ranked lists, is X!, which is computationally demanding. Due to efficiency concerns, we generate the optimal re-ranked list by a greedy algorithm, which first sorts documents by \({\textit{Rel}}_{d,{\textit{LM}}} + {\textit{Rel}}_{d,{\textit{LOCAL}}}\) in descending order and then adds them one by one to the final ranked list. As a result, the number of actions is dramatically reduced to 1.

-

UCAIR This is a re-implementation of Shen et al.’s work (Shen et al. 2005). Every query is a state. Query expansion and re-ranking are the two search technologies. In UCAIR, if a previous query term occurs frequently in the current query’s search results (e.g. in more than 5 of the top K retrieved documents), the term is added to the current query. The expanded query is then used for retrieval. After that, there is a re-ranking phase, where the snippet of each SAT Click, represented as a vector \({\mathbf {v}}_i\), and the current query, represented as another vector \(\mathbf {q}\), are merged by \({\mathbf {v}}=\alpha {\mathbf {q}} + (1-\alpha )\frac{1}{c}\sum _{i=1}^c {\mathbf {v}}_i\), where c is the number of SAT Clicks. A cosine similarity score between \({\mathbf {v}}\) and each of the top K retrieval results is calculated, based on which the documents are re-ranked.

-

\({S_2A_2R_1}\) (QCM) This is a re-implementation of Guan et al.’s system in Guan et al. (2013), which has been elaborated in detail in Sect. 3. Its configuration is “\(S_2\) Varying number of states”+ “\(A_2\) Term Weight Adjustment” + “\(R_1\) Explicit Feedback”. In QCM, every query is a state. The search engine actions are term weight adjustments. QCM’s actions include increasing theme terms’ weights, decreasing added terms’ weights and decreasing removed terms’ weights. We tune the parameters of \(\alpha\), \(\beta\), \(\epsilon\), \(\delta\), and \(\gamma\) on TREC session track 2011 dataset and finally set them to 2.2, 1.8, 0.07, 0.4, and 0.92, respectively.

-

\({S_2A_1R_1}\) This system’s configuration is “\(S_2\) Varying number of states” + “\(A_1\) Technology Selection” + “\(R_1\) Explicit Feedback”. It is built on top of \(S_2A_2R_1\)(QCM). It has two search engine actions: QCM with or without spam detection. The spam detection uses Waterloo’s spam scores. Documents with a score less than 70 are filtered out. The remaining settings are the same as in QCM.

-

\({S_2A_3R_1}\) (IES) This is a re-implementation of Jin et al.’s work (2013). Its configuration is “\(S_2\) Varying number of states” + “\(A_3\) Portfolio” + “\(R_1\) Explicit Feedback”. This system uses the top K documents as pseudo relevance feedback to re-rank the remaining retrieved documents. It assumes each document’s relevance score is a random variable and all documents’ true relevance scores follow a multivariate normal distribution \({\mathcal {N}}(\mathbf {\theta }, \varSigma )\). \(\mathbf {\theta }\) is the mean vector and is set to the relevance scores calculated as in Zhai and Lafferty (2002). \(\varSigma\) is approximated using document cosine similarity. In IES, every possible ranked list is a search engine action. The action space is as big as \({\mathcal {O}}(X^{K})\) if we re-rank the top K documents within the retrieved X documents. In order to reduce the computation cost, IES does not try all the possible document lists. Instead, the re-ranked document list is formed by repeatedly selecting the documents with maximal value scores from the X retrieved documents in a greedy way. Then the remaining \(X-K\) documents are sorted following the traditional IR scores. This is called “Sequential Ranking Decision” in Jin et al. (2013). Moreover, IES employs Monte Carlo Sampling to numerically calculate the integral in its Bellman equation. In our implementation, we set \(Z=3\), \(X=2000\) and \(K=10\), where Z is the sample size in Monte Carlo Sampling.
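For the \(S_1A_3R_2\) system in the list above, the reward and the greedy shortcut can be sketched as follows. The sketch assumes that the language-model scores are supplied as a dictionary and that SAT Clicked documents are given as a set; all function names are hypothetical, and the exhaustive variant is included only to show what the greedy sort avoids.

```python
import math
from itertools import permutations

SAT_BONUS = 2.0  # Rel_{d,LOCAL} for a SAT Clicked document, as stated in the text


def combined_score(doc_id, lm_scores, sat_clicked):
    """Rel_{d,LM} + Rel_{d,LOCAL} for one document."""
    local = SAT_BONUS if doc_id in sat_clicked else 0.0
    return lm_scores[doc_id] + local


def reward(ranked_list, lm_scores, sat_clicked):
    """Reward(D_i): combined scores discounted by log2(1 + rank), ranks from 1."""
    return sum(
        combined_score(d, lm_scores, sat_clicked) / math.log2(1 + rank)
        for rank, d in enumerate(ranked_list, start=1)
    )


def greedy_rerank(doc_ids, lm_scores, sat_clicked):
    """Sort by combined score in descending order (a single candidate list)."""
    return sorted(
        doc_ids,
        key=lambda d: combined_score(d, lm_scores, sat_clicked),
        reverse=True,
    )


def exhaustive_rerank(doc_ids, lm_scores, sat_clicked):
    """Score all X! portfolios; feasible only for very small X."""
    best = max(
        permutations(doc_ids),
        key=lambda p: reward(p, lm_scores, sat_clicked),
    )
    return list(best)


if __name__ == "__main__":
    lm = {"a": 0.9, "b": 0.5, "c": 0.1}
    sat = {"c"}
    print(greedy_rerank(list(lm), lm, sat))
    print(exhaustive_rerank(list(lm), lm, sat))
```

Because the rank discount decreases with rank, placing larger combined scores first cannot lower a reward of this form, which is why the single greedy list can stand in for the full enumeration.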

6.3 Search accuracy

Tables 4 and 5 display the search accuracy of the above systems using TREC’s effectiveness metrics for TREC 2012 and 2013, respectively. In the two tables, both the mean values and the standard deviation values are listed, and the systems are decreasingly sorted by the average nDCG@10 values.

As we can see, \(S_1A_1R_1\) (win–win) outperforms all other systems in both years. For example, in TREC 2012, \(S_1A_1R_1\) (win–win) shows a 37.5% improvement in nDCG@10 and 46.3% in nERR@10 over \(S_2A_2R_1\) (QCM), a strong state-of-the-art session search system which uses a single search technology (Guan et al. 2013). The improvements are statistically significant (\(p<0.05\), t test, one-sided). It also shows 6.0% nDCG and 14.5% MAP improvements over QCM; however, these are not statistically significant. Another system, \(S_2A_1R_1\), which also uses technology selection, improves by 25.3% in nDCG@10 and 34.9% in nERR@10 over QCM. These improvements are also statistically significant (\(p<0.05\), t test, one-sided).

This suggests that “\(A_1\) Technology Selection”, the meta-level search engine action, is superior to a single search technology, for example, term weight adjustment in QCM. Moreover, \(S_1A_1R_1(\hbox {win}-\hbox {win})\) performs even better than \(S_2A_1R_1\), where the former uses more search technologies than the latter. We therefore suggest that using more alternative search technologies can be very beneficial to session search.

The results of the \(S_1A_1R_1\) and \(S_1A_1R_2\) systems allow us to see clearly the impact of the user’s feedback type. The first system, \(S_1A_1R_1\), uses nDCG@10 as the reward, assuming explicit feedback from the user, while the latter is based on implicit user feedback, where rewards are defined based on SAT clicks, which can be a noisy indicator of document relevance. Figure 4 depicts the performance difference between the two systems in terms of all evaluation metrics over the TREC 2012 and 2013 datasets. The system using explicit user feedback consistently outperforms the one using implicit feedback in all metrics. The substantial differences between the performance of the two systems demonstrate how noisy implicit feedback impacts learning of the optimal policy. Therefore, learning the optimal policy in the case of implicit feedback requires more interaction data and/or techniques that boost the learning process.

6.4 Efficiency

In this section, we report the efficiency of the various systems on a machine with 4 CPU cores (2.70 GHz), 32 GB Memory, and 22 TB NAS.

Table 6 presents the wall clock running time, CPU cycles, as well as the Big O notation for each system over the TREC 2012 and 2013 Session Track datasets. The systems are decreasingly ordered by wall clock time, which is measured in seconds.

The experiment shows that \(S_1A_3R_2\), \(S_1A_2R_1\), UCAIR, \(S_2A_2R_1\)(QCM) and \(S_2A_1R_1\) are quite efficient and finished within 2.5 h. \(S_1A_1R_1\)(win–win) and \(S_1A_1R_2\) also show moderate efficiency and finished within 9 h.

\(S_2A_3R_1\)(IES) is the slowest system, taking 27 h to finish. We investigate the reasons behind its slowness. Based on Algorithm 1 for IES in Jin et al. (2013), the system first retrieves X documents using a standard document retrieval algorithm (Zhai and Lafferty 2002), then it obtains the top K documents among the X documents by re-ranking. The algorithm has three nested loops. The first loop enumerates each rank position and its time complexity is O(K). The second loop iterates over each retrieved document, thus its time complexity is O(X). Inside the second loop, it first samples Z documents from the top K documents, then runs the third loop. The third loop enumerates each sample and has a time complexity of O(Z). Inside the third loop, there is a matrix multiplication for every retrieved document, which alone contributes a time complexity of \(O(X^2)\). Therefore, IES’s total time complexity is \(O(KZX^3)\), which makes IES computationally demanding.
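A back-of-the-envelope calculation shows the scale implied by this bound for the settings used in our implementation (\(K=10\), \(X=2000\), \(Z=3\)). The function below simply multiplies the loop sizes described above; it counts abstract basic steps, not measured CPU cycles, and its name is hypothetical.

```python
def ies_step_count(K: int, X: int, Z: int) -> int:
    """Basic steps implied by the three nested loops of IES.

    K rank positions x X retrieved documents x Z samples,
    each with an O(X^2) matrix multiplication.
    """
    per_matrix_multiplication = X * X
    return K * X * Z * per_matrix_multiplication


if __name__ == "__main__":
    steps = ies_step_count(K=10, X=2000, Z=3)
    print(f"{steps:,} basic steps per query")
```

The cubic dependence on X dominates: halving X to 1000 would cut the count by a factor of eight, whereas halving K or Z only halves it.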

We also look into the time complexity of other systems and present their Big O notations in Table 6. We use O(L) to describe the time complexity of performing one standard document retrieval as in Zhai and Lafferty (2002) and O(X) to describe the time complexity of re-ranking X documents. We notice that \(S_2A_2R_1\)(QCM), UCAIR and \(S_1A_2R_1\) only perform one document retrieval, hence their time complexity is O(L). \(S_1A_1R_2\), \(S_1A_1R_1\)(win–win) and \(S_2A_1R_1\) conduct l document retrievals, hence their time complexity is O(lL). \(S_1A_3R_2\) performs one document retrieval and one document re-ranking, hence its time complexity is \(O(L + X)\). Their time complexities range from linear, e.g. O(L) or O(X), to quadratic, e.g. O(lL), which suggests that these systems are efficient.

We suspect an association between efficiency and the number of actions present in the system. Therefore, we plot the efficiency with respect to the number of actions in Fig. 5. The figure shows that in TREC 2012, the systems’ running time increases as the number of actions increases. This suggests that the number of actions used in POMDP is another important factor in determining its running time, besides time complexity. We do not observe a similar relationship between actions and accuracy, though (Fig. 6).

6.5 Tradeoff between accuracy and efficiency

Based on the search accuracy and efficiency results, we observe a trade-off between them. In Figs. 7 and 8, we place the systems in a two-dimensional plot about accuracy and efficiency. They show that accuracy tends to increase when efficiency decreases. This is because systems with higher accuracy tend to be more computationally demanding. For instance, \(S_1A_1R_1\)(win–win) achieves better accuracy than \(S_2A_1R_1\) while it has worse efficiency than \(S_2A_1R_1\).

In addition, we find that QCM strikes a good balance between search accuracy and efficiency. With a simple feedback mechanism based on the vector space model, this system reaches high efficiency while still achieving quite good nDCG@10.

6.6 Action selection

It is interesting to see how likely an action will be selected by a POMDP framework. We thus look into individual search actions in the \(S_1A_1R_1\)(win–win) system, which includes the biggest number of actions from six retrieval technologies: (1) increase term weight; (2) decrease term weight; (3) QCM; (4) PRF; (5) LastQuery and (6) AllQuery.

Figure 6 illustrates how likely an action was selected by \(S_1A_1R_1\)(win–win). Among all six retrieval technologies, QCM is the most frequent choice and was selected over half of the time. It suggests that the strategies for term weight adjustment proposed in QCM are highly effective. On the other hand, AllQuery has never been selected. In AllQuery, unique query terms are treated equally and their original term weights are kept. The result suggests that adjusting term weights based on their changing importance at moments in a session is far better than applying no change to the term weights.

7 Our recommendations

Suppose there is a TREC Session Track participant who would like to know how to model session search in order to finish a TREC run within 1 day, or within 1 h. What are the best settings for a POMDP model to be successful in session search? We make recommendations according to our findings under the following circumstances:

-

Dataset size: The TREC 2013 Session dataset contains 442 queries in 87 sessions. The corpus, ClueWeb12 CatB, contains 35 million documents, which take up 500 GB physical space, plus a full text index created by Lemur which takes up additional 158 GB.

-

Hardware Environment: 4 CPU cores (Intel Xeon E5-2680 @ 2.70 GHz), 32 GB Memory, and 22 TB NAS.

Our recommendation is the following. If the time constraint is to finish a run within 1 day, we recommend \(S_1 A_1 R_1\) (win–win), whose settings are “Fixed number of states”, “Technology Selection”, and “Explicit Feedback” as the reward, for its highest search accuracy (Tables 5, 6). All other approaches, except \(S_2 A_3 R_1\)(IES), are also able to finish within 1 day.

If the time constraint is tightened up to 1 h, our recommendation will become \(S_2 A_2 R_1\)(QCM), whose settings are “Varying number of states”, “Term Weight Adjustment” and “Explicit Feedback” as the reward, for its high accuracy within the time constraint. In addition, we also recommend UCAIR, which is the runner-up in search accuracy among runs finishing within 1 h, while only taking half as much time as QCM. We recommend this approach for its good balance between accuracy and efficiency.

Comparing these systems under different time constraints, we noticed that the number of actions heavily influences the efficiency. Specifically, using more actions may benefit the search accuracy while hurting the efficiency. For instance, with a lot of action candidates, \(S_1 A_1 R_1\) (win–win) outperforms other runs in accuracy. However, the cost of having more actions in the model is that it requires more calculations and longer retrieval time. Therefore, when creating a new POMDP model, we recommend carefully choosing the total number of actions to balance accuracy and efficiency.

8 Conclusion

This paper aims to provide guidelines for designing new POMDP models to tackle session search and other related IR tasks. We first compare POMDP with its close family of Markov models and suggest that POMDP best suits session search, which is characterized by rich interactions and temporal dependency. We then examine related IR approaches and group their use of MDP, POMDP and RL into major design options. The design options are evaluated extensively on the TREC 2012 and 2013 Session Track datasets, focusing on search accuracy and efficiency. Then, we make recommendations for a typical session search task for IR researchers and practitioners to use POMDP in session search. From our experiments, we have learned that a model with more action options tends to have better accuracy but worse efficiency. This once again underscores the importance of striking a good balance between accuracy and efficiency. We hope our work can motivate the use of POMDP and other Markov models for session search.

References

Altman, A., & Tennenholtz, M. (2005). Ranking systems: The pagerank axioms. In Proceedings of the 6th ACM conference on electronic commerce, EC ’05 (pp. 1–8).

Baum, L. E., & Petrie, T. (1966). Statistical inference for probabilistic functions of finite state markov chains. The Annals of Mathematical Statistics, 37(6), 1554–1563.

Bellman, R. (1957). Dynamic programming. Princeton: Princeton University Press.

Blumenthal, R., & Getoor, R. (1968). Markov processes and potential theory. New York: Academic Press.

Chilton, L. B., & Teevan, J. (2011). Addressing people’s information needs directly in a web search result page. In Proceedings of the 20th international conference on world wide web, WWW ’11 (pp. 27–36).

Cormack, G. V., Smucker, M. D., & Clarke, C. L. (2011). Efficient and effective spam filtering and re-ranking for large web datasets. Information Retrieval, 14(5), 441–465.

Fox, S., Karnawat, K., Mydland, M., Dumais, S., & White, T. (2005). Evaluating implicit measures to improve web search. ACM Transactions on Information Systems (TOIS), 23(2), 147–168.

Guan, D., Zhang, S., & Yang, H. (2013). Utilizing query change for session search. In Proceedings of the 36th international ACM SIGIR conference on research and development in information retrieval, ACM, New York, SIGIR ’13 (pp. 453–462).

Hassan, A., White, R. W., Dumais, S. T., & Wang, Y. M. (2014). Struggling or exploring?: Disambiguating long search sessions. In Proceedings of the 7th ACM international conference on web search and data mining, ACM, New York, NY, USA, WSDM ’14 (pp. 53–62). doi:10.1145/2556195.2556221.

Hofmann, K., Whiteson, S., & de Rijke, M. (2011). Balancing exploration and exploitation in learning to rank online. In Proceedings of the 33rd European conference on advances in information retrieval, ECIR’11 (pp. 251–263).

Järvelin, K., & Kekäläinen, J. (2002). Cumulated gain-based evaluation of ir techniques. ACM Transactions on Information Systems (TOIS), 20(4), 422–446.

Jin, X., Sloan, M., & Wang, J. (2013). Interactive exploratory search for multi page search results. In Proceedings of the 22nd international conference on world wide web, WWW ’13 (pp. 655–666).

Joachims, T. (1997). A probabilistic analysis of the Rocchio algorithm with TFIDF for text categorization. In Proceedings of the fourteenth international conference on machine learning, ICML ’97 (pp. 143–151).

Kaelbling, L., Littman, M., & Cassandra, A. (1998). Planning and acting in partially observable stochastic domains. Artificial Intelligence, 101(1–2), 99–134.

Kanoulas, E., Carterette, B., Hall, M., Clough, P., & Sanderson, M. (2012). Overview of the TREC 2012 session track. In Proceedings of the 21st text retrieval conference, TREC ’12.

Kanoulas, E., Carterette, B., Hall, M., Clough, P., & Sanderson, M. (2013). Overview of the TREC 2013 session track. In Proceedings of the 22nd text retrieval conference, TREC ’13.

Katehakis, M. N., & Veinott, A. F. (1987). The multi-armed bandit problem: Decomposition and computation. Mathematics of Operations Research, 12(2), 262–268.

Kim, Y., Hassan, A., White, R. W., & Zitouni, I. (2014). Modeling dwell time to predict click-level satisfaction. In Proceedings of the 7th ACM international conference on web search and data mining, ACM, New York, NY, USA, WSDM ’14 (pp. 193–202).

Kotov, A., Bennett, P. N., White, R. W., Dumais, S. T., & Teevan, J. (2011). Modeling and analysis of cross-session search tasks. In Proceedings of the 34th international ACM SIGIR conference on research and development in information retrieval, ACM, New York, NY, USA, SIGIR ’11 (pp. 5–14).

Luo, J., Zhang, S., & Yang, H. (2014). Win–win search: Dual-agent stochastic game in session search. In Proceedings of the 37th international ACM SIGIR conference on research and development in information retrieval, SIGIR ’14.

Miller, D. R. H., Leek, T., & Schwartz, R. M. (1999). A hidden Markov model information retrieval system. In Proceedings of the 22nd annual international ACM SIGIR conference on research and development in information retrieval, SIGIR ’99 (pp. 214–221).

Mitra, B. (2015). Exploring session context using distributed representations of queries and reformulations. In Proceedings of the 38th international ACM SIGIR conference on research and development in information retrieval, SIGIR ’15 (pp. 3–12). doi:10.1145/2766462.2767702.

Mitsui, M., Shah, C., & Belkin, N. J. (2016). Extracting information seeking intentions for web search sessions. In Proceedings of the 39th international ACM SIGIR conference on research and development in information retrieval, SIGIR ’16 (pp. 841–844). doi:10.1145/2911451.2914746.

Norris, J. R. (1998). Markov chains. Cambridge: Cambridge University Press.

Odijk, D., White, R. W., Hassan Awadallah, A., & Dumais, S. T. (2015). Struggling and success in web search. In Proceedings of the 24th ACM international on conference on information and knowledge management, CIKM ’15 (pp. 1551–1560). doi:10.1145/2806416.2806488.

Rabiner, L. R. (1989). A tutorial on hidden Markov models and selected applications in speech recognition. Proceedings of the IEEE, 77(2), 257–286.

Radlinski, F., Kleinberg, R., & Joachims, T. (2008). Learning diverse rankings with multi-armed bandits. In Proceedings of the 25th international conference on machine learning, ICML ’08 (pp. 784–791).

Raman, K., Bennett, P. N., & Collins-Thompson, K. (2013). Toward whole-session relevance: Exploring intrinsic diversity in web search. In Proceedings of the 36th international ACM SIGIR conference on research and development in information retrieval, SIGIR ’13 (pp. 463–472).

Salton, G., & Buckley, C. (1997). Improving retrieval performance by relevance feedback. In K. S. Jones & P. Willett (Eds.), Readings in information retrieval. San Francisco: Morgan Kaufmann.

Shen, X., Tan, B., & Zhai, C. (2005). Implicit user modeling for personalized search. In Proceedings of the 14th ACM international conference on information and knowledge management, CIKM ’05 (pp. 824–831).

Singh, M., & Croft, W. B. (2016). Iterative search using query aspects. In Proceedings of the 25th ACM international on conference on information and knowledge management, CIKM ’16 (pp. 2037–2040). doi:10.1145/2983323.2983903.

Sondik, E. (1978). The optimal control of partially observable Markov processes over the infinite horizon: Discounted cost. Operations Research, 26(2), 282–304.

Yuan, S., & Wang, J. (2012). Sequential selection of correlated ads by POMDPs. In Proceedings of the 21st ACM international conference on information and knowledge management, CIKM ’12 (pp. 515–524).

Zhai, C., & Lafferty, J. (2002). Two-stage language models for information retrieval. In Proceedings of the 25th annual international ACM SIGIR conference on research and development in information retrieval, SIGIR ’02 (pp. 49–56).

Zhang, S., Luo, J., & Yang, H. (2014). A POMDP model for content-free document re-ranking. In Proceedings of the 37th international ACM SIGIR conference on research and development in information retrieval, SIGIR ’14 (pp. 1139–1142).

Zhuang, M., Toms, E. G., & Demartini, G. (2016). Search behaviour before and after search success. In Proceedings of the second international workshop on search as learning, SAL 2016, co-located with the 39th international ACM SIGIR conference on research and development in information retrieval, SIGIR 2016, Pisa, Italy.

Acknowledgements

This research was supported by NSF CAREER IIS-1453721, NSF CNS-1223825, and DARPA FA8750-14-2-0226. The U.S. Government is authorized to reproduce and distribute reprints for Governmental purposes notwithstanding any copyright notation thereon. We also thank the China Scholarship Council for its sponsorship. Any opinions, findings, conclusions, or recommendations expressed in this paper are those of the authors and do not necessarily reflect those of the sponsors.

Cite this article

Yang, G.H., Dong, X., Luo, J. et al. Session search modeling by partially observable Markov decision process. Inf Retrieval J 21, 56–80 (2018). https://doi.org/10.1007/s10791-017-9316-8

DOI: https://doi.org/10.1007/s10791-017-9316-8