Abstract

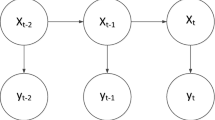

Semi-supervised learning is drawing increasing attention in the era of big data, as the gap between the abundance of cheap, automatically collected unlabeled data and the scarcity of labeled data that are laborious and expensive to obtain is dramatically increasing. In this paper, we first introduce a unified view of density-based clustering algorithms. We then build upon this view and bridge the areas of semi-supervised clustering and classification under a common umbrella of density-based techniques. We show that there are close relations between density-based clustering algorithms and the graph-based approach for transductive classification. These relations are then used as a basis for a new framework for semi-supervised classification based on building-blocks from density-based clustering. This framework is not only efficient and effective, but it is also statistically sound. In addition, we generalize the core algorithm in our framework, HDBSCAN*, so that it can also perform semi-supervised clustering by directly taking advantage of any fraction of labeled data that may be available. Experimental results on a large collection of datasets show the advantages of the proposed approach both for semi-supervised classification as well as for semi-supervised clustering.

Similar content being viewed by others

1 Introduction

Semi-supervised learning algorithms tackle cases where a relatively small amount of labeled data yet a large amount of unlabeled data is available for training (Chapelle et al. 2006; Zhu and Goldberg 2009). We find examples of semi-supervised learning scenarios in various fields, such as email filtering, sound/speech recognition, text/webpage classification, and compound discovery, just to mention a few. For instance, in areas such as biology, chemistry, and medicine, domain experts and laboratory analyses may be required to label observations, thus only a small collection of labeled data can usually be afforded, which may not be representative enough for supervised learning to be applied (Batista et al. 2016).

Typically, semi-supervised learning algorithms are based on extensions of either supervised or unsupervised algorithms by including additional information in the form originally handled by the other learning paradigm. For instance, in semi-supervised clustering, a collection of labeled observations can be used to guide the (otherwise unsupervised) search for clustering solutions that better meet users’ prior expectations. Labels in clustering only indicate whether observations are expected to be part of the same cluster or different clusters, there is no one-to-one association between the unique labels known to the user and the possible categories to be discovered in the data. In semi-supervised classification, the classes are known in advance, and unlabeled observations are used in addition to the labeled ones to improve on (the otherwise supervised) classification performance.

Semi-supervised learning can be categorized into inductive and transductive learning. Inductive learning uses both labeled and unlabeled training data to generate a model able to predict the labels for the unlabeled training data as well as for future data to be labeled. Transductive learning predicts the labels of the unlabeled training data only (from which a model may optionally be derived afterwards, if prediction of new, unseen data objects is required). In transductive classification, which is the focus of the first part of this paper, a large amount of unlabeled objects can be classified based on a small fraction of labeled objects, which is not representative enough to successfully train a classifier in a traditional, fully supervised way. Label-based semi-supervisedclustering, which is the focus of the second part of this paper, is intrinsically transductive in its nature. The main difference from transductive classification is that in clustering not necessarily all possible categories and their labels are known in advance, so unlabeled objects may be assigned newly discovered labels that are not present in the original training set.

In the case of semi-supervised classification, unlabeled data can help improve classification performance when there is a good match between the problem structure and the model’s assumptions: “...there’s no free lunch. Bad matching of problem structure with model assumption can lead to degradation in classifier performance” (Zhu 2005). Different models of semi-supervised classification exist, relying on different model assumptions (Zhu 2005). One of the major paradigms, clustering-based models, follows the well-known cluster assumption of semi-supervised classification:

Assumption 1

(Cluster assumption; Chapelle et al. 2006) If points are in the same cluster, they are likely to be of the same class.

This assumption is quite general and broad in scope as there are many possible interpretations of “cluster”, under different clustering paradigms. An important, statistically sound paradigm is density-based clustering (Kriegel et al. 2011), where clusters are defined as high-density data regions separated by low-density regions. Under this paradigm, Assumption 1 is closely related to another common assumption in semi-supervised classification:

Assumption 2

(Smoothness assumption; Chapelle et al. 2006) The label function is smoother in high-density than in low-density regions...If two points in a high-density region are close, then so should be their outputs (labels)...If, on the other hand, they are separated by a low-density region, then their outputs need not be close.

According to Chapelle et al. (2006), in the context of classification and from a density-based clustering perspective, Assumptions 1 and 2 are equivalent to each other and can be read as “The decision boundary should lie in a low-density region (low density separation)”. In spite of the obvious connections between these two areas, however, the use of density-based clustering for semi-supervised classification has been surprisingly overlooked in the literature. Many methods focus instead on the use of graphs (as opposed to clusters) to model the notions of locality and connectivity of the data (de Sousa et al. 2013). Such graph-based methods mostly rely on the following model assumption:

Assumption 3

(Graph assumption; Zhu and Goldberg 2009) Class labels are “smooth” with respect to the graph, so that they vary slowly, i.e., if two points are connected by a strong edge, their labels tend to be the same.

In this paper we show that there is a strong relation between density-based clustering methods and the graph-based approach for transductive classification, by first establishing formal relationships between a number of key unsupervised and semi-supervised clustering algorithms under a unified view of density-based clustering, then establishing the links and interpretations of these algorithms from the perspective of graph theory. Taking advantage of such a unified view, we then firstly introduce a framework of density-based clustering for semi-supervised classification that brings the three assumptions above (namely cluster, smoothness, and graph) under a common umbrella. In this context, we make the following initial contributions: (a) our framework extends the state-of-the-art density-based hierarchical clustering algorithm HDBSCAN* (Campello et al. 2015), originally proposed as an unsupervised or (constraint-based) semi-supervised clustering algorithm, to perform transductive classification from a small collection of pre-labeled data objects; (b) we show that, in the context of transductive classification, other well-known density-based algorithms for semi-supervised clustering can also be derived as particular cases, with the advantage that our framework eliminates possible order-dependency and graph re-computation issues of these algorithms, while being simpler and easier to interpret; and (c) by combining building blocks from different algorithms, a number of novel variants follow naturally from our framework, which, to the best of our knowledge, have never been tried before.

We published the aforementioned contributions in a preliminary conference paper (Gertrudes et al. 2018). The current paper is an extension of this preliminary publication that expands our unified view of density-based methods from the semi-supervised classification scenario to the label-based semi-supervised clustering scenario, where labels for certain categories may be missing in the training set. As a novel contribution in this context, we extend HDBSCAN*, which plays a central role in our unified view and framework for density-based classification, to also perform semi-supervised clustering from a collection of pre-labeled data objects, rather than instance-level pairwise constraints (as currently supported by the algorithm). The direct use of labels can be shown to be both simpler and more effective. To that end, a new collection of experiments focused on clustering has also been included as extended material in this paper, in addition to the classification experiments from our preliminary publication (Gertrudes et al. 2018).

The remainder of this paper is organized as follows: in Sect. 2 we discuss related work that is close to our approach. In Sect. 3 we present our unified view of density-based clustering algorithms that bridges between the areas of semi-supervised clustering and classification. In Sect. 4 we introduce our framework for density-based semi-supervised classification. In Sect. 5 we present our newly proposed strategy to perform label-based semi-supervised clustering. In Sects 6 and 7 we discuss our experiments and results, respectively. Finally, in Sect. 8 we conclude the paper and discuss some future work.

2 Related work

In the context of semi-supervised classification, different categories of algorithms have been described in the literature (Zhu 2005). Closer to our work are the clustering-based and the graph-based approaches. Graph-based algorithms construct a graph with vertices from both labeled and unlabeled objects. Generally, neighboring vertices are connected by edges such that edge weights are proportional to some measure of local connectivity strength (de Sousa et al. 2013). Once the neighborhood graph is built, labels can be somehow transfered from labeled to unlabeled objects, e.g., using Markov chain propagation techniques (Szummer and Jaakkola 2002) or regularized methods based on the graph Laplacian (Zhao et al. 2006). The Laplacian SVM (LapSVM) (Belkin et al. 2006) is related to the latter category and is a state-of-the-art algorithm in the semi-supervised classification literature.

A well-known strategy for label propagation in graph-based semi-supervised classification is the use of a so-called harmonic function. In this context, a harmonic function is a function that has the same values as the labels on the labeled data, and satisfies the weighted average property on the unlabeled data, i.e., the value assigned to each unlabeled object is the weighted average of the values of its neighbors. In a binary classification problem, the class labels are coded, e.g., as \(\{-1,+1\}\), and these values are assigned to the vertices corresponding to the labeled objects. Each remaining (unlabeled) object has its value determined as the average of the values of its adjacent vertices in the neighborhood graph, weighted by the corresponding edge weights. The resulting real values, which allow for different physical and probabilistic interpretations, can be discretized back into \(\{-1,+1\}\) to achieve the final transductive classification. A classic algorithm that follows this type of approach is the Gaussian Field Harmonic Function (GFHF) (Zhu et al. 2003).

To address multi-class semi-supervised classification, Liu and Chang (2009) formulated a constrained label propagation problem by incorporating class priors, leading to a simple closed-form solution. The algorithm, called Robust Multi-Class Graph Transduction (RMGT), is an extension of the GFHF algorithm, which can be viewed as a constrained optimization problem using a graph Laplacian as smoothness measure (de Sousa 2015). Both RMGT and GFHF, as well as the previously mentioned LapSVM algorithm, are used as baseline for comparisons in our experimental evaluation.

In contrast to graph-based methods, clustering-based algorithms for semi-supervised classification perform label transduction based on the clustering structure of the data, rather than by using an explicit graph (Zhu and Goldberg 2009). However, since certain clustering techniques are implicitly or explicitly built upon graphs and related algorithms, there are connections between these two different paradigms of semi-supervised learning, which are investigated in this paper. Of particular interest in our context are density-based clustering methods (Kriegel et al. 2011), which are popular in the data mining field as a statistically sound approach that has also been used for semi-supervised classification, and has also been shown to have strong connections with elements from graph theory (Campello et al. 2015). Two noticeable algorithms in this context are HISSCLU (Böhm and Plant 2008) and Semi-Supervised DBSCAN (SSDBSCAN) (Lelis and Sander 2009), both of which are built upon notions inherited from two classic, widely used unsupervised density-based clustering algorithms, namely, OPTICS (Ankerst et al. 1999) and DBSCAN (Ester et al. 1996).

SSDBSCAN (Lelis and Sander 2009), which has more recently also been extended to the active learning scenario (Li et al. 2014), was in principle proposed as a semi-supervised clustering method, which does not necessarily label all objects (as it would normally be expected in transductive classification), but rather leave certain objects unlabeled as noise, as usual (and meaningful) in density-based clustering applications. Despite this, the algorithm explicitly relies on a classification assumption:

Assumption 4

(Classification assumption) There is at least one (possibly more) labeled object from each class.

Unlike SSDBSCAN, HISSCLU (Böhm and Plant 2008) already includes an extended label-propagation scheme that assigns a label from the training set to every unlabeled object in the database. It further differs from SSDBSCAN in that it also includes a preprocessing mechanism to widen the gap between nearby classes by stretching distances between objects around class boundaries. This mechanism allows HISSCLU to expand different class labels even within clusters that are formed by more than one class (i.e., clusters of objects that are density connected but not pure in their labels). SSDBSCAN, in contrast, makes the label consistency assumption:

Assumption 5

(Label consistency assumption) “Label consistency requires different labels to belong to different clusters; under this assumption, a single class can still have multiple modes [clusters or sub-clusters]; in other words, objects in different clusters can have the same label, only in a single cluster the labels have to be the same.” (Lelis and Sander 2009)

From this perspective, SSDBSCAN relies more strictly than HISSCLU on the clustering assumption of semi-supervised classification (Assumption 1). Unlike SSDBSCAN, which was originally proposed for the semi-supervised clustering task, HISSCLU can perform both semi-supervised classification and clustering. Both remain state-of-the-art algorithms in the density-based literature, so they are also used as baseline for comparisons in our experimental evaluation.

Apart from SSDBSCAN and HISSCLU, very few algorithms exist in the realm of semi-supervised density-based clustering. Ruiz et al. (2007, 2010) proposed C-DBSCAN, which is a modified version of DBSCAN designed to cope with instance-level constraints. However, C-DBSCAN has the same limitation as DBSCAN in that it uses a single, critical global density threshold determined by two user-defined parameters. In addition, the algorithm enforces constraints in a hard sense, i.e., clusters under cannot-link constraints are not allowed to be formed and different clusters under must-link constraints are forced to be merged. Hence, while satisfying the user-provided constraints, the algorithm violates the implicit assumptions behind the clustering model adopted, namely, the definitions of density connectivity and density-based clusters.

An algorithm of particular interest that does not suffer from any of the above limitations is HDBSCAN* (Campello et al. 2013a, 2015). Originally, HDBSCAN* was proposed as a method for unsupervised or (constraint-guided) semi-supervised density-based clustering. In this paper, HDBSCAN* is extended in two different ways: first, it is extended to also perform semi-supervised classification via label propagation; second, it is extended to perform semi-supervised clustering directly from labels, rather than instance-level pairwise constraints.

In the following section we discuss in more detail fundamental concepts and ideas underpinning the algorithms DBSCAN, OPTICS, SSDBSCAN, HISSCLU, and HDBSCAN*, while establishing the links between these algorithms as well as their connections with graph theory, which will be subsequently required to understand our proposed unified approach for density-based semi-supervised clustering and classification.

3 A unified view of density-based clustering algorithms

In this document, we adopt the following notations: \(\mathbf {X} = \{\mathbf {x}_{1}, \mathbf {x}_{2}, \ldots , \mathbf {x}_{n}\}\) is a dataset with n data objects, \(\mathbf {x}_{i}\). Some of the algorithms described in this paper assume that data objects are points in a d-dimensional Euclidean space, i.e., \(\mathbf {x}_{i} \in \mathbb {R}^{d}\) is a d-dimensional feature vector with real-valued coordinates (\(\mathbf {x}_{i} = [x_{i1} \cdots x_{id}]^{{\textsf {T}}}\)). Others do not make any assumptions about features and only require a measure of dissimilarity between pairs of data objects, \(d(\mathbf {x}_{i},\mathbf {x}_{j})\), in order to operate. This dissimilarity is assumed to be a distance but not necessarily a metric. \(\mathbf {X}_{L} \subset \mathbf {X}\) is a subset of the data objects for which class labels are available, and \({{\,\mathrm{class}\,}}(\mathbf {x}_{i})\) is the class label of object \(\mathbf {x}_{i} \in \mathbf {X}_{L}\). The subset of unlabeled objects is denoted by \(\mathbf {X}_{U}\), such that \(\mathbf {X}_{L} \cup \mathbf {X}_{U} = \mathbf {X}\) and \(\mathbf {X}_{U} = \mathbf {X}~\backslash ~ \mathbf {X}_{L}\).

3.1 DBSCAN*, DBSCAN, and OPTICS

A number of concepts used later in this work refer back to ideas and definitions from DBSCAN (Ester et al. 1996), OPTICS (Ankerst et al. 1999), and related algorithms. We start describing DBSCAN*, which is a simplified version of DBSCAN defined in terms of core and noise objects only (Campello et al. 2013a):

Definition 1

(Core and noise) An object \(\mathbf {x}\) is called a core object w.r.t. \(\epsilon \in \mathbb {R}_{\ge 0}\) and \(m_{\text {pts}} \in \mathbb {N}_{> 0}\) if its \(\epsilon \)-neighborhood (a ball of radius \(\epsilon \) centered at \(\mathbf {x}\)) contains at least \(m_{\text {pts}}\) many objects, i.e., if \(|N_{\epsilon }(\mathbf {x})| \ge m_{\text {pts}}\), where \(N_{\epsilon }(\mathbf {x})=\{ \mathbf {x}_{i} \in \mathbf {X} ~|~ d(\mathbf {x}, \mathbf {x}_{i}) \le \epsilon \}\) and \(| \cdot |\) stands for set cardinality. An object is called noise if it is not a core object.

Definition 2

(\(\epsilon \)-reachable) Two core objects \(\mathbf {x}_{i}\) and \(\mathbf {x}_{j}\) are \(\epsilon \)-reachable w.r.t. \(\epsilon \) and \(m_{\text {pts}}\) if \(\mathbf {x}_{i} \in N_{\epsilon } (\mathbf {x}_{j})\) and \(\mathbf {x}_{j} \in N_{\epsilon } (\mathbf {x}_{i})\).

Definition 3

(Density-connected) Two core objects \(\mathbf {x}_{i}\) and \(\mathbf {x}_{j}\) are density-connected w.r.t. \(\epsilon \) and \(m_{\text {pts}}\) if they are directly or transitively \(\epsilon \)-reachable.

Definition 4

(Cluster) A cluster \(\mathbf {C}\) w.r.t. \(\epsilon \) and \(m_{\text {pts}}\) is a non-empty maximal subset of \(\,\mathbf {X}\) such that every pair of objects in \(\mathbf {C}\) is density-connected.

Like in DBSCAN, two parameters define a density threshold given by a minimum number of objects, \(m_{\text {pts}}\), within a ball of radius \(\epsilon \) centered at an object \(\mathbf {x}\). Clusters are formed only by objects \(\mathbf {x}\) satisfying this minimum density threshold (core objects). Two such objects are in the same cluster if and only if they can reach one another directly or through a chain of objects in which every consecutive pair is within each other’s \(\epsilon \)-neighborhood.

DBSCAN* is not only simpler, but it is also statistically more rigorous than the original DBSCAN, as it strictly conforms with the classic principle of density-contour clusters as defined by Hartigan (1975). The original DBSCAN relaxes this principle by allowing some objects below the density threshold, called border objects, to be incorporated into clusters. Specifically, a border object in DBSCAN is a non-core object that lies within the \(\epsilon \)-neighborhood of a core object. For convenience, here we will formalize this notion by using the following definitions adapted from OPTICS (Ankerst et al. 1999):

Definition 5

(Core distance) The core distance of an object \(\mathbf {x}_{i} \in \mathbf {X}\) w.r.t. \(m_{\text {pts}}\), \(d_{\text {core}}(\mathbf {x}_{i})\), is the distance from \(\mathbf {x}_{i}\) to its \(m_{\text {pts}}\)-nearest neighbor (where the 1st-nearest neighbour is by convention the query object itself, \(\mathbf {x}_{i}\), the 2nd-nearest neighbour is thus the next object closest to \(\mathbf {x}_{i}\), and so on).

Definition 6

(Reachability distance) The (asymmetric) reachability distance from an initial object \(\mathbf {x}_{i}\) to an end object \(\mathbf {x}_{e}\) w.r.t. \(m_{\text {pts}}\), \(d_{\text {reach}}(\mathbf {x}_{i},\mathbf {x}_{e})\), is the largest of the core distance of \(\mathbf {x}_{i}\) and the distance between \(\mathbf {x}_{i}\) and \(\mathbf {x}_{e}\): \(d_{\text {reach}}(\mathbf {x}_{i},\mathbf {x}_{e}) = \max \{d_{\text {core}}(\mathbf {x}_{i}), d(\mathbf {x}_{i},\mathbf {x}_{e})\}\).

From Definition 5, it is clear that the core distance of an object \(\mathbf {x} \in \mathbf {X}\) is the minimum value of the radius \(\epsilon \) for which \(\mathbf {x}\) is a core object (i.e., its density is above the minimum density threshold). By definition, a border object \(\mathbf {x}_{e}\) in DBSCAN is not a core object, which means \(\epsilon < d_{\text {core}}(\mathbf {x}_{e})\). Also by definition, a border object \(\mathbf {x}_{e}\) falls within the \(\epsilon \)-neighborhood of a core object, say \(\mathbf {x}_{i}\), which means \(d(\mathbf {x}_{i},\mathbf {x}_{e}) \le \epsilon \) and, since \(\mathbf {x}_{i}\) is core, \(\epsilon \ge d_{\text {core}}(\mathbf {x}_{i})\). From these inequalities, it follows that \(d_{\text {core}}(\mathbf {x}_{e}) > \epsilon \ge \max \{d_{\text {core}}(\mathbf {x}_{i}), d(\mathbf {x}_{i},\mathbf {x}_{e})\}\), and using Definition 6 a border object can then be defined as:Footnote 1

Definition 7

(Border object) An object \(\mathbf {x}_{e} \in \mathbf {X}\) is called a border object w.r.t. \(\epsilon \) and \(m_{\text {pts}}\) if \(d_{\text {core}}(\mathbf {x}_{e}) > \epsilon \) and there exists another object \(\mathbf {x}_{i} \in \mathbf {X}\) such that \(\epsilon \ge d_{\text {reach}}(\mathbf {x}_{i},\mathbf {x}_{e})\).

The clusters in the original DBSCAN are the same as in DBSCAN*, augmented with their corresponding border objects; all the other objects are labeled as noise by both algorithms. From a graph perspective, it is straightforward to see that the clusters in DBSCAN* (Definition 4) are the connected components of an undirected graph where each core object is represented as a vertex and two vertices are adjacent if and only if the corresponding core objects fall within each other’s \(\epsilon \)-neighborhood (i.e., iff they are \(\epsilon \)-reachable—Definition 2). DBSCAN also includes border objects as vertices, each of which is adjacent to a core object. For a border object \(\mathbf {x}_{e}\), if there is more than one core object \(\mathbf {x}_{i}\) satisfying Definition 7, the original DBSCAN makes \(\mathbf {x}_{e}\) adjacent to one of those chosen randomly, but the choice can be made deterministically, e.g., the one that minimizes \(d_{\text {reach}}(\mathbf {x}_{i},\mathbf {x}_{e})\) (or \(d(\mathbf {x}_{i},\mathbf {x}_{e})\) in case of ties).

Starting from an arbitrary object in the dataset, OPTICS (Ankerst et al. 1999) derives an ordering (\(\prec \)) of the data objects that implicitly encodes all possible DBSCAN solutions for a given value of \(m_{\text {pts}}\). The algorithm does not require the radius \(\epsilon \) to produce such an ordering, which has the following property: given an object \(\mathbf {x}_{q}\), the smallest reachability distance to \(\mathbf {x}_{q}\) from any of its preceding objects is no greater than the smallest reachability distance from any of its preceding objects to an object succeeding \(\mathbf {x}_{q}\), i.e.,

This property is important for two reasons: (a) it ensures that by plotting \(\min _{\mathbf {x}_{p}:\, \mathbf {x}_{p} \prec \mathbf {x}_{q}} d_{\text {reach}}(\mathbf {x}_{p},\mathbf {x}_{q})\) for every object \(\mathbf {x}_{q}\) in the given order, the so-called OPTICS reachability plot, density-based clusters and sub-clusters appear as valleys or “dents” in the plot; and (b) if one wants to set a threshold \(\epsilon \), as a horizontal line cutting through the plot, it is straightforward to show that DBSCAN clusters with radius \(\epsilon \) correspond essentially to the contiguous subsequences of the ordered points for which the plot is below the threshold.

The OPTICS ordering and reachability plot can be easily achieved by keeping an adaptable priority queue sorted by the smallest reachability distance from an object outside the queue (already processed) to each object inside the queue. At each iteration, the object with the smallest such distance (priority key) is removed from the queue (processed), the reachability distances from that object to the objects inside the queue are computed, and the queue is readjusted accordingly. From a graph perspective, this is algorithmically analogous to Prim’s algorithm to compute a Minimum Spanning Tree (MST), the only difference being that OPTICS operates on a directed graph where each pair of vertices (\(\mathbf {x}_{p},\mathbf {x}_{q}\)) is connected by a pair of unique edges, one in each direction, whose weights are the corresponding reachability distances, i.e. \(d_{\text {reach}}(\mathbf {x}_{p},\mathbf {x}_{q})\) and \(d_{\text {reach}}(\mathbf {x}_{q},\mathbf {x}_{p})\). The optional threshold \(\epsilon \) to extract DBSCAN clusters corresponds to pruning out from such a complete digraph any edge whose weight is larger than \(\epsilon \). The strongly connected components of the resulting digraph (subsets of vertices mutually reachable via directed paths) correspond to the DBSCAN* clusters with radius \(\epsilon \), whereas the DBSCAN clusters additionally include vertices that are not part of any of the strongly connected components, but are reachable from those (i.e., the border objects).

3.2 SSDBSCAN

3.2.1 Conceptual approach

SSDBSCAN (Lelis and Sander 2009) is a semi-supervised algorithm that performs semi-supervised clustering of an unlabeled dataset \(\mathbf {X}_{U} \subset \mathbf {X}\) from a small fraction of labeled data \(\mathbf {X}_{L} \subset \mathbf {X}\) using a label expansion engine that is very similar to OPTICS. Unlike OPTICS, however, SSDBSCAN circumvents the unnecessary complications related to border objects and the asymmetric nature of the original reachability distance in Definition 6 by using a symmetric version of it, which has been formally defined as mutual reachability distance by Campello et al. (2013a, 2015):

Definition 8

(Mutual reachability distance) The mutual reachability distance between two objects \(\mathbf {x}_{i}\) and \(\mathbf {x}_{j}\) in \(\mathbf {X}\) w.r.t. \(m_{\text {pts}}\) is defined as \(d_{\text {mreach}}(\mathbf {x}_{i}, \mathbf {x}_{j}) = \max \{d_{\text {core}}(\mathbf {x}_{i}), d_{\text {core}}(\mathbf {x}_{j}), d(\mathbf {x}_{i},\mathbf {x}_{j})\}\).

The interpretation of this definition plays a fundamental role not only in SSDBSCAN but also more broadly here in our work: the mutual reachability distance is the smallest value of the radius\(\epsilon \) for which the corresponding pair of objects are still core objects and are \(\epsilon \)-reachable from each other (Definition 2). For a given \(m_{\text {pts}}\), \(\epsilon \) establishes a density threshold that is inversely proportional to this radius, and the mutual reachability distance is henceinversely proportional to the largest density threshold for which the corresponding pair of objects is directly density-connected according to Definition 3.

Conceptually, SSDBSCAN attempts to solve the following problem: for each unlabeled object, \(\mathbf {x}_{i} \in \mathbf {X}_{U}\), the goal is to assign \(\mathbf {x}_{i}\) the same label, \({{\,\mathrm{class}\,}}(\mathbf {x}_{j})\), as the object \(\mathbf {x}_{j} \in \mathbf {X}_{L}\) that is the “closest” to \(\mathbf {x}_{i}\) from a density-connectivity perspective, if such a labeling is possible, without violating the label consistency assumption (Assumption 5). This assumption requires that objects with different labels have to reside in disjunct density-based clusters following Definition 4 (w.r.t. the same \(m_{\text {pts}}\), but possibly different \(\epsilon \) values).

Let us provisionally put the label consistency assumption aside and first focus on the primary goal, namely, what “closest to\(\mathbf {x}_{i}\)from a density-connectivity perspective” means. In density-based clustering, this refers to the object that can reach out (or be reached from) \(\mathbf {x}_{i}\) through a path along which the lowest density connection is as high as possible (in other words, the weakest point in the connection is as strong as possible). Notice that, in light of Definition 8, this is equivalent to the path along which the largestmutual reachability distance is as small as possible. From a graph perspective, if objects are vertices and any two vertices are connected by an undirected edge weighted by their mutual reachability distance, the goal is to find the path between the unlabeled vertex \(\mathbf {x}_{i}\) in question and a labeled vertex \(\mathbf {x}_{j}\) along which the largest edge is minimal. Given \(\mathbf {x}_{i}\) and any labeled candidate \(\mathbf {x}_{j}\), this corresponds to the classic minmax problem in graph theory, whose solution can be proven to be the path between \(\mathbf {x}_{i}\) and \(\mathbf {x}_{j}\) along the minimum spanning tree (MST) of the graph. Hence, a preliminary approach to label the set of unlabeled objects \(\mathbf {X}_{U} \subset \mathbf {X}\) could be the following:

Definition 9

(Label propagation) Compute the MST of the dataset \(\mathbf {X}\) in the transformed space of mutual reachability distances, \(\text {MST}_r\), find the largest \(\text {MST}_r\) edge connecting each object \(\mathbf {x}_{i} \in \mathbf {X}_{U}\) to every object \(\mathbf {x}_{j} \in \mathbf {X}_{L}\), and make \({{\,\mathrm{class}\,}}(\mathbf {x}_{i}) = {{\,\mathrm{class}\,}}(\mathbf {x}_{j})\) where \(\mathbf {x}_{j}\) is the labeled object for which such a maximum edge is minimal.

From the density-based clustering perspective, however, there is a problem with this approach. Let us consider two labeled objects, \(\mathbf {X}_{L} = \{\mathbf {x}_{j1}, \mathbf {x}_{j2}\}\), such that \({{\,\mathrm{class}\,}}(\mathbf {x}_{j1}) \ne {{\,\mathrm{class}\,}}(\mathbf {x}_{j2})\) and \(\mathbf {x}_{j2}\) is on the \(\text {MST}_r\) path between \(\mathbf {x}_{j1}\) and an unlabeled object \(\mathbf {x}_{i} \in \mathbf {X}_{U}\). Now consider two possible scenarios:

-

In the first scenario, the largest \(\text {MST}_r\) edge on the path between \(\mathbf {x}_{j1}\) and \(\mathbf {x}_{i}\), say 20, is located on the sub-path between \(\mathbf {x}_{j1}\) and the intermediate object, \(\mathbf {x}_{j2}\). The largest edge on the path between \(\mathbf {x}_{j2}\) and \(\mathbf {x}_{i}\) is then smaller than 20, say 10 (Fig. 1-I). In this case, it is safe to make \({{\,\mathrm{class}\,}}(\mathbf {x}_{i}) = {{\,\mathrm{class}\,}}(\mathbf {x}_{j2})\) without violating the label consistency assumption because any threshold \(\epsilon \in [10,20)\) can make \(\mathbf {x}_{i}\) density-connected to \(\mathbf {x}_{j2}\) (and, therefore, part of the same density-based cluster according to Definition 4) but not to \(\mathbf {x}_{j1}\).

-

In the second scenario, the largest \(\text {MST}_r\) edge on the path between \(\mathbf {x}_{j1}\) and \(\mathbf {x}_{i}\), say 20, is located on the sub-path between \(\mathbf {x}_{j2}\) and \(\mathbf {x}_{i}\), whereas the largest edge on the path between \(\mathbf {x}_{j1}\) and \(\mathbf {x}_{j2}\) is, say, 10 again (Fig. 1-II). In this case, the largest edge on the path between \(\mathbf {x}_{i}\) and the two labeled objects in question is the same, yet those two labeled objects have different labels. There is no threshold that can keep \(\mathbf {x}_{i}\) density-connected to either \(\mathbf {x}_{j1}\) or \(\mathbf {x}_{j2}\) but not to both. The only way to split \(\mathbf {x}_{j1}\) apart from \(\mathbf {x}_{j2}\) is by a threshold \(\epsilon < 10\), but this also splits both \(\mathbf {x}_{j1}\) and \(\mathbf {x}_{j2}\) from \(\mathbf {x}_{i}\). SSDBSCAN handles this label inconsistency scenario by not labeling \(\mathbf {x}_{i}\) at all, leaving it unclustered as noise.

3.2.2 Algorithmic approach

Algorithmically, SSDBSCAN does not pre-compute the \(\text {MST}_r\). Instead, SSDBSCAN runs an OPTICS search starting from each labeled object and assigning the corresponding label temporarily to the unlabeled objects as they are found and processed by the OPTICS ordering traversal. However, this procedure, called label expansion, uses the mutual reachability distance in Definition 8, rather than the original, asymmetric version of OPTICS in Definition 6. The use of a symmetric distance makes OPTICS algorithmically identical to Prim’s algorithm to compute MSTs. From this perspective, SSDBSCAN dynamically builds an MST in the space of mutual reachability distances, starting from each object \(\mathbf {x}_{j1} \in \mathbf {X}_{L}\) and provisionally assigning its label \({{\,\mathrm{class}\,}}(\mathbf {x}_{j1})\) to the traversed unlabeled objects until an object \(\mathbf {x}_{j2} \in \mathbf {X}_{L}\) with a different label, \({{\,\mathrm{class}\,}}(\mathbf {x}_{j2}) \ne {{\,\mathrm{class}\,}}(\mathbf {x}_{j1})\), is found. When such an object is found, SSDBSCAN backtracks and only confirms the labels assigned to objects before the largest edge on the path from \(\mathbf {x}_{j1}\) to \(\mathbf {x}_{j2}\), as these objects are “closer” to \(\mathbf {x}_{j1}\) than to \(\mathbf {x}_{j2}\) from a density-connectivity perspective, and they can be separated from those objects beyond such a largest edge (including \(\mathbf {x}_{j2}\)) by any threshold \(\epsilon \) smaller than this edge’s weight.

SSDBSCAN stops when the label expansion procedure has been run from each \(\mathbf {x}_{j} \in \mathbf {X}_{L}\). Objects not labeled by any of the OPTICS initializations are left unclustered as noise. These objects are those for which no density threshold exists that can make them part of any cluster without incurring label inconsistency.

3.2.3 Shortcomings

SSDBSCAN has a number of shortcomings that will be addressed later in this work:

-

1.

Order-dependency: If the \(\text {MST}_r\) is not unique, i.e., when there are different yet equally optimal (minimum) spanning trees in the transformed space of mutual reachability distances, some objects may end up with different labels depending on the order of the various OPTICS traversals. The reason is that, for different traversals, the implicit minimum spanning trees that are partially and dynamically built from different starting vertices (labeled objects) may be different, and these differences can be shown to possibly cause the algorithm to be order-dependent. Order-dependency may also occur when there is more than one largest edge (i.e., a tie) on the sub-path of the \(\text {MST}_r\) between two labeled objects that have different labels;

-

2.

Re-computations: rather than pre-computing the \(\text {MST}_r\), SSDBSCAN implicitly and partially builds it from different starting vertices. Clearly, many portions of the \(\text {MST}_r\) are likely to be recomputed multiple times, which is unnecessarily inefficient from a computational point of view;

-

3.

Noise and missing clusters: from a clustering perspective, the fact that some objects are left unclustered as noise is expected, especially from a density-based perspective. In SSDBSCAN, however, entire clusters may be left unclustered as noise, typically when they do not contain any labeled object and are well-separated from other clusters, closer to each other, containing objects with different labels, such that the missed clusters cannot be reached at a density level without incurring violations of the label consistency assumption (Assumption 5). This is why SSDBSCAN assumes that there is “at least one (possibly more) labeled object from each class” (Assumption 4), which is, however, a classification rather than a clustering assumption. From a classification perspective, even when no cluster is missed, leaving a fraction of objects unlabeled as noise (typically global or local outliers) may be undesired. This particular issue is not present in a related algorithm, HISSCLU, which we discuss next.

3.3 HISSCLU

HISSCLU (Böhm and Plant 2008) can be seen as a semi-supervised version of OPTICS that produces, instead of the original, purely unsupervised reachability plot, a colored version of the plot where every unlabeled object of the dataset is assigned a class label (i.e., a color) from the labeled set, \(\mathbf {X}_{L} \subset \mathbf {X}\). The colored plot provides a visual contrast between the clustering structure, revealed as valleys and peaks following an OPTICS ordering (\(\prec \)), and the transductive classification, mapped as colors in the plot. The main algorithm consists of two stages, a preprocessing stage and a label expansion stage, as described next.

3.3.1 Preprocessing stage (label-based distance weighting)

Before any label expansion takes place, HISSCLU pre-computes a weight for each pairwise distance \(d(\mathbf {x}_{i},\mathbf {x}_{j})\) in the dataset. The resulting, weighted distances are used in lieu of the original (e.g., Euclidean) distances in the subsequent steps of the algorithm, namely, reachability distance computations and OPTICS-based label propagation. Such a preprocessing stage is designed to widen the gap between nearby classes by stretching distances between objects around class boundaries, thus allowing the algorithm to expand different class labels even in situations where no natural boundaries of low density between different classes exist. The mathematical and algorithmic details are omitted here for the sake of compactness, but the basic intuition is the following: a pair of objects \((\mathbf {x}_{p},\mathbf {x}_{q}) \in \mathbf {X}_{L}\times \mathbf {X}_{L}\) for which \({{\,\mathrm{class}\,}}(\mathbf {x}_{p}) \ne {{\,\mathrm{class}\,}}(\mathbf {x}_{q})\) establishes a separating hyperplane that perpendicularly crosses the midpoint on the line segment between these two objects (as points in an Euclidean space). A pair of objects \((\mathbf {x}_{i},\mathbf {x}_{j})\) on different sides of this hyperplane in the vicinity of \(\mathbf {x}_{p}\) and \(\mathbf {x}_{q}\) will have their distance stretched by a multiplicative weight \(\ge 1\). The closer to the hyperplane, the greater the weight (stretching). The maximum weight is a user-defined parameter, \(\rho \ge 1\) (\(\rho = 1\) means no weighting). The weighting decays towards the minimum value 1 with an increasing distance of the objects from the separating hyperplane. Objects “behind” \(\mathbf {x}_{p}\) and \(\mathbf {x}_{q}\) in relation to the hyperplane are not affected (unitary weight). The shape and rate of decay is controlled by a second parameter, \(\xi > 0\) (\(\xi = 1\) gives a parabolic decrease, \(\xi > 1\) gives a faster, bell-shaped decrease corresponding to a sharper “influence region” around the separating hyperplane, whereas \(0< \xi < 1\) gives a more square-shaped decrease, i.e., a wider influence region around the separating hyperplane). This mechanism allows HISSCLU to expand different class labels even within clusters that are formed by more than one class, i.e., clusters of objects that are density connected but not pure in their labels.

3.3.2 Label expansion stage

Conceptually, the transductive classification performed by HISSCLU is essentially the label propagation procedure described in Definition 9, which also serves as a basis for SSDBSCAN as previously discussed in Sect. 3.2. However, unlike SSDBSCAN, HISSCLU does not make the label consistency assumption (Assumption 5), thus being able to assign a label to every unlabeled object in \(\mathbf {X}_{U} \subset \mathbf {X}\). In other words, no object is left unlabeled as noise.

But if noise is not an option, how does HISSCLU handle possible label inconsistencies, which can be caused by ties w.r.t. the maximum \(\text {MST}_r\) edge (referred to in the definition)? For instance, let us consider the illustrative example in Fig. 2, where we have 5 data objects and the corresponding \(\text {MST}_r\). The label propagation procedure described in Definition 9 would arguably assign \({{\,\mathrm{class}\,}}(\mathbf {x}_{2}) = {{\,\mathrm{class}\,}}(\mathbf {x}_{1})\) (green square) and \({{\,\mathrm{class}\,}}(\mathbf {x}_{4}) = {{\,\mathrm{class}\,}}(\mathbf {x}_{5})\) (red star). The middle point (\(\mathbf {x}_{3}\)), however, is undefined, as there is a tie in the largest edge (10) from this point to objects with different labels. In SSDBSCAN, this point should be left unlabeled (noise), because there is no density threshold that can keep it density-connected to either \(\mathbf {x}_{1}\) or \(\mathbf {x}_{2}\) but not to both.Footnote 2 Differently, HISSCLU uses instead the 2nd largest edge to resolve the tie (then the 3rd if there is another tie, and so on). In our example, the 2nd largest edge is 9 (green square) versus 5 (red star), thus \({{\,\mathrm{class}\,}}(\mathbf {x}_{3}) = {{\,\mathrm{class}\,}}(\mathbf {x}_{5})\) (red star).

Algorithmically, HISSCLU operates similarly to SSDBSCAN in the sense that it also runs an OPTICS search starting from each labeled object in \(\mathbf {X}_{L}\) and assigning labels temporarily to unlabeled objects as they are found by the OPTICS ordering traversal. Unlike SSDBSCAN, however, the traversal does not stop and backtracks once an object with a different label is found. More importantly, the OPTICS traversal in HISSCLU occurssimultaneously from all labeled objects. To that end, the OPTICS priority queue is initialized with every unlabeled object having its smallest reachability distance from a labeled object, say \(\mathbf {x}_{j} \in \mathbf {X}_{L}\), as its priority key, and \({{\,\mathrm{class}\,}}(\mathbf {x}_{j})\) as its temporary label. This corresponds to initializing OPTICS from all objects in \(\mathbf {X}_{L}\) at the same time, rather than from a single object. Each object \(\mathbf {x}_{o}\) removed from the queue is processed and its temporary label becomes permanent. The reachability distance from \(\mathbf {x}_{o}\) to every object \(\mathbf {x}_{i}\) still unprocessed inside the queue is computed, and whenever \(\mathbf {x}_{i}\) is reached by a processed object \(\mathbf {x}_{o}\) with reachability distance smaller than \(\mathbf {x}_{i}\)’s current priority key, \(\mathbf {x}_{i}\)’s key is updated, its temporary label is set to \({{\,\mathrm{class}\,}}(\mathbf {x}_{o})\), and the priority queue is rearranged accordingly. This procedure has been shown (Böhm and Plant 2008) to ensure that the final labels respect the desired “min-max” reachability notion, resolving ties in the maximum edge as described above. However, HISSCLU is still subject to issues previously discussed in the context of SSDBSCAN (Sect. 3.2.3), which relate to the fact that (possibly different) minimum spanning trees are built partially and dynamically, rather than pre-computed.

3.3.3 Flat clustering extraction (k-clustering)

Like OPTICS, the reachability plot that results from HISSCLU encodes only visually and implicitly a density-based clustering hierarchy. The colors in the plot, in turn, represent a transductive classification of the data from the collection of pre-labeled objects, rather than a clustering result. For scenarios where an explicit clustering solution is desired, Böhm and Plant (2008) offer an optional, post-processing flat clustering extraction stage of HISSCLU, called k-clustering, which essentially applies an arbitrary global density threshold to perform a conventional horizontal cut through the reachability plot, analogous to extracting DBSCAN solutions from OPTICS.

3.4 HDBSCAN*

HDBSCAN* (Campello et al. 2013a) is a hierarchical algorithm for unsupervised density-based clustering, which has also been extended to perform hierarchy simplification and visualization, optimal non-hierarchical clustering, and outlier detection (Campello et al. 2015). In the following we describe the core algorithm and extensions that are relevant in our context.

3.4.1 Basic algorithm

Following Hartigan’s principles of density-contour clusters and trees (Hartigan 1975), the core HDBSCAN* algorithm provides as a result a complete hierarchy composed of all possible DBSCAN* clustering solutions (as defined in Sect. 3.1) for a given value of \(m_{\text {pts}}\) and an infinite range of density thresholds, \(\epsilon \in [0,\infty )\), in a nested (i.e., dendrogram-like) way. Key to achieving this is the following transformed proximity graph (conceptual only, it does not need to be materialized) (Campello et al. 2013a, 2015):

Definition 10

(Mutual reachability graph) The mutual reachability graph is a complete graph, \(G_{m_{\text {pts}}}\), in which the objects of \({\mathbf X}\) are vertices and the weight of each edge is the mutual reachability distance (w.r.t. \(m_{\text {pts}}\)) between the respective pair of objects.

Let \(G_{m_{\text {pts}},\epsilon } \subseteq G_{m_{\text {pts}}}\) be the graph obtained by removing all edges from \(G_{m_{\text {pts}}}\) having weights greater than some value of \(\epsilon \). From our previous discussions it is clear that clusters according to DBSCAN* w.r.t. \(m_{\text {pts}}\) and \(\epsilon \) are the connected components of core objects in \(G_{m_{\text {pts}},\epsilon }\), whereas the remaining objects are noise. This observation allows to produce all DBSCAN* clusterings for any \(\epsilon \in [0,\infty )\) in a nested, hierarchical way by removing edges in decreasing order of weight from \(G_{m_{\text {pts}}}\).

Notice that this is essentially the graph-based definition of the hierarchical Single-Linkage algorithm (Jain and Dubes 1988), and therefore there is a conceptual relationship between the algorithms DBSCAN* and Single-Linkage in the transformed space of mutual reachability distances: the clustering obtained by DBSCAN* w.r.t. \(m_{\text {pts}}\) and some value \(\epsilon \) is identical to the one obtained by first running Single-Linkage on the transformed space of mutual reachability distances (w.r.t. \(m_{\text {pts}}\)), then, cutting the resulting dendrogram at level \(\epsilon \) of its scale, and treating all resulting singletons with \(d_{\text {core}} > \epsilon \) as noise. This suggests that we could implement a hierarchical version of DBSCAN* by applying an algorithm that computes a Single-Linkage hierarchy on the transformed space of mutual reachability distances.

One of the fastest ways to compute a Single-Linkage hierarchy is by using a divisive algorithm that works by removing edges from a minimum spanning tree in decreasing order of weights (Jain and Dubes 1988), here, corresponding to mutual reachability distances, i.e., edges from the \(\text {MST}_r\). HDBSCAN* augments the ordinary \(\text {MST}_r\) with self-loops whose weights correspond to the core distance of the respective object (vertex), to directly represent the level in the hierarchy below which an isolated object is a noise object (\(d_{\text {core}}> \epsilon \)), and above which it may be part of a cluster or a cluster on its own, i.e., a dense singleton. In short, the core HDBSCAN* is as follows: the \(\text {MST}_r\) is computed using some computationally efficient method (e.g. Prim’s), augmented with self-edges, then edges are removed in decreasing order and the resulting connected components are labeled as clusters. In case of ties, edges are removed simultaneously.

Notice that, unlike SSDBSCAN, which also makes use of the \(\text {MST}_r\), HDBSCAN* provides a hierarchical rather than a flat clustering solution, and unlike OPTICS and HISSCLU, whose reachability plots only implicitly encode DBSCAN clustering solutions for a given value of \(m_{\text {pts}}\) and \(\epsilon \in [0,\infty )\), the corresponding hierarchical relations in HDBSCAN* are explicit and readily available.

3.4.2 HDBSCAN* with all-points core distance

Campello et al. (2013a, 2015) showed that the parameter \(m_{\text {pts}}\), which is commonly shared by all density-based algorithms previously discussed, corresponds to a classic smoothing factor of a nonparametric, nearest neighbors density estimate. This parameter is not critical and can be useful to provide the user with fine-tuning control of the results, e.g., by visual inspection of the clustering hierarchies or of the reachability plots. It can be removed though, if desired, basically by replacing the core and mutual reachability distances in Definitions 5 and 8 with a parameterless alternative. In particular, a parameterless version of HDBSCAN* was proposed (Moulavi 2014) that is based on a new core distance of an object, which does not depend on its \(m_{\text {pts}}\)-neighborhood, but rather considers the dataset in a way that closer objects contribute more to the density than farther objects do:

Definition 11

(All-points core distance) The all-points core-distance of a d-dimensional point \(\mathbf {x}\) of a dataset \(\mathbf {X}\) with respect to all other \(n-1\) points in \(\mathbf {X}\), i.e., \(\mathbf {X} \backslash \{\mathbf {x}\}\), is defined as (Moulavi 2014):

Let us note that the all-points core distance is only meaningful for datasets as points in a d-dimensional real vector space, as opposed to the original HDBSCAN* (as well as DBSCAN, OPTICS, and SSDBSCAN) that can operate with any dataset for which some type of pairwise distance between objects can be defined. HISSCLU shares the same limitation when its preprocessing stage is required.

A summary table with the properties and assumptions of all the density-based algorithms studied in this paper, including our new algorithms for semi-supervised classification and clustering (to be introduced in Sects. 4 and 5, respectively), is provided in “Appendix”.

4 Unified framework for density-based classification

In the previous section, we elaborated how previous clustering and semi-supervised clustering algorithms in the density-based clustering paradigm can all conceptually be viewed as processing minimum spanning trees in a space of reachability distances, i.e., processing MSTs of a conceptual, complete graph where the nodes are the objects, and the edge weights are the reachability distances between objects. These reachability distances can be based on unmodified or modified (e.g., weighted, streched) distances between objects.

In this section, we present a new framework for semi-supervised classification by extending the HDBSCAN* clustering framework with additional, optional steps, derived from “decoupled” building blocks of the algorithms discussed in Sect. 3, so that these building blocks can be re-combined and applied in different ways. This will allow us to study the performance gain of each building block and specify different instances for semi-supervised classification—one can be considered a close approximation of HISSCLU, and another one is a looser, but faster approximation, others are novel variants that have not been investigated before.

4.1 The components of the framework (building blocks)

The building blocks for semi-supervised classification in our framework are the following:

-

1.

The adopted definition of core and reachability distances: In our framework, we will study both the standard definition of core-distance with the parameter \(m_{\text {pts}}\), which has been adopted by all the algorithms discussed in Sect. 3 (Definition 5), as well as the parameter-free all-points core distance (Definition 11). Given a notion of core distance, we will only use the symmetric notion of mutual reachability distance (Definition 8) as used by SSDBSCAN, DBSCAN*, and HDBSCAN*, even though the “older” algorithms HISSCLU, DBSCAN, and OPTICS are based on the asymmetric notion in Definition 6. The reason is that the mutual reachability distance has a statistically more sound interpretation, is simpler and, in practice, the difference in results tends not to be very noticeable.

-

2.

MST computation in the space of mutual reachability distances: Recall from Sect. 3 that SSDBSCAN and HISSCLU compute multiple MSTs “on-the-fly”, starting from labeled objects. Such an approach is inefficient and, from a conceptual point of view, not necessary. It is obviously not necessary when the MST of the conceptual, complete graph in the transformed space of mutual reachability distances is unique; then the same MST will just be re-computed multiple times. But even when the MST is not unique, the different MSTs are in a sense equivalent from the perspective of representing the inherent cluster structure of a data set: none of them should lead to fundamentally different conclusions about the density distribution of the dataset. Therefore, in our framework we explicitly decouple the MST construction from the label expansion (as we have already done conceptually in the discussion of the algorithms SSDBCAN and HISSCLU in the previous section), and we will use HDSBCAN*’s efficient algorithm to compute the “extended” \(\text {MST}_r\) as described in Sect. 3.4.

-

3.

Label expansion: Given a computed graph \(\text {MST}_r\), in our framework for semi-supervised classification we implement a label expansion method on top of the \(\text {MST}_r\), similar to HISSCLU (see Sect. 3.3.2), but using the single \(\text {MST}_r\) computed by HDBSCAN* to propagate the labels based on the path with the smallest largest edge to a labeled object, resolving ties, possibly consecutively, by considering the smaller of the next largest edge on the paths. This can be implemented by starting with a “connected component” \(C_i\) for each pre-labeled object \(\mathbf {x}_i \in \mathbf {X}_L\) that initially contains only \(\mathbf {x}_i\). These connected components \(C_i\) are then iteratively extended by traversing the \(\text {MST}_r\) in a way that ensures correctness of the final result (Gertrudes et al. 2018). A detailed description of the algorithm alongside with a pseudo-code is provided in “Appendix”.

Example: Figure 3 illustrates our label expansion with an example. Figure 3a displays 15 objects and their minimum spanning tree in the mutual reachability distance space (\(\text {MST}_r\)). To perform the label expansion, we initialize connected components with the pre-labeled objects, \(C_1 = \{\mathbf {x}_{1}\}\), \(C_2 = \{\mathbf {x}_{5}\}\), \(C_3 = \{\mathbf {x}_{9}\}\), and \(C_4 = \{\mathbf {x}_{13}\}\).

-

(a)

From all the components, the first edge to be analyzed is the one connecting \(\mathbf {x}_{5}\) to \(\mathbf {x}_{6}\) since it is the one with the lowest weight, 1.0, among all of the currently “outgoing” edges of the current components. Since \(\mathbf {x}_{6}\) is not labeled yet, it will receive the label of \(C_{2}\) (i.e., \({{\,\mathrm{class}\,}}(\mathbf {x}_{5})\)), \(\mathbf {x}_{6}\) is added to \(C_{2}\), and since there is no other edge incident to \(\mathbf {x}_{6}\), no new outgoing edges are added.

-

(b)

Then, the edges with the next largest edge weight, 1.5, connecting to \(C_1, \ldots , C_4\), which are the edges connecting \(\mathbf {x}_{1}\) to \(\mathbf {x}_{3}\) and connecting \(\mathbf {x}_{13}\) to \(\mathbf {x}_{14}\), are selected. The two edges connect to a different, not-yet-labeled object and thus \(\mathbf {x}_{3}\) is labeled with \({{\,\mathrm{class}\,}}(\mathbf {x}_{1})\) and added to \(C_1\), while \(\mathbf {x}_{14}\) is labeled with \({{\,\mathrm{class}\,}}(\mathbf {x}_{13})\) and added to \(C_4\).

-

(c)

Two new unprocessed edges are now incident to \(C_1\), connecting \(\mathbf {x}_{2}\) and \(\mathbf {x}_{4}\) to \(\mathbf {x}_{3}\), with edge weights of 4.0 and 1.0, respectively; three new unprocessed edges are now incident to \(C_4\), connecting \(\mathbf {x}_{11}\), \(\mathbf {x}_{12}\), and \(\mathbf {x}_{15}\) to \(\mathbf {x}_{14}\), with edge weights of 2.5, 4.0, and 2.0, respectively. The smallest edge weight of all the outgoing edges of the current connected components is now 1.0 on the edge connecting \(\mathbf {x}_{4}\) to \(\mathbf {x}_{3}\). Since \(\mathbf {x}_{4}\) is unlabeled, it is labeled with \({{\,\mathrm{class}\,}}(\mathbf {x}_{1})\) and added to \(C_1\), and the edge connecting \(\mathbf {x}_{4}\) to \(\mathbf {x}_{5}\) is added to the outgoing edges of \(C_1\).

-

(d)

The now smallest edge weight of outgoing edges is 2.0 on the edges connecting \(\mathbf {x}_{9}\) to \(\mathbf {x}_{8}\) and connecting \(\mathbf {x}_{14}\) to \(\mathbf {x}_{15}\). The two edges are incident to two different unlabeled objects. Hence \(\mathbf {x}_{8}\) is added to \(C_3\) (which currently had only \(\mathbf {x}_{9}\) in it) and receives its label, and \(\mathbf {x}_{15}\) is added to \(C_4\) (currently with \(\mathbf {x}_{13}\) and \(\mathbf {x}_{14}\)) and receives its label; no new outgoing edges are incident to \(C_4\) but three new outgoing edges are incident to \(C_3\): (\(\mathbf {x}_{8}\), \(\mathbf {x}_{7}\)), (\(\mathbf {x}_{8}\), \(\mathbf {x}_{5}\)), and (\(\mathbf {x}_{8}\), \(\mathbf {x}_{10}\)), with corresponding edge weights 1.0, 3.0, and 4.0.

-

(e)

Next, the smallest edge weight of outgoing edges is now 1.0 on the edge (\(\mathbf {x}_{8}\), \(\mathbf {x}_{7}\)); \(\mathbf {x}_{7}\) is added to \(C_3\) and receives its label, no new outgoing edges are incident to \(\mathbf {x}_{7}\). Similarly, \(\mathbf {x}_{11}\) is added to \(C_4\) since its edge weight of 2.5 is the next smallest.

-

(f)

After adding \(\mathbf {x}_{11}\) to \(C_4\), \(C_4\) has a new outgoing edge, connecting \(\mathbf {x}_{11}\) to \(\mathbf {x}_{10}\), with edge weight 4.0. Next, the smallest edge weight of outgoing edges is now 3.0 on the edge between \(\mathbf {x}_{5}\) and \(\mathbf {x}_{8}\), outgoing from \(C_2\) as well as \(C_3\). This edge connects two components with the same label. Hence \(C_2\) and \(C_3\) are merged into and replaced by \(C_{2\_3}\).

-

(g)

In the next step the smallest edge weight of outgoing edges is 4.0 on the edges (\(\mathbf {x}_{3}\), \(\mathbf {x}_{2}\)), outgoing from \(C_1\), (\(\mathbf {x}_{8}\), \(\mathbf {x}_{10}\)), outgoing from \(C_{2\_3}\), (\(\mathbf {x}_{11}\), \(\mathbf {x}_{10}\)) outgoing from \(C_4\), and (\(\mathbf {x}_{14}\), \(\mathbf {x}_{12}\)), outgoing from \(C_4\). Similar to previous cases in which an unlabeled object is connected to just one of the current components, \(\mathbf {x}_{2}\) is labeled and added to \(C_1\), and \(\mathbf {x}_{12}\) is labeled and added to \(C_4\).

-

(h)

The unlabeled object \(\mathbf {x}_{10}\) is connected to two components with different class labels. The smallest largest edge weight on paths to pre-labeled objects in both \(C_{2\_3}\) and \(C_{4}\) is 4.0, so the next (2nd) largest edge on all paths to pre-labeled objects is considered, which are 2.0 on the path from \(\mathbf {x}_{9}\) in \(C_{2\_3}\) to \(\mathbf {x}_{10}\), 3.0 on the path from \(\mathbf {x}_{5}\) in \(C_{2\_3}\) to \(\mathbf {x}_{10}\), and 2.5 on the path from \(\mathbf {x}_{13}\) in \(C_{4}\) to \(\mathbf {x}_{10}\). The smallest of these is 2.0 from \(\mathbf {x}_{9}\), hence \(\mathbf {x}_{10}\) is added to \(C_{2\_3}\) and obtains its label.

-

(i)

In the last step, the edge (\(\mathbf {x}_{4}\), \(\mathbf {x}_{5}\)) with edge weight 5.0 is ignored since it connects two components with different class labels.

Figure 3b shows the final result.

-

4.

Preprocessing: Label-based distance weighting: As an optional step, one may want to compute weighted distances based on the labeled subset of the data, as described in Sect. 3.3.1 for HISSCLU. We include such a step in our framework that applies label-based distance weighting on all the pairwise distances before computing core and reachability distances, as in HISSCLU. However, this step can be computationally time consuming. As an approximation, we also propose to apply distance weighting after the \(\text {MST}_r\) has been constructed, so that it only needs to be applied to the mutual reachability distances of the edges in the \(\text {MST}_r\).

4.2 The framework

The above building blocks can be combined in different ways to obtain different and novel semi-supervised classification methods as instances of our framework, through which we can study the contribution of each building block in an overall approach to semi-supervised classification. We denote different instances using the notation HDBSCAN*(core-distance-definition, label-based-distance-weighting-scheme), where core-distance-definition stands either for the standard core distance definition, abbreviated by “cd”, or the all-points core distance, abbreviated by “ap”; and label-based-distance-weighting-scheme stands for label-based distance weighting of all pairwise distances, abbreviated as “wPWD”, or label-based distance weighting of the \(\text {MST}_r\) edges, abbreviated as “wMST”; no weighting is denoted as “—”.

Figure 4 presents a schematic view of the instances of our framework for semi-supervised classification, which we will study in the experimental evaluation. Each branch in the diagram starts with a distance matrix as input and represents a different algorithm. In the leftmost branch, label-based distance weighting on the pairwise distances of the distance matrix is performed, standard core-distance is used, \(\text {MST}_r\) is computed, and label expansion is performed. This algorithm is denoted by HDBSCAN*(cd,wPWD), and it is similar to HISSCLU, but it uses the symmetric, mutual reachability distance rather than the old, asymmetric version, and relies only on a single pre-computed \(\text {MST}_r\). The third branch that uses standard core distance and performs label-based distance weighting only on the \(\text {MST}_r\) edges can be considered an even looser but much faster approximation of HISSCLU. The other branches show other methods, using alternatively the all-points core distance, and perform or omit completely the two options for label-based distance weighting.

4.3 Complexity

The asymptotic complexity of instances of our framework is as follows. Given the dataset \(\mathbf {X}\), computing the core orall-points-core distances and the construction of the \(\text {MST}_r\) has an overall time complexity of \(O(n^{2})\) (this part is the same as HDBSCAN*).

The label expansion takes, in the worst case, \(O(n \log n)\) time if the set of outgoing edges of the connected components is maintained in a heap, with edge weights as priority key.

-

In the algorithms that apply the label-based weighting function to the entire distance matrix—HDBSCAN*(cd,wPWD) and HDBSCAN*(ap,wPWD)—the additional runtime is of the order \(O(n^{2} + |\mathbf {X}_L|n)\), where \(|\mathbf {X}_L|\) is the number of pre-labeled objects: the first term is the number of distances that have to be weighted, whereas the second term corresponds to the pre-computation of the elements required to compute any weight in constant time (following the optimized approach of Böhm and Plant (2008)).

-

For the algorithms that apply the weighting function in the \(\text {MST}_r\) instead—HDBSCAN*(cd,wMST) and HDBSCAN*(ap,wMST)—the additional runtime is \(O(n + |\mathbf {X}_L|n) \rightarrow O(|\mathbf {X}_L|n)\) since the \(\text {MST}_r\) has only \(n-1\) edges to be weighted. Assuming \(|\mathbf {X}_L| \ll n\) as usual in semi-supervised classification, the additional runtime of this approach is O(n), in contrast to \(O(n^{2})\) of the original HISSCLU weighting. The former becomes even more attractive when it is necessary to compute the label expansion with different sets of labeled objects. In this case, it will be necessary only to adapt the edges of the \(\text {MST}_r\), instead of repeating the process of computing the core distance (or the all-points core distance), and to compute the \(\text {MST}_r\) in every different label configuration.

In total, the overall runtime complexity of the algorithms in the framework for semi-supervised classification is hence \(O(n^{2})\). If pairwise distances are computed on demand, it requiresO(n) memory only.

5 Density-based semi-supervised clustering

When performing semi-supervised classification, all classes are known in advance, pre-labeled objects from all of these classes are available, and all unlabeled objects are in principle supposed to be labeled by the algorithm. In contrast, when the task at hand is semi-supervised clustering, not all categories are necessarily known in advance, which means that labels may not be available for some (unknown) classes yet to be discovered, and part of the unlabeled objects may be left unclustered as noise. In this case, the framework proposed in Sect. 4 is no longer suitable.

HDBSCAN* offers an optional post-processing method of its clustering hierarchy, called FOSC, that can extract a flat clustering solution by performing local cuts through the hierarchy in order to select a collection of non-overlapping clusters (and, possibly, objects unclustered as noise) that is optimal according to a given unsupervised or semi-supervised criterion. FOSC is unique in that it can perform non-horizontal cuts through a hierarchy, which means that clusters can be extracted from different hierarchical levels. In HDBSCAN*, this means that solutions composed of clusters at various density levels can be obtained, which could not be obtained by a conventional, global horizontal cut at a single hierarchical level. In the following we revisit FOSC as this method plays a central role in our approach for density-based semi-supervised clustering.

5.1 FOSC

FOSC (Framework for Optimal Extraction of Clusters) was proposed by Campello et al. (2013b) as a general framework to perform optimal extraction of flat clustering solutions from clustering hierarchies. In order to understand how the method operates in HDBSCAN*, let us consider an example.

Figure 5 shows a toy dataset with 22 objects. The complete clustering hierarchy produced by HDBSCAN* with \(m_{\text {pts}} = 3\) is shown in Table 1,Footnote 3 where rows correspond to hierarchical levels (density thresholds for varied \(\epsilon \)), columns correspond to data objects, and entries contain cluster labels (“0” stands for noise). Notice that, unlike traditional dendrograms, clusters can shrink and yet retain the same label when individual objects (or spurious components with fewer than an optional, user-defined minimum cluster size, \(m_{\text {ClSize}}\)) are disconnected from them becoming noise, as the density threshold increases for decreasing values of \(\epsilon \) (top-down the hierarchy). Only when a cluster is divided into two non-spurious subsets of density-connected objects the resulting subsets are deemed new clusters. This way, the complete hierarchy in Table 1 can actually be represented as a simplified cluster tree where the root (\(\mathbf {C}_1\)) is the “cluster” containing the whole dataset, which subdivides into two child nodes corresponding to clusters \(\mathbf {C}_2\) and \(\mathbf {C}_3\), and these further subdivide into two sub-clusters each (\(\mathbf {C}_4\) and \(\mathbf {C}_5\) from \(\mathbf {C}_2\), \(\mathbf {C}_6\) and \(\mathbf {C}_7\) from \(\mathbf {C}_3\)).

Notice in Table 1 that objects belonging to a parent cluster do not necessarily belong to any of its children, as they may become noise before a cluster splits. For instance, objects \(\mathbf {x}_1\), \(\mathbf {x}_2\), \(\mathbf {x}_5\), and \(\mathbf {x}_{20}\) belong to \(\mathbf {C}_2\) but not to \(\mathbf {C}_4\) or \(\mathbf {C}_5\). Technically, each of these objects is assigned an individual node on its own in the cluster tree, in this example all as descendants from \(\mathbf {C}_2\).Footnote 4 As we will see later, these singleton nodes can only affect cluster extraction in the semi-supervised scenario, and only if the corresponding objects are pre-labeled.

Figure 6 illustrates the cluster tree corresponding to the clustering hierarchy in Table 1, along with all the information needed to run FOSC in various different unsupervised and semi-supervised settings. Singleton nodes corresponding to noise objects that are not pre-labeled are omitted for the sake of clarity, as they do not affect computations. Notice that the only singleton node displayed, as a dotted circle in Fig. 6b–e, corresponds to object \(\mathbf {x}_1\), which is assumed to be pre-labeled (represented as a red cross). Thus it affects computations in the semi-supervised scenarios.

HDBSCAN* cluster tree for the hierarchy in Table 1 and flat clustering extraction using FOSC: a unsupervised extraction using Stability;b–d Labeled-based semi-supervised extraction using \(B^3\) Precision, Recall, and F-Measure, respectively; e mixed case. The set of pre-labeled objects, \(\mathbf {X}_L\), is represented using colored symbols: \(\mathbf {x}_1\) and \(\mathbf {x}_6\) as red crosses, \(\mathbf {x}_8\) as a green hash, \(\mathbf {x}_{15}\) as a magenta triangle, and \(\mathbf {x}_{18}\) as a blue star. Singleton nodes corresponding to noise objects that are not pre-labeled are omitted as they do not affect computations. Extracted clusters in each case are highlighted in bold (Color figure online)

If clusters in the cluster tree can be properly assessed according to a suitable unsupervised or semi-supervised measure of cluster quality, an optimal flat solution in which objects are guaranteed not to belong to more than one cluster can be extracted by FOSC. Formally, let \(\{\mathbf {C}_{1}, \ldots , \mathbf {C}_{k}\}\) be the set of all candidate clusters in the cluster tree from which we want to extract a flat solution, \(\mathbf {P}\). Assume that there is an objective function \(J_T(\mathbf {P})\) that we want to maximize, such that \(J_T\) can quantitatively assess the quality of every valid candidate solution \(\mathbf {P}\). Functional \(J_T(\mathbf {P})\) must be decomposable according to two properties:Footnote 5

-

1.

Additivity:\(J_T(\mathbf {P})\) must be written as the sum of individual components \(J(\mathbf {C}_i)\), each of which is associated with a single cluster \(\mathbf {C}_i\) of \(\mathbf {P}\);

-

2.

Locality: Every component \(J(\mathbf {C}_i)\) must be computable locally to \(\mathbf {C}_i\), regardless of what the other clusters that compose the candidate solution \(\mathbf {P}\) are.

Due to the property of locality, the value \(J(\mathbf {C}_i)\) associated with every cluster in the cluster tree can be computed beforehand, i.e., prior to the decision on which clusters will compose the final solution to be extracted. These are the values illustrated below each node in Fig. 6.

Due to the property of additivity, the objective function can be written as \(J_T(\mathbf {P}) = \sum _{\mathbf {C}_i \in \mathbf {P}} J(\mathbf {C}_i)\), and the problem we want to solve is to choose a collection \(\mathbf {P}\) of clusters such that: (a) \(J_T(\mathbf {P})\) is maximized; and (b) \(\mathbf {P}\) is a valid flat solution, i.e., clusters and their sub-clusters are mutually exclusive (no data object belongs to more than one cluster). Mathematically, the optimization problem can be formulated as (Campello et al. 2013b):

where \(\delta _{i}~(i = 1, \cdots , k)\) is an indicator function that denotes whether cluster \(\mathbf {C}_i\) is selected to be part of the solution \((\delta _{i} = 1)\) or not \((\delta _{i} = 0)\), and \(I_h\) is the set of cluster indices on the path from any external node \(\mathbf {C}_h\) up to the root \(\mathbf {C}_1\). Note that the constraints ensure that a single cluster is selected in any branch from the root to a leaf.

FOSC solves this problem taking advantage of the fact that, due to the locality property of the cluster quality measure J, the partial selections made inside any subtree remain optimal in the context of larger trees containing that subtree. This allows for a very efficient, globally optimal dynamic programming method that traverses the cluster tree bottom-up starting from the leaves, comparing the quality of parent clusters against the aggregated quality of the respective subtrees, carrying the optimal choices upwards until the root is reached.

In Fig. 6a, notice that the sum of \(J(\mathbf {C}_4) = 3.23\) and \(J(\mathbf {C}_5)= 3.41\) (as well as the hidden singleton nodes descending from \(\mathbf {C}_2\), all of which have J value of zero) is equal to 6.64, which is smaller than \(J(\mathbf {C}_2) = 7.28\), hence \(\mathbf {C}_2\) is temporarily selected while its subtrees are discarded. Analogously, the aggregated value of \(J(\mathbf {C}_6)\) and \(J(\mathbf {C}_7)\) (1.4) is compared against \(J(\mathbf {C}_3) = 7.67\), which is larger, hence \(\mathbf {C}_3\) is temporarily selected whereas \(\mathbf {C}_6\) and \(\mathbf {C}_7\) are discarded. Now, the sum of \(J(\mathbf {C}_2)\) and \(J(\mathbf {C}_3)\) (14.95) is larger than \(J(\mathbf {C}_1) = 6.67\), hence \(\mathbf {C}_1\) is discarded while \(\mathbf {C}_2\) and \(\mathbf {C}_3\) are retained. Since the root has been reached, the final solution is \(\mathbf {P} = \{\mathbf {C}_2, \mathbf {C}_3\}\), with \(J_T(\mathbf {P}) = 14.95\).

While many clustering quality criteria from the literature satisfy the additive property, it is not easy to find criteria that satisfy the locality property required by FOSC. In the original publication (Campello et al. 2013b), a criterion was introduced that is based on the classic notion of cluster lifetime. The lifetime of a cluster in a clustering dendrogram is basically the length of the dendrogram scale along which the cluster exists (Jain and Dubes 1988). More prominent clusters persist longer across multiple hierarchical levels, so they have a longer lifetime.

This concept has been adapted by Campello et al. (2013b) to account for the fact that in certain hierarchies, including density-based hierarchies such as the one in Table 1, not all data objects stay in the cluster during its whole lifetime, because some objects become noise along the way. In other words, objects have different lifetimes as part of a cluster. The unsupervised measure of Stability of a cluster as proposed by Campello et al. (2013b) is the sum of the lifetimes of every object in that cluster, \(J(\mathbf {C}_i) = \sum _{\mathbf {x}_j \in \mathbf {C}_i} {{\,\mathrm{lifetime}\,}}(\mathbf {x}_j)\).

For example, in Table 1, cluster \(\mathbf {C}_{4}\) appears bottom-up at level 0.61 (formed by objects \(\mathbf {x}_{12}\), \(\mathbf {x}_{15}\), and \(\mathbf {x}_{16}\)) and disappears when it gets merged with cluster \(\mathbf {C}_{5}\), giving rise to \(\mathbf {C}_{2}\) at level 1.22. Along this interval, another three objects join this cluster, at levels 0.82 (\(\mathbf {x}_{14}\)), 0.98 (\(\mathbf {x}_{13}\)), and 1.00 (\(\mathbf {x}_{3}\)). Hence, its Stability is given by \(J(\mathbf {C}_{4}) = 3*(1.22 - 0.61) + (1.22 - 0.82) + (1.22 - 0.98) + (1.22 - 1.00) = 2.69\).

To perform flat cluster extraction in an unsupervised way, HDBSCAN* uses FOSC with the Stability criterion as described above, except that it replaces \(\epsilon \) with \(\frac{1}{\epsilon }\) in the scale (which is therefore flipped) for the computation of lifetime. This makes Stability more statistically sound in the density-based context as it becomes equivalent to the concept of relative excess of mass of a cluster (Campello et al. 2015).Footnote 6 In this case, the Stability of cluster \(\mathbf {C}_{4}\) in our example above would be computed as \(J(\mathbf {C}_{4}) = 3*(1/0.61 - 1/1.22) + (1/0.82 - 1/1.22) + (1/0.98 - 1/1.22) + (1/1.00 - 1/1.22) = 3.23\). These are precisely the values show below each cluster in Fig. 6a.

The Stability of any singleton node containing a noise object is defined as zero as the lifetime of noise is undefined. This way, singleton nodes with noise do not directly affect unsupervised cluster extraction. Indirectly though, larger amounts of noise in a final solution \(\mathbf {P}\) are indirectly penalized because noise objects do not add anything to the Overall Stability of that solution.