Abstract

Distributed and parallel algorithms have been studied intensively in recent years, particularly in applications such as machine learning. Nonetheless, only a small subclass of the optimization algorithms in the literature can be easily distributed, owing, for example, to the presence of coupling constraints that make all the variables depend on each other with respect to the feasible set. Augmented Lagrangian methods are among the most widely used techniques for handling coupling constraints: they move such constraints into the objective function in a structured, well-studied manner. Unfortunately, standard augmented Lagrangian methods are nested, in that a subproblem must be solved (at least inexactly) at each iteration, which can make the algorithm inefficient. To fill this gap, we propose an augmented Lagrangian method for convex problems with linear coupling constraints that can be distributed and requires a single gradient projection step at every iteration. We give a formal proof of convergence to at least \(\varepsilon \)-approximate solutions of the problem, together with a detailed analysis of how the parameters of the algorithm influence the value of the approximation parameter \(\varepsilon \). Furthermore, we introduce a distributed version of the algorithm that allows the data to be partitioned and the computation to be carried out in parallel.

References

Aussel, D., Sagratella, S.: Sufficient conditions to compute any solution of a quasivariational inequality via a variational inequality. Math. Methods Oper. Res. 85(1), 3–18 (2017)

Bertsekas, D.P.: Nonlinear Programming. Athena Scientific, Belmont (1999)

Bertsekas, D.P.: Convex Optimization Algorithms. Athena Scientific, Nashua, NH, USA (2015)

Bertsekas, D.P., Tsitsiklis, J.N.: Parallel and Distributed Computation: Numerical Methods, vol. 23. Prentice Hall, Englewood Cliffs (1989)

Birgin, E.G., Martinez, J.M.: Practical Augmented Lagrangian Methods for Constrained Optimization, vol. 10. SIAM, Philadelphia (2014)

Boyd, S., Parikh, N., Chu, E., Peleato, B., Eckstein, J., et al.: Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends® Mach. Learn. 3(1), 1–122 (2011)

Cannelli, L., Facchinei, F., Scutari, G.: Multi-agent asynchronous nonconvex large-scale optimization. In: 2017 IEEE 7th International Workshop on Computational Advances in Multi-Sensor Adaptive Processing (CAMSAP), pp. 1–5. IEEE (2017)

Cassioli, A., Di Lorenzo, D., Sciandrone, M.: On the convergence of inexact block coordinate descent methods for constrained optimization. Eur. J. Oper. Res. 231(2), 274–281 (2013)

Chang, C.C., Lin, C.J.: LIBSVM: a library for support vector machines. ACM Trans. Intell. Syst. Technol. (TIST) 2(3), 27 (2011)

Clarke, F.H.: Optimization and Nonsmooth Analysis, vol. 5. SIAM, Philadelphia (1990)

Daneshmand, A., Sun, Y., Scutari, G., Facchinei, F., Sadler, B.M.: Decentralized dictionary learning over time-varying digraphs (2018). arXiv preprint arXiv:1808.05933

Di Pillo, G., Lucidi, S.: On exact augmented Lagrangian functions in nonlinear programming. In: Di Pillo, G., Giannessi, F. (eds.) Nonlinear Optimization and Applications, pp. 85–100. Springer, Boston (1996)

Di Pillo, G., Lucidi, S.: An augmented Lagrangian function with improved exactness properties. SIAM J. Optim. 12(2), 376–406 (2002)

Facchinei, F., Kanzow, C.: Generalized Nash equilibrium problems. 4OR 5(3), 173–210 (2007)

Facchinei, F., Kanzow, C., Karl, S., Sagratella, S.: The semismooth Newton method for the solution of quasi-variational inequalities. Comput. Optim. Appl. 62(1), 85–109 (2015)

Facchinei, F., Pang, J.S.: Finite-Dimensional Variational Inequalities and Complementarity Problems. Springer, Berlin (2007)

Facchinei, F., Sagratella, S.: On the computation of all solutions of jointly convex generalized Nash equilibrium problems. Optim. Lett. 5(3), 531–547 (2011)

Facchinei, F., Scutari, G., Sagratella, S.: Parallel selective algorithms for nonconvex big data optimization. IEEE Trans. Signal Process. 63(7), 1874–1889 (2015)

García, R., Marín, A., Patriksson, M.: Column generation algorithms for nonlinear optimization, I: convergence analysis. Optimization 52(2), 171–200 (2003)

Gondzio, J., Grothey, A.: Exploiting structure in parallel implementation of interior point methods for optimization. Comput. Manag. Sci. 6(2), 135–160 (2009)

Harchaoui, Z., Juditsky, A., Nemirovski, A.: Conditional gradient algorithms for machine learning. In: NIPS Workshop on Optimization for ML, vol. 3, pp. 3-2 (2012)

Hong, M., Luo, Z.Q.: On the linear convergence of the alternating direction method of multipliers. Math. Program. 162(1–2), 165–199 (2017)

Jaggi, M.: Revisiting Frank-Wolfe: projection-free sparse convex optimization. ICML 1, 427–435 (2013)

Lacoste-Julien, S., Jaggi, M.: On the global linear convergence of Frank-Wolfe optimization variants. In: Advances in Neural Information Processing Systems, pp. 496–504 (2015)

Latorre, V., Sagratella, S.: A canonical duality approach for the solution of affine quasi-variational inequalities. J. Global Optim. 64(3), 433–449 (2016)

Lin, C.J., Lucidi, S., Palagi, L., Risi, A., Sciandrone, M.: Decomposition algorithm model for singly linearly-constrained problems subject to lower and upper bounds. J. Optim. Theory Appl. 141(1), 107–126 (2009)

Lucidi, S.: New results on a class of exact augmented Lagrangians. J. Optim. Theory Appl. 58(2), 259–282 (1988)

Lucidi, S., Palagi, L., Risi, A., Sciandrone, M.: A convergent decomposition algorithm for support vector machines. Comput. Optim. Appl. 38(2), 217–234 (2007)

Mangasarian, O.: Machine learning via polyhedral concave minimization. In: Fischer, H., Riedmüller, B., Schäffler, S. (eds.) Applied Mathematics and Parallel Computing, pp. 175–188. Springer, Berlin (1996)

Manno, A., Palagi, L., Sagratella, S.: Parallel decomposition methods for linearly constrained problems subject to simple bound with application to the SVMs training. Comput. Optim. Appl. 71(1), 115–145 (2018)

Manno, A., Sagratella, S., Livi, L.: A convergent and fully distributable SVMs training algorithm. In: 2016 International Joint Conference on Neural Networks (IJCNN), pp. 3076–3080. IEEE (2016)

Ouyang, H., Gray, A.: Fast stochastic Frank-Wolfe algorithms for nonlinear SVMs. In: Proceedings of the 2010 SIAM International Conference on Data Mining, pp. 245–256. SIAM (2010)

Piccialli, V., Sciandrone, M.: Nonlinear optimization and support vector machines. 4OR 16(2), 111–149 (2018). https://doi.org/10.1007/s10288-018-0378-2

Rockafellar, R.T.: Augmented Lagrange multiplier functions and duality in nonconvex programming. SIAM J. Control 12(2), 268–285 (1974)

Rockafellar, R.T., Wets, R.J.B.: Variational Analysis, vol. 317. Springer, Berlin (2009)

Sagratella, S.: Algorithms for generalized potential games with mixed-integer variables. Comput. Optim. Appl. 68(3), 689–717 (2017)

Scutari, G., Facchinei, F., Lampariello, L.: Parallel and distributed methods for constrained nonconvex optimization—part I: theory. IEEE Trans. Signal Process. 65(8), 1929–1944 (2016)

Scutari, G., Facchinei, F., Lampariello, L., Sardellitti, S., Song, P.: Parallel and distributed methods for constrained nonconvex optimization—part II: applications in communications and machine learning. IEEE Trans. Signal Process. 65(8), 1945–1960 (2016)

Scutari, G., Facchinei, F., Lampariello, L., Song, P.: Parallel and distributed methods for nonconvex optimization. In: 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 840–844. IEEE (2014)

Woodsend, K., Gondzio, J.: Hybrid MPI/OpenMP parallel linear support vector machine training. J. Mach. Learn. Res. 10(Aug), 1937–1953 (2009)

The work of the authors was partially supported by the Grant: “Finanziamenti di ateneo per la ricerca scientifica 2018” n. RP11816432902D1E, Sapienza University of Rome.

The classical gradient projection algorithm defined in (2) is not distributable

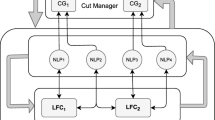

Consider the general case in which S is not separable because the constraints h couple the different blocks of variables \(x_{(\nu )}\), i.e. \(m > 0\). To solve Problem (1), one might consider the following naive parallel version of the classical gradient projection algorithm (whose original generic iteration is defined in (2)):

where \(x^k \in S\) and the decomposed subsets \(S_\nu \) are defined in the following way

Let \(\{x^k\}\) be the sequence produced by this algorithm. The sets \(S_\nu \) are fixed during the iterations and depend only on the starting point \(x^0\):

A fixed point \({\overline{x}}\) for \(\{x^k\}\) is therefore a solution of the following variational inequality problem (see e.g. [1, 15, 16, 25])

On the other hand, a solution \(x^*\) of Problem (1), being a fixed point of the iteration defined in (2), is a solution of the following, different variational inequality

Notice that the point \({\overline{x}}\), solution of the variational inequality (10), need not be a solution of the variational inequality (11), and therefore of Problem (1). This is due to the fact that, for any feasible starting guess \(x^0 \in S\), we obtain only

but not, in general, the reverse inclusion. In fact, the fixed point \({\overline{x}}\) of \(\{x^k\}\) is only an equilibrium of the (potential) generalized Nash equilibrium problem (see e.g. [14, 17, 36]) whose generic player \(\nu \in \{1,\ldots , N\}\) solves the following optimization problem that is parametric with respect to all the blocks of variables \(x_{(\mu )}\) of the other players \(\mu \ne \nu \):
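The argument of this appendix can be checked numerically on a toy instance. The sketch below is illustrative only and rests on assumptions that are not taken from the paper: a quadratic objective \(f(x) = \tfrac{1}{2}\Vert x - t\Vert ^2\), a single linear equality coupling constraint \(a^T x = b\), two blocks of two variables each, and no further constraints on the individual blocks. The naive parallel iteration is read here as "each block projects onto the slice of S obtained by freezing the other blocks at \(x^k\)". All function and variable names (`project_affine`, `classical_gp`, `naive_parallel_gp`, `t`, `a`, `b`) are hypothetical.

```python
import numpy as np

def project_affine(y, a, c):
    """Euclidean projection of y onto the hyperplane {x : a @ x = c}."""
    return y - (a @ y - c) / (a @ a) * a

def classical_gp(grad, x0, a, b, step, iters):
    """Gradient projection onto the whole (coupled) set S = {x : a @ x = b}."""
    x = x0.copy()
    for _ in range(iters):
        x = project_affine(x - step * grad(x), a, b)
    return x

def naive_parallel_gp(grad, x0, blocks, a, b, step, iters):
    """Each block projects onto its own slice of S, the other blocks frozen at x^k."""
    x = x0.copy()
    for _ in range(iters):
        g = grad(x)
        x_next = x.copy()
        for idx in blocks:
            # Right-hand side left for this block once the others are fixed at x^k.
            c = b - (a @ x - a[idx] @ x[idx])
            x_next[idx] = project_affine(x[idx] - step * g[idx], a[idx], c)
        x = x_next  # simultaneous (Jacobi-style) update of all blocks
    return x

# Assumed toy data: f(x) = 0.5 * ||x - t||^2 with coupling constraint sum(x) = 4.
t = np.array([2.0, 0.0, -1.0, -1.0])
f = lambda x: 0.5 * np.sum((x - t) ** 2)
grad = lambda x: x - t
a, b = np.ones(4), 4.0
blocks = [np.array([0, 1]), np.array([2, 3])]
x0 = np.ones(4)  # feasible start: each block sums to 2

x_classical = classical_gp(grad, x0, a, b, step=0.5, iters=200)
x_naive = naive_parallel_gp(grad, x0, blocks, a, b, step=0.5, iters=200)

print("classical:", x_classical, "f =", f(x_classical))
print("naive    :", x_naive, "f =", f(x_naive))
```

In this instance the naive iterates stay feasible, but each block's contribution to the coupling constraint remains frozen at its starting value (both blocks keep summing to 2). The naive scheme therefore settles at \((2, 0, 1, 1)\) with \(f = 4\), whereas the classical iteration reaches the constrained minimizer \((3, 1, 0, 0)\) with \(f = 2\). This is consistent with the observation above that the decomposed sets \(S_\nu \) depend only on \(x^0\).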

Cite this article

Colombo, T., Sagratella, S. Distributed algorithms for convex problems with linear coupling constraints. J Glob Optim 77, 53–73 (2020). https://doi.org/10.1007/s10898-019-00792-z

DOI: https://doi.org/10.1007/s10898-019-00792-z