Abstract

Traditional image enhancement techniques often introduce unwanted side effects such as unnatural appearance, over-enhancement, and artifacts, and these drawbacks become more prominent when enhancing dark images. To overcome them, we propose a dark image enhancement technique based on a local transformation of pixel intensities. We first transform different parts of the histogram of an input image to obtain a desired histogram. Histogram specification is then performed on the input image using this transformed histogram. The proposed technique has been evaluated both qualitatively and quantitatively, and the results show that it improves image quality with fewer unexpected artifacts than the other techniques.

1 Introduction

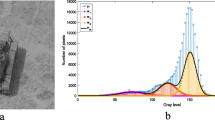

Image enhancement is commonly used to improve the visual quality of an image. Image quality may be degraded for several reasons, such as a lack of operator expertise or the quality of the capturing device. Generally, images are captured in bright, dark, or otherwise uncontrolled environments. If an image is captured in an environment that is too bright or too dark (Fig. 1), enhancement is necessary to create a better looking image. Furthermore, events such as scanning an image or transmitting it from one place to another may distort it. Differences in brightness between different parts of an image due to shadow or non-uniform illumination also demand enhancement. For example, we may take an image in which the face looks dark compared to the background. However, in spite of the availability of many enhancement algorithms, there has been very little work focusing specifically on the enhancement of dark images (image mean μ<0.5 [1], where the darkness is due to low illumination and not to dark-colored objects), and the existing methods might also result in over-enhancement and/or unnatural effects.

Histogram equalization (HE) is a simple and effective contrast enhancement technique. HE spreads the intensities of the image pixels based on information from the whole image. As a result, some rarely occurring intensities may be merged with neighboring frequently occurring intensities, which creates over-enhancement [2, 3]. Moreover, mean shift problems may also occur in such cases, so brightness preservation cannot be guaranteed in HE. An improved version of HE is brightness preserving bi-histogram equalization (BBHE) [4], which produces better results than HE and, most often, preserves the brightness of the original image. However, it may not give the desired outcome when the image pixel distribution is not symmetric [5]. A method called equal area dualistic sub-image histogram equalization (DSIHE) [6] performs better than BBHE because it separates the histogram based on the image median. Recently, Rahman et al. proposed an enhancement technique in which the histogram is divided using the harmonic mean of the image before HE is applied [7]. Still, these techniques may not always increase image contrast, especially for dark images, since their focus is the preservation of brightness.

A combination of BBHE and DSIHE is recursively separated and weighted histogram equalization (RSWHE) [8], which preserves the brightness and enhances the contrast of an image. The core idea of this algorithm is to break a histogram into two or more portions and then apply a weighting function to each of the sub-histograms, on which histogram equalization is performed. However, some statistical information might be lost after the histogram transformation, and the desired enhancement may not be achieved [9]. Inspired by RSWHE, adaptive gamma correction with weighting distribution (AGCWD) [9] uses gamma correction and the luminance pixel probability distribution to enhance the brightness while preserving the available histogram information. Here, a hybrid histogram modification (HM) technique is used to combine the traditional gamma correction (TGC) and traditional histogram equalization (THE) methods. Although this method enhances the brightness of the input image in most cases, it may not give satisfactory results when the input image lacks bright pixels.

An extended version of BBHE and DSIHE is minimum mean brightness error bi-histogram equalization (MMBEBHE) [10], in which the input image histogram is recursively separated into multiple sub-histograms using the absolute mean brightness error (AMBE). Although this technique performs well in contrast enhancement, MMBEBHE incurs many side effects. Chao Wang and Zhongfu Ye [11] propose brightness preserving histogram equalization with maximum entropy (BPHEME), which provides acceptable results for the continuous case but fails for discrete ones. Chao Zuo et al. [12] propose range limited bi-histogram equalization (RLBHE), which preserves the mean brightness of the image. However, its computational complexity is very high compared to the other methods. Huang et al. address this problem by proposing a hardware-oriented implementation [13]. The bilateral Bezier curve (BBC) method works on the dark and bright regions of an image separately [14].

The histogram modification framework (HMF) [15] focuses on minimizing a cost function to obtain the target histogram. S. M. Pizer et al. propose weighted adaptive histogram equalization (WAHE) [16], which processes the input image based on the impact of pixels on the histogram by considering the closeness of the pixels. Although WAHE improves image contrast, it requires intensive computation. Content-aware channel division (CACD) is proposed in [17] for dark image enhancement. CACD groups the image information with common characteristics. However, only grouping the contrast pairs into intensity channels may not always be sufficient because different intensity channels may possess the same characteristics [18].

Some renowned histogram specification-based methods such as automatic exact histogram specification (AEHS) [19] and dynamic histogram specification (DHS) [20] are available for image enhancement. AEHS is proposed for both local and global contrast enhancement, whereas DHS uses differential information from the input histogram to eliminate annoying side effects. Besides the aforementioned enhancement methods, there exist a few other methods such as guided image contrast enhancement [21], image enhancement by entropy maximization [22], contrast enhancement based on piece-wise linear transformation (PLT) [23], layered difference representation (LDR) [24], and inter-pixel contextual information (named CVC) [25]. LDR first divides the gray levels of an image into different layers and builds a tree structure for deriving a transformation function. After the transformation functions for each layer are obtained, they are aggregated to achieve the final desired transformation function, which handles sudden peaks. However, it may not perform accurately for some dark images.

Few methods have been developed solely for dark image enhancement, and their performance is not always satisfactory. The main limitation is that these techniques may transfer most of the pixels from the dark region to the bright region, which might cause over-enhancement and an unwanted shift in brightness. To mitigate this, we propose a method specifically designed to enhance images that contain dark portions (a preliminary version of this work can be found in [26]). We divide the whole image histogram into several segments and then modify them to obtain a histogram with the desired characteristics. Finally, histogram specification is performed on the input image using this desired histogram. In our method, the desired histogram is carefully created to enhance the image, especially its dark parts. Moreover, the gray levels of one segment are not transferred to another segment, which helps to avoid over-enhancement and additional noise. Experimental results also support the effectiveness of the proposed method.

The rest of the paper is organized as follows. Section 2 discusses the proposed method, and Section 3 presents the results using both qualitative and quantitative analysis. Finally, Section 4 concludes the paper.

2 Proposed method

The proposed method enhances an image with special attention to its dark regions. It can be applied to both gray and color images. For color images, color space conversion is needed because direct RGB processing cannot preserve the original color; rather, it produces undesirable effects in the output image. Different color spaces are available, such as HSV, HSI, Lab, and Luma. In the proposed method, we use the HSV color space to process the image because of the additional advantages it offers, which are listed below [23].

- In the HSV color model, V (luminance) and color information (hue and saturation) are decoupled.
- Color relationships are described more meticulously in the HSV color model than in the RGB color model.
- We can easily transform the RGB color model to the HSV color model and vice versa.

After applying the enhancement on V channel, the image is converted back to RGB. The whole enhancement process consists of two major steps: (1) preprocessing and (2) enhancement. These two are discussed in the following sub-sections.
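The color handling described above can be sketched with Python's standard `colorsys` module. This is only an illustration under stated assumptions: pixels are (r, g, b) tuples in [0, 1], and `enhance_v` is a hypothetical stand-in for whatever enhancement is applied to the V channel (here a simple square root, not the proposed method).

```python
import colorsys

def enhance_value_channel(rgb_pixels, enhance_v):
    """Apply enhance_v to the V channel only; hue and saturation are untouched."""
    out = []
    for r, g, b in rgb_pixels:
        h, s, v = colorsys.rgb_to_hsv(r, g, b)
        # Clamp so the result stays a valid intensity in [0, 1].
        v_new = min(1.0, max(0.0, enhance_v(v)))
        out.append(colorsys.hsv_to_rgb(h, s, v_new))
    return out

# Two dark pixels; the square root is a placeholder brightening curve.
pixels = [(0.10, 0.05, 0.02), (0.20, 0.20, 0.20)]
brighter = enhance_value_channel(pixels, lambda v: v ** 0.5)
print(brighter)
```

Because only V is modified, converting the output back to HSV yields the same hue and saturation as the input, which is the decoupling property listed above.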

2.1 Preprocessing

The proposed algorithm divides an image histogram into several parts based on its peaks and valleys, and each part is then processed separately. However, an image, especially a dark image, contains noise (random fluctuation of intensities). Due to this noise, many insignificant local peaks and valleys are present in the image histogram, which may partition the histogram into too many parts and thus prevent the whole process from achieving the desired effect. For a good enhancement, this noise should be removed. A smoothing filter helps us to get the desired result in this case. We apply the Gaussian smoothing filter [27] as presented in Eq. 1.
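Eq. 1 is not reproduced in this text. Assuming it is the standard one-dimensional Gaussian kernel applied along the bin axis of the histogram (an assumption, since the authors' exact form is not shown), it can be written as:

```latex
G(x) = \frac{1}{\sqrt{2\pi}\,\sigma} \exp\!\left(-\frac{x^{2}}{2\sigma^{2}}\right)
```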

Here, σ represents the standard deviation. In an image, specifically in a dark image, some intensities might occur at very few pixels and are not important for visualizing the objects in that image. Hence, we propose to merge the histogram bins of such unimportant intensities with the neighboring bins so that our later procedures get more room to process the histogram segments.

We use Algorithm 1 to dynamically calculate a threshold (τ) from the input histogram and to find such insignificant intensities. τ gives a level for the accumulation of a bin justifying whether the presence of the corresponding intensity is significant or not. Algorithm 2 scans every bin of the histogram and compares it with τ. If the accumulation is less than τ, the accumulation is added to the next bin’s accumulation. Thus, we dissolve the insignificant bins in a histogram.
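A minimal sketch of the two preprocessing steps follows. Algorithms 1 and 2 are not reproduced in this text, so the threshold is passed in as a plain parameter `tau` rather than computed, and the kernel radius and border handling are illustrative assumptions.

```python
import math

def gaussian_kernel(sigma, radius=None):
    """Discrete 1-D Gaussian kernel, normalized so the weights sum to 1."""
    if radius is None:
        radius = max(1, int(3 * sigma))  # assumed cut-off at about 3 sigma
    weights = [math.exp(-(x * x) / (2.0 * sigma * sigma))
               for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def smooth_histogram(hist, sigma=1.0):
    """Convolve the histogram with the kernel, clamping indices at the borders."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    last = len(hist) - 1
    return [
        sum(w * hist[min(max(i + k - radius, 0), last)]
            for k, w in enumerate(kernel))
        for i in range(len(hist))
    ]

def merge_small_bins(hist, tau):
    """Add every non-empty bin below tau to the next bin, as the text describes."""
    merged = list(hist)
    for i in range(len(merged) - 1):
        if 0 < merged[i] < tau:
            merged[i + 1] += merged[i]
            merged[i] = 0
    return merged

hist = [0, 2, 50, 3, 1, 40, 0, 0]
print(merge_small_bins(hist, tau=5))  # [0, 0, 52, 0, 0, 44, 0, 0]
```

Merging moves counts forward without discarding any pixels, so the total accumulation of the histogram is unchanged.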

2.2 Enhancement

We are usually more interested in the edges of an image than in its smooth regions. Hence, edge and non-edge regions should be treated separately. To do so, we apply the Sobel operator to find the absolute gradient magnitude at each pixel of the given image (\(I_{in}\)) and then threshold these values to approximate the regions (pixels) corresponding to edges (\(I_{E}\)) and non-edges (\(I_{NE}\)). After separating the edge and non-edge images, we calculate the histograms \(H_{E}\) and \(H_{NE}\) for these two images, respectively. We then find the segments \(S_{E}\) and \(S_{NE}\) from \(H_{E}\) and \(H_{NE}\), respectively. \(S_{E}\) and \(S_{NE}\) are then used to generate two desired histograms (namely \(H_{E}^{\prime}\) and \(H_{NE}^{\prime}\)): one to enhance edges and the other to enhance smooth regions. These two histograms are merged to yield the final desired histogram, which is then used in the histogram specification step to enhance the whole image. Algorithm 3 and Fig. 2 show our overall procedure for dark image enhancement.

Fig. 2 The different steps of the proposed method. (a) Input image histogram. (b) Histogram of edge pixels. (c) Histogram of non-edge pixels. (d) After applying smoothing in (b). (e) After applying smoothing in (c). (f) After applying the Segment Identification, Segment Relocation, and Intra Segment Transformation on the histogram in (d). (g) After applying the Segment Identification, Segment Relocation, and Intra Segment Transformation on the histogram in (e). (h) Desired histogram after merging the histograms in (f) and (g). (i) Output image histogram
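The separation into edge and non-edge histograms can be sketched as follows. The sketch assumes an integer gray image stored as a list of rows, approximates the gradient magnitude by |Gx| + |Gy|, treats border pixels as non-edges, and takes the threshold as a free parameter; none of these choices is specified in the text.

```python
def sobel_magnitude(img):
    """Approximate gradient magnitude |Gx| + |Gy|; border pixels are left at 0."""
    rows, cols = len(img), len(img[0])
    mag = [[0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = (img[r - 1][c + 1] + 2 * img[r][c + 1] + img[r + 1][c + 1]
                  - img[r - 1][c - 1] - 2 * img[r][c - 1] - img[r + 1][c - 1])
            gy = (img[r + 1][c - 1] + 2 * img[r + 1][c] + img[r + 1][c + 1]
                  - img[r - 1][c - 1] - 2 * img[r - 1][c] - img[r - 1][c + 1])
            mag[r][c] = abs(gx) + abs(gy)
    return mag

def edge_histograms(img, threshold, levels=256):
    """Return (H_E, H_NE): histograms of edge pixels and of non-edge pixels."""
    mag = sobel_magnitude(img)
    h_edge = [0] * levels
    h_non_edge = [0] * levels
    for r, row in enumerate(img):
        for c, value in enumerate(row):
            target = h_edge if mag[r][c] > threshold else h_non_edge
            target[value] += 1
    return h_edge, h_non_edge

# A 4x4 image with a vertical step between the second and third columns.
img = [[10, 10, 200, 200] for _ in range(4)]
h_edge, h_non_edge = edge_histograms(img, threshold=100)
print(h_edge[10], h_edge[200], h_non_edge[10], h_non_edge[200])  # 2 2 6 6
```

Every pixel is counted in exactly one of the two histograms, so their sum equals the histogram of the whole image.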

From the preprocessing step, we obtain prominent peaks and valleys for the histogram of a given image. These peaks and valleys are used to identify a set of segments where a segment is defined as follows:

Definition-1 segment: Histogram bins between two valleys are considered as a segment where valleys (V) are defined in Eq. 2.

where \(x_i\) is the accumulation of the ith bin of the histogram. Formally, a segment (\(S_i\)) is a portion of a histogram that lies between the two valleys \(V_i\) and \(V_{i+1}\). In Fig. 3, s1, s2, s3, s4, and s5 are segments.
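Segment identification can be illustrated with a short sketch. Eq. 2 is not reproduced in this text, so the valley rule below (a bin lower than its left neighbor and not higher than its right neighbor, plus the two ends of the histogram) is an assumption made only for illustration.

```python
def find_valleys(hist):
    """Indices treated as valleys: both ends plus interior local minima (assumed rule)."""
    valleys = [0]
    for i in range(1, len(hist) - 1):
        if hist[i] < hist[i - 1] and hist[i] <= hist[i + 1]:
            valleys.append(i)
    valleys.append(len(hist) - 1)
    return valleys

def find_segments(hist):
    """Pair consecutive valleys into (start, end) bin ranges."""
    valleys = find_valleys(hist)
    return list(zip(valleys[:-1], valleys[1:]))

hist = [1, 4, 9, 3, 2, 6, 8, 5, 1, 7, 2]
print(find_valleys(hist))   # [0, 4, 8, 10]
print(find_segments(hist))  # [(0, 4), (4, 8), (8, 10)]
```

In this sketch, neighboring segments share their boundary valley bin; an implementation would need to assign each valley to exactly one segment before counting pixels.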

For enhancement, we perform two types of operations on each of these segments of the histogram: first, segment reallocation, and second, gray level transformation within the segment. The details of these two steps are described in the following sub-sections.

2.2.1 Segment reallocation

The objective of relocating the segments is to transform a set of gray levels from the dark region of the image to the relatively brighter region. Figure 4 illustrates the shifting of segments from one location to another location of the histogram.

These segments are shifted based on the shifting distance D. This shifting distance is dynamically calculated for each segment using Eq. 3.

where N = total number of segments, D(i) = shifting distance of the ith segment, and \(x_i\) = accumulation of the ith segment.

For example, if the total accumulation is 100, the accumulation of the ith segment is 10, and the total number of segments is 50, then the shifting distance will be D(i)=(10/100)×50=5. So, the new ending position of this segment will be \(V_{i+1}+5\). Thus, the width of a segment depends on the number of pixels it contains. Here, the segments containing more pixels are allocated more gray levels, which is desirable for enhancement.
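The worked example above can be reproduced in a few lines. The formula D(i) = (x_i / total accumulation) × N is inferred from that example, and the valley position 40 used below is a made-up value for illustration.

```python
def shifting_distance(segment_count, total_count, num_segments):
    """D(i) = (x_i / total accumulation) * N, as in the worked example."""
    return segment_count * num_segments / total_count

def new_end_position(valley_end, distance):
    """Ending bin of the segment after shifting, rounded to a whole bin."""
    return valley_end + round(distance)

d = shifting_distance(segment_count=10, total_count=100, num_segments=50)
print(d)                        # 5.0
print(new_end_position(40, d))  # 45
```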

After shifting each segment, there exist several empty bins at the end of the histogram. We distribute these empty bins to all the segments using Eq. 4,

where \(l_i\) = width of the ith segment, \(L_i\) = resultant width of the ith segment, and θ = ending bin position of the last segment. This also gives more space to perform the intra-segment transformation.

2.2.2 Intra-segment transformation

By shifting the segments, we transform the intensities of a set of pixels as a whole. It enhances the contrast among the bins in different segments. However, we need to enhance within-segment contrasts for getting a better looking image. For this reason, we transform the intensities of the pixels within a segment which is performed using Eq. 5. The result of such transformation is shown in Fig. 5.

where T(i) = transformed intensity of ith bin, Ω(i) = accumulation of ith bin, s = starting bin position of the segment, k = last bin position of the segment, L = width of the corresponding segment (from Eq. 4).
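Eq. 5 is not reproduced in this text. One mapping that uses exactly the symbols listed above is histogram equalization restricted to a single segment, T(i) = s + L × (Σ Ω from s to i) / (Σ Ω from s to k). The sketch below assumes that form, which may differ from the authors' exact equation.

```python
def intra_segment_transform(hist, s, k, width):
    """Map each bin i in [s, k] to s + width * (cumulative count up to i / segment total)."""
    total = sum(hist[s:k + 1])
    mapping = {}
    running = 0
    for i in range(s, k + 1):
        running += hist[i]
        # An empty segment is left where it is.
        mapping[i] = s + round(width * running / total) if total else i
    return mapping

hist = [0, 0, 10, 30, 40, 20, 0, 0]
print(intra_segment_transform(hist, s=2, k=5, width=8))
# {2: 3, 3: 5, 4: 8, 5: 10}
```

Under this assumed form, the mapped levels are non-decreasing and stay inside [s, s + L], so gray levels of one segment do not cross into another.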

2.2.3 Histogram specification

By performing the segment reallocation and intra-segment transformation, we get the transformed histograms for both the edge and non-edge images. The desired histogram, obtained by combining these two histograms, is then used to perform histogram specification.

Here, a gray level, i, of the input image is mapped to another gray level, d, such that

where \(C_{in}(i)\) and \(C_{desired}(d)\) represent the cumulative distribution functions calculated from the input image and the desired histogram, respectively. In other words, we seek the gray level, d, for which

We apply Eq. 7 on every gray level of the input image to get the enhanced image. The output image is transformed back to RGB if the original image is RGB.
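Eqs. 6 and 7 are not reproduced in this text. The sketch below uses the usual histogram specification rule, which is assumed here to match them: each input level i is mapped to the level d whose desired cumulative distribution is closest to the input cumulative distribution at i.

```python
def cdf(hist):
    """Cumulative distribution function of a histogram, ending at 1.0."""
    total = sum(hist)
    running, out = 0, []
    for count in hist:
        running += count
        out.append(running / total)
    return out

def specification_mapping(input_hist, desired_hist):
    """For each input level i, pick the d minimizing |C_desired(d) - C_in(i)|."""
    c_in, c_des = cdf(input_hist), cdf(desired_hist)
    return [min(range(len(c_des)), key=lambda d: abs(c_des[d] - ci))
            for ci in c_in]

def apply_mapping(img, mapping):
    """Replace every gray level in the image by its mapped level."""
    return [[mapping[v] for v in row] for row in img]

input_hist = [4, 3, 1, 0]    # mostly dark levels
desired_hist = [1, 1, 3, 3]  # mass moved toward brighter levels
mapping = specification_mapping(input_hist, desired_hist)
print(mapping)                                   # [2, 3, 3, 3]
print(apply_mapping([[0, 1], [2, 0]], mapping))  # [[2, 3], [3, 2]]
```

When two desired levels are equally close, `min` keeps the lower one; the text does not say how ties are resolved.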

3 Experimental results and discussion

In this section, the results of the proposed technique are compared to those of existing state-of-the-art image enhancement techniques, namely HE [2], AEHS [19], CVC [25], LDR [24], WAHE [16], CACD [17], AGCWD [9], and RSWHE [8]. The comparison is performed both qualitatively and quantitatively. To evaluate the proposed method, images are taken from CACD [17], Caltech [28], and UIUC Sport Event [29]. The details are presented in the following sections.

3.1 Qualitative measures

To show the qualitative results of our method, a few experimental images are taken from CACD [17], and the outputs are given in Fig. 6. The challenges of each image and the improvements achieved by each method are described below.

In the “girl” image, the main challenge is to increase the overall brightness without incurring artifacts on the hair and necklace. Most of the methods cannot reveal the detailed texture and the girl’s necklace. HE and AEHS over-enhance the image and produce many artifacts. CACD produces a comparatively good result, but the image is not properly illuminated. LDR and WAHE cannot increase the brightness properly, and the original color of the image is also degraded, whereas the proposed method increases the brightness and preserves the original color contrast. Thus, our proposed method performs better than the others in this respect.

In the “fountain” image, the challenges are to keep the lamp as close to the original as possible and to preserve the naturalness of the grass. Here, HE and AEHS over-enhance the wall and grass, and the window glass does not look natural. LDR, CVC, WAHE, and CACD cannot enhance the image properly, and their output images are still somewhat dark. On the other hand, HE and AEHS increase the brightness excessively, which incurs artifacts on the wall. In contrast, the proposed method preserves the brightness of the lamp and the natural color of the grass. Thus, the proposed method produces a comparatively better result than the others.

The desired enhancement of the “street1” image is to increase the brightness in such a way that the hidden information in the image becomes visible. CVC, LDR, WAHE, CACD, AGCWD, and RSWHE cannot enhance the brightness of this image properly, because the hidden information (the three men in the image) remains unclear. However, the proposed method increases the brightness, and the hidden information is more clearly visible than with the other methods.

The challenges of the “dark ocean” image are to preserve the rays of sunlight and to enhance the image contrast properly. HE and AEHS increase the image brightness, but the naturalness of the sunshine is degraded. LDR and CVC cannot enhance the brightness of this image properly, and their resultant images are still significantly dark. CACD and AGCWD preserve the sunshine but lose the naturalness of the water, whereas RSWHE degrades the quality of the sun rays. In this case, the proposed approach preserves the sunshine and increases the overall image brightness.

Our method works better when it is applied to the edge and non-edge pixels of the image separately. Figures 7 and 8 show the outputs with and without the separation of edges, respectively. We notice that if we do not separate edge and non-edge pixels, the output image is not properly enhanced and some artifacts are produced.

3.2 Quantitative measures

For the purpose of quantitative evaluation, fifty images are taken from the three aforementioned datasets, namely CACD, Caltech, and UIUC Sport Event. To evaluate these images quantitatively, we use the root mean square (RMS) contrast, the structural similarity (SSIM) index, and the perceptual quality metric (PQM), which are discussed in the following sub-sections.

3.2.1 RMS contrast

A common way to define the contrast of an image is to measure the RMS contrast [30]. The RMS is defined by Eq. 8.

Here, M and N are the image dimensions, and \(I_{i,j}\) and μ represent the pixel intensity and the mean of the image, respectively. Usually, a larger RMS value represents better image quality. However, for enhancing a dark image, this might not be true, because an increase in RMS depends on an increase in the diversity of intensities, which may also deteriorate the image quality through an increased number of artifacts. This is also observed when we measure the average values of the outputs of different methods for 50 dark images. The average RMS values obtained by AGCWD, CVC, and LDR are 0.29, 0.27, and 0.26, respectively. On the other hand, the proposed method and CACD obtain only 0.25 and 0.27, respectively, though their outputs are qualitatively more pleasing and better in terms of other measures such as PQM. Hence, the RMS values do not actually reflect the enhancement for dark images.
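Eq. 8 is not reproduced in this text. The usual definition of RMS contrast, assumed here, is the standard deviation of the pixel intensities, with intensities normalized to [0, 1]. A minimal sketch:

```python
import math

def rms_contrast(img):
    """RMS contrast: square root of the mean squared deviation from the image mean."""
    pixels = [v for row in img for v in row]
    mean = sum(pixels) / len(pixels)
    return math.sqrt(sum((v - mean) ** 2 for v in pixels) / len(pixels))

flat = [[0.5, 0.5], [0.5, 0.5]]     # no variation at all
checker = [[0.0, 1.0], [1.0, 0.0]]  # maximal variation
print(rms_contrast(flat))     # 0.0
print(rms_contrast(checker))  # 0.5
```

The checkerboard scores the maximum possible value even though it carries no natural detail, which illustrates why a higher RMS does not by itself indicate a better enhancement.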

3.2.2 SSIM

The Structural SIMilarity (SSIM) index is a method for measuring the similarity between two images. The SSIM index can be viewed as a quality measure of one of the images being compared. The SSIM is defined by Eq. 9.

where l(x,y) is the luminance comparison function, c(x,y) is the contrast comparison function, and s(x,y) is the structure comparison function (for details, please see [31]). Assessment results of different methods using SSIM are shown in Fig. 9.
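For intuition, the product of the three comparison functions can be computed over a single window covering the whole image. This is a simplification: the index in [31] is averaged over local windows, and the paper does not state its settings. The constants k1 = 0.01 and k2 = 0.03 and the 8-bit dynamic range are the common defaults, assumed here.

```python
def global_ssim(x, y, dynamic_range=255.0, k1=0.01, k2=0.03):
    """Single-window SSIM of two equal-length flat pixel lists."""
    n = len(x)
    mu_x, mu_y = sum(x) / n, sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / (n - 1)
    var_y = sum((b - mu_y) ** 2 for b in y) / (n - 1)
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / (n - 1)
    c1 = (k1 * dynamic_range) ** 2
    c2 = (k2 * dynamic_range) ** 2
    # Combined form of l * c * s with equal exponents and C3 = C2 / 2.
    return ((2 * mu_x * mu_y + c1) * (2 * cov + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2))

a = [10, 20, 30, 40]
b = [40, 30, 20, 10]
print(global_ssim(a, a))  # identical images score 1 (up to rounding)
print(global_ssim(a, b))  # reversed structure scores far lower
```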

3.2.3 PQM

Wang et al. [32] proposed a perceptual quality metric (PQM) to evaluate image quality. According to [33], for good perceptual quality, PQM should be close to 10. Eq. 10 has been used to calculate PQM.

where α, β, γ1, γ2, and γ3 are the model parameters. A, B, and Z are the features (for details, please see [32]). The average PQM calculated for the 50 enhanced images is the highest (9.03) for the proposed method. CACD also shows a very competitive PQM (8.94). The lowest PQM is found for HE (8.73), and it is 8.82 for AGCWD. According to these results, it is also clear that the proposed method produces better output as compared to the other image enhancement techniques in most of the cases.

4 Conclusion

In this paper, a locally transformed histogram-based technique has been proposed for dark image enhancement. The method works better than the compared methods because the transformation is not applied to the whole histogram of an input image. Rather, it is applied to small segments of the input image histogram. As a result, the proposed technique does not suffer from the over-enhancement problem. Our experimental results show that it achieves a higher average PQM than the existing techniques, while RMS contrast alone does not reflect this improvement.

References

Rahman S, Rahman MM, Abdullah-Al-Wadud M, Al-Quaderi GD, Shoyaib M (2016) An adaptive gamma correction for image enhancement. EURASIP J Image Video Process 2016(1):35.

Gonzalez RC, Woods RE (2002) Digital image processing. Prentice Hall, Upper Saddle River.

Abdullah-Al-Wadud M, Kabir MH, Dewan MAA, Chae O (2007) A dynamic histogram equalization for image contrast enhancement. IEEE Trans Consum Electron 53(2):593–600.

Kim YT (1997) Contrast enhancement using brightness preserving bi-histogram equalization. IEEE Trans Consum Electron 43(1):1–8.

Rahman S, Rahman MM, Hussain K, Khaled SM, Shoyaib M (2014) Image enhancement in spatial domain: a comprehensive study. In: Computer and Information Technology (ICCIT), 2014 17th International Conference On. IEEE. pp 368–373.

Wang Y, Chen Q, Zhang B (1999) Image enhancement based on equal area dualistic sub-image histogram equalization method. IEEE Trans Consum Electron 45(1):68–75.

Amil FM, Rahman MM, Rahman S, Dey EK, Shoyaib M (2016) Bilateral histogram equalization with pre-processing for contrast enhancement. In: Software Engineering, Artificial Intelligence, Networking and Distributed Computing (SNPD), 17th IEEE International Conference On. IEEE, Shanghai.

Kim M, Chung M (2008) Recursively separated and weighted histogram equalization for brightness preservation and contrast enhancement. IEEE Trans Consum Electron 54(3):1389–1397.

Huang SC, Cheng FC, Chiu YS (2013) Efficient contrast enhancement using adaptive gamma correction with weighting distribution. IEEE Trans Image Process 22(3):1032–1041.

Chen SD, Ramli AR (2003) Minimum mean brightness error bi-histogram equalization in contrast enhancement. IEEE Trans Consum Electron 49(4):1310–1319.

Wang C, Ye Z (2005) Brightness preserving histogram equalization with maximum entropy: a variational perspective. IEEE Trans Consum Electron 51(4):1326–1334.

Zuo C, Chen Q, Sui X (2013) Range limited bi-histogram equalization for image contrast enhancement. Optik-Int J Light Electron Optics 124(5):425–431.

Huang SC, Chen WC (2014) A new hardware-efficient algorithm and reconfigurable architecture for image contrast enhancement. IEEE Trans Image Process 23(10):4426–4437.

Cheng FC, Huang SC (2013) Efficient histogram modification using bilateral bezier curve for the contrast enhancement. J Display Technol 9(1):44–50.

Arici T, Dikbas S, Altunbasak Y (2009) A histogram modification framework and its application for image contrast enhancement. IEEE Trans Image Process 18(9):1921–1935.

Pizer SM, Amburn EP, Austin JD, Cromartie R, Geselowitz A, Greer T, ter Haar Romeny B, Zimmerman JB, Zuiderveld K (1987) Adaptive histogram equalization and its variations. Comput Vis Graphics Image Process 39(3):355–368.

Ramirez Rivera A, Ryu B, Chae O (2012) Content-aware dark image enhancement through channel division. IEEE Trans Image Process 21(9):3967–3980.

Priyakanth R, Malladi S, Abburi R (2013) Dark image enhancement through intensity channel division and region channels using Savitzky-Golay filter. Intl J Sci Res Publications ISSN 3(8):2050–2016.

Sen D, Pal SK (2011) Automatic exact histogram specification for contrast enhancement and visual system based quantitative evaluation. IEEE Trans Image Process 20(5):1211–1220.

Sun CC, Ruan SJ, Shie MC, Pai TW (2005) Dynamic contrast enhancement based on histogram specification. IEEE Trans Consum Electron 51(4):1300–1305.

Wang S, Gu K, Ma S, Lin W, Liu X, Gao W (2016) Guided image contrast enhancement based on retrieved images in cloud. IEEE Trans Multimedia 18(2):219–232.

Niu Y, Wu X, Shi G (2016) Image enhancement by entropy maximization and quantization resolution upconversion. IEEE Trans Image Process 25(10):4815–4828.

Tsai CM, Yeh ZM (2008) Contrast enhancement by automatic and parameter-free piecewise linear transformation for color images. IEEE Trans Consum Electron 54(2):213–219.

Lee C, Lee C, Kim CS (2012) Contrast enhancement based on layered difference representation. In: Image Processing (ICIP), 19th IEEE International Conference On. IEEE, Florida. pp 965–968.

Celik T, Tjahjadi T (2011) Contextual and variational contrast enhancement. IEEE Trans Image Process 20(12):3431–3441.

Hussain K, Rahman S, Khaled SM, Abdullah-Al-Wadud M, Shoyaib M (2014) Dark image enhancement by locally transformed histogram. In: Software, Knowledge, Information Management and Applications (SKIMA), 8th International Conference On. IEEE, Dhaka. pp 1–7.

Bhardwaj S, Mittal A (2012) A survey on various edge detector techniques. Procedia Technol 4:220–226.

Griffin G, Holub A, Perona P (2007) Caltech-256 object category dataset. Technical Report CNS-TR-2007-001, California Institute of Technology. http://authors.library.caltech.edu/7694/.

Li L-J, Fei-Fei L (2007) What, where and who? Classifying events by scene and object recognition. In: Computer Vision, 2007. ICCV 2007. IEEE 11th International Conference On. IEEE, Rio de Janeiro. pp 1–8.

Peli E (1990) Contrast in complex images. JOSA A 7(10):2032–2040.

Wang Z, Bovik AC, Sheikh HR, Simoncelli EP (2004) Image quality assessment: from error visibility to structural similarity. IEEE Trans Image Process 13(4):600–612.

Wang Z, Sheikh HR, Bovik AC (2002) No-reference perceptual quality assessment of JPEG compressed images. In: Image Processing. 2002. Proceedings. 2002 International Conference On, vol. 1. IEEE, New York. p 477.

Mukherjee J, Mitra SK (2008) Enhancement of color images by scaling the DCT coefficients. IEEE Trans Image Process 17(10):1783–1794.

Acknowledgements

This work is supported by the University Grants Commission, Bangladesh under the Dhaka University Teachers Research Grant No Reg/Admin-3/54290.

Contributions

All the authors have contributed in designing, developing, and analyzing the methodology, performing the experimentation, and writing and modifying the manuscript. All the authors have read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Hussain, K., Rahman, S., Rahman, M.M. et al. A histogram specification technique for dark image enhancement using a local transformation method. IPSJ T Comput Vis Appl 10, 3 (2018). https://doi.org/10.1186/s41074-018-0040-0

DOI: https://doi.org/10.1186/s41074-018-0040-0