Abstract

Many questions cannot be answered simply; their answers must include numerous nuanced details and context. Complex Answer Retrieval (CAR) is the retrieval of answers to such questions. These questions can be constructed from a topic entity (e.g., ‘cheese’) and a facet (e.g., ‘health effects’). While topic matching has been thoroughly explored, we observe that some facets use general language that is unlikely to appear verbatim in answers, exhibiting low utility. In this work, we present an approach to CAR that identifies and addresses low-utility facets. First, we propose two estimators of facet utility: the hierarchical structure of CAR queries, and facet frequency information from training data. Then, to improve the retrieval performance on low-utility headings, we include entity similarity scores using embeddings trained from a CAR knowledge graph, which captures the context of facets. We show that our methods are effective by applying them to two leading neural ranking techniques, and evaluating them on the TREC CAR dataset. We find that our approach performs significantly better than the unmodified neural ranker and other leading CAR techniques, yielding state-of-the-art results. We also provide a detailed analysis of our results, verifying that low-utility facets are indeed difficult to match and that our approach improves the performance for these difficult queries.

1 Introduction

It is common to use search technologies to find answers to questions. While considerable work has investigated techniques to answer questions that have factoid answers, there has been less focus on open-ended questions that cannot be answered with simple, standalone facts. The information need of these open-ended questions is fulfilled with complex answers, which contain comprehensive details and context pertaining to the question. Thus, Complex Answer Retrieval (CAR) is the process of finding answers to questions that have complex answers (Dietz et al. 2017).Footnote 1 More formally, given a question and a large collection of candidate answers, a CAR engine retrieves and ranks the answers by relevance to the question. Since candidate answers include details and context, they can be formulated as paragraphs of text. In this work, we propose and evaluate approaches for improving CAR by using structural and frequency information from the query and information from a knowledge graph constructed from CAR training data.

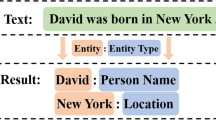

CAR queries can be broken down into two components: the topic and facet. The topic is the main entity of the question. For the query ‘Is cheese healthy?’, the topic is the entity ‘Cheese’ (see Fig. 1). All answers to the question must be about this entity, otherwise the answer is not valid. The facet is the particular detail about which the question inquires. Question facets differentiate CAR queries from topical queries by inquiring about a specific detail about the topic. In the example, the facet can be described as ‘Health effects’. For an answer to be considered valid to the question, it must refer to health effects of cheese—information such as nutrition, health risks, etc. These answers can come from multiple sources. For instance, an article about cardiovascular disease may claim that diets containing foods such as cheese that are high in saturated fat increase one’s risk of heart disease (Answer 2 in Fig. 1). Such a paragraph would be valuable to include in a complete answer because it contains contextual information about why cheese consumption can increase one’s risk of heart disease. Thus, it follows to frame CAR as retrieval of paragraphs of text from authoritative sources given a topic and facet. CAR queries with various facets about a single topic can be combined to produce a detailed article about a topic.

A straightforward approach for CAR is to use an existing IR technique as-is, concatenating the topic and facet information to build the query. Indeed, previous results showed that this basic approach can be effective (Magnusson and Dietz 2017), particularly when neural models are employed. However, such an approach is limited by several factors specific to CAR. We observe that facets are not necessarily mentioned verbatim in relevant paragraphs. In Fig. 1, the relevant answers (1–3) never include ‘health’ because the context is clear from the other entities mentioned in the answer. On the other hand, non-relevant answers sometimes do include the facet term (e.g., answer 4). An effective CAR engine needs to account for this. Not all facets exhibit this general behavior. Let’s consider another query: “What is the effect of curdling in cheese production?” (topic: cheese, facet: curdling). Since ‘curdling’ is not a general-language term, relevant answers probably need to use the term itself; related entity mentions are probably inadequate and would result in confusing text. We refer to this distinction as facet utility: high-utility facets use language that is specific to the topic and can be found directly in relevant answers (e.g., ‘curdling’), whereas low-utility facets use general language and require additional domain knowledge to identify relevant paragraphs (e.g., ‘health effects’).

Given these observations, we propose a two-pronged approach to CAR. First, we attempt to predict facet utility. For this, we use both structural information about the query itself, and corpus statistics about how frequently facets are used. Then, to better accommodate low-utility facets, we utilize entity mentions in the candidate answer. To this end, we construct knowledge graph embeddings that contain facet context using a training corpus, and measure distances between the topic entity and the entity mentions. We incorporate both the facet utility estimators and the entity scores into a neural ranking model, and use the model to retrieve complex answers. These approaches yield significant improvements over the unmodified neural ranker, and up to 26% improvement over the next best approach. We then provide a detailed analysis of our results, which shows that low-utility facets are indeed more difficult to match, and that our approach improves these results. In summary, our contributions are as follows:

1. We demonstrate the value of modeling facet utility for CAR, provide two estimators of facet utility, and demonstrate how they can be easily incorporated into neural answer ranking models.

2. We propose an approach for overcoming the low utility of some facet information using a knowledge graph specifically designed to encode CAR facet context.

3. We demonstrate state-of-the-art performance at CAR, and show that our methods are generally applicable.

4. We provide a detailed analysis of our results, showing that our estimators are valuable indicators of facet utility, and that incorporating knowledge graph information in the model can help overcome low-utility facets.

As one of the first comprehensive works on CAR, we expect these insights to guide future work in CAR.

2 Background and related work

2.1 Complex answer retrieval

Complex answer retrieval is a new area of research in IR. In 2017, the TREC conference ran a new shared task focused on CAR (Dietz et al. 2017). The goal of this task is to rank answer paragraphs corresponding to a complex question. The shared task frames CAR in terms of Wikipedia content generation. This is appropriate because the editorial guidelines and strong role of moderators make Wikipedia an authoritative source of information (Heilman and West 2015). Paragraphs from articles meeting topic criteriaFootnote 2 are selected as a source of answers for retrieval. The task goes one step further by asserting that Wikipedia is also a good source of CAR queries and relevance judgments. CAR queries are formed from article titles (the query topic, an entity), and headings (query facets of that particular topic). Figure 2 shows an article’s heading hierarchy, including the question topic and an example facet. Furthermore, paragraphs found under each heading are treated as automatically relevant to that particular topic, yielding a large amount of training data (Dietz and Gamari 2017). This makes it practical to train data-hungry neural IR models for CAR.

TREC CAR exploits the hierarchical nature of headings by using multiple headings to form each query. Let Q be a CAR query, consisting of n headings \(\{Q_1,Q_2,\ldots ,Q_n\}\). Let \(Q_n\) be the target heading of the query (that is, the primary facet of the query), \(Q_1\) be the title of the article containing the heading (representing the topic entity of the query), and \(\{Q_2,Q_3,\ldots ,Q_{n-1}\}\) be any intermediate headings along the shortest path between the title and target heading in the heading hierarchy. Intermediate headings are important to provide adequate context for the target heading. For instance, in Fig. 2, heading 7.3 refers specifically to the pasteurization of cheese as it relates to nutrition and health. Table 1 provides example queries using this terminology. Note that a given query does not necessarily have any intermediate headings, and that a target heading in one query (Cheese » Nutrition and health)Footnote 3 can appear as an intermediate heading in another query (Cheese » Nutrition and health » Pasteurization) because all headings containing paragraphs directly are treated as the target heading of a query.
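The query terminology above can be illustrated with a minimal sketch. The class name `CarQuery`, the function `parse_query`, and the choice to reject paths with fewer than two headings are assumptions made for illustration; only the title / intermediate / target decomposition comes from the text.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CarQuery:
    """A CAR query Q = {Q_1, ..., Q_n} built from an article's heading path."""
    headings: List[str]  # Q_1 (article title) first, Q_n (target heading) last

    @property
    def title(self) -> str:
        # Q_1: the topic entity of the query.
        return self.headings[0]

    @property
    def intermediate(self) -> List[str]:
        # Q_2 .. Q_{n-1}: may be empty when the target sits directly under the title.
        return self.headings[1:-1]

    @property
    def target(self) -> str:
        # Q_n: the primary facet of the query.
        return self.headings[-1]


def parse_query(path: str, sep: str = "»") -> CarQuery:
    """Split a 'Title » Heading » ...' path into its headings (hypothetical helper)."""
    parts = [part.strip() for part in path.split(sep)]
    if len(parts) < 2:
        # Assumption: a query needs at least a title and a target heading.
        raise ValueError("expected a title and at least one heading")
    return CarQuery(parts)


query = parse_query("Cheese » Nutrition and health » Pasteurization")
print(query.title, query.intermediate, query.target)
```

In this sketch, the same heading text (‘Nutrition and health’) is the target of one query and an intermediate heading of another, depending only on where it falls in the path.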

Headings found in the Wikipedia article Cheese, including labels for the question “Is cheese healthy?”. Adapted from https://en.wikipedia.org/wiki/Cheese

Existing work in CAR is relatively limited. Prior to TREC, Magnusson and Dietz (2017) investigated baseline approaches using the automatic relevance judgments (assuming only paragraphs found under a heading are relevant to that query). Their approaches include BM25, cosine similarity (both with TF-IDF vectors and word embeddings), a baseline learning-to-rank approach, query expansion, and a deep neural model. They found that the deep neural model Duet (Mitra et al. 2017) outperforms the others.

The 2017 TREC CAR task inspired several new approaches to CAR. In this work, we extend our submission to TREC (MacAvaney et al. 2017b) and some follow-up analysis (MacAvaney et al. 2018). Another top-performing submission uses a Sequential Dependence Model (SDM; Metzler and Croft 2005) for answer retrieval (Lin and Lam 2017). They modified the SDM for CAR by considering ordered n-grams that occur within an individual heading component, and unordered n-grams for terms in different headings. This results in a bias toward matching partial phrases that appear in individual headings, and reduces processing time for long queries. Another approach uses a Siamese attention network (Maldonado et al. 2017). Features incorporated into this network include abbreviated entity names and lead paragraph entity mentions from DBPedia (Auer et al. 2007). Yet another approach uses reinforcement learning query reformulation for CAR (Nogueira et al. 2017; Nogueira and Cho 2017). The query reformulation step helps the model add terms that make up for low-utility facets. Our approach differs from these by (1) explicitly modeling facet utility to predict which headings are unlikely to appear in relevant documents, and (2) incorporating contextualized entity relatedness measures to improve performance on low-utility facets.

2.2 Neural IR models

Recent advances in neural IR have produced promising results in the ad-hoc domain, making them a potentially useful tool for CAR ranking. Early work on neural IR models focused on building dense representations of texts that are capable of being compared to measure relevance (e.g., the Deep Structured Semantic Model (DSSM); Huang et al. 2013). These methods are akin to traditional vector space model approaches. While scalable, representation-focused methods suffer from loss of term locality information, making certain types of query term matching difficult. These methods are referred to as representation-focused because they differ in the way in which the text representation is formulated. Recent advances in this area include the distributed part of the Duet model (Mitra et al. 2017) and NRM-F (Zamani et al. 2018), the latter for handling multiple document fields.

More recently, most work has shifted to interaction-focused methods. These methods differ from representation-focused methods because they directly model the patterns in which query terms appear in relevant documents. In other words, they learn positions, frequencies, and patterns (such as n-grams) indicative of relevance. These approaches can be generalized to a two-phase approach (see Fig. 3a). The first phase finds term matches for a query-document pair, for instance using a convolutional neural network (CNN) and result pooling. The second phase combines the results for each query term using, for instance, a dense feedforward network. The combination results in a relevance score for the query-document pair, which is then used for ranking. Generally, these neural models have been shown to be competitive with conventional ranking techniques due to their ability to learn matching patterns (e.g., bigrams, trigrams, skipgrams) from the training data, and combine the results in an effective way.

Several interaction-focused methods have been recently proposed. The K-NRM model (Xiong et al. 2017) performs matching over a query-document similarity matrix.Footnote 4 It applies differentiable kernels of various sizes to the similarity matrix, resulting in term scores. It then combines the scores with a dense layer to produce a final relevance score. Since term matching is completed individually, this is referred to as a unigram interaction-focused method. Like K-NRM, the PACRR model (Hui et al. 2017) conducts matching over a similarity matrix. However, it uses a 2D CNN with several sizes of square kernels (e.g., \(2\times 2\), \(3\times 3\), etc.), allowing it to learn n-gram patterns. The matching signals for each kernel size are max-pooled, and subsequently k-max-pooled over the document dimension. This results in scores for each term that indicate how well each query term matches the document. Then, the results are combined with a dense combination phase. Because the convolutional kernels capture interactions between multiple query terms, PACRR is an n-gram interaction-focused method. Other prominent interaction-focused architectures include:

- DRMM (Guo et al. 2016): A unigram model which represents term occurrences by histograms, and a term gating network for combination.

- MatchPyramid (Pang et al. 2016): An n-gram model which uses hierarchical convolutions and dense combination.

- The local part of Duet (Mitra et al. 2017): A unigram model which performs convolutions over the full document length and dense combination.

- DeepRank (Pang et al. 2017): An n-gram model which first extracts pertinent document regions, then performs a CNN on each of these regions and combines these scores with a term gating network.

- Conv-KNRM (Dai et al. 2018): An n-gram model which first performs 1D convolution, then applies K-NRM kernels.

- Co-PACRR (Hui et al. 2018): An adaptation of PACRR, which performs term disambiguation, cascaded pooling, and shuffling to improve result combination.

In this work, we choose to modify neural ranking architectures because preliminary work has shown them to be more effective than conventional approaches out-of-the-box (Magnusson and Dietz 2017), and they can easily incorporate new techniques and information specific to this task. By testing our approach on both a unigram (K-NRM) and n-gram (PACRR) interaction-focused model, we show that our methods are generally applicable.
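To make the matching phase of a unigram interaction-focused model concrete, the following is a minimal sketch of K-NRM-style kernel pooling over a query-document similarity matrix. It assumes Gaussian (RBF) kernels followed by a log-sum over query terms, as in the published K-NRM formulation; the kernel means, the kernel width, and the function names are illustrative choices, and the dense combination layer is omitted.

```python
import math
from typing import List, Sequence


def kernel_pool(sim_row: Sequence[float], mus: Sequence[float],
                sigma: float = 0.1) -> List[float]:
    """Soft-count the similarities of one query term under each RBF kernel."""
    return [
        sum(math.exp(-((s - mu) ** 2) / (2 * sigma ** 2)) for s in sim_row)
        for mu in mus
    ]


def knrm_features(sim_matrix: Sequence[Sequence[float]], mus: Sequence[float],
                  sigma: float = 0.1) -> List[float]:
    """Sum the log kernel scores over query terms: one feature per kernel."""
    features = [0.0] * len(mus)
    for row in sim_matrix:  # one row per query term
        for k, value in enumerate(kernel_pool(row, mus, sigma)):
            # Clamp before the log so that an empty soft count stays finite.
            features[k] += math.log(max(value, 1e-10))
    return features


# Rows are query terms, columns are document terms (toy cosine similarities).
similarities = [[1.0, 0.0], [0.5, 0.5]]
print(knrm_features(similarities, mus=[1.0, 0.5, 0.0]))
```

Each kernel acts as a soft histogram bucket centered on its mean, so the resulting feature vector summarizes how many document terms match each query term at each similarity level.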

2.3 Wikipedia and knowledge graphs

Since knowledge graphs encode which entities are related to one another, they may be valuable when identifying paragraphs with low-utility facets. There have been many efforts to organize Wikipedia information into a knowledge graph. Prominent efforts include DBPedia (Auer et al. 2007) and Freebase (Bollacker et al. 2008). Researchers have found that query expansion using knowledge bases can improve ad-hoc retrieval (Dalton et al. 2014; Xiong and Callan 2015). Others have investigated how to include knowledge graph features directly in learning-to-rank approaches (Schuhmacher et al. 2015). More recently, Xiong et al. (2017) explored an approach for including entity information in interaction-focused neural rankers by including additional entity-term and entity-entity similarity scores during matching, and an attention mechanism to deal with entity uncertainty. In contrast, in this work we exploit the topic and facet context information provided by CAR queries when modeling entity-entity similarity, and propose an approach for generating a knowledge graph suitable for training these embeddings.

Other efforts have focused on how to train embeddings for entities and relations in knowledge graphs. Prominent approaches include translational embeddings (TransE) (Bordes et al. 2013), translational hyperplane embeddings (TransH) (Wang et al. 2014), and holographic embeddings (HolE) (Nickel et al. 2016). Knowledge graphs have also been employed extensively for general question answering tasks (Yih et al. 2015; Singh 2012). In this work, we observe that entity similarity acts as a signal for answers that are otherwise difficult to match (i.e., when facets are low-utility). We build a knowledge graph from Wikipedia articles using entity mentions and heading labels. We then train embeddings, and use embedding similarities when ranking answers.

3 Method

As mentioned in Sect. 2, complex answer retrieval (CAR) is a new IR task focused on retrieving complex answers to questions that include a topic and facet. Paragraphs from authoritative resources (e.g., Wikipedia) are considered partial answers for these questions. Identifying and overcoming low-utility facets is a central challenge to CAR (MacAvaney et al. 2017b). Here, we propose approaches based on the Wikipedia-focused CAR problem:

- High-utility facets: We find that high-utility facets correspond to headings that relate to specific details of an article’s topic: topical headings. Thus, a given topical heading is unlikely to appear in most articles. However, we predict that terms found in topical headings are more likely to appear in relevant paragraphs because people are less likely to have the existing knowledge to determine meaning from the mentioned entities. Since the title of an article is the article’s topic, it is necessarily topical.

- Low-utility facets: We identify that low-utility facets often correspond to structural headings in Wikipedia. These headings provide coherence across articles by enforcing a predictable document structure. As a result, these headings occur frequently. Furthermore, they often appear as intermediate headings by organizing topical headings below them. Since the terminology is necessarily more general, we predict that terms in structural headings are less likely to appear in relevant paragraphs because readers can figure out the context by related entities. Thus, we propose including additional entity similarity information to accommodate these cases.

Recall that an interaction-focused neural ranker (Fig. 3a) involves two phases: a matching phase that identifies places in the document in which query terms are used (e.g., convolution), and a combination phase in which the scores for each query term are combined to produce a final relevance score (e.g., dense). In the remainder of this section, we present two approaches to inform an arbitrary interaction-focused neural ranker of facet utility (Sects. 3.1, 3.2, Fig. 3b, c), and one approach to include entity similarity signals to inform the model of relevance for low-utility facets using knowledge base embedding similarity scores (Sect. 3.3, Fig. 3d). Finally, we describe our implementation strategy for two concrete neural rankers (PACRR and K-NRM, Sect. 3.4).

3.1 Contextual vectors

Many information retrieval approaches use the simple (yet powerful) term IDF value as a signal for query term importance. Neural models incorporate this signal, too. For instance, DRMM (Guo et al. 2016) and PACRR (Hui et al. 2017) use an IDF vector in the combination phase. We generalize this approach by allowing an arbitrary number of contextual vectors to be included in the model alongside term interaction signals (Fig. 3b). Here, we use contextual vectors to provide an estimation of facet utility, understanding that the models can learn how to use these values when making relevance judgments. We propose two such estimators: heading position (HP) and heading frequency (HF).

3.1.1 Heading position

Recall that CAR queries consist of a title, intermediate headings, and a target heading. When estimating heading utility, the position of the heading in the list intuitively provides a signal of heading utility. For instance, the title is necessarily topical; it is the topic itself. Furthermore, any intermediate headings are likely structural because they provide categorizations for the target heading. Finally, the target heading may be either topical or structural; its position inherently tells the model nothing about whether or not to expect the term to appear. Thus, we use three vectors to encode query term position information, indicating whether each term occurs in the title, an intermediate heading, or the target heading, respectively. An example of these vectors is given in Table 2. We also considered using query depth to capture the hierarchical information (i.e., title, level 1 heading, level 2 heading, etc.). However, the drawback of using depth information is that it is variable by article. For example, while some articles use level 1 headings as purely structural components, others use content-based level 1 headings. By using the heading position approach, all structural intermediate headings are grouped together, allowing the model to more easily learn to always treat them as context for the target heading.

The benefits of including heading position information can extend beyond simply distinguishing whether a term is likely in a topical or structural heading. For instance, the topic of a question may be abbreviated in relevant paragraphs to avoid excessive repetition. By including heading position information in this way, the model can distinguish when certain matching patterns are important to capture.
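The three heading position vectors can be sketched as follows. This is an illustrative sketch rather than the implementation used in the experiments: the function name is hypothetical, and whitespace tokenization is an assumption.

```python
from typing import Dict, List


def heading_position_vectors(headings: List[str]) -> Dict[str, List[int]]:
    """Build three binary vectors with one entry per query term.

    `headings` lists the query headings in order: title first, target last.
    """
    vectors: Dict[str, List[int]] = {"title": [], "intermediate": [], "target": []}
    last = len(headings) - 1
    for index, heading in enumerate(headings):
        if index == 0:
            position = "title"
        elif index == last:
            position = "target"
        else:
            position = "intermediate"
        for _term in heading.split():  # assumption: whitespace tokenization
            for name, vector in vectors.items():
                vector.append(1 if name == position else 0)
    return vectors


vectors = heading_position_vectors(["Cheese", "Nutrition and health", "Pasteurization"])
for name, vector in vectors.items():
    print(name, vector)
```

Each vector has the same length as the concatenated query, so it can be placed alongside the per-term matching scores in the same way as an IDF vector.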

3.1.2 Heading frequency

Another possible estimator of heading utility is the frequency with which a heading appears in a sufficiently representative dataset. For instance, because structural headings such as ‘Nutrition and health’ are general and can accommodate a variety of topics, one would expect to find the heading in many food-related articles. Indeed, the heading appears in Wikipedia articles such as Cheese, Beef, Raisin, and others. On the other hand, one would expect topical headings to use less general language, and thus be less likely to appear in other articles. For instance, the heading ‘After the Acts of Union of 1707’ only appears in the article United Kingdom.

To represent this value, we use the document frequency of each heading: the heading frequency. Thus, frequent headings (e.g., ‘History’) have a large heading frequency value, and infrequent headings (e.g., ‘After the Acts of Union of 1707’) have a low value. For heading matching, we require a complete, case-insensitive match of the text as a heading in an article. Thus, ‘Health effects’ and ‘Health Effects’ are considered the same heading (capitalization), but not ‘Health effects’ and ‘Health’ (substring), ‘Health effect’ (difference in pluralization), or ‘Health affects’ (typographical error). To group similarly-frequent headings together, we stratify the heading frequency value when used as a contextual vector in a range of [0, 3], which allows easy incorporation into neural models. Based on tuning conducted in pilot studies, we found effective breakpoints to be the 60th, 90th, and 99th percentiles.Footnote 5 An example of the heading frequency vector is given in Table 2.

When the model encounters the heading frequency value, we expect it to learn to value high-frequency headings less than low-frequency headings (i.e., assign a negative weight). This results in similar behavior to IDF, but with the important distinction that it operates over entire headings, rather than terms. For instance, most terms in ‘After the Acts of Union of 1707’ have a low IDF (i.e., they appear frequently), but as a whole heading, it appears infrequently and is likely important to match.
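The heading frequency estimator can be sketched as below. The exact-match, case-insensitive counting and the 60th, 90th, and 99th percentile breakpoints follow the description above; the nearest-rank percentile definition, the choice to compute percentiles over distinct headings, and all function names are assumptions made for illustration.

```python
from bisect import bisect_left
from collections import Counter
from typing import Dict, Iterable, Sequence


def heading_frequencies(articles: Iterable[Iterable[str]]) -> Counter:
    """Count, for each heading, the number of articles that contain it."""
    counts: Counter = Counter()
    for headings in articles:
        # Complete, case-insensitive match; the set avoids double counting
        # a heading that is repeated within one article.
        counts.update({heading.lower() for heading in headings})
    return counts


def nearest_rank_percentile(sorted_values: Sequence[int], pct: int) -> int:
    """Nearest-rank percentile of an ascending, non-empty sequence."""
    rank = max(1, (pct * len(sorted_values) + 99) // 100)  # integer ceiling
    return sorted_values[rank - 1]


def frequency_bins(counts: Dict[str, int],
                   percentiles: Sequence[int] = (60, 90, 99)) -> Dict[str, int]:
    """Stratify heading frequencies into the range [0, 3]."""
    values = sorted(counts.values())
    cuts = [nearest_rank_percentile(values, p) for p in percentiles]
    # Bin index = number of breakpoints that the frequency exceeds.
    return {heading: bisect_left(cuts, freq) for heading, freq in counts.items()}


articles = [
    ["History", "Nutrition and health"],
    ["history", "Health effects"],
    ["HISTORY", "Health Effects", "Health"],
]
counts = heading_frequencies(articles)
print(counts["history"], counts["health effects"], counts["health"])
print(frequency_bins(counts))
```

Because matching is on the complete heading, ‘Health effects’ and ‘Health’ are counted separately here, which mirrors the matching rule stated above.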

Combining contextual vectors The two contextual vectors capture different notions of heading utility. Heading position contextual vectors are able to discriminate otherwise identical headings based on where they appear in the query. This can be valuable in some situations: for instance, ‘Imperialism’ and ‘Finland’ in Table 1 appear as target headings, but they could also appear as the topic of other queries. Heading frequency contextual vectors treat headings with the same text identically, regardless of their position in the query. However, their power comes from informing the model about the nature of the specific heading, and can help identify the utility of target headings (which are otherwise ambiguous), or headings that do not match the typical characteristics in other heading positions. By including both vectors, the model should be able to learn a better sense of heading utility by combining the two notions.

3.2 Heading independence

Although contextual vectors can help inform a trained ranker about which headings are structural and topical, they cannot directly affect which types of interactions are identified and how these interactions are scored because this occurs earlier in the pipeline of the neural model considered (matching phase). We hypothesize that heading utility can actually affect which signals are important. For instance, a low-utility structural heading might be more tolerant to weak term similarities (i.e., ‘colonial’ or ‘ancient’ might be acceptable matches for ‘History’), or a high-utility topical heading might have stricter requirements for maintaining the order of terms. Thus, we propose a general approach to modify a neural ranking architecture to adjust matching based on heading utility: heading independence.

Heading independence involves splitting the matching phase of a neural ranker into multiple segments, each responsible for processing a segment of the query. Here, we split the query by heading position: title, intermediate, and target headings (see Fig. 3c). The processing of each heading position is independent of the others, so a different set of parameters can be learned for each component. The results of the matching layers are then concatenated and sent to the combination layer of the unmodified model (e.g., a dense layer) for the calculation of the final relevance score.

This approach makes intuitive sense because one would expect the title to interact differently in the document than a structural or topical heading. For instance, abbreviated versions of the topic often are used to improve readability. Furthermore, this approach enforces alignment of headings during combination (see Fig. 4). When all query terms are simply concatenated, the alignment of each query position will change among queries due to differences in query length. Thus, the model will need to learn and accommodate multiple tendencies in a wider variety of positions of the query, rather than in just certain areas.
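The idea of heading independence, including the alignment it enforces, can be sketched with a toy matching phase. Everything here is a simplified stand-in: the k-max matcher with a single `scale` value replaces the learned CNN or kernel parameters of a real ranker, and the per-position term budgets in `max_terms` are hypothetical.

```python
from typing import Callable, Dict, List

Matrix = List[List[float]]  # one row of document similarities per query term
Matcher = Callable[[Matrix], List[float]]
POSITIONS = ("title", "intermediate", "target")


def make_kmax_matcher(k: int, scale: float) -> Matcher:
    """Toy matcher: per-term k-max pooling; `scale` stands in for learned weights."""
    def match(sim: Matrix) -> List[float]:
        features: List[float] = []
        for row in sim:
            top = sorted(row, reverse=True)[:k]
            top += [0.0] * (k - len(top))  # pad short or empty rows
            features.extend(scale * s for s in top)
        return features
    return match


def heading_independent_match(sim: Matrix, positions: List[str],
                              matchers: Dict[str, Matcher],
                              max_terms: Dict[str, int]) -> List[float]:
    """Match each heading position separately, then concatenate the results."""
    features: List[float] = []
    for name in POSITIONS:
        rows = [row for row, pos in zip(sim, positions) if pos == name]
        rows = rows[: max_terms[name]]
        # Pad with empty rows so each position always fills the same slots.
        rows += [[] for _ in range(max_terms[name] - len(rows))]
        features.extend(matchers[name](rows))
    return features


matchers = {
    "title": make_kmax_matcher(1, 1.0),
    "intermediate": make_kmax_matcher(1, 0.5),
    "target": make_kmax_matcher(1, 2.0),
}
budget = {"title": 1, "intermediate": 2, "target": 1}
print(heading_independent_match(
    [[0.9, 0.1], [0.2, 0.3], [0.8, 0.4]],
    ["title", "intermediate", "target"], matchers, budget))
print(heading_independent_match(
    [[0.9], [0.8]], ["title", "target"], matchers, budget))
```

In both calls the target heading's score lands in the last slot, regardless of whether the query has intermediate headings, which is the alignment property discussed above.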

3.3 Knowledge graph

Although interaction-focused neural rankers often employ word embedding similarities, word embeddings do not always capture similarities within contexts. This results in individual terms being close to many topically-similar terms (Levy and Goldberg 2014). Within the context of CAR, we are only concerned with topics that are similar given a particular context (i.e., the question facet). Furthermore, we know that the most salient contextual information comes from entity mentions. Since article titles also correspond to entities, and headings can be considered the context, we use the following approach employing knowledge graph embeddings.

Example graph construction strategy from a Wikipedia article excerpt. Blue boxes are nodes representing the corresponding entity, and red boxes are edge labels. Adapted from https://en.wikipedia.org/wiki/Cheese

Before we can train these embeddings, we must have a knowledge graph. Since the evaluation topics we use come from Wikipedia, we cannot use a freely-available knowledge graph based on all of Wikipedia data (e.g., Freebase or DBPedia)—this could artificially improve results by including information from the relevant paragraphs themselves.Footnote 6 Instead, we construct our own knowledge graph from the available CAR training data. Our approach is based on the observation made by WikiLinks (Singh et al. 2012): a knowledge graph can be constructed using links in Wikipedia articles between the article’s entity and entities that the page links to. Unlike WikiLinks, we enrich our knowledge graph by labeling the edges using heading information. This contextualizes the relations between entities with respect to CAR, a quality that existing resources do not provide.

More formally, let the knowledge graph be \(G=(E,R)\), where E is the set of entities and R is the set of relations. Let E be the union of the set of all article topic entities and the set of all entities found in links. The set of directed relations R is defined as all pairs of entities (t, m) where t is an article topic, and m is an entity mention in a paragraph relevant to the query. The edges are labeled using the highest-level (non-title) heading of the paragraph. An illustration of this process is shown in Fig. 5. To address the data sparsity problem caused by the large number of low-frequency headings, we only use the lemmatized syntactic head of the target heading. Furthermore, we combine any edge label that doesn’t appear in the \(e_{max}\) most frequent headings into a single edge label. By using this approach, we are able to encode entity relations in a way that maintains relations between high-frequency headings, which are precisely the headings in most need of entity contextual hints.
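The graph construction described above can be sketched as follows. The input format, the function name, and the `<other>` label for merged low-frequency edges are hypothetical; the sketch also assumes that each heading has already been reduced to its lemmatized syntactic head, and that label frequency is counted per paragraph.

```python
from collections import Counter
from typing import Iterable, List, Set, Tuple

Triple = Tuple[str, str, str]  # (topic entity t, edge label, mentioned entity m)
OTHER_LABEL = "<other>"  # hypothetical name for the merged low-frequency label


def build_knowledge_graph(
    paragraphs: Iterable[Tuple[str, str, List[str]]], e_max: int
) -> Tuple[Set[str], Set[Triple]]:
    """Build G = (E, R) from (topic, heading_label, mentions) records."""
    records = list(paragraphs)
    # Assumption: heading frequency is counted once per paragraph record.
    label_counts = Counter(label for _, label, _ in records)
    frequent = {label for label, _ in label_counts.most_common(e_max)}

    entities: Set[str] = set()
    relations: Set[Triple] = set()
    for topic, label, mentions in records:
        edge = label if label in frequent else OTHER_LABEL
        entities.add(topic)
        for mention in mentions:
            entities.add(mention)
            relations.add((topic, edge, mention))  # directed edge t -> m
    return entities, relations


records = [
    ("Cheese", "health", ["Saturated fat", "Heart disease"]),
    ("Beef", "health", ["Saturated fat"]),
    ("Cheese", "curdling", ["Rennet"]),
]
entities, relations = build_knowledge_graph(records, e_max=1)
print(sorted(relations))
```

With `e_max=1`, only the most frequent label (‘health’) keeps its own edge type, and the rarer ‘curdling’ edge falls into the merged label.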

We use the knowledge graph G to construct HolE embeddings for the entities and relations. HolE embeddings have been shown to be effective at entity link prediction by capturing rich interactions (Nickel et al. 2016). HolE produces a collection of entity embeddings and relation embeddings that provide similarity via circular correlation. First, we collect all entity mentions in the paragraph being ranked. We use entities from links that appear in Wikipedia paragraphs that target other articles. However, because Wikipedia guidelines suggest only linking an entity on its first occurrence in an article,Footnote 7 we also explore using an entity extractor to find entity mentions (DBPedia Spotlight; Daiber et al. 2013). For each entity mention, we calculate the entity similarity score, given the current query topic entity and the current heading label. We include the top \(n_{entscores}\) from the paragraph during the combination phase of the model (see Fig. 3d). This is similar to how some models (e.g., PACRR) perform k-max pooling of query term results. This approach differs from contextual vectors because these signals refer to the entire query-document combination, and not specific query terms.
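The entity scoring step can be sketched as below, assuming the standard HolE scoring function, i.e. a sigmoid over the dot product of the relation embedding with the circular correlation of the two entity embeddings. The embeddings shown are toy values rather than trained ones, the function names are hypothetical, and padding with zeros when a paragraph has fewer than \(n_{entscores}\) mentions is an assumption.

```python
import math
from typing import List, Sequence


def circular_correlation(a: Sequence[float], b: Sequence[float]) -> List[float]:
    """[a * b]_k = sum_i a_i * b_{(i + k) mod d} (direct O(d^2) form)."""
    d = len(a)
    return [sum(a[i] * b[(i + k) % d] for i in range(d)) for k in range(d)]


def hole_score(topic: Sequence[float], relation: Sequence[float],
               mention: Sequence[float]) -> float:
    """Sigmoid of the relation embedding dotted with (topic correlated with mention)."""
    correlation = circular_correlation(topic, mention)
    x = sum(r * c for r, c in zip(relation, correlation))
    return 1.0 / (1.0 + math.exp(-x))


def top_entity_scores(topic: Sequence[float], relation: Sequence[float],
                      mentions: Sequence[Sequence[float]],
                      n_entscores: int) -> List[float]:
    """Keep the n highest mention scores, analogous to k-max pooling."""
    scores = sorted((hole_score(topic, relation, m) for m in mentions), reverse=True)
    scores = scores[:n_entscores]
    # Assumption: pad with zeros when the paragraph has too few mentions.
    return scores + [0.0] * (n_entscores - len(scores))


topic = [1.0, 0.0]      # toy embedding of the query topic entity
heading = [1.0, 0.0]    # toy embedding of the heading (edge) label
mentions = [[0.0, 1.0], [1.0, 0.0]]
print(top_entity_scores(topic, heading, mentions, n_entscores=3))
```

The fixed-length output can be concatenated with the matching scores before the combination phase, since it describes the whole query-paragraph pair rather than any single query term.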

3.4 Implementation details

We apply our methods for altering the generalized interaction-focused neural ranking model to the PACRR (Hui et al. 2017) and K-NRM (Xiong et al. 2017) models. Both of these models are described in Sect. 2.2, and have been demonstrated to be strong approaches for ad-hoc retrieval.

For PACRR, we include contextual vectors after the query term pooling. The vectors are simply concatenated alongside the query term matching scores. For heading independence, we split out the work up through the query term pooling. That is, we perform the CNN and pooling independently by heading position. We add an additional dense layer here to further consolidate heading position information before the final combination phase. We include knowledge graph embedding scores in the combination phase, alongside the matching scores and contextual vectors.

For K-NRM, we include contextual vectors alongside the kernel scores before combination. We split out kernel matching by heading position to achieve heading independence. Knowledge graph embedding scores are concatenated to the matching scores prior to combination.

4 Experiment

In this section, we describe our primary experiment using the approaches described in Sect. 3 and present our results using the CAR dataset.

4.1 Experimental setup

4.1.1 Dataset

We use the official TREC CAR dataset (version 1.5) for both training and evaluating our approach (Dietz and Gamari 2017; Dietz et al. 2017). This dataset was constructed from Wikipedia articles that represent topics (that is, it does not include meta or talk pages). The main data source for retrieval consists of all the paragraphs, disassociated from their articles and surrounding content (30M paragraphs, paragraphcorpus). The dataset also includes queries, which were automatically generated from the article structure. For each query, the dataset also provides automatic relevance judgments based on the assumption that paragraphs under a particular heading are all valid answers to the corresponding query.

The dataset is split into subsets suitable for training and testing of systems (summary in Table 3). For a randomly-selected half of Wikipedia, all data are provided for training (split into 5 folds, train.fold0-4). We use folds 1 and 2 for training our models. A subset of approximately 200 articles from train.fold0 is an evaluation dataset provided by Magnusson and Dietz (2017) (test200). Finally, 133 articles from the second half of Wikipedia (the half that was not designated for training) serve as evaluation articles (benchmarkY1test). The TREC CAR 2017 task released manual relevance judgments in addition to the automatic judgments available for the other datasets (Dietz et al. 2017). The manual relevance judgments are graded on a scale from Must be mentioned (3) to Trash (− 2). We provide a summary of the frequency of these labels in Table 4. While the manual relevance judgments are considered gold standard and are capable of matching relevant paragraphs from other articles, they only cover a subset of queries (702 of the 2125 queries).

4.1.2 Knowledge graph embeddings

We first generate a knowledge graph using the method described in Sect. 3.3. We use the entire train.fold0-4 dataset to create as extensive a graph as possible. We generate two versions of the graph: one using hyperlinks as entity mentions, and one using entity mentions extracted using the automatic entity extractor DBPedia Spotlight (Daiber et al. 2013) with a confidence setting of 0.5. Although this tool is less accurate than manually-created links, it captures entities that are not linked (e.g., the Wikipedia guidelines suggest only linking the first mention of an entity in an article, leaving out subsequent mentions from the graph). Edge labels are limited to only the \(e_{max}=1000\) most frequently used labels. We test both versions of the graph when evaluating the performance of using knowledge graph embedding scores. We set the embedding length to 100, and train on the link graph using the pairwise stochastic trainer with random sampling for 5000 training iterations (we found this to be enough iterations for the training to converge). When picking which entity scores to include in the model, we use the top \(n_{entscores}=2\) similarities (this matches the document term pooling parameter k in PACRR).

4.1.3 Training and evaluation

We train and evaluate several variations of the PACRR and K-NRM models to explore the effectiveness of each approach. For both models, we use the model configurations proposed in Hui et al. (2017) and Xiong et al. (2017), with some modifications to better suit the task. We increase the maximum query length to 18 to accommodate the longer queries often found in the CAR dataset, while shortening the maximum document length to 150 to reduce processing time (the CAR paragraphs are usually much shorter than the documents found in ad-hoc retrieval). For the PACRR model, we use an extended set of kernel sizes, up to \(5\times 5\), based on prior work that found larger kernel sizes effective in some situations (MacAvaney et al. 2017a). We made no changes to the Gaussian kernels for K-NRM proposed in Xiong et al. (2017). We train the PACRR model for 80 iterations of 2048 samples on train.fold1-2, and K-NRM for 200 iterations. We found that this was long enough for each of the models to converge.

Automatic relevance judgments serve as a source of relevant documents, and we use the top non-relevant BM25 documents as negative training examples. Negative samples are used for the pairwise loss function used to train PACRR, and BM25 results offer higher-quality negative samples than random paragraphs would (e.g., these examples have matching terms, whereas random paragraphs likely would not).Footnote 8 For each positive sample, we include 6 negative samples. To a point, including more negative samples has been shown to improve the performance of PACRR at the expense of training time (MacAvaney et al. 2017a); we found 6 negative samples to be an effective balance between the two considerations. The training iteration that yields the highest R-Precision value on the validation dataset (test200) is selected for evaluation on the test dataset. We then rerank the top 100 BM25 results for each query in benchmarkY1test, and test using the manual and automatic relevance judgments. For each configuration, we report the 4 official TREC CAR metrics: Mean Average Precision (MAP), R-Precision (R-Prec), Mean Reciprocal Rank (MRR), and normalized Discounted Cumulative Gain (nDCG). We compare the results to an unmodified version of PACRR trained using the same approach, the initial BM25 ranking, and the other top approaches submitted to TREC.
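The model selection step can be illustrated with a short sketch. R-Precision is the precision at rank R, where R is the number of relevant paragraphs for the query. The function names and the dictionary layout (`rankings_by_iteration`, `qrels`) are hypothetical and chosen only for this example; the official evaluation would normally rely on standard tooling.

```python
def r_precision(ranking, relevant):
    """Precision at rank R, where R is the number of relevant paragraphs."""
    r = len(relevant)
    if r == 0:
        return 0.0
    return sum(1 for doc in ranking[:r] if doc in relevant) / r


def mean_r_precision(rankings, qrels):
    """Average R-Precision over all queries in `qrels` (query -> relevant ids)."""
    total = sum(r_precision(rankings.get(q, []), rel) for q, rel in qrels.items())
    return total / len(qrels)


def select_iteration(rankings_by_iteration, qrels):
    """Return the training iteration whose validation rankings score highest."""
    return max(
        rankings_by_iteration,
        key=lambda it: mean_r_precision(rankings_by_iteration[it], qrels),
    )
```

Here `rankings_by_iteration` would map each saved iteration to its rankings on the validation queries, and `qrels` would hold the automatic relevance judgments for those queries.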

4.2 Results

We present the performance of our methods in Table 5. Overall, the results show that our methods perform favorably compared to the unmodified PACRR and K-NRM models, the other top submissions to TREC CAR 2017 (the sequential dependency model, Lin and Lam 2017, and the Siamese attention network, Maldonado et al. 2017), and the BM25 baseline (which our method re-ranks).

Due to the relatively low number of manual relevance judgments per query,Footnote 9 we report manual relevance judgments both including and excluding unjudged paragraphs. When unjudged paragraphs are included, they are assumed to be irrelevant.Footnote 10 When unjudged paragraphs are excluded, we filter them from the ranked lists (i.e., we perform a condensed list evaluation). The condensed list evaluation is a better comparison for methods that were not included in the evaluation pool (Sakai and Kando 2008). Indeed, PACRR with the heading position and heading frequency contextual vectors outperforms all other approaches, and significantly outperforms the unmodified PACRR model in terms of MAP and nDCG. Interestingly, the knowledge base approaches perform significantly worse than the unmodified PACRR in terms of R-Prec when unjudged paragraphs are included. However, when unjudged paragraphs are excluded, they perform significantly better in every case. In fact, the version that uses entity extraction when calculating entity scores performs best overall in terms of MAP and nDCG. This shows that these approaches are effective, and that they likely rank relevant yet unjudged paragraphs highly (which reduces the score when unjudged paragraphs are included).
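The difference between the two manual evaluation settings can be made concrete with a small sketch. It assumes, for illustration only, that a paragraph counts as relevant when its manual grade is at least 1; the function names and the `min_grade` parameter are hypothetical.

```python
def average_precision(ranking, relevant):
    """Average precision of a ranked list against a set of relevant ids."""
    if not relevant:
        return 0.0
    hits = 0
    total = 0.0
    for rank, doc in enumerate(ranking, start=1):
        if doc in relevant:
            hits += 1
            total += hits / rank
    return total / len(relevant)


def condense(ranking, judgments):
    """Condensed list: drop every paragraph that has no manual judgment."""
    return [doc for doc in ranking if doc in judgments]


def ap_both_settings(ranking, judgments, min_grade=1):
    """Return (AP with unjudged treated as non-relevant, AP on condensed list).

    Assumption: grades >= min_grade count as relevant.
    """
    relevant = {doc for doc, grade in judgments.items() if grade >= min_grade}
    return (
        average_precision(ranking, relevant),
        average_precision(condense(ranking, judgments), relevant),
    )
```

A ranking that places unjudged paragraphs above judged relevant ones is penalized in the first setting but not in the second, which is the effect discussed above for the knowledge graph features.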

When considering automatic relevance judgments, the combination of heading independence and the heading frequency contextual vector significantly outperforms unmodified PACRR, and performs best overall in every metric. By R-Prec, this configuration outperforms the next best approach (the sequential dependency model) by 26%. However, when evaluating with manual judgments, this configuration does not significantly outperform unmodified PACRR. Since training is also conducted using automatic relevance judgments, this may be caused by PACRR overfitting to this sense of relevance. Interestingly, when the knowledge graph features are added to this approach, performance drops with automatic judgments, but increases with manual judgments. This suggests that these features are useful for determining human-classified relevance.

Although K-NRM does not outperform PACRR in our experiments, it is worth noting that our approach yields significant improvements for K-NRM compared to the unmodified model. Specifically, contextual vectors yield significant improvements in all three evaluation settings (manual relevance judgments with and without unjudged paragraphs, and automatic relevance judgments). Beyond that, using heading independence significantly improves on the contextual vector results. This indicates that our approaches are generally applicable to interaction-based neural ranking models for CAR.

5 Analysis

In this section, we investigate several questions surrounding the results to gain more insights into the behavior and functionality of the approaches to CAR detailed in Sect. 3.

5.1 Characteristics of heading positions

Our approaches assert that heading positions (i.e., title, intermediate, and target heading) act as a signal for heading utility. In Sect. 4.2 we showed that including the heading position, either as the heading position contextual vector or via heading independence, improves performance beyond the baseline neural architecture. Here, we test the hypothesis that terms found in different heading positions exhibit different behaviors in relevant documents.

We use the term occurrence rate to assist in this analysis. Let the term occurrence rate occ(h) for a given heading h be the probability that any term in the heading appears in a relevant paragraph. More formally:

\[occ(h)=\frac{1}{|rel(h)|}\sum_{p\in rel(h)} I(h\cap p\ne \emptyset )\]

where rel(h) is the set of relevant paragraphs for h, \(h\cap p\) is the set of terms shared by heading h and paragraph p, and I is the indicator function. Although the term occurrence rate only accounts for a single binary term match within relevant documents, this assumption is justified by the fact that headings are usually terse, and the PACRR model only considers the top 2 matches for each query term for ranking purposes (query term pooling). Here, we use the term occurrence rate as a proxy for heading utility.
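The term occurrence rate can be computed as the fraction of relevant paragraphs that contain at least one heading term. The sketch below assumes lowercased whitespace tokenization with no stemming or stopword removal, which may differ from the preprocessing used in the analysis; the function name is hypothetical.

```python
def term_occurrence_rate(heading, relevant_paragraphs):
    """Fraction of relevant paragraphs sharing at least one term with the heading.

    Assumption: terms are lowercased whitespace tokens.
    """
    if not relevant_paragraphs:
        return 0.0
    heading_terms = set(heading.lower().split())
    hits = sum(
        1
        for paragraph in relevant_paragraphs
        if heading_terms & set(paragraph.lower().split())
    )
    return hits / len(relevant_paragraphs)
```

For a heading with only three relevant paragraphs, the only attainable values are 0, 1/3, 2/3, and 1, which is why headings with few paragraphs produce a multi-modal distribution.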

In Fig. 6, we plot a kernel density estimation of term occurrence distributions for each heading position. We use all topics from the training dataset, and calculate term occurrence rates using automatic relevance judgments. The figure shows that target headings are much more likely to appear in relevant documents than titles and intermediate headings, with a much higher density at the term occurrence rate of 1. This matches our prediction that target headings are more likely to be topical, and therefore appear in relevant documents. Furthermore, the distributions of intermediate and title headings are roughly opposite each other, with titles more likely to occur than intermediate headings. Note that, due to the hierarchical nature of CAR queries, intermediate headings also appear as target headings in other queries for the same topic. This contributes to the high frequency of target headings with a term occurrence rate of 0. Furthermore, many target headings are only used in a single article (i.e., only appear once in the Wikipedia collection), with only a handful of paragraphs associated with them. This explains the multi-modal distribution seen for target headings; the small denominators result in local maxima near \(\frac{1}{3}\), \(\frac{1}{2}\), and \(\frac{2}{3}\).

5.2 Characteristics of high-frequency headings

In Sect. 4.2, we showed that including the heading frequency contextual vector improved retrieval performance. We predicted that the model is able to use this information to predict which query terms are likely to appear in relevant documents. To investigate this behavior, we look at whether heading frequency is correlated with term occurrence rates, and whether there is a performance gap between low- and high-frequency headings.

Fig. 7 Term occurrence rate plotted by heading frequency. Heading frequency is grouped and averaged by 100 for clarity. The area of each point is proportional to the number of heading instances used to calculate the term occurrence rate. One very high frequency heading (History) was omitted for readability (heading frequency: 15,220, term occurrence rate: 0.035). The trend shows that low-frequency headings are more likely to appear verbatim in relevant paragraphs than high-frequency headings.

In Fig. 7, we plot term occurrence rates by heading frequency. The figure only includes headings that occur at least twice in train.fold0. We remove the extremely frequent heading ‘History’ and bin frequencies by 100 to improve readability. The area of each point is proportional to the number of heading instances in the bin. It is clear from the figure that the less frequent a heading is, the higher its term occurrence rate. In general, headings with a frequency less than 1000 have an average term occurrence rate 10–20 points higher than higher-frequency headings. This indicates that heading frequency is a valuable signal for estimating the utility of a heading during ranking.
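The binning behind such a plot can be sketched as follows. This is only an illustration: the function name is hypothetical, the per-bin average is assumed to be an unweighted mean over headings, and the number of heading instances in a bin is assumed to be the summed frequency of its headings.

```python
from collections import defaultdict


def bin_by_frequency(stats, bin_width=100, min_frequency=2):
    """Group (heading_frequency, occurrence_rate) pairs into frequency bins.

    Returns {bin_start: (mean_rate, instances)}.
    Assumptions: unweighted mean per bin; `instances` is the summed frequency.
    """
    rates = defaultdict(list)
    instances = defaultdict(int)
    for frequency, rate in stats:
        if frequency < min_frequency:
            continue  # keep only headings that occur at least min_frequency times
        start = (frequency // bin_width) * bin_width
        rates[start].append(rate)
        instances[start] += frequency
    return {
        start: (sum(values) / len(values), instances[start])
        for start, values in sorted(rates.items())
    }
```

Each returned entry corresponds to one plotted point, with the second value of the tuple controlling the point area.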

5.3 Difficulty matching high-frequency headings

Given the knowledge that high-frequency headings generally exhibit a low term occurrence rate, we are interested in measuring whether there exists a discrepancy between the performance at different heading frequencies. Table 6 shows a MAP (Footnote 11) performance breakdown on the test dataset (manual relevance judgments, unjudged excluded) stratified by the heading frequency of the target heading found in the training dataset. The number of queries in each stratum varies because the strata were selected from equally-spaced breakpoints in the training data, and the test set does not represent a uniform selection of Wikipedia articles. The most frequent headings (80–100%, e.g., ‘History’ and ‘Early Life’) exhibit the worst performance among all strata. This matches our intuition that these low-utility headings are difficult to match. The low-frequency headings exhibit the highest performance by all models. Notice that the gains compared to the unmodified PACRR model are higher for high-frequency headings than for low-frequency headings, despite the worse performance overall. This validates our claims that low-utility headings are harder to match than high-utility headings, and that our approaches are able to improve performance for these queries.

5.4 Qualitative query analysis

To gain a more thorough understanding of how our methods enhance ranking techniques for CAR, we perform qualitative analysis. Table 7 gives example answers and rankings by various configurations. Query 1 in the table (Instant Coffee » History) saw an improvement from a MAP of 0.4468 (PACRR no modification) to 0.6987 (PACRR + HI + CV + KG (extr.)) when considering manually-judged answers. Both the unmodified model and the model with heading independence ranked the highly-relevant paragraph low (rank 10 and 9), and a non-relevant answer high (rank 1), while the knowledge graph approach did the reverse (rank 1 and 18, respectively). This is likely due to the difference in entities encountered in the answer. The relevant answer has historic and political entities such as World War II and the United Kingdom, while the non-relevant answer includes artists such as Jenifer Papararo.

We notice that in other situations, however, the knowledge base approach fails. In Query 2 of Table 7 (Taste » Basic tastes » Sweetness), the unmodified PACRR model performs better than all variations we explore, with a MAP of 0.7444, compared to 0.4221 for the knowledge graph approach. In this case, the top-level heading is not common enough to be included in the knowledge graph embeddings, so it was collapsed into the remainder class. This explains why it ranked the non-relevant answer listing other tastes high, while pushing down the relevant answer that includes more specific language pertaining to the reception of sweetness. This demonstrates the need in future work to better address low-frequency headings.

6 Conclusion

In this work, we proposed that a central challenge to CAR is the identification and mitigation of low-utility question facets. We introduced two techniques for identifying low-utility facets: contextual vectors based on the hierarchical structure of CAR queries and corpus-wide facet usage information; and incorporation of independent matching pipelines for separate query components. We then introduced one approach to mitigate low-utility facets, which involves building a knowledge graph from CAR training data, and using entity similarity scores during query processing. We applied these approaches to two leading neural ranking methods, and evaluated using the TREC CAR dataset. We found that our approaches improved performance compared to the unmodified rankers, and outperformed the next best approach (SDM) by up to 26% (PACRR model with heading independence and heading frequency, evaluated with automatic relevance judgments), yielding state-of-the-art results. We then performed an analysis that verified that our indicators of facet utility were valuable, and that our approaches improve the performance on low-utility facets.

However, challenges still remain for this task. While we show that heading frequency and position can be used to assist in the modeling of heading utility, the absolute utility is low (as measured by term occurrence rate). This means that more attention should be given to alternative matching techniques, such as our proposal to use knowledge graph embedding scores during result combination. Additionally, while our approaches narrow the performance gap between high- and low-frequency facets, a gap still remains. This gap is particularly worrisome because high-frequency facets are straightforward candidates for automatic article scaffolding—an ultimate goal of CAR. Despite these open problems, we expect our observations to shape the directions taken for CAR in the future.

Notes

Note that CAR queries are not necessarily complex. A question as simple as ‘Is cheese healthy?’ requires a complex answer: a detailed and nuanced description of positive and negative health effects of cheese consumption is required to satisfy the information need. In contrast, a question such as ‘How much Mozzarella cheese do I need to eat to satisfy my daily requirement of calcium?’ is a complex question with a simple factoid answer because it involves advanced reasoning that goes beyond what is typically captured by a knowledge graph.

E.g., templates, talk pages, portals, lists, references, and pages representing people, organizations, music, books, and others are discarded (Dietz et al. 2017).

We use the symbol » to separate heading components of a query.

For query Q and document D, the similarity matrix S of size \(|Q|\times |D|\) is computed by calculating the similarity (e.g., cosine) between the representations (e.g., word embeddings) of each query term and document term, i.e., \(S[i,j]=sim(Q_i,D_j)\). A similarity matrix allows for query terms to be soft-matched to document terms.

For reference, the 60th percentile is approximately the cutoff for headings that only appear a few times such as Red Hot Chili Peppers; the 90th percentile is approximately the cutoff of moderately frequent headings such as Finland; and the 99th percentile is approximately the cutoff of frequent headings such as Family and personal life.

We also cannot remove the evaluation topics when training the graph because that defeats the purpose; without target entities encoded in the embeddings, there is no way to find similar entities when ranking.

We acknowledge that some paragraphs included as negative training samples, if inspected manually, would be found relevant due to the limitations of the automatic relevance judgments. We consider this acceptable, given the high occurrence of non-relevant documents in the manual relevance judgments, and the comparatively poor performance of BM25 at CAR.

On average, there are 42 manual relevance judgments for the 702 queries that were manually assessed.

Unjudged evaluation is unavailable for the sequential dependency model and Siamese attention network.

We observed similar behavior for MAP, R-Prec, MRR, and nDCG, so we only report MAP here.

References

Auer, S., Bizer, C., Kobilarov, G., Lehmann, J., Cyganiak, R., & Ives, Z. (2007). DBpedia: A nucleus for a web of open data. In: The semantic web (pp. 722–735).

Bollacker, K. D., Evans, C., Paritosh, P., Sturge, T., & Taylor, J. (2008). Freebase: A collaboratively created graph database for structuring human knowledge. In: Proceedings of the 2008 ACM SIGMOD international conference on management of data (pp. 1247–1250).

Bordes, A., Usunier, N., García-Durán, A., Weston, J., & Yakhnenko, O. (2013). Translating embeddings for modeling multi-relational data. In: Advances in neural information processing systems (pp. 2787–2795).

Dai, Z., Xiong, C., Callan, J. P., & Liu, Z. (2018). Convolutional neural networks for soft-matching n-grams in ad-hoc search. In: Proceedings of the eleventh ACM international conference on web search and data mining (pp. 126–134).

Daiber, J., Jakob, M., Hokamp, C., & Mendes, P. N. (2013). Improving efficiency and accuracy in multilingual entity extraction. In: Proceedings of the 9th international conference on semantic systems (pp. 121–124).

Dalton, J., Dietz, L., & Allan, J. (2014). Entity query feature expansion using knowledge base links. In: Proceedings of the 37th international ACM SIGIR conference on research & development in information retrieval, ACM (pp. 365–374).

Dietz, L., & Gamari, B. (2017). TREC CAR: A data set for complex answer retrieval (version 1.5). http://trec-car.cs.unh.edu. Accessed 2 May 2018.

Dietz, L., Verma, M., Radlinski, F., & Craswell, N. (2017). TREC complex answer retrieval overview. In: Proceedings of TREC.

Guo, J., Fan, Y., Ai, Q., & Croft, W. B. (2016). A deep relevance matching model for Ad-hoc retrieval. In: Proceedings of the 25th ACM international on conference on information and knowledge management (pp. 55–64).

Heilman, J. M., & West, A. G. (2015). Wikipedia and medicine: quantifying readership, editors, and the significance of natural language. Journal of medical Internet research, 17(3), e62.

Huang, P.-S., He, X., Gao, J., Deng, L., Acero, A., & Heck, L. (2013). Learning deep structured semantic models for web search using clickthrough data. In: Proceedings of the 22nd ACM international conference on conference on information & knowledge management (pp. 2333–2338).

Hui, K., Yates, A., Berberich, K., & de Melo, G. (2017). PACRR: A position-aware neural IR model for relevance matching. In: Proceedings of the 2017 conference on empirical methods in natural language processing (pp. 1049–1058).

Hui, K., Yates, A., Berberich, K., & de Melo, G. (2018). Co-PACRR: A context-aware neural IR model for ad-hoc retrieval. In: Proceedings of the eleventh ACM international conference on web search and data mining (pp. 279–287).

Levy, O., & Goldberg, Y. (2014). Dependency-based word embeddings. In: Proceedings of the 52nd annual meeting of the association for computational linguistics (Volume 2: Short Papers) (vol 2, pp. 302–308).

Lin, X., & Lam, W. (2017). CUIS team for TREC 2017 CAR track. In: Proceedings of TREC.

MacAvaney, S., Hui, K., & Yates, A. (2017a). An approach for weakly-supervised deep information retrieval. In: SIGIR 2017 workshop on neural information retrieval.

MacAvaney, S., Yates, A., & Hui, K. (2017b). Contextualized PACRR for complex answer retrieval. In: Proceedings of TREC.

MacAvaney, S., Yates, A., Cohan, A., Soldaini, L., Hui, K., Goharian, N., & Frieder, O. (2018). Characterizing question facets for complex answer retrieval. In: Proceedings of the 41st international ACM SIGIR conference on research & development in information retrieval (pp. 1205–1208).

Maldonado, R., Taylor, S., & Harabagiu, S. M. (2017). UTD HLTRI at TREC 2017: Complex answer retrieval track. In: Proceedings of TREC.

Metzler, D., & Croft, W. B. (2005). A markov random field model for term dependencies. In: Proceedings of the 28th annual international ACM SIGIR conference on research and development in information retrieval (pp. 472–479).

Mitra, B., Diaz, F., & Craswell, N. (2017). Learning to match using local and distributed representations of text for web search. In: Proceedings of the 26th International Conference on World Wide Web (pp. 1291–1299).

Nanni, F., Mitra, B., Magnusson, M., & Dietz, L. (2017). Benchmark for complex answer retrieval. In: Proceedings of the ACM SIGIR international conference on theory of information retrieval (pp. 293–296).

Nickel, M., Rosasco, L., & Poggio, T. A. (2016). Holographic embeddings of knowledge graphs. In: Proceedings of the thirtieth AAAI conference on artificial intelligence (pp. 1955–1961).

Nogueira, R., & Cho, K. (2017). Task-oriented query reformulation with reinforcement learning. In: Proceedings of the 2017 conference on empirical methods in natural language processing (pp. 574–583).

Nogueira, R., Cho, K., Patel, U., & Chabot, V. (2017). New york university submission to TREC-CAR 2017. In: Proceedings of TREC.

Pang, L., Lan, Y., Guo, J., Xu, J., & Cheng, X. (2016). A study of MatchPyramid models on ad-hoc retrieval. In: NeuIR at SIGIR.

Pang, L., Lan, Y., Guo, J., Xu, J., Xu, J., & Cheng, X. (2017). DeepRank: A new deep architecture for relevance ranking in information retrieval. In: Proceedings of the 2017 ACM on conference on information and knowledge management (pp. 257–266).

Sakai, T., & Kando, N. (2008). On information retrieval metrics designed for evaluation with incomplete relevance assessments. Information Retrieval, 11(5), 447–470.

Schuhmacher, M., Dietz, L., & Ponzetto, S. P. (2015). Ranking entities for web queries through text and knowledge. In: Proceedings of the 24th ACM international on conference on information and knowledge management (pp. 1461–1470).

Singh, A. (2012). Entity based Q&A retrieval. In: Proceedings of the 2012 Joint conference on empirical methods in natural language processing and computational natural language learning (pp. 1266–1277).

Singh, S., Subramanya, A., Pereira, F., & McCallum, A. (2012). Wikilinks: A large-scale cross-document coreference corpus labeled via links to wikipedia. University of Massachusetts, Amherst, Technical Report UM-CS-2012 15.

Wang, Z., Zhang, J., Feng, J., & Chen, Z. (2014). Knowledge graph embedding by translating on hyperplanes. In: Twenty-Eighth AAAI conference on artificial intelligence.

Xiong, C., & Callan, J. (2015). Query expansion with Freebase. In: Proceedings of the 2015 international conference on the theory of information retrieval, ACM (pp. 111–120).

Xiong, C., Callan, J. P., & Liu, T. -Y. (2017). Word-entity duet representations for document ranking. In: Proceedings of the 40th International ACM SIGIR conference on research and development in information retrieval.

Xiong, C., Dai, Z., Callan, J., Liu, Z., & Power, R. (2017). End-to-end neural ad-hoc ranking with kernel pooling. In: Proceedings of the 40th International ACM SIGIR conference on research and development in information retrieval, ACM (pp. 55–64).

Yih, W.-t., Chang, M.-W., He, X., & Gao, J. (2015). Semantic parsing via staged query graph generation: Question answering with knowledge base. In: Proceedings of the 53rd annual meeting of the association for computational linguistics (pp. 1321–1331).

Zamani, H., Mitra, B., Song, X., Craswell, N., & Tiwary, S. (2018). Neural ranking models with multiple document fields. In: Proceedings of the eleventh ACM international conference on web search and data mining (pp. 700–708).

Additional information

This is an extended version of “Characterizing Question Facets for Complex Answer Retrieval” (SIGIR 2018; MacAvaney et al. 2018). Extensions include the use of knowledge graphs to inform the model, and an extensive analysis.

Cite this article

MacAvaney, S., Yates, A., Cohan, A. et al. Overcoming low-utility facets for complex answer retrieval. Inf Retrieval J 22, 395–418 (2019). https://doi.org/10.1007/s10791-018-9343-0

DOI: https://doi.org/10.1007/s10791-018-9343-0