Abstract

Automated formal verification is often based on the Counterexample-Guided Abstraction Refinement (CEGAR) approach. Many variants of CEGAR have been developed over the years as different problem domains usually require different strategies for efficient verification. This has lead to generic and configurable CEGAR frameworks, which can incorporate various algorithms. In our paper we propose six novel improvements to different aspects of the CEGAR approach, including both abstraction and refinement. We implement our new contributions in the Theta framework allowing us to compare them with state-of-the-art algorithms. We conduct an experiment on a diverse set of models to address research questions related to the effectiveness and efficiency of our new strategies. Results show that our new contributions perform well in general. Moreover, we highlight certain cases where performance could not be increased or where a remarkable improvement is achieved.

Similar content being viewed by others

1 Introduction

Counterexample-Guided Abstraction Refinement (CEGAR) [31] is a widely used technique for the automated formal verification of different systems, including both software [15, 39, 53, 54, 56] and hardware [31, 34]. CEGAR works by iteratively constructing and refining abstractions until a proper precision is reached. It starts with computing an abstraction of the system with respect to some abstract domain and a given initial—usually coarse —precision. The abstraction over-approximates [32] the possible behaviors (i.e., the state space) of the original system. Thus, if no erroneous behavior can be found in the abstract state space then the original system is also safe. However, abstract counterexamples corresponding to erroneous behaviors must be checked whether they are reproducible (feasible) in the original system. A feasible counterexample indicates that the original system is unsafe. Otherwise, the counterexample is spurious and it is excluded in the next iteration by adjusting the precision to build a finer abstraction. The algorithm iterates between abstraction and refinement until the abstract system is proved safe, or a feasible counterexample is found.

CEGAR is a generic approach with many variants developed over the past two decades, improving both applicability and performance. There are different abstract domains, including predicates [41] and explicit values [15] and various refinement strategies, including ones based on interpolation [55, 63]. However, there is usually no single best variant: different algorithms are suitable for different verification tasks [43]. Therefore, generic frameworks are also emerging, which provide configurability [14], combinations of different strategies for abstraction and refinement [2, 45], and support for various kind of models [49, 60].

Contributions In our paper, we make the following novel contributions. (1) We propose six new strategies improving various aspects of the CEGAR algorithm, including abstraction and refinement as well. (2) We conduct an experimental evaluation on models from diverse domains, including both software and hardware.

Algorithmic improvements We propose various novel improvements and variations of existing strategies to different aspects of the CEGAR approach.

-

We generalize explicit-value abstraction to be able to enumerate a predefined, configurable number of successor states, improving its precision while still avoiding state space explosion.

-

We adapted a search strategy to the context of CEGAR that estimates the distance from the erroneous state in the abstract state space based on the structure of the original system.

-

We study different splitting techniques applied to complex predicates in order to generalize the result of refinement.

-

We introduce an interpolation strategy based on backward reachability, which traces back the reason of infeasibility to the earliest point.

-

We describe an approach for refinement based on multiple counterexamples, which provides better quality refinement since more information is available.

-

We present combinations of different interpolation strategies that enable selection from different refinements.

We implement our new contributions in Theta [60], an open source, generic and configurable framework. In this paper, we illustrate our new approaches on programs (control flow automata), but most of them can be generalized to other formalisms supported in Theta, such as hardware (transition systems). Theta already includes many of the state-of-the-art algorithms, which allows us to use them as a baseline to evaluate our new contributions.

Experimental evaluation We conduct an experimental evaluation on roughly 800 input models from diverse sources, including the Competition on Software Verification [9], the Hardware Model Checking Competition [25] and industrial PLC software from CERN [40]. The advantage of using a diverse set of models is that we can identify the most suitable application areas. Furthermore, we compare lower lever parameters of CEGAR as opposed to most experiments in the literature [11, 19, 36, 37], where different algorithms or tools are compared. We formulate and address a research question related to the effectiveness and efficiency of each of our contributions.

The results show that our new improvements perform well in general compared to the state of the art. In some cases the differences are subtle, but there are certain subcategories of the models for which a new algorithm yields a remarkable improvement. We also show negative results, i.e., models where a new algorithm is less effective—we believe that such results are also important: in a different domain these algorithms can still be successful.

Outline of the paper The rest of the paper is organized as follows. We first introduce the preliminaries of our work in Sect. 2. Then we describe our new contributions in Sect. 3 and evaluate them in Sect. 4. Finally, we present related work in Sect. 5 and conclude our paper in Sect. 6.

2 Background

This section introduces the preliminaries of our paper. First, we present control flow automata as the modeling formalism used in our work (Sect. 2.1). Then we describe the abstraction and CEGAR-based framework (Sect. 2.2), in which we formalize our new algorithms (Sect. 3).

We use the following notations from first-order logic (FOL) throughout our paper. Given a set of variables \(V = \{v_1, v_2, \ldots \}\) let \(V' = \{v_1', v_2', \ldots \}\) and \(V^{\langle i \rangle } = \{v_1^{\langle i \rangle }, v_2^{\langle i \rangle } \ldots \}\) represent the primed and indexed version of the variables. We use \(V'\) to refer to successor states and \(V^{\langle i \rangle }\) for paths. Given an expression \(\varphi \) over \(V \cup V'\), let \(\varphi ^{\langle i \rangle }\) denote the indexed expression obtained by replacing V and \(V'\) with \(V^{\langle i \rangle }\) and \(V^{\langle i+1 \rangle }\) respectively in \(\varphi \). For example, \((x< y)^{\langle 2 \rangle } \equiv x^{\langle 2 \rangle } < y^{\langle 2 \rangle }\) and \((x' =x + 1)^{\langle 2 \rangle } \equiv x^{\langle 3 \rangle } =x^{\langle 2 \rangle } + 1\). Given an expression \(\varphi \) let \(\mathsf {var}(\varphi )\) denote the set of variables appearing in \(\varphi \), e.g., \(\mathsf {var}(x < y + 2) = \{x, y\}\).

2.1 Control Flow Automata

In our work we describe programs using control flow automata (CFA) [13], a formalism based on FOL variables and expressions.

Definition 1

(Control flow automata) A control flow automaton is a tuple \(\textit{CFA}= (V, L, l_0, E)\) where

-

\(V = \{v_1, v_2, \ldots , v_n\}\) is a set of variables with domains \(D_{v_1}, D_{v_2}, \ldots , D_{v_n}\),

-

\(L\) is a set of program locations modeling the program counter,

-

\(l_0 \in L\) is the initial program location,

-

\(E\subseteq L\times \textit{Ops}\times L\) is a set of directed edges representing the operations that are executed when control flows from the source location to the target.

Operations \(\textit{op}\in \textit{Ops}\) are either assignments or assumptions over the variables of the CFA. Assignments have the form \(v {:}{=} \varphi \), where \(v \in V\), \(\varphi \) is an expression of type \(D_v\) and \(\mathsf {var}(\varphi ) \subseteq V\). Assumptions have the form \(\left[ \psi \right] \), where \(\psi \) is a predicate with \(\mathsf {var}(\psi ) \subseteq V\). An operation \(\textit{op}\in \textit{Ops}\) can also be regarded as a transition formula \(\mathsf {tran}(\textit{op})\) over \(V \cup V'\) defining its semantics. For an assignment operation, the transition formula is defined as \(\mathsf {tran}(v {:}{=} \varphi ) \equiv v' =\varphi \wedge \bigwedge _{v_i \in V {\setminus } \{v\}} v_i' =v_i\) and for an assume operation it is \(\mathsf {tran}(\left[ \psi \right] ) \equiv \psi \wedge \bigwedge _{v \in V} v' =v\). In other words, assignments change a single variable and assumptions check a condition.Footnote 1 By abusing the notation, we allow operations \(\textit{op}\in \textit{Ops}\) to appear as FOL expressions by automatically replacing them with their semantics, i.e., \(\mathsf {tran}(\textit{op})\).

A concrete data state\(c \in D_{v_1} \times \ldots \times D_{v_n}\) is a (many sorted) interpretation that assigns a value \(c(v) = d \in D_v\) to each variable \(v \in V\) of its domain \(D_v\). States with a prime (\(c'\)) or an index (\(c^{\langle i \rangle }\)) assign values to \(V'\) or \(V^{\langle i \rangle }\) respectively. A concrete state\((l, c)\) is a pair of a location \(l\in L\) and a concrete data state. The set of initial states is \(\{(l, c) \, | \, l= l_0\}\) and a transition exists between states \((l, c)\) and \((l', c')\) if an edge \((l, \textit{op}, l') \in E\) exists with \((c, c') \models \textit{op}\).

A concrete path is a finite, alternating sequence of concrete states and operations \(\sigma = ((l_1, c_1), \textit{op}_1, \ldots , \textit{op}_{n-1}, (l_n, c_n))\) if \((l_i, \textit{op}_i, l_{i+1}) \in E\) for every \(1 \le i < n\) and \((c_1^{\langle 1 \rangle }, c_2^{\langle 2 \rangle }, \ldots , c_n^{\langle n \rangle }) \models \bigwedge _{1 \le i < n} \textit{op}_i^{\langle i \rangle }\), i.e., there is a sequence of edges starting from the initial location and the interpretations satisfy the semantics of the operations. A concrete state \((l, c)\) is reachable if a path \(\sigma = ((l_1, c_1), \textit{op}_1, \ldots , \textit{op}_{n-1},\)\((l_n, c_n))\) exists with \(l= l_n\) and \(c = c_n\) for some n.

A verification task is a pair \((\textit{CFA}, l_E)\) of a CFA and a distinguished error location \(l_E \in L\). A verification task is safe if \((l_E, c)\) is not reachable for any c, otherwise it is unsafe.

Example

A simple program and its corresponding CFA can be seen in Fig. 1. Basic elements of structured programming (sequence, selection, repetition) are represented by the structure of the automaton. The assertion in line 8 is mapped as a selection at location \(l_7\). If the assertion holds, the program ends normally in the final location \(l_F\).Footnote 2 Otherwise, failure is indicated with the error location \(l_E\).

2.2 Counterexample-Guided Abstraction Refinement (CEGAR)

Counterexample-Guided Abstraction Refinement (CEGAR) [31] is a verification algorithm that automatically constructs and refines abstractions for a given model (Fig. 2). First, an abstraction algorithm computes an abstract reachability graph (ARG) [12] over some abstract domain with respect to a given initial precision. The ARG is an over-approximation of the original state space, therefore if no abstract state with the error location is reachable then the original model is also safe [32]. However, if an abstract counterexample (a path to an abstract state with the error location) is found, the refinement algorithm checks whether it is feasible in the original model. A feasible counterexample indicates that the original model is unsafe. Otherwise, the counterexample is spurious, the precision is adjusted and the ARG is pruned so that the same counterexample is not encountered in the next iteration of the abstraction.

2.2.1 Abstraction

We define abstraction based on an abstract domain\(D\), a set of precisions\(\varPi \) and a transfer function\(T\) [13].

Definition 2

(Abstract domain) An abstract domain is a tuple \(D= (S, \top , \bot ,\)\(\sqsubseteq {}, \mathsf {expr})\) where

-

\(S\) is a (possibly infinite) lattice of abstract states,

-

\(\top \in S\) is the top element,

-

\(\bot \in S\) is the bottom element,

-

\(\sqsubseteq {}{} \subseteq S\times S\) is a partial order conforming to the lattice and

-

\(\mathsf {expr}:S\mapsto \textit{FOL}\) is the expression function that maps an abstract state to its meaning (the concrete data states it represents) using a FOL formula.

By abusing the notation we will allow abstract states \(s\in S\) to appear as FOL expressions by automatically replacing them with their meaning, i.e., \(\mathsf {expr}(s)\).

Elements \(\pi \in \varPi \) in the set of precisions define the current precision of the abstraction. The transfer function \(T:S\times \textit{Ops}\times \varPi \mapsto 2^S\) calculates the successors of an abstract state with respect to an operation and a target precision.

In the following, we introduce two domains, namely predicate abstraction and explicit-value abstraction, and their extension with the locations of the CFA.

Predicate abstraction In Boolean predicate abstraction [5, 41] an abstract state \(s\in S\) is a Boolean combination of FOL predicates. The top and bottom elements are \(\top \equiv \textit{true}\) and \(\bot \equiv \textit{false}\) respectively. The partial order corresponds to implication, i.e., \(s_1 \sqsubseteq {}s_2\) if \(s_1 \Rightarrow s_2\) for \(s_1, s_2 \in S\). The expression function is the identity function as abstract states are formulas themselves, i.e., \(\mathsf {expr}(s) = s\).

A precision \(\pi \in \varPi \) is a set of FOL predicates that are currently tracked by the algorithm. The result of the transfer function \(T(s, \textit{op}, \pi )\) is the strongest Boolean combination of predicates in the precision that is entailed by the source state \(s\) and the operation \(\textit{op}\). This can be calculated by assigning a fresh propositional variable \(v_i\) to each predicate \(p_i \in \pi \) and enumerating all satisfying assignments of the variables \(v_i\) in the formula \(s\wedge \textit{op}\wedge \bigwedge _{p_i \in \pi } (v_i \leftrightarrow p_i')\). For each assignment, a conjunction of predicates is formed by taking predicates with positive variables and the negations of predicates with negative variables. The disjunction of all such conjunctions is the successor state \(s'\).

In Cartesian predicate abstraction [5] an abstract state \(s\in S\) is a conjunction of FOL predicates. Only the transfer function is defined differently than in Boolean predicate abstraction. The transfer function yields the strongest conjunction of predicates from the precision \(\pi \) that is entailed by the source state \(s\) and the operation \(\textit{op}\), i.e., \(T(s, \textit{op}, \pi ) = \bigwedge _{p_i \in \pi } \{p_i \, | \, s\wedge \textit{op}\Rightarrow p_i' \} \wedge \bigwedge _{p_i \in \pi } \{\lnot p_i \, | \, s\wedge \textit{op}\Rightarrow \lnot p_i' \}\).

Note, that when the precision is empty (\(\pi = \emptyset \)) the transfer function reduces to a feasibility checking of the formula \(s\wedge \textit{op}\), resulting in true or false (for Boolean and Cartesian predicate abstraction as well).

We represent abstract states (in both kind of abstractions) as SMT formulas. However, a possible optimization would be to use binary decision diagrams (BDDs) for compact representation of states and cheaper coverage checks [28].

Explicit-value abstraction In explicit-value abstraction [15] an abstract state \(s\in S\) is an abstract variable assignment, mapping each variable \(v \in V\) to an element from its domain extended with top and bottom values, i.e., \(D_v \cup \{\top _{d_v}, \bot _{d_v}\}\). The top element \(\top \) with \(\top (v) = \top _{v_d}\) holds no specific value for any \(v \in V\) (i.e., it represents an unknown value). The bottom element \(\bot \) with \(\bot (v) = \bot _{v_d}\) means that no assignment is possible for any \(v \in V\). The partial order \(\sqsubseteq {}\) is defined as \(s_1 \sqsubseteq {}s_2\) if \(s_1(v) = s_2(v)\) or \(s_1(v) = \bot _{d_v}\) or \(s_2(v) = \top _{v_d}\) for each \(v \in V\). The expression function is \(\mathsf {expr}(s) \equiv \textit{true}\) if \(s= \top \), \(\mathsf {expr}(s) \equiv \textit{false}\) if \(s(v) = \bot _{d_v}\) for any \(v \in V\), otherwise \(\mathsf {expr}(s) \equiv \bigwedge _{v \in V, s(v) \ne \top _{d_v}} v =s(v)\).

A precision \(\pi \in \varPi \) is a subset of the variables \(\pi \subseteq V\) that is currently tracked by the analysis. The transfer function is given based on the strongest post-operator\(\mathsf {sp}:S\times \textit{Ops}\mapsto S\), defining the semantics of operations under abstract variable assignments. Given an abstract variable assignment \(s\in S\) and an operation \(\textit{op}\in \textit{Ops}\), let the abstract variable assignment \(\hat{s} = \mathsf {sp}(s, \textit{op})\) denote the result of executing \(\textit{op}\) from \(s\).

If \(\textit{op}\) is an assumption \(\left[ \psi \right] \) then for all \(v \in V\)

where \(\psi _{/s}\) denotes the expression obtained by substituting all variables in \(\psi \) with values from \(s\), except top and bottom values.

Note, that if \(\left[ \psi \right] \) is only satisfiable with a single value for a variable v then the successor could be made more precise by setting \(\hat{s}(v)\) to this value [15]. This could be implemented with heuristicsFootnote 3 for a few simple cases (e.g., \(\left[ v = 1 \right] \)), but a general solution requires a solver. In our current paper we use a simple heuristic that can detect if an equality constraint has a variable on one side and a constant on the other (e.g., \(\left[ v = 1 \right] \)) and later we also present a general, configurable solution using a solver in Sect. 3.1.1.

If \(\textit{op}\) is an assignment \(w {:}{=} \varphi \) then for all \(v \in V\)

The transfer function \(T(s, \textit{op}, \pi ) = s'\) is defined based on the strongest post-operator \(\mathsf {sp}\) as follows. Let \(\hat{s} = \mathsf {sp}(s, \textit{op})\), then \(s'(v) = \hat{s}(v)\) if \(v \in \pi \) and \(s'(v) = \top _{d_v}\) otherwise, for each \(v \in V\), i.e., variables not included in the precision are omitted.

Locations Locations of the CFA are usually tracked explicitly regardless of the abstract domain used [13]. Given an abstract domain \(D= (S, \top , \bot , \sqsubseteq {}, \mathsf {expr})\) (e.g., predicate or explicit-value abstraction), let \(D_L= (S_L, \bot _L, \sqsubseteq {}_L, \mathsf {expr}_L)\) denote its extension with locations.Footnote 4 Abstract states \(S_L= L\times S\) are pairs of a location \(l\in L\) and a state \(s\in S\). The bottom element becomes a set \(\bot _L= \{(l, \bot ) \, | \, l\in L\}\) with each location and the bottom element \(\bot \) of \(D\). The partial order is defined as \((l_1, s_1) \sqsubseteq {}(l_2, s_2)\) if \(l_1 = l_2\) and \(s_1 \sqsubseteq {}s_2\). The expression function is \(\mathsf {expr}_L\equiv \mathsf {expr}\), i.e., the location is not required in the expression as it is encoded in the structure of the CFA.

The precisions \(\varPi \) are also extended with a location, becoming a function \(\varPi _L:L\mapsto \varPi \) that maps each location to its precision. Algorithms can be configured to use a global precision, which maps each location to the same precision, or a local precision, which can map different locations to different precisions.Footnote 5

The extended transfer function \(T_L:S_L\times \varPi _L\mapsto 2^{S_L}\) is defined as \(T_L((l, s), \pi _L) = \{(l', s') \, | \, (l, \textit{op}, l') \in E, \, s' \in T(s, \textit{op}, \pi _L(l')) \}\), i.e., \((l', s')\) is a successor of \((l, s)\) if there is an edge between \(l\) and \(l'\) with \(\textit{op}\) and \(s'\) is a successor of \(s\) with respect to the inner transfer function \(T\) and the precision assigned to \(l'\).

Abstract Reachability Graph We represent the abstract state space using an abstract reachability graph (ARG) [12].

Definition 3

(Abstract Reachability Graph) An abstract reachability graph is a tuple \(\textit{ARG}= (N, E, C)\) where

-

\(N\) is the set of nodes, each corresponding to an abstract state in some domain with locations \(D_L\).

-

\(E\subseteq N\times \textit{Ops}\times N\) is a set of directed edges labeled with operations. An edge \((l_1, s_1, \textit{op}, l_2, s_2) \in E\) is present if \((l_2, s_2)\) is a successor of \((l_1, s_1)\) with \(\textit{op}\).

-

\(C\subseteq S\times S\) is the set of covered-by edges. A covered-by edge \((l_1, s_1, l_2, s_2) \in C\) is present if \((l_1, s_1) \sqsubseteq {}(l_2, s_2)\).

A node \((l, s) \in N\) is expanded if all of its successors are included in the ARG with respect to the transfer function; covered if it has an outgoing covered-by edge \((l, s, l', s') \in C\) for some \((l', s') \in N\); and unsafe if \(l= l_E\). A node that is not expanded, covered or unsafe is called unmarked. An ARG is unsafe if there is at least one unsafe node and complete if no nodes are unmarked.

An abstract path\(\sigma = ((l_1, s_1), \textit{op}_1, (l_2, s_2), \textit{op}_2, \ldots , \textit{op}_{n-1}, (l_n, s_n))\) is an alternating sequence of abstract states and operations. An abstract path is feasible if a corresponding concrete path \(((l_1, c_1), \textit{op}_1, (l_2, c_2), \textit{op}_2, \ldots , \textit{op}_{n-1}, (l_n, c_n))\) exists, where each \(c_i\) is mapped to \(s_i\), i.e., \(c_i \models \mathsf {expr}(s_i)\). In practice, this can be decided by querying an SMT solver [20] with the formulaFootnote 6\(s_1^{\langle 1 \rangle } \wedge \textit{op}_1^{\langle 1 \rangle } \wedge s_2^{\langle 2 \rangle } \wedge \textit{op}_2^{\langle 2 \rangle } \wedge \ldots \wedge \textit{op}_{n-1}^{\langle n-1 \rangle } \wedge s_n^{\langle n \rangle }\). A satisfying assignment to this formula corresponds to a concrete path.

Abstraction algorithm Based on the concepts defined above, Algorithm 1 presents a basic procedure for abstraction (based on the CPA concept [13]). The input of the abstraction is a partially constructed ARG (with possibly unmarked states), an error location \(l_E\), an abstract domain \(D_L\) with locations, a current precision \(\pi _L\) and a transfer function \(T_L\). In the first iteration, the ARG only contains the initial state \(S_0 = \{(l_0, \top )\}\) and the precision \(\pi _L\) is usually empty, i.e., no predicates or variables are tracked.

The algorithm initializes the reached set with all states from the ARG and the waitlist with all unmarked states. The algorithm removes and processes states from the waitlist based on some search strategy (e.g., BFS or DFS). If the current state corresponds to the error location, the abstraction terminates with an unsafe result and an unsafe ARG. Otherwise, we check if some already reached state covers the current with respect to the partial order. If not, we calculate successors with the transfer function making the node expanded.

If there are no more nodes to explore and the error location was not found, the abstraction concludes with a safe result and a complete ARG. Note that due to its construction, the ARG without covered-by edges is actually a tree.

Example Figure 3a shows the ARG for the program in Fig. 1 using predicate abstraction with a single predicate \(\pi _L(l) = \{i < 100\}\) for each location \(l\in L\). Nodes are annotated with the location and the predicate (or its negation). Edges are marked with the operations from the CFA. Dashed arrows represent covered-by edges. It can be seen that an abstract state with the error location \(l_E\) is reachable, thus abstraction concludes with an unsafe result. However, using a different set of predicates, e.g., \(\pi '_L(l) = \{x \le 1\}\) would be able to prove the safety of the program.

Figure 3b shows the ARG for the same program using explicit-value abstraction with only tracking the variable x, i.e., \(\pi _L(l) = \{x\}\) for all \(l\in L\). Nodes are annotated with the location and the value of x. It can be seen that no abstract state is reachable in the ARG with the error location \(l_E\), therefore the original program is safe. Also note that tracking the loop variable i is not necessary, hence reducing the size of the ARG.

Example ARGs for the program in Fig. 1. Nodes are represented by rectangles, successors by solid arrows and coverage by dashed arrows

2.2.2 Refinement

Feasibility check Algorithm 2 presents the refinement procedure. The input is an unsafe ARG and the current precision \(\pi _L\). Refinement starts with extracting a path \(\sigma = ((l_1, s_1), \textit{op}_1, (l_2, s_2), \textit{op}_2, \ldots , \textit{op}_{n-1}, (l_n, s_n))\) to the unsafe state (i.e., \(l_n = l_E\)) for feasibility checking. A feasible path corresponds to a concrete path (in the original program) leading to the error location, which terminates refinement with an unsafe result. In this case the precision and the ARG is returned unmodified. Otherwise, an interpolant [55] is calculated from the infeasible path \(\sigma \) that holds information for the further steps of refinement.

Definition 4

(Binary interpolant) For a pair of inconsistent formulas A and B, an interpolant \(I\) is a formula such that

-

A implies \(I\),

-

\(I\wedge B\) is unsatisfiable,

-

\(\mathsf {var}(I) \subseteq \mathsf {var}(A) \cap \mathsf {var}(B)\).

A binary interpolant for an infeasible path \(\sigma \) can be calculated by defining \(A \equiv s_1^{\langle 1 \rangle } \wedge \textit{op}_1^{\langle 1 \rangle } \wedge \ldots \wedge \textit{op}_{i-1}^{\langle i-1 \rangle } \wedge s_i^{\langle i \rangle }\) and \(B \equiv \textit{op}_i^{\langle i \rangle } \wedge s_{i+1}^{\langle i+1 \rangle }\), where i corresponds to the longest prefix of \(\sigma \) that is still feasible.

Binary interpolants can be generalized to sequence interpolants [63] in the following way.

Definition 5

(Sequence interpolant) For a sequence of inconsistent formulas \(A_1, \ldots , A_n\), a sequence interpolant \(I_0, \ldots , I_n\) is a sequence of formulas such that

-

\(I_0 = \textit{true}\), \(I_n = \textit{false}\),

-

\(I_i \wedge A_{i+1}\) implies \(I_{i+1}\) for \(0 \le i < n\),

-

\(\mathsf {var}(I_i) \subseteq (\mathsf {var}(A_1) \cup \ldots \cup \mathsf {var}(A_i)) \cap (\mathsf {var}(A_{i+1} \cup \ldots \cup \mathsf {var}(A_n)))\) for \(1 \le i < n\).

A sequence interpolant for a path \(\sigma \) can be calculated by defining \(A_1 \equiv s_1^{\langle 1 \rangle }\) and \(A_i \equiv \textit{op}_{i}^{\langle i \rangle } \wedge s_{i+1}^{\langle i+1 \rangle }\) for \(1 \le i < n\). A binary interpolant \(I_k\) corresponding to a feasible prefix with length k can also be written as a sequence interpolant where \(I_i \equiv \textit{true}\) for \(i < k\), \(I_i \equiv I_k\) for \(i = k\) and \(I_i \equiv \textit{false}\) for \(i > k\). Note that each element \(I_i\) of the sequence corresponds to a single state \((l_i, s_i)\) in the counterexample \(\sigma \), except \(I_0\). Therefore, \(I_0\) is dropped and variables \(V^{\langle i \rangle }\) are replaced with V before using the formulas for refinement.

Precision adjustment The precision is adjusted by first mapping the formulas of the interpolant \(I_1, I_2, \ldots , I_n\) to a sequence of new precisions \(\pi _1, \pi _2, \ldots , \pi _n\) (in line 5). In predicate abstraction the formulas in the interpolant can simply be used as new predicates, i.e., \(\pi _i = I_i\), whereas in the explicit domain variables of these formulas are extracted,Footnote 7 i.e., \(\pi _i = \mathsf {var}(I_i)\). Then, the new precision \(\pi _L'\) is updated in the following way (in lines 6–10). If \(\pi _L\) is local, then \(\pi _L'(l_i)\) is calculated by joining the new precision for each location \(l_i\) in the counterexample to its previous precision. Otherwise if \(\pi _L\) is global, then \(\pi _L'(l)\) is a union of the old and new precisions for each location \(l\in L\).

Pruning The final step of the refinement is to prune the ARG back until the earliest state where actual refinement occurred, i.e., where the precision changed (lazy abstraction [47]). Formally, this is the node \((l_i, s_i)\) with lowest index \(1 \le i < n\), for which \(I_i \notin \{\textit{true}, \textit{false}\}\). Pruning is done by removing the subtree rooted at \((l_i, s_i)\), including all the successor and covered-by edges associated with the nodes of the subtree. Note, that during this process the parent of \((l_i, s_i)\) becomes unmarked (not expanded anymore) and nodes might also get unmarked due to the removal of covered-by edges. Thus, the abstraction algorithm can continue constructing the ARG in the next iteration.

2.2.3 CEGAR Loop

Algorithm 3 connects the abstraction (Algorithm 1) and refinement (Algorithm 2) methods into a CEGAR loop (Fig. 2). The input of the algorithm is an initial location \(l_0\), an error location \(l_E\), an abstract domain \(D_L\) with locations, an initial (usually empty) precision \(\pi _{L_0}\) and a transfer function \(T_L\).

First, an ARG is initialized with a single node corresponding to the initial location \(l_0\) and the top element of the domain. The current precision \(\pi _L\) is also set to the initial precision \(\pi _{L_0}\). Then the algorithm iterates between performing abstraction and refinement until abstraction concludes with a safe result, or refinement confirms a real counterexample.

3 Algorithmic Improvements

In this section we introduce several improvements both related to the abstraction (Sect. 3.1) and the refinement phase of the algorithm (Sect. 3.2). For abstraction, we define a modified version of the explicit domain where a configurable number of successors can be enumerated (Sect. 3.1.1). We also propose a new search strategy based on the syntactical distance from the error location (Sect. 3.1.2). Furthermore, we describe different ways of splitting predicates to control the granularity of predicate abstraction (Sect. 3.1.3).

For refinement, we present a novel interpolation strategy based on backward reachability (Sect. 3.2.1). We also introduce a method to use multiple counterexamples for refinement (Sect. 3.2.2). Finally, we define an approach to select from multiple refinements for a single counterexample (Sect. 3.2.3).

3.1 Abstraction

3.1.1 Configurable Explicit Domain

Motivation If an expression cannot be evaluated during successor computation in explicit-value abstraction [15] (e.g., due to top elements in abstract states), it is treated and propagated as the top element (i.e., an arbitrary value). In many cases, this is a desirable behavior, which can for example, avoid explicitly enumerating all possibilities for input variables that can indeed take any value from their domain. However, it is also possible that this behavior prevents successful verification.

Example Consider the program on the left side of Fig. 4. The program is safe, because \(0< x \wedge x < 5\) and \(x =0\) cannot hold at the same time. However, explicit-value abstraction fails to prove safety of this program. Even if x is tracked by the analysis, its value is unknown (\(x =\top \)) due to the nondeterministic assignment in line 1. The assumption in line 2 is satisfiable, but with multiple values for x. Therefore, the algorithm continues to line 3 with \(x =\top \), where the assumption is again satisfiable (with \(x =0\)), reaching the assertion violation. At this point, refinement returns the same precision (there are no more variables to be tracked), thus the same abstraction is built again and the algorithm fails to prove the safety of the program.

The problem is that this kind of abstraction can only learn the fact \((0< x \wedge x < 5)\) by enumerating all possibilities for x. This is actually feasible in this case since there are only 4 different values (successors) for x and from each of them, the assumption \(x =0\) is unsatisfiable, proving the safety of the program. Similar examples include variables with finite domains (e.g., Booleans) or modulo operations (e.g., \(x {:}{=} y\ \%\ 3\)).

However, explicitly enumerating all values for a variable is often impractical or even impossible due to the large (or infinite) number of possible values. As an example, consider now the program on the right side of Fig. 4. This program is also safe, because \(x \ne 0\) and \(x =0\) cannot hold at the same time. In this case however, enumerating all values for x such that \(x \ne 0\) is clearly impractical.

Proposed approach Motivated by the examples above, we propose an extension of the explicit-value domain [15], where in case of a non-deterministic expression we allow a limited number of successors to be enumerated explicitly. If the limit is exceeded, the algorithm works as previously (treating the result as unknown). This way we can still avoid state space explosion, but can also solve certain problems that could not be handled previously with traditional explicit-value analysis.

First, we define a modified version of the strongest post-operator (denoted by \(\mathsf {sp}'\)), which distinguishes unknown evaluation results from top elements (introduced deliberately by the abstraction). Given an abstract variable assignment \(s\in S\) and an operation \(\textit{op}\in \textit{Ops}\), let the resulting abstract variable assignment \(\hat{s} = \mathsf {sp}'(s, \textit{op})\) be defined as follows. If \(\textit{op}\) is an assumption \(\left[ \psi \right] \) then for all \(v \in V\)

If \(\textit{op}\) is an assignment \(w {:}{=} \varphi \) then for all \(v \in V\)

The difference between \(\mathsf {sp}\) and \(\mathsf {sp}'\) is that if \(\mathsf {sp}'\) cannot evaluate an assumption or an assignment to a literal then it is treated as a special unknown value.

Our extended, configurable transfer function \(T_k(s,\textit{op},\pi )\) works as follows (Algorithm 4). It first uses \(\mathsf {sp}'\) to compute the successor abstract variable assignment of \(s\) with respect to \(\textit{op}\). If an unknown value is encountered, we use an SMT solver to query satisfying assignments of the primed version of variables in \(\pi \) for the expression \(s\wedge \textit{op}\) with the given limit k. This is done with a feedback loop in the following way. We first query a satisfying assignment for the formula \(s\wedge \textit{op}\) and project it to only include variables in \(\pi '\). Then we add the negation of the assignment as a formula to the solver and repeat this process until the formula becomes unsatisfiable or we exceed k. Note, that if there are multiple variables in \(\pi '\), the limit k corresponds to all possible combinations and not to each individual variable separately (which would allow \(|\pi '|^k\) total assignments). For example, \(\{(x=1,y=5),(x=1,y=6),(x=2,y=6)\}\) counts as 3 assignments, even though both x and y can only take 2 different values.

If there are no more than k possible assignments, we treat all of them as a new successor state as if it was returned by \(\mathsf {sp}'\). Otherwise, if there are more than k assignments, we stop enumerating them and fall back to using \(\mathsf {sp}\) instead.

Finally, we perform abstraction by setting the non-tracked variables \(v \notin \pi \) to top elements in the successors (as it is done in plain explicit-value abstraction). Note that as a special case \(k = 1\) is similar to traditional explicit-value analysis because each state has at most one successor. However, if an expression cannot be evaluated (even using heuristics), we use an SMT solver which makes the analysis more expensive, but also more precise.

Discussion The advantage of this method is that k can be tuned to reduce the number of unknown values while still avoiding state space explosion. For the example on the left side of Fig. 4, any k with \(k \ge 4\) would work. Currently we experimented with different values for k from a fixed set of values (Sect. 4.2.1). However, it would also be possible to use heuristics for automatically selecting or even dynamically adjusting k during the analysis. Such heuristics could be based on the domain of variables (e.g., Booleans, bounded integers) or the operations (e.g., modulo arithmetic). Furthermore, different k values could be assigned to different locations \(l\in L\) in the CFA similarly to a local precision.

Note, that since we are enumerating k successors in each step, after n steps there could be \(k^n\) states in the worst case. However, this can only happen if there is a non-deterministic assignment for the variables in each step. Otherwise, we know the exact values of each variable after the first step and we can evaluate every expression in the following steps in exactly one way.

Operations in the CFA have their corresponding FOL expressions, therefore an SMT solver can be used out-of-the box to enumerate successors. However, our algorithm can work with other strategies (known e.g., from explicit model checkers [49]) as long as they can enumerate successors for a source state and an operation. Furthermore, since we only need the actual successors if there are no more than k of them, as an optimization, heuristics could be developed that can tell if an expression has more than k satisfying assignments without actually enumerating them.

3.1.2 Error Location-Based Search

Motivation Recall that the abstract state space can be explored using different search strategies, depending on how the ARG nodes in the waitlist are ordered (Algorithm 1). For example, breadth and depth-first search (BFS and DFS) orders nodes based on their depth ascending and descending respectively. These basic strategies however, use no information from the input verification task.

Proposed approach To focus the search more effectively, we propose a strategy based on the syntactical distance from the error location in the control flow automaton. Given a verification task \((\textit{CFA}, l_E)\) we define the distance \(d_E:L\mapsto \mathbb {N}\) of each location \(l\in L\) to the error location \(l_E\) as the length of the shortest directed path from \(l\) to \(l_E\) without considering the operations. Note that \(d_E(l)\) is an under-approximation of the actual distance between \(l\) and \(l_E\) in the ARG since shorter paths are not possible, but some operations might be infeasible, making the actual (feasible) distance longer. The distances can be calculated (and stored for later queries) at the beginning of the analysis using a backward breadth-first search from the error location.Footnote 8 Then from each node \((l, s)\) on the waitlist, we simply remove one where \(d_E(l)\) is minimal. However, some examples highlight that loops might trick this approach as well. Therefore, we also experiment with metrics based on a weighted sum of the distance to the error location and the depth of the current node in the ARG.

Example Consider the CFA in Fig. 5a. The distance to the error location \(l_E\) is written next to each location. For simplicity, operations are omitted from the edges. Furthermore, suppose that most of the paths are actually feasible at the current level of abstraction, as otherwise all search strategies perform similarly. It can be seen that the number of paths to the error location scales exponentially with the number of branches (if this diamond-shaped pattern is repeated). Therefore, a traditional BFS approach would cause an exponential execution time. DFS would however, find the first path to \(l_E\) quickly for example by exploring \(l_0, l_1, l_3, l_4, l_6, l_7, l_E\) in this order. The error location-based approach would act similarly, as it first starts with \(l_0\), discovering its successors \(l_1\) and \(l_2\) both with a distance of 5. Then, by picking for example \(l_1\), its only successor is \(l_3\) with a distance of 4. Therefore, the algorithm will pick \(l_3\) (with \(d_E(l_3) = 4\)) next instead of \(l_2\) (with \(d_E(l_2) = 5\)), similarly to DFS.

Consider now the CFA in Fig. 5b. DFS can easily fail for this case if it is feasible to unfold the loop \(l_0, l_1, l_2, l_1, l_2, l_1, l_2 \ldots \) many times. However, the error location-based search may also fail if the edge from \(l_1\) to \(l_6\) is not feasible. In this case, the algorithm would also iterate between \(l_1\) and \(l_2\) (as long as possible), since \(l_3\) on the other path has a greater distance. A possible way to overcome this problem is to use a combined metric based on the depth of the current node in the ARG (denoted by \(d_D\)) and the distance to the error location.

Discussion Simply summing the distance and the depth causes each node corresponding to Fig. 5a to be equal in the ordering. Hence, it is reasonable to use a weighted metric \(w_D\cdot d_D(s, l) + w_E\cdot d_E(l)\). Assigning a greater weight to \(d_E\) can guide the search effectively based on the CFA, while a nonzero weight for \(w_D\) can help to avoid unfolding loops too many times. Currently, we experimented with the following five different configurations for the weights (Sect. 4.2.2).

-

\((w_E= 0, w_D= 1)\) is a traditional breadth-first search.

-

\((w_E= 0, w_D= -1)\) is a traditional depth-first search.

-

\((w_E= 1, w_D= 0)\) considers only the distance from the error location.

-

\((w_E= 2, w_D= 1)\) combines the distance from the error with the depth (BFS), but with less weight.

-

\((w_E= 1, w_D= 2)\) also uses depth and the distance from the error but is biased towards depth.

The first three configurations serve as a baseline, while the last two demonstrate combinations. A possible future work could be to experiment with further values for the weights or with iteration strategies known from abstract interpretation [21].

Remark

One might wonder about the usefulness of this approach on safe verification tasks (where no concrete state with \(l_E\) is reachable). Even for such tasks, the intermediate iterations of CEGAR still encounter (spurious) counterexamples. In this case the error location-based search can help to find these counterexamples and converge faster.

3.1.3 Splitting Predicates

Motivation Predicates are the atomic units of predicate abstraction, i.e., each abstract state is labeled with some Boolean combination of predicates \(p_i \in \pi \) from the current precision \(\pi \). Cartesian predicate abstraction yields a conjunction (e.g., \(p_1 \wedge \lnot p_2 \wedge p_3\)), whereas Boolean predicate abstraction can give any combination (using a disjunction over conjunctions, e.g., \(p_1 \wedge \lnot p_2 \vee p_2 \wedge p_3\)). However, predicates themselves can also correspond to an arbitrary formula over some atoms, e.g., \(p \equiv (0< x) \wedge (y< 5) \vee (x < 5)\). In such cases we can treat a complex predicate both as a whole [19] or we can also split it into smaller parts such as its atoms [46]. This can influence both the precision of abstraction and the performance of the algorithm. For example, suppose that we want to represent a state \(a \wedge \lnot b \vee \lnot a \wedge b\), where a and b are some atoms. If we only consider the atoms \(\{a, b\}\) as the precision, their strongest conjunction implied by the state is \(\textit{true}\), i.e., Cartesian abstraction might not be precise enough. While Boolean predicate abstraction is able to faithfully reconstruct the original state, the number of possibly enumerated models grow exponentially with the number of atomic predicates. In contrast, keeping predicates as a whole may yield a slower convergence as subformulas cannot be reused.

Proposed approach New predicates are introduced during abstraction refinement using interpolation. However, interpolation procedures may return complex formulas, which are specific to a single counterexample. A possible way to generalize such formulas is to split complex predicates into smaller parts before adding them to the refined precision. Formally, we define different splitting functions\(\mathsf {split}:\textit{FOL}\mapsto 2^\textit{FOL}\) that map a FOL formula to a set of formulas.

We experimented with the following configurations, which give different granularities for the precision (Sect. 4.2.3).

-

\(\mathsf {atoms}(\varphi )\) splits predicates to their atoms, which is the finest granularity that can be achieved syntactically.Footnote 9 For example, \(\mathsf {atoms}(p_1 \wedge (p_2 \vee \lnot p_3)) = \{p_1, p_2, p_3\}\).

-

\(\mathsf {conjuncts}(\varphi )\) is a middle ground that splits predicates to their conjuncts. For example, \(\mathsf {conjuncts}(p_1 \wedge (p_2 \vee \lnot p_3)) = \{p_1, (p_2 \vee \lnot p_3)\}\).

-

\(\mathsf {whole}(\varphi ) \equiv \varphi \), i.e., the identity function keeps predicates as a whole, which is the coarsest granularity. It is motivated by Boolean variables, where the atoms are the variables themselves and the valuable information learned by the interpolation procedure lies in the logical connections.

A similar idea can be applied to generalize Boolean predicate abstraction, where the Boolean combination of predicates is represented by a single state as a disjunction over conjunctions of predicates. We define a modified version of Boolean predicate abstraction called splitting abstraction where this disjunction is split into its elements, which are then treated as separate abstract states (separate nodes in the ARG). This allows us to represent successor and coverage relations in a finer way. For example, the abstract state \(s= (p_1 \wedge \lnot p_2) \vee (p_2 \wedge p_3)\) is split into \(s_1 = (p_1 \wedge \lnot p_2)\) and \(s_2 = (p_2 \wedge p_3)\). Then it can be possible that although \(s\) cannot be covered, but \(s_1\) can be and we only have to continue with \(s_2\).

3.2 Refinement

3.2.1 Backward Binary Interpolation

Motivation The binary interpolation algorithm presented in Sect. 2.2.2 defines the two formulas A and B based on the longest feasible prefix. This yields an interpolant that refines the last abstract state on the counterexample that can still be reached in the concrete program (starting from the initial state). Therefore, from this point on we will refer to this strategy as forward binary interpolation. We observed that this strategy gives poor performance in many cases (Sect. 4.2.4).

Example

Consider the abstract counterexample in Fig. 6a. Rectangles are abstract states, with dots representing concrete states mapped to them. The initial state is \(s_1\) and the erroneous state is \(s_5\). Edges denote transitions in the concrete and abstract state space. Due to the existential property of abstraction, an abstract transition exists between two abstract states if at least one concrete transition exists between concrete states mapped to them [32].

It can be seen that the longest feasible prefix is \((s_1, \textit{op}_1, s_2, \textit{op}_2, s_3, \textit{op}_3, s_4)\). Forward binary interpolation would therefore set \(A \equiv s_1^{\langle 1 \rangle } \wedge \textit{op}_1^{\langle 1 \rangle } \wedge \ldots \wedge \textit{op}_3^{\langle 3 \rangle } \wedge s_4^{\langle 4 \rangle }\) and \(B \equiv \textit{op}_4^{\langle 4 \rangle } \wedge s_5^{\langle 5 \rangle }\). This gives an interpolant corresponding to \(s_4\), pruning the ARG back until \(s_3\). Continuing from \(s_3\) with the new precision yields \(s_{41}, s_{42}, s_{51}\) and \(s_{52}\) (instead of \(s_4\) and \(s_5\)), as seen in Fig. 6b. However, \(s_{51}\) is still reachable in the abstract state space (via \(s_1, s_2, s_3, s_{41}, s_{51}\)), but the counterexample is only feasible until \(s_3\) now. The algorithm needs to perform two additional refinements until \(s_3\) and \(s_2\) is refined, and the ARG is pruned back until \(s_1\) (Fig. 6c). All spurious behavior is now eliminated as neither \(s_{51}\) nor \(s_{52}\) is reachable. However, this requires many iterations for the same counterexample, and a potentially larger abstract state space in each round due to the increasing precision.

We observed such situations when a variable is assigned at a certain point of the path (e.g, \(\textit{op}_1 \equiv x {:}{=} 0\)), but only contradicts a guard later (e.g. \(\textit{op}_4 \equiv \left[ x > 5 \right] \)). Although the path is feasible until the guard, in these cases the root cause of the counterexample being spurious traces back to the assignment of the variable.

Proposed approach To alleviate the previous problems we define a novel refinement strategy that is based on the longest feasible suffix of the counterexample. We call this strategy backward binary interpolation as it starts with the erroneous state and progresses backward as long as the suffix is feasible. Formally, let \(\sigma = (s_1, \textit{op}_1, \ldots , \textit{op}_{n-1}, s_n)\) be an abstract counterexample and let \(1 < i \le n\) be the lowest index for which the suffix \((s_i, \textit{op}_i, \ldots , \textit{op}_{n-1}, s_n)\) is feasible. Then we define a backward binary interpolant as \(A \equiv s_i^{\langle i \rangle } \wedge \textit{op}_i^{\langle i \rangle } \wedge \ldots \wedge \textit{op}_{n-1}^{\langle n-1 \rangle } \wedge s_n^{\langle n \rangle }\) and \(B \equiv s_{i-1}^{\langle i-1 \rangle } \wedge \textit{op}_{i-1}^{\langle i-1 \rangle }\). In other words, A encodes the feasible suffix and B encodes the preceding transition that makes it infeasible. The formula \(A \wedge B\) is unsatisfiable, otherwise a longer feasible suffix would exist. Similarly to forward binary interpolation, the only common variables in A and B correspond to \(s_i\). Therefore, indexes can be removed from the interpolant \(I\).

As an example, consider Fig. 6a again. The longest feasible suffix is \((s_2, \textit{op}_2,\)\(s_3,\)\(\textit{op}_3, s_4, \textit{op}_4, s_5)\). Thus, the interpolation formulas are \(A \equiv s_2^{\langle 2 \rangle } \wedge \textit{op}_2^{\langle 2 \rangle } \wedge \ldots \wedge \textit{op}_4^{\langle 4 \rangle } \wedge s_5^{\langle 5 \rangle }\) and \(B \equiv s_1^{\langle 1 \rangle } \wedge \textit{op}_1^{\langle 1 \rangle }\). The resulting interpolant \(I\) corresponds to \(s_2\) and the ARG is pruned back until \(s_1\) (Fig. 6c) in a single step (assuming a global precision).

Discussion We motivated backward binary interpolation by comparing it to forward interpolation and showing that it can trace back the root cause in fewer steps. In software model checking however, sequence interpolation is the standard technique. Hence we also compare our backward interpolation approach to sequence interpolation (Sect. 4.2.4). A potential advantage of backward interpolation is that it can be more compact than sequence interpolation (which could yield a formula for each location along the counterexample, making the algorithm prune a larger portion of the state space). Backward search-based strategies also proved themselves efficient in the context of other algorithms, such as Impact [3] or Newton [38].

3.2.2 Multiple Counterexamples for Refinement

Motivation Most approaches in the literature stop exploring the abstract state space and apply refinement as soon as the first counterexample is encountered. Although collecting more counterexamples adds an overhead to abstraction, better refinements may be possible as more information is available. Altogether, this could reduce the number of iterations and increase the efficiency of the algorithm.

Proposed approach We modified the abstraction algorithm (Algorithm 1) so that it does not return the first counterexample (by removing line 7), but keeps exploring the state space. The algorithm can be configured (by adding a condition to the loop header in line 3) to stop after a given number of erroneous states or to explore all of them.

If at least one of the counterexamples is feasible, then the algorithm can terminate with an unsafe result. However, if all of them are infeasible, there are many possible ways to use the information for refinement. We propose a technique where we first calculate a refinement for each counterexample and derive a minimal set required to eliminate all spurious behavior. Then, we update the precision and apply pruning based on this minimal set.

Our approach is formalized in Algorithm 5. First, we extract paths \(\varSigma \) leading to states with the error location \(l_E\) from the ARG. If any path \(\sigma _i \in \varSigma \) is feasible, then the algorithm terminates with an unsafe result. Otherwise, we calculate an interpolant \(\textit{Itp}_i\) for each path \(\sigma _i\). Given a path \(\sigma _i\) and its corresponding interpolant \(\textit{Itp}_i\), we can determine the first state \(s_{r_i} \in \sigma _i\) of the path that actually needs refinement (i.e., the first state where the interpolant is not \(\textit{true}\) or \(\textit{false}\)). These states correspond to pruning points in the ARG.

Then, we determine the minimal set of counterexamples to be refined in the following way. For each path \(\sigma _i\) with its first state to be refined \(s_{r_i}\), we check if any other state in \(S_r\) is a proper ancestorFootnote 10 of \(s_{r_i}\) in the ARG.Footnote 11 If such state exists, it means that the other path shares its prefix with the currently examined path, and will need refinement earlier. That refinement will add new predicates and prune the ARG earlier, possibly eliminating the current counterexample as well. Therefore, the current path is skipped for now (lazy refinement).

For each path that is not skipped, we map the interpolant to a new precision and join it to the old one, taking into account whether the precision is local or global. Finally, we return a spurious result and the new precision \(\pi _L'\).

Example Consider the ARG (without covered-by edges) in Fig. 7. There are four counterexamples \(\sigma _1, \ldots , \sigma _4\) in the ARG leading to the abstract states \((s_1, l_E),\)\(\ldots ,\)\((s_4, l_E)\). The first states to be refined are denoted with a gray background. In this example the minimal set of counterexamples is \(\{\sigma _2, \sigma _3\}\), because \(s_{r_2}\) and \(s_{r_3}\) are proper ancestors of \(s_{r_1}\) and \(s_{r_4}\) respectively. Refining \(\sigma _2\) and \(\sigma _3\) will therefore, eliminate all spurious behavior from the current ARG. Note, that in the next iteration \((s_1, l_E)\) and \((s_4, l_E)\) might still be reached again if the predicates for \(\sigma _2\) and \(\sigma _3\) were not sufficient. In this case these counterexamples are eliminated in the next iteration.

Discussion Our approach for multiple counterexamples can work with any refinement strategy. In our current experiment (Sect. 4.2.5) we use sequence interpolation. However, it would even be possible to use different strategies for the different counterexamples as opposed to existing approaches that use multiple counterexamples (e.g., DAG interpolation [1] or global refinement [54]).

Currently we have a single error location in the CFA so each counterexample leads to the same location on a different path. However, our approach does not rely on this, and would work the same way even if the collected counterexamples lead to different locations.

The presented algorithm handles all counterexamples in the solver separately by reusing existing interpolation modules. A possible optimization would be to use the incremental API of SMT solvers by pushing the first counterexample, performing the check and interpolation and then popping only back to the common prefix of the current and next counterexample, and so on.

3.2.3 Multiple Refinements for a Counterexample

Motivation In Sect. 3.2.1 we presented a novel interpolation approach based on backward search, which performs better than the traditional forward search method according to our experiments (Sect. 4.2.4). Using a portfolio of refinements can combine the advantages of different methods [16, 45]. Therefore, in this section we suggest strategies that calculate both forward and backward interpolants and pick the “better” one based on certain heuristics.

Proposed approach The heuristics that we currently introduce are based on the index of pruning. Recall that given an interpolant in its general form \(I_0, \ldots , I_n\), the ARG is pruned back until actual refinement occurred, i.e., until the lowest index \(1 \le i < n\) with \(I_i \notin \{\textit{true}, \textit{false}\}\). This corresponds to the longest feasible prefix and suffix for forward and backward binary interpolants respectively.

Two basic heuristics that we experiment with (Sect. 4.2.6) are to select the interpolant with the minimal or maximal prune index. These heuristics prune the ARG as close as possible to the initial state or the error state respectively.

Example

Consider Fig. 8 with two possible abstract counterexamples. In case of Fig. 8a forward and backward interpolation would prune until \(s_4\) and \(s_2\) respectively. For the counterexample in Fig. 8b pruning would be the other way around. However, the minimal and maximal prune index strategies would prune until \(s_2\) and \(s_4\) respectively in both cases.

4 Evaluation

In this section, we evaluate the effectiveness and efficiency of our algorithmic contributions presented before (Sect. 3) by conducting an experiment. First, we introduce our experiment plans along with the research questions to be addressed (Sect. 4.1). Then, we present and discuss our results and analyses for each research question in a separate subsection (Sect. 4.2). Finally, we compare our implementation to other tools in order to provide a baseline for the research questions (Sect. 4.3). The design and terminology of the experiment are based on the book of Wohlin et al. [64]. The raw data, a detailed report and instructions to reproduce our experiment are available in a supplementary material [42].

4.1 Experiment Planning

The goal of our experiment is to evaluate our new contributions on a broad set of verification tasks from diverse sources. In our experiment we execute various configurations of the CEGAR algorithm on several input models.

4.1.1 Research Questions

We formulate a research question for the performance of each algorithmic contribution presented in Sect. 3. We are mainly interested in two performance aspects: the number of verification tasks solved within a given time limit per task (effectiveness) and the total execution time required (efficiency). Other measured aspects include a more refined categorization of unsolved tasks (timeout, out-of-memory, exception) and the peak memory consumption.

-

RQ1

How does the configurable explicit domain perform for increasing values of k compared to traditional explicit-value analysis?

-

RQ2

How does the error location-based search perform for different weights (\(w_D\), \(w_E\)) compared to breadth and depth-first search?

-

RQ3

How do splitting predicates (into conjuncts or atoms) and splitting states perform compared to predicate abstraction without splitting?

-

RQ4

How does backward binary interpolation perform compared to forward binary and sequence interpolation?

-

RQ5

How does refinement based on multiple counterexamples perform compared to using only a single one?

-

RQ6

How do the combined refinement strategies (based on the minimal/maximal prune index) perform compared to backward and forward binary interpolation?

4.1.2 Subjects and Objects

We implemented both the existing algorithms presented in the background (Sect. 2) and our new contributions (Sect. 3) in the open source Theta toolFootnote 12 [60]. Theta is a generic, modular and configurable framework, supporting the development and evaluation of abstraction-based algorithms in a common environment.

One of the distinguishing features of Theta is that it supports different kind of models (e.g., control flow automata, transition systems, timed automata). An interpreter hides the differences between these formalisms so the algorithms presented in this paper work for verification tasks from different domains (e.g., software, hardware). There are some exceptions though: the configurable explicit domain (Sect. 3.1.1) requires statements and the error location-based search (Sect. 3.1.2) requires locations. Therefore, these algorithms do not work for hardware models since those are encoded as transition systems.

For the objects of the experiment, we use C programs from the Competition on Software Verification (SV-COMP) [9], hardware models from the Hardware Model Checking Competition (HWMCC) [25] and industrial Programmable Logic Controller (PLC) software models from CERN [40].

Table 1 gives an overview of the number of input models and verification tasks along with size and complexity metrics. We selected models from four categories of the 2018 editionFootnote 13 of SV-COMP that are currently supported by the limitedFootnote 14 C front-end of Theta [59]. By applying backward slicing [59] we generate a separate verification task for each assertion. The category locks consists of small (94-234 LoC) locking mechanisms with several assertions per model. The collection loops includes small (9-70 LoC) programs focusing on loops. The ECA (event-condition-action) task set contains larger (591-1669 LoC) event-driven reactive systems. The tasks in ssh-simplified describe larger (557-713 LoC) client-server systems.

We also experimented with industrial PLC software modules from CERN. These modules operate in an infinite loop, where a formula (the requirement) is always checked at the end of the loop. It can be seen that the size of these models is greatly varying from a few dozens of locations to a couple of thousands.

Furthermore, we picked all 300 models from the 2017 editionFootnote 15 of HWMCC. These tasks are encoded as transition systems, describing circuits with inputs, logical gates and latches. The metrics reported in the table for the hardware models are after applying cone of influence (COI) reduction [33].

The majority of the CFA tasks (442) is expected to be safe, while the rest is unsafe (93). To the best of our knowledge, the (300) hardware models do not have an expected result.

Due to slicing [59] it is possible that different tasks corresponding to the same program will have different models (i.e., CFA). Hence, we encode each task in a separate file including the model (CFA) and the property and treat them as if they were different models. Therefore, from now on we use the terms “model” and “verification task” interchangeably.

4.1.3 Variables

Variables of our experiment are listed in Table 2, grouped into three main categories. Properties of the model and parameters of the algorithm configuration are independent variables, whereas output metrics of the algorithm are dependent.

Properties of the model

-

The variable Model represents the unique name of each model (verification task).

-

Furthermore, models have a Category based on their origin.

Parameters of the algorithm

-

The variable Domain represents the abstract domain used. The values PRED_BOOL and PRED_CART stand for Boolean and Cartesian predicate abstraction, while EXPL stands for explicit-value analysis. Furthermore, our Boolean predicate abstraction with state splitting (Sect. 3.1.3) is encoded by PRED_SPLIT.

-

The integer variable MaxEnum corresponds to the maximal number of successors allowed to be enumerated (denoted by k) in our configurable explicit domain (Sect. 3.1.1). The value 0 represents \(k = \infty \), i.e., there is no limit on the number of successors. Furthermore, the value \(1^*\) enumerates at most one successor without using an SMT solver (corresponding to traditional explicit-value analysis [15]).

-

The variable PrecGranularity represents the granularity of the precision. When the granularity is LOCAL, a different precision can be assigned to each location, whereas GLOBAL granularity means that the precision is the same for each location.

-

The variable PredSplit defines the way complex predicates are split into smaller parts before introducing them in the refined precision (Sect. 3.1.3). Possible values are ATOMS, CONJUNCTS and WHOLE (no splitting).

-

The variable Refinement corresponds to the refinement strategy used. The values FW_BIN_ITP and SEQ_ITP represent traditional binary and sequence interpolation (Sect. 2.2.2). The value BW_BIN_ITP is our novel backward search-based binary interpolation strategy (Sect. 3.2.1), whereas MAX_PRUNE and MIN_PRUNE refer to combined refinements with maximal and minimal prune index (Sect. 3.2.3). The value MULTI_SEQ uses sequence interpolation and our approach of multiple counterexamples (Sect. 3.2.2).

-

The variable Search represents the search strategy in the abstract state space. Values BFS and DFS denote breadth and depth first search. Other values correspond to our error location-based strategy (Sect. 3.1.2) with different weights \(w_D\) and \(w_E\). The strategy ERR only takes into account the error location, i.e., \(w_D= 0\) and \(w_E= 1\). The values ERR_DFS and DFS_ERR use both weights but are biased towards one or the other (\(w_D= 2\), \(w_E= 1\) and \(w_D= 1\), \(w_E= 2\) respectively).

Metrics

-

The dependent variable Succ indicates whether the algorithm terminated and provided a correct result (no false negative/positive) successfully within the given CPU time and memory limits (effectiveness).

-

The variable Termination indicates the reason for termination (success, timeout, out-of-memory, exception) in a finer way than Succ. It is only used in the detailed plots of the supplementary report [42].

-

The variable Result denotes whether the model is safe or unsafe according to the algorithm. We check that the result matches the expected (if available) and that all configurations agree.

-

The variable TimeMs holds the execution time (CPU time) of the algorithm in milliseconds (efficiency).

-

The variable Memory measures the peak (maximal) memory consumption during the execution of the algorithm in bytes (efficiency).

4.1.4 Experiment Design

The experiment design is summarized in Table 3. It would be possible to execute each configuration on every model (crossover design) and then select the relevant subsets of data for each research question. However, due to the high number of parameters and their possible values, it would yield hundreds of configurations. Instead, for each research question we identify and manipulate one or two parameters that correspond to our new contributions. These parameters are called factors, for which each value (level) is executed on every model and the output is observed.

Based on our previous experience and the literature, the domain of the abstraction is a prominent parameter of CEGAR. Therefore, we also include it in the experiments as a blocking factor to systematically eliminate its undesired effect. RQ1 forms an exception, where only the explicit domain is applicable, therefore we use the search strategy for blocking.

The rest of the independent variables are kept at a fixed level that usually performed well in our previous experiments. These fixed levels however, can be different based on the type of the model, e.g., a local precision granularity is used for PLC models, while SV-COMP models perform better with global precision. Furthermore, certain parameters might not be applicable ( NA) to hardware models since they are represented as transition systems instead of CFA.

To illustrate our design with an example, in RQ1 we evaluate 6 levels for MaxEnum and 2 levels for Search, while keeping other parameters at a fixed level. This yields a total number of 12 configurations.

4.1.5 Measurement Procedure

Measurements were executed on physical machines with 4 core (2.50 GHz) Intel Xeon L5420 CPUs and 32 GB of RAM, running Ubuntu 18.04.1 LTS and Oracle JDK 1.8.0_191 (Theta is implemented in Java). We used Z3 version 4.5.0 [57] for SMT solving.Footnote 16 To ensure reliable and accurate measurements, we used the RunExec tool from the BenchExec suite [18], which is a state-of-the-art benchmarking framework (also used at SV-COMP). Each measurement was executed with CPU time limit of 300 sFootnote 17 and a memory limit of 4 GB. The results were collected into CSV files for further analysis. Each measurement was repeated 2 times. Instructions to reproduce our experiment can be found in the supplementary material [42].

4.1.6 Threats to Validity

In this subsection we discuss threats to construct, internal and external validity [64] of our experiment. We are not concerned with conclusion validity, as we do not use statistical tests [64].

Construct validity can be ensured by using proper metrics to describe the “goodness” of algorithms. We use the number of solved instances for effectiveness, and the total execution time and peak memory consumption for efficiency. These metrics are widely used to characterize model checking algorithms [9, 25, 50].

Internal validity is concerned with identifying the proper relationship between the treatments and the outcome. We use dedicated hardware machines and repeated executions to reduce noise from the environment. Accuracy of the results is ensured by BenchExec [18], a state-of-the-art benchmarking tool. We also apply blocking factors to eliminate undesired effects from known factors systematically. Nevertheless, internal validity could still be improved using a full, crossover design (executing all configurations on all models).

External validity is increased by using models from different and diverse sources, including standard benchmark suites (SV-COMP [9] and HWMCC [25]) and industrial models [40]. We compared our new contributions with various state-of-the-art algorithms implemented within the same framework. Furthermore, we also compare our implementation to other tools to provide a baseline (Sect. 4.3). However, external validity would benefit from using additional models (for example from other categories of SV-COMP) and from comparing related algorithms as well. Describing models with additional variables (e.g., size or complexity) besides their category would also further generalize our results.

4.2 Results and Analysis

We present the results and analyses for each research question in a separate subsection. Analyses were performed using the R software environment [58] version 3.4.3. We only present the most important results in the paper, but the raw data, the R script and a detailed report can be found in the supplementary material [42].

In each analysis, we first merge the repeated executions of the same measurement (same configuration on the same model) into a single data point in the following way. We consider a measurement successful if at least one of the repeated executions is successful. This is a reasonable choice as in most cases either all executions are successful or none of them are. Then, we calculate the execution time of a measurement by taking the mean time of its successful repetitions. The relative standard deviationFootnote 18 between the repeated executions was usually around \(1\%\) to \(2\%\), allowing us to represent them with their mean. In a few cases, the repeated executions terminated due to a different reason (e.g., timeout first, then out-of-memory). In these cases we counted the first reason during aggregation.

4.2.1 RQ1: Configurable Explicit Domain

Results In this question we analyze 6 different levels for MaxEnum with respect to 2 levels for the blocking factor Search. This algorithm is applicable only to the 535 CFA models, giving a total number of \((6 \cdot 2) \cdot 535 = 6420\) measurements, from which 3928 (\(61\%\)) are successful.

The heatmap in Fig. 9 presents an overview of the results. Configurations are described by the levels of Search and MaxEnum. Categories are given by their name and the number of models, and the rightmost column is a summary of all categories. Each cell represents the number of successful measurements in a given category, along with the total execution time and peak memory consumption for successful measurements (rounded to 3 significant digits [18]). The background color of the cell indicates the success rate of the configurations, i.e., the percentage of successful measurements. The last row is the virtual best configuration, i.e., taking the result of the best configuration for each model individually.

Discussion It can be seen that traditional explicit-value analysis, i.e., configurations BFS_01* and DFS_01* perform well for the SV-COMP categories ( locks, eca, ssh), but give poor performance on PLC models.

On the other end of the spectrum, configurations BFS_0 and DFS_0 enumerate all possible successors (\(k = \infty \)). This gives a poor success rate on certain SV-COMP categories, having integer variables with a theoretically infiniteFootnote 19 domain. Note, that these configurations can still solve certain problems as they represent non-deterministic variables with the top value initially and only start enumerating possible values as soon as they appear in some expression (and are tracked explicitly). These configurations are more suitable for PLC models than traditional explicit-value analysis, because PLCs usually contain many Boolean input variables and it is often feasible to enumerate all possibilities to increase precision.

The advantage of our configurable approach is demonstrated by the configurations having 5, 10 or 50 for MaxEnum. These configurations give a good performance overall and a remarkably better success rate on category plc compared to traditional explicit-value analysis. Moreover, with \(k >= 10\), configurations can solve a few more plc instances than with enumerating all possibilities. It can also be observed, that using an SMT solver for expressions that cannot be evaluated with simple heuristics ( 01) can improve success rate compared to not using a solver ( 01*) with 13 and 17 models for DFS and BFS respectively. Furthermore, it can be seen that BFS is consistently more effective than DFS for the same MaxEnum value. The overall best configuration in this analysis is BFS_50, but BFS_05 and BFS_10 closely follows.

An interesting further research direction would be to determine the optimal value for MaxEnum in advance, based on static properties of the input model or to adjust it dynamically during analysis.

Summary. Our configurable explicit domain can combine the advantages of traditional explicit-value analysis and explicit enumeration of successor states, giving a good performance overall in each category. Furthermore, although using an SMT solver requires more time, it increases precision and achieves a slightly higher success rate.

4.2.2 RQ2: Error Location-Based Search

Results In this question we analyze 5 different levels for Search with respect to 3 levels for the blocking factor Domain. This algorithm is applicable only to the 535 CFA models, giving a total number of \((5 \cdot 3) \cdot 535 = 8025\) measurements, from which 6242 (\(78\%\)) are successful. The heatmap in Fig. 10 presents an overview of the results. Configurations are described by the levels of Domain and Search.

Discussion The overall performance of configurations is similar, ranging from 416 to 447 successful measurements for \({ \textsf {PRED\_}}^{ \textsf {*}}\) and 357 to 389 for EXPL. However, there are some interesting patterns in certain categories. The blocking factor ( Domain) is dominant for the loops, ssh and plc categories: configurations with EXPL perform better for ssh and \({ \textsf {PRED\_}}^{ \textsf {*}}\) is more effective for loops and plc.

The success rates for different search strategies within the same domain is quite similar with a few notable examples. Our purely error location-based strategy ( ERR) yields a higher success rate in general compared to others. In contrast, our ERR_DFS combined strategy has a poor performance for eca models in the predicate domain. The supplementary report [42] includes separate plots for safe and unsafe benchmarks. This confirms that the advantage of ERR strategies is more prominent for unsafe models and they are similar to others for safe instances.

A possible future research direction is to experiment with different combinations and weights for the strategies, possibly based on domain knowledge about the input models.

Summary.Our error location-based search can yield improvement for certain models. However, our combined strategies that are efficient for artificial examples (Fig. 5) provide no remarkable improvement for real-world models.

4.2.3 RQ3: Splitting Predicates

Results In this question we analyze 3 different levels for PredSplit and 3 levels for Domain. The levels of PredSplit determine how complex predicates obtained during refinement are treated, whereas the levels of Domain correspond to the way abstract states are formed from these predicates. These algorithms are applicable to all 835 models, giving a total number of \((3 \cdot 3) \cdot 835 = 7515\) measurements, from which 4345 (\(58\%\)) are successful. The heatmap in Fig. 11 presents an overview of the results. Configurations are described by the levels of Domain and PredSplit.

Discussion It can be seen that the overall performance of the configurations mainly ranges from 468 to 500 successful measurements. An exception is the configuration PRED_CART_ATOMS having a remarkably poor performance (due to plc models). This can be attributed to the fact that if we split complex formulas to their atoms and use Cartesian abstraction, we will only be able to represent conjunctions of atoms, but no disjunctions. Similarly for hardware models, splitting to atoms only works well with Boolean abstraction.

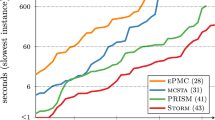

On the other hand, splitting into conjuncts is especially favorable with Cartesian abstraction, making PRED_CART_CONJUNCTS the most successful configuration. Although it can only solve 3 more models than the second best, the total execution time is much lower (7420 s compared to 11,800 s).