Abstract

Instance selection aims to search for the best patterns in the training set and main instance selection methods include condensation methods, edition methods and hybrid methods. Hybrid methods combine advantages of both edition methods and condensation methods. Nevertheless, most of existing hybrid approaches heavily rely on parameters and are relatively time-consuming, resulting in the performance instability and application difficulty. Though several relatively fast and (or) parameter-free hybrid methods are proposed, they still have the difficulty in achieving both high accuracy and high reduction. In order to solve these problems, we present a new parameter-free hybrid instance selection algorithm based on local sets with natural neighbors (LSNaNIS). A new parameter-free definition for the local set is first proposed based on the fast search for natural neighbors. The new local set can fast and reasonably describe local characteristics of data. In LSNaNIS, we use the new local set to design an edition method (LSEdit) to remove harmful samples, a border method (LSBorder) to retain representative border samples and a core method (LSCore) to condense internal samples. Comparison experiments show that LSNaNIS is relatively fast and outperforms existing hybrid methods in improving the k-nearest neighbor in terms of both accuracy and reduction.

Similar content being viewed by others

References

Chih-Fong T, Wei-Chao L, Hu Y-H, Guan-Ting Y (2019) Under-sampling class imbalanced datasets by combining clustering analysis and instance selection. Inf Sci 477:47–54

Pang X, Xu C, Xu Y (2018) Scaling KNN multi-class twin support vector machine via safe instance reduction. Knowl-Based Syst 148(15):17–30

Cano JR, Aljohani NR, Abbasi RA, Alowidbi JS, García S (2017) Prototype selection to improve monotonic nearest neighbor. Eng Appl Artif Intell 60:128–135

Schmidt K, Behrens T, Scholten T (2008) Instance selection and classification tree analysis for large spatial datasets in digital soil mapping. Geoderma 146(1–2):0–146

Aytuğ O (2015) A fuzzy-rough nearest neighbor classifier combined with consistency-based subset evaluation and instance selection for automated diagnosis of breast cancer. Expert Syst Appl 42(20):6844–6852

Hosseini S, Turhan B, Mäntylä M (2017) A benchmark study on the effectiveness of search-based data selection and feature selection for cross project defect prediction. Inf Softw Technol 95:296–312

Chen ZY, Lin WC, Ke SW, Tsai CF (2015) Evolutionary feature and instance selection for traffic sign recognition. Comput Ind 74:201–211

Kim Y, Enke D (2017) Instance selection using genetic algorithms for an intelligent Ensemble Trading System. Procedia Comput Sci 114:465–472

Hart P (1968) The condensed nearest neighbor rule. IEEE Trans Inf Theory 14(3):515–516

Wilson DL (1972) Asymptotic properties of nearest neighbor rules using edited data. IEEE Trans Syst Man Cybern 2(3):408–421

Chou CH, Kou BH, Fu C (2006) The generalized condensed nearest neighbor rule as a data reduction method. In: Proceedings of the 18th international conference on pattern recognition. IEEE Computer Society, pp 556-559

Dasarathy BV, Sanchez JS, Townsend S (2000) Nearest neighbour editing and condensing tools-synergy exploitation. Pattern Anal Applic 3(1):19–30

Ferri FJ, Albert JV, Vidal E (1999) Consideration about sample-size sensitivity of a family of edited nearest-neighbor rules. IEEE Trans Syst Man Cybern 29(4):667–672

Sánchez J, Barandela R, Marques A, Alejo R, Badenas J (2003) Analysis of new techniques to obtain quality training sets. Pattern Recogn Lett 24(7):1015–1022

Nikolaidis K, Goulermas JY, Wu QH (2011) A class boundary preserving algorithm for data condensation. Pattern Recogn 44(3):704–715

Nikolaidis K, Eduardo RM, John YG (2012) Spectral graph optimization for instance reduction. IEEE Trans Neural Netw Learn Syst 23(7):1169–1175

Cavalcanti GDC, Ren TI, Pereira CL (2013) ATISA: adaptive threshold-based instance selection algorithm. Expert Syst Appl 40(17):6894–6900

Vallejo CG, Troyano JA, Ortega FJ (2010) InstanceRank: bringing order to datasets. Pattern Recogn Lett 31(2):131–142

Hernandezleal P, Carrascoochoa JA, MartínezTrinidad JF, Olveralopez JA (2013) Instancerank based on borders for instance selection. Pattern Recogn 46(1):365–375

Hamidzadeh J, Monsefi R, Yazdi HS (2015) Irahc: instance reduction algorithm using hyperrectangle clustering. Pattern Recogn 48(5):1878–1889

Leyva E, Antonio G, Raúl P (2015) Three new instance selection methods based on local sets: a comparative study with several approaches from a bi-objective perspective. Pattern Recogn 48(4):1523–1537

Li J, Wang Y (2015) A new fast reduction technique based on binary nearest neighbor tree. Neurocomputing 149(3):1647–1657

Yang L, Zhu Q, Huang J, Cheng D (2017) Adaptive edited natural neighbor algorithm. Neurocomputing 230(22):427–433

Yang L, Zhu Q, Huang J, Cheng D, Wu Q, Hong X (2018) Natural neighborhood graph-based instance reduction algorithm without parameters. Appl Soft Comput 70:279–287

Yang L, Zhu Q, Huang J, Cheng D, Wu Q, Hong X (2019) Constraint nearest neighbor for instance reduction. Soft Comput 23:13235–13245

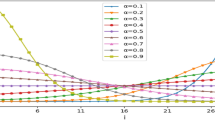

Zhu Q, Feng J, Huang J (2016) Natural neighbor: a self-adaptive neighborhood method without parameter k. Pattern Recogn Lett 80(1):30–36

Cover TM, Hart PE (1967) Nearest neighbor pattern classification. IEEE Trans Inform Theory 13(1):21–27

Brighton H, Mellish C (2002) Advances in instance selection for instance-based learning algorithms. Data Min Knowl Disc 6(2):153–172

Fayed HA, Atiya AF (2009) A novel template reduction approach for the K-nearest neighbor method. IEEE Trans Neural Netw 20(5):890–896

Marchiori E (2008) Hit miss networks with applications to instance selection. J Mach Learn Res 9:997–1017

Marchiori E (2009) Graph-based Discrete Differential Geometry for Critical Instance Filtering. European Conference on Machine Learning & Knowledge Discovery in Databases, pp 63–78

Marchiori E (2010) Class conditional nearest neighbor for large margin instance selection. IEEE Trans Pattern Anal Mach Intell 32(2):364–370

Rico-Juan JR, Iñesta JM (2012) New rank methods for reducing the size of the training set using the nearest neighbor rule. Pattern Recogn Lett 33(5):654–660

Cheng D, Zhu Q, Huang J, Yang L, Wu Q (2017) Natural neighbor-based clustering algorithm with local representatives. Knowl-Based Syst 123(1):238–253

Cheng D, Zhu Q, Huang J, Wu Q, Yang L (2018) A local cores-based hierarchical clustering algorithm for data sets with complex structures. Neural Computing & Applications, pp 1-18

Huang J, Zhu Q, Yang L, Feng J (2016) A non-parameter outlier detection algorithm based on natural neighbor. Knowl-Based Syst 92(15):71–77

Li J, Zhu Q, Wu Q (2019) A self-training method based on density peaks and an extended parameter-free local noise filter for k nearest neighbor. Knowl-Based Syst

Caises Y, González A, Leyva E, Pérez R (2011) Combining instance selection methods based on data characterization: an approach to increase their effectiveness. Inf Sci 181(20):4780–4798

Álvar A-G, Díez-Pastor J, Rodríguez JJ, García-Osorio C (2018) Local sets for multi-label instance selection. Appl Soft Comput 68:651–666

Xie J, Zhong-Yang X, Yu-Fang Z, Yong F, Ma J (2018) Density core-based clustering algorithm with dynamic scanning radius. Knowl-Based Syst 142(15):58–70

Bentley JL (1975) Multidimensional binary search trees used for associative searching. Commun ACM 18(9):509–517

Wei W, Liang J, Guo X, Peng S, Yijun S (2019) Hierarchical division clustering framework for categorical data. Neurocomputing 341(14):118–134

Wang G, Yiheng W, Peter T (2018) Clustering by defining and merging candidates of cluster centers via independence and affinity. Neurocomputing 315(13):486–495

Cheng Y, Dawei Z, Wenfa Z, Wang Y (2018) Multi-label learning of non-equilibrium labels completion with mean shift. Neurocomputing 321(10):92–102

Li J, Zhu Q (2019) Semi-supervised self-training method based on an optimum-path forest. IEEE Access 7:36388–36399

Acknowledgements

This work was supported by the National Natural Science Foundation of China (61802360) and Chongqing science and technology project (KJZH17104 and CSTC2017rgun-zdyfx0040).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Li, J., Zhu, Q. & Wu, Q. A parameter-free hybrid instance selection algorithm based on local sets with natural neighbors. Appl Intell 50, 1527–1541 (2020). https://doi.org/10.1007/s10489-019-01598-y

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-019-01598-y