Abstract

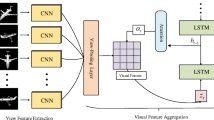

With the development of 3D model reconstruction, manufacturing, and 3D vision technologies, 3D model recognition has recently attracted much attention. To address the 3D model recognition problem, in this paper we propose a panorama-based multi-channel-attention (MCA) CNN for 3D model representation. The proposed method consists of three parts: view extraction, transform-function learning, and 3D model descriptor generation. Concretely, we first extract 2D panoramic views of each 3D model, and then use the multi-channel-attention neural network to extract a descriptor for each 3D model. The attention model assigns unequal weights to the panoramic views so as to generate a more robust 3D model descriptor. Finally, the fused feature is used for 3D model classification and retrieval. Experiments on the popular ModelNet and ShapeNet datasets demonstrate the performance of our approach and its superiority over state-of-the-art methods.
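The attention-weighted fusion of panoramic views can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' MCA architecture: it assumes each panoramic view has already been encoded into a fixed-length feature vector by a CNN (not shown), and it scores the views with a single hypothetical learned vector `query` followed by a softmax, which is one common way to obtain unequal per-view weights. The function names `attention_fuse` and `retrieve` are hypothetical.

```python
import math
from typing import List, Sequence

Vector = List[float]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: Sequence[float]) -> Vector:
    # Subtract the max score for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_fuse(view_features: Sequence[Sequence[float]],
                   query: Sequence[float]) -> Vector:
    """Fuse per-view features into one descriptor with softmax attention.

    view_features: one feature vector per panoramic view (assumed CNN outputs).
    query: hypothetical learned attention vector; in a trained model it would be
    optimized jointly with the CNN, here it is simply passed in.
    """
    scores = [dot(f, query) for f in view_features]
    weights = softmax(scores)  # unequal, non-negative weights summing to 1
    dim = len(view_features[0])
    fused = [0.0] * dim
    for w, f in zip(weights, view_features):
        for k in range(dim):
            fused[k] += w * f[k]
    # L2-normalize so descriptors can be compared by cosine similarity.
    norm = math.sqrt(dot(fused, fused)) or 1.0
    return [x / norm for x in fused]


def retrieve(query_desc: Sequence[float],
             gallery: Sequence[Sequence[float]]) -> List[int]:
    """Rank L2-normalized gallery descriptors by cosine similarity (best first)."""
    sims = [(i, dot(query_desc, g)) for i, g in enumerate(gallery)]
    return [i for i, _ in sorted(sims, key=lambda t: t[1], reverse=True)]


# Usage: three toy view features; the query favours the first feature dimension,
# so views aligned with it receive larger attention weights.
views = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
desc = attention_fuse(views, [2.0, 0.0])
ranking = retrieve(desc, [attention_fuse([[0.0, 1.0]], [2.0, 0.0]), desc])
```

With a zero `query`, every view gets the same weight and the fusion reduces to plain averaging; a learned `query` is what lets informative views dominate. Cosine ranking in `retrieve` is one plausible use of the fused descriptor for retrieval, while classification would instead pass it to a classifier head.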

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China (61502337, 61872267).

Additional information

Communicated by X. He, H. Zhang, Z. Liu, C. Ngo, S. Karaman, Y. Zhang.

About this article

Cite this article

Nie, W., Wang, K., Liang, Q. et al. Panorama based on multi-channel-attention CNN for 3D model recognition. Multimedia Systems 25, 655–662 (2019). https://doi.org/10.1007/s00530-018-0600-2

DOI: https://doi.org/10.1007/s00530-018-0600-2