Abstract

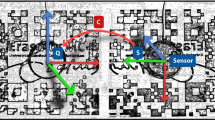

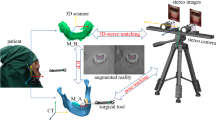

In routine tumor resections, surgeons rely on local examination of the removed tissue and on the pathologist’s rapid microscopy findings, which are based on tissue samples taken intraoperatively. This approach can prolong the operation, increase the workload of the medical staff, and extend the occupancy of the operating room (OR). Mixed reality technologies, and augmented reality in particular, have already been applied in surgical scenarios with positive initial outcomes. Nonetheless, these methods have relied on manual or marker-based registration. In this work, we design an application for the marker-less registration of a patient’s PET-CT information. The algorithm combines facial landmarks extracted from an RGB video stream with the Spatial Mapping API provided by the Microsoft HoloLens head-mounted display (HMD). The accuracy of the system is compared with a marker-based approach, and the opinions of field specialists were collected during a demonstration, using a survey based on the standard ISO-9241/110. The measurements show an average positioning error along the three axes of (x, y, z) = (3.3 ± 2.3, −4.5 ± 2.9, −9.3 ± 6.1) mm. Compared with the marker-based approach, this is an increase in positioning error of approx. 3 mm along two dimensions (x, y), which might be due to the absence of explicit markers. The specialists evaluated the application positively; they showed interest in further work and contributed constructive criticism to the development process.
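The per-axis error figures above are reported as mean ± standard deviation. As a minimal sketch of how such a summary could be computed from paired positions, consider the following Python snippet. The function name `per_axis_error`, the use of signed differences, the choice of the sample standard deviation, and all coordinate values are assumptions made for illustration; they are not taken from the study.

```python
from statistics import mean, stdev


def per_axis_error(measured, reference):
    """Return [(mean, std), ...] of the signed error (measured - reference) per axis.

    Both arguments are sequences of (x, y, z) tuples in millimetres.
    The sample standard deviation is used here; this is an assumption.
    """
    if len(measured) != len(reference) or len(measured) < 2:
        raise ValueError("need at least two paired measurements")
    # Signed difference for every paired point, axis by axis.
    diffs = [
        tuple(m - r for m, r in zip(m_point, r_point))
        for m_point, r_point in zip(measured, reference)
    ]
    # Transpose so that each entry holds all errors along one axis.
    return [(mean(axis), stdev(axis)) for axis in zip(*diffs)]


# Made-up example positions (mm), purely illustrative.
reference = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 10.0, 0.0)]
measured = [(1.0, -2.0, -4.0), (13.0, -4.0, -8.0), (5.0, 4.0, -12.0)]

stats = per_axis_error(measured, reference)
for name, (mu, sigma) in zip("xyz", stats):
    print(f"{name}: {mu:.1f} ± {sigma:.1f} mm")
```

Keeping the sign of each difference, rather than taking absolute values, preserves the direction of a systematic offset, which is what negative means along y and z would indicate.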

Acknowledgments

The authors would like to thank the Kalkofen team at the Institute of Computer Graphics and Vision, TU Graz, for their support.

Funding

This work received funding from the Austrian Science Fund (FWF) KLI 678-B31: “enFaced: Virtual and Augmented Reality Training and Navigation Module for 3D-Printed Facial Defect Reconstructions” (Principal Investigators: Drs. Jürgen Wallner and Jan Egger), the TU Graz Lead Project (Mechanics, Modeling and Simulation of Aortic Dissection), and CAMed (COMET K-Project 871132), which is funded by the Austrian Federal Ministry of Transport, Innovation and Technology (BMVIT) and the Austrian Federal Ministry for Digital and Economic Affairs (BMDW) and the Styrian Business Promotion Agency (SFG).

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Antonio Pepe and Gianpaolo Francesco Trotta are joint first authors.

About this article

Cite this article

Pepe, A., Trotta, G.F., Mohr-Ziak, P. et al. A Marker-Less Registration Approach for Mixed Reality–Aided Maxillofacial Surgery: a Pilot Evaluation. J Digit Imaging 32, 1008–1018 (2019). https://doi.org/10.1007/s10278-019-00272-6

DOI: https://doi.org/10.1007/s10278-019-00272-6