Abstract

Consider an unweighted, directed graph G with the diameter D. In this paper, we introduce the framework for counting cycles and walks of given length in matrix multiplication time \(\widetilde {O}(n^{\omega })\). The framework is based on the fast decomposition into Frobenius normal form and the Hankel matrix-vector multiplication. It allows us to solve the All-Nodes Shortest Cycles, All-Pairs All Walks problems efficiently and also give some improvement upon distance queries in unweighted graphs.

Similar content being viewed by others

1 Introduction

The All-Pairs Shortest Paths (APSP) problem asks to find distances between all pairs of vertices in a graph. For a directed graphs with weights in \(\mathbb {R}\), there is a classical O(n3) time algorithm [11, 29]. Currently best upper bound for this problem is due to [30] who showed an \(O\left (\frac {n^{3}}{2^{{\Omega }(\log n)^{0.5}}}\right )\) algorithm. It is asymptotically faster than O(n3/logcn) for any c > 0 (see survey [6] for earlier algorithms). Showing any algorithm that would work in O(n3−𝜖) time for some 𝜖 > 0 is a major open problem [30].

If we consider unweighted, directed graphs there are subcubic algorithms that exploit fast matrix multiplication. For the undirected graph [24] presented the optimal \(\widetilde {O}(n^{\omega })\) time algorithm, where ω < 2.373 is the matrix multiplication exponent [16]. For the directed case [35] presented an O(n2.575) time algorithm that is based on the fast rectangular matrix multiplication. Moreover, if we are interested in small integer weights from the set {−M,…,M} we have O(M0.68n2.575) algorithm [35].

Because APSP in undirected graphs can be solved in \(\widetilde {O}(n^{\omega })\), diameter, radius, shortest cycle, etc. can be determined in \(\widetilde {O}(n^{\omega })\) time as well. It is surprising that for a directed case, where merely O(n2.575) APSP is known there are also \(\widetilde {O}(n^{\omega })\) algorithms for determining these properties. After a long line of improvements [9] showed an \(\widetilde {O}(M n^{\omega })\) time algorithms for finding minimum weight perfect matching, shortest cycle, diameter and radius (some of these results were already known [21]). Also, [9] showed an application of their techniques that improves upon [31] \(\widetilde {O}(M n^{\omega } t)\) time algorithm for the following problem: determine the set of vertices that lie on some cycle of length at mostt. Cygan et al. [9] managed to solve this problem in \(\widetilde {O}(M n^{\omega })\) time using Baur-Strassen’s theorem.

All of these algorithm are effective merely in the case of a dense graphs. For graphs with the small number of edges there are more efficient algorithms (e.g., APSP in \(\widetilde {O}(|V||E|)\) time [27]). But these algorithms are Θ(n3) when |E| = Θ(n2).

1.1 Related Work

1.1.1 Distance Queries

Yuster and Zwick [33] considered the weighted, directed graphs with weights in {−M,…,M}. They showed an algorithm that needs \(\widetilde {O}(M n^{\omega })\) preprocessing time. After preprocessing each distance δ(u, v) in the graph can be computed exactly in O(n) query time. In the special case M = 1 they showed \(\widetilde {O}(n^{\omega })\) algorithm that solves Single Source Shortest Paths (SSSP). This is the best known algorithm for a dense, weighted graph.

We will match their bounds (up to the polylogarithmic factors) using Frobenius normal form. Next we will extend that approach so it will return more information about a graph in the same query/preprocessing time.

1.1.2 Counting Cycles

For a given graph G and k determining whether G contains a simple cycle of length exactly k is NP-hard (in particular determining whether a graph contains a Hamiltonian cycle is NP-complete). However, if we fix k to be a constant this problem can be solved in polynomial time.

Alon et al. [4] introduced a color coding technique. For a fixed k if a graph G(V, E) contains a simple cycle of size exactly k then such a cycle can be found in \(\widetilde {O}(|V|^{\omega })\) time. Unfortunately, their algorithm depends exponentially 2O(k) on the length of the cycle and in consequence is inapplicable for large k. In the next years, [5] showed (using a different technique) that for k ≤ 7 one can count the number of cycles of length exactly k in a graph in \(\widetilde {O}(|V|^{\omega })\) time. In [32] it is shown that for any even k, cycles of length k can be found in O(|V |2) time in undirected graphs (if they contain such a cycle). Alon et al. [5] showed more methods that depend solely on a number of edges in a graph. For example for odd k they showed \(O\left (E^{2-\frac {2}{k + 1}}\right )\) algorithm for finding cycles of length k. However, for dense graphs these results are worse than [4].

From the other hand, to detect whether a non-simple cycle of length exactly k exists one can use the folklore algorithm. It starts by taking the adjacency matrix A of a graph G. Subsequently, in \(\widetilde {O}(n^{\omega })\) time compute Ak by repeated squaring. If Tr [Ak] > 0 then there exists a non-simple cycle of length k.Footnote 1

Yuster [31] considered the following problem: for every vertex in a graph find a shortest cycle that contains it. He called this problem All-Nodes Shortest Cycle (ANSC). He showed a randomized algorithm that solves ANSC for undirected graphs with weights {1,…,M} in \(\widetilde {O}(\sqrt {M} n^{(\omega + 3)/2})\) time. He noted that for simple digraphs (directed graphs with no anti-parallel edges) it reduces to All-Pairs Shortest Path problem. The fastest known APSP algorithm for unweighted, directed graphs runs in O(n2.575) due to [35]. Here, we will show how to solve ANSC in \(\widetilde {O}(n^{\omega })\) for general, unweighted, directed graphs. Unfortunately, our techniques will allow us merely to find the length of such a cycle. But we can return the set of points, that lie on some cycle of a given length. Independently to our work [3] proved that ANSC can be solved in \(\widetilde {O}(n^{\omega })\) for unweighted, undirected graphs using a completely different technique.

Yuster [31] also considered following problem: given a graph and an integert. LetS(k) denote the set of all vertices lying in a cycle of length≤ k. DetermineS(t). He considered directed graphs with weights in {−M,…,M} and showed \(\widetilde {O}(M n^{\omega } t)\) algorithm. Recently, [9] improved his algorithm. They showed that for a fixed t ∈ [0,nM] the set S(t) can be computed in \(\widetilde {O}(M n^{\omega })\) randomized time. We show, that for unweighted (M = 1) directed graphs we can compute sets S(1),S(2),…,S(D) in \(\widetilde {O}(n^{\omega })\) time with high probability.

2 Preliminaries

Let T(n) be the minimal number of algebraic operations needed to compute the product of n × n matrix by an n × n matrix. We say that ω is the exponent of square matrix multiplication. For now the best known upper bound on ω is due to [16]:

Theorem 1

[16] For every𝜖 > 0,T(n) < O(nω + 𝜖) whereω < 2.37287.

In this paper, we will omit 𝜖 in definition and will assume that O(nω) operations are needed to multiply two matrices. The best lower bound for the exponent of matrix multiplication is ω ≥ 2. For convenience in this paper we will assume that ω > 2. The \(\widetilde {O}\) notation hides polylogarithmic factors in complexity. We will use it to emphasize that all our algorithms need polylogarithmic number of calls to the fast matrix multiplication algorithm.

In this paper we will consider the Word RAM model of computation with a word size O(log n). The \(\mathbb {F}\) denotes a small finite field and often we will assume this field to be \(\mathbb {Z}_{p}\) for a prime number p with O(log n) bits. Note, that in Word RAM model arithmetic operations in \(\mathbb {Z}_{p}\) can be done in constant time. In some situations we will need to stress that we want to work on larger integers. We will use W to denote the upper bound on the largest number in the field. In this case the arithmetic operations in Word RAM model take O(log W) time.

For matrices \(A \in \mathbb {F}^{n\times k}\) and \(B \in \mathbb {F}^{n \times l}\) the \(A \oplus B \in \mathbb {F}^{n \times (k+l)}\) is the concatenation of their columns. \(C_{a,b} \in \mathbb {F}^{n\times (b-a)}\) denotes a matrix constructed by concatenating columns ca,ca+ 1,…,cb of matrix \(C \in \mathbb {F}^{n \times m}\).

In this paper we will consider the unweighted, directed graph G unless stated otherwise. We will denote by V (G) and E(G) the sets of vertices and edges of graph G. The adjacency matrix A(G) ∈{0,1}n×n of a directed graph G with n vertices is defined as:

3 Introduction to Cyclic Subspaces and Connection to Frobenius Matrices

We will start with a motivational example of application of cyclic subspaces. We have a constant-recursive sequence

of order r, where all ci are constants and initial conditions a0,…,ar− 1 are given. Perhaps, the most familiar example is the Fibonacci sequence Fn = Fn− 1 + Fn− 2.

We can define the companion matrix of our general sequence as:

The crucial property of the matrix C is that we can generate the next element of sequence with multiplication by C:

For example, for Fibonacci sequence we have:

Now, if we want the an+ 1 element of the series we can square the companion matrix and get:

And analogously to get the an + k− 1 element we need to compute the k-th power of the matrix C. In linear algebra such transformations are known as the cyclic subspaces generated by the vector a. For our purposes, we will restrict ourself to a conclusion, that some columns of a companion matrix occur in its powers. These properties are well known in the linear algebra theory (see [10, 13] for more cyclic properties).

3.1 Consequences of Frobenius Normal Form

Let \(\mathbb {F}\) be a commutative field. For any matrix \(A \in \mathbb {F}^{n\times n}\) there exists an invertible U over \(\mathbb {F}\) such that:

and F is the Frobenius-canonical-formFootnote 2 of A. The diagonal block Ci is called the companion matrix:

Each companion matrix corresponds to the monic polynomial \(C_{i}(x) = x^{r} + c_{r-1}x^{r-1} + {\ldots } + c_{0} \in \mathbb {F}\left [x\right ]\) (similarly to the sequence example) and this polynomial is called the minimal polynomial of A. Each minimal polynomial has a property that Ci(A) = 0. To guarantee that matrix has only one decomposition into Frobenius normal form we require that every polynomial must divide the next one, i.e., Ci(x)|Ci+ 1(x). The final list of polynomials is called the invariant factors of matrix A [25]. Storjohann [25] proposed the deterministic algorithm to compute the Frobenius canonical-form efficiently.

Theorem 2

[25] The Frobenius canonical-form of a matrix can be computeddeterministically using\(\widetilde {O}(n^{\omega })\)fieldoperations.

Moreover, there are also probabilistic algorithms that compute this form in expected \(\widetilde {O}(n^{\omega })\) time over small fields [10]. In this paper, all algorithms are deterministic if we the upper bound on the number of distinct walks is W. Then, due to the time of a single field operation we need additional O(log W) factor in the complexity. However, since we are mainly interested in determining if a cycle/walk of a given length exists in a graph, we can set a sufficiently small field \(\mathbb {Z}_{p}\) (p has O(log n) bits). This way when algorithm returns nonzero we are sure that there exists some walk. If algorithm returns zero, then with high probability there will be no such walk.

3.2 Cyclic Subspaces

Frobenius decomposition can be used to get the desired power of a matrix (analogously to the diagonal decomposition):

Moreover, we will use the property that the power of block diagonal matrix F is block diagonal:

Now, we need a property of companion matrices that will enable us to power them efficiently (Fig. 1).

Definition 1 (Cyclic Property)

Let v1,…,vn be the columns of a matrix \(C \in \mathbb {F}^{n\times n}\). Let vn+ 1,…,v2n be the columns of matrix Cn+ 1. If, for every 1 ≤ k ≤ n the columns of matrix Ck are vk,vk+ 1,…,vk + n then the C has a cyclic property.

It turns out, that companion matrices have a cyclic property.

Theorem 3 (Folklore 13, see [17] for generalization)

Every companion matrix has a cyclic property.

We will illustrate this property with an example:

The matrix Ci has 4 columns identical to matrix Ci+ 1. C has coefficients of order equal to dimension (dimension is 5 and maximum coefficient is 5). After powering to the 5th power, the coefficients can be of order 55. Over a finite field \(\mathbb {Z}_{p}\), all those coefficients will have O(log p) bits.

4 Matching Distance Queries on Directed Unweighted Graphs

In this section, we will present an algorithm that matches the best known upper bounds of [33] for distance queries in directed unweighted graphs and uses Frobenius matrices.

We take the adjacency matrix A of a graph G (i.e., n × n matrix with au, v = 1 when (u, v) ∈ G and 0 otherwise). The k-th power of the adjacency matrix of the graph G holds the number of walks, i.e., an au, v element of Ak is the count of distinct walks fromutovof lengthkin the graph.

Observation 1 (Folklore, [8])

Let A ∈{0,1}n×n be the adjacency matrix of a directed graph G. The (Ak)u, v is the number of distinct walks from u to v of length exactly k in the graph G.

Hence, the shortest path between vertices u, v is the smallest k such that Ak has nonzero element au, v. This will allow us to forget about graph theory interpretation for a brief moment and focus only on finding such k with algebraic tools.

In this section we will proof the following Lemma.

Lemma 1

Given a matrix\(A \in \mathbb {F}^{n \times n}\).There exists an algorithm that after some preprocessing, can answer queries for any givenpair of indicesi, j ∈{1,…,n} and integerk ∈{1,…,n}, such that:

-

query returns an element (Ak)i, j,

-

preprocessing takes\(\widetilde {O}(n^{\omega })\)fieldoperations and query takesO(n) field operations.

The algorithm is deterministic.

To proof this Lemma we decompose matrix A into the Frobenius normal form. Storjohann [25] showed an algorithm that returns U and F deterministically in \(\widetilde {O}(n^{\omega })\) field operations (note that matrix inverse can also be computed in \(\widetilde {O}(n^{\omega })\) field operations).

To better explain the idea, in the next section we will consider a simple case when a number of invariant factors of A is exactly 1. Then in Section 4.2 we will show how to generalize it to multiple invariant factors.

4.1 Single Invariant Factor

In that situation, the matrix F is a companion matrix \(C \in \mathbb {F}^{n\times n}\). First, we compute the (n + 1)-th power of the companion matrix Fn+ 1. This can be done by using \(\widetilde {O}(n^{\omega })\) field operations by repeated squaring (compute F, F2,F4,…,Fn+ 1 with O(log n) matrix multiplications).

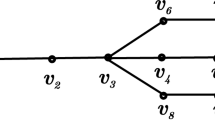

Let v1,…,vn be the columns of matrix UF and vn+ 1,…,v2n be the columns of matrix UFn+ 1. Note, that because matrix F has a cyclic property, the columns vk,…,vk + n− 1 construct UFk (see Fig. 2).

This step took just two matrix multiplications, because we need to multiply U times F and Fn+ 1. The preprocessing phase takes only \(\widetilde {O}(n^{\omega })\) field operations.

Now, if a query asks for the number of distinct walks from vertices u to v of length exactly k we:

-

select u-th row of matrix UFk (n numbers),

-

select v-th column of matrix U− 1,

-

multiply them in by using O(n) multiplications (dot product of two n-dimensional vectors).

This will give us the u, v element of matrix UFkU− 1 = Ak. To get the length of the shortest path (i.e., the minimal k such that (Ak)u, v > 0), we will modify our matrices slightly to get the number of walks of length ≤ k. At the end, we will use in \(\widetilde {O}(n)\) query tim (by using binary search) and \(\widetilde {O}(n^{\omega })\) preprocessing time.

Basically, for a given k we need to get the u, v element of matrix A + A2 + ⋯ + Ak. It suffices to add consecutive columns of matrix UF ⊕ UFn+ 1 = v1 ⊕ v2 ⊕… ⊕ v2n in the following manner:Footnote 3

Now, to get A + A2 + ⋯ + Ak one would need to multiply \(M^{\prime }_{k,k+n-1} U^{-1}\) and subtract \(M^{\prime }_{1,n} U^{-1}\) for a balance.Footnote 4

The naive algorithm can transform matrices U and F to matrix M′ in O(n2) field operations during preprocessing. During query, we will need to compute two dot products (u-th row of \(M^{\prime }_{k,k+n-1}\) times v-th column of U− 1 and u-th row of \(M^{\prime }_{1,n}\) times v-th column of U− 1) and subtract them.

We have an algorithm that for a given vertices u, v ∈ G and integer k ∈{1,…,n} can answer: how many walks fromutovof length less or equalkare in the graphG in \(\widetilde {O}(n)\) query time and \(\widetilde {O}(n^{\omega })\) preprocessing time.

Because the result of the query is increasing in k we can use binary search. We can determine the first k for which the query will answer nonzero value in O(log n) tries. Hence, in \(\widetilde {O}(n)\) we can find the length of the shortest path. This generalized query can also return the number of walks of length exactly k, i.e., q(u, v, k) − q(u, v, k − 1).

We matched the result of [33] for unweighted graphs with a single invariant factor. In the next section, we will show how to generalize this technique for graphs with any number of invariant factors.

4.2 Multiple Invariant Factors

Now, we will consider a case when k ≥ 1, i.e., matrix F has multiple invariant factors. First of all, we need to note that this generalization is not perfect and will allow to compute the number of walks of length up to D (the longest distance in a graph, i.e., diameter).

In a real world applications of our framework (detecting cycles, determining distance between nodes, etc.) one does not need to consider walks longer than the longest possible distance in a graph. It is natural that the diameter is considered to be a bound of an output in graph problems [1, 2, 7, 9].

4.2.1 Relation of the Graph Diameter and Frobenius Normal Form

We begin with relating the graph diameter to the Frobenius normal form. It turns out that the graph diameter is bounded by the degree of a smallest invariant factor.

Lemma 2

[8] Given a directed, unweighted graph G with a diameter D. Letμdenote the degree of the smallest invariant factor (i.e.,the dimension of the smallest block in the Frobeniusmatrix F) of an adjacency matrix of the graph G. ThenD ≤ μ.

This theorem is well known in literature [8]. We include the proof of this theorem for completeness.

Proof

For a contradiction assume that D > μ and let u, v ∈ G be vertices such that δ(u, v) = D. Let A be the adjacency matrix of G. We know, that there is the minimal polynomial of degree μ:

Term \(a^{k}_{i,j}\) denotes the i, j element of the matrix Ak. Now, consider the elements u, v of each matrix. The diameter D > μ and δ(u, v) = D, so for every k ≤ μ the elements \(a^{k}_{u,v} = 0\) (because there is no walk of length less than D from u to v). Now, if we multiply the minimal polynomial by the matrix A we get:

Hence \(a^{\mu + 1}_{u,v} = 0\), because every element in the sequence \(a^{k}_{u,v} = 0\) for k ≤ μ. By repeating this reasoning, we get that for every k > 0 the element \(a^{k}_{u,v} = 0\). So, for every achievable pair of vertices, there must be some k ≤ μ, such that \(a^{k}_{u,v} \ne 0\) and diameter is bounded by μ. □

The bounds of this inequality are tight. There are graphs with diameter D = μ and graphs with μ = n and arbitrary small diameter [8]. Our algorithms are able to return walks up to the length μ. We use the bound on D solely because it is easier to interpret diameter than the smallest degree of the invariant factor.

4.2.2 Generalization to Multiple Invariant Factors

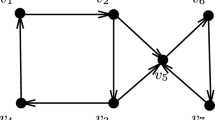

Let k denote the number of blocks in the Frobenius matrix F and μ be the number of columns of the smallest block. To multiply the matrix U by F we can start by multiplying strips of matrix U by appropriate blocks of F and concatenate them later (see Fig. 3).

Multiplication of the UFU− 1. Example for 3 invariant factors. We know that matrix F is block and consists of F1,F2,F3. We divide matrix U into strips U1,U2,U3 that corespond to blocks of F. The observation is that we can compute U1 ⋅ F1, U2 ⋅ F2 and U3 ⋅ F3 independently and concatenate them into matrix UF

We start by splitting the matrix U into k strips with rows corresponding to the appropriate blocks of F (strip Ui has as many columns as block Fi). Then we multiply UF and have k strips: U1F1,U2F2,…UkFk (each with at least μ columns). Next, we multiply UFμ and also keep k strips: \(U_{1} F_{1}^{\mu }, U_{2} F_{2}^{\mu },\ldots ,U_{k} F_{k}^{\mu }\). Our goal is to get a data structure such that if we need UFk, we can quickly choose appropriate columns and append them.

The matrix UiFi has li columns: \(v_{1},\ldots , v_{l_{i}}\). Because Fi is a companion matrix, the \(U_{i} F_{i}^{\mu }\) has the cyclic property (Definition 1). And the matrix \(U_{i} F_{i}^{\mu }\) has columns: \(v_{\mu },\ldots , v_{\mu +l_{i}}\). Note, that there are some duplicate columns in UiFi and \(U_{i} F_{i}^{\mu }\), when μ < li. Hence, we only need to keep columns \(v_{1},\ldots ,v_{\mu +l_{i}}\) for this strip. We do this for all strips U1F1,…UkFk (see Fig. 4).

Combining strips into a single matrix. The height of the matrix in the schema is scalled down. We computed U1F1 and \(U_{1} F_{1}^{\mu }\). Now we noted that companion matrices have a cyclic property so some of the rows in the strips are repeated. So in the single strip we can store only subsequent columns

We are left with a matrix that has at most 2n columns (because \(l_{1} + \mu + l_{2} + \mu + {\ldots } l_{k} + \mu = k \mu + {\sum }^{n}_{i = 1} l_{i} = n + k\mu \le 2n\)). To generate it we need to power F to μ and do multiplications U ⋅ F and U ⋅ Fμ. This can be computed in \(\widetilde {O}(n^{\omega })\) field operations via fast matrix multiplication and repeated squaring.

4.2.3 Queries with Multiple Invariant Factors

When a query for the number of walks of length k from node u to v comes, we do:

-

1.

For each strip i take the u-th row of \(U_{i} F_{i} \oplus U_{i} F_{i}^{\mu }\) and concatenate them (see Fig. 5) into vector \(\bar {u}\),

-

2.

Take v-th column of U− 1 matrix and denote it \(\bar {v}\),

-

3.

Return the dot product \(\bar {u} \cdot \bar {v}\).

Because l1 + l2 + … + lk = n the vector \(\bar {u} \in \mathbb {F}^{n}\). Query needs O(n) field operations.

Finally, this dot product is au, v element of the matrix UFkU− 1, for a fixed k ≤ μ because \(\bar {u}\) is the concatenation of original vector u. Analogously to Section 4.1 one can extend this result to return the number of walks of length less or equal k. This matches (up to the polylogarithmic factor) the result of [33]. We will omit the details of this observation because in the next section we will extend this framework even further.

5 Almost Optimal Query

In the previous section, we showed how to preprocess a matrix A with \(\widetilde {O}(n^{\omega })\) field operations in such a way that in query that uses O(n) field operations we can return a number (Ak)i, j. However, in linear time O(n) we return only a single number. The goal of this section is to get far richer information in \(\widetilde {O}(n)\) query time (with extra factors from field operations).

Theorem 4

LetA ∈{0,1}n×nbe a matrix such that the degree of smallest invariant factor isμ. Thereexists a deterministic algorithm that after some preprocessing can answer queries for anygiveni, j ∈{1,…,n}:

-

Query returns {ak|1 ≤ k ≤ μ},whereak = (Ak)i, j ,

-

Preprocessing takes \(\widetilde {O}(n^{\omega }\log {W})\) and query takes \(\widetilde {O}(n\log {W})\) time,

where W is an upper bound onakfor allk ∈{1,…,μ}.

Note, that this theorem has some immediate application in graph algorithms (see Section 6).

5.1 Hankel Matrix

Now, we will focus on the proof of Theorem 4. First, we need to introduce the Hankel matrix and its properties.

Hankel matrix is defined by its first row and last column (2n − 1 numbers define n × n Hankel matrix). The numbers from the previous row are left-shifted by one and the new number is added at the end. Hankel matrices have some similarities to Topelitz and Circulant matrices.

The basic property we need is that the product of Hankel matrix and vector can be computed in O(n log n) time (see [15, 26]) even though explicitly writing the Hankel matrix as n × n matrix takes O(n2) time. The algorithm takes 2n − 1 parameters that define the Hankel matrix and n parameters that define the vector. The technique is based on the Fast Fourier Transformation [15, 26].

5.2 Using Hankel Matrices to Get Richer Query

To proof the Theorem 4 we will modify only the last step in Section 4.2.3. The algorithm from Section 4.2.3 concatenates the strips UiFi and builds a single vector. Subsequently, that vector is multiplied by a column of matrix U− 1. But we can also do it in a different order: first we multiply the strip by a section of matrix U− 1 and sum the results at the end. Thus, we perform k (number of strips) dot products of smaller vectors (see Fig. 6).

Consider the query for a number of walks of length exactly k. The strips in the matrix U− 1 do not depend on k (vector (u0,…,ul)). However, the vector taken from UiFi (vectors (xi,…,xi + l)) will be left shifted if we want to compute the next one.

As you can see, the subsequent rows can be written as the Hankel matrix (we need to add zeros to get a square matrix, but it will not influence asymptotic complexity since there will be at most O(n) of them). By using the fast Hankel matrix-vector multiplication we can compute μ values for every strip i in time O(li log li) (li was defined as the length of i-th strip). At the end, we need to sum all results into a single array. Therefore, the number of operations is \(O\left ({\sum }_{i = 1}^{k} l_{i} \log l_{i} \right )\). Because \({\sum }^{k}_{i = 1} l_{i} = n\) the algorithm needs O(n log n) field operations. This proves Theorem 4.

Here, we silently assumed that the number of walks is bounded by W. Note, that for large W, the algorithm needs to output O(n2 log W) bits and the complexity of every arithmetic operation needs to be multiplied by log W. If one is only interested in the deciding if an entry of some power of adjacency matrix is nonzero, we can use a standard randomization technique to eliminate log W factors from the running time.

Corollary 1

LetA ∈{0,1}n×nbe a matrix such that the degree of smallest invariant factor isμ. Thereexists an algorithm that after some preprocessing can answer queries for any giveni, j ∈{1,…,n}:

-

Query returns {ak|1 ≤ k ≤ μ},whereak = 1 if (Ak)i, jis nonzero,

-

Preprocessing takes \(\widetilde {O}(n^{\omega })\) and query takes \(\widetilde {O}(n)\) time.

The algorithm is randomized with one-sided bounded error.

Proof

At the beginning we will randomly select a prime number with O(log n) bits. We can write the matrix A as a polynomial:

where xi, j are unique variables. Now we can apply Schwartz-Zippel Lemma [23, 34]. The \(\tilde {A}^{k}_{i,j}\) is the polynomial of degree at most n (because k ≤ μ ≤ n) and if we compute it over \(\mathbb {Z}_{p}\) for p = O(n2) we can correctly determine if \(\tilde {A}^{k}_{i,j}\) is a nonzero polynomial with a constant probability. We can repeat the above procedure O(log n) times to get a correct result in all entries with high probability. □

6 Applications

In this section we will show how to use Theorem 4 to improve known algorithms on graphs. First we will develop a data structure that returns a number of distinct walks efficiently.

Lemma 3

LetG = (V, E) be a directed, unweighted graph with n vertices and a diameter D. There exists andeterministic algorithm that after some preprocessing can answer queries for any givenu, v ∈ V:

-

Query returns {wi|1 ≤ i ≤ D},wherewiis the number of distinct walks from u to v of length exactly i,

-

Preprocessing takes \(\widetilde {O}(n^{\omega }\log {W})\) and query takes \(\widetilde {O}(n\log {W})\) field operations.

whereWis the upper bound onwifor alli ∈{1,…,D}.

Proof

We encode the graph G as an adjacency matrix A(G). We use the Theorem 4 to construct the data structure that given a query (u, v) outputs (Ak)u, v for all 1 ≤ k ≤ μ. Finally, we use Observation 1 to note, that (Ak)u, v is equal to the number of distinct walks from u to v of length exactly k. Moreover we use Lemma 2 to bound the number D ≤ μ, so we will always output more numbers (but we can truncate them in O(n) time). Finally we note, that the preprocessing and query of Theorem 4 matches the statement and construction of adjacency matrix is O(n2). □

This algorithm is a significant improvement over [33]:

-

One can use Lemma 3 to find the distance between u, v by linearly scanning the array and returning the first k such that wk > 0,

-

Lemma 3 can count cycles. In contrast the [33] cannot, because the distance from u to u is always 0 (see Section 6.1),

-

Lemma 3 is almost optimal, i.e., when D = O(n) then query will need to output O(n log W) bits.

From the other hand, Lemma 3 is merely a functional improvement and it does not break the \(\widetilde {O}(n^{\omega })\) of the Single Source Shortest Paths (SSSP) for dense, directed graphs.

Now we will show the application of Lemma 3. We begin with almost optimal algorithm to compute the number of all walks between all pairs of vertices. We are not aware of any other research concerning this problem.

Definition 2 (All-Pairs All Walk problem)

Given a directed, unweighted graph G with a diameter D. The task is to return an array A, such that for every pair of vertices u, v ∈ G and every k ∈{1,…,D} an element A[u, v, k] is the number of distinct walks from u to v of length k.

The folklore solution to this needs O(Dnω log W) time (where W is an upper bound on number of walks) and works as follows: take the adjacency matrixAof graphGand save it inA[u, v, 1]. Then, square it to getA2and save it inA[u, v, 2]. Continue until you fill out complete table. In the worst case this algorithm needs D = O(n) matrix multiplications, thus it needs O(Dnω) field operations. At the first glance it is surprising that we can improve it to \(\widetilde {O}(n^{3})\) field operations.

Theorem 5

All-Pairs All Walk problem admits an\(\widetilde {O}(n^{3} \log {W})\)algorithm(where W is upper bound on number of walks between every pair of vertices).

Proof

We will apply the Lemma 3 algorithm. The preprocessing takes \(\widetilde {O}(n^{\omega })\) time. Then, for every pair of vertices u, v ask a query. A single query takes \(\widetilde {O}(n \log {W})\) time. Next we will save it in the table A[u, v] (query gives D numbers w1,…,wD, such that wi is the number of walks of length i and save it A[u, v, i] := wi).

Because there are O(n2) pairs and for each pair we need \(\widetilde {O}(n\log {W})\) time, the complexity of our solution is \(\widetilde {O}(n^{3}\log {W})\). The algorithm is almost optimal because the output in the worst case may be O(n3 log W) (we may need O(log W) bits to encode a single entry in the table). □

6.1 Counting and Determining the Lengths of Cycles

We will use Theorem 4 to solve All-Nodes Shortest Cycle (ANSC) problem efficiently.

Definition 3 (All-Nodes Shortest Cycles 31)

Given a directed, unweighted graph G. The problem All-Nodes ShortestCycle asks to output for every vertex v the length of the shortest cycle that contains v.

Lemma 4

There exists a deterministic algorithm that for a given unweighted, directed G with a diameter D:

-

For every vertexureturnsDnumbers:\({c_{u}^{1}}, {c_{u}^{2}}, \ldots {c_{u}^{D}}\),where\({c_{u}^{k}}\)isthe number of non-simple cycles of length exactlyk, that contain vertex u,

-

Algorithm works in \(\widetilde {O}(n^{\omega } \log {W})\) time (where W is an upper bound on \({c^{k}_{u}}\) ).

Proof

We will use Theorem 4. We start by preprocessing the graph G in time \(\widetilde {O}(n^{\omega }\log {W})\). Theorem 4 allows us to ask for a number of walks from u to v and receive D numbers: \(w_{u,v}^{k}\). So, we ask for the number of walks from vertex u to the same vertex u. This is exactly the number of non-simple cycles of given length that contain vertex u.

Because we need to ask only n queries (it is the number of vertices in a graph) and each query takes \(\widetilde {O}(n\log {W})\) time we have \(\widetilde {O}(n^{\omega }\log {W} + n^{2}\log {W})\) = \(\widetilde {O}(n^{\omega }\log {W})\) algorithm. □

If we are only interested in deciding if the numbers \({c_{u}^{i}}\) are nonzero, instead of Theorem 4 we can use Corollary 1. It introduces the one-sided randomization but allows us to shave log W factors in the running time.

Corollary 2

There exists a randomized algorithm that for a given unweighted, directed G with a diameter D :

-

For every vertexureturnsDnumbers:\({c_{u}^{1}}, {c_{u}^{2}}, \ldots {c_{u}^{D}}\), where\({c_{u}^{k}}\)is1 if there exists a non-simple cycle of length exactlyk, that contain vertex uor 0 otherwise,

-

Algorithm works in \(\widetilde {O}(n^{\omega })\) time with one sided bounded error.

Now we will show how to improve upon [31] \(\widetilde {O}(n^{(\omega + 3)/2})\) algorithm with Corollary 2.

Theorem 6

All-Nodes Shortest Cycles admits an \(\widetilde {O}(n^{\omega })\) randomized time algorithm.

Proof

We use Lemma 2 to compute the table S[v]. For every vertex we search for the first nonzero element linearly. This with high probability is the length of the shortest cycle that contains it. Because the output contains O(n2) numbers the complexity is equal to the preprocessing time \(\widetilde {O}(n^{\omega })\). □

Also the Corollary 2 improves upon [9, Theorem 45] for unweighted graphs.

Corollary 3

Given a directed, unweighted graph G with a diameter D. LetS(c) denote the set of vertices that lie in the cycle of length exactly c.In\(\widetilde {O}(n^{\omega })\)timewe can return the setsS(1),…,S(D) with constant probability of success.

Proof

Similarly to the proof of Theorem 6, we can scan the output to compute the set S(c) that contains all vertices that lie on some cycle of length ≤ c. Then, by linear scan we can return the sets S(1),…,S(D). □

7 Conclusion and Open Problems

We introduced the framework based on Frobenius normal form and used it to solve some problems on directed, unweighted graphs in matrix multiplication time. The main open question is to use this framework to prove that APSP on such graphs can be solved in \(\widetilde {O}(n^{\omega })\) or at least O(n2.5). The promising way is to use the algorithms that determine operators of matrices of polynomials (e.g., determinant, solving linear system [14, 18]). Additionally, algorithms for a black-box polynomial degree determination seem to be a promising path.

Another interesting problem is to use this framework to obtain additive approximation for APSP. Currently, the best additive approximation of APSP is due to [20]. However, no additive approximation of APSP is known that would work in \(\widetilde {O}(n^{\omega })\) time.

Application in dynamic algorithm also seems to be a promising approach. Frandsen and Sankowski [12] showed an algorithm, that dynamically preserves Frobenius normal form in O(kn2) time. Our algorithms use fast Hankel matrix-vector multiplication that is based on FFT. Reif and Tate [19] presented an \(O(\sqrt {n})\) time per request algorithm for FFT. Can we use these approaches to obtain a faster dynamic algorithm?

Finally, it remains open how to apply the Frobenius normal form in the weighted directed graphs with small, integer weights {−M,…,M}. Cygan et al. [9] took degree M polynomials and used [18] algorithms to get \(\widetilde {O}(M n^{\omega })\) time radius and diameter detection. We suspect that similar technique can be applied to Frobenius normal form framework.

Notes

Tr [A] denotes the trace of a matrix A, i.e., the sum of elements on the main diagonal.

Sometimes this form is called rational-canonical form.

Operation ⊕ denotes concatenation.

Xa, b denotes a matrix constructed by concatenating columns xa,xa+ 1,…,xb of a matrix X.

References

Abboud, A., Grandoni, F., Williams, V.V.: Subcubic equivalences between graph centrality problems, APSP and diameter. In: Indyk, P. (ed.) Proceedings of the 26th Annual ACM-SIAM Symposium on Discrete Algorithms, SODA 2015, pp. 1681–1697. SIAM, San Diego (2015). ISBN 978-1-61197-374-7. https://doi.org/10.1137/1.9781611973730.112

Abboud, A., Williams, VV, Wang, J.R.: Approximation and fixed parameter subquadratic algorithms for radius and diameter in sparse graphs. In: Krauthgamer, R. (ed.) Proceedings of the 27th Annual ACM-SIAM Symposium on Discrete Algorithms, SODA 2016, pp. 377–391. SIAM, Arlington (2016). ISBN 978-1-61197-433-1. https://doi.org/10.1137/1.9781611974331.ch28

Agarwal, U, Ramachandran, V: Fine-grained complexity for sparse graphs. In: Diakonikolas, I., Kempe, D., Henzinger, M. (eds.) Proceedings of the 50th Annual ACM SIGACT Symposium on Theory of Computing, STOC 2018, pp. 239–252. ACM, Los Angeles (2018). https://doi.org/10.1145/3188745.3188888

Alon, N., Yuster, R., Zwick, U.: Color-coding. J. ACM 42 (4), 844–856 (1995). https://doi.org/10.1145/210332.210337

Alon, N., Yuster, R, Zwick, U: Finding and counting given length cycles. Algorithmica 17(3), 209–223 (1997). https://doi.org/10.1007/BF02523189

Chan, T.M.: More algorithms for all-pairs shortest paths in weighted graphs. In: Johnson, D.S., Feige, U. (eds.) Proceedings of the 39th Annual ACM Symposium on Theory of Computing, pp. 590–598. ACM, San Diego (2007). ISBN 978-1-59593-631-8. https://doi.org/10.1145/1250790.1250877

Chung, F.R.K., Faber, V., Manteuffel, T.A.: An upper bound on the diameter of a graph from eigenvalues associated with its laplacian. SIAM J. Discrete Math. 7(3), 443–457 (1994). https://doi.org/10.1137/S0895480191217776

Chung, F.R.K.: Spectral graph theory, volume 92 American Mathematical Soc. (1997)

Cygan, M., Gabow, H.N., Sankowski, P.: Algorithmic applications of baur-strassen’s theorem: shortest cycles, diameter, and matchings. J. ACM 62(4), 28 (2015). https://doi.org/10.1145/2736283

Eberly, W: Black box frobenius decompositions over small fields. In: [28], pp. 106–113. ISBN 1-58113-218-2. https://doi.org/10.1145/345542.345596

Floyd, R.W.: Algorithm 97: shortest path. Commun. ACM 5(6), 345 (1962). https://doi.org/10.1145/367766.368168

Frandsen, GS, Sankowski, P: Dynamic normal forms and dynamic characteristic polynomial. In: International Colloquium on Automata, Languages, and Programming, pp. 434–446. Springer, Berlin (2008)

Gantmacher, F.R.: The theory of matrices, Vol. 1 Chelsea (1959)

Giesbrecht, M., Jacobson, M.J. Jr, Storjohann, A.: Algorithms for large integer matrix problems. In: Boztas, S., Shparlinski, I.E. (eds.) 14th International Symposium Applied Algebra, Algebraic Algorithms and Error-Correcting Codes, AAECC-14. Proceedings, volume 2227 of Lecture Notes in Computer Science, pp. 297–307. Springer, Melbourne (2001). ISBN 3-540-42911-5. https://doi.org/10.1007/3-540-45624-4_31

Golub, G.H., Van Loan, C.F.: Matrix computations, 3rd edn. Johns Hopkins University Press, Baltimore (1996). ISBN 978-0-8018-5414-9

Le Gall, F.: Powers of tensors and fast matrix multiplication. In: Proceedings of the 39th International Symposium on Symbolic and Algebraic Computation, pp. 296–303. ACM (2014)

Lim, A., Dai, J.: On product of companion matrices. Linear Algebra Appl. 435(11), 2921–2935 (2011)

Mulders, T., Storjohann, A.: Rational solutions of singular linear systems. In: [28], pp. 242–249. ISBN 1-58113-218-2. https://doi.org/10.1145/345542.345644

Reif, J.H., Tate, S.R.: On dynamic algorithms for algebraic problems. J. Algorithms 22(2), 347–371 (1997). https://doi.org/10.1006/jagm.1995.0807

Roditty, L., Shapira, A.: All-pairs shortest paths with a sublinear additive error. ACM Trans. Algorithms 7(4), 45:1–45:12 (2011). ISSN 1549-6325. https://doi.org/10.1145/2000807.2000813

Roditty, L., Williams, V.V.: Minimum weight cycles and triangles: equivalences and algorithms. In: Ostrovsky, R. (ed.) IEEE 52nd Annual Symposium on Foundations of Computer Science, FOCS 2011, pp. 180–189. IEEE Computer Society, Palm Springs (2011). https://doi.org/10.1109/FOCS.2011.27

Sankowski, P., Wegrzycki, K.: Improved distance queries and cycle counting by frobenius normal form. In: Vollmer, H., Vallée, B. (eds.) 34th Symposium on Theoretical Aspects of Computer Science, STACS 2017, March 8-11, vol. 66 of LIPIcs, pp. 56:1–56:14. Schloss Dagstuhl - Leibniz-Zentrum fuer Informatik, Hannover (2017). ISBN 978-3-95977-028-6. https://doi.org/10.4230/LIPIcs.STACS.2017.56

Schwartz, J.T.: Fast probabilistic algorithms for verification of polynomial identities. J. ACM 27(4), 701–717 (1980). ISSN 0004-5411. https://doi.org/10.1145/322217.322225

Seidel, R.: On the all-pairs-shortest-path problem. In: Rao Kosaraju, S., Fellows, M., Wigderson, A., Ellis, J.A. (eds.) Proceedings of the 24th Annual ACM Symposium on Theory of Computing, May 4-6, 1992, pp. 745–749. ACM, Victoria (1992). ISBN 0-89791-511-9. https://doi.org/10.1145/129712.129784

Storjohann, A.: Deterministic computation of the frobenius form. In: 42nd Annual Symposium on Foundations of Computer Science, FOCS 2001, 14-17 October 2001, pp. 368–377. IEEE Computer Society, Las Vegas (2001). ISBN 0-7695-1390-5. https://doi.org/10.1109/SFCS.2001.959911

Tang, Z., Duraiswami, R., Gumerov, N.A.: Fast algorithms to compute matrix-vector products for pascal matrices. Technical Reports from UMIACS UMIACS-TR-2004-08, 2004/03/25/. http://drum.lib.umd.edu/handle/1903/1338 (2004)

Thorup, M.: Undirected single-source shortest paths with positive integer weights in linear time. J. ACM 46(3), 362–394 (1999). https://doi.org/10.1145/316542.316548

Traverso, C. (ed.): Proceedings of the 2000 International Symposium on Symbolic and Algebraic Computation, ISSAC 2000. ACM, St. Andrews (2000). ISBN 1-58113-218-2. http://dl.acm.org/citation.cfm?id=345542

Warshall, S.: A theorem on boolean matrices. J. ACM 9(1), 11–12 (1962). https://doi.org/10.1145/321105.321107

Williams, R.: Faster all-pairs shortest paths via circuit complexity. In: Shmoys, D.B. (ed.) Symposium on Theory of Computing, STOC 2014, pp. 664–673. ACM, New York (2014). ISBN 978-1-4503-2710-7. https://doi.org/10.1145/2591796.2591811

Yuster, R.: A shortest cycle for each vertex of a graph. Inf. Process. Lett. 111 (21-22), 1057–1061 (2011). https://doi.org/10.1016/j.ipl.2011.07.019

Yuster, R., Zwick, U.: Finding even cycles even faster. In: Abiteboul, S., Shamir, E. (eds.) 21st International Colloquium, Automata, Languages and Programming, ICALP94. Proceedings, volume 820 of Lecture Notes in Computer Science, pp. 532–543. Springer, Jerusalem (1994). ISBN 3-540-58201-0. https://doi.org/10.1007/3-540-58201-0_96

Yuster, R., Zwick, U.: Answering distance queries in directed graphs using fast matrix multiplication. In: Proceedings of 46th Annual IEEE Symposium on Foundations of Computer Science (FOCS 2005), 23-25 October 2005, pp. 389–396. IEEE Computer Society (2005). ISBN 0-7695-2468-0. https://doi.org/10.1109/SFCS.2005.20

Zippel, R.: Probabilistic algorithms for sparse polynomials. In: Ng, E.W. (ed.) Symbolic and algebraic computation, pp. 216–226. Springer, Berlin (1979). ISBN 978-3-540-35128-3

Zwick, U.: All pairs shortest paths using bridging sets and rectangular matrix multiplication. J. ACM 49(3), 289–317 (2002). https://doi.org/10.1145/567112.567114

Acknowledgment

This work is part of a project TOTAL that has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (grant agreement No 677651). Piotr Sankowski is supported by the Polish National Science Center, grant no 2014/13/B/ST6/00770. Karol Węgrzycki is supported by the grants 2016/21/N/ST6/01468 and 2018/28/T/ST6/00084 of the Polish National Science Center. Part of this work was done while authors were visiting the Simons Institute for the Theory of Computing.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended Abstract of this paper was presented at STACS 2017 [22]

This article is part of the Topical Collection on Special Issue on Theoretical Aspects of Computer Science (STACS 2017)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Sankowski, P., Węgrzycki, K. Improved Distance Queries and Cycle Counting by Frobenius Normal Form. Theory Comput Syst 63, 1049–1067 (2019). https://doi.org/10.1007/s00224-018-9894-x

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00224-018-9894-x