Abstract

Debugging data processing logic in data-intensive scalable computing (DISC) systems is a difficult and time-consuming effort. Today’s DISC systems offer very little tooling for debugging programs, and as a result, programmers spend countless hours collecting evidence (e.g., from log files) and performing trial-and-error debugging. To aid this effort, we built Titian, a library that enables data provenance—tracking data through transformations—in Apache Spark. Data scientists using the Titian Spark extension will be able to quickly identify the input data at the root cause of a potential bug or outlier result. Titian is built directly into the Spark platform and offers data provenance support at interactive speeds—orders of magnitude faster than alternative solutions—while minimally impacting Spark job performance; observed overheads for capturing data lineage rarely exceed 30% above the baseline job execution time.

Notes

In Scala, the underscore (_) character denotes a closure argument, which in this case is an individual line of the log file.

dscr is a hash table mapping error codes to the related textual description.
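
The two notes above can be illustrated with a minimal sketch in plain Scala collections, with no Spark dependency. The log format, the error codes, the contents of dscr, and the object name are assumptions made for illustration; they are not taken from the program the notes refer to.

```scala
// Minimal sketch using plain Scala collections (no Spark required).
// The log format, error codes, and dscr contents are hypothetical.
object UnderscoreClosureSketch {
  // Hypothetical log lines of the form "<level> <error code>".
  val lines: Seq[String] = Seq(
    "ERROR 404",
    "INFO 200",
    "ERROR 500",
    "ERROR 404"
  )

  // dscr maps an error code to its textual description (contents assumed).
  val dscr: Map[String, String] = Map(
    "404" -> "not found",
    "500" -> "internal error"
  )

  // `_` stands for the closure argument, here one line of the log.
  // `_.startsWith("ERROR")` is shorthand for `line => line.startsWith("ERROR")`.
  val errors: Seq[String] = lines.filter(_.startsWith("ERROR"))

  // Extract the error code from each line and count its occurrences.
  val counts: Map[String, Int] =
    errors
      .map(_.split(" ")(1))
      .groupBy(identity)
      .map { case (code, hits) => (code, hits.size) }

  // Attach the textual description from dscr to each counted code.
  val report: Map[String, (String, Int)] =
    counts.map { case (code, n) => (code, (dscr.getOrElse(code, "unknown"), n)) }

  def main(args: Array[String]): Unit =
    report.toSeq.sortBy(_._1).foreach { case (code, (text, n)) =>
      println(s"$code $text $n")
    }
}
```

The same placeholder syntax applies to closures passed to Spark transformations such as filter and map, since those also take ordinary Scala functions.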

Because doing so would be prohibitively expensive.

We optimize this HDFS partition scan with a special \(\mathsf {HadoopRDD}\) that reads records at offsets provided by the data lineage.

Apache Spark SQL has the ability to direct these full scans to certain data partitions only.

The task assigned to Node \(\mathsf {A}\) performs a non-trivial amount of work, i.e., it builds a hash table on the \(\mathsf {Combiner}\) partition that will be probed with the \(\mathsf {Reducer}\) partition, which, however, will be empty after the directed shuffle.

The term persistent is used to indicate that the lifetime of a task spans beyond a single data partition.

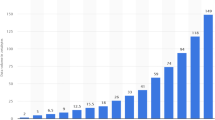

256,000 tasks correspond approximately to the scheduling workload generated by a dataset of about 16 TB (using the default Spark settings).

Our experimental cluster was equipped with 1 Gbps Ethernet.

Acknowledgements

Titian is supported through Grants NSF IIS-1302698 and CNS-1351047, and U54EB020404 awarded by the National Institute of Biomedical Imaging and Bioengineering (NIBIB) through funds provided by the trans-NIH Big Data to Knowledge (BD2K) initiative (www.bd2k.nih.gov). We would also like to thank our industry partners at IBM Research Almaden and Intel for their generous gifts in support of this research.

Additional information

Matteo Interlandi was formerly affiliated with the University of California, Los Angeles, Los Angeles, CA, USA, when the work was done.

About this article

Cite this article

Interlandi, M., Ekmekji, A., Shah, K. et al. Adding data provenance support to Apache Spark. The VLDB Journal 27, 595–615 (2018). https://doi.org/10.1007/s00778-017-0474-5

DOI: https://doi.org/10.1007/s00778-017-0474-5