Abstract

We are concerned with solving linear programming problems arising in the plastic truss layout optimization. We follow the ground structure approach with all possible connections between the nodal points. For very dense ground structures, the solutions of such problems converge to the so-called generalized Michell trusses. Clearly, solving the problems for large nodal densities can be computationally prohibitive due to the resulting huge size of the optimization problems. A technique called member adding that has correspondence to column generation is used to produce a sequence of smaller sub-problems that ultimately approximate the original problem. Although these sub-problems are significantly smaller than the full formulation, they still remain large and require computationally efficient solution techniques. In this article, we present a special purpose primal-dual interior point method tuned to such problems. It exploits the algebraic structure of the problems to reduce the normal equations originating from the algorithm to much smaller linear equation systems. Moreover, these systems are solved using iterative methods. Finally, due to high degree of similarity among the sub-problems after preforming few member adding iterations, the method uses a warm-start strategy and achieves convergence within fewer interior point iterations. The efficiency and robustness of the method are demonstrated with several numerical experiments.

Similar content being viewed by others

1 Introduction

Optimization of truss structures goes back to a seminal work of Michell [26] and has grown to a variety of disciplines in structural optimization for which several advanced formulations dealing with practical requirements, theories on existence and uniqueness of solutions, efficient solution methods, and benchmark problems have been proposed, [2,3,4,5, 15, 17, 20,21,22,23, 28, 29, 35] to mention only a few.

In this paper, we are concerned with solving the topology optimization problems in plastic design by linear programming. These problem formulations are known to ignore kinematic compatibility conditions. However, for single-load case, their equivalence to the (elastic design) minimum compliance problem has been shown, for examples see [1, 3,4,5, 17, 36]. For multiple-load case, establishing the equivalence is harder, except for some special cases [31]. Nevertheless, it is worth mentioning that the solution of the simplified linear programming formulations provides a reference lower bound plastic design that can be used at early design stages or as an initial truss layout for multilevel optimization problems, for example [16].

The optimization problems are usually formulated by using a ground structure approach [8] in which a set of nodes is distributed in the design domain and all the possible interconnecting bars are generated. The main goal is then to determine the optimal cross-sectional areas of these bars and obtain the lightest structure that is able to sustain a given set of applied load cases. In order to find the ultimate optimal designs that converge to the corresponding exact solution of the so-called generalized Michell trusses [15, 22, 23] or solutions less sensitive to nodal positions, which may actually be dealt with by non-linear geometry optimization of involving smaller size problems [5], we need to use a very large number of nodes [9]. However, this results in a huge number of possible bars and causes that the underlying optimization problems impose additional requirements on the existing solution techniques. The challenges are apparent for a single-load case problems and increase significantly when multiple-load case problems are dealt with. Although attempts have been made to split the multiple-load case problems into certain set of single-load case problems based on the principle of superposition, they have been successful only for special loading conditions [30]. A need for a rigorous treatment of multiple-load case problems still exists.

An adaptive ground structure approach proposed in [10] has been applied in several studies [33]. It is an iterative procedure, closely related to column generation methods for linear programming [14, 24] where the problems are initially solved for a minimal connecting bars and subsequently members are added until the optimal design is obtained. The technique is very attractive because it relies on solving a sequence of smaller problems and avoids the need of solving the full formulation which is prohibitively expensive due to its excessive size. However, after performing the first few member adding iterations, the size of the sub-problems grows and they require extensive computational effort to reach the solution.

The purpose of this article is to address these challenges by developing a special purpose solution technique based on primal-dual interior point method [37] which is well-known to deliver fast convergence in practice and excel on large-scale problems. For more details and a survey of recent developments in the primal-dual interior point method, we refer the reader to [12] and the references therein.

Interior point methods usually reach a solution after a modest number of iterations. However, a single iteration in these methods might be expensive when very large-scale problems are solved. In such cases, an improvement in the efficiency might sometimes be delivered by replacing direct linear algebra techniques with well-suited iterative methods equipped with efficient preconditioners, see [6, 7, 12] and the references therein.

In this paper, we address several aspects of interior point method implementation and demonstrate how to specialize it to single- and multiple-load case plastic truss layout optimization problems and achieve major improvements of its efficiency. In particular, we focus on three important algorithmic features of IPMs which contribute most to the improvement of the overall efficiency of the method.

First of all, we exploit the algebraic structure of structure of the optimization problems when solving normal equation formulation of the reduced Newton systems. We exploit a particular sparsity structure of the LP constraint matrix to perform implicit eliminations and to reduce significantly the size of these linear equation systems in which we determine the search direction for the virtual displacements. To be precise with the size of the linear systems, for problems on N-dimensional design domain, with d nodes inter-connected by \(n(\gg d)\) member bars, and subjected to \(n_L\) independent load cases, we solve linear systems with an \(m\cdot n_L\times m\cdot n_L\), \(m\approx Nd\), coefficient matrix instead of that with an \((m+n)\cdot n_L\times (m+n)\cdot n_L\) matrix in the case of standard normal equations.

Secondly, we employ iterative methods to solve the already reduced linear systems. We use conjugate gradient method [18, 32] with a preconditioner designed to exploit the particular sparsity structure and other mathematical properties of the reduced geometry matrix.

Finally, we take advantage of the similarity of the sequence of problems after some of the first few member adding iterations. In that case, a warm-start strategy [11, 13] is used to define an initial point for the interior point algorithm. This significantly reduces the number of interior point iterations when compared to a cold-start strategy which consists of solving every problem from scratch.

Let us mention at this point that interior point methods have already been applied in the context of truss topology optimization problems. A specialized variant of such method was used in [19]. The linear systems applied to compute search directions were reduced to involve only the displacement variables and one dual variable associated to a volume constraint. The resulting linear systems were dense because no member adding strategy was used. These systems were solved using direct methods of linear algebra. Alternative approaches to truss topology optimization problem include a reformulation as an unconstrained optimization problem using only displacement variables, solved by a gradient descent method [4].

The article is organized in the following manner. In Sect. 2, the overview of a the primal-dual interior point method for a standard linear programming is presented. In Sect. 3, the plastic truss layout optimization, its dual formulation, and the member adding scheme are described. In Sect. 4, the structure-exploiting linear algebra techniques are discussed. In Sect. 5, the iterative method and the applied preconditioner are described. In Sect. 6, the warm-start strategy is explained. The implementation of the method is discussed in Sect. 7 and the numerical results are discussed in Sect. 8. Finally, the conclusions are given in Sect. 9.

2 Primal-dual interior point method for linear programming

Consider the standard primal linear problem

where \(A\in \mathbb {R}^{m\times n}\), \(x,c\in \mathbb {R}^{n}\), and its dual problem

where \(y,s\in \mathbb {R}^{m}\). In primal-dual interior point methods, we introduce a barrier parameter \(\mu >0\) and formulate the perturbed first-order optimality conditions as

where \(X=\text {diag}(x)\), \(S=\text {diag}(s)\), and \(e=(1,\dots ,1)\) of appropriate size. Then, we solve the system (3) for a sequence of \(\mu _k\rightarrow 0\) to find the solution of the primal (1) and dual (2) problems. We apply Newton’s method to the optimality conditions (3) and solve the linear system

where \(\xi _d= c-A^Ty-s\), \( \xi _p= b-Ax\), , and \(\xi _c= \mu e- XSe\). We follow the Mehrotra’s predictor-corrector method [25] to determine the search directions \((\varDelta x,\varDelta y,\varDelta s)\) in two steps. First, we solve the system (4) with the right hand side \((\xi _d,\xi _p,-XSe)^T\) to find the predictor direction \((\varDelta x_a,\varDelta y_a,\varDelta s_a)\). Then, we determine the maximal primal \(\bar{\alpha }_{p}\) and dual \(\bar{\alpha }_{d}\) step lengths

Next, we compute \(\mu \) as

and solve once again the system (4) with the right hand side \((0,0,\mu e-\varDelta X_a\varDelta s_a)^T\) to find the corrector direction \((\varDelta x_c,\varDelta y_c,\varDelta s_c)\). Finally, we determine the final primal \(\alpha _{p}\) and dual \(\alpha _{d}\) step lengths as

where \(\tau \in (0,1)\). Then, the new iterate \((x^{+},y^{+},s^{+})\) is

When solving the system (4) in most primal-dual interior point algorithms, the unknowns \(\varDelta s\) and \(\varDelta x\) are eliminated first and a smaller system called the normal equations is solved.

where \(\xi \) is the appropriate right hand.

We assume any general solver that uses interior point method would solve the normal equations (9) or other larger systems and perform backward substitution to determine the other directions. Particularly, in case of (9), \(\varDelta x\) and \(\varDelta s\).

However, for the plastic layout optimization of trusses covered in this article, we can further exploit their algebraic structure and find a much smaller system than (9) which can be efficiently solved. This is described in Sect. 4.

3 The plastic truss layout optimization problem

The plastic truss layout optimization problem is formulated following the ground structure approach in which a finite set of nodes, say d, are (uniformly) distributed in the design domain. The nodes are then connected by all possible potentials bars \(n \gg d\). If the overlapping bars are included, then \(n=d(d-1)/2\). We define an optimization problem in which the design variables are the cross-sectional areas \(a_i\), \(i=1,\ldots ,n\) of the member bars.

Let \(m(\approx Nd\), N is the dimension of the design domain) be the number of the non-fixed degrees of freedom, \(f_\ell \in \mathbb {R}^m,\ell \in \{1,\dots ,n_L\} \) be a set of external forces applied to the structure, and \(q^{+}_\ell \), \(q^{-}_\ell \in \mathbb {R}_+^n\) be the associated tensile and compressive forces of the bars, respectively.

Then, the multiple-load least-weight truss topology optimization in plastic design can be formulated as

where \(l\in \mathbb {R}^n\) is a vector of bar lengths, and \(\sigma ^{-}>0\) and \(\sigma ^{+}>0\) are the material’s yield stresses in compression and tension, respectively. Problem (10) is a linear program. After introducing primal slack variables \(x_\ell \in \mathbb {R}_{+}^n,\ell \in \{1,\dots ,n_L\} \) to the inequality constraints and transforming them to equality constraints, i.e., to

we derive the dual problem associated with (10) given by

where \(u_\ell \in \mathbb {R}^m\) denotes the virtual nodal displacement, \(s_{q^{+}_\ell },s_{q^{-}_\ell },y_\ell \in \mathbb {R}^n, \ell \in \{1,\dots ,n_L\}\), and \(s_a\in \mathbb {R}^n\).

3.1 The member adding

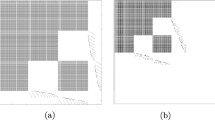

We follow the member adding strategy proposed in [10]. It is an iterative process that starts with a structure constituting a minimum connectivity, see Fig. 1 for example. Let \(n_0\) be the number of bars in the initial structure. Let \(K_0 \subset \{1,\dots ,n\}\) be the set of indices of the bars for which the optimization problems (10) and (11) are currently solved. Next, we compute the dual violations and generate the set K defined by

where the virtual strains are \(\varepsilon ^{+}_{\ell _j} = \max \{(B^Tu_\ell ^{*})_j ,0\}\) and \(\varepsilon ^{-}_{\ell _j} = \max \{-(B^Tu_\ell ^{*})_j, 0\}\) with \(u_\ell ^{*}\) denoting the optimal virtual nodal displacement and \(\beta >0\) some allowed tolerance. Then, the bars with indices in K are identified, filtered, and finally added in new problem instance. The member adding process stops when \(K=\emptyset \).

There are several heuristics approaches to filter and determine how many of the bars with indices in K should be added when formulating the new problem instances. Here, we present three.

-

AP1

Include all members in K.

-

AP2

Sort the members in K and include the largest \(\min \{\alpha n_0,|K|\}\) members, where \(\alpha \le 1\). For example, \(\alpha =0.1\) implies at most \(10\%\) of the initial number of bars. This is one of the techniques used in [10].

-

AP3

Include those in \(\{j\in K|l_j\le L_k\}\), where \(L_k\) is a limit on the lengths of the bars to be added at the kth member adding iteration. Its value increases at every member adding iteration, and reaches the maximum possible length. Particularly, for the numerical experiments in this paper, we use a simple heuristic rule \(L_k=2^kr\), where r is the diagonal distance between two adjacent nodes. This is motivated by the scheme used in [33].

4 Exploiting the algebraic structures

In this section, we describe how the utilize the structure of the least-weight truss layout problems. The primal and dual least-weight truss layout problems (10) and (11) are equivalent to the standard primal-dual linear programming problems (1)-(2) with

and

where (borrowing Matlab notation) \(\tilde{B}=\text {blkdiag}(B,\ldots ,B)\), \(\tilde{I}=\text {blkdiag}(I,\ldots ,I)\), and \(\tilde{I}_v=(I,\ldots ,I)^T\). Consequently, the coefficient matrix of the normal equations (9) is

where

and \(A_a=\text {diag}(a)\), \(\tilde{Q}^{+}=\text {blkdiag}(Q_1^{+}, \ldots , Q_{n_L}^{+})\) with \(Q^{+}_\ell =\text {diag}(q^{+}_\ell )\), \(\tilde{Q}^{-}=\text {blkdiag}(Q_1^{-}, \ldots , Q_{n_L}^{-})\) with \(Q^{-}_\ell =\text {diag}(q^{-}_\ell )\), \(\tilde{X}=\text {blkdiag}(X_1,\ldots ,X_{n_L})\) with \(X_\ell =\text {diag}(x_\ell )\), \(S_a=\text {diag}(s_a)\), \(\tilde{S}_{q_{+}}=\text {blkdiag}(S_{q_1^{+}},\ldots , S_{q_{n_L}^{+}})\) with \(S_{q_\ell ^{+}}=\text {diag}(s_{q^{+}_\ell })\), \(\tilde{S}_{q_{-}}=\text {blkdiag}(S_{q_1^{-}},\ldots , S_{q_{n_L}^{-}})\) with \(S_{q_\ell ^{-}}=\text {diag}(s_{q^{-}_\ell })\), and \(\tilde{S}_{x}=\text {blkdiag}(S_{x_1},\ldots ,S_{x_{n_L}} )\) with \(S_{x_\ell }=\text {diag}(s_{x_\ell })\).

The matrix in (15) has dimension \((m+n)\cdot n_L\times (m+n)\cdot n_L \) of which \(\tilde{D}_{22}\) is an \(n \cdot n_L\times n \cdot n_L \) matrix and recall that \(n\gg m\). When the problems are solved with general solvers that use the primal-dual interior point method, the Newton system in (4) is at most reduced to the normal equations with the coefficient matrix in (15).

In this article, we further utilize the structure of the matrix \(\tilde{D}_{22}\) which is a diagonal matrix for single-load case problems and built of blocks of diagonal matrices for multiple-load case problems. Example of such structure for a three-load case is displayed in Fig. 3b. In either case, it can be explicitly inverted at almost no cost. Then, instead of solving the normal equations with the larger coefficient matrix (15) which displays structures as in Fig. 2a and c, we solve a much smaller system

where

and \(\xi _u \) the resulting appropriate right hand side. The coefficient matrix \(\tilde{B}\tilde{D}\tilde{B}^T\) has dimension \(m \cdot n_L\times m \cdot n_L\) and its corresponding sparsity structures are shown in Fig. 2b and d. Note that for the single-load case problems, the reduction does not even affect the sparsity structure of the block (1, 1) of (15).

Remark 1

The matrix \(\tilde{B}\tilde{D}\tilde{B}^T\) has always a dimension \(m\cdot n_L\times m\cdot n_L\). However, its sparsity depends on the member adding iterations.

Remark 2

Algebraic structure was exploited in interior point method for a nonlinear programming formulation of the minimum compliance problem for truss design developed in [19]. The linear systems in this method were reduced to the ones which involved only the displacements and one extra dual variable corresponding to the volume constraint. The reduced systems in [19] solved using direct methods were completely dense, and no member adding strategy was used.

5 Iterative methods for linear systems

Applying direct methods of linear algebra to (17) is challenging due to the size and density of the matrix involved especially for the three-dimensional problems, see Sect. 8.3 and Table 6. Hence, we use the preconditioned conjugate gradient method, that is, we solve the system

where M is a suitable preconditioner. In this section, we propose a preconditioner that well approximates the matrix \(\tilde{B}\tilde{D}\tilde{B}^T\) in the sense of Frobenius norm and has the sparsity pattern determined from the detailed features of \(\tilde{B}\) and \(\tilde{D}\). These are described below for two-dimensional problems. Similar steps can be followed to extend the analysis to three-dimensional problems, see also Remark 3.

We start with analyzing the entries of the matrix \(B\in \mathbb {R}^{m\times n}\). Since, theses are direction cosines, we have \(|B_{ij}|\le 1, \forall (i,j)\). The number of non-zero entries in each row cannot exceed m / 2 which implies \((BB^T)_{ii}\le m/2, \forall i\). Note that, m / 2 is the number of nodes in the structure before removing any fixed degrees of freedom. Assembling B in the natural way, the sub-diagonal elements of \(BB^T\) are the sum of products of sines and cosines of angles which implies \(|(BB^T)_{i,i+1}|\le m/4\wedge |(BB^T)_{i-1,1}|\le m/4\). Otherwise, \(|(BB^T)_{i,j}|\le 1\). Therefore, the Frobenius norm of \(BB^T\) is dominated by the elements on its three diagonals, that is, the entries with indices in the set T defined by

See also Fig. 3a. Moreover, we derive the following bound

where the first and second terms in the right hand side are contributions from the entries with indices in T and the last term accounts for the remaining off-diagonal elements.

a The matrix \(BB^T\) for a two dimensional problem. \(m=56\), \(\max _{i}(BB^T)_{i,i}=20.1058\), \(\max _{i}|(BB^T)_{i,i+1}|=6.7788\), and \(||BB^T||_F= 108.8457\). b The sparsity structure of the matrix \(\tilde{D}\) in (17) for three-loads case problem

Recall that \(\tilde{B}=\text {blkdiag}(B,\ldots ,B)\) and \(\tilde{D}\) has the sparsity structure displayed in Fig. 3b. Then, the matrix \(\tilde{B}\tilde{D}\tilde{B}^T\) has the structure

where the block matrices \(\tilde{D}_{k,l}\), \(k,l\in \{1,\ldots ,n_L\}\) are diagonal. Define the sets

and consequently consider the partition \(B=[B_\mathcal {B},B_\mathcal {N}]\) that gives \(\tilde{B}=[\tilde{B}_\mathcal {B},\tilde{B}_\mathcal {N}]\) and \(\tilde{D}=[\tilde{D}_\mathcal {B},\tilde{D}_\mathcal {N}]\). Then

Let \(n_i\) be the maximum number of non-zero entries in row i of \(B_\mathcal {N}\). Then \(n_i\le m/2, \forall i\). Moreover, \(|(B_\mathcal {N}B_\mathcal {N}^T)_{i,i}|\le n_i\), \(|(B_\mathcal {N}B_\mathcal {N}^T)_{i,i-1}|\le n_i/2\) and \(|(B_\mathcal {N}B_\mathcal {N}^T)_{i,i+1}|\le n_i/2\). Then, the error in the normal equations after dropping the contribution of the \( D_{ii},i\in \mathcal {N}\) can be estimated as

where \(\varDelta \le (m^2-(3m-2))\) is the less significant contribution from the non-tridiagonal elements.

We propose the preconditioner M defined by

for \(k,l\in \{1,\ldots ,n_L\}\). In this case, we have

Remark 3

For three dimensional problems, the set of indces T in (20) is extended to

Remark 4

For some of the first few interior point iterations, we use a simpler preconditioner

for \(k,l\in \{1,\ldots ,n_L\}\) unless the the warm-start strategy described in Sect. 6 is activated.

In Sect. 7, we discuss in more detail the practical effects of using the preconditioners, their implementation, and the spectral properties of linear systems solved.

6 Warm-start strategy

Here, we describe the warm-start strategy for truss layout optimization problems. At every member adding iteration described in Sect. 3.1, we generate the set K in (12) to identify and add the new members. In that case, the size of the problem grows and the new variables are appended to the problem

where all the variables with the super-bar are vectors in \(\mathbb {R}^k\), \(k=|K|\), and \(\ell \in \{1,\ldots ,n_L\}\).

6.1 Computing a warm-start point

The starting point for the part of the variables in the right hand side of (30) that correspond to the old ones, i.e., those without super-bar, is the solution that is saved while solving the preceding problem with a loose relative optimality tolerance that depends on the level of similarity between the problems, see Sect. 7. This choice of loose tolerances is to avoid points located close to the boundary of the feasible region which could adversely affect the behaviour of interior point methods [11]. Below we propose the initial point for the newly added variables.

We set \(\bar{y}\) as

where \(\sigma _{max} =\max \{\sigma ^{-},\sigma ^{+}\}\) and \(\mu _0\) is the value of the barrier parameter at the time when the solution was saved. Next, we define the new dual slack variables as

Moreover, the new primal variables are set as

Finally, we derive the bounds on the violations of primal and dual infeasibility and complementarity constraints for these newly introduced variables.

6.1.1 Primal infeasibility

The primal infeasibilties \( \xi _{p_\ell }=(\xi _{p_{1,\ell }}, \xi _{p_{2,\ell }})\), \(\ell \in \{1,\ldots ,n_L\}\) are

where \(\sigma _{min} =\min \{\sigma ^{-},\sigma ^{+}\}\) and \(|| \xi ^0_{p_{1,\ell }}||\) is the infeasibiliy from the prior problem. The expressions in (34) illustrate that primal infeasibility is expected to be small and therefore should not be an issue.

6.1.2 Dual infeasibility

The last three dual infeasibilties in (11) can be easily shown to be \( (\xi _{d_{2,\ell }}, \xi _{d_{3,\ell }},\xi _{d_{4,\ell }})=(0,0,0)\) from (32) by direct substitution. However, for \(\xi _{d_{1,\ell }}\), we have

This is the violation of the first dual constraint in (11) which is actually proportional to the magnitude of the dual violations used as criteria for adding the members, see (12). Such violation may be considerable, especially in the early member adding iterations. The warm starting routine [11, 13] will be applied to absorb it.

6.1.3 Centrality

In order to assess the centrality of the new point, we will compute complementarity products for all newly added variables. The pairs \((\bar{a},\bar{s}_a)\) are \(\mu _0\)-centered from (33). Below, we evaluate the remaining complementarity products \((\bar{q}_\ell ^{+},\bar{s}_{q_\ell ^{+}})\), \((\bar{q}_\ell ^{-},\bar{s}_{q_\ell ^{-}})\), \((\bar{x}_\ell ,\bar{s}_{x_\ell })\), \(\ell \in \{1,\ldots ,n_L\}\).

The above two equations show that for any \(j\in K\), either of the products \((\bar{q}_\ell ^{+})_j(\bar{s}_{q_\ell ^{+}})_j\) or \((\bar{q}_\ell ^{-})_j(\bar{s}_{q_\ell ^{-}})_j\) is always nearly \(\mu _0\)-centered when \(\sigma ^{+}=\sigma ^{-}\) depending on the sign of \((\bar{B}^Tu_\ell )_j\). Nevertheless, using the fact that the maximum number of non-zero entries in each column of \(\bar{B}\) is 2N for N-dimensional problem and the absolute value of these entries does not exceed unity, we have

Then, we get the following general estimate.

Similarly,

Finally,

On the other hand,

Then,

The estimates in (38), (39), and (42) show that the pairs \((\bar{q}_\ell ^{+},\bar{s}_{q_\ell ^{+}})\), \((\bar{q}_\ell ^{-},\bar{s}_{q_\ell ^{-}})\), \((\bar{x}_\ell ,\bar{s}_{x_\ell })\), \(\ell \in \{1,\ldots ,n_L\}\) have no significant outliers from the \(\mu _0\)-centrality because the shift-like terms involving \(|u_\ell |_\infty \) in the upper bounds are multiplied by \(\mu _0^{\frac{1}{2}} \). Therefore, their contribution to the violation of \(\mu _0\)-centrality is reduced to some extent. Moreover, these terms are found out to be small in practice.

7 Implementation, algorithmic parameters, and problem data

The interior point method is implemented in MATLAB (R2016a). All numerical experiments are performed on Intel(R) Core(TM) i5-4590T CPU, running at 2.00 GHz with 16 GB RAM. The interior point iterations stop when

or \(\mu <10^{-10}\), where \(\xi _p\) and \(\xi _d\) are given as (4).

7.1 Algorithmic parameters

The initial points for the case of cold-start are set \(x^0\), \(s^0\) both to unity and \(y^0\) to zero. The feasibility tolerances in (43) are set as \(\epsilon _p=\epsilon _d=10^{-6}\) when the linear systems (17) are solved using direct methods. Otherwise \(\epsilon _p=\epsilon _d=10^{-5}\). For the optimality tolerance, we use loose tolerances in the first few member adding iterations and then tighter in the last ones, i.e., \(\epsilon _{opt}=[10^{-2},10^{-2},10^{-2},10^{-2},\) \(10^{-3},10^{-4}]\), and then always \(10^{-5}\), unless specified. We use \(\tau =0.95\) in (7) for the step lengths and \(\beta =0.001\) in (12) for the the member adding criteria.

7.2 The preconditioner

In case of the cold-start, we use the simple preconditioner (29) as long as \(\mu >10^{-3}\) since there is a relative uniformity in the diagonal elements of the matrix \(\tilde{D}\) in (18). However, when \(\mu \le 10^{-3}\) or in general when the warm-starting strategy is invoked, we use the splitting preconditioner (26). To determine the threshold parameter \(\delta \) in (23), recall that m is the number of non-fixed degrees of freedom and \(n(\gg m)\) is the number of bars. We then set \(\delta \) to be the smallest of the largest m diagonal elements in \(\tilde{D}\) in (18) for the two-dimensional problems, and the smallest of the largest \(\left[ m/3\right] \) elements for the three-dimensional problems. This choice is based on performing many numerical experiments and at this stage we do not have any theoretical justification why we needed a larger number of the diagonal elements in \(\tilde{D}\) for two-dimensional problems compared to that of the three-dimensional problems. We have included Fig. 4 to give an insight into the eigenvalue distribution of the unpreconditioned \(\tilde{B}\tilde{D}\tilde{B}^T\)and preconditioned \(M^{-1}\tilde{B}\tilde{D}\tilde{B}^T\) matrices for small size two- and three-dimensional problems Example 5a I and Example 5c I in Table 1. The distributions are shown for the final linear programs in the member adding scheme and the last interior point iteration. The histograms display the number of eigenvalues of a given magnitude. The Fig. 4a and c show that eigenvalues of the upreconditioned matrices are spread over a large range roughly between \(10^{-7}{-}10^{8}\) for the two-dimensional and \(10^{-6}{-}10^{6}\) for the three-dimensional problems. However, for the preconditioned matrices the distribution clusters within the interval roughly 0.003–2 for the two-dimensional problem and 0.01–2 for the three-dimensional problem, see Fig. 4b and d. Moreover, most of these eigenvalues are clustered near 1.

Eigenvalue distributions of the unpreconditioned \(\tilde{B}\tilde{D}\tilde{B}^T\) and preconditioned \(M^{-1}\tilde{B}\tilde{D}\tilde{B}^T\) matrices for the problems Example 5a I and Example 5c I in Table 1 for the final LPs and during the last interior point iterations. Logarithmic scale for eigenvalue magnitude is used. a Example 5a I: Eigenvalue distribution of \(\tilde{B}\tilde{D}\tilde{B}^T\). b Example 5a I: Eigenvalue distribution of \(M^{-1}\tilde{B}\tilde{D}\tilde{B}^T, \ \hbox {nnz} (M)/\hbox {nnz}(\tilde{B}\tilde{D}\tilde{B}^T)=0.2003\). c Example 5c I: Eigenvalue distribution of \(\tilde{B}\tilde{D}\tilde{B}^T\). d Example 5c I: Eigenvalue distribution of \(M^{-1}\tilde{B}\tilde{D}\tilde{B}^T, \ \hbox {nnz} (M)/\hbox {nnz}(\tilde{B}\tilde{D}\tilde{B}^T)=0.0915\)

For the preconditioned conjugate gradient method (pcg), we set the maximum number of iterations to 100 when the simple preconditioner (29) is used and to 80 when the splitting preconditioner (26) is used. The tolerance is set to \(\max \{\min \{10^{-4},0.1||\xi ||_2\},10^{-6}\}\), where \(\xi \) is the residual given as in (4), i.e, we use tighter tolerance for the pcg when iterates are close to optimality.

Remark 5

For all of the two-dimensional problems in our numerical experiments, we always use direct methods for solving the linear systems (17) unless \(n> 4m\) including the case when the option is set to use iterative methods. This is because, direct methods significantly outperform the iterative ones as long as n remains comparable to m. In practice, we observed that this happens only in the first two member adding iterations for the AP3 filtering approach which is our choice.

7.3 The warm-start

If the option to use the warm-starting strategy is set to ’on’, then it is activated as early as in the third member adding iteration. We use the solutions obtained with tolerance \(\varepsilon _{opt}=0.1\) for warm-starting the third and fourth, \(\varepsilon _{opt}=0.01\) for the fifth and sixth, and \(\varepsilon _{opt}=0.001\) for the seventh and the other subsequent problem instances in the member adding iterations. This is because, the similarity between the problems increases as we approach the last stages of the member adding iterations.

Remark 6

When the problems are too close, i.e., when only very few members are added the initial points used in warm-starts often correspond to optimality tolerances which are smaller than the prescribed ones 0.001–0.1. In that case, we save the solution obtained after the third interior point iteration.

7.4 Problem data

For the numerical experiments, we solve the problems displayed in Fig. 5. The loads are all nodal of unit magnitude. The dimensions of the design domains are \(1\times 2\), \(1\times 2\), \(2\times 1\times 1\), and \(1\times 1\times 3\) of unit lengths. The problems are solved for three levels of nodal density distributions. The problem instances are named as Example 5a I, Example 5a II, Example 5a III, Example 5b I and so on. Their statistics are given in Table 1. The number of degrees of freedom is based on the problem formulation (10), i.e, for the variables \((a,q_\ell ^{+},q_\ell ^{-}).\) See also Remark 7. In all cases, we consider equal tensile and compressive strengths of unity. The optimal designs are given in Fig. 6 where the bars shown are those with cross-sectional area \(\ge 0.001a_{\max }\).

Remark 7

Note that the size of the corresponding standard linear program of the form (1) with the matrix A in (14) can be obtained from Table 1. For example, for the three-dimensional problem Example 5c III with 163, 452, 240 bars, the corresponding standard linear program (1) will have 653, 808, 960 primal variables and 163, 506, 471 constraints. Of course, by applying the member adding technique we avoid the need of dealing with problems of such excessive sizes.

Optimal designs for the problems in Fig. 5. The bars shown are those with cross-sectional area \(\ge 0.001a_{\max }\)

7.5 Starting structures

We always start with the structures shown in Fig. 1a for two-dimensional problems and Fig. 1b for three-dimensional problems and then upgrade these structures based on the member adding procedure described in Sect. 3.1.

8 Numerical results

The reported CPU times correspond only to solving the optimization problems. In Tables 2, 3, 4, 5, 6 and 7, the label “Final num. of bars” refers to the number of bars considered in the linear programming problem formulation of the last member adding iteration and not the number of bars with non-zero cross-sectional area.

8.1 The filtering approaches

We compare the three filtering approaches AP1, AP2, and AP3 in the member adding process described in Sect. 3.1 applied to test problem Example 5a. It is solved for three nodal densities

using the interior point method described in this paper where the linear systems (17) are solved using direct methods, and the warm-start strategy is used. The numerical results are presented in Tables 2 and 3. In the first table, we present all approaches that additionally include the case when the problem is solved for all potential member bars. In the second table, we compare only AP2 and AP3 as the problem is larger in this case. In general, the results illustrate two outcomes. The first one is the efficiency of the member adding method, i.e, it obtains solutions using approximately \(1\%\) of all the possible members and it needs significantly less time than a method which uses all potential bars, see columns 6 and 7 in Table 2. The second observation is that the approach AP3 seems to outperform the others. It obtains solutions faster by using fewer member adding iterations keeping the sizes of the problems small enough, somewhere between those obtained by strategy AP2 with \(\alpha =0.1\) and \(\alpha =1\). We have also noticed this behaviour has been consistent for the other examples. Based on these results, AP3 becomes our method of choice and we follow this approach in all of the next examples.

8.2 Cold-start versus warm-start

In Table 4, we present numerical results to compare the warm-start and cold-start strategies. The linear systems (17) are solved using direct methods. As it can be seen in columns 5 and 10, the use of the warm-start strategy reduces the computational time. This is achieved by reducing the number of interior point iterations, see Table 5.

Remark 8

In the early member adding iterations, warm-starting strategy is able to save merely a few interior point iterations. However, in later stages of the member adding, when only a few new members are added and the problem instances do not change significantly, the warm-starting technique is very successful. In Table 5 the full history of the interior point iterations is reported for a number of examples with cold-start and warm-start strategies used. Additionally, in Fig. 7 we present the corresponding optimal designs obtained with cold start and warm start strategies, respectively. The reader will observe that there is no noticeable difference in the optimal solutions obtained with these two variants of the starting strategies.

Remark 9

We have observed that, the efficiency of the warm-start strategy further improves when the iterative methods are used to solve the linear systems. This is because it promotes the early start of the more efficient splitting preconditioner.

8.3 Direct methods versus iterative methods

In this section, we present numerical results to make comparisons between the use of the direct and iterative methods to solve linear system (17). For direct methods, we use the Matlab built-in function chol to find the Cholesky factorization of the coefficient matrix. Once again, we consider some of the problems listed in Table 1 and report their solution statistics in Table 6. The computational times reported in columns 4 and 8 demonstrate the efficiency of the iterative methods specially for the three-dimensional problems, see the CPU times for Example 5d II in Table 6. There are two main reasons why their efficiency for two-dimensional problems is not as pronounced as for three-dimensional ones. Firstly, as pointed out in Sect. 7, we needed more non-zero entries of the diagonal matrix \(\tilde{D}\) to determine the splitting preconditioner for the two-dimensional problems. This makes the preconditioner more dense and more expensive. Secondly, the linear systems solved to compute the predictor and corrector directions require relatively larger number of pcg iterations for two-dimensional problems, see columns 11 and 12 of Table 6. These pcg iterations seem to be reduced if we consider a denser preconditioner, but this leads to longer run times.

It is worth mentioning that three-dimensional problems are more relevant for practical applications. However, they involve more complicated grids and this causes a quick loss of sparsity in computations. The use of iterative linear algebra solver produces more spectacular savings over direct methods in this case.

8.4 Large-scale problems

In this section, we consider solutions of all the problem instances listed in Table 1 including the ones with the finest nodal density distributions. We include numerical results when the problems are solved by a commercial solver MOSEK (version 7) [27] with Matlab interface. This is to give an insight into the overall performance of the interior point method of this paper and the techniques employed in reference to existing solvers. For MOSEK, we set the algorithm to interior point method, the maximum number of interior point iterations to 70, pre-solve ’on’, the primal and dual feasibility tolerances \(10^{-6}\), a member adding dependant optimality tolerance \([10^{-2},10^{-2},10^{-2},10^{-2},\) \(10^{-3},10^{-4}]\), and then always \(10^{-5}\). This is similar to the settings presented in Sect. 7 for our implementation.

Remark 10

The numerical results in Table 7 for using MOSEK and our version of interior point method should not be considered as a direct comparison between the two solvers for many reasons. There are important differences between these two solvers. First, the implementations use different programming languages. Moreover, MOSEK uses direct methods to solve linear systems in its interior point implementation. In addition, the linear systems are reduced only to the normal equation systems (9) (or (15) for the truss layout problems of this paper) and not further to the smaller system (17). MOSEK uses powerful presolving which may significantly reduce the problem size and as a result simplify the normal equations. For example, MOSEK’s CPU with pre-solve turned ‘off’ for the first eleven problems in Table 7 is 75, 250, 522, 214, 605, 1890, 99, 785, 8413, 221, and 9555 s. Our solver does not use pre-solve. Finally, MOSEK uses its own default initial point and does not use a warm-start point.

The numerical results presented in Table 7, particularly columns 4 and 8, show that the IPM of this paper is competitive for two-dimensional problems and very efficient for three-dimensional problems, see the last four rows of these columns. Once again as mentioned in the discussion on direct versus iterative methods, most practical applications are spacial, i.e., three-dimensional problems, and they are more challenging to solve due to their excessive size. Therefore, having an efficient tool such as the one presented in this paper able to solve three-dimensional problems is paramount.

Remark 11

Note that for a very dense nodal distribution, the solution of test problem Example 5a is expected to be \(\pi \) [10], and those of problems Example 5b and d are expected to converge to 7.78480 and 19.67476 which are the corresponding analytic solutions to the exact least-weight truss layout problems as shown in [31] and [34], respectively.

9 Conclusions

We have described a primal-dual interior point method that exploits the algebraic structure of multiple-load plastic layout optimization of truss structures. The method is supported with a warm-start strategy that benefits from the closeness of the problems after performing few member adding iterations. Moreover, large linear systems arising in the interior point method are solved using Krylov type iterative method. The numerical results in Sect. 8 illustrate the overall efficiency of the method to solve large-scale problems. The method excels on three-dimensional problems which are more challenging due to high connectivity of the grids involved, and are significantly more important for practical engineering applications than two-dimensional problems.

References

Achtziger, W.: Truss topology optimization including bar properties different for tension and compression. Struct. Optim. 12(1), 63–74 (1996)

Achtziger, W.: Multiple-load truss topology and sizing optimization: some properties of minimax compliance. J. Optim. Theory Appl. 98(2), 255–280 (1998)

Achtziger, W., Bendsøe, M., Ben-Tal, A., Zowe, J.: Equivalent displacement based formulations for maximum strength truss topology design. IMPACT Comput. Sci. Eng. 4(4), 315–345 (1992)

Ben-Tal, A., Bendsøe, M.P.: A new method for optimal truss topology design. SIAM J. Optim. 3(2), 322–358 (1993)

Bendsøe, M., Sigmund, O.: Topology Optimization: Theory, Methods and Applications. Springer, Berlin (2003)

Chai, J.S., Toh, K.C.: Preconditioning and iterative solution of symmetric indefinite linear systems arising from interior point methods for linear programming. Comput. Optim. Appl. 36(2), 221–247 (2007)

D’Apuzzo, M., De Simone, V., di Serafino, D.: On mutual impact of numerical linear algebra and large-scale optimization with focus on interior point methods. Comput. Optim. Appl. 45(2), 283–310 (2010)

Dorn, W., Gomory, R., Greenberg, H.: Automatic design of optimal structures. J. de Mécanique 3, 25–52 (1964)

Gilbert, M., Darwich, W., Tyas, A., Shepherd, P.: Application of large-scale layout optimization techniques in structural engineering practice. In: 6th world congresses of structural and multidisciplinary optimization (2005)

Gilbert, M., Tyas, A.: Layout optimization of large-scale pin-jointed frames. Eng. Comput. 20(8), 1044–1064 (2003)

Gondzio, J.: Warm start of the primal-dual method applied in the cutting-plane scheme. Math. Program. 83(1), 125–143 (1998)

Gondzio, J.: Interior point methods 25 years later. Eur. J. Oper. Res. 218(3), 587–601 (2012)

Gondzio, J., González-Brevis, P.: A new warmstarting strategy for the primal-dual column generation method. Math. Program. 152(1), 113–146 (2015)

Gondzio, J., González-Brevis, P., Munari, P.: Large-scale optimization with the primal-dual column generation method. Math. Program. Comput. 8(1), 47–82 (2016)

Graczykowski, C., Lewiński, T.: Michell cantilevers constructed within trapezoidal domains: Part IV: complete exact solutions of selected optimal designs and their approximations by trusses of finite number of joints. Struct. Multidiscip. Optim. 33, 113–129 (2007)

He, L., Gilbert, M.: Rationalization of trusses generated via layout optimization. Struct. Multidiscip. Optim. 52(4), 677–694 (2015)

Hemp, W.: Optimum Structures. Clarendon Press, Oxford (1973)

Hestenes, M.R., Stiefel, E.: Methods of conjugate gradients for solving linear systems. J. Res. Natl. Bur. Stand 49, 409–436 (1952)

Jarre, F., Kočvara, M., Zowe, J.: Optimal truss design by interior-point methods. SIAM J. Optim. 8(4), 1084–1107 (1998)

Levy, R., Su, H.H., Kočvara, M.: On the modeling and solving of the truss design problem with global stability constraints. Struct. Multidiscip. Optim. 26(5), 367–368 (2004)

Lewiński, T., Zhou, M., Rozvany, G.I.N.: Exact least-weight truss layouts for rectangular domains with various support conditions. Struct. Optim. 6(1), 65–67 (1993)

Lewiński, T., Zhou, M., Rozvany, G.I.N.: Extended exact least-weight truss layouts-Part II: Unsymmetric cantilevers. Int. J. Mech. Sci. 36, 399–419 (1994)

Lewiński, T., Zhou, M., Rozvany, G.I.N.: Extended exact solutions for least-weight truss layouts-Part I: cantilever with a horizontal axis of symmetry. Int. J. Mech. Sci. 36(5), 375–398 (1994)

Lübbecke, M.E., Desrosiers, J.: Selected topics in column generation. Oper. Res. 53(6), 1007–1023 (2005)

Mehrotra, S.: On the implementation of a primal-dual interior point method. SIAM J. Optim. 2(3), 435–449 (1992)

Michell, A.G.M.: The limits of economy of material in frame structures. Phil. Mag. 8(47), 589–597 (1904)

MOSEK ApS: The MOSEK optimization toolbox for MATLAB manual Version 7.1 (Revision 63) (2015). https://docs.mosek.com/7.1/toolbox/index.html

Pedersen, P.: On the optimal layout of multi-purpose trusses. Comput. Struct. 2(2), 695–712 (1972)

Rozvany, G.I.N., Bendsøe, M.: Layout optimization of structure. Appl. Mech. 48(2), 41–119 (1994)

Rozvany, G.I.N., Hill, R.: Optimal plastic design: superposition principles and bounds on the minimum cost. Comput. Methods Appl. Mech. Eng. 13(2), 151–173 (1978)

Rozvany, G.I.N., Sokół, T., Pomezanski, V.: Fundamentals of exact multi-load topology optimization—stress-based least-volume trusses (generalized Michell structures)—Part I: plastic design. Struct. Multidiscip. Optim. 50(6), 1051–1078 (2014)

Saad, Y.: Iterative Methods for Sparse Linear Systems. Society for Industrial and Applied Mathematics, Philadelphia (2003)

Sokół, T., Rozvany, G.I.N.: On the adaptive ground structure approach for multi-load truss topology optimization. In: 10th world congresses of structural and multidisciplinary optimization (2013)

Sokół, T., Rozvany, G.I.N.: A new adaptive ground structure method for multi-load spatial Michell structures. In: 3rd Polish congress of mechanics and 21st computer methods in mechanics (2015)

Stolpe, M.: Truss optimization with discrete design variables: a critical review. Struct. Multidiscip. Optim. 53(2), 349–374 (2016)

Stolpe, M., Svanberg, K.: A stress-constrained truss-topology and material-selection problem that can be solved by linear programming. Struct. Multidiscip. Optim. 27(1), 126–129 (2004)

Wright, S.J.: Primal-Dual Interior-Point Methods. SIAM, Philadelphia (1997)

Acknowledgements

The research was supported by EPSRC Grant EP/N019652/1.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Weldeyesus, A.G., Gondzio, J. A specialized primal-dual interior point method for the plastic truss layout optimization. Comput Optim Appl 71, 613–640 (2018). https://doi.org/10.1007/s10589-018-0028-9

Received:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10589-018-0028-9