Abstract

The cellular automata model was described by John von Neumann and his colleagues in the 1950s as a representation of information processing in multicellular tissue. With crystalline arrays of cells and synchronous activity, it missed the mark (Stark and Hughes, BioSystems 55:107–117, 2000). Recently, amorphous computing, a valid model for morphogenesis in multicellular information processing, has begun to fill the void. Through simple examples and elementary mathematics, this paper begins a computation theory for this important new direction.


1 Introduction

Multicellular information processing is the computational basis for morphogenesis in living organisms. How can a process compute a pattern while working in a multicellular medium which lacks a rigidly defined architecture for sharing information? The process is distributed over a network of similar cells, growing and dividing at different speeds, and reacting to different sets of neighbors. Chaotic irregularities in neighborhood structure, in computational speed and in cell-to-cell communication seem to fly in the face of the strict order and control usually expected of computational processes. The creation of order in an asynchronous, randomly structured network of cells is the mystery of amorphous computing.

This paper presents a mathematical framework for amorphous processes which gracefully supports routine calculations, proofs of theorems and formal computation theory. First, the model is defined. Then more than a dozen processes are described. Their behavior is explored mathematically and by careful proofs of basic theorems. Useful mathematical structures (e.g., the state-transition graph, computations as Markov chains) develop out of applications of the model. The examples are seen to illustrate important programming styles. An algebraic approach to complexity is demonstrated through detailed calculations. The last half of the paper begins with proofs of non-computability results and an excursion into classical recursion theory. Finally, I conclude with a methodical construction of TearS—a complex dynamic amorphous process.

The wonderful possibility of extending computation theory to distributed systems such as these came to me in conversations with Professor John McCarthy (1978, Stanford University, Palo Alto, CA). Subsequently, I have received significant help in developing and publishing these ideas from Dr Leon Kotin (Fort Monmouth, NJ), Dr Edmund Grant (Tampa, FL), two very helpful referees and the editor of this journal. I sincerely thank all six of you.

“… organisms can be viewed as made up of parts which, to an extent, are independent elementary units. … [A major problem] consists of how these elements are organized into a whole …” (John von Neumann 1948)

“[A] mathematical model of a growing embryo [is] described … [it] will be a simplification and an idealization, … cells are geometrical points … One proceeds as with a physical theory and defines ‘the [global] state of the system’.” (Alan Turing 1952)

“An amorphous computing medium is a system of irregularly placed, asynchronous, locally interacting computing elements. I have demonstrated that amorphous media can … generate highly complex pre-specified patterns. … [I]nspired by a botanical metaphor based on growing points and tropisms, … a growing point propagates through the medium.” (Daniel Coore 1999)

2 The model

A fixed finite-state automaton A is used to characterize the computational behavior of each cell in a multicellular network. The initial and accepting states are not used, so the automaton is represented by

\(A = (Q, Q^+, \alpha, \mathrm{input})\)

where Q is the set of cell-values, \(Q^+\) is the set of input values (describing multisets of values of neighboring cells), α is the value transition function (or relation in the non-deterministic case)

\(\alpha: Q\times Q^+\rightarrow Q\)

giving an active cell’s next value, and input is a function (or relation) which reduces the values of neighbors to a value in \(Q^+\).

The network consists of a set C of cells connected by a set \(E\subseteq C^2\) of edges. (C, E) is assumed to be a finite simple graph, i.e., edges are undirected (cd ∈ E if dc ∈ E), there are no self-edges (\(cc \notin E\)), and there are no multiple edges. Edges represent communication with neighbors (Fig. 1). Using E ambiguously, the set of cells neighboring a given cell c is denoted E(c) = {d | cd ∈ E}, and when c is included, \(E^+(c) = E(c)\cup\{c\}\). The cell-values seen in E(c) give us a multiset M which characterizes c’s environment. In the theory, (C, E) is a variable. The input function reduces multisets M to

\(\mathrm{input}(M)\in Q^+.\)

\(Q^+\) is a finite set of values describing multisets of Q, so it may be convenient to include Q in \(Q^+\). The cells of E(c) are anonymous (i.e., they have no addresses and so cannot be distinguished), so the various assignments of values to neighbors that give, for example, M = {1, 0, 0} are not distinguished by input.

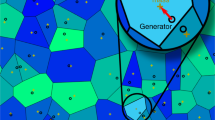

Fig. 1 A network (C, E) of 200 cells on a torus for HearT (Sect. 7)

The term state is reserved for networks. A function \(s: C\rightarrow Q\) indicating values \(s(c)\in Q\) for cells c is a (network) state. The multiset M of neighboring values at c is now

\(M = s[E(c)].\)

But c sees only \(\mathrm{input}(s[E(c)])\in Q^+\). The set of all states is a set exponent

\(Q^C = \{s \mid s: C\rightarrow Q\},\)

a standard notation motivated by

\(\|Q^C\| = \|Q\|^{\|C\|}.\)

\((Q^C)^\mathbb{N}\) is the set of all infinite sequences of states.

An automaton A describes how each cell changes its value. Given a state s, an active cell c will change its value from s(c) to α(s(c), input(s[E(c)])). Communication is based on c reading information from its environment, rather than neighbors sending messages to c, so there will be no input buffers and no overflow problems.

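Cell-activity in amorphous computing is asynchronous. Specifically, a state change is determined by the activity of a random set \(\sigma\subseteq C\) of cells. Given s and σ, the next state s′ is

\(s'(c) = \alpha(s(c), \mathrm{input}(s[E(c)]))\) if \(c\in\sigma\), and \(s'(c) = s(c)\) if \(c\notin\sigma\).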

This step is denoted \(s\Rightarrow_\sigma s'\). Write \(s\Rightarrow s'\) when the transition is possible for some σ. Usually (if α and input are functions) non-determinism is restricted to the random choice of σ, and so \(s\Rightarrow_\sigma s'\) and \(s\Rightarrow_\sigma s''\) imply \(s' = s''\).
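A single asynchronous step \(s\Rightarrow_\sigma s'\) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the author's implementation: a state is a dict from cells to values, E(c) is an adjacency list, the multiset s[E(c)] is a `collections.Counter`, and the rule in the usage lines (input = set of neighbor values; an active cell flips when its own value is present) is an assumed encoding of 2PartitioN from Sect. 3. All function names are hypothetical.

```python
import random
from collections import Counter


def async_step(s, nbrs, alpha, inp, sigma):
    """One step s =>_sigma s': active cells apply alpha, all others keep their value.

    s     -- dict mapping each cell to its value (a network state)
    nbrs  -- dict mapping each cell c to the list E(c) of its neighbours
    alpha -- value transition function alpha(value, input_value)
    inp   -- input function reducing the multiset s[E(c)] (a Counter) to Q+
    sigma -- set of active cells
    """
    # Every active cell reads the *old* state s, so no update order is implied.
    return {
        c: alpha(s[c], inp(Counter(s[d] for d in nbrs[c]))) if c in sigma else s[c]
        for c in s
    }


def random_activity(cells, delta, rng=random):
    """Random sigma: each cell is active independently with probability delta."""
    return {c for c in cells if rng.random() < delta}


# Assumed encoding of the 2PartitioN rule: input = set of neighbour values,
# and an active cell flips when its own value occurs among its neighbours.
def inp_2p(multiset):
    return frozenset(multiset)


def alpha_2p(value, seen):
    return 1 - value if value in seen else value


path = {0: [1], 1: [0, 2], 2: [1]}          # a path of three cells
s0 = {0: 0, 1: 0, 2: 1}
s1 = async_step(s0, path, alpha_2p, inp_2p, {0})
```

Passing `sigma = set(s)` gives a synchronous step, which the text treats as a special case outside the amorphous ideal.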

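A schedule \(\sigma_0,\ldots,\sigma_n,\ldots\) of cell activity from an initial state \(s_0\) determines a computation

\(s_0\Rightarrow_{\sigma_0} s_1\Rightarrow_{\sigma_1}\cdots\Rightarrow_{\sigma_{n-1}} s_n\Rightarrow_{\sigma_n}\cdots\)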

Computations may be visualized as infinite paths through the tree of all finite sequences of states ordered by sequence-extension. If at some point \(s_n\Rightarrow_\sigma s_n\) for all σ (equivalently, \(s_n\Rightarrow_C s_n\)), then \(s_n\) is a halting state and the computation is said to halt. Whenever \(s(c)\ne s'(c)\) for \(s\Rightarrow_c s'\), the cell c is said to be unstable in s.

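The measurement of behavior is based on the probability of activity \(P_a(\sigma)\) and the initial-state probability \(P_i(s)\). Often \(P_a(\sigma) = \delta^{\|\sigma\|}(1-\delta)^{\|C-\sigma\|}\), where δ is the probability of a single cell being active, and \(P_i(s) = \|Q\|^{-\|C\|}\). The probability of a computation step \(s\Rightarrow s'\) is

\(P_s(s,s') = \sum_{\sigma:\, s\Rightarrow_\sigma s'} P_a(\sigma) = \delta^{\|\rho\|}(1-\delta)^{\|C-\zeta-\rho\|}\)

where \(\zeta = \{d\in C \mid s\Rightarrow_d s\}\) is the set of cells stable in s and \(\rho = \{c \mid s(c)\ne s'(c)\}\) is the set of cells that must change their value. \(P_s(s,s') = 0\) if \(s\Rightarrow s'\) does not hold. For every s, \(\sum_{t\in Q^C} P_s(s,t) = 1\). The probability of a finite sequence being generated as a computation is

\(P_c\{\langle s_0,\ldots,s_m\rangle\} = P_i(s_0)\prod_{j=0}^{m-1} P_s(s_j, s_{j+1}).\)

For example, \(P_c\{\langle s_0, s_0\rangle\} = P_i(s_0)\cdot P_s(s_0, s_0) = \|Q\|^{-\|C\|}(1-\delta)^{\|C-\zeta\|}\), where ρ is empty and C − ζ is the set of cells not stable in \(s_0\) (which must therefore be inactive).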
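The closed form for \(P_s(s,s')\) can be checked against its definition as a sum of \(P_a(\sigma)\) over all σ with \(s\Rightarrow_\sigma s'\). The sketch below does this with exact fractions on a three-cell path, assuming deterministic α and input and independent activity with probability δ; the 2PartitioN-style rule and all helper names are illustrative assumptions, not taken from the paper.

```python
from collections import Counter
from fractions import Fraction
from itertools import combinations, product


def next_value(s, nbrs, alpha, inp, c):
    """Value that cell c would take if it were active in state s."""
    return alpha(s[c], inp(Counter(s[d] for d in nbrs[c])))


def p_step_bruteforce(s, t, nbrs, alpha, inp, delta):
    """P_s(s, t) as the sum of P_a(sigma) over every sigma with s =>_sigma t."""
    cells = list(s)
    total = Fraction(0)
    for k in range(len(cells) + 1):
        for sigma in combinations(cells, k):
            nxt = {c: next_value(s, nbrs, alpha, inp, c) if c in sigma else s[c]
                   for c in cells}
            if nxt == t:
                total += delta ** k * (1 - delta) ** (len(cells) - k)
    return total


def p_step_closed_form(s, t, nbrs, alpha, inp, delta):
    """delta^|rho| (1 - delta)^|C - zeta - rho|, or 0 when s => t is impossible."""
    cells = set(s)
    new = {c: next_value(s, nbrs, alpha, inp, c) for c in cells}
    zeta = {c for c in cells if new[c] == s[c]}   # cells stable in s
    rho = {c for c in cells if t[c] != s[c]}      # cells that must change
    if any(new[c] != t[c] for c in rho):          # a required change is not alpha's
        return Fraction(0)
    return delta ** len(rho) * (1 - delta) ** len(cells - zeta - rho)


def inp_2p(multiset):                 # assumed 2PartitioN-style input
    return frozenset(multiset)


def alpha_2p(value, seen):            # flip when own value is among neighbours
    return 1 - value if value in seen else value


path = {0: [1], 1: [0, 2], 2: [1]}
states = [dict(zip(path, vals)) for vals in product((0, 1), repeat=len(path))]
delta = Fraction(1, 3)
for s in states:
    row = [p_step_closed_form(s, t, path, alpha_2p, inp_2p, delta) for t in states]
    assert sum(row) == 1                                   # each row is a distribution
    for t, p in zip(states, row):
        assert p == p_step_bruteforce(s, t, path, alpha_2p, inp_2p, delta)
```

The brute-force version enumerates all \(2^{\|C\|}\) activity sets, so it only serves as a check; the closed form needs one pass over the cells.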

Finally, the probability measure μ on the set \((Q^C)^\mathbb{N}\) of all infinite sequences \(\overline s\) of states begins on the basic open sets \(\mathcal O^{\langle s_0,\ldots,s_m\rangle}\) of infinite sequences extending \(\langle s_0,\ldots,s_m\rangle\):

\(\mu\big(\mathcal O^{\langle s_0,\ldots,s_m\rangle}\big) = P_c\{\langle s_0,\ldots,s_m\rangle\}\)

and continues on to the Borel sets using Boolean operations and limits. For example, in a few minutes one can work from this definition to the measure of \({\mathcal C}\), the set of all computations, to get \(\mu({\mathcal C})=1\) and so the infinite set \((Q^C)^\mathbb{N}-{\mathcal C}\) of infinite sequences which are not computations has measure 0. \((Q^C)^\mathbb{N}\) is an infinite set as large as the set of real numbers ℜ.

The analysis presented here sees behavior in the context of the set of all computations. The probability of success or failure is the μ-measure of corresponding sets of computations. Footnote 1 The potential decisions, which step-by-step generate branches filling out these sets of computations, should be viewed as thermodynamic events. In many ways, the set of \(2^{\aleph_0}\) potential computations is analogous to the decimals of [0,1].

Finally, there are issues of permissible information. If we are to study amorphous computing, then the introduction of information that is not computed is as forbidden to us as sneezing into a petri dish would be to a microbiologist. Thus, synchronous activity, serial activity and waves of activity are special cases falling outside of the ideal. The ideal I am speaking of is mathematical (the basis of mathematical tractability); it is not necessarily what we see in Nature.

Homogeneous programming has every cell using the same program—this is to avoid hiding information in the program assignment. But the cell program can have irrevocable branches which lead to different cells committed to different subprograms; this is acceptable because the pattern is computed from a random initial state in a uniform way.

These concerns lead to processes that I call absolutely amorphous. Absolutely amorphous processes are given the information in (Q, Q +, α, input) only. They are important to the theory, but are not common in Nature. Daniel Coore’s model (2005) is amorphous but not absolutely amorphous. It allows some structure outside of α and input—e.g., non-random initialization of cells, cells with individual clocks, Footnote 2 and wave-like cell activity. After the non-computability result in Sect. 9, models that are not so absolutely amorphous take center stage.

Each (C, E) represents a particular architecture for sharing information. In order to study amorphous processes, (C, E) must vary freely as the general architecture-free theory evolves. Presently, (C, E) does not vary while a process is running, except in TearS, which is dynamic in that cells may die, new cells may be added and cells may move.

The investigations presented here build on this model—demonstrating its generality in examples, its tractability in calculations and proofs, and its power as a foundation for a computation theory. Except where credit is given, the analysis, theorems and proofs presented here are the work of the author.

3 2PartitioN

Imagine programming a living cell. Colonies of your cells eventually develop links Footnote 3 allowing neighboring cells to exchange information. You hope that the cell–cell communication will lead to a global behavior for the colony, even though the architecture and the speed with which cells execute their program are not under your control. Nevertheless, using only your ability to program a cell, you hope to orchestrate the behavior of the colony.

The first programming experiment is named 2PartitioN. In it, cells are finite-state automata with values \(Q=\{0,1\}\) and with the ability to read the collective values of neighbors. According to the program, an active cell will change its value if it has a neighbor with the same value. In other words, if an active cell c with value s(c) has a neighbor d with s(c) = s(d), then it will change its value to 1 − s(c).

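2PartitioN is defined by Q = {0, 1}, \(Q^+ = Q\cup\{01\}\),

\(\alpha(v, x) = 1 - v\) if \(x\in\{v, 01\}\), and \(\alpha(v, x) = v\) otherwise,

and for every multiset M of neighboring cell-values

\(\mathrm{input}(M) = 0\) if M contains 0 but not 1, \(\mathrm{input}(M) = 1\) if M contains 1 but not 0, and \(\mathrm{input}(M) = 01\) if M contains both.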

On \(C_5\), a ring of five cells, 2PartitioN never halts because a circuit of odd length cannot avoid a 00 or 11 edge. A halting state exists if and only if the colony’s network is bipartite, Footnote 4 i.e., if and only if there is a partition for our process to find. This is true for every finite network. Thus, the network is a free variable, as it must be in amorphous computing, and 2PartitioN is a general program for determining bipartiteness. The input is the network.
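The claim that 2PartitioN has a halting state exactly on bipartite networks can be checked exhaustively on small rings. In this sketch (helper names are illustrative), a state is halting for 2PartitioN when no edge joins two cells of equal value; the result is compared with an ordinary graph-search 2-coloring test.

```python
from itertools import product


def ring(n):
    """Adjacency lists of the circuit C_n (n >= 3)."""
    return {c: [(c - 1) % n, (c + 1) % n] for c in range(n)}


def halting_states_2partition(nbrs):
    """All states with no 00 or 11 edge, i.e. no unstable cell under 2PartitioN."""
    cells = list(nbrs)
    found = []
    for vals in product((0, 1), repeat=len(cells)):
        s = dict(zip(cells, vals))
        if all(s[c] != s[d] for c in cells for d in nbrs[c]):
            found.append(s)
    return found


def is_bipartite(nbrs):
    """Classical 2-colouring by graph search; False as soon as an edge clashes."""
    colour = {}
    for start in nbrs:
        if start in colour:
            continue
        colour[start] = 0
        stack = [start]
        while stack:
            c = stack.pop()
            for d in nbrs[c]:
                if d not in colour:
                    colour[d] = 1 - colour[c]
                    stack.append(d)
                elif colour[d] == colour[c]:
                    return False
    return True


for n in range(3, 9):
    net = ring(n)
    assert bool(halting_states_2partition(net)) == is_bipartite(net)
```

On a connected bipartite network there are exactly two halting states, the two colorings of its bipartition.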

Given \(X\subseteq C\), the external boundary ∂X of X is defined by

\(\partial X = \{d\in C - X \mid cd\in E \text{ for some } c\in X\}.\)

Theorem 1

Halting is Possible for 2PartitioN For bipartite (C, E), halting states exist and every \(s_0\Rightarrow_{\sigma_0}\cdots\Rightarrow_{\sigma_{m-1}}s_m\) has an extension

\(s_m\Rightarrow_{\sigma_m} s_{m+1}\Rightarrow_{\sigma_{m+1}}\cdots\Rightarrow_{\sigma_{m+N-1}} s_{m+N}\)

with \(s_{m+N}\) halting. If (C, E) is not bipartite, then halting is impossible. Footnote 5

Proof

Assume that (C, E) is bipartite. For \(s_m\), let \(X_m\subseteq C\) be a maximal connected set over which \(cd\in E\cap X_m^2\) implies \(s_m(c)\ne s_m(d)\); then

\(s_m\Rightarrow_{\partial X_m} s_{m+1}\)

defines \(s_{m+1}\). If \(s_m\) is not halting, then \(X_m\ne C\) and \(\partial X_m\) is a non-void set of unstable cells, and \(s_{m+1}(d) = 1 - s_m(d) = 1 - s_m(c) = 1 - s_{m+1}(c)\) for every \(d\in\partial X_m\), where \(c\in X_m\) is a neighbor of d with \(s_m(c) = s_m(d)\). Choose \(X_{m+1}\) to be a maximal connected extension of \(X_m\cup\partial X_m\) over which \(s_{m+1}(c)\ne s_{m+1}(d)\).

Continue with \(s_{m+1}\Rightarrow_{\partial X_{m+1}} s_{m+2}\), etc. Since \(X_m\subset X_{m+1}\subset\cdots\subseteq C\), the process reaches C in \(N\le\|C\|\) steps. A fixed point \(X_m = X_{m+1}\) implies that \(\partial X_m\subseteq X_{m+1}-X_m\) is empty, and so \(X_m = C\). At this point \(s_{m+N}\) is halting. This computation is just one of infinitely many. □

Another approach to proving such theorems begins with a halting state r. Then set \(X_m\) to be the maximal set (not necessarily connected) of cells c for which \(s_m(c) = r(c)\). If \(s_m\) is not halting, then \(\partial X_m\) is non-void and we may continue as above.

Theorem 2

Non-Halting States are ⇒-Connected Given any (C, E) and any pair \(s_0\), t of states, with \(s_0\) non-halting, a computation \(s_0\Rightarrow\cdots\Rightarrow t\) from \(s_0\) to t exists.

Proof

W.l.o.g., assume cd ∈ E with \(s_0(c) = s_0(d) = 0\). Let \(X_0\) be the maximal connected subset of \(s_0^{-1}(0) = \{c \mid s_0(c) = 0\}\) containing c and d; then \(s_0\Rightarrow_{X_0} s_1\). If \(X_0\) has more than one cell, then every cell in \(X_0\) is unstable, so \(X_0\subseteq s_1^{-1}(1)\). For odd j, let \(X_j\) be the maximal connected subset of \(s_j^{-1}(1)\) which extends \(X_{j-1}\); for even j, let \(X_j\) be the maximal connected subset of \(s_j^{-1}(0)\) which extends \(X_{j-1}\). Then \(s_j\Rightarrow_{X_j} s_{j+1}\). When \(s_k^{-1}(0) = C\), we set \(X_k = t^{-1}(1)\) and get \(s_k\Rightarrow_{X_k} t\). □

Theorem 3

2PartitioN Halts on Bipartite Networks Given a bipartite (C, E) and a random computation \(s_0\Rightarrow\cdots\Rightarrow s_m\Rightarrow\cdots\) for 2PartitioN,

\(P\{s_m \text{ is halting for some } m\} = 1.\)

Proof

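If cells are active with probability 0.5, then \(P\{\sigma \text{ is active}\} = 0.5^{\|\sigma\|}\cdot 0.5^{\|C-\sigma\|} = 0.5^{\|C\|}\). In general, if every cell c has a probability of activity \(1>\delta_c>0\), then there is a \(\delta>0\) for which

\(P\{\sigma \text{ is active}\}\ge\delta\) for every \(\sigma\subseteq C\).

The probability of random activity taking a computation from \(s_0\) to t in at most \(\|C\|\) steps (as in Theorem 1) is at least \(\delta^{\|C\|}\). So for \(N = k\|C\|\),

\(P\{s_N \text{ is not halting}\}\le\big(1-\delta^{\|C\|}\big)^k.\)

Since \(\lim_{k\rightarrow\infty}\big(1-\delta^{\|C\|}\big)^k = 0\), the probability of never halting is 0. □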
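Theorem 3 can be illustrated (not proved) by simulation. The sketch below runs random computations of 2PartitioN with each cell active independently with probability δ = 0.5 from a uniformly random initial state; `run_2partition` and its step cap are illustrative choices, not part of the paper. On the bipartite ring \(C_6\) runs halt, while on \(C_5\), which has no halting state, they never do.

```python
import random


def ring(n):
    """Adjacency lists of the circuit C_n (n >= 3)."""
    return {c: [(c - 1) % n, (c + 1) % n] for c in range(n)}


def run_2partition(nbrs, delta=0.5, max_steps=100_000, seed=None):
    """Return (steps, final_state); steps is None if no halt within max_steps."""
    rng = random.Random(seed)
    s = {c: rng.randint(0, 1) for c in nbrs}          # uniform random initial state
    for n in range(max_steps):
        unstable = {c for c in nbrs if any(s[d] == s[c] for d in nbrs[c])}
        if not unstable:
            return n, s                                # halting state reached
        sigma = {c for c in nbrs if rng.random() < delta}
        # Active unstable cells flip; active stable cells and inactive cells keep values.
        s = {c: 1 - s[c] if c in sigma and c in unstable else s[c] for c in nbrs}
    return None, s


steps = [run_2partition(ring(6), seed=k)[0] for k in range(20)]
print(sum(steps) / len(steps))                         # sample mean halting time on C_6
```

A finite simulation can only suggest the probability-1 statement; the proof relies on the bound over every block of \(\|C\|\) steps.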

Of course this does not mean that every computation on a bipartite network halts. There are infinitely many non-halting computations: just start with a non-halting \(s_0\) and an edge ab ∈ E with \(s_0(a) = s_0(b)\), then exclude a and b from \(\sigma_0,\ldots,\sigma_m,\ldots\). Something similar is seen in basic measure theory: \(\mu([0,1]) = 1\) and \(\mu(R) = 0\) for the set R of rationals, so

\(\mu([0,1]-R) = 1\)

and still there are infinitely many numbers that are not irrational. Calculus, with infinite decimals as a metaphor for infinite computations and the set measures as metaphor for probability, is a part of my conceptual framework for amorphous computations.

In the space of all infinite computations, only three things are needed to deduce that some property (say halting) occurs with probability 1. First, every finite computation must have extensions with that property. Second, there must be an upper bound on the number of extra steps to get these extensions (this is a consequence of the finiteness of C and Q). Third, once an extension with the desired property is reached, every extension of the successful extension will have the property (true for halting states).

0,1-Lemma

Let A be a process and \(\Omega\) be a property of computations. If (1) extensions of finite computations with property \(\Omega\) also have \(\Omega\), and (2) every finite computation has an extension with property \(\Omega\), then with probability 1, A’s computations eventually have property \(\Omega\).

There may be infinitely many computations which never satisfy \(\Omega\), but this lemma tells us that we will never see them. The fairness condition stated in Section 2 of Aspnes and Ruppert (2007) describes the expected non-determinism in a way similar to this Lemma, then later states “an execution will be fair with probability 1”. This paper, which my referees brought to my attention, seems to be moving toward the same measure-theoretic view of non-determinism used here.

4 BeatniKs and 3PartitioN

Here we have two simple variations on 2PartitioN. The first, BeatniKs, is a relaxed version which can have halting states that are not accessible, and so this process can have a probability of halting strictly between 0 and 1. The second, 3PartitioN, solves the NP-complete problem of identifying 3-partite graphs.

Beatniks After World War II people who tried to be different might be called “beatniks”. Could everyone be a beatnik? Let Q = {0, 1}, \(Q^+ = Q\),

and for multisets M = s[E(c)]

where the random choice is evaluated every time that c is active. This process has halting states on networks which are bipartite or almost bipartite. Let \(K_n\) denote the complete graph on n vertices. On \(K_{2m}\) a state s is halting if and only if \(\|s^{-1}(0)\| = m\), so \(K_6\) has \(\binom{6}{3} = 20\) halting states. \(K_3, K_5,\ldots,K_{2m+1}\) have no halting states. For i > 2, \(K_i\) is not bipartite.
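The halting-state counts for complete networks can be checked by enumeration. Because the Beatniks equations are not reproduced here, the sketch assumes the stability criterion used in the proof of Theorem 4: a cell is stable only when strictly fewer than half of its neighbors share its value (otherwise input is 1 − s(c) or a random bit, so the cell may change). The helper names are illustrative.

```python
from itertools import product


def complete(n):
    """Adjacency lists of the complete graph K_n."""
    return {c: [d for d in range(n) if d != c] for c in range(n)}


def beatnik_stable(s, nbrs, c):
    """Assumed criterion: stable iff fewer than half of c's neighbours share s(c)."""
    same = sum(1 for d in nbrs[c] if s[d] == s[c])
    return 2 * same < len(nbrs[c])


def count_halting_beatniks(nbrs):
    """Number of states in which every cell is stable."""
    cells = list(nbrs)
    count = 0
    for vals in product((0, 1), repeat=len(cells)):
        s = dict(zip(cells, vals))
        if all(beatnik_stable(s, nbrs, c) for c in cells):
            count += 1
    return count


print([count_halting_beatniks(complete(n)) for n in range(2, 8)])   # [2, 0, 6, 0, 20, 0]
```

The even cases match \(\binom{2m}{m}\) and the odd cases are 0, as stated for \(K_{2m}\) and \(K_{2m+1}\).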

Theorem 4

BeatniKs May Not Halt There are no halting states on complete networks with 2m + 1 cells. Every tree with more than one cell has halting states. However, even when halting states exist they may be ⇒-inaccessible from other non-halting states. When such halting states exist the probability of halting is strictly between 0 and 1.

Proof

Every state s on an odd complete network \(K_{2m+1}\) will have at least m + 1 cells of a given value, say 0. For any 0-valued cell c, s[E(c)] has at least half of its values equal to s(c), and so input = 1 − s(c) or input = random({0, 1}); thus c is unstable. At least half of the cells in any state on \(K_{2m+1}\) are unstable. There are no halting states.

Given a tree, construct a halting state by assigning 0 to an arbitrary first cell, then 1 to its neighbors, then 0 to their neighbors, etc.

A binary tree \(T_7 = [w, [u_1, [v_1, v_2]], [v_3, [u_2, u_3]]]\) on \(C = \{u_1,\ldots,v_1,\ldots,w\}\) has four never-halting states \(r_1, r_2, r_3, r_4\) defined by

\(r_3 = 1 - r_1\) and \(r_4 = 1 - r_2\). In each state, w is unstable, while the \(u_i\) and \(v_j\) are stable. The sets \(\{r_1, r_2\}\) and \(\{r_3, r_4\}\) are closed under ⇒. Still, being a tree, \(T_7\) has halting states \(t_1\) and \(t_2 = 1 - t_1\). These halting states are inaccessible from \(r_1, r_2, r_3, r_4\). Initial states could be halting or never-halting and so, on such trees,

\(0 < P\{\text{a random computation halts}\} < 1.\)

□

It is not uncommon to see “something happens with probability 1” or, “… with probability 0”. But in Beatniks on trees like T7 the probability of halting is strictly between 0 and 1. This suggests that our work could go beyond traditional 0,1-laws and into analysis Footnote 6 using Lebesgue integration Footnote 7 of real functions on the product space \((Q^C)^\mathbb{N}\) with measure μ (Sect. 2).

3PartitioN Using a transition function α on Q = {0, 1, 2} which allows a cell c to be stable if \(s(c)\notin s[E(c)]\), tripartite graphs can be partitioned in a way similar to 2PartitioN on bipartite graphs. Although they are not what we think of as being amorphous, every circuit \(C_2, C_3,\ldots,C_m,\ldots\) has a halting state, Footnote 8 while the complete graphs \(K_4, K_5,\ldots,K_n,\ldots\) have no halting states. Footnote 9 Tripartite networks have at least six halting states.

This process is interesting because the problem of finding a partition for a tripartite graph is NP-complete (Garey and Johnson 1979). And so our eventual proof that 3PartitioN halts on tripartite (C, E) could be taken as a demonstration of the computational power of this model. Each network represents one instance of this family of problems. Since networks are free variables, one may think of them as being the input data structures. There is little intelligence in 3PartitioN. Instead, this process executes a blind search of the state space (which will take exponential time) until a state satisfying a simple test is reached. By contrast, the problem solved by 2PartitioN, recognizing bipartite graphs, can be decided in polynomial time and so is not NP-complete (unless P = NP).
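The blind-search character of 3PartitioN can be made concrete by counting its halting states. Assuming that a cell is stable exactly when \(s(c)\notin s[E(c)]\) (the text only states the "if" direction), halting states are the proper 3-colorings of (C, E); the exhaustive enumeration below visits all \(3^{\|C\|}\) states, which is the exponential cost the paragraph refers to. Function names are illustrative.

```python
from itertools import product


def ring(n):
    """Adjacency lists of the circuit C_n (n >= 3)."""
    return {c: [(c - 1) % n, (c + 1) % n] for c in range(n)}


def complete(n):
    """Adjacency lists of the complete graph K_n."""
    return {c: [d for d in range(n) if d != c] for c in range(n)}


def halting_states_3partition(nbrs):
    """States in which no cell's value appears among its neighbours' values."""
    cells = list(nbrs)
    found = []
    for vals in product((0, 1, 2), repeat=len(cells)):   # all 3^|C| states
        s = dict(zip(cells, vals))
        if all(s[c] not in {s[d] for d in nbrs[c]} for c in cells):
            found.append(s)
    return found


print(len(halting_states_3partition(ring(3))))      # 6
print(len(halting_states_3partition(ring(5))))      # 30
print(len(halting_states_3partition(complete(4))))  # 0
```

The counts agree with the text: circuits have halting states and \(K_4\) has none.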

Seven types of neighborhoods are described by \(Q^+ = \{0, 1, 2, 01, 02, 12, 012\}\). Let

Theorem 5

Halting for 3PartitioN If (C, E) is tripartite, then for every \(s_0\) there exists a halting computation \(s_0\Rightarrow_{\sigma_0}\cdots\Rightarrow_{\sigma_{N-1}}s_N\ldots\) with \(s_N\) appropriately partitioning (C, E) and \(N\le 2\|C\|\). 3PartitioN almost always (i.e., with probability 1) halts on tripartite (C, E).

Proof

Let (C, E) have a halting state t. If \(s_m\) is not halting, let

and \(s_m\Rightarrow_{\sigma_m} s_{m+1}\). Since unstable cells appear as edge pairs, \(\sigma_m\) is non-void. No cell c can change its value more than twice before it matches t(c) and is dropped from \(\sigma_m\). So the process halts (possibly at t) in at most \(2\|C\|\) steps. □

At first glance, this theorem seems to say that this NP-complete problem can be solved in at most \(2\|C\|\) steps. That reading is wrong. Remember that we started with an answer, t, and then designed a computation to lead us to it. We did not have to use a halting state in the proof of halting for 2PartitioN. So it is no surprise that this computation is short and to the point. The hard part is to find t. If we could always guess the right computation, then tripartition would be easy, and we would have something like P = NP. Footnote 10

Non-determinism plays a key role in this proof. Footnote 11 There are many ways to see that randomness can add real power to algorithms. One is based on comparing the asynchronous state-transition graph \((Q^C, \Rightarrow)\) to its synchronous subgraph \((Q^C, \Rightarrow_C)\), which is often not even connected. Since computations correspond to paths through the corresponding graph, the possibilities for asynchronous computations are far greater than for synchronous ones. Recently, Nazim Fates has made a similar point, “Randomness Helps Computating”, for cellular automata [11].

5 The state transition graph \((Q^C, \Rightarrow, T)\)

Computations can be viewed as paths through the state space. Asynchronous activity often gives computations access to nearly every state.

From an automaton \((Q, Q^+, \alpha, \mathrm{input})\) for cells and a network (C, E) defining neighborhoods, \((Q^C, \Rightarrow)\) is the state transition graph. The state-transition graph may contain halting states which eventually stop random paths. For other processes, computations may be trapped by attractors: minimal disjoint non-void subsets of \(Q^C\) which are closed under ⇒. Attractors are seen in HearT, HearT* and SpotS3.

2PartitioN is an unrestricted search which eventually stumbles into a halting state. Such searches take time which is exponential in \(\|C\|\): add one cell to C and the number of states (and the expected search time) increases by a factor of \(\|Q\|\). Search time is on the order of \(\|Q\|^{\|C\|}\). A computation could reach a state s one cell-value away from a halting state and still wander away from the solution, losing all of the ground gained. This is the nature of the undirected random search.

\(U(s)=||\{c\ |\ c\; \hbox{is unstable in }\; s\}||\) determines a gradient on the state space leading down to halting states. Giving a process the ability to move down such a gradient will dramatically improve the expected halting time; ideally, while preserving the power of asynchronous activity. This must be done locally for the process to remain amorphous.
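For 2PartitioN the instability count U(s) is easy to compute. The sketch below (illustrative names) tallies how many states of the ring \(C_4\) sit at each level of this gradient; halting states are exactly those with U(s) = 0.

```python
from collections import Counter
from itertools import product


def ring(n):
    """Adjacency lists of the circuit C_n (n >= 3)."""
    return {c: [(c - 1) % n, (c + 1) % n] for c in range(n)}


def instability(s, nbrs):
    """U(s): number of cells with a neighbour of the same value (2PartitioN)."""
    return sum(1 for c in nbrs if any(s[d] == s[c] for d in nbrs[c]))


net = ring(4)
levels = Counter(
    instability(dict(zip(net, vals)), net) for vals in product((0, 1), repeat=4)
)
print(sorted(levels.items()))   # [(0, 2), (3, 8), (4, 6)]
```

Only 2 of the 16 states are at the bottom of the gradient, and no state of \(C_4\) has exactly one or two unstable cells, so a single flip can drop U(s) by several units at once.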

The state space also provides a framework for algebraic calculations. From activity probabilities p(σ), we can define transition probabilities

\(T(s,t) = \sum_{\sigma:\, s\Rightarrow_\sigma t} p(\sigma).\)

Expected values can be defined and calculated. For example, the expected halting time of computations starting at s, h(s) (here defined on states s, not computations \(\overline s\) as in Sect. 4), is defined recursively by the equations

\(h(s) = 1 + \sum_{t\in Q^C} T(s,t)\,h(t)\) and \(h(r) = 0\)

for unstable s and halting r. If halting states exist, these equations may be solved. Using the uniform probability \(q(s) = \|Q\|^{-\|C\|}\) that s is initial, the expected halting time for the process is

\(\sum_{s\in Q^C} q(s)\,h(s).\)

This can be expressed by an equation using the transition matrix T.

Imagine states (linearly ordered, or with integer codes) as indices for vectors and matrices. Define the transition matrix T by

\(T_{s,t} = P_s(s,t),\)

the probability of the step \(s\Rightarrow t\). Probability vectors \(\overline q\) are column vectors indexed over \(Q^C\). Let \(\overline q_s = q(s)\) be the probability that s is an initial state. Then

\(\big(\overline q^{\top} T^m\big)_t\)

is the probability that t is the mth state of a random computation. Examples of these algebraic calculations are given in Sect. 6.
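The equations for h(s) can be solved exactly for very small networks. The sketch below builds \(T(s,t) = \sum_{\sigma:\, s\Rightarrow_\sigma t} p(\sigma)\) for 2PartitioN with independent activity probability δ, then solves \(h(s) - \sum_t T(s,t)h(t) = 1\) over the non-halting states by Gauss-Jordan elimination on fractions. It assumes a bipartite network (so halting is reachable from every state and the system is non-singular); all names are illustrative. On a single edge with δ = 1/2 it gives h = 2 for the states 00 and 11, and an expected halting time of 1 under the uniform initial distribution.

```python
from fractions import Fraction
from itertools import product


def transition_matrix(nbrs, delta):
    """States of 2PartitioN and the row-stochastic matrix T[i][j] = T(s_i, s_j)."""
    cells = list(nbrs)
    states = list(product((0, 1), repeat=len(cells)))
    index = {st: i for i, st in enumerate(states)}
    T = [[Fraction(0)] * len(states) for _ in states]
    for i, st in enumerate(states):
        s = dict(zip(cells, st))
        unstable = {c for c in cells if any(s[d] == s[c] for d in nbrs[c])}
        for mask in product((False, True), repeat=len(cells)):
            active = {c for c, on in zip(cells, mask) if on}
            p = delta ** len(active) * (1 - delta) ** (len(cells) - len(active))
            nxt = tuple(1 - s[c] if c in active and c in unstable else s[c] for c in cells)
            T[i][index[nxt]] += p
    return states, T


def solve(A, b):
    """Gauss-Jordan elimination over Fractions; A is assumed non-singular."""
    n = len(A)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = next(r for r in range(col, n) if M[r][col] != 0)
        M[col], M[piv] = M[piv], M[col]
        pivot = M[col][col]
        M[col] = [x / pivot for x in M[col]]
        for r in range(n):
            if r != col and M[r][col] != 0:
                f = M[r][col]
                M[r] = [a - f * x for a, x in zip(M[r], M[col])]
    return [M[r][n] for r in range(n)]


def expected_halting_times(T):
    """h(s) = 1 + sum_t T(s,t) h(t) for non-halting s, h(r) = 0 for halting r."""
    size = len(T)
    moving = [i for i in range(size) if T[i][i] != 1]     # non-halting states
    A = [[(1 if i == j else 0) - T[i][j] for j in moving] for i in moving]
    h = [Fraction(0)] * size
    for i, value in zip(moving, solve(A, [Fraction(1)] * len(moving))):
        h[i] = value
    return h


edge = {0: [1], 1: [0]}
states, T = transition_matrix(edge, Fraction(1, 2))
h = expected_halting_times(T)
H = sum(h) / len(states)        # uniform q(s) = |Q|^-|C|
```

A halting state r is recognized by \(T(r,r) = 1\), since every σ leaves it unchanged; with 0 < δ < 1 every other state has \(T(s,s) < 1\).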

Every process involves three graphs: the cell-communications graph (C, E), the value-transition graph (Q, →) defined by α, and the state-transition graph \((Q^C, \Rightarrow)\). A state \(s:(C,E)\rightarrow(Q,\rightarrow)\) is a homomorphism if it preserves edges, i.e., if

\(cd\in E\) implies \(s(c)\rightarrow s(d)\) or \(s(d)\rightarrow s(c)\).

In 2PartitioN, every state is a homomorphism because (Q, →) is complete.

Homomorphisms are similar to continuous functions. Homomorphisms also exist between (C, E) and itself, or \((Q^C, \Rightarrow)\) and itself. In these cases, a one-one homomorphism is an automorphism. \(\mathcal{H}\subset Q^C\) is the set of states which are homomorphisms. Any minimal non-void subset of \(Q^C\) which is closed under ⇒ is an attractor. Often, attractors are subsets of \(\mathcal{H}\), e.g., for HearT and 2PartitioN. On \(C_4\), HearT has one attractor which contains 168 homomorphisms (mechanically counted).

Now, in addition to infinite branches in a ⇒-tree (Sect. 2) and decimals in [0, 1], we have paths through the state graph \((Q^C, \Rightarrow, T)\) as metaphors for computation.

6 Four programming styles

2PartitioN may be thought of as a random search mechanism with a definition (in local terms) of a halting state. Search continues in response to a failure of a state to satisfy the definition. 2PartitioN* is a gradient-descending process whose computations tend to move down the instability gradient of \((Q^C, \Rightarrow, T)\) until the definition is satisfied. A third style can be seen in 2PartitioN#, a non-homogeneous Footnote 12 version of 2PartitioN which never activates a given cell. Search is now restricted to states extending the given cell’s original value. This is motivated by the idea of a crystal growing from a “seed”. A fourth style uses control on activity. In 2PartitioNw, cell activity occurs in a wave rolling through the network. A wave of activity is used by Turing (1952) in his solution to the leopards’ spots problem.

2PartitioN* For the instability gradient, we might try

and α(state,input) = 1 − input. But this process fails on the tree T7 of Theorem 4. The correct definition follows.

Let P{M = 0} be the fraction of M’s values equal to 0, define

and α(state, input) = input. For \(s_0\) on \(T_7\), \(\{s_0, s_1\}\) is no longer closed under ⇒. A state s is halting if and only if

which is equivalent to halting for 2PartitioN. But is 2PartitioN* faster?

Using the algebraic methods described in Sect. 5 on \(C_6\) with activity probabilities \(p(c) = 2^{-1}\) and \(Q^C\) indexed as follows

we calculate

Footnote 13 transition probabilities for 2PartitioN* (2PartitioN) as…

The expected halting times h(s) for 2PartitioN* are defined by equations

Equivalently, working in matrices and vectors with these indices, T*’s values include

with the symmetry \(T_{j,i}^{\ast} = T_{65-j,65-i}^{\ast}\). The expected halting times, for t halting and s non-halting, are defined using matrices as

where \(\overline 1\) is the vector of all 1’s and \(\overline h\) is the vector of expected halting times (e.g., \(\overline h_{22} = 0\)), all indexed over \(Q^C\). Solve these equations, then for the initial state use \(\overline q = \langle\cdots 2^{-6},\cdots\rangle\) to get

Add the edge 14 to C6 to get a network with slightly higher average cell degree—call it C4C4. Repeat the algebra and the speed-ups are better

So, on these small networks, algebraic analysis shows a significant speedup. I have no algebra for 2PartitioNw, but simulations suggest

and this method of waves has been demonstrated by Coore (1999).

Theorem 6

2PartitioN* Halting On a bipartite network, 2PartitioN* almost always halts.

Proof

Similar to the proof of halting for 2PartitioN except that (due to the non-determinism) \(\partial X_n\) and \(\partial X_{n+1}\) may not be disjoint, so \(X_0\subseteq\cdots\subseteq X_m\subseteq X_{m+1}\subseteq\cdots\subseteq C\).

If k is the maximal degree of cells in (C, E), then the expected number of times that an s-unstable cell c must be active before s(c) changes is at most \(k = \frac{1}{k}\cdot 1+\frac{1}{k}\frac{k-1}{k}\cdot 2+\cdots+\frac{1}{k}\big(\frac{k-1}{k}\big)^j(j+1)+\cdots\). For some \(\epsilon > 0\), these computations will halt in \(k\|C\|\) steps with probability \(\ge\epsilon\). And so, computations of length \(mk\|C\|\) fail to halt with probability \(< (1-\epsilon)^m\). Over the long run, these processes fail to halt with probability \(0 = \lim_{m\rightarrow\infty}(1-\epsilon)^m\). □
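The series in this proof is the mean of a geometric distribution. Reading it (as assumed here) as saying that each activation of an s-unstable cell changes its value with probability at least 1/k, the expected number of activations is bounded by the sum below, which evaluates to k using \(\sum_{j\ge 0}(j+1)x^j = (1-x)^{-2}\) for |x| < 1:

```latex
\sum_{j\ge 0}\frac{1}{k}\Big(\frac{k-1}{k}\Big)^{j}(j+1)
  = \frac{1}{k}\cdot\frac{1}{\big(1-\frac{k-1}{k}\big)^{2}}
  = \frac{1}{k}\cdot k^{2}
  = k .
```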

Theorem 7

2PartitioN# 2PartitioN# halts on bipartite networks.

Proof

The original proof for 2PartitioN works here.□

For 3PartitioN*, I have no gradient. A successful gradient for 3PartitioN would enable the process to halt in (expected) polynomial time—polynomial in ||C|| · log2(||Q||).

7 Patterns in attractors

Three versions of SpotS, for spatial patterns, and HearT*, for temporal patterns, are developed in this section. The SpotS processes were inspired by Turing (1952).

SpotS1 has values r for red and y for yellow. A red cell is not stable until its neighbors are all yellow. A yellow cell is not stable until it has a red neighbor. If reds are not tightly packed, then some yellow cell is surrounded by yellow cells; that cell is unstable and becomes red.
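SpotS1 as described can be simulated directly. In the sketch below (an illustrative encoding, not the paper's code), an active unstable red cell turns yellow and an active unstable yellow cell turns red. A halting state is one in which the red cells are pairwise non-adjacent and every yellow cell has a red neighbor, i.e., the reds form a maximal independent set of (C, E).

```python
import random


def ring(n):
    """Adjacency lists of the circuit C_n (n >= 3)."""
    return {c: [(c - 1) % n, (c + 1) % n] for c in range(n)}


def spots1_unstable(s, nbrs, c):
    """Red needs all-yellow neighbours; yellow needs at least one red neighbour."""
    has_red = any(s[d] == "r" for d in nbrs[c])
    return has_red if s[c] == "r" else not has_red


def run_spots1(nbrs, delta=0.5, max_steps=100_000, seed=None):
    """Return (steps, final_state); steps is None if no halt within max_steps."""
    rng = random.Random(seed)
    s = {c: rng.choice("ry") for c in nbrs}
    for n in range(max_steps):
        unstable = {c for c in nbrs if spots1_unstable(s, nbrs, c)}
        if not unstable:
            return n, s
        # Only unstable cells can change, so drawing activity for them suffices.
        sigma = {c for c in unstable if rng.random() < delta}
        s = {c: ("y" if s[c] == "r" else "r") if c in sigma else s[c] for c in nbrs}
    return None, s


steps, final = run_spots1(ring(9), seed=3)
print(steps, "".join(final[c] for c in sorted(final)))
```

Drawing activity only for unstable cells gives the same distribution of next states as drawing it for every cell, because an active stable cell keeps its value.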

Theorem 8

SpotS1 Halts On every (C, E), SpotS1 will halt.

Proof

First, build a halting state from a first red cell \(c_1\) by coloring \(\partial\{c_1\}\) yellow, then choose a second red cell \(c_2\in\partial(\{c_1\}\cup\partial\{c_1\})\), and so on until a halting state t has been constructed. Second, use t to construct computations satisfying the three necessary properties (Sect. 3); finally, conclude that almost every computation halts. □

SpotS2 This process wraps each red cell in a ring of blue cells and then constructs a minimal yellow background, with some white where unavoidable. White surrounded by white is unstable and becomes red. We require (1) reds to have blue neighbors only, (2) blues to have exactly one red neighbor, (3) yellows to have at least one blue neighbor, and (4) whites to have yellow neighbors but no blue neighbors. The first requirement guarantees that yellows have no red neighbors. Given Q = {r, b, y, w} and \(Q^+ = Q\cup\{o\}\), define

Halting states exist on every network, so computations eventually halt. The only flaw is that blue rings, for different red cells, are allowed to touch. SpotS3 corrects this problem (Fig. 2).

SpotS3 detects touching blue rings. Dynamic indices (hidden variables) are introduced: Q = {r1, r2, r3, b1, b2, b3, y, w}. Invisible changes \(r1\rightarrow r2\rightarrow r3\rightarrow r1\rightarrow\cdots\) and \(b1\rightarrow b2\rightarrow b3\rightarrow b1\rightarrow\cdots\) advance red indices while neighboring blues keep up, so that a stable blue bi is at most one step behind its rj center, i.e., j = i or j = (i + 1) mod 3. Touching blue rings follow different red centers and eventually have an index conflict, i.e., a bi will see its rj neighbor and a bk neighbor with k = (j + 1) mod 3. This is indicated by input = o. A destabilized spot is deconstructed.

Let \(Q^+ = Q\cup\{o\}\); then, for j = (i + 1) mod 3 and k = (j + 1) mod 3,

This process eventually settles into an attractor whose states have fixed color assignments and changing indices on red and blue values (Fig. 3).

HearT* is a gradient-following process which develops a temporal pattern by coordinating cellular oscillators. HearT* is not intended to halt; instead, its computations enter attractors composed of states which are homomorphisms of the network into (Q, →). The gradient approaching these attractors is determined by the number of edges broken by s.

Given \(Q = \{0, 1, \ldots, 9\}\) and \(Q^+ = Q\), define

\(B_s(c) = \|\{d\in E(c)\ |\;\hbox{neither}\;s(c)\rightarrow s(d),\;\hbox{nor}\,s(d)\rightarrow s(c)\}\|\) counts edges at c broken by s. When applied to

\(B_s^{c} (c)\) counts edges broken by advancing s(c). Once HearT* reaches a homomorphism, subsequent states will be homomorphisms. The original HearT differs in that

This removes the B-gradient used to guide HearT* to an attractor. HearT and HearT* have the same attractors, but before entering an attractor \(B_s\) occasionally increases, even for HearT*. Footnote 14
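The broken-edge count can be checked against the C4 example in Footnote 14. This sketch rests on two assumptions that the surrounding text does not spell out: that a → b holds when b equals a or its successor mod 10, and that a step advances each active cell by one, giving 16 candidate next-states on C4. Under those assumptions it sums broken edges over the whole network (rather than per cell, as \(B_s(c)\) does) and lists the up-gradient successors of 0131. The names `arrow`, `broken_edges` and `next_states` are hypothetical.

```python
from itertools import product

N_VALUES = 10  # HearT uses the values 0, 1, ..., 9


def arrow(a, b):
    """Assumed reading of a -> b in (Q, ->): b equals a or its cyclic successor."""
    return (b - a) % N_VALUES in (0, 1)


def broken_edges(s, edges):
    """Global B_s: edges cd for which neither s(c) -> s(d) nor s(d) -> s(c)."""
    return sum(1 for c, d in edges
               if not arrow(s[c], s[d]) and not arrow(s[d], s[c]))


def next_states(s):
    """States reachable in one step if every active cell advances its value by one."""
    for active in product((False, True), repeat=len(s)):
        yield tuple((v + 1) % N_VALUES if a else v for v, a in zip(s, active))


C4_EDGES = [(0, 1), (1, 2), (2, 3), (3, 0)]
s = (0, 1, 3, 1)
b0 = broken_edges(s, C4_EDGES)
uphill = sorted(t for t in next_states(s) if broken_edges(t, C4_EDGES) > b0)
print(b0, uphill)  # 2 [(0, 1, 4, 2), (0, 2, 4, 1), (0, 2, 4, 2)]
```

The three up-gradient successors found here are the states 0142, 0241 and 0242 named in the footnote.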

In the plot in Fig. 3, we see computations for HearT* and HearT on the 200-cell network shown in Fig. 1. Both processes begin at the same state, but HearT*’s search (blue) reaches an attractor and begins coordinated oscillations in fewer than 500 steps, while HearT is still searching (red) after 2,000 steps. This plot shows \(\|s_n^{-1}(0)\|\) as a function of n.

The table below gives another view of self-organization by HearT*. The initial state \(s_0\) has about 20 cells for each value. At step 200, cells are competing for values of 1 and 4. After step 400, the colony settles in on a single value.

Finally, morphogenesis for HearT* is seen in Fig. 4 as an orbit through a space of value-count pairs \((x_m, y_m) = (\|s_m^{-1}(9)\|, \|s_m^{-1}(0)\|)\) for m = 0…1,500. A counter-clockwise, outward spiral begins at \(s_0\) near (20, 20). At the bottom, motion to the right is due to an increasing number of cells whose value is 9. Then, motion to the top-left shows cells moving from 9 to 0. Increased organization is seen as an increased radius for the spiral.


Strogatz (2003) has written on systems of oscillators which vary continuously and so are not as simple as our finite-state automata. He mentions a conjecture by Peskin asserting that “[global] synchronization will occur even if the oscillators are not quite identical”. Given this, we might ask: “If cells in HearT are reduced from ten values to nine, or increased to eleven, will the process continue to oscillate?” I conjecture “yes”, as long as the nines don’t touch the tens.

HearT* is robust to colony growth and cell death. Living samples of cardiac tissue develop a heartbeat without centralized control. Footnote 15 An interesting experiment, after such a colony has self-organized and begun to beat, would be to cool (and thus slow) the cells in one half of the culture but not the other. If cells in the warmer half slow their beat to match their cooler mates, then these living tissues are, like HearT, behaving to preserve continuity of values with neighbors, just like our homomorphisms (Fig. 4).

8 Algebraic calculations

OddParitY is defined on Q = {0, 1} with \(Q^+ = Q\) by

An s is halting if and only if every \(s[E^+(c)]\) has odd parity, or

On C6, OddParitY has four halting states: 111111, 100100, 010010 and 001001; C10 has one, and K4 has eight. But, based on previous proofs, it is hard to imagine a general proof of halting for this process.
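These counts can be confirmed by brute force on small networks. The sketch below, with hypothetical helper names, enumerates every state in \(\{0,1\}^C\) and keeps those whose closed neighborhoods \(E^+(c)\) all have odd parity.

```python
from itertools import product


def odd_parity_halting_states(n_cells, edges):
    """All s in {0,1}^C with odd parity on every closed neighbourhood E+(c)."""
    closed = {c: {c} for c in range(n_cells)}
    for c, d in edges:
        closed[c].add(d)
        closed[d].add(c)
    return [s for s in product((0, 1), repeat=n_cells)
            if all(sum(s[d] for d in closed[c]) % 2 == 1 for c in range(n_cells))]


def cycle_edges(n):
    return [(i, (i + 1) % n) for i in range(n)]


def complete_edges(n):
    return [(i, j) for i in range(n) for j in range(i + 1, n)]


c6 = {"".join(map(str, s)) for s in odd_parity_halting_states(6, cycle_edges(6))}
print(sorted(c6))  # ['001001', '010010', '100100', '111111']
print(len(odd_parity_halting_states(10, cycle_edges(10))))  # 1
print(len(odd_parity_halting_states(4, complete_edges(4))))  # 8
```

Brute force costs \(2^{\|C\|}\) checks, which is exactly why a general argument such as Theorem 9 is needed.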

Theorem 9

OddParitY Halting Halting states exist on every (C, E).

Proof

(This proof is based on Sutner (1989).) Work in linear algebra with \(Q^C\) as the vector space, mod 2 arithmetic in Q and dimensions indexed by \(c\in C\). Halting is defined by

$$ (E + I)\, s = \vec{1}, $$

where E is the network’s adjacency matrix, Footnote 16 I is the identity matrix and \(\vec{1}\) is the all-1s vector.

If (E + I) is invertible then \(s = (E+I)^{-1}\vec{1}\) is halting; otherwise range(E + I) ≠ \(Q^C\) and so, since E + I is symmetric,

$$ \mathrm{range}(E + I) = \mathrm{kernel}(E + I)^{\perp}. $$

If \(\vec{1}\cdot t = 0\) for every \(t\in\mathrm{kernel}(E+I)\), then \(\vec{1}\in\mathrm{range}(E+I)\), and then \((E + I)\,s = \vec{1}\) has a solution s which is halting. Now prove that \(\vec{1}\) is in the range.

Let \(\vec{0}\) be the all-0s vector and \(v|_{C_t}\) be the subvector of v formed by restricting indices to \(C_t\). For t in kernel(E + I) let

$$ C_t = t^{-1}(1), \qquad E_t = \{cd\in E \mid c, d\in C_t\}. $$

\((C_t, E_t)\) is formed by deleting t’s 0-valued cells, so \((E + I)\,t = \vec{0}\) (from t being in the kernel) implies

$$ (E_t + I)\, t|_{C_t} = \vec{0}|_{C_t}; $$

then, since \(t|_{C_t} = \vec{1}|_{C_t}\), we get \(\mathrm{degree}_{E_t}(d) + 1 = 0\) (mod 2) for every \(d\in C_t\): every d has odd degree.

Edges are counted twice when summing cell-degrees, so with \(\|E_t\|\) equal to the number of edges in \(E_t\)

$$ \sum_{d\in C_t}\mathrm{degree}_{E_t}(d) = 2\,\|E_t\|; $$

since \(\mathrm{degree}_{E_t}(d)\) is always odd, \(\|C_t\|\) is even, and

$$ \vec{1}\cdot t = \|C_t\| = 0 \pmod 2, $$

and so \(\vec{1}\in\mathrm{kernel}(E+I)^{\perp} = \mathrm{range}(E+I)\).

Finally, OddParitY always has a halting state.□

Sutner’s proof has (C, E) as a free variable and so it is not sensitive to the size of the network. I find this proof’s power surprising (Fig. 5).
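Because the proof is linear algebra over GF(2), it also suggests a way to compute a halting state: solve \((E + I)s = \vec{1}\) by elimination mod 2. The sketch below is a plain Gauss-Jordan elimination written for this purpose (the function names are ours, not the paper's); Theorem 9 predicts that it never reports an inconsistent system, which the loop over random networks spot-checks.

```python
import random


def solve_gf2(A, b):
    """Gauss-Jordan elimination mod 2: one solution of A x = b, or None."""
    n_rows, n_cols = len(A), len(A[0])
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]  # augmented matrix
    pivots, r = [], 0
    for col in range(n_cols):
        piv = next((i for i in range(r, n_rows) if M[i][col]), None)
        if piv is None:
            continue                                   # no pivot: free column
        M[r], M[piv] = M[piv], M[r]
        for i in range(n_rows):
            if i != r and M[i][col]:
                M[i] = [x ^ y for x, y in zip(M[i], M[r])]
        pivots.append(col)
        r += 1
    if any(M[i][n_cols] for i in range(r, n_rows)):
        return None                                    # some row reads 0 = 1
    x = [0] * n_cols                                   # free variables set to 0
    for i, col in enumerate(pivots):
        x[col] = M[i][n_cols]
    return x


def odd_parity_state(n_cells, edges):
    """Solve (E + I) s = 1 over GF(2), E being the adjacency matrix."""
    A = [[int(i == j) for j in range(n_cells)] for i in range(n_cells)]
    for c, d in edges:
        A[c][d] = A[d][c] = 1
    return solve_gf2(A, [1] * n_cells)


def is_odd_parity_halting(n_cells, edges, s):
    closed = [{c} for c in range(n_cells)]
    for c, d in edges:
        closed[c].add(d)
        closed[d].add(c)
    return all(sum(s[d] for d in closed[c]) % 2 == 1 for c in range(n_cells))


rng = random.Random(1)
for _ in range(200):
    n = rng.randint(1, 10)
    edges = [(i, j) for i in range(n) for j in range(i + 1, n) if rng.random() < 0.4]
    s = odd_parity_state(n, edges)
    assert s is not None and is_odd_parity_halting(n, edges, s)
print("a halting state was found on all 200 random networks")
```

Unlike brute force, elimination runs in time polynomial in \(\|C\|\), matching the proof's insensitivity to network size.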

In 2009 and 2010, my students Arash Ardistani and Robert Lenich independently calculated the expected halting time as a function E(p) of p (the uniform probability of cell activity, previously denoted δ) for processes on C4 and several other small networks. Here is how it was done with C4-states \(s_1,\ldots, s_{16}\) indexed as in Sect. 6. The expected halting time \(h_i\) from a non-halting \(s_i\) satisfies

$$ h_i = 1 + \sum_{j=1}^{16} P_c\{s_i\Rightarrow s_j\}\, h_j $$

and \(h_j = 0\) for halting \(s_j\), where, for example, \(P_c\{s_3\Rightarrow s_4\} = p^2(1-p)^2 + p(1-p)^3 = p(1-p)^2\), etc. Solve these 16 equations for the \(h_i\) to get equations such as

Now, use these p-terms in \(E(p)=\sum_{i=1}^{16}\frac{1}{16} h_i\) to get

This is minimized by solving \(0=\frac{d}{dp} E(p)\) for p. The solution p = 0.4064 gives E(0.4064) = 6.636. E(p) is plotted above for 2PartitioN computations on C4 starting (1) from \(s_0 = [0, 0, 0, 0]\), and (2) from a (uniformly) random initial state \(s_0\). We see

$$ \lim_{p\rightarrow 0} E(p) = \lim_{p\rightarrow 1} E(p) = \infty $$

because p = 1 corresponds to synchronous activity and p = 0 corresponds to no activity, neither of which solves the problem. So the fastest process is asynchronous (i.e., 0 < p < 1). All other processes on all other nets showed the expected halting time going to infinity as p approached 0 and (for most processes) as it approached 1.
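The first-step equations above can be solved mechanically. The sketch below builds them for every state of a small network and solves the linear system numerically. Its transition rule is an assumption, a hypothetical reading of 2PartitioN in which each cell is active with probability p and an active cell flips when it shares its value with a neighbor; it is not the paper's program, and its numbers are not expected to reproduce E(0.4064) = 6.636. On a single edge K2 the exact answer is \(h = 1/(2p(1-p))\), which blows up at both p = 0 and p = 1.

```python
from itertools import product


def step_distribution(state, adj, p):
    """One step under an ASSUMED 2PartitioN-style rule: each cell is active with
    probability p; an active cell sharing its value with a neighbour flips."""
    n = len(state)
    unstable = [any(state[d] == state[c] for d in adj[c]) for c in range(n)]
    dist = {}
    for active in product((False, True), repeat=n):
        prob = 1.0
        for a in active:
            prob *= p if a else 1.0 - p
        nxt = tuple(1 - v if a and u else v
                    for v, a, u in zip(state, active, unstable))
        dist[nxt] = dist.get(nxt, 0.0) + prob
    return dist


def expected_halting_times(adj, p):
    """Solve h_i = 1 + sum_j P{s_i => s_j} h_j, with h_j = 0 on halting states."""
    n = len(adj)
    states = list(product((0, 1), repeat=n))
    halting = {s for s in states
               if all(s[c] != s[d] for c in range(n) for d in adj[c])}
    transient = [s for s in states if s not in halting]
    index = {s: i for i, s in enumerate(transient)}
    m = len(transient)
    # Augmented system (I - P restricted to transient states) h = 1.
    A = [[float(i == j) for j in range(m)] + [1.0] for i in range(m)]
    for s in transient:
        for t, prob in step_distribution(s, adj, p).items():
            if t in index:
                A[index[s]][index[t]] -= prob
    for col in range(m):  # Gauss-Jordan elimination with partial pivoting
        piv = max(range(col, m), key=lambda r: abs(A[r][col]))
        A[col], A[piv] = A[piv], A[col]
        for r in range(m):
            if r != col and A[r][col] != 0.0:
                f = A[r][col] / A[col][col]
                A[r] = [x - f * y for x, y in zip(A[r], A[col])]
    h = {s: 0.0 for s in halting}
    for s, i in index.items():
        h[s] = A[i][m] / A[i][i]
    return h


def mean_halting_time(adj, p):
    """E(p): the average of h over a uniformly random initial state."""
    h = expected_halting_times(adj, p)
    return sum(h.values()) / len(h)


K2 = [[1], [0]]
C4 = [[1, 3], [0, 2], [1, 3], [2, 0]]
for p in (0.05, 0.25, 0.5, 0.75, 0.95):
    print(p, round(mean_halting_time(C4, p), 3))
```

Even under this assumed rule, the C4 values grow at both ends of the interval, in line with the observation that the fastest process is asynchronous.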

9 Limits on amorphous computability

Imagine a process, call it ElectioN, which eventually halts with exactly one cell holding some computed value. I will prove that no program exists which computes the desired state in the absolutely amorphous model. This non-computability result is analogous to the non-computability, by Turing machine, of the halting problem’s solution. The problem of election in a distributed process has been discussed since Le Lann (1977), and recently for dynamical amorphous processes Footnote 17 by Dereniowski and Pelc (2012).

In the following, a halting state s for ElectioN on (C, E) is assumed to exist. Then a larger network (C′, E′) is constructed with a halting state s′ for which \(\|s'^{-1}(a)\|\ne 1\) for every \(a\in Q\). By contradiction, no program exists for computing ElectioN’s halting states in an absolutely amorphous environment (Fig. 6).

Theorem 10

ElectioN is not Absolutely Amorphous No program exists which, independent of the underlying net, almost always Footnote 18 halts in a state s with ||s −1(a)|| = 1 for some \(a\in Q. \)

Proof

Assume ElectioN has a program, and that (C, E) has an s which is halting for that program. By assumption, \(\|s^{-1}(a)\| = 1\) for some a. Let C′ be the set of 0,1-indexed copies of cells in C, i.e.,

$$ C' = \{c_0, c_1 \mid c\in C\}. $$

Choose a non-void subset \(F\subset E\) which is not a cut-set Footnote 19 of (C, E), then define edges on C′ by

$$ E' = \{c_0d_0,\ c_1d_1 \mid cd\in E - F\}\cup\{c_0d_1,\ c_1d_0 \mid cd\in F\}. $$

Since (C, E − F) is connected and F is non-void, (C′, E′) is connected. Construct s′ by \(s'(c_0) = s'(c_1) = s(c)\) for every \(c\in C\). From the construction, we have

$$ s'[E'(c_0)] = s'[E'(c_1)] = s[E(c)] $$

for every \(c\in C\), so \(input_{s'}(c_0) = input_{s'}(c_1) = input_{s}(c)\), and \(c_0\) and \(c_1\) are stable in s′ because c is stable in s.

Thus, s′ is halting on (C′, E′), and \(\|s'^{-1}(a)\| = 2\,\|s^{-1}(a)\|\) is even for every a. No value occurs exactly once. By contradiction, ElectioN is not absolutely amorphous.Footnote 20□
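The construction in this proof is easy to check mechanically. The sketch below (hypothetical names; a 4-cycle with F = {01} as an illustrative network) builds (C′, E′), lifts a state by \(s'(c_0) = s'(c_1) = s(c)\), and confirms that (C′, E′) is connected, that every copy sees the same multiset of neighbor values as the original cell, and that every value count doubles. The labeling s is arbitrary; no ElectioN program is involved, since none exists.

```python
from collections import Counter, deque


def double_cover(cells, edges, crossed):
    """(C', E') from the proof: copies c0, c1; edges in F (`crossed`) cross over."""
    new_cells = [(c, i) for c in cells for i in (0, 1)]
    new_edges = []
    for c, d in edges:
        if frozenset((c, d)) in crossed:
            new_edges += [((c, 0), (d, 1)), ((c, 1), (d, 0))]
        else:
            new_edges += [((c, 0), (d, 0)), ((c, 1), (d, 1))]
    return new_cells, new_edges


def neighbours(cells, edges):
    nb = {c: [] for c in cells}
    for c, d in edges:
        nb[c].append(d)
        nb[d].append(c)
    return nb


def is_connected(cells, edges):
    nb = neighbours(cells, edges)
    seen, queue = {cells[0]}, deque([cells[0]])
    while queue:
        for d in nb[queue.popleft()]:
            if d not in seen:
                seen.add(d)
                queue.append(d)
    return len(seen) == len(cells)


# A 4-cycle whose state uses the value "L" exactly once; F = {01} is not a cut-set.
cells = [0, 1, 2, 3]
edges = [(0, 1), (1, 2), (2, 3), (3, 0)]
s = {0: "L", 1: "a", 2: "b", 3: "a"}
cells2, edges2 = double_cover(cells, edges, {frozenset((0, 1))})
s2 = {(c, i): s[c] for (c, i) in cells2}  # s'(c_i) = s(c)

nb, nb2 = neighbours(cells, edges), neighbours(cells2, edges2)
same_inputs = all(Counter(s2[x] for x in nb2[(c, i)]) == Counter(s[d] for d in nb[c])
                  for (c, i) in cells2)
print(is_connected(cells2, edges2), same_inputs, Counter(s2.values()))
```

With F empty the two copies stay disconnected, which is why the proof needs a non-void F whose removal leaves (C, E) connected.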

When a unique value or token is needed, it can be provided in \(s_0\); but then the initial state is not random and the process is not absolutely amorphous.

The trick of Theorem 10 can be used to prove that there is no absolutely amorphous process, Not2PartN (a complement of 2PartitioN), which halts precisely on networks which are not bipartite. It can also be used to prove that there is no absolutely amorphous process, StricT3PartN, which recognizes networks which are tripartite but not bipartite. Proof sketch: assuming that StricT3PartN exists, a halting state s exists on C3 = ({a, b, c}, {ab, bc, ca}). Join copies \(C_3^1\) and \(C_3^2\) by crossing the bc edges (producing \(b_1c_2\) and \(b_2c_1\) in place of \(b_1c_1\) and \(b_2c_2\)) to construct C6, and define a state t on C6 from s by \(t(a_i) = s(a)\), \(t(b_i) = s(b)\), \(t(c_i) = s(c)\). Obviously \(a_1, a_2\) are stable, but what about the b’s and c’s? For \(b_1\),

$$ t[E(b_1)] = \{t(a_1), t(c_2)\} = \{s(a), s(c)\} = s[E(b)], $$

so t is stable at \(b_1\). The remaining cells are handled in the same way, and so t is a halting state. But C6 is bipartite, a contradiction.
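The same doubling, applied to C3 with the bc edge crossed, can be checked with a breadth-first 2-coloring. In this sketch (hypothetical helper names) the copy indices 1 and 2 follow the proof sketch; it confirms that C3 and the uncrossed pair of triangles are not bipartite while the crossed graph is a bipartite 6-cycle, and that \(b_1\) has neighbors \(a_1\) and \(c_2\).

```python
from collections import deque


def crossed_copies(edges, crossed):
    """Two copies (suffixes 1 and 2) of a graph, with the edges in `crossed` swapped."""
    out = []
    for c, d in edges:
        if frozenset((c, d)) in crossed:
            out += [(c + "1", d + "2"), (c + "2", d + "1")]
        else:
            out += [(c + "1", d + "1"), (c + "2", d + "2")]
    return out


def is_bipartite(edges):
    """Breadth-first 2-colouring; False as soon as an edge joins equal colours."""
    nb = {}
    for c, d in edges:
        nb.setdefault(c, []).append(d)
        nb.setdefault(d, []).append(c)
    colour = {}
    for start in nb:
        if start in colour:
            continue
        colour[start] = 0
        queue = deque([start])
        while queue:
            c = queue.popleft()
            for d in nb[c]:
                if d not in colour:
                    colour[d] = 1 - colour[c]
                    queue.append(d)
                elif colour[d] == colour[c]:
                    return False
    return True


C3 = [("a", "b"), ("b", "c"), ("c", "a")]
C6 = crossed_copies(C3, {frozenset(("b", "c"))})
print(is_bipartite(C3), is_bipartite(crossed_copies(C3, set())), is_bipartite(C6))
# False False True
```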

To go further into recursion theory, these processes must be expressed in the integer framework of Kleene’s partial recursive functions. I cannot expect to carry everything into recursion theory because the random choice function is not Turing computable. But the deterministic part of what has been developed here is computable, and since the structures are finite they can eventually be coded Footnote 21 into \(\mathbb{N}\).

10 TokeN, GradienT and RaiN

A token is a unique value which moves through a network, from one cell to a neighbor, without being assigned to more than one cell at a time. Assuming that \(s_0\) has \(s_0(a)=T\in Q\) for exactly one cell a and \(s_0(d) = q\) for all others, TokeN is a process whose computations move T randomly through (C, E) without duplication. Further, TokeN is shown to be self-stabilizing in the sense of Dijkstra.

TokeN First, the movement of T is described informally as follows.

(1) Neighbors b, c of s(a) = T cycle \(q\rightarrow r\rightarrow s\rightarrow q\) while \(s(a)=T\rightarrow T\) as long as s[E(a)] does not contain exactly one r (i.e., receiver), but \(s(a)=T\rightarrow T1\) is allowed when the neighborhood contains exactly one r. All other cells do \(q\rightarrow q\).

(2) The neighborhood of s(a) = T1 may have changed at the moment of \(T\rightarrow T1\), so \(T1\rightarrow T\) (the advance is retracted) if s[E(a)] no longer contains exactly one r. But if s[E(a)] still has exactly one r = s(b), then the token holder advances one more step, \(T1\rightarrow T2 = s(a)\). Neighbors of s(a) = T1 never change their value while s(a) = T1, T2, so a new r cannot appear among the neighbors of a after T1.

(3) For neighbors of s(a) = T2, the token is passed to b, the single receiver: \(r\rightarrow T\). At a, \(T2\rightarrow T2\) as long as \(T\notin s[E(a)]\), but \(T2\rightarrow q\) after s(b) = T.

Now we go back to (1), except that T has passed from a to b.

This process uses values Q = {q, r, s, T, T1, T2}; inputs T, qrs, r!qs are used in stage 1 and T1, T2 in stages 2 and 3. Thus \(Q^+ = Q\cup\{qrs, r!qs\}\).

Dijkstra (1974) describes a network stabilization problem. Certain actions must be performed serially by the processors in the network. This requires a network procedure which manages a token (which grants the privilege to act). But the network is prone to failures which create a state with more than one token, an unstable state. If TokeN is used as a token manager on Dijkstra’s network then (given enough time) it will, with probability 1, merge excess tokens until only one remains. To prove this, imagine a finite sequence of states \(s_0,\ldots, s_m\) in which \(s_m\) has two Ts. A finite extension \(\ldots s_m \Rightarrow \cdots \Rightarrow s_{m+n}\) can always be found which moves these tokens to a place where they share a neighbor d. Then extend the computation to \(\ldots s_m\Rightarrow\cdots\Rightarrow s_{m+n}\Rightarrow\cdots\Rightarrow s_{m+n+o}\), which ends with both tokens being simultaneously passed to d, thus merging them. There is only one token in \(s_{m+n+o}\) and, by the 0,1-Lemma, this merger of multiple tokens happens with probability 1.

GradienT N Let \(Q=\{0,1,2,\ldots,N-1\}\) for some large N, \(Q^+ = Q\), input(M) = min(M) mod N and α(value, input) = (input + 1) mod N for M = s[E(c)]. Given networks with exactly one cell r (for root) inactive, and states s having s(r) = 0 and 0 < s(c) for c ≠ r, this process eventually assigns to c a value equal to the length of the shortest path connecting c to r. After value N − 1, the counting starts over at 1. Subsequent processes may appear to pass values down-gradient to r by allowing values to be read from up-gradient cells.
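A small simulation illustrates the GradienT claim. This sketch assumes that in each step every non-root cell is active independently with probability p and that all active cells read the same old state; the grid network, start values and N = 1000 are illustrative choices, and the function names are hypothetical. It stops at the first state in which no cell can change, which (with positive values well below N) is the shortest-path distance to r.

```python
import random
from collections import deque


def bfs_distances(adj, root):
    """Reference answer: shortest-path length from every cell to the root."""
    dist, queue = {root: 0}, deque([root])
    while queue:
        c = queue.popleft()
        for d in adj[c]:
            if d not in dist:
                dist[d] = dist[c] + 1
                queue.append(d)
    return dist


def run_gradient(adj, root, init, N, p=0.5, seed=0, max_steps=100_000):
    """Asynchronous GradienT_N sketch: active non-root cells set s(c) = (min s[E(c)] + 1) mod N."""
    rng = random.Random(seed)
    s = dict(init)
    s[root] = 0                                    # the root is never active

    def target(c):
        return (min(s[d] for d in adj[c]) + 1) % N

    for step in range(max_steps):
        if all(s[c] == target(c) for c in adj if c != root):
            return s, step                         # no active cell can change anything
        active = [c for c in adj if c != root and rng.random() < p]
        updates = {c: target(c) for c in active}   # all active cells read the old state
        s.update(updates)
    raise RuntimeError("no stable state within max_steps")


def grid(w, h):
    """Neighbour lists of a w-by-h grid network (hypothetical test network)."""
    return {(x, y): [(x + dx, y + dy) for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1))
                     if 0 <= x + dx < w and 0 <= y + dy < h]
            for x in range(w) for y in range(h)}


adj = grid(4, 3)
init_rng = random.Random(42)
init = {c: init_rng.randint(1, 9) for c in adj}    # arbitrary positive start values
final, steps = run_gradient(adj, (0, 0), init, N=1000)
print(final == bfs_distances(adj, (0, 0)), steps)
```

Starting values both above and below the true distances are corrected, since each cell only ever trusts the smallest value it can see.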

RipplE, given a state \(s\in\{0,1,2\}^C\), signals the presence of a 2 to the rest of the network (imagine 0s) by propagating out 2s, then reducing 2s to 1s, then 1s to 0s, all moving away from the original \(s_0(c) = 2\). Values circulate \(0\rightarrow 2\rightarrow 1\rightarrow 0\). With \(Q^+ = \{0, 1, 2, 01, 12, 02, 012\}\) define

The first two lines in α’s definition apply to cells just before and at the leading edge of the ripple. In the remaining lines, the presence of a 1 indicates that the ripple has passed.

In RaiN, new 2-values spontaneously appear (as input, not as computed values) in states during this computation and trigger their own ripples (like raindrops on a pond). Unfortunately, if they appear near a 1, they will degenerate (by the third line of α above) without a ripple. So a pre-2 value 3 will represent the raindrop. We need only add 3 to Q and two lines to α

Input will be blind to 3, and the new 3 is preserved until its neighbors settle down to 0s. RaiN is used in XHalT?.

11 Composition and other operations

Operations on processes—product, serialization, and composition—are presented by example.

TokeN2 is the product of TokeN \((Q_T, Q_T^+, \alpha, input)\) with itself. This construction uses \(Q_{TT} = (Q_T)^2\) and \(Q_{TT}^+ = (Q_T^+)^2\), pairs of values from TokeN. Projections are \((sq)_1 = s\) and \((sq)_2 = q\) for \(sq\in Q_{TT}\), and \(M_1=\{v\ |\ vw\in M\}\), etc. for \(M\subset Q_{TT}\). The program is completed by

(T and TT are used as subscripts on Q to avoid confusion with the set exponent \(Q^C\).)

To have tokens move through (C, E) independently, activity in the first and second dimensions must be independent. For \(\sigma = [\sigma_1,\sigma_2]\) where \(\sigma_1,\sigma_2\subset C\),

Usually, process products are a framework for the exchange of information between the processes, but not in TokeN2.

SeriaL Y is the token-activated serial execution of Y. This process could as well be called if-TokeN-then-Y. Information from the first process (the presence of the token at a cell) is used to determine the cell activity which executes Y. Since there is only one token, Y experiences serial execution.

For example, let Y be 2PartitioN, and T2P be SeriaL2PartitioN. The languages are \(Q_{T2P} = Q_T\times Q_{2P}\) and \(Q_{T2P}^+ = Q_T^+\times Q_{2P}^+\). Transition and input, for \(s:C\rightarrow Q_{T2P}\) and M = s[E(c)] on (C, E), are

If both processes are active at σ and a cell \(c\in\sigma\) holds the token, then

X HalT? is the product of X with a process which copies X’s old value from \(s(c)_1\) to \(s(c)_2\) before X assigns a new value to \(s(c)_1\), and finally RaiN (which spreads news of some \(s(c)_1\ne s(c)_2\) to other cells by \(s(c)_3 = 3\)). After X reaches a halting state in the first coordinate, this process will have solid 0s in the third coordinate. For RaiN, let \(Q_R = \{0, 1, 2, 3\}\) and \(Q_R^+ = \{0, 1, 2, 3, 01, 02, 12, 123\}\).

Set \(Q_{XH?} = Q_X\times Q_X\times Q_R\) and \(Q_{XH?}^+ = Q_X^+\times Q_X^+\times Q_R^+\), then

where \(\alpha_R\) is RaiN, and

for \(M\subset Q_{XH?}\). After X halts, RaiN will spread 0s to every \(s(c)_3\).

(Y ∘ X) is the composition of processes Y and X with shared values \(Q_X = Q_Y\). It uses XHalT? to initiate Y. Set \(Q_{Y\circ X} = Q_{XH?}\times Q_Y\) and treat these values as having projections, \([value_{11},value_{12}]\in Q_X^2\), \(value_{13}\in Q_R\), \(value_2\in Q_Y\), so \(value_1 = [value_{11}, value_{12}, value_{13}]\); then define

and for \(Q_{Y\circ X}^+ = Q_{XH?}^+\times Q_Y^+\) and \(M\subset Q_{Y\circ X}\),

Y runs on the intermediate values computed by X, producing nonsense, until X reaches its halting state (indicated by \(0 = value_{13}\)). Finally, working from X’s halting state, Y’s calculations break away and continue on their own. For Y, there may be many false starts, but the values and inputs from these false starts are forgotten each time RaiN signals (by \(0 < value_{13}\)) a change in X. Communication from XH? to Y is seen in the first lines of each definition. If X becomes trapped in an attractor without ever halting, then Y continues to generate nonsense. So the composition succeeds only if X halts and then Y halts working from the state left by X.

12 TearS of the Sun

The author worked with Dr Leon Kotin (Fort Monmouth, NJ) in the 1970s and 1980s to develop early versions of this process; see Stark and Kotin (1987) for an informal presentation. In the past two or three decades many others (e.g., Hubbell and Han 2012) have further developed amorphous sensor networks.

A network with a moving cell r is represented as \((C_n, E_n)\) where \(E_n(c) - \{r\}\) is more or less constant for c ≠ r and \(E_n(r)\) is variable, \(n=0,1,\ldots\) (Stark and Kotin 1987). With r as its root and N large, GradienT N is constantly recomputing the cell-to-root distances. A global input \(x_n:C_n\rightarrow\{0,1,\ldots\}\) of sensor reports, with \(x_n(c) = 0\) representing no alarm, is maintained at the cells. Occasionally, the movement of unknown individuals (i.e., biological infrared emitters) past a cell c is detected and expressed as \(0 < x_n(c)\). These sensing cells are probably stationary. The objects being detected are not a part of the network. A mechanism for moving information describing the location (relative to r) of alarms down-gradient to r is included. I call this canopy intelligence process TearS (a variation on RaiN). The information consists of \(value_1\), the nature of the alarm, and \(value_3\), the distance to the nearest alarmed cell.

Let \(Q=\{0,1,\ldots\}\times\{0,1,\ldots,N\}\times\{0,1,\ldots,N\}\), where N is at least the diameter of the network. The structure of \(s(c)=value\in Q\) is broken down into \(s(c)_1 = x_n(c)\), the sensor input at c; \(s(c)_2\), computed by GradienT N to be c’s distance from r; and \(s(c)_3\), the distance from \(s^{-1}(\{1,\ldots\})\), the set of alarmed cells. TearS is defined by

and for \(M\subset Q\)

The root is not necessarily the only changing part of the network. Cells die and randomly positioned replacements are rewoven into \((C_n, E_n)\) using this simple program. With a little more work, the information passed to the root could include the threat’s size and structure (e.g., Hubbell and Han 2012).

TearS processes a dynamic stream of data \(x_n\) in a constantly changing network \((C_n, E_n)\). The name was inspired by a similar process depicted in the movie Tears of the Sun (Fuqua 2003). Halfway through the movie, thousands of solar-powered infrared sensors, C − {r}, are dropped into the canopy of a forest. Using radio-frequency packet-passing, they begin building a network \(E_n\). A laptop r carried by the hero is included in the network. The environment takes its toll, requiring new sensors to be dropped in as old sensors retire, giving \((C_{n+1}, E_{n+1})\). The network re-weaves itself so that the hero is always informed of the position of the advancing bad guys.

13 Conclusion

\(\ldots\) The greatest challenge today in all of science, is the accurate and complete description of complex systems. Scientists have broken down many kinds of systems. The next task is to reassemble them, at least in mathematical models that capture the key properties of the entire ensembles. (Wilson 1998)

My hope is to see the development of a powerful computation theory for issues of biological information processing. The absolutely amorphous model is mathematically tractable and approximates both mature biological tissues and the informal notion of amorphous computing seen in current literature. This brings insights obtained through pure mathematics close enough to reality to be biologically relevant—just as Turing’s leopards’ spots problem (Turing 1952) and its solution highlighted the value of reaction-diffusion mechanisms in theoretical biology.

It is clear that the method which I called blind search is not biologically realistic: a leopard embryo cannot invest geological time into computing its spots. This demonstrates the importance of gradients on \((Q^C, \Rightarrow)\) and of activity organized into waves as a means of directing development. The entropy gradient championed by Schrödinger (1944) may be the most promising, since Shannon entropy (Shannon 1998) equates morphogenesis to information-reduction. These issues are a part of computational thermodynamics (Bennett 2003; Feynman 1996).

Non-homogeneous processes are central to biology. Perhaps models developed in this formalism can suggest a need for non-homogeneity in toy organs. Footnote 22

Classical computation theory can offer guidance, Footnote 23 but is the powerful integer-coding used classically impossible or inappropriate Footnote 24 for amorphous computation?

I cannot believe that amorphous computing is, as some have speculated, completed. Three billion years of evolution have left us with fantastic processes which need mathematical modeling.

Notes

Since Floyd (1967a, b), and continuing with Broy (1986), Ward and Hayes (1991), and others, attention had been focused on individual runs/computations. Infinitely often these runs are successful (a result of angelic decisions) and infinitely often they fail (a result of demonic decisions). This approach to understanding non-deterministic processes is as inappropriate as viewing real numbers one decimal at a time. For arithmetic, viewing decimals in this way might have made the existence of irrationals a subject of debate. For processes we see something similar in Dijkstra (1988) and Schneider and Lamport (1988).

My assumption that activity is random has mathematical advantages (see the proof of Theorem 3), but it allows extreme differences between the activity levels of healthy cells, differences that would not be seen in Nature.

Cell-to-cell edges may correspond to gap junctions in animal tissue. Gap junctions are non-directed channels between the cytoplasms of neighboring cells which allow the exchange of small molecules (molecular weight up to 2,000). Viruses are too large to pass through gap junctions, so if our model is to be biologically true, program fragments should not pass between cells. This excludes many tricks seen in recursion theory. Besides gap junctions, cell-to-cell communication may be hormonal (broadcast), mechanical, and electrical. Physically independent bacteria communicate. In fact, amorphous information sharing between members of a species is ubiquitous in Nature.

A graph/network (C, E) is bipartite if C can be partitioned into \(C_0, C_1\) so that every edge xy of E connects a cell in \(C_0\) to a cell in \(C_1\). For 2PartitioN, \(C_0 = s^{-1}(0)\) and \(C_1 = s^{-1}(1)\) for a halting s. Being bipartite is a global property: if true, it cannot be determined by examining less than the whole graph/network.

This theorem does not say that the expected halting time is N ≤ ||C||.

“von Neumann thought that automata mathematics should be closer to the continuous and should draw heavily on analysis as opposed to [the] combinatorial approach” (Burks 1970). Theorem 3 is an example of a 0,1-law.

For the analytical approach, think of \((Q^C)^\mathbb{N}\) as being analogous to the unit interval [0, 1]⊂ ℜ, and computations corresponding to decimals in \(\{0,1,\ldots 9\}^\mathbb{N}\). Consider the partial function \(h:(Q^C)^\mathbb{N}\rightarrow \mathbb{N}\) which, for each \({\overline s}=\langle s_0,\ldots s_i,\ldots,\rangle, \) is defined

$$ h({\overline s}) = \left\{\begin{array}{ll}\min i\ [s_i\ \hbox{is a halting state}] & \hbox{if}\;{\overline s}\; \hbox{is a halting computation}, \\ \hbox{undefined} & \hbox{otherwise.} \end{array}\right. $$

For a process which halts with probability 1, the expected halting time is

$$ \int\limits_{(Q^{C})^{\mathbb{N}}} h({\overline s})\, d \mu({\overline s}). $$

This would be the sort of analytical result that von Neumann had in mind.

Alternate 0,1 value assignments as you move around \(C_m\), until the last cell, which is set to 2.

Any three cells with values 0,1,2 form a triangle, so the fourth cell cannot be stable with one of these values.

The input \(s_0\) consists of \(x = \|C\|\cdot\log_2(\|Q\|)\) bits of information, so P and NP would be sets of computations of length p(x), where p is a polynomial.

I conjecture that, if the non-determinism seen in σ-selection is pseudo-random, rather than strictly random, then there can exist a tripartite graph and \(s_0\) for which 3PartitioN fails. The failure would of course be due to the computed \(\ldots\sigma_m,\ldots\) containing a pattern which perpetuated instability. This could also be true of halting for 2PartitioN. To give the reader something to work with, I assume that pseudo-random means computable using memory which is linear in ||C|| + ||Q||, as if the process were being simulated in a machine which generates the \(\ldots\sigma_m,\ldots\) with this bound on memory.

“Non-homogeneous” because one cell is not executing the α used by the other cells. Not absolutely amorphous because the designation of an inactive cell takes place outside of α, input. The gradient sensitivity of 2PartitioN* is, on the other hand, defined within input and based only on local information.

Imagine \(s_4\Rightarrow_\sigma s_5\). In 2PartitioN, σ = {1, 2, 3} is the only possibility, so \(T_{5,4} = 2^{-6} = 16\cdot 2^{-10}\). But in 2PartitioN* a second possibility is σ = {1, 2, 3, 4}, and each cell has a \(2^{-1}\) chance of receiving the appropriate input, so the probability of the transition is \(T_{5,4}^{\ast} = 2^{-6}2^{-3} + 2^{-6}2^{-4} = 3\cdot 2^{-10}\). For the transition \(s_6\Rightarrow_\sigma s_6\), eight cases make \(T_{6,6} = 2^{-3}\) and 32 cases make \(T_{6,6}^{\ast} = 9\cdot 2^{-5} > 2^{-2}\).

From s = 0131 on C4 we have \(B_{0131} = 2\). Three of the 16 possible next-states move up-gradient: \(B_{0242} = 4\), \(B_{0241} = 3\), \(B_{0142} = 3\).

See videos of such tissues at http://www.youtube.com/watch?v=Y5uKMM8Od9g or http://www.youtube.com/watch?v=BJTFeBGO_i0 or Google “cardiomyocytes beating in vitro”.

Indexed over C: \(E_{c,d} = 1\) if cd is an edge, and \(E_{c,d} = 0\) otherwise.

Dereniowski and Pelc (2012) capture dynamics by allowing identical anonymous agents to move through a given fixed graph. They answer the question “for which initial configurations of agents is leader election possible?”. The agents are Turing machines which are initially given an upper bound on the size of the graph. They show that “leader election is possible when the initial configuration is asymmetric and agents can learn [the asymmetry], regardless of the actions of the adversary [demon]”, so their result is not inconsistent with the non-computability result proved here.

Given a measure μ on a set of events, we say that an action almost always succeeds if and only if the set of successes has measure 1, and the set of failures has measure 0.

\(F\subseteq E\) is a cut-set for the connected graph (C, E) if (C, E − F) is not connected

Angluin (1980) proved that no election algorithm exists for a class of graphs containing both a graph G and a strict covering G′ of G [by γ]. Her proof (not unlike this proof) carried computation steps on edges ab of G back to γ−1(ab) of G′ to reach a contradiction [on G′].

Let \({\mathbb{N}^*}\) be the least set containing \({\mathbb{N}}\) and closed under the formation of lists. Most of the model described in Sect. 2 can be developed in \({\mathbb{N}^*}\), i.e., C = [0, 1, …p] is a set of cells, \(E\subseteq C^2\) is a list of pairs, Q = [0, 1, …q], \(Q^+\) = [0, 1, … r] for q ≤ r and \(\alpha\subset Q\times Q^+\times Q\) is a list of ordered triples. Multisets are represented as counting vectors indexed over Q, i.e., [0, 3, 0, 0, 1, 0] represents {1, 1, 1, 4} when q = 5. We have put no upper bound on the degree of cells, so Input has an infinite domain and so it must remain a defined but computable recursive function. Only the random choice function is excluded, but our processes’ deterministic state-to-halting-state functions can be defined without random choice. Details are available in Stark (2013).

As a toy organ, consider three homogeneous processes (α on X, β on Y and γ on Z) with (C, E) partitioned into connected subgraphs X, Y and Z, separated by a cut set. Can we design a computational need for Y? Perhaps state-changes over X are filtered by Y so that Z sees only unusual events within X. Could something like this lead to a theoretical justification for the evolution of organs?

For example, like automata, the amorphous model has limited memory, but does the elegant proof that the palindromes of a given language cannot be recognized by an automaton carry over to amorphous processes? Or could it be that the \(C_1C_2\) construction used as a counterexample to ElectioN is a version of the construction of a palindrome failure?

(C, E) offers some coding ability, but using it may be inconsistent with the amorphous philosophy.

References

Angluin D (1980) Local and global properties of networks of processors. In: Proceedings of 12th symposium on theory of computing

Aspnes J, Ruppert E (2007) An introduction to population protocols. Bull Eur Assoc Theor Comput Sci 93:98–117

Bennett CH (2003) Notes on Landauer’s principle, reversible computation, and Maxwell’s Demon. Stud Hist Philos Sci B 34(3):501–510

Broy M (1986) A theory for nondeterminism, parallelism, communication and concurrency. Theor Comput Sci 45:1–61

Burks A (1970) Essays on cellular automata. University of Illinois Press, Urbana

Coore DN (1999) Botanical computing: a developmental approach to generating interconnect topologies on an amorphous computer. PhD thesis, MIT, Cambridge

Coore D (2005) Introduction to amorphous computing. In: Banâtre J-P, Fradet P, Giavitto J-L, Michel O (eds) Unconventional programming paradigms, vol 3566 of Lecture notes in computer science. Springer, Berlin, pp 99–109

Dereniowski D, Pelc A (2012) Leader election for anonymous asynchronous agents in arbitrary networks. arXiv:1205.6249

Dijkstra EW (1974) Self-stabilizing systems in spite of distributed control. CACM 17(11):643–645

Dijkstra EW (1988) Position paper on “fairness”. Softw Eng Notes 13(2):18–20

Feynman RP (1996) In: Hey AJG, Allen RW (eds) Feynman lectures on computation. Addison-Wesley, Boston

Floyd RW (1967a) Assigning meanings to programs, vol 19. American Mathematical Society, Providence, pp 19–32

Floyd RW (1967b) Nondeterministic algorithms. J ACM 14(4):636–644

Fuqua A (producer) Varma SR, Lasker A, Cirillo P (writers) (2003) Tears of the sun. SONY Pictures

Garey MR, Johnson DS (1979) Computers and intractability: a guide to the theory of NP-completeness. W. H. Freeman and Company, New York

Hubbell N, Han Q (2012) Dragon: detection and tracking of dynamic amorphous events in wireless sensor networks. IEEE Trans Parallel Distrib Syst 23(7):1193–1204

Le Lann G (1977) Distributed systems—towards a formal approach. North Holland, Amsterdam

Schneider FB, Lamport L (1988) Another position paper on “fairness”. Softw Eng Notes 13(3):18–19

Schrödinger E (1944) What is life? Cambridge University Press, Cambridge

Shannon CE, Weaver W (1998) The mathematical theory of communication. University of Illinois Press, Chicago

Stark WR (2013) Amorphous processes in the context of the partial recursive functions, Unpublished

Stark WR, Hughes WH (2000) Asynchronous, irregular automata nets: the path not taken. BioSystems 55:107–117

Stark WR, Kotin L (1987) Thoughts on mechanical societies: or distributed computing in random and changing architectures. Congr Numer 60:221–241

Strogatz SH (2003) SYNC: how order emerges from chaos in the universe, nature, and daily life. Theia, New York

Sutner K (1989) Linear cellular automata and the Garden-of-Eden. Math Intell 11(2):49–53

Turing AM (1952) The chemical basis of morphogenesis. Philos Trans R Soc Lond B 237(641):37–72

von Neumann J (1948) The general and logical theory of automata. In: Taub AH (ed) John von Neumann, collected works. Pergamon Press, Oxford

Ward N, Hayes I (1991) Applications of angelic nondeterminism. In: Bailes PAC (ed) 6th Australian software engineering conference, pp 391–404

Wilson EO (1998) Consilience. Knopf, New York

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

About this article

Cite this article

Stark, W.R. Amorphous computing: examples, mathematics and theory. Nat Comput 12, 377–392 (2013). https://doi.org/10.1007/s11047-013-9370-0

Suppose X and Z are separated by the cut set Y. Can we design a computational need for Y? Perhaps state-changes over X are filtered by Y so that Z sees only unusual events within X. Could something like this lead to a theoretical justification for the evolution of organs?