Abstract

Individual technical components are usually well optimized. However, the design process of entire technical systems, especially in its early stages, is still dominated by human intuition and the practical experience of engineers. In this context, our vision is the widespread availability of software tools to support the human-driven design process with the help of modern mathematical methods. As a contribution to this, we consider a selected class of technical systems, so-called thermofluid systems. From a technical point of view, these systems comprise fluid distribution as well as superimposed heat transfer. Based on models for simple fluid systems as extensively studied in literature, we develop model extensions and algorithmic methods directed towards the optimized synthesis of thermofluid systems to a practical extent. Concerning fluid systems, we propose a Branch-and-Bound framework, exploiting problem-specific characteristics. This framework is then further analyzed using the application example of booster stations for high-rise buildings. In addition, we demonstrate the application of Quantified Programs to meet possible resilience requirements with respect to the systems generated. In order to model basic thermofluid systems, we extend the existing formulation for fluid systems by including heat transfer. Since this consideration alone is not able to deal with dynamic system behavior, we face this challenge separately by providing a more sophisticated representation dealing with the temporal couplings that result from storage components. For the considered case, we further show the advantages of this special continuous-time representation compared to the more common representation using discrete time intervals.

Similar content being viewed by others

1 Introduction

In light of the European Union’s (EU) greenhouse gas emission reduction goal and its commitment under the climate agreement reached at the COP21 climate conference in Paris, the heating and cooling sector holds great potential for achieving these objectives. According to a report prepared by the Executive Agency for Small and Medium-Sized Enterprises (2016), heating and cooling accounted the EU’s biggest energy use with 50% of final energy consumption in 2012, which corresponds to 546 MtoeFootnote 1 and it is expected to remain that way. In this regard, the ‘EU Heating and Cooling Strategy’ announced by the European Commission points out that demand reduction and the deployment of renewable energy and other sustainable sources can reduce fossil fuel import and guarantee energy supply security, while ensuring an affordable supply of energy for the end user. In the EU, 45% of the energy consumed for heating and cooling is used in the private sector, 36% in industry and 18% in services. The authors of the report assume that each of these sectors has the potential to reduce demand and increase efficiency, especially considering that 75% of the fuel these sectors consume still comes from fossil sources. The decarbonization of the heating and cooling sector is therefore essential to meet the EU’s energy and climate change objectives. However, the sector is currently still fragmented and characterized by outdated and inefficient equipment, thus offering a high degree of potential for improvement (Executive Agency for Small and Medium-Sized Enterprises 2016). At the same time, the distribution of fluids, especially water, also has a high potential for saving energy and reducing emissions. The European Commission identified that water pumps in commercial buildings, for drinking water supply, in the food industry and in agriculture alone consumed an estimated quantity of 169 TWh/a in the EU-25 countries in 2000 (Betz 2017; Falkner 2008). In his dissertation, Betz (2017) further explains that the energy consumption of all pumps is reported with 300 TWh/a and the savings potential is estimated to be 123 TWh/a which indicates that, compared to a net electricity production of 2777 TWh/a in the EU-25 countries in 2000, about 10.8% of the net electricity production is currently fed into pump drives.

With regard to the design of technical systems, empirical studies suggest that the initial decisions, i.e. combining the intended functionality, layout, used components as well as the expected loads for the future use, make up 70–85% of a system’s total lifespan costs (VDI 2884 2015). In this setting, two important approaches can be identified. On one hand, there is the mathematical optimization which has produced solid results in the area of design-related tasks regarding technical (flow-based) systems using both linear and non-linear optimization. Examples are the optimization of water (D’Ambrosio et al. 2015) and gas networks (Domschke et al. 2011). All those have in common that mathematicians and engineers have chosen a specific subtask with the goal of designing a technical system which was extensively examined regarding special characteristics in the problem structure and solved successfully with the help of advanced algorithms. On the other hand, there is the system simulation in engineering sciences. Simulation tools like ModelicaFootnote 2 or Matlab/SimuLinkFootnote 3 are often used in this context. For these, components of technical systems are typically described by differential equations and component catalogs consisting of templates are used and adapted to the considered application. Furthermore, it is important that the system topology is largely predefined.

However, despite the efforts made in both areas, the design of technical systems, especially in early stages of development, is still dominated by human intuition. Hence, our aim is to provide tools for engineers to guide their intuition by the use of quantitative, modern mathematical methods during the system design process in the form of applicable software. The advantage of this approach is that the considered methods, in contrast to conventional procedures, guarantee for global optimality within the model. The use of the tool should be similar to known simulation environments. Therefore, the goal is not to identify and solve an engineering problem but to provide engineers with tools to describe and solve their problems. In particular, they should be able to formulate tasks in their own technical language. The difference, however, is that there is no simulation of an existing system but the selection of a system from a large number of implicitly described systems, as it is done on the basis of individual studies in the field of mathematical optimization. Yet, in contrast to the thematically strongly focused individual studies, our long term goal is the development of a generic tool.

Currently, we focus on a class of technical systems designed to cover applications where a combination of fluid distribution and heat transfer is required. These systems incorporating the subtasks of heating and cooling as well as transporting fluids can be summarized under the general term ‘thermofluid systems’. However, our starting point is a simplification of thermofluid systems, so-called fluid systems which are restricted to the distribution of fluids. These provide the foundation for this paper since they have been subject of extensive research in the past. In this regard, mathematical models as well as algorithms for the design and operation have been developed. We therefore provide a short overview of this topic. Note that although gas networks generally belong to the class of fluid systems, further considerations primarily focus on the application to water networks.

In general, the optimization tasks considered in literature involve optimal operation problems, optimal design problems and combinations of both. The optimal operation task aims at operating fixed components over a certain time horizon in such a way that the customer demands are satisfied while the operation costs, typically arising from the components’ power consumption, are minimized. For the optimal design task, it has to be noted that in literature the design problem usually also assumes a fixed underlying network topology (D’Ambrosio et al. 2015). The design task is therefore restricted to component sizing, e.g. choosing appropriate diameters for the pipes of the network. However, for the remainder of this paper the term ‘design’ also refers to the task of finding optimal network topologies, which is often also referred to as layout problem (De Corte and Sörensen 2013) or synthesis level (Frangopoulos et al. 2002).

In the context of optimal operation decisions, Gleixner et al. (2012) examine the optimal stationary operation of water supply networks. The optimization of dynamic water supply systems with a given layout is addressed by Morsi et al. (2012) who introduce a Mixed-Integer Linear Programming (MILP) approach based on the piecewise linearization of non-linear constraints. Geißler et al. (2011) use a similar approach for the optimization of dynamic transport networks which, in addition to water supply network optimization, has also been applied to the example of transient gas network optimization. For a deeper insight into the optimal operation of water supply networks, we refer to Martin et al. (2012) where the topic is investigated in detail. The optimal design of water distribution networks is studied by Bragalli et al. (2012) who use a MILP approach to select pipe diameters from a predefined finite set of possibilities. Besides optimizing water networks with a given layout, Fügenschuh et al. (2014) examine the optimal layout for the application example of sticky separation in waste paper processing. In their paper, a Mixed-Integer Non-Linear Program (MINLP) for the simultaneous selection of the network topology as well as the optimal settings of each separator for the steady state is proposed. A comparable approach based on a MINLP formulation to design decentralized water supply systems for skyscrapers is used in Leise et al. (2018).

Recently, there have also been increased efforts to include resilience considerations for the design of water distributions networks. The resilience of water distribution networks from a topological perspective based on an implementation of the K-shortest paths algorithm is examined in Herrera et al. (2016). Furthermore, in Meng et al. (2018), an analysis framework for studying the correlations between resilience and topological features, exemplified for water distribution networks, is proposed. However, these are simulative approaches. In the context of (non-)linear programming, Altherr et al. (2019) investigate decentralized water distribution networks in high-rise buildings, using Branch-and-Bound to exploit the special tree-structure of the considered networks in order to obtain K-resilient systems, i.e. the operation can be ensured if at most K components break down.

Besides global optimization methods, a wide range of heuristics, especially metaheuristics, have been applied in literature. In the context of the design of water distribution networks, this includes (but is not limited to) Genetic Algorithms (Savić and Walters 1997), Simulated Annealing (Cunha and Sousa 1999), Tabu Search (Cunha and Ribeiro 2004) and Ant Colony Optimization (Maier et al. 2003). For further insight, we refer to De Corte and Sörensen (2013) and Mala-Jetmarova et al. (2018). Additionally, it should be noted that many approaches do not use an explicit mathematical formulation. Instead external solvers such as EPANETFootnote 4 are often applied to check for hydraulic feasibility (Altherr et al. 2019). An example for the combination of metaheuristics and (non-)linear programming is shown in Cai et al. (2001). The authors use a Genetic Algorithm to fix variables in their non-linear optimization model for water management resulting in a linear formulation. However, it should be noted that even if heuristic approaches may often times yield good solutions, optimality cannot be guaranteed. In order to be able to overcome this disadvantage one possibility is the application of dual methods to provide a reference measure for the solutions found by (meta-)heuristics. In this regard, Altherr (2016) uses Simulated Annealing and Dynamic Programming to obtain solutions for the design of hydrostatic transmission systems and, importantly, also provides dual bounds obtained via Lagrangean Relaxation to assess the primal solutions.

With regard to thermofluid systems, contributions exist which examine the optimization of heating, ventilation and air conditioning (HVAC) systems. Note that besides other methods being also commonly applied, we focus on the application of (non-)linear programming techniques. For example, in Pöttgen et al. (2016) the generation-side of an already existing heating circuit at a conference center in Darmstadt (Germany) is examined and the authors propose alternative system designs. In Gustafsson (1998), another approach is taken. Instead of the HVAC system, the corresponding building is retrofitted based on life-cycle analysis.

Furthermore, there is the research area of Model Predictive Control (MPC) for HVAC systems. In MPC, a system model is combined with forecasts of external parameters and the resulting optimization problem of finding control decisions is typically solved online and in real time (Risbeck 2018). In this context, Risbeck et al. (2015) examine the optimized equipment usage of a central heating and cooling plant including thermal energy storage systems. In Risbeck et al. (2017), the authors further propose a framework for optimizing the operational planning of HVAC systems in commercial buildings considering both a central plant as well as the building subsystem. Similar applications have also been studied in Deng et al. (2013) and Kashima and Boyd (2013). While most of these works aim to provide an optimal control focusing on the online and real-time aspect, the emphasis in this paper is on the design aspect and the integration of estimated load data is rather used in order to evaluate favorable system designs for the intended use. However, for a more detailed overview of MPC and its application to HVAC systems, we refer to Afram and Janabi-Sharifi (2014).

Another adjacent topic is the synthesis of energy systems, typically operating as cogeneration systems for the simultaneous production of heat and power or trigeneration systems with coupled cooling (Andiappan 2017). For instance, a MILP approach for the selection and sizing of a smart building system is presented in Ashouri et al. (2013). Apart from just selecting and sizing, the authors also determine operating strategies in parallel to compare different configurations. Another contribution with regard to the synthesis of energy systems is given in Voll et al. (2013). Here, a framework for the automated superstructure generation and optimization of distributed energy supply systems based on a MILP formulation is proposed. While contributions in this field also focus on heating and cooling (combined with power generation), the approaches intentionally consider a higher level of aggregation for the synthesis task than it is the scope of this paper. For further insight into the optimization approaches for energy systems, we therefore refer to Andiappan (2017).

Beyond typical flow networks, system design approaches have also been applied to the optimization of other technical systems such as gearboxes (Altherr et al. 2018b) and lattice structures (Reintjes et al. 2018).

In contrast to most approaches, our major challenge is to be able to model the synthesis of fluid-based systems in a general and consistent way similar to the widespread simulation environments such as Modelica or Matlab/Simulink and at the same time to be able to perform algorithmic optimization. The focus is that due to a modular principle, system designers should have the possibility to pick out relevant elements for their application and extend or modify them if necessary. All elements should be based on the same foundation, as it is common for the above mentioned simulation tools. With this in mind, however, the development of suitable models and methods for the design of general thermofluid systems to a practical extent is a visionary challenge. Therefore, the decomposition into sub-challenges, as shown in Fig. 1, is necessary. Starting in the upper left corner with the basic fluid system model, we can unfold our investigation in two different dimensions. The first dimension is the extension of the fluid system model in order to include additional features. This comprises the consideration of uncertainty, in particular resilience, heat transfer as well as dealing with dynamic system behavior. The second dimension is the degree of implementation, from the formulation of suitable models and model extensions for which instances can be solved on a laboratory scale with the help of standard solvers, to the development of sophisticated heuristics and algorithms exploiting the system-specific features for handling larger instances, to the validation of proposed solutions by means of detailed simulation.

The sub-challenges examined in this paper are indicated by tiles with the specification of a section number. Resulting from these selected sub-challenges, the following research questions arise:

-

1.

How can engineering knowledge be integrated into the solution process through domain-specific primal as well as dual methods and thus improve it compared to the use of standard solvers?

-

2.

How can the approach be extended to include, if necessary, the consideration of resilience as an additional technical requirement for individual synthesis tasks?

-

3.

How can heat transfer and especially the resulting need to consider dynamic effects, which are highly relevant for many engineering applications, be integrated effectively into the existing model framework?

In order to address these questions and to provide substantial progress for the overall vision, our contributions in this paper are:

-

We develop heuristics for the system synthesis of fluid systems and combine them in a Branch-and-Bound framework which yields promising results and allows the synthesis of larger systems compared to the use of standard solvers. Also, we highlight the need for dual bounds in order to evaluate the primal solutions. Another special aspect of the heuristics is the integration of implicit engineering knowledge about the specific properties of the considered technical systems for the algorithmic optimization, thus pointing out the potential of the interdisciplinary approach.

-

We propose an approach which enables the consideration of resilience in the considered setting as a subsequent design decision, hence it is also possible to increase the resilience of existing systems.

-

We present extensions that allow the integration of heat transfer and dynamic effects for the synthesis of thermofluid systems. In order to handle the dynamic effects a novel approach which considers a variable length of time steps is presented. The extent to which the associated restrictions are reasonable can only be decided from an engineering perspective which again underlines the necessity of the interdisciplinary approach.

The paper contains research which has been partly presented in conference proceedings, see Hartisch et al. (2018), Weber and Lorenz (2017), Weber and Lorenz (2019a), Weber and Lorenz (2019b) and Weber et al. (2020), and is organized as follows: Sect. 2 provides a deeper insight into a possible software tool for engineers to design technical systems as well as the associated workflow. After the general technical and physical background as well as selected system components are discussed in Sect. 3, the basic MILP formulation for the design of fluid systems, as described in literature, is presented in Sect. 4. Based on this formulation, we propose a Branch-and-Bound framework containing a relaxation based on technical problem-specific characteristics in Sect. 5. The framework is then applied to the example of booster stations for high-rise buildings to solve practical examples and the performance of the framework is discussed using these instances. The application case of booster stations is used again in Sect. 6 to demonstrate an approach based on Quantified Programming in order to meet resilience requirements with respect to given system designs as they can be generated by the presented Branch-and-Bound framework. In Sect. 7, we extend the existing fluid system model by including heat transfer in order to describe basic thermofluid systems. A further important extension, the consideration of dynamic system behavior, is discussed in Sect. 8. Whereas the models and extensions previous to Sect. 8 only consider a sequence of system states without temporal couplings, we present an approach for the description of the dynamic system behavior, e.g. caused by storage components, with a focus on its practical applicability. Finally, to conclude the paper, we discuss our results and directions for future research in Sect. 9.

2 Supporting the system design process by optimization

We aim to establish quantitative, modern mathematical methods during the system design process by developing specialized tools for engineers. Apart from transferring these methods and projecting them onto the application of designing technical systems, a systematic workflow with a strong focus on providing engineers with the methodical procedures to exploit the corresponding quantitative methods is required. Here, we therefore discuss a suitable systematical design approach for this project and present a possible software implementation.

2.1 Systematical design approach

In an attempt to automatically find optimal pump system designs, Pelz et al. (2012) propose a systematical design approach in order to combine planning and engineering approaches with mathematical optimization. By guiding the designer through specific steps, the approach prepares the generation and solution of an optimization program and structures the application of the optimization results to reality. This approach divides the problem development process into seven steps which can be split into two phases—a deciding phase and an acting phase:

DECIDING

-

1.

What is the system’s function?

-

2.

What is my goal?

-

3.

How large is the playing field?

ACTING

-

4.

Find the optimal system!

-

5.

Verify!

-

6.

Validate!

-

7.

Realize!

The degree of detail is continuously refined from step to step. Steps 1, 6 and 7 describe the common planning process of an engineer or system designer and are further supplemented by additional intermediate steps relevant to the system design in order to streamline the planning process, facilitate the communication between the interest groups involved and therefore catalyze the generation of optimal solutions.

The first step is to determine the system’s function. For the function all relevant components of the system which are involved in the fulfillment of its purpose as well as the load history are of importance. Typical functions are, as in this paper, the transport of material, heating or cooling.

Subsequently, in step 2, the intended primary goal has to be concretized. This step is of great importance since the goal massively influences the final solution of the problem. The goal can vary depending on the interest groups involved. For example, the goal of an investor can be a low net expense. The operator, on the other hand, could consider a high availability, while a state institution could focus on a low energy consumption or low pollutant emissions as a priority. Since these goals can be conflicting, a completely different system may emerge depending on the goal but one that is optimal in its area. For this reason, the definition of the goal must also be seen as a subjective influence on the optimal system and must therefore be formulated in agreement with all relevant interest groups. This step is often neglected in practice.

The third and last step of the deciding phase is to determine the size of the playing field. This is the framework in which a system is to be optimally designed. In the case of a technical system, the components must be preselected. An algorithm takes over the task of selecting components from a pool of different components and making optimal use of them for the overall system. The delimitation of the playing field represents an important restriction for the possible solutions and must be carefully defined in mutual consultation with all interest groups. It must be clear that the approach will only find technical solutions that are part of the playing field and therefore will not replace human imagination or find creative solutions beyond the possible solutions. This underlines the intention of this approach which has to be seen as a decision tool and should not replace the decision maker.

At the end of the deciding phase, all decisions by the users which influence the optimal system have been made. Thus, the formulation of the system’s requirements is completed.

After the requirements for the system have been defined, the next step is the computation of a system proposal. This is done by setting up mathematical models and applying algorithms to solve them. The consideration is not limited to the components themselves but also to their design and it depends heavily on the defined playing field. Thus, a system proposal can be found with regard to topology and control parameters and is then converted into a physical model. With this approach, a global optimum with respect to the initial decisions made in the deciding phase cannot always be found within reasonable time. In general, however, it is not necessarily a question of finding the optimal system but of generating the best possible system and being able to estimate its quality. In practice, systems that are proven to be among the best percentage of possible solutions are often more than sufficient.

Following the algorithmic search for a system, the suggested solution needs to be reviewed and verified by the system designer. This is done by models with concentrated parameters, so-called 0D-models (Betz 2017), and stands in close interaction with the previous step.

Finally, the two last steps of the second phase are the validation of the system with experiments or higher-dimensional computational models and the subsequent realization.

2.2 Software framework

In Pelz et al. (2012) and Saul et al. (2016), a realization of the approach described above is presented. In this context, we focus on how the desired workflow, illustrated in Fig. 2, can be implemented by the use of software.

The process starts with a user, who is typically an engineer or system designer, defining the system requirements as well as the possible degrees of freedom for the system according to the design approach presented above. For this purpose, we propose a customized graphical user interface (GUI), as sketched in Fig. 3. On the left sidebar the user can select components from a component catalog and drag-and-drop them onto the drawing board. The component catalog is connected to a database in which the components are stored with their corresponding technical descriptions and characteristics. Degrees of freedom can be expressed by using ‘optional’ components and connections as indicated by the light grey highlighted symbols in Fig. 3. After the implicit system structure has been defined, the load related requirements to be met by the system, i.e. certain load scenarios as well as the intended objective, cost or energy minimization, can be specified in the corresponding submenus of the ‘Data’ tab. In this context, each load scenario describes a set of measurement points of the system that must reach certain values at defined points in time.

After the necessary requirements and degrees of freedom have been defined, the next step of the intended workflow is the creation of a MILP instance from the graphical representation. This also involves the intermediate step of a higher-level model file that aims for easier human readability. The mathematical program can then be solved using a combination of standard MILP techniques and state-of-the-art solvers as well as problem-specific algorithms and procedures.

Following this, the optimization result can be saved and reinterpreted graphically. Finally, the user is able to customize and examine the proposed system according to the last three steps of the design approach presented above.

3 Physical and technical background

While fluid systems only deal with the distribution of fluids, thermofluid systems fulfill two subtasks, the distribution as well as heating and cooling of fluids. The relevant physical quantities to describe such systems are the volume flow \(\dot{V} \; [\mathrm{m}^3/\mathrm{s}]\), the pressure H [bar] (or [m]), the heat flow \(\dot{Q}\) [J/s] and the temperature \(T \; [^\circ \,\mathrm{C}]\). While the pressure is related to the distribution, the heat flow and the temperature are necessary to describe heating and cooling and the volume flow couples both subtasks. Besides that, there are different groups of technical components involved to fulfill the individual subtasks. The relevant physical relationships necessary to describe the system behavior as well as selected technical components used in such systems are briefly explained below.

3.1 Continuity equation

All fluid distribution systems must satisfy the continuity equation. It states that the transported mass through a flow tube remains constant in the case of steady state flows. This criterion meets the general principal of mass conservation which claims that the inlet mass flow must be equal to the outlet mass flow. In fluid mechanics, this can be expressed as follows with \(\dot{m}\) representing the mass flow, i.e. the time derivative of mass, \(\rho\) representing the density of the fluid, v representing the flow velocity and A representing the cross-sectional area of the flow tube (Munson et al. 2009):

If the term \(v \cdot A\) is replaced by the volume flow \(\dot{V}\), the equation can be stated as:

In the case of incompressible fluids—like water—the relation can be simplified because of the pressure-independent density:

This relation holds for ideal systems without losses and is applicable for a system itself as well as for single components.

3.2 Bernoulli’s equation

Furthermore, Bernoulli’s equation, which is derived from the general conservation of momentum, applies. For steady state motions of frictionless (ideal), incompressible fluids that are not effected by external forces except for gravity, the Bernoulli energy equation holds (Munson et al. 2009):

Here, v is the fluid flow velocity at a point on a streamline, g is the acceleration due to gravity, z is the elevation of the point above a reference plane, H is the pressure at the chosen point and \(\rho\) is the density of the fluid. If this equation is multiplied by \(\rho\) and g, this results in the Bernoulli pressure equation:

Furthermore, the pressure increase (or decrease) \(\varDelta H_C\) must be considered separately if a pressure-modifying component is used between the points 1 and 2:

3.3 Specific heat formula

The physical quantities related to heating and cooling are coupled by the specific heat formula. In this regard, the specific heat is the amount of heat per unit mass required to raise the temperature by one Kelvin (or degree Celsius) (Incropera et al. 2007). The relationship is typically expressed as:

Here, c is the specific heat. As an example, the specific heat of water—the common substance with the highest specific heat—is about 4182 joule per kilogram and Kelvin at a temperature of 20°C. However, the relationship does not hold if phase changes occur due to the fact that heat added or removed during a phase change does not change the temperature. By assuming a constant density \(\rho\) of about one kilogram per liter, the mass can be replaced in terms of volume with \(V = m/\rho\). Furthermore, the above equation can be stated on a flow rate basis with T being the temperature difference with respect to a predefined reference temperature. Taking these considerations into account, Eq. (7) can be rewritten as:

3.4 Mixing of fluids

If (possibly different) fluids with different temperatures are mixed, the mixing temperature \(T_{M}\) of |N| fluids being mixed can be calculated as:

As a simplification for water, the mass can be estimated by \(m = V \cdot \rho\) with the assumption of \(\rho \approx 1\). Additionally, since only one kind of fluid is mixed and the specific heat terms \(c_i\) in each summand of both sums are assumed to be uniquely constant, they cancel each other out. Furthermore, the equation can be formulated on a flow rate basis and the numerator can be rewritten using Eq. (8):

3.5 Components

There is a wide variety of different components used in thermofluid systems depending on the respective field of application. Again, a general distinction can be made between components related to the distribution, such as pumps or valves, and those related to heating and cooling. For the latter, two ideal sources of thermal energy can be distinguished: ideal heat sources and ideal temperature sources. An ideal heat source is able to deliver a constant, predefined heat flow independent of the inlet or outlet temperature as well as the volume flow. An example for components which can be seen as ideal heat sources are simple heaters. An ideal temperature source, in contrast, can maintain a predefined temperature at its outlet independent of the heat flow required as well as the inlet temperature and the volume flow. It therefore produces a constant absolute temperature. Components which can be modeled as an ideal temperature source are for instance heat exchangers for district heat as examined in Pöttgen et al. (2016). In this paper, all heating and cooling components are associated with one of the two thermal energy sources.

In the following, the operation principles and the procedure for modeling two exemplarily selected component types, pumps as representatives of the distribution side and chillers as examples for the heating and cooling side, are described.

3.5.1 Pumps

In general, pumps have an opposite relation between their volume flow and pressure increase. With increasing volume flow the possible pressure increase decreases. Additionally, the power consumption P of pumps increases with increasing volume flow. There are basically three different classes of pumps, resulting from their speed control. These are pumps with constant speed, with stepped speed control and pumps with continuously variable speed control. The operation of a constant speed pump is fairly straightforward. For speed controlled pumps the possible pressure increase as well as their power consumption rises with increasing rotational speed n if the volume flow is held constant. This can be described by the so-called affinity laws:

Fixing any two of those variables determines the remaining ones. For variable speed controlled pumps this relation is manifested in their respective characteristic curves, see Fig. 4. The operation can be described by quadratic and cubic approximations with regression coefficients \(a_i,\, b_i,\, c_i\) and \(d_i\) to determine the pressure increase and power consumption for a given flow-speed-tuple (Ulanicki et al. 2008)

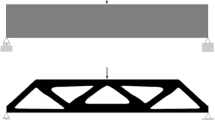

Single pumps or whole subsystems can be connected pairwise either in series or in parallel. If, on one hand, modules are connected in series, the total pressure increase results as the sum of the single pressure increases, while the flow through them remains constant. If, on the other hand, modules are connected in parallel, the pressure increase remains constant and the total volume flow through both modules is the sum of the single volume flows.

3.5.2 Chillers

Many different chiller types exist. However, a rough classification between two types, vapor absorption and vapor compression chillers, can be made. In the following, we concentrate on the latter. This type can again be subdivided into centrifugal, reciprocating, scroll and screw chillers by the compressor technologies used. Finally, those can be further classified into water-cooled and air-cooled chillers, depending on a chiller’s heat sink. All those types have in common that the cooling is realized by a circular process consisting of four subprocesses, as shown in Fig. 5. In the first step, the internal refrigerant enters the evaporator as a liquid-vapor mixture and absorbs the heat of the cooling medium returning from the heat source (1). The vaporous refrigerant is then sucked in and compressed while the resulting heat is absorbed by the refrigerant (2). During the subsequent liquefaction process, the superheated refrigerant enters the condenser, is cooled by the ambient air or water of a cooling tower and liquefies again (3). Finally, in the expansion process, the pressure of the refrigerant is reduced from condensing to evaporating pressure and the refrigerant expands again (4).

To model the specific operation of a chiller, the ‘DOE2’ electric chiller simulation model, as examined in Hydeman et al. (2002), can be used. This model is based on the following performance curves:

The \(CAP_{FT}\) curve, see Eq. (14), represents the available (cooling) capacity Q as a function of evaporator and condenser temperatures. The \(EIR_{FT}\) curve, see Eq. (15), which is also a function of evaporator and condenser temperatures describes the full-load efficiency of a chiller. Finally, the \(EIR_{FPLR}\) curve, see Eq. (16), represents a chiller’s efficiency as a function of the part-load ratio PLR, see Eq. (17). For the \(CAP_{FT}\) and \(EIR_{FT}\) curve, the chilled water supply temperature \(t_{chws}\) is used as an estimate for the evaporator temperature and the condenser water supply \(t_{cws}\) and outdoor dry-bulb temperature \(t_{oat}\) are used for the condenser temperature of water-cooled and air-cooled chillers, respectively. With Eqs. (14)–(17) it is possible to determine the power consumption P of a chiller for any load and temperature condition by applying Eq. (18). The operation of a given chiller is therefore defined by the regression coefficients \(a_i,\, b_i,\, c_i,\, d_i,\ e_i\) and \(f_i\), the reference capacity \(Q_{ref}\) and the reference power consumption \(P_{ref}\) (Hydeman et al. 2002).

Within the scope of our research, chillers can be assigned to the group of temperature sources. The chilled water supply temperature is therefore assumed to be independent of the inlet temperature and the volume flow. Depending on the application the condenser water supply or outdoor dry-bulb temperature may be assumed to be constant.

4 Fluid systems (Tile 1.1)

All fluid systems have two things in common: First, each system contains a fluid that moves through a system of connected pipes and other components. Second, a pressure difference in the system causes fluids to move. Hence, pressure is the driving force in fluid systems. Nevertheless, it should be noted that the focus of this paper is on water-based systems. The density of water is assumed to be constant which is a common simplification. However, for gas networks this simplification is typically not applicable (Geißler et al. 2011). Therefore, different models and methods have to be used to tackle these optimization problems.

The general system synthesis task considered in this paper can be stated as follows: Given a construction kit of technical components as well as a technical specification of load collectives, compare all valid systems and choose the one for which the lifespan costs—the sum of purchase costs and the expected energy costs—are minimal. In this context, a system is called a valid system if it is able to satisfy every prospected load. We assume that the transition times and therefore also the transition costs between the load changes are negligible compared to the total costs. Hence, corresponding models can be stated as quasi stationary. Each load out of the load collective is called a load scenario. A load scenario consists of two components: the time interval of the system’s operational life for this scenario as well as the demanded values for the respective physical quantities at certain points in the system.

The decision making can be abstracted in two ways. On one hand, it can be stated using linear (and non-linear) constraints as a MI(N)LP. Hence, the decisions of the optimization problem can be described by variables: first and second stage variables. In the first stage the optimization program must decide whether a component is needed and thus bought. In the second stage, a bought component can be turned on/off and possibly speed controlled to cover all load scenarios during the system’s operation. On the other hand, the problem can be abstracted as a source-target-network \((G,S_G,T_G)\) with a complete graph \(G = (V,E)\), vertices V and edges E, whereas \(S_G,T_G \in V\) are distinguished sets of vertices, namely the sources and the sinks of the network. An edge represents a component from the construction kit and a vertex represents a possible connection between components. The complete graph of the construction kit, consisting of all components that may be installed, plus the sources and sinks contains every possible system. Therefore, each system can be modeled by a subgraph of the complete graph representing the decisions made for the system.

The optimization model for fluid systems presented here is based on the models available in literature (see e.g. Betz 2017; Geißler et al. 2011; Pelz et al. 2012; Pöttgen and Pelz 2016). It serves as a starting point for the step-by-step extension according to Fig. 1. All variables and parameters used are shown in Table 1.

The objective of the optimization model is to minimize the sum of investment costs and expected energy costs over a system’s lifespan, see Objective (19). A component can only be used to satisfy a load scenario if it is installed, see Constraint (20). If a component is operational, its volume flow is reasonable or vanishes otherwise, see Constraint (21). Similarly, the pressure head must be reasonable at each port, see Constraint (22). Due to the law of flow conservation, the volume flow has to be preserved at all vertices, except for the sources and sinks, see Constraint (23). If a component is operational, the pressure propagation has to be ensured. In case of pumping components the pressure increase caused by the component increases the pressure at its outlet and therefore the adjacent system pressure, see Constraints (24) and (25). For non-pumping components the pressure increase \(\varDelta h_{i,j}^s\) is typically 0. Constraints (26)–(28) enable the setting of target values for the volume flow and pressure at certain points in the system. The generally non-linear operating behavior of components and the determination of their respective operating points is represented by Constraints (29) and (30). For the example of pumps, the associated relationships are shown in Sect. 3.5.

In principle, the model presented above is a MINLP due to the non-linear relationship resulting from the non-linear constraints for describing the component behavior. Unfortunately, MINLPs are in general hard to solve or even intractable (Geißler 2011). The corresponding constraints are therefore piecewise linearly approximated to make them accessible for MILP techniques. The implementation is straightforward and the linearization techniques used follow those presented in Vielma et al. (2010).

5 Algorithmic synthesis of fluid systems (Tile 1.2)

In the following, we present our contribution to the algorithmic synthesis of fluid systems on a larger scale, i.e. an algorithmic system design process for instances of practical interest. The goal is to generate ‘good’ systems in reasonable time. In this context, ‘good’ refers to solutions with a desirable objective function value and the runtimes should allow for practical applicability. However, the usual procedure to simply generate the corresponding MILP and to solve it using a standard MILP solver fails to solve such instances in reasonable time because of the inability to provide strong dual bounds. Therefore, we develop a problem specific approach exploiting the special system characteristics by primal and dual heuristics. In order to maintain a certain practical relevance, we examine the application case of so-called booster stations. According to the principles of Algorithm Engineering, as explained in Sanders (2009), this is an important feature since applications play an important role for the development of algorithms and serve as realistic inputs for meaningful experiments. In addition, as in this case, not all future applications for the algorithms to be included to a library are known in advance, therefore providing algorithms validated on related applications with realistic inputs is an important factor (Sanders 2009).

The basic idea is as follows: Use both the MILP and the graph view simultaneously and benefit from both. On the primal side, we use heuristics, especially local search algorithms, to obtain good primal solutions. In this paper, we focus on Simulated Annealing but other local search algorithms, e.g. Genetic Algorithms or Tabu Search, are possible, too. In this step, the graph representation is used to define neighborhoods and the MILP representation is used to evaluate the quality of the generated systems. On the dual side, we use a heuristic which is based on problem specific and technical knowledge to relax the generated MILP. Doing so, we obtain lower bounds. Finally, both heuristics are combined in a Branch-and-Bound framework to close the optimality gap between the primal and dual solutions. Thus, we can obtain provable optimal solutions for the system design.

5.1 Primal heuristic: Simulated Annealing

The implemented Simulated Annealing algorithm follows Boussaïd et al. (2013) with some modifications: Previous calculations are saved and a penalty term for non-valid system topologies is implemented. The algorithm is used to find good topologies for the first stage of the two-staged optimization problem (the topology problem) as described in Sect. 4. After generating a topology, the binary first stage variables are fixed in the MILP. Afterwards, the second stage (the operation problem) is solved optimally for the chosen topology regarding the different load scenarios using a standard solver. For the topology decision only series-parallel networks, as defined in MacMahon (1890), are considered to ensure that only technically sound topologies are generated, e.g. each technical component has at least one successor and one predecessor in the network.

The problem specific neighborhood function necessary for Simulated Annealing consists of four single neighborhoods, similar to the operators used in Altherr (2016). These are the replace (\(N_{Replace}\)), the swap (\(N_{Swap}\)), the add (\(N_{Add}\)) and the delete neighborhood (\(N_{Delete}\)):

Illustrative examples for the respective neighborhoods described below are shown in Fig. 6.

-

\(N_{Replace}\): A component \(p_i\), in the case of booster stations a pump, of the set of bought components—a subset of the available construction kit—is selected randomly and replaced by a component \(p_j\) from the set of unbought components. The previous predecessors and successors of \(p_i\) are the new predecessors and successors of \(p_j\). This neighborhood can only be created if the network consists of at least one component and there is at least one unbought component.

-

\(N_{Swap}\): Two different components \(p_i\) and \(p_j\) of the set of bought components are selected randomly. \(p_i\) and \(p_j\) swap positions in the network. The previous predecessors and successors of \(p_i\) are the new predecessors and successors of \(p_j\) and vice versa. This neighborhood can only be created if the network of bought components consists of at least two components.

-

\(N_{Add}\): A component \(p_i\) of the set of unbought components is selected randomly and it is decided whether \(p_i\) is connected in series or in parallel. If \(p_i\) is connected in series, a component out of the set of bought components, a source or a sink is selected. If a source or a sink is selected, \(p_i\) is connected in series behind the source or before the sink. If a component \(p_j\) is selected, \(p_i\) is connected before or behind \(p_j\). The source, the sink or \(p_j\) becomes the new predecessor or the new successor of \(p_i\). Furthermore, \(p_i\) adopts their previous successors or predecessors. If \(p_i\) is connected in parallel, a component \(p_j\) of the set of bought components is selected. All predecessors and successors of \(p_j\) become the predecessors and successors of \(p_i\) as well. This neighborhood can only be created if the set of unbought components consists of at least one component and in the case of a parallel connection if the set of bought components consists of at least one component.

-

\(N_{Delete}\): A component \(p_i\) of the set of bought components is selected randomly and is deleted from the network. If a predecessor \(p_{i,p}\) or a successor \(p_{i,s}\) of \(p_i\) only has \(p_i\) as its successor or predecessor, a successor or predecessor of \(p_i\) is selected randomly. It then becomes the new successor or predecessor of \(p_{i,p}\) or \(p_{i,s}\). This is necessary to ensure the flow conservation. Otherwise the connection is deleted without substitution. This neighborhood can only be created if there is at least one component in the set of bought components.

To generate a starting solution, a simple heuristic is used which is based on \(N_{Add}\) to obtain valid solutions. First, a minimal network including only the sources and sinks is considered. If this network is already a valid solution, it is accepted as the starting solution. Otherwise, components are added until a valid topology is generated. If the set of unbought components is empty and the solution is still not valid, the whole network is deleted and the procedure starts again with a minimal network until a valid solution is found.

For the considered problem, non-valid solutions have no associated costs. If the costs were set to \(+\infty\), the algorithm would never accept them as the current solution. In this case, it would not be possible to reach every solution in the solution space with the defined neighborhood function. To avoid this, a penalty term is introduced assigning costs to non-valid solutions. If a solution is non-valid, double the costs of the starting solution are used instead. This approach has two advantages: First, the costs are low enough that non-valid solutions can be used as current solution in the algorithm and second, high enough that they should be greater than the costs of all valid solutions.

The critical steps for the runtime of the algorithm are the calculations for the optimal operation mode for the found topologies performed by the MILP solver. To enhance the runtime of the algorithm a list is created which holds the last solutions. Every time a calculation is needed, the list is checked first whether this topology has already been calculated. If not, the system is added to the list. If the list reaches the defined maximum size, the oldest entry is deleted such that new solutions can be stored.

In addition, a cooling schedule has to be determined. We use an exponential cooling function \(T(t) = T_0 \cdot \alpha ^{ t}\) which is widely used in literature (Boussaïd et al. 2013). Here, \(T_0\) is the starting temperature and t indicates the number of temperature reductions performed. The parameter \(\alpha\) is a value between 0 and 1. It influences the slope of the cooling function. A threshold value \(T_{stop}\) acts as a termination criterion. As soon as the temperature falls below this threshold value, the algorithm terminates. Furthermore, the number of iterations per temperature level has to be chosen in such a way that the search space is explored sufficiently. These parameters have to be determined experimentally depending on the specific problem. For our experiments a value of \(\alpha = 0.9\) showed good results ensuring a balance between runtime and exploration of the search space. The start temperature \(T_0\) was set to 10,000. For the considered instances, especially with regard to the dimensions of the occurring costs, this proved particularly suitable to ensure both sufficient diversification and intensification. With regard to the dimension of expected costs, \(T_{stop}\) was set to 10. Hence, at the end of the algorithm almost exclusively cost improvements are accepted in order to ensure intensification. To establish a balance at each temperature level, 100 iterations were carried out per temperature level. This proved to be favorable to explore the search space. At lower values the search space is reduced too much and at higher values the algorithm starts to cycle.

5.2 Dual heuristic: problem-specific relaxation

A simple LP-relaxation, i.e. dropping the integrality constraints, is not suitable to obtain strong lower bounds. For that reason, an approach is presented which uses problem specific knowledge to meet this requirement, see Algorithm 1.

In the first step the original problem is relaxed by disabling the coupling constraints which connect the buy (\(b_{i,j}\)) and the operation variables (\(a^s_{i,j}\)) of the components for all load scenarios, i.e. only bought components can be used to satisfy the load scenarios:

Note that in the case of the booster stations considered in this paper the term ‘components’ corresponds to pumps. Afterwards, the problem is split into |S|-many subproblems, one for each load scenario. The remaining buy variables in all subproblems are substituted by the suitable operation variables. Afterwards, each of the |S| subproblems is split again into two sub-subproblems. The respective problems represent the optimization tasks for minimizing the energy costs and the investment costs for one single load scenario s. The new objective functions for the sub-subproblems are:

For each of these \(2 \cdot |S|\) problems the optimal solution is determined by a MILP solver. A lower bound is composed of the sum of the energy costs and the maximum of all investment costs for each load scenario:

This is obviously a valid way to obtain lower bounds: The energy costs for one load cannot be lower than those which arise for the decoupled case because this is also the configuration with minimal costs for the original problem in the given load scenario. Therefore, the sum of these energy costs cannot be higher than in the original problem. Given the fact that the optimal system for the original problem must be able to operate in each load scenario, the investment costs cannot be lower than the maximum of the individually computed investment costs for each decoupled load scenario because this is the configuration with minimal costs to serve the ‘most challenging’ load scenario.

5.3 Closing the gap: Branch-and-Bound

Based on the basic Branch-and-Bound algorithm, as described in Clausen (1999), a framework using problem specific knowledge to obtain optimal solutions for the considered minimization problem is presented, see Algorithm 2. Branch-and-Bound belongs to the class of exact solution methods. It is a widespread method for solving large, combinatorial optimization problems. The complete enumeration of such problems is impractical because the number of possible solutions grows exponentially with the problem size. However, the advantage of the Branch-and-Bound is that parts of the solution space can be pruned. For this a dynamically generated search tree is used.

Initially, this search tree only consists of one node, the root node which represents the whole search space of the original problem. Typically, a feasible solution for the root problem is calculated beforehand and becomes the initial best known solution. Otherwise, the best known solution value is set to \(+\infty\) if a minimization problem is considered. In this paper, we use the solution of Simulated Annealing as described in Sect. 5.1. Note that the best known solution value is used as a synonym for the global upper bound.

In each iteration of the algorithm an unexplored (active) node, representing a specific subproblem, is processed. An iteration contains three steps: selecting a node, dividing the solution space of this node into two smaller subspaces (branching) and calculating the bounds for the arising subproblems. The selection of a node follows a certain selection strategy. Here, we use the best-first-search selection strategy, for which always the node out of the set of active nodes with the lowest bound is selected. For these nodes one or more so-called conflicting components exist. These are components which are used for operation in the relaxation but their costs are not part of the investment costs of the relaxation. After the selection, branching is performed and two child nodes are generated by introducing additional constraints in order to divide the solution space. The branching rule for the active nodes is defined as follows: A component out of the set of conflicting components of this node is selected randomly. For one of the subproblems, an additional constraint is added which sets the binary buy-variable of the selected conflicting component to 0, i.e. the component is not part of the system. For the other subproblem, an additional constraint which sets the buy-variable to 1, i.e. the component is part of the system, is added instead. Hence, the search space is split into two smaller disjoint search spaces. Note that if a buy-variable is set to 0, the selected conflicting component is not bought and therefore cannot be used for operation. As a result, any solution with an operation-variable associated with this component not equal to 0 would be inherently infeasible for the original problem due to Constraint (20) of the MILP for fluid systems. Therefore, the operation-variables associated with these components in the respective subproblems are fixed to 0. In the opposite case, the operation-variables are not effected by such a restriction.

Afterwards, the bounds of the newly generated nodes are calculated immediately. This is called the eager evaluation strategy, whereas for the so-called lazy strategy, the bounds of the child nodes are not calculated until the respective node is selected and the nodes are selected according to the bound of their parent node. The bounds of the nodes are determined by solving the relaxation defined in Sect. 5.2 for the given subproblem. If the solution of the relaxation of a node is a valid solution for the original problem, its value is compared to the currently best known solution and the better solution is kept. In this implementation, a solution of the relaxation is valid for the original problem if only those components are used for operation which are also bought. This means that their purchase costs are part of the investment costs of the system according to the explanations given in Sect. 5.2. If the bound is worse than the best known solution, no further exploration for this subtree is needed because the subproblem contains no better solutions for the original problem than the currently best known solution. The same applies if there are no feasible solutions for the subproblem. Otherwise, if none of these three cases occur, the node becomes part of the set of active nodes since the corresponding subproblem may still contain better solutions than the currently best known solution.

The search terminates if there are no active nodes left. The currently best known solution at this point is the provable optimal solution to the original problem since there are no subproblems left which could contain a better solution and the union of their disjoint search spaces equals the search space of the original problem.

An exemplary illustration for branching in the case of the application to booster stations is given in Fig. 7. The procedure starts from the root node \(N_0\) with initial best known solution \(z_{best}\) resulting from using the objective value of the solution produced by Simulated Annealing \(z_{SA}\). Branching is performed on the buy-variables of the conflicting pumps as described above, here represented by \(b_{Px}\). The node indices indicate the sequence of the node creation. Furthermore, the example includes all three termination criteria: the solution of the relaxation is also a valid solution for the original problem (\(N_3\), \(N_7\)), the subproblem is infeasible (\(N_4\), \(N_8\)) or the bound obtained by the relaxation \(\underline{z}_x\) is worse than the currently best found solution (\(N_5\)).

5.4 Application to booster stations

To validate the developed approach, test instances with a realistic character were designed. For this, the application example of so-called booster stations is used.

A booster station, also referred to as pressure booster system, is a network of either one type or different types of typically two to six single rotary pumps. A main field of application is the supply of whole buildings or higher floors, especially in high-rise buildings, with drinking water if the supply pressure provided by the water company is not high enough to satisfy the demand at all times. Typically, a distinction between three different system concepts is made. These concepts are booster stations with cascade control, with continuously variable speed control of one pump and with continuously variable speed control of all pumps. In this paper, we concentrate on the third concept, booster stations with continuously variable speed control of all pumps. For this concept, the number of active pumps as well as their speed depends on the required volume flow. Because of the continuously variable speed control of all pumps, a very constant inlet pressure occurs and it is possible to compensate high supply pressure fluctuations even if a malfunction occurs or a pump is failing. There is no sudden pressure increase because the other pumps can step in. Furthermore, we focus on a connection concept in which the booster station is connected to the water supply directly and no discharge sided pressure vessels are used. If necessary, so-called normal zones are implemented. These can be served by the supply pressure itself and are therefore not connected to the booster station. This can be used to avoid overpressure for lower floors. For all other floors overpressure is avoided by installing reducing valves if necessary.

A booster station system primarily consists of four types of components: pumps, pipes, pressure reducers and valves. Furthermore, each system has at least one source and one sink. In this paper, we focus on the pumps of booster stations and consider the other components implicitly. Hence, the presentation is simplified to a switchable interconnection of pumps which form a connected network. The relevant physical variables are: the volume flow \(\dot{Q}\) through the pumps, the pressure head \(\varDelta H\) generated by the pumps, their power consumption P and their rotational speed n.

All calculations are based on DIN 1988-300 (2012) and DIN 1988-500 (2011). Furthermore, the planning horizon was set to 10 years with assumed mean energy costs of 0.30 Euro per kWh.

To generate the test instances, different characteristics were varied and combined:

-

the height and usable area of the buildings

-

the intended use of the building with the corresponding load profile

-

the conditioning of hot water

-

the available pump kit

This results in 24 different instances. The names of the instances are derived from the abbreviations for the respective characteristics. In the following, these characteristics are specified.

Buildings: Two different fictional buildings are used. Both are high-rise buildings but vary in two characteristics: The first building (B15) is 15 floors high and each floor has a usable area of 350 sq. m. The second building (B10) is 10 floors high and has a usable area of 700 sq. m for each floor. This means that different pressure increases and maximum volume flows are required as the building’s height and usable area effect the pressure losses and demanded volume flows.

Intended use: The buildings are either used as a hypothetical hospital (H), a residential (R) or an office building (O). All usage types differ regarding their furnishing and consumption behavior. Hence, different maximum volume flows, pressure losses and load profiles occur. Depending on the usage four or five load scenarios are distinguished.

Hot water conditioning: The conditioning of hot water either occurs in so-called centralized storage water heaters (C) or decentralized group water heaters (D). These concepts result in different pressure losses along the piping.

Available pump kit: For each test instance one of two disjoint pump kits with five pumps each is available. All of them are speed-controlled single rotary pumps and taken from the Wilo-Economy MHIEFootnote 5 model series, see Fig. 8. The first kit includes the types from 203 to 403 of the model series (1) and the second kit the types from 404 to 1602 (2) with different prices and characteristics.

As a summary, Table 2 shows the peak loads for the different test instances in terms of the maximum volume flow \(\dot{V}^{max}\) and necessary pressure head \(\varDelta H\). Note that there are always two test instances for each of the 12 entries since they are used with two different pump kits. For the partial loads, which depend on the considered building type, Table 3 shows the different scenarios with the relative time shares F of the operational lifespan for which these scenarios are expected to occur and the associated relative volumes flows \(\dot{V}/\dot{V}^{max}\).

5.5 Computational study

In order to validate the developed approach, we conducted a computational study using the 24 test instances introduced in Sect. 5.4. All calculations were performed on a MacBook Pro Early 2015 with a 2.7 GHz Intel Core i5 and 8 GB 1867 MHz DDR3 memory, using CPLEX Optimization Studio 12.6 as MILP solver.

5.5.1 Solutions

In this section, the quality of the solutions found by the primal and dual heuristics is presented.

Simulated Annealing: Table 4 shows a summary of the performance for the presented implementation of Simulated Annealing in all test instances. The best solution found by Simulated Annealing is represented by \(z_{SA}\). The lower bound \(\underline{z}\) is calculated using the dual heuristic and the optimal solution \(z^*\) is obtained via Branch-and-Bound. The relative gap between the solution of Simulated Annealing and the lower bound \(gap_{\underline{z}}\) is defined as \((z_{SA}-\underline{z})/\underline{z}\). The relative gap between the best solution obtained by Simulated Annealing and the actual optimal solution \(gap_{z^*}\) is defined as \((z_{SA} - z^*) / z^*\).

The mean value of \(gap_{\underline{z}}\) for all test instances was \(9.27\%\) with a standard deviation of \(6.37 \%\). In 14 out of 24 cases the optimal solution was found by the implemented Simulated Annealing algorithm. The mean value for \(gap_{z^*}\) was \(0.69 \%\) with a standard deviation of \(1.08 \%\). However, if the optimal solution was not found, the mean value of \(gap_{z^*}\) was \(1.65 \%\) with a standard deviation of \(1.1\%\).

Lower bounds: Furthermore, the lower bounds were compared to the optimal solution. Table 5 summarizes the results for all test instances. Again, \(\underline{z}\) is the dual bound and \(z^*\) is the optimal solution obtained by Branch-and-Bound. The relative gap between the initial dual bound and the optimal solution, denoted by gap, is defined as \((z^*-\underline{z})/z^*\). The mean value of gap was \(7.45 \%\) with a standard deviation of \(5.23 \%\). The maximum of gap was \(19.92 \%\), while the minimum was only \(0.54 \%\).

5.5.2 Runtime

In this section, the runtimes of all three procedures are presented. It should be noted that the runtime of the Branch-and-Bound framework includes the runtime of Simulated Annealing as it generates the starting solution for the procedure. An overview of the runtimes for all test instances for Simulated Annealing (SA), the dual heuristic to generate initial lower bounds (LB) and Branch-and-Bound (B&B) is given in Table 6.

Simulated Annealing: The Simulated Annealing algorithm took on average 475 s to terminate. High deviations occurred. The maximum runtime was 2412 s, while the minimum runtime was only 85 s. This results from the fact that the MILP solver needs much more time to solve the operation problem if the created neighborhood is large in terms of many bought components.

Lower bounds: Generating lower bounds took on average 661 s. The longest runtime was 1582 s, while the shortest runtime was only 208 s. Note that in most cases this was comparable to the time the Simulated Annealing algorithm took to terminate. Hence, this circumstance allows a timely examination of a solution found by Simulated Annealing in practice.

Branch-and-Bound: The average runtime for generating optimal solutions was 9969 s. The maximum runtime was 21,473 s and the minimum runtime only 4148 s. If the initial upper bound found by Simulated Annealing was already the optimal solution, the average runtime was 8804 s and therefore \(31.75 \%\) faster than in the opposite case where the average runtime was 11,599 s.

6 Resilient system design (Tile 2.1)

As an extension to the approach presented above one can enhance the resilience of technical systems by adding possible breakdown scenarios. The concept of resilience is of great interest since it cannot only be applied to control uncertainty during the design phase, but it is also applicable for the system’s operation. Instead of designing systems that are robust with respect to specific single ‘what-if’ assumptions made beforehand during the design phase, resilient system design aims at building systems that perform ‘no matter what’ (Altherr et al. 2018a). In this context, resilience of a technical system is the ability to overcome minor failures and thus to avoid a complete breakdown of its vital functions. A possible failure of the system’s components is one critical case the system designer should keep in mind.

In this context, optimization under uncertainty can be used in order to describe and increase resilience of technical systems (Altherr et al. 2018a). Prominent solution paradigms for optimization under uncertainty are, inter alia, Stochastic Programming (Birge and Louveaux 2011), Robust Optimization (Ben-Tal et al. 2009), Dynamic Programming (Bellman 2003) and Sampling (Gupta et al. 2004). In the early 2000s the idea of universally quantified variables, as they are used in Quantified Constraint Satisfaction Problems (Gerber et al. 1995), was picked up again (Subramani 2004)—coining the term Quantified Integer Program (QIP)—and further examined (Ederer et al. 2011; Lorenz et al. 2010). QIP gives the opportunity to combine traditional Linear Programming formulations with some uncertainty bits. Hence, a solution of a QIP is a strategy for assigning existentially quantified variables such that some linear constraint system is fulfilled. By adding a minmax objective function one must further find the best strategy (Ederer et al. 2011).

In this spirit, for our contribution to the design of more resilient technical systems, we consider the following special case: Starting from a valid network configuration \((G,S_G,T_G)\) that is able to satisfy the desired loads of any scenario \(i \in S\), we are allowed to add some additional components to make the system more resilient against breakdowns.

More concrete, we define \(I := E\) as the set of initial components, A as the set of additional components and try to find a subset \(A' \subseteq A\) such that \(G' := ((V,I \cup A'),S_G,T_G)\) fulfills resilience in the following sense: For each scenario \(i \in S\) it has to be ensured that if a single component \(e \in I\) is affected by a breakdown, a valid combination in \(G'' := ((V,(I \cup A') \backslash \{e\}),S_G,T_G)\) must exist such that the demanded load in scenario i can always be satisfied.

The set of additionally bought components \(A'\) must be selected such that the lifetime costs of the resulting system, i.e. investment costs and operational costs, are minimal. Hence, a multistage optimization problem arises: Design or adapt the system (stage 1) such that for each anticipated load scenario (stage 2) we can find the optimal operation point (stage 3) and ensure for each breakdown case (stage 4) that the functionality of the system is ensured (stage 5).

Since the system design process can be conducted in several consecutive steps the arising problem is a multistage optimization problem. Hence, we make use of a Quantified Mixed-Integer Linear Program (QMIP) to find optimal system configurations with increased resilience.

It should be noted that although applied to booster stations in this paper, the approach can be abstracted for a variety of technical system using the general representation of so-called process networks as shown in Hartisch et al. (2018). Furthermore, similar to the concept of K-resilience examined in Altherr et al. (2019), the simultaneous breakdown of multiple pumps can be considered if necessary.

6.1 Quantified Programming

Quantified Mixed-Integer Linear Programming is a direct and formal extension to Mixed-Integer Linear Programming utilizing uncertainty bits. In QMIPs the variables are ordered explicitly and they are quantified either existentially or universally resulting in a multistage optimization problem under uncertainty:

Let there be a vector of n variables \(x = (x_1, \ldots , x_n)^\top \in \mathbb {Q}^n\), lower and upper bounds \(l \in \mathbb {Q}^n\) and \(u \in \mathbb {Q}^n\) with \(l_i \le x_i \le u_i\), a coefficient matrix \(A \in \mathbb {Q}^{m \times n}\), a right-hand side vector \(b \in \mathbb {Q}^m\) and a vector of quantifiers \(\mathcal {Q} = (\mathcal {Q}_1, \ldots , \mathcal {Q}_n)^\top \in \{\forall , \exists \}^n\). Let \(I \subset \{1,\ldots ,n\}\) be the set of integer variables and \(\mathcal {L}_i = \{x \in \mathbb {Q} \mid (l_i \le x \le u_i) \wedge (i \in I \Rightarrow x \in \mathbb {Z})\}\) the domain of variable \(x_i\) and let \(\mathcal {L}=\{x \in \mathbb {Q}^n \mid x_i \in \mathcal {L}_i\}\) be the domain of the entire variable vector. Let the term \(\mathcal {Q} \circ x \in \mathcal {L}\) with the component wise binding operator \(\circ\) denote the quantification vector \((\mathcal {Q}_1 x_1 \in \mathcal {L}_1, \ldots , \mathcal {Q}_n x_n \in \mathcal {L}_n)^\top\) such that every quantifier \(\mathcal {Q}_i\) binds the variable \(x_i\) to its domain \(\mathcal {L}_i\). We call \((\mathcal {Q},l,u,A,b)\) with

a Quantified Mixed-Integer Linear Program (QMIP).

Note that the objective function is actually a minmax function alternating according to the quantifier sequence: Existential variables are set with the goal of minimizing the objective value while obeying the constraint system, whereas universal variables are aiming at a maximized objective value. For more details, we refer to Wolf (2015). QMIPs allow a straightforward modeling of multistage optimization problems and the domain of universal variables might be modeled explicitly using a second linear constraint system (Hartisch et al. 2016).

Solutions of QMIPs are strategies for assigning existentially quantified variables such that the linear constraint system \(Ax \le b\) is fulfilled. One way to deal with quantified programs is to build the corresponding deterministic equivalent program (DEP) (Wolf 2015; Wets 1974) and to solve the resulting MILP using standard MILP solvers. Further, a novel open-source solver for QMIPs is available performing an enhanced game tree search (Ederer et al. 2017).

6.2 Application to booster stations

In order to build on the results of Sect. 5, the application example of generating cost-efficient resilient booster stations out of non-resilient ones is examined here. The requirements for the considered case of resilient booster stations are manifested in DIN 1988-500 (2011). It states that booster stations must have at least one stand-by pump. If one pump breaks down, the system must be able to satisfy the peak flow and thus all demanded loads at any time. In contrast to related contributions (cf. Altherr et al. 2019), a further requirement mentioned in DIN 1988-500 is considered. This requirement states that in order to avoid stagnation water, an automatic, cyclic interchange between all pumps including the stand-by pumps is necessary. Therefore, all pumps have to operate at least once in 24 h. This additional requirement is strongly connected to the cost-efficiency goal.

In this example the relevant costs for a booster station are the investment costs for the stand-by pumps as well as the operational costs of the overall system over a predefined lifespan. As the breakdown cases are expected to only take place in a small amount of time compared to the lifespan, due to short repair times, they do not significantly affect the operational costs of the system and are therefore neglected. However, the requirement for all pumps to operate once in 24 h, i.e. in at least one of the daily repeating load scenarios, massively affects the operational costs. Given this circumstance, it is not a trivial task to determine by which stand-by pumps the system should be extended in order to obtain a cost-optimal system.

Theoretically, a set of pumps or entire subsystems can be connected either in parallel or in series. However, according to today’s practice only parallel connections are favorable from a technical point of view (Betz 2017). As mentioned in Pöttgen and Pelz (2016) two major reasons exist for considering parallel arrangements: Firstly, heavy part loads caused by the deactivation of single pumps are avoided. Secondly, in case of failure of a single pump the remaining system components are not directly affected and retain their full functionality. Although serial arrangements are generally conceivable, the resulting control strategies between two operating points are very difficult to realize in practice. We make use of this circumstance to obtain significantly smaller pump networks by using only parallel connections to demonstrate the approach. Figure 9 shows such a network with four parallel pumps.

6.3 Optimization model