Abstract

The advantages of multidisciplinary design are well understood, but not yet fully adopted by the industry where methods should be both fast and reliable. For such problems, minimum computational cost while providing global optimality and extensive design information at an early conceptual stage is desired. However, such a complex problem consisting of various objectives and interacting disciplines is associated with a challenging design space. This provides a large pool of possible designs, requiring an efficient exploration scheme with the ability to provide sufficient feedback early in the design process. This paper demonstrates a generalized optimization framework with rapid design space exploration capabilities in which a Multifidelity approach is directly adjusted to the emerging needs of the design. The methodology is developed to be easily applicable and efficient in computationally expensive multidisciplinary problems. To accelerate such a demanding process, Surrogate Based Optimization methods in the form of both Radial Basis Function and Kriging models are employed. In particular, a modification of the standard Kriging approach to account for Multifidelity data inputs is proposed, aiming to increasing its accuracy without increasing its training cost. The surrogate optimization problem is solved by a Particle Swarm Optimization algorithm and two constraint handling methods are implemented. The surrogate model modifications are visually demonstrated in a 1D and 2D test case, while the Rosenbrock and Sellar functions are used to examine the scalability and adaptability behaviour of the method. Our particular Multiobjective formulation is demonstrated in the common RAE2822 airfoil design problem. In this paper, the framework assessment focuses on our infill sampling approach in terms of design and objective space exploration for a given computational cost.

Similar content being viewed by others

1 Introduction

Following several decades of continuous development, the aerodynamic design of conventional aircraft configurations has matured; seemingly reaching a plateau. Development is achieved through many small and slow disciplinary improvements while—still as a typical industrial practice—a leading discipline dictates the design of the rest of them. However, this approach results to an inferior performance in terms of efficiency of the subsystems (due to the dominant discipline constraints) but in terms of overall system performance as well. Surpassing the current performance plateau requires a re-definition of the way conceptual and preliminary design is performed in order to take advantage of the synergy between the disciplines. An improvement is achieved when the subsystems are developed in parallel under a multidisciplinary formulation, guided by one or multiple global merits. A demonstration of this is found in the superiority of aerostructural wing design over aerodynamic wing design Chittick and Martins (2009).

Problems like the aerostructural wing design can be quite complex and costly, providing the application ground for techniques like surrogate modelling Liem et al. (2015). Surrogate modelling is typically used to decrease the computational expenses associated with a High Fidelity (HF) analysis, or/and guide the design Kipouros et al. (2007) as in Surrogate Based Optimization (SBO) problems. In this sense, LeGresley LeGresley and Alonso (2000) used a POD method to approximate the pressure distribution within an airfoil design application, while Mauery and Korte Simpson et al. (2001) used a Kriging model for aerospike nozzle design. Parr et al. (2012) used Kriging to guide the design of a constrained satellite boom and a wingbox. A combination of metamodelling and adjoint methods that provide reasonably inexpensive sensitivity information, allows the efficient development of Gradient Enhanced Kriging (GEK) or Co-Kriging approximations Chung and Alonso (2002).

The high potential of the metamodelling-based approach led to an effort of improving the accuracy of the established methods, as in the case of the Radial Basis Functions (RBF) Mullur and Messac (2005) and Co-Kriging Han et al. (2012). An improvement can be attained by dividing the design space to create local metamodels. Following this idea, Liem Liem et al. (2015) applied the concept of Mixture of Experts Jacobs et al. (1991); Masoudnia and Ebrahimpour (2014)(MoE) using Kriging, GEK and RBF, trained locally in different design space subregions defined by clustering algorithms. Nelson et al. (2007) proposed their Kriging model that uses Low Fidelity (LF) and High Fidelity simulations within a TreedGaussianProcess partitioning. The use of Multifidelity (MF) numerical tools is another promising way of accelerating a complicated optimization problem. This idea has been extensively explored in recent years and good examples of formulations have been developed (Bandler et al. 1994; Jarrett and Ghisu 2012; Alexandrov et al. 2001, 1998) and applied Choi et al. (2008); Chung and Alonso (2002); Rumpfkeil and Beran (2017). Multifidelity methods are of course not applied only within a Surrogate Based Optimization framework and can be successfully integrated in Gradient-Based methodologies as well. An example of this can be found in the work by Bryson and Rumpfkeil (2018) where Multifidelity results are used to approximate the Hessian matrix within a BFGS Trust Region (TR) based optimizer. Other applications include Multidisciplinary optimization Rodríguez et al. (2001), aircraft stability Park et al. (2017) and uncertainty optimization which is closely linked to Multifidelity techniques and surrogate modelling Peherstorfer et al. (2018); Chaudhuri et al. (2018).

In this paper, we present a methodology originally developed to cover the needs of single and multiobjective multidisciplinary optimization Kontogiannis et al. (2017). However, thanks to its generalized formulation, its application range extends well beyond the limits of aerospace design and it should in fact prove efficient to any design process associated with computationaly demanding performance analysis. In our aerospace design applications, as in any industrial application, the potential hidden in multidisciplinary interaction is maximized once the optimization framework is applied early in the design stage. As such, extensive conceptual design space exploration is offered while providing reliable engineering feedback to guide the rest of the design and fine-tuning optimization studies. To satisfy such exploration and global optimality requirements, our tool uses the Expected Improvement (EI) criterion Jones (2001). Under this SBO plan, the trained model is not used directly to find the minimum since this would be the optimum of a poor representation of the actual design space. Instead, EI indicates the design point to be sampled next, given its value and uncertainty predictions, therfore guiding the optimization. The EI method requires the use of a Gaussian Process model like Kriging or Co-Kriging. Here, a modification of Kriging was developed to accommodate MF data points. Although Co-Kriging can be used as an MF version of Kriging, it was avoided due to the complexity and cost increase associated with the tuning of the additional hyperparameters. A use of a global optimizer is necessary for the solution of the EI optimization subproblem, as it is acknowledged that the EI space is multimodal Jones et al. (1998). Two solutions of different fidelities are defined for the infill analysis. Selecting the appropriate analysis method uses a criterion which depends on the design space representation and can be directly adjusted by the engineer. As a result, this method can be used in different stages of the design focusing either in very rapid conceptual tradeoff studies or in a more detailed design study.

Before proceeding to applying the method to aerostructural design problems, the performance and characteristics of our methodology are examined. In this paper, simple 1D and 2D test cases are used to visually demonstrate the fundamental developments of the freamework, while the scalable Rosenbrock function Rosenbrock (1960) provides an overview on the scalability attributes of the approach. The formulation of the Multidisciplinary Design Optimization (MDO) problem as implemented in our methodology, is assessed using the popular Sellar function MDO test case Sellar et al. (1996). As a typical industrial design example, the RAE2822 case is being used to test our Multiobjective (MO) formulation, with a focus on our infill sampling approach.

In Sects. 2 and 3 the methodology of the framework is described in more detail: information regarding the surrogate generation and its modification into a MF SBO plan, details regarding the suboptimization process, as well as the handling of the constraints are provided. Section 4 displays the framework through well-known 1D and 2D test cases used to validate the work. The methodology is applied to the Rosenbrock and the Sellar MDO test function in Sect. 5, which describes the case set up formulations and the respective results. Section 6 reviews the impact of our parallel infill sampling approach in a MO RAE2822 airfoil problem and a summary outlining the conclusions from this work as well as the future work is provided in Section 7.

2 Methodology

The proposed methodology constitutes the core of the optimization framework aiming towards MDO problems.Footnote 1 Since multiple disciplines are typically associated with conflicting objective functions, it is developed so that it can handle MO problems.

2.1 Surrogate modelling and SBO in an MF context

Recent state of the art MF approaches suggest using the results from LF and HF tools, \(y_{LF}\) and \(y_{HF}\) respectively, to follow the relationship below Forrester and Keane (2009),

A typical application of this decomposition within an SBO framework Jones (2001) is the trust region Demange et al. (2016a, b), Jarrett and Ghisu (2015) approach involving the generation of a locally accurate error surrogate (typically generated by an RBF model) to correct the LF value according to eq.1. However, such an optimization methodology is not appropriate for our needs for two main reasons. It does not offer the exploration characteristics required during a conceptual design stage study. Furthermore, it demands a high number of LF analyses as the LF tool is directly called by the optimizer during the suboptimization stage. Such an approach, even for low cost LF tools, becomes prohibitively expensive for an MO MDO study. To minimize the suboptimization costs we are using metamodels as their occasional training is cheaper—for a reasonable number of training data points. The optimizer then calls a surrogate model predictor which is of course cheaper than an LF tool. Therefore, in the global SBO methodology that we present, MF information is directly implemented in the surrogate model generated. A popular metamodelling technique for MF SBO is Co-Kriging Forrester et al. (2007) which, unlike Kriging, uses both LF and HF point correlation to compose a unified MF covariance matrix. However, such an approach includes the tuning of additional hyperparameters, increasing the likelihood estimation cost quadratically to the order of the matrix. We therefore consider that Co-Kriging covariance matrix operations make it too expensive for real industrial MDO applications at which we aim.

Instead, to reduce computational expenses while aiming for global HF optimality, we propose a novel MF modified Kriging based model (MF modKriging). The computationally efficient RBF model cannot provide global exploration within an SBO framework Forrester et al. (2008). In the next paragraphs, we present how we modify ordinary Kriging so it can accommodate MF information and be used instead of the more expensive Co-Kriging model.

Throughout this work—as typical in most MF research efforts, only HF results are considered to be accurate within the numerical framework. LF simulations are inaccurate, do not provide additional information Kennedy and O’Hagan (2000) and despite being efficiently used to guide the optimization process, they are associated with a non constant error \(e({\mathbf {x}})\) defined in Eq. 1. Therefore, to guide towards HF optima, our modified Kriging model should be an interpolation through HF points and a regression through LF ones; according to the associated error of the latter. This error is either estimated by a simple RBF Forrester and Keane (2009) or a Kriging model using Eq. 1, given a sampling of both LF and HF points. For this, the space filling Latin Hypercube Sampling (LHS) method with a Morris-Mitchell maximin approach Morris and Mitchell (1995); Johnson et al. (1990) is used. The sampling requires m points analyzed only by the LF tool at \({\mathbf {x}}_{LF}\), and n new points analyzed with both the LF and HF tool at \({\mathbf {x}}_{HF}\). As such, we define the complete objective value vector \({\mathbf {y}}\), and the Error vector \({\mathbf {e}}\) consisting of n data, derived by Eq. 1

If RBF is used to model the Objective Function (OF) error, then in matrix form we have,

When Gaussian kernel functions are used, the Gram matrix \({\mathbf {R}}\) consists of elements of the form,

where the smoothness parameter p is set to \(p=2\). Alternatively, to the author’s experience Mátern functions provide an accurate and robust choice [40]. The parameters vector \(\varvec{\alpha }\) is found by a Cholesky decomposition and back-substitution. The error associated with the use of the LF tool can be now calculated in any design point. Below, we show how the error estimation is used in MF modKriging. Initially, consider the standard ordinary Kriging, that uses the correlation matrix,

The elements of this matrix estimate the correlation of the data points by modelling the function as a Gaussian process:

Here, d is the number of the design variables and \(\theta _l\) and \(p_l\) are the shape and smoothing parameters respectively, that need to be defined.

We generate the Kriging predictor by optimizing the set of \(\mu\), \(\sigma ^2\), \(\theta _l\), \(p_l\) parameters. The mean \(\mu\) and variance \(\sigma ^2\) are easily optimized in a deterministic manner Forrester et al. (2008). Finding the optimum shape and smooth parameter (\(\hat{\theta _l}\) and \(\hat{p_l}\)) however, requires a stochastic optimization process aiming at maximizing the Likelihood Estimation function \(\lambda\) given by,

Therefore, the problem of optimizing the hyperparameters is defined by,

Finally, the Kriging predictor takes the form,

where \(\varvec{\psi }\) is the correlation vector associated with the point to be predicted.

The Kriging predictor is not used directly since we apply the Kriging model to provide the next sampling point under the EI Jones (2001) plan. This approach can lead to global optimality without a prohibitively costly design space exploration and requires information regarding the metamodel’s Mean Squared Error (MSE). This is given by,

MSE is zero in training data points and it increases between them due to value uncertainty. The above formulation is used to construct an estimator of the expected improvement of the objective function in any design space point, given the current minimum value \(y_{\min }\). In any point where \({\hat{s}}({\mathbf {x}}) \ne 0\), this is expressed as,

where \(\Phi\) is the cumulative distribution function,

and \(\phi\) is the probability density function,

In Eq. 11, erf is the error function expressed as,

The infill point \(x^*\)\(\in D \subset R^d\) is then the solution of the suboptimization problem,

So far, we have presented the ordinary Kriging, used extensively in the literature. However, this cannot accommodate data resulting from MF analyses. Our MF Kriging modification uses a simple way to superimpose LF and HF information within the model. Specifically, we want to include this MF information in the Kriging predictor (Eq. 15) and MSE (Eq. 17) expression, therefore transforming the EI into an MF EI.Footnote 2

For MF modKriging, we define the correlation matrix to use only the LF training data of our MF vector \({\mathbf {y}}\), so that:

The Kriging predictor \({\hat{y}}\) now takes the following form:

where \({\hat{\mu }}\) is the Kriging optimized mean value calculated as,

The HF information is recovered by \(e({{\mathbf {x}}})\), which is a surrogate prediction of the LF tool error, defined by Eq. 1. Therefore, Kriging predictor now interpolates HF points and fits LF ones depending on their predicted error. However, since the EI estimation is also dependent on the MSE, complete HF information recovery demands the alteration of the MSE equation as:

where the model variation \(\sigma ^2\) is given by,

with n being the number of sampling data points. In Eq. 17, the \(\hat{s^2_e}\) term is the MSE of the error metamodel, essentially expressing the uncertainty that arises from the LF tool correction model. The MSE quantity is typically provided by Kriging models through Gaussian based correlation matrix operations, as above. Therefore, \(\hat{s^2_e}\) is formulated in a straightforward way if a Kriging model is used for the LF error prediction. However, as it is frequently stated in this paper, the optimization framework should have the minimum computational costs. As such, we often use RBF for the LF error prediction. In this case, by using a Gaussian kernel we can relate the Gram matrix to the Correlation matrix used in Kriging models. By comparing Eqs. 4 and 6 we observe that Gram matrix is identical to the Correlation matrix in the special case that: (1) we define the training data points to be correlated with each other in the same way for all design coordinates (isotropic model), and (2) this correlation coincides with the correlation defined in the Gaussian RBF kernel through the constant shape parameters \(\theta\) and p. Namely, for a case where \(\theta = \theta _1= \theta _2, \cdots \theta _d\) and \(p=2\), the following holds,

This essentially implies that the RBF model used to express the error of the LF tool is equivalent to a Kriging model which has the aforementioned correlation characteristics. Therefore, we can use it to predict a distribution of the MSE, with exactly the same “assumptions” used in an RBF interpolation model (that is, isotropic shape parameter). As such, \(\hat{s^2_e}\) can now be calculated as,

where the variance of the error model \(\sigma _{e}^2\) can be calculated using eqs.17,18, by setting \({\varvec{\Psi }} = {\mathbf {R}}\) and \(\varvec{\psi }=\varvec{r}\).

In the generic case where a Kriging model has \(\theta _1 \ne \theta _2, \ldots \theta _d\), its predicted MSE distribution will divert from the one similarly predicted by RBF. Nevertheless, such a prediction disagreement is of exactly the same nature as the disagreement between an RBF and a trained Kriging model value predictor.

The importance of using Eqs. 17 and 20 lies in the fact that they restore a zero MSE value to the HF data points and a non-zero finite value to the LF ones. Apart from performing regression on the LF data according to their predicted errors—and interpolation through HF data—the method can now distinguish between the uncertainty characteristics of the LF/HF points in terms of MSE, and eventually EI (which is our value of interest). The loss of LF/HF data correlation (as exploited in Co-Kriging) is compensated by the reduction of the overall model training costs.

2.2 MF infill sampling plan

As shown, our use of existing MF tools deviates from other popular methods like trust region and space mapping (Alexandrov et al. 1998; Leifsson and Koziel 2010). Furthermore, contrary to typical EI practice, our infill points are not always sampled using the HF tool. Here, we are using a straightforward plan which allows the engineer to adapt the method to his needs and to the stage of the design process. The solution of the suboptimization problem leads to a potential LF or HF infill analysis. If the error predicted on the infill point is lower than an error threshold defined by the engineer, then the LF tool is considered to be “accurate enough” and a LF analysis is performed. Hence, computational expenses are avoided for points for which the LF tool is reliable enough for the purpose of the design stage, as LF and HF tools would show no significant difference. Nevertheless, when a predefined number of consecutive LF infill samplings is surpassed, both HF and LF infill sampling is performed. It is critical to ensure that the error estimation model is updated frequently enough to allow for reliable predictions in all interesting areas. Failure in updating the error surrogate would lead to an excessive number of LF infill samplings, as the predicted error may be very low in unexplored areas.Footnote 3 Furthermore, bad error prediction significantly deteriorates the reliability of the y predictor, tricking EI into exploring fictionally promising points.Footnote 4

This method is appropriate for an industrial environment due to its error and iteration limits, which allow the engineer to use it either for a conceptual or a more detailed design. High error threshold and iterations limits lead to a quick optimum tradeoff study in a conceptual design stage. The inverse would be preferable in later design stage studies.

2.3 Optimizer

In our implementation, for an MO problem involving p objectives the suboptimization problem is expressed explicitly as,

Therefore, a pareto front of this cheap MO optimization problem—which is solved by standard MO optimizers as discussed in the next paragraph— provides k infill points which can be then sampled in parallel. Obtaining multiple infill points in a such a cheap manner more than compensates any potential—limited—expenses due to the MO search. Such an explicit MO suboptimization process approach is not typical in MO SBO methods Forrester and Keane (2009); Kontogiannis (2018), however we have found that it is this explicit formulation that guarantees a wide pareto front, while offering the advantage of parallel infill analyses.

For MO cases, the suboptimization problem is solved by our own Multi Objective Particle Swarm Optimization (MOPSO) code.Footnote 5 This is an MO version Alvarez-Benitez et al. (2005) of the PSO algorithm which when applied in the suboptimization process, provides the dominant points based on the maximum EI of each objective. The leader of the swarm is selected using the concept of pareto dominance classifying it as a Pareto Dominance MOPSO type (see Durillo et al. (2009) for an overview of other approaches). In our implementation, a selection method is added to the ones already proposed in Alvarez-Benitez et al. (2005), able to provide a point to promote diversity and guarantee a wide and uniformly distributed EI pareto front. The optimizer was validated using the Fonseca and Fleming function to ensure pareto uniformity and global optimality. The complete optimization methodology is summarized in Fig. 1.

3 Constraints

The effect that the constraint handling methods have to the methodology’s convergence behaviour might be critical for industrial applications. Such design problems are typically dominated by constraints, and their computational cost demands a good understanding on the way convergence and feasibility is achieved. This is especially the case in optimization studies with limited budget where both final result but also feasibility during the optimization convergence is desired.

In this sense, two methods have been implemented to handle the constraintsFootnote 6 within the suboptimization process, being summarised in Fig. 5. The first approach falls in the general category of penalty methods, specifically applied within an SBO environment. A penalty is applied to points for which the surrogate model estimation of the constraint value violates the constraint limit. A penalty can be applied in various forms Forrester and Keane (2009), but experience has showed that penalizing the point by simply setting EI to zero is effective. This way, the suboptimization process is steered to design vectors that are feasible according to the current metamodel predictions. On the downside, this method depends a lot on the initial constraint surrogate accuracy. Suppose a simple RBF or Kriging constraint model which is not accurate due to limited or badly distributed training data points: the SBO plan will gradually sample points to the area currently believed to contain the optimum. In this case, unexplored areas will be under-sampled leading to inaccurate constraint estimations with no mechanism enforcing a sampling in the unexplored region.Footnote 7 This condition will sustain itself: during the suboptimization process, points that should not be penalized might be penalized (or the opposite) and a potentially promising yet unexplored design space region will not be visited. Therefore, the local knowledge of the constraint function in this area will not be improved. This increases the initial sampling requirements, which translates to cost.

The second approach requires a Kriging surrogate for the constraint function, allowing the calculation of probability of improvement (PI) within the suboptimization process. By using the constraint limit instead of the current minimum value in the probability of improvement formulation, one can create the probability of feasibility (PF) Forrester and Keane (2009). Therefore, the infill plan can be altered to create the Feasible Expected Improvement Criterion (FEIC) simply by multiplying PF with EI as shown in Eq. 21. Hereafter, to avoid any abbreviation-related confusion, this method is simply referred to as “Feasibility”.

where the probability of feasibility for an inequality constraint problem of \(g({\mathbf {x}}) \le g_{c}\) and the MF-corrected MSE \(\hat{s^2}\) is expressed as,

The infill point is the one maximizing this combined criterion and the improvement is steered towards regions that are predicted to be feasible and promising. The drawback discussed in the penalty method approach is not present in the feasibility method, as PF uses information from the MSE of the model itself. This is a mechanism that enforces the sampling of unexplored regions, in which the constraint function is not sufficiently accurate. The result is a constraint metamodel that not only favours global search, but—being more accurate—also leads to feasible infill sampling designs during the optimization process. This approach has shown to be robust and effective, however there is a cost associated with the mandatory generation of a Kriging model for the constraint.

It should be also noted, that in the cases where the constraint values result from MF tools, the constraint metamodel should be able to accommodate MF data. Therefore, MF modRBFFootnote 8 or MF modKriging should be used instead RBF or ordinary Kriging.

4 Framework demonstration

The presented methodology is assessed and fine-tuned on a 1D and 2D test case commonly presented in the literature. The 1D case demonstrates the attributes of the modified surrogate model. The 2D case uses the Branin function to illustrate the MF modKriging ability in providing global optimality under the selected SBO plan even in a sparse sampling.

4.1 1D - Test case

The simple 1D problem is described by the following analytical function, which acts as the HF function we want to approximate.

This test function allows us to visualize the effect of the error model and its shape parameter near the LF points. We also illustrate how the EI distribution changes when the MF modified Kriging is used. Fig. 3a, b simply showcase how ordinary Kriging cannot be used when MF tools are being used; the proposed modification leads to a more accurate representation of the design space as well as a more “efficient” EI distribution. Evidently, the ordinary Kriging cannot distinguish between LF and HF points since no MF information is provided, hence interpolating both. In our modified version however, only the HF points are interpolated while the LF points are fitted, providing a better estimation of the design space. The effect of the shape parameter is also apparent in that figure. For too low \(\theta\) values, the kernel function tends to be very wide. In these cases, the error information is highly diffused and may decrease the predictor accuracy. However, in high dimensionality problems, this is rarely the case as the design points are not quite dense. Therefore, the usage of even very low shape parameters in the error model would lead to an impact radius of the basis function which will not be significantly larger than the average euclidean distance between the design points in the multidimensional space (examples of radial basis functions definitions can be found in [40]). In fact, the only condition under which a local error prediction would significantly affect and reduce the value predictor accuracy (through the linear superposition of Eq. 1) would be the the HF sampling is not being “space filling” enough.Footnote 9 Moreover, an appropriate \(\theta\) value can be a priori determined (or when Kriging is used for the error correction it is automatically calculated).

The LF values were defined by us so to correspond (percentage-wise) to errors of LF models typically observed in engineering design. Furthermore, to emphasize the error model effect, the LF and HF points were deliberately selected by us in an “unlucky” manner so that they both underestimate and overestimate the HF results in order to lead to a challenging non-monotone error distribution. In low x regions, the LF tool underestimates the true values but the opposite happens in high regions. Despite that, the surrogate exploits the error information successfully.

Figure 3b describes the improved EI as a function of the shape parameter. MF modKriging increases the dominance of the global optimum versus the local ones in this multimodal EI space. This accelerates the convergence of a global stochastic optimizer like the one employed in this framework. We explain the above statement by acknowledging the effect of the error MSE in the EI space. The objective value predictions affect the MSE estimation (as can be seen from Eq. 17). However, the same equation implies how the MSE also uses information inherent to the correlation matrix \(\Psi\). This is done through the parameters \(\theta _{LF}\), \(p_{LF}\) that help define the matrix, which uses only LF results and as such, the correlation matrix itself \(\Psi\) in MF modKriging does not include LF/HF correlation information. Therefore, information regarding the uncertainty of LF points could only be provided through the additional error MSE term as discussed in the previous section. This additional error MSE term includes the uncertainty of the error model itself (that is the model of the HF/LF value difference) in a similar fashion; through the corresponding correlation matrix. Hence, as intended, when the error MSE term is used in Eq. 17, the MSE of a LF point is no longer zero, since in reality the LF analysis—deterministic—is associated with an error. This corrected MSE of Eq. 17 corrects the EI space through Eq. 10, so that LF points have now a finite EI value as shown in Fig. 3b.

4.2 2D - Test case

4.2.1 Problem formulation

The performance of the MF modified Kriging model in optimization is assessed using the multimodal 2D Branin Forrester et al. (2008) function described by:

The above test function represents the LF tool, and features three global minima, an attribute which makes it appropriate for testing the convergence of the method. Its value is doubled so that it scales with the modified Branin function below, which we use as a HF tool:

This function features a wider global optimum area, allowing a direct comparison of how the design space topology affects the optimization convergence.

The LF analysis introduces multimodality which serves to showcase how the MF modified Kriging properly exploits LF data while avoiding getting stuck in a local minimum or an LF global minimum instead of the HF one. To demonstrate the methodology’s behaviour in high dimensionality problems with sparse sampling, a total of five HF and 16 LF points were used in our sampling. This analogy therefore aims to simulate a high dimensionality problem by using a ’training data (TD) over number of dimensions ratio’ similar to the ones found in such complex problems. Of course, it maintains our purpose of clear visualization, validation as well as low cost suitable for code development.

4.2.2 Kriging modification effect on design space prediction

The error model success in capturing the error trends is vital for the success of the methodology as well. Hence, Fig. 4 displays that even a simple RBF model using five Training Data (TD) suffices in predicting a smooth error distribution of accurate patterns. A close match between our metamodel trend and the OF analytical function is also observed. The LF information is effectively exploited so that the model representation is close to the analytical HF function, not displaying any LF associated minima.

4.2.3 Fine tuning the parameters of the methodology

Multiple optimization studies were performed to assess the methodology and the use of the error MSE term within the EI calculation as well as to fine-tune the subprocess and hyperparameters tuning optimizer details. Each case was executed 200 times to ensure reliability since factors like “sampling luck” had to be cancelled out. For clarity, fewer results are shown.

Tuning the Kriging hyperparameters requires a gradient free Toal et al. (2008) optimizer to locate the global maximum of the likelihood function. The required costs for a reasonably accurate Kriging representation are high but not prohibitive for small or even mid dimensionality problems. However, in a highly dimensional problem with many training data points, extensive exploration should be avoided as training becomes a significant cost of the SBO. Out of four pyOpt Perez et al. (2012) optimizers examined and the SciPy implementation of the Nelder-Mead Non-Linear Simplex algorithm (N-M), N-M and Mixed Integer Distributed Ant Colony Optimization (MIDACO) proved to be the most robust and efficient. Both methods were efficient in terms of computational expenses compared to other gradient free methods. Despite not being strictly speaking a global optimizer, the N-M algorithm was consistent in finding effective hypertuning solutions and given its slight cost advantage over MIDACO, it was selected for tuning the MF modKriging model.

The suboptimization process requires a global optimizer since the EI evaluation cost is negligible and the maximum EI point is sought in a multimodal space. It was found that the ALPSO and MIDACO algorithms were the most effective exploration algorithms leading to the best infill design points sharing similar computational expenses. For the remaining of this work, ALPSO is used.

Within the MF modified Kriging, HF information (which in industry is considered to be information from a later design stage) is introduced earlier in the design stage by the error model. This leads to a more efficient infill sampling process, and a faster convergence, confirmed by the findings of Fig. 2. Regardless of the initial objective function value (which depends on the “luck” of the initial sampling), the modified Kriging was proved to be more robust in the infill sampling process, providing promising design points consistently. MF modKriging using 5 HF/16 LF training points was more efficient than the MF method using ordinary Kriging with 21 HF training points. Referring again to Figs. 3b, 4 the optimizer knows more about the design space, has less uncertainty in some of its areas, reduces the respective MSE, which in turn makes the EI distribution more accurate. In most cases, as the one shown, the MF methodology equipped by MF modKriging could reach almost HF optimality in fewer infill iterations. Co-Kriging is similarly effective, having fewer but more drastic reductions in the OF. However, this simple 2D case is not appropriate to reliably compare it against MF modKriging since the major cost source of both methods lies in the tuning of the hyperparameters in higher dimensionality problems. More insight is provided in the next test case. The MF infill plan described previously leads to wider plateaus in convergence history as only a HF infill is accepted as a reliable improvement. Despite that, since the number of HF infill points is reduced, the total elapsed time is decreased even if the infill iterations required for optimality are increased.

The effect of the error MSE term is displayed in Fig. 5 as well. Evidently, introducing the “artificial” MSE term is not critical for the convergence of the method. However, if introduced it provides extra information regarding the uncertainty of the LF error correction model, leading to faster convergence.

5 Analytical test cases

Before progressing to aerostructural design applications, two analytical test cases are being used to provide the necessary feedback for the efficient implementation of the framework to such multidisciplinary problems. The first case is the Rosenbrock function which is useful in the development of an optimization methodology as:

-

In its original 2-Dimensional form, it provides a challenging problem with the optimum located in a narrow flat valley.

-

In its extended form, it can be used to examine the scalability characteristics of the optimization framework. This is especially important for a surrogate based optimization methodology, which is very sensitive to the dimensionality of the problem.

The second case is the Sellar MDO test function, which features the characteristics of a true multidisciplinary problem, involving global and local design variables, state variables and constraints. This makes it perfect for the development and assessment of different MDO formulations and their implementations in a new optimization methodology.

5.1 Framework scalability using Rosenbrock function

A multidisciplinary problem, such as the aerostructural design of a wing, is by definition associated with an increase in the number of design variables. The application of this optimization framework—conceptual design introducing preliminary design information—and most importantly the use of Surrogate Based Optimization (SBO) techniques, fundamentally limit the problem’s dimensionality. Therefore, before applying the method to an MDO problem directly, it is important to assess what can realistically be achieved through the method while maintaining its efficiency in its application range.

The extended Rosenbrock function is appropriate for this role due to its scalability characteristics and is thereafter used as the HF function:

Since the methodology requires multifidelity analyses, a LF “version” of this function needs to be defined. This should not change the main characteristics and trends of the original one but must be inaccurate in terms of value. This is achieved with the use of scaling coefficients.

The challenges of the Rosenbrock function are twofold:

-

It features a flat and narrow valley leading to the global optimum. The reduced objective functions values and the small function gradients makes it difficult for optimization methods to descend to the optimum point.

-

In high dimensions, the property of unimodality vanishes Shang and Qiu (2006), as local minima appear.

The scalability attribute of the framework is assessed by successively increasing the dimensionality of the extended Rosenbrock function, through varying N from \(N=2\) to \(N=64\). The quantification of the method’s scalability is based on the value of the optimum identified and the HF calls required.Footnote 10 Since this SBO approach is non-deterministic, the standard deviation of the required computational costs is also taken into account. Due to the non-deterministic elements of the method as well as its explorative behaviour, the accurate identification is not guaranteed, especially in high dimensions. Therefore, the method is assessed based on the number of HF calls required to reach a specific reduction ratio, given the maximum value of each dimensional case. For the needs of this analysis, given the challenging flat nature of the high dimensionality design space as well as our verification studies using standalone off-the-self gradient free optimizers, two levels of reduction ratios are set as “good enough”, \(f_{opt}/f_{max} = 2 \times 10^{-3}\) and \(f_{opt}/f_{max} = 5 \times 10^{-4}\). Although the euclidean distance from the optimum is calculated in addition to the required number of HF calls, the former is only used as a reference. The euclidean distance cannot similarly be considered as a figure of merit since (as shown) it is not representative of the quality of the final result which of course also depends on the local gradient of the design space in the vicinity of the points. Increasing the dimensionality demands more HF calls to reach the reduction threshold as expected, as well as locating a point further away from the actual minimum. However, achieving a finer reduction ratio—in low dimensionality—does not result to an observed reduction in the distance from the optimum, confirming the reasoning that euclidean distance is not a reliable criterion for optimization convergence.

The methodology is compared against an ALPSO based optimization, an HF EI approach and a Co-Kriging based MF EI process. The effect of the error correction model (RBF or Kriging) is also assessed. Each problem is solved 200 times to minimize the impact of the stochastic elements of the method, with the variation of the number of HF calls being depicted in the error bands around the mean value in Fig. 6a and c. For each case, the number of HF points in the initial sampling is equal to the dimensionality, with the LF points used being triple than the HF ones. The sampling cost is included in the analysis.

From Fig. 6.a it is evident that when the dimensionality is higher than 8-D, a very low function value cannot be obtained, and hence Fig. 6.b is limited to a dimensionality of 8. In fact, especially when Co-Kriging is used as the MF surrogate model, the method fails in cases more complicated than 2-D. The rest of the methods depict an almost linear increase of the required HF calls, with the HF EI approach requiring more computational resources in the 4-D than in the 2-D case. ALPSO can ultimately locate the optimum in all problems, but its cost is prohibitive even for low to middle fidelity MDO purposes.

The increase of the dimensionality leads to an increase of the maximum deviation of the required cost observed by our multiple runs. This is a direct result of the methods searching until the minimum is successfully located.

When a lower improvement is considered sufficient for our exploration needs, as in Fig. 6c, then the interest is focused in finding the design trends. In this case, all methods except for MF EI Co-Kriging, can locate a satisfying improvement. Here, a few interesting observations can be made. Low dimensionality problems require a cost that may be similar or even a bit higher than the one of middle dimensionality cases. This unexpected trend results from the methods exploiting the initial sampling after a low number of infill samplings. When converged though, they reduce the OF to a value significantly lower than the limit set—which is not the case in higher dimensions. High dimensionality is associated with a linear cost increase. The reason behind this consistent convergence behaviour, is that the respective sampling can provide sufficient information about the trends of the function. Therefore, with the function being smooth and having a flat hypersurface, one infill sampling is enough to lead to the satisfying improvement of \(f_{opt}/f_{max} = 2\times 10^{-3}\). Following this improvement however, and despite the fact that all following infill samplings are robust in providing low values, a reduction ratio of \(5\times 10^{-4}\) cannot be achieved . The inherent exploration attribute of the EI method, although necessary for MF conceptual studies, dictates a continuous search through the high dimensional design space.Footnote 11 As such, following a few infill samplings, an exploitation oriented method would be more efficient, taking advantage of the trends near the optimum point. Nevertheless, if the interest is to identify design trends with a reduced uncertainty early in the design stage, then this approach consistently satisfies this requirement. Furthermore, this linear cost increase trend makes the prediction of the problem’s total cost a straightforward process, which in turn suggests a feasible and efficient parameterization strategy. For instance, given a typical computational budget for an early stage tradeoff study, the above analysis suggests that our particular multidisciplinary problem should not exceed 40 design variables.

The average distance in terms of normalized euclidean metric for each case is shown in Fig. 6b and d. As a general trend, with the increase of the dimensionality the distance between the resulting points and the optimum is also increased. This is not observed in very low dimensionality. There is no direct correlation between the HF calls required, the minimum value found and the distance from the global optimum. A small euclidean norm does not imply a similarly low value, as this correlation depends on the topology of the design space. Therefore, in such complex non-linear (and potentially multimodal Shang and Qiu (2006)) design spaces, this norm cannot be used as reliable performance metric in future studies.

5.2 Sellar MDO test case

The Sellar function is designed to resemble a typical MDO problem, including attributes such as disciplinary variables, state variables, global variables and of course objective function and constraints. The Sellar problem used in this work is described by:

The LF variation of this problem is generated by slightly altering the discipline subproblems through the introduction of scaling coefficients. Therefore, the LF disciplinary problems become:

In this problem, the global design variables are \(z_1\) and \(z_2\), with the local variable being \(x_1\), affecting only discipline 1.

The difference between the Sellar problem usedFootnote 12 and the original one lies in the objective function. In this version, it is the local variable \(x_1\) used in the nonlinear deisgn variable term of the objective function. In the original problem from Sellar et al. (1996), the global variable \(z_1\) was used for the respective term. The various formulations should be treated with caution, as different authors also use different notation. Here, the OpenMDAO notation is used. In the problem formulation that we tackle, the global minimum value is f = 3.18339 and in the optimum point the constraint associated with discipline 1 is active.

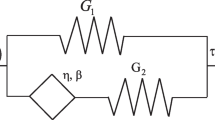

The Sellar function is used as a template to examine how disciplinary interaction affects—or not—the behaviour of the methodology. The latter is developed and implemented in a flexible way so that any HPC workflow—single disciplinary or multidisciplinary—can be attached to it. This is essential especially for big multinational industrial environments, in which distributed MDO architectures are appropriate. As such, industrial disciplinary tools are completely autonomous and any design framework should be able to treat them as a black box. To examine how the optimization methodology is affected by the multidisciplinary formulation of the problem, Multidisciplinary Feasible (MDF) and Asymmetric Subspace Optimization (ASO) architectures are implemented and compared in terms of computational cost. These processes are extensively described in Fig. 7 using the concept of XDSM diagrams Lambe and Martins (2012), developed specifically for MDO problems.

The process begins by an LHS sampling followed by the training of the Kriging model. The suboptimization procedure provides the next infill point; the one that maximises the expected improvement. The infill analysis which follows (treated as a black box in the industry) is the element which diversifies the two formulations. In MDF, disciplines 1 and 2 are iteratively solved within a simple MDA whereas in ASO the disciplinary analysis 1 is substituted by an optimization with respect to \({\mathbf {x}}\). This process is sometimes referred to in this work as ASO loop (in contrast to the MDA loop).

Our interest is to examine the convergence behaviour for both the objective function as well as the constraints, including an evaluation of the respective computational costs. For a complete study, we compare our MF EI methods against two other approaches: a gradient free optimizer (ALPSO) and the HF EI approach. All of the methods act on the system level. In the case of the ASO formulation, ALPSO and SLSQP has been employed for the disciplinary optimization in order to examine the effect of this subprocess to the efficiency of the formulation (Fig. 8).

It is evident that ALPSO optimizer requires more computational resources to locate the minimum, which in the case of MDF cannot be located at all. When the SBO methodology is applied, no more than ten HF Multidisciplinary Analysis (MDA) calls are required to reach a satisfactory convergence, regardless of the approach or the MDO formulation. In the case of MDF, the HF EI is more efficient compared to the MF approach, which still locates the optimum area. The opposite is true when ASO is being used, as the MF approach requires almost half of the HF MDA called by HF EI. Regarding the MDO formulation, ASO is consistently superior over MDF. However, it should be stressed that the above speaks only half the truth. When comparing different MDO formulations, the number of required HF MDA calls is representative of the true computational cost only in the case that the rest of disciplinary calls—or optimizations in the case of ASO—are of negligible cost. This is not always the case, but when this criterion is met ASO is indeed more efficient than MDF as it improves disciplinary analysis load balance Chittick and Martins (2009) in addition to reducing the dimensionality of the system level optimization problem. However, in cases where the discipline optimized in the inner disciplinary optimization (in this case discipline 1) is not significantly cheaper to analyse than the other disciplines, ASO stops being efficient. In the present case, if discipline 1 represents a cheap discipline so that the inner disciplinary optimization has a computational cost similar to a discipline 2 analysis, ASO would be superior to MDF. This is the case in an aerostructural optimization; a structural analysis is far cheaper than an aerodynamic analysis, with the respective disciplinary optimization cost being in the order of magnitude of an aerodynamic analysis. Of course, the cost of the optimizer in the disciplinary process is also of great importance, affecting the total number of disciplinary calls. ALPSO—being a global method—requires at least an order of magnitude more disciplinary iterations than SLSQP. In the context of aerostructural optimization, this would make an ALPSO based disciplinary optimization inefficient for the ASO formulation. Therefore, it is not surprising that local gradient based optimizers are more appropriate for this role, as they require fewer iterations while being robust in dimensionality increase (in the case of adjoint based methods).

Since this problem is a constrained one, including constraints for each of the disciplines, it is very important to examine in detail how each of the two different mdo formulations tackles constraints to converge to a feasibile solution.

-

In the case of the MDF architecture, a single optimization process exists: the MF EI methodology, acting in the system level. Therefore, the constraints are imposed through the expected improvement suboptimization process either using penalty or feasibility method, as discussed earlier. The system level therefore provides the next infill point which is a point most promising of improving the objective and satisfying the constraints at the same time.

-

In the case of the ASO architecture, the optimization process is split in the system and the disciplinary level as displayed in Fig. 7b. The system level suggests only a part of the new design, the one affected by the global design variables (in this case \(z_1\) and \(z_2\)). The rest is defined from the convergence of the disciplinary process. Similarly, the system level (acting on the reduced dimensionality design space) can only force the satisfaction of the constraint associated with the discipline involving only global variables (in this case discipline 2). The feasibility of constraint 1 depends on discipline 1, which is optimized with respect to the local variable x during the disciplinary process.

To sum this up, in MDF the system level enforces both constraints while in ASO it is the disciplinary process who takes care of enforcing constraint 1. Since constraint 1 is active in the optimum point of this problem, the behaviour of the constraint handling method has a significant effect to the overall performance of the problem. The typical convergence of the constraints is presented in Fig. 9 and discussed for the two architectures.

5.3 A note on constraints

MDF formulation typically requires a number of iterations before the challenging constraint 1 is satisfied. This is due to the initially inaccurate RBF models and the aggressive nature of the penalty method, as discussed above. After satisfying this, the RBF models are accurate enough to provide feasible infill points. Following the convergence of the objective function, oscillations are present in the constraints’ values. Since the EI method has provided a significant improvement of the objective function (due to its mild exploitation attributes), an exploration is initiated to the rest of the design space for further potential solutions. In the ASO case, constraint 1 is satisfied directly from the first iteration. This is because it is explicitly calculated in the disciplinary level in every MDA iteration. Therefore, the disciplinary variable x is chosen so that it optimises the discipline 1 given that in each loop the design point satisfies the constraint 1. The constraint 1 value is constant to 0 in every iteration showing that constraint 1 is indeed active in the optimum. With the x variable defined and “frozen” by the disciplinary optimization, EI method provides more limited exploration as proven by the almost constant value of constraint 2.

It is noted that in this problem, the feasibility method was not examined since it requires training a Kriging process to approximate each of the constraint function. Since the constraints are linear, Kriging becomes unstable and fails due to matrix singularities. The RBF based penalty method is employed instead.

5.4 A note on the multidisciplinary analysis loop

In Fig. 7a and b, the two different sampling procedures were overviewed. As mentioned, MDA is an inherently iterative procedure and its treatment separates the MDF and ASO. In ASO, discipline 1 is not analyzed but optimized with respect to the local variable x. Nevertheless, the rest of the iterative process is unchanged. Two of the most common mathematical approaches to iteratively solve a coupled system of functions are the Jacobi and the nonlinear Gauss-Seidel method. Their application in MDO problems has been analyzed by Kennedy and Martins (2010) and the reader is referred to their work for details of these two solution methods. In short, in Jacobi the updates of the state variables follow this progression:

Here, disciplines 1 and 2 can be solved concurrently since they depend on the previous iteration n solution of the coupling discipline.

In Gauss-Seidel, the solution of the coupled system takes the form,

In this approach, the solution takes advantage of the existence of the solution of discipline in the \(n+1\) step. Updated information is inserted in the system accelerating the process. However, this approach demands a sequential iterative procedure. In the case of ASO, the \(y_1^{n+1}\) analysis is simply an optimization given the corresponding state variable of discipline 2, \(y_2^n\).

The Sellar function is being used as a test case to gain feedback before proceeding to a heavy aerostructural optimization. Therefore, in Fig. 10 the performance of these two MDA solution techniques is compared for both MDF and ASO architectures.

It is evident that despite its sequential nature, the Gauss-Seidel method is more computationally efficient since the updated information drastically accelerates the convergence. What is equally interesting, is the effect of the architecture to the convergence of the MDA/ASO loop. The disciplinary optimization has a positive impact on the convergence of the coupled analysis. However, this cannot be considered as true for any multidisciplinary problem since it depends on the effect of the local variable on the discipline as well as the dependency between the disciplines.

6 Multiobjective optimization

Since the presented methodology constitutes the core of a numerical framework to be extended to MDO problems, in which disciplines are also typically associated with multiple conflicting objectives, optimization under such a MO formulation should be performed. The MO formulation provides a vast amount of useful information for the industry, as several potential optimum configurations are readily available in the engineer’s disposal. The pareto front can also feed later-stage local tradeoff studies, constituing element very important for decision making.

6.1 RAE2822 test case

A typical transonic airfoil design problem—as is the popular RAE2822 case—is solved to briefly demonstrate the methodology’s capability in tackling an MO problem that is more closely related to standard industrial ones. The aim of this work is to initially assess the methodology in terms of design and objective space exploration as well as to compare our parallel infill sampling approach against standard practice (see Sect. 2.2). Detailed information on how our method’s compares against an exploitation optimization scheme is provided in Kontogiannis et al. (2020), and a comparison against similar exploration approaches (Gradient Free MO optimization, Co-Kriging etc.) is scheduled for a follow-up paper.

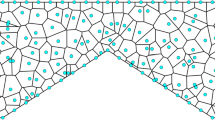

6.2 Problem formulation

For this airfoil design problem, Free Form Deformation (FFD) shape parameterization Sederberg and Parry (1986) was used. The control points displacement define the deformation of the surface in the X,Y direction—as shown through the deformation example of a ’random design’—, with the bound values being dependent on the design requirements (see Fig. 11). To approach the complexity of industrial applications, eight active control points were used creating a 16D design space. The operating conditions of this design problem are provided in Table 1.

The HF analyses are performed using the commercial solver ANSYS Fluent [52], providing the global aerodynamic coefficients. We use a structured C-type grid, fully resolving the boundary layer with a \(y^+\) value in the order of 1. Special care was also taken to ensure grid robustness to geometry changes that arise across the optimization process. In terms of LF analyses, in this paper we provide the results arising from a partially converged Forrester et al. (2006) ANSYS Fluent simulation, stopped after 800 iterations and using the same numerical setup as the HF tool. More information on this approach as well as the impact of additional LF tools are being provided in a follow-up paper.

6.3 Results

6.3.1 Comparison of EI infill sampling methodologies

The airfoil design problem described above, allowed to assess how our proposed sampling method behaved. This was done through a comparison of the standard MO EI methodology for improving the pareto front (see Forrester et al. (2008) and Eq. 33 from “Appendix A” for more details), against our parallel infill EI methodology of Sect. 2.2. This is within the scope of the demonstration included in this paper; further comparisons between our method and other Kriging/Co-Kriging-based ones, constraints handling approach and LF tool impact are provided in a follow-up paper.

The methods are compared in Fig. 12 in terms of their respective pareto front results, for a defined computational budget. Since the only difference between the methods is found in the suboptimization process, the sampling, surrogate training and infill analyses costs are identical. Therefore, the comparison is based on the number of high fidelity infill analyses.Footnote 13

The standard infill methodology can locate highly efficient tradeoff points in the mid \(C_l\) region—close to the datum point. In the low and high \(C_l\) objective space however, it is evident that this EI formulation does not exhibit the desired exploration characteristics. On the other hand, the proposed parallel formulation develops a wide pareto front of efficient configurations especially in the low Cl region. This is an expected result as the standard “EI for improving the Pareto Front” method is designed to achieve just that, find new dominating designs. This does not necessarily translates to new designs being associated with an extended design or objective space exploration, rather than just being dominant over the previous pareto points. As such, a more balanced exploitation/exploration behaviour is observed. In our newly proposed approach however, the points used for infill sampling are explicitly selected to be the ones which are not only promising but also diverse in terms of objective function value. Another significant advantage of our method is its inherent characteristic to provide multiple infill points, improving load balancing. In this case infill points are set to three, and as such, the effective computational cost to introduce this design information is a third of that of the standard method. Having established the behaviour of both approaches, it should be stated that there is the potential for a hybrid methodology using these two in sequence. Within such a concept, the proposed parallel infill can be initially used to satisfy exploration requirements followed by the standard method after a “wide-enough” front has been developed. This will provide a more local refinement, improving the performance of the initial pareto front points.

7 Conclusions

A shift to a multidisciplinary design approach in the aerospace industry is necessary for the efficiency of future aircraft configurations to be improved. However, in order for interdisciplinary synergies to be exploited, it is crucial that the process is applied in an early design stage where a wide design space is available for exploration. We proposed a multiobjective multifidelity surrogate based optimization framework to achieve fast and reliable multidisciplinary optimization studies in the conceptual design stage. The paper also introduces a novel modification of the ordinary Kriging metamodel, improving the design and Expected Improvement space in the presence of Multifidelity data if Co-Kriging is not used. The paper highlights the importance of the low fidelity tool’s capability of capturing the correct trends; hence leading to a smooth error space. Such a space can be effectively approximated by metamodels, making the high fidelity correction beneficial compared to High Fidelity Expected Improvement method. In view of future aerostructural design applications, the extended Rosenbrock function was employed to examine the framework’s scaling characteristics. Our method is a clear improvement over an indicative gradient free approach in the initial stages of the exploration. However, none of the methods is able to find the exact global optimum in a reasonable cost. As a rule of thumb, even for exploration cases where the exact optimum is irrelevant, the number of design variables should not surpass 40. The effectiveness of the method in multidisciplinary problems was demonstrated using the Sellar function. Comparing the multidisciplinary feasible and asymmetric subspace optimization architectures showed the superiority of the latter, when an efficient inner disciplinary optimization is available. The method is also implemented in a—beneficial for the industry—Multiobjective formulation which provides additional information and supports decision making and further tradeoff studies. A direct comparison of our infill sampling approach and the standard one demonstrates their difference in their design as the latter offers a better improvement on the pareto points near the datum point whereas the former offers an extended objective space exploration. Furthermore, multiobjective problem results and analysis are presented in follow-up papers tackling constrained aerodynamic and aerostructural design problems.

Notes

Its generalized formulation however makes it relevant to any such design problem associated with a computationally challenging analysis.

A Kriging model can also incorporate MF data by adding the error prediction for each LF point in the correlation matrix diagonal, functioning as a regularization parameter, similarly to fitting a noisy signal. Physically, this means that we consider the inaccuracy of the LF model to be inducing noise in the objective function space. However, despite being successful in improving the objective function approximation, such an approach is numerically unstable in high error values. This leads to failure in calculating the MSE and cannot be used in a EI SBO context.

This is true in RBF models with kernel functions of asymptotic behaviour. It does not hold in linear or cubic kernel cases.

A poor error model can deceive the EI plan, leading to inefficient exploration or convergence to LF optimality.

As demonstrated later, for single objective problems ALPSO is used.

This discussion focuses on inequality constraints. Equality constraints can be similarly tackled by transforming them into inequality ones using tolerance parameters.

Since the penalty method simply uses the constraint value prediction and not any information from the MSE of the model, it effectively and fundamentally lacks the mechanism to allow a more global search. Undersampled areas will have randomly accurate constraint predictions, making the success of the infill sampling (constraint-wise) random as well. This is in contrast with the alternative method presented, which since using the MSE of the constraint model itself, it fundamentally ’tries’ to improve the global accuracy of the constraint model while of course trying to improve the objective as well.

Although not presented in this paper, the RBF model can be easily modified to an additive MF modRBF, similarly to the way MF modKriging was modified.

Using LHS sampling in this work does not allow such a condition.

In these test functions used to develop the methodology, we implicitely make the same assumption frequently made in the MF literature; that the cost of the LF tool is negligible compared to the HF one. As such, we only assess the cost using HF calls. This assumption is valid in most of the realistic problems we have solved and presented with this methodology. However, in industrial problems where this condition does not hold, the LF cost is included in the total cost of the process and presented explicitely. This is not the case in this journal paper.

Of course such an exploration will never be complete, since dense exploration in high dimensions is infeasible due to the curse of dimensionality.

It can be found in the OpenMDAO site, http://openmdao.org/releases/0.13.0/docs/tutorials/mdao/intro.html.

The suboptimization problem of EI maximization and EI pareto front generation is solved by ALPSO and MOPSO algorithms respectively. For this dimensionality, and given the cheap algebraic formulation of EI, the observed difference between the two suboptimization processes in terms of elapsed time was negligible.

Abbreviations

- \(\varvec{\alpha }\) :

-

Radial Basis Functions parameters vector

- \(\mathbf {R}\) :

-

Gram matrix used in Radial Basis Function models

- \({{\varvec{\Psi }}}\) :

-

Correlation matrix used in Kriging models

- \({\psi }\) :

-

Correlation vector used in Kriging predictor

- \(\hat{\theta _l}\) :

-

Optimum Kriging shape parameter provided by the training of the model

- \(\lambda\) :

-

Likelihood estimation function

- \(\mu\) :

-

Mean of Kriging model

- \(\sigma ^2\) :

-

Variance of Kriging model

- \(\varvec{r}\) :

-

Gram matrix vector

- \(\sigma ^2_{e}\) :

-

Variance of the error surrogate model

- \(\theta\) :

-

Radial Basis Functions shape parameter

- \(\Phi (x)\) :

-

Cumulative distribution function

- \(r_{ij}\) :

-

Element of the gram matrix

- \(\phi (x)\) :

-

Probability density function

- \(\psi _{ij}\) :

-

Element of the correlation matrix

- \(\mathbf {e}\) :

-

Vector of error training data

- \(\mathbf {y}\) :

-

Vector of objective function values

- D :

-

Design space

- e :

-

Low fidelity tool error

- \(f_{HF}\) :

-

High fidelity function result

- \(f_{LF}\) :

-

Low fidelity function result

- p :

-

Radial Basis Functions smoothing parameter

- \(\hat{p_l}\) :

-

Optimum Kriging smoothness parameter provided by the training of the model

- \(\hat{s^2}\) :

-

Mean squared error of the Kriging model

- \(\hat{s^2_e}\) :

-

Mean squared error of the error surrogate model

- \(\hat{y}\) :

-

Surrogate model predictor

- \(y_{HF}\) :

-

Objective function value of the high fidelity tool

- \(y_i^*\) :

-

The objective function value of a member of the pareto set

- \(y_{LF}\) :

-

Objective function value of the low fidelity tool

- \(y_{min}\) :

-

Minimum objective function value found

- y :

-

Objective function value

- ALPSO:

-

Augmented Lagrangian Particle Swarm Optimization

- ASO:

-

Asymmetric Subspace Optimization

- BFGS:

-

Broyden–Fletcher–Goldfarb–Shanno algorithm

- EI:

-

Expected Improvement

- FEIC:

-

Feasible Expected Improvement Criterion

- GEK:

-

Gradient Enhanced Kriging

- HF:

-

High Fidelity

- LF:

-

Low Fidelity

- LHS:

-

Latin Hypercube Sampling

- MDA:

-

Multidisciplinary Analysis

- MDF:

-

Multidisciplinary Feasible

- MDO:

-

Multidisciplinary Design Optimization

- MF:

-

Multifidelity

- MO:

-

Multiobjective

- MoE:

-

Mixture of Experts

- MOPSO:

-

Multiobjective Particle Swarm Optimization

- MSE:

-

Mean Squared Error

- N-M:

-

Nelder-Mead Non-Linear Simplex Method

- OF:

-

Objective Function

- PF:

-

Probability of Feasibility

- PI:

-

Probability of Improvement

- RBF:

-

Radial Basis Functions

- SBO:

-

Surrogate Based Optimization

- TD:

-

Training Data

- TR:

-

Trust Region

- XDSM:

-

EXtended Design Structure Matrix

References

Alexandrov NM, Dennis JE, Lewis RM, Torczon V (1998) A trust region framework for managing the use of approximation models in optimization. Struct Optim 15:16–23. https://doi.org/10.1007/BF01197433

Alexandrov NM, Lewis RM, Gumbert CR, Green LL, Newman PA (2001) Approximation and model management in aerodynamic optimization with variable-fidelity models. J Aircraft 38(6):1093–1101. https://doi.org/10.2514/2.2877

Alvarez-Benitez JE, Everson RM, Fieldsend JE (2005) A MOPSO algorithm based exclusively on pareto dominance concepts. In: International conference on evolutionary multi-criterion optimization EMO 2005: evolutionary multi-criterion optimization, 3410, pp 459–473. https://doi.org/10.1007/978-3-540-31880-432

ANSYS FLuent 14.5. User’s manual. ANSYS Inc

Bandler JW, Biernacki RM, Chen SH, Grobelny PA, Hemmers RH (1994) Space mapping technique for electromagnetic optimization. IEEE Trans Microwave Theory Tech 42(12):2536–2544. https://doi.org/10.1109/22.339794

Bryson DE, Rumpfkeil MP (2018) Multifidelity quasi-newton method for design optimization. AIAA J 56(10):4074–4086. https://doi.org/10.2514/1.J056840

Chaudhuri A, Lam R, Willcox K (2018) Multifidelity uncertainty propagation via adaptive surrogates in coupled multidisciplinary systems. AIAA J 56(1):235–249. https://doi.org/10.2514/1.J055678

Chittick IR, Martins JRRA (2009) An asymmetric suboptimization approach to aerostructural optimization. Optim Eng 10(1):133–152. https://doi.org/10.1007/s11081-008-9046-2

Choi S, Alonso JJ, Kroo IM, Wintzer M (2008) Multifidelity design optimization of low-boom supersonic jets. J Aircraft 45(1):106–118 10.2514/1.28948

Chung HS, Alonso JJ (2002) Design of a low-boom supersonic business jet using cokriging approximation models. In: 9th AIAA/ISSMO symposium on multidisciplinary analysis and optimization, Atlanta, Georgia, AIAA, pp 2002–5598. https://doi.org/10.2514/6.2002-5598

Chung HS , Alonso JJ (2002) Using Gradients to construct cokriging approximation models for high-dimensional design optimization problems. In: 40th AIAA aerospace sciences meeting and exhibit, Reno, Nevada, AIAA, pp 2002–0317. https://doi.org/10.2514/6.2002-317

Demange J, Savill AM, Kipouros T (2016) Multifidelity optimization for high-lift airfoils. In: 54th AIAA aerospace sciences meeting, AIAA Scitech Forum, San Diego, California, AIAA Paper, pp 2016–0557. https://doi.org/10.2514/6.2016-0557

Demange J, Savill AM, Kipouros T (2016) A multifidelity multiobjective optimization framework for high-lift airfoils, AIAA AVIATION Forum. In: 17th AIAA ISSMO multidisciplinary analysis and optimization conference, Washington, DC, Paper AIAA, pp 2016–3367. https://doi.org/10.2514/6.2016-3367

Durillo JJ, Garcia-Nieto J, Nebro AJ, Coello CA, Luna F, Alba E (2009) Multi-objective particle swarm optimizers: an experimental comparison. In: International conference on evolutionary multi-criterion optimization EMO 2009: evolutionary multi-criterion optimization, 5467, pp 495–509. https://doi.org/10.1007/978-3-642-01020-039

Forrester AIJ, Bressloff NW, Keane AJ (2006) Optimization using surrogate models and partially converged computational fluid dynamics simulations. Proc R Soc A Math Phys Eng Sci 462(2071):2177–2204. https://doi.org/10.1098/rspa.2006.1679

Forrester AIJ, Keane AJ (2009) Recent advances in surrogate based optimization. Progress Aerosp Sci 45(1–3):50–79. https://doi.org/10.1016/j.paerosci.2008.11.001

Forrester AIJ, Sobester A, Keane AJ (2007) Multi-fidelity optimization via surrogate modelling. Proc R Aeronaut Soc 463(2088):3251–3269. https://doi.org/10.1098/rspa.2007.1900

Forrester AIJ, Sobester A, Keane AJ (2008) Engineering design via surrogate modelling: a practical guide. Wiley, Hoboken

Han Z, Zimmerman R, Görtz S (2012) Alternative Cokriging method for variable-fidelity surrogate modeling. AIAA J 50(5):1205–1210. https://doi.org/10.2514/1.J051243

Jacobs RA, Jordan MI, Nowlan SJ, Hinton GE (1991) Adaptive mixtures of local experts. J Neural Comput 3(1):79–87. https://doi.org/10.1162/neco.1991.3.1.79

Jarrett JP, Ghisu T (2012) An approach to multi-fidelity optimization of aeroengine compression systems. In: 12th AIAA aviation technology, integration, and operations (ATIO) conference and 14th AIAA/ISSM, Indianapolis, Indiana, 17–19 September, AIAA Paper, pp 2012–5634. https://doi.org/10.2514/6.2012-5634

Jarrett JP, Ghisu T (2015) Balancing configuration and refinement in the design of two-spool multistage compression systems. ASME J Turbomach. https://doi.org/10.1115/1.4030051

Johnson ME, Moore LM, Ylvisaker D (1990) Minimax and maximin distance designs. J Stat Plan Inference 26(2):131–148. https://doi.org/10.1016/0378-3758(90)90122-B

Jones DR (2001) A taxonomy of global optimization methods based on response surfaces. J Global Optim 21(4):345–383. https://doi.org/10.1023/A:1012771025575

Jones DR, Schonlau M, Welch WJ (1998) Efficient global optimization of expensive black-box functions. J Global Optim 13(4):455–498. https://doi.org/10.1023/A:1008306431147

Kennedy MC, O’Hagan A (2000) Predicting the output from complex computer code when fast approximations are available. Biometrika 87(1):1–13. https://doi.org/10.1093/biomet/87.1.1

Kennedy GJ, Martins JRRA (2010) Parallel solution methods for aerostructural analysis and design optimization. In: 13th AIAA, ISSMO multidisciplinary analysis optimization conference 13–15, Fort Worth. Texas AIAA paper, pp 2010–9308. https://doi.org/10.2514/6.2010-9308

Kipouros T, Molinary M, Dawes WN, Parks GT, Savill M, Jenkins KW (2007) An investigation of the potential for enhancing the computational turbomachinery design cycle using surrogate models and high performance parallelisation, GT2007-28106. In: Proceedings of ASME Turbo Expo 2007 power for Land, Sea and Air, Vol 6, pp 1415–1424. https://doi.org/10.1115/GT2007-28106

Kontogiannis SG, Demange J, Savill MA, Kipouros T (2020) A comparison study of two multifidelity methods for aerodynamic optimization. Aerosp Sci Technol 97(2):273–284. https://doi.org/10.1016/j.ast.2019.105592

Kontogiannis SG, Savill MA, Kipouros T (2017) A multi-objective multi-fidelity framework for global optimization. In: 58th AIAA/ASCE/AHS/ASC structures, structural dynamics, and materials conference, AIAA SciTech Forum, Grapevine, Texas, AIAA Paper, pp 2017–0136. https://doi.org/10.2514/6.2017-0136

Kontogiannis S (2018) Conceptual multidisciplinary design via a multiobjective multifidelity optimisation method. PhD thesis, Cranfield University

Lambe AB, Martins JRRA (2012) Extensions to the design structure matrix for the description of multidisciplinary design. Anal Optim Process Struct Multidiscip Optim 46(2):273–284. https://doi.org/10.1007/s00158-012-0763-y