Lane Departure Warning Mechanism of Limited False Alarm Rate Using Extreme Learning Residual Network and ϵ-Greedy LSTM

Abstract

1. Introduction

1.1. Motivation

1.2. Related Work

1.3. Contributions

- (1)

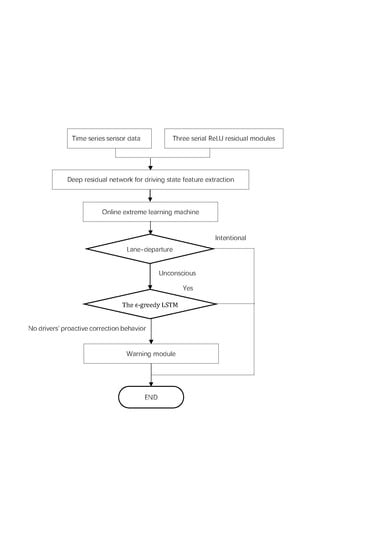

- We applied a new learning framework termed Extreme Learning Residual Network (ELR-Net) that combines ResNet and ELM to classify drivers’ lane-departure consciousness (ILCB or ULDB). The driver’s intention to change the lane can be accurately identified at 1.3 seconds before the vehicle crosses the lane marking [27]. ELR-Net can accurately determine the driver’s intention to change lanes.

- (2)

- We developed ϵ-greedy-based long short-term memory (ϵ-greedy LSTM) module to forecast the vehicle’s upcoming lateral distance to infer the chance of PCSD. ϵ-greedy LSTM can accurately predict driver’s departure intention.

- (3)

- We correspondingly proposed an LDWM to whether a warning should be given to the driver based on the algorithm of classification and prediction.
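
The way the third contribution combines the other two can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `decide_warning`, the threshold `min_safe_distance_m`, and the rule itself (suppress the warning for ILCB, warn for ULDB only when the forecast lateral distance becomes small) are hypothetical, and reading ILCB as the intentional case and ULDB as the unintentional case is an assumption based on the acronyms.

```python
from dataclasses import dataclass
from typing import Sequence

ILCB = "ILCB"  # assumed: driver is changing lanes on purpose
ULDB = "ULDB"  # assumed: driver is drifting without intending to


@dataclass
class WarningDecision:
    warn: bool
    reason: str


def decide_warning(
    behavior_label: str,
    predicted_lateral_distance_m: Sequence[float],
    min_safe_distance_m: float = 0.2,  # hypothetical threshold, not from the paper
) -> WarningDecision:
    """Combine a classifier label with a lateral-distance forecast.

    behavior_label stands in for the classifier output and
    predicted_lateral_distance_m for the forecast of the distance
    to the lane marking over the next few time steps.
    """
    if behavior_label == ILCB:
        # An intended lane change should not trigger an alarm.
        return WarningDecision(False, "intentional lane change")
    if behavior_label != ULDB:
        raise ValueError(f"unknown behavior label: {behavior_label!r}")
    if not predicted_lateral_distance_m:
        raise ValueError("forecast must contain at least one value")
    closest = min(predicted_lateral_distance_m)
    if closest <= min_safe_distance_m:
        return WarningDecision(True, "forecast reaches the lane marking")
    return WarningDecision(False, "forecast stays inside the lane")
```

Suppressing the alarm in the intentional case is what would limit false alarms in a design of this kind; the forecast is consulted only when the classifier reports an unintentional departure.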

2. Lane Departure Warning Mechanism

2.1. Selection of Sensor Input Parameters

2.2. Time to Lane Crossing (TLC)

2.3. Excessive False Alarm Rate

2.4. Evaluation Criteria for PCSD

3. Methods

3.1. Extreme Learning Residual Network

3.1.1. Activation Function in Residual Block

3.1.2. Global Average Pooling Layer

3.1.3. ELM for Multiclass Classification

3.2. ϵ-Greedy LSTM

4. Analysis and Discussion of Experimental Results

4.1. Data Collection

4.2. Training and Test Set

4.3. Classification Performance of ELR-Net

4.4. Prediction Performance of ϵ-Greedy LSTM

4.5. Overall Performance of the Proposed LDWM

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Sternlund, S. The safety potential of lane departure warning systems—A descriptive real-world study of fatal lane departure passenger car crashes in Sweden. Traffic Inj. Prev. 2017, 18, S18–S23.

2. Cicchino, J.B. Effects of lane departure warning on police-reported crash rates. J. Saf. Res. 2018, 66, 61–70.

3. Eriksson, J.; Landberg, J. Lane Departure Warning and Object Detection through Sensor Fusion of Cellphone Data. Master’s Thesis, Chalmers University of Technology, Göteborg, Sweden, 2015.

4. Gaikwad, V.; Lokhande, S. Lane Departure Identification for Advanced Driver Assistance. IEEE Trans. Intell. Transp. Syst. 2015, 16, 910–918.

5. Chen, M.; Jochem, T.; Pomerleau, D. AURORA: A vision-based roadway departure warning system. In Proceedings of the 1995 IEEE/RSJ International Conference on Intelligent Robots and Systems, Human Robot Interaction and Cooperative Robots, Pittsburgh, PA, USA, 5–9 August 1995.

6. Narote, S.P.; Bhujbal, P.N.; Narote, A.S.; Dhane, D.M. A review of recent advances in lane detection and departure warning system. Pattern Recognit. 2018, 73, 216–234.

7. Deng, W.; Xu, J.; Zhao, H. An Improved Ant Colony Optimization Algorithm Based on Hybrid Strategies for Scheduling Problem. IEEE Access 2019, 7, 20281–20292.

8. Mammar, S.; Glaser, S.; Netto, M. Time to line crossing for lane departure avoidance: A theoretical study and an experimental setting. IEEE Trans. Intell. Transp. Syst. 2006, 7, 226–241.

9. Agamennoni, G.; Worrall, S.; Ward, J.R.; Nebot, E.M. Automated extraction of driver behaviour primitives using Bayesian agglomerative sequence segmentation. In Proceedings of the 2014 17th IEEE International Conference on Intelligent Transportation Systems (ITSC), Qingdao, China, 8–11 October 2014.

10. Satzoda, R.K.; Trivedi, M.M. Drive analysis using vehicle dynamics and vision-based lane semantics. IEEE Trans. Intell. Transp. Syst. 2015, 16, 9–18.

11. Bocklisch, S.F.; Beggiato, M.; Krems, J.F. Adaptive fuzzy pattern classification for the online detection of driver lane change intention. Neurocomputing 2017, 262, 148–158.

12. McCall, J.C.; Wipf, D.P.; Trivedi, M.M.; Rao, B.D. Lane change intent analysis using robust operators and sparse Bayesian learning. IEEE Trans. Intell. Transp. Syst. 2007, 8, 431–440.

13. Wang, Z.; Yan, W.; Oates, T. Time Series Classification from Scratch with Deep Neural Networks: A Strong Baseline. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; pp. 1578–1585.

14. Fawaz, H.I.; Forestier, G.; Weber, J.; Idoumghar, L.; Muller, P.-A. Deep learning for time series classification: A review. Data Min. Knowl. Discov. 2018, 33, 917–963.

15. Wang, F.; Jiang, M.; Qian, C.; Yang, S.; Li, C.; Zhang, H.; Wang, X.; Tang, X. Residual attention network for image classification. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

16. Kim, J.; El-Khamy, M.; Lee, J. Residual LSTM: Design of a deep recurrent architecture for distant speech recognition. In Proceedings of the 18th Annual Conference of the International Speech Communication Association, Stockholm, Sweden, 20–24 August 2017; pp. 1591–1595.

17. Huang, G.B.; Zhou, H.; Ding, X.; Zhang, R. Extreme learning machine for regression and multiclass classification. IEEE Trans. Syst. Man Cybern. Part B Cybern. 2012, 42, 513–529.

18. Ding, S.; Zhao, H.; Zhang, Y.; Xu, X.; Nie, R. Extreme learning machine: Algorithm, theory and applications. Artif. Intell. Rev. 2015, 44, 103–115.

19. Angkititrakul, P.; Terashima, R.; Wakita, T. On the use of stochastic driver behavior model in lane departure warning. IEEE Trans. Intell. Transp. Syst. 2011, 12, 174–183.

20. Zhou, Y.; Xu, R.; Hu, X.F.; Ye, Q.T. A lane departure warning system based on virtual lane boundary. J. Inf. Sci. Eng. 2008, 24, 293–305.

21. Batavia, P.H. Driver-Adaptive Lane Departure Warning Systems; Carnegie Mellon University: Pittsburgh, PA, USA, 1999.

22. Wang, W.; Zhao, D.; Han, W.; Xi, J. A learning-based approach for lane departure warning systems with a personalized driver model. IEEE Trans. Veh. Technol. 2018, 67, 9145–9157.

23. Tan, D.; Chen, W.; Wang, H. On the Use of Monte-Carlo Simulation and Deep Fourier Neural Network in Lane Departure Warning. IEEE Intell. Transp. Syst. Mag. 2017, 9, 76–90.

24. Yang, H.; Pan, Z.; Tao, Q. Robust and Adaptive Online Time Series Prediction with Long Short-Term Memory. Comput. Intell. Neurosci. 2017, 2017.

25. Altche, F.; De La Fortelle, A. An LSTM network for highway trajectory prediction. In Proceedings of the IEEE Conference on Intelligent Transportation Systems (ITSC), Yokohama, Japan, 16–19 October 2017.

26. Choi, J.Y.; Lee, B. Combining LSTM Network Ensemble via Adaptive Weighting for Improved Time Series Forecasting. Math. Probl. Eng. 2018, 2018.

27. Hua, Y.; Zhao, Z.; Li, R.; Chen, X.; Liu, Z.; Zhang, H. Deep Learning with Long Short-Term Memory for Time Series Prediction. IEEE Commun. Mag. 2019, 57, 114–119.

28. Kumar, P.; Perrollaz, M.; Lefevre, S.; Laugier, C. Learning-based approach for online lane change intention prediction. In Proceedings of the IEEE Intelligent Vehicles Symposium, Gold Coast, Australia, 23–26 June 2013.

29. Glaser, S.; Mammar, S.; Sentouh, C. Integrated driver-vehicle-infrastructure road departure warning unit. IEEE Trans. Veh. Technol. 2010, 59, 2757–2771.

30. Wang, W.; Zhao, D. Evaluation of Lane Departure Correction Systems Using a Regenerative Stochastic Driver Model. IEEE Trans. Intell. Veh. 2017, 2, 221–232.

31. Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. arXiv 2015, arXiv:1502.03167.

32. Duan, Z.; Yang, Y.; Zhang, K.; Ni, Y.; Bajgain, S. Improved deep hybrid networks for urban traffic flow prediction using trajectory data. IEEE Access 2018, 6, 31820–31827.

33. Williamson, A.; Chamberlain, T. Review of on-Road Driver Fatigue Monitoring Devices; NSW Injury Risk Management Research Centre, University of New South Wales: Kensington, Australia, 2005.

34. Tavenard, R.; Malinowski, S. Cost-Aware Early Classification of Time Series. In Proceedings of the Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Riva del Garda, Italy, 19–23 September 2016; Springer: Cham, Switzerland, 2016.

35. Pang, X.; Zhou, Y.; Wang, P.; Lin, W.; Chang, V. An innovative neural network approach for stock market prediction. J. Supercomput. 2018, 1, 1–21.

36. Sun, G.; Song, L.; Yu, H.; Chang, V.; Du, X.; Guizani, M. V2V Routing in a VANET Based on the Autoregressive Integrated Moving Average Model. IEEE Trans. Veh. Technol. 2019, 68, 908–922.

| Driver | # of Events | Total Time (min) | Average Time (s) | Driver | # of Events | Total Time (min) | Average Time (s) |
|---|---|---|---|---|---|---|---|
| 1 | 564 | 207.04 | 22.02 | 12 | 584 | 204.31 | 20.97 |
| 2 | 550 | 197.17 | 21.52 | 13 | 608 | 212.09 | 20.93 |
| 3 | 313 | 109.88 | 21.07 | 14 | 569 | 200.07 | 21.09 |
| 4 | 285 | 107.38 | 22.62 | 15 | 540 | 190.66 | 21.17 |
| 5 | 311 | 108.37 | 20.88 | 16 | 542 | 197.72 | 21.89 |
| 6 | 470 | 170.35 | 21.75 | 17 | 454 | 166.10 | 21.95 |
| 7 | 360 | 130.44 | 21.78 | 18 | 488 | 177.23 | 21.78 |
| 8 | 278 | 96.93 | 20.88 | 19 | 473 | 173.72 | 22.01 |
| 9 | 268 | 94.43 | 21.15 | 20 | 513 | 189.03 | 22.11 |
| 10 | 320 | 110.78 | 20.79 | 21 | 423 | 153.63 | 21.78 |
| 11 | 605 | 211.68 | 20.98 | Average | - | - | 21.49 |
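
The columns of the driver table appear to be related by simple arithmetic. The sketch below assumes that the average time is the total time divided by the number of events, a relation the source does not state, and checks it on a few rows copied from the table. The names `ROWS`, `average_event_seconds`, and `max_discrepancy` are illustrative only.

```python
# (driver, events, total_minutes, reported_average_seconds), copied from the table
ROWS = [
    (1, 564, 207.04, 22.02),
    (4, 285, 107.38, 22.62),
    (9, 268, 94.43, 21.15),
    (12, 584, 204.31, 20.97),
    (21, 423, 153.63, 21.78),
]


def average_event_seconds(events: int, total_minutes: float) -> float:
    """Average event duration in seconds, assuming average = total / count."""
    if events <= 0:
        raise ValueError("events must be positive")
    return total_minutes * 60.0 / events


def max_discrepancy(rows) -> float:
    """Largest absolute gap between recomputed and reported averages."""
    return max(
        abs(average_event_seconds(events, minutes) - reported)
        for _, events, minutes, reported in rows
    )


if __name__ == "__main__":
    for driver, events, minutes, reported in ROWS:
        recomputed = average_event_seconds(events, minutes)
        print(f"driver {driver}: recomputed {recomputed:.2f} s, reported {reported:.2f} s")
```

For the sampled rows the recomputed and reported values differ by a few hundredths of a second at most, which is consistent with the total times having been rounded.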

| Algorithm | Cost Loss | Classification Accuracy | Computation Time (s) |
|---|---|---|---|
| MLP | 0.132 | 0.88 | 0.025 |
| FCN | 0.080 | 0.91 | 0.3171 |
| ResNet | 0.084 | 0.91 | 0.2547 |
| ELR-Net | 0.071 | 0.94 | 0.0831 |

| Model | Detail | Loss Function | Runtime (per epoch) | RMSE |
|---|---|---|---|---|
| Linear | Dense layer + Softmax | MSE | nearly 8 s | 20.3094 |
| LSTM | LSTM layer + Softmax | MSE | nearly 170 s | 11.5631 |
| ϵ-greedy LSTM | LSTM layer + Softmax | MSE with ϵ-greedy | nearly 100 s | 5.5904 |
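
The RMSE column can be read with the standard root-mean-square-error definition. The sketch below assumes that definition (the source does not spell it out) and also computes the relative reduction between two reported values. The helper names and the `REPORTED_RMSE` dictionary are illustrative, and the units of the reported values are not given in this section.

```python
import math
from typing import Sequence


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean square error between two equally long sequences."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("sequences must be non-empty and of equal length")
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))


def relative_reduction(baseline: float, improved: float) -> float:
    """Fraction by which `improved` is lower than `baseline`."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - improved) / baseline


# RMSE values copied from the table above
REPORTED_RMSE = {"Linear": 20.3094, "LSTM": 11.5631, "eps-greedy LSTM": 5.5904}

if __name__ == "__main__":
    drop = relative_reduction(REPORTED_RMSE["LSTM"], REPORTED_RMSE["eps-greedy LSTM"])
    print(f"RMSE reduction relative to the plain LSTM: {drop:.1%}")
```

On the reported numbers, the ϵ-greedy LSTM roughly halves the RMSE of the plain LSTM.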

| Algorithm | False Warning Rate (%) | Correct Warning Rate (%) | Warning Accuracy (%) |
|---|---|---|---|
| The basic TLC | 14.1 | 75.2 | 87.7 |
| TLC-DSPLS | 2.3 | 96.1 | 97.4 |
| The proposed LDWM | 1.2 | 98.8 | 99.1 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Gao, Q.; Yin, H.; Zhang, W. Lane Departure Warning Mechanism of Limited False Alarm Rate Using Extreme Learning Residual Network and ϵ-Greedy LSTM. Sensors 2020, 20, 644. https://doi.org/10.3390/s20030644
